By Yuanyi

Knative is an open-source serverless application orchestration framework built on Kubernetes. It aims to establish cloud-native and cross-platform standards for serverless application orchestration. Key features of Knative include request-based auto scaling, scale-to-zero, multi-version management, traffic-based canary release, and event-driven capabilities.

Elasticity is a crucial aspect of serverless application architectures. This article delves into the various elasticity capabilities provided by Knative, the most popular open-source serverless application framework in the CNCF community.

(Note: This article is based on Knative 1.8.0 for analysis.)

Knative provides request-based auto scaling through the Knative Pod Autoscaler (KPA) and also supports the Kubernetes Horizontal Pod Autoscaler (HPA). Additionally, Knative offers flexible scaling mechanisms to accommodate specific business requirements. This article also explores precise concurrency-based elasticity through MSE and request-based elasticity prediction through AHPA.

Let's begin by discussing Knative's most appealing elasticity feature: KPA.

Elasticity based on CPU or memory may not accurately represent actual service usage. However, elasticity based on concurrency or the number of requests processed per second (QPS/RPS) can directly reflect web service performance. Knative provides automatic elasticity based on requests.

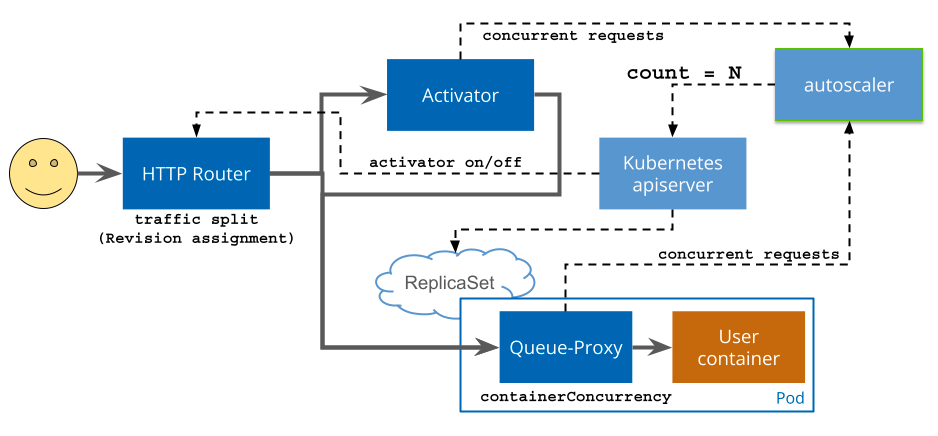

To obtain the number of requests for the current service, Knative Serving injects a queue-proxy container into each pod. This container collects concurrency or requests-per-second (RPS) metrics from the user container. At regular intervals, the Autoscaler retrieves these metrics and adjusts the number of pods in the Deployment according to the corresponding algorithm, thereby achieving request-based auto scaling.

Photo Source: https://knative.dev/docs/serving/request-flow/

The Autoscaler scales based on the average number of requests (or concurrency) per pod. By default, Knative scales on concurrency, with a default maximum concurrency of 100 per pod. Knative also introduces the concept of target-utilization-percentage, the share of that maximum that the Autoscaler actually aims for. This article expresses it as a value from 0 to 1 with a default of 0.7; in configuration it is written as a percentage, for example 70.

Taking concurrency-based scaling as an example, the number of pods is calculated as follows:

Number of pods = Total number of concurrent requests / (Maximum concurrency of pods × Target utilization percentage)

For instance, if a service has a maximum pod concurrency of 10, receives 100 concurrent requests, and the target utilization percentage is set to 0.7, the Autoscaler will create 15 pods (100 / (0.7 × 10) ≈ 14.3, rounded up to 15).
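The calculation above can be sketched in a few lines of Python. This is only an illustration of the formula in this article, not the Autoscaler's actual code; the function name `desired_pods` and its parameters are hypothetical.

```python
import math


def desired_pods(total_concurrency: float,
                 max_pod_concurrency: float,
                 target_utilization: float = 0.7) -> int:
    """Pods needed so that each pod stays at or below its utilization target.

    Illustrative only: mirrors the formula in the text and rounds up,
    because a fraction of a pod cannot be scheduled.
    """
    if total_concurrency <= 0:
        return 0
    # Concurrency each pod is expected to carry, e.g. 10 * 0.7 = 7.
    effective_target = max_pod_concurrency * target_utilization
    return math.ceil(total_concurrency / effective_target)


print(desired_pods(100, 10, 0.7))  # 15
```

Rounding up rather than to the nearest integer keeps the average concurrency per pod under the target, at the cost of a little spare capacity.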

When using KPA, the number of pods is automatically scaled down to 0 when there are no traffic requests. When a traffic request arrives, the number of pods scales up from 0. How does Knative accomplish this? The answer lies in mode switching.

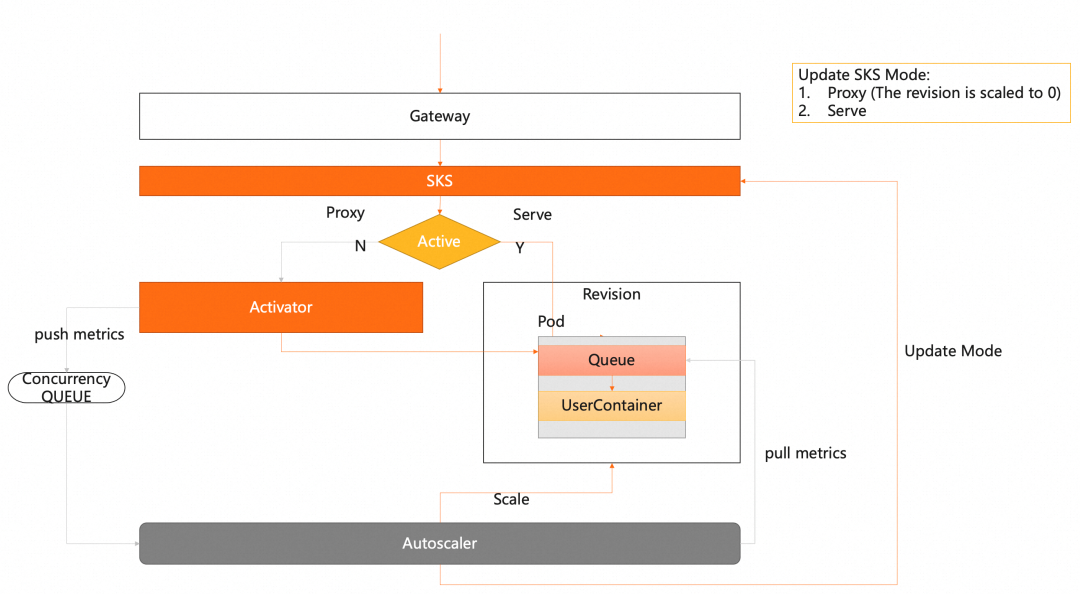

Knative defines two request access modes: Proxy and Serve. In Proxy mode, requests are forwarded by the activator component. In Serve mode, requests are sent directly from the gateway to the pods without passing through the activator. The figure above shows both modes.

The Autoscaler component is responsible for mode switching. When the number of requests is 0, the Autoscaler switches the request mode to Proxy. From then on, requests are sent through the gateway to the activator component. After receiving a request, the activator places it in a queue and sends metrics to notify the Autoscaler to scale up the pods. Once the activator detects that the scaled-up pods are ready, it forwards the request. The Autoscaler also identifies the ready pods and switches the mode to Serve.

KPA involves two concepts related to elasticity: Stable mode and Panic mode. These modes help achieve refined elasticity based on requests.

In Stable mode, the average pod concurrency is calculated over a stable window period, which is set to 60 seconds by default.

Panic mode is based on the panic window period, which is derived from the stable window period and the panic-window-percentage parameter. Expressed as a fraction, panic-window-percentage ranges from 0 to 1 with a default of 0.1 (the config-autoscaler ConfigMap stores it as a percentage, 10.0). The panic window period is calculated as follows: panic window period = stable window period × panic-window-percentage. With the defaults, this is 60 × 0.1 = 6 seconds, and the average pod concurrency is calculated over this 6-second period.

KPA calculates the required number of pods based on the average pod concurrency in both Stable and Panic modes.

Which value is used for elasticity to take effect? This is determined based on whether the number of pods calculated in Panic mode exceeds the Panic threshold. The panic threshold is calculated as panic-threshold-percentage/100, with a default panic-threshold-percentage of 200. This means the default panic threshold is 2. If the number of pods calculated in Panic mode is greater than or equal to twice the current number of ready pods, the number of pods in Panic mode is used for elasticity. Otherwise, the number of pods in Stable mode is used.

Clearly, Panic mode is designed to handle burst traffic scenarios. The sensitivity of elasticity can be adjusted using the configurable parameters mentioned above.
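The choice between the two modes can be sketched as follows. This is a simplified illustration of the rules described in this section, not Knative's implementation; the function names and the idea of passing in pre-averaged concurrency values are assumptions made for the example.

```python
import math


def panic_window_seconds(stable_window_s: float = 60.0,
                         panic_window_percentage: float = 0.1) -> float:
    """Panic window = stable window x panic-window-percentage (as a fraction)."""
    return stable_window_s * panic_window_percentage


def choose_pod_count(stable_concurrency: float,
                     panic_concurrency: float,
                     ready_pods: int,
                     per_pod_target: float,
                     panic_threshold_percentage: float = 200.0):
    """Return (pods, mode) following the article's description.

    stable_concurrency / panic_concurrency are the average concurrency
    observed over the stable and panic windows (hypothetical inputs).
    """
    stable_pods = math.ceil(stable_concurrency / per_pod_target)
    panic_pods = math.ceil(panic_concurrency / per_pod_target)
    threshold = panic_threshold_percentage / 100.0  # default 2.0
    # Panic result wins when it reaches threshold x the ready pod count.
    if panic_pods >= threshold * ready_pods:
        return panic_pods, "panic"
    return stable_pods, "stable"


# 2 ready pods, per-pod target of 7: a burst of 50 concurrent requests
# in the panic window asks for 8 pods, which is >= 2 x 2.
print(choose_pod_count(20, 50, ready_pods=2, per_pod_target=7))
```

With the same inputs but only 21 concurrent requests in the panic window, the panic result is 3 pods, which is below the threshold of 4, so the stable-window result is used instead.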

In KPA, you can set the burst request capacity (target-burst-capacity) to protect pods from unexpected surges in traffic. This parameter determines whether requests are switched to Proxy mode, where the activator component acts as a request buffer. If the result of (current number of ready pods × maximum concurrency - burst request capacity - concurrency calculated in Panic mode) is less than 0, the burst traffic exceeds the capacity threshold, and requests are switched to the activator for buffering. Two values have a special meaning. When the burst request capacity is set to 0, requests go through the activator only when the pods are scaled down to 0. A value of -1 indicates unlimited target burst capacity, in which case requests always go through the activator. Requests also always go through the activator if the burst request capacity is greater than 0 and container-concurrency-target-percentage is set to 100.
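As a rough sketch, the burst-capacity check can be written like this. The function `activator_in_path` is hypothetical and covers only the formula and the two special values 0 and -1 described above; the real Autoscaler considers more state.

```python
def activator_in_path(ready_pods: int,
                      max_concurrency: float,
                      target_burst_capacity: float,
                      panic_concurrency: float) -> bool:
    """True if requests should be routed through the activator (Proxy mode)."""
    if target_burst_capacity == -1:
        # Unlimited burst capacity: the activator always buffers requests.
        return True
    if target_burst_capacity == 0:
        # The activator is used only while the service is scaled to zero.
        return ready_pods == 0
    # Spare capacity left after reserving the burst capacity and serving
    # the concurrency observed in the panic window.
    spare = (ready_pods * max_concurrency
             - target_burst_capacity
             - panic_concurrency)
    return spare < 0


# 3 pods x 100 - 211 - 50 = 39, so the pods are served directly.
print(activator_in_path(3, 100, 211, 50))  # False
# 2 pods x 100 - 211 - 50 = -61, so the activator buffers requests.
print(activator_in_path(2, 100, 211, 50))  # True
```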

To reduce the frequency of pod scaling for pods with high startup costs, KPA allows you to set the pod delay scale-down time and the pod scale-to-zero retention time.

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: helloworld-go
      namespace: default
    spec:
      template:
        metadata:
          annotations:
            # Wait 60s before acting on a scale-down decision.
            autoscaling.knative.dev/scale-down-delay: "60s"
            # Keep the last pod for 1m5s before scaling to zero.
            autoscaling.knative.dev/scale-to-zero-pod-retention-period: "1m5s"
        spec:
          containers:
            - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/helloworld-go:73fbdd56

In Knative, you can configure the target utilization rate to a smaller value to scale up pods beyond the number actually required. This preheats resources before the requests reach the target concurrency. For example, if containerConcurrency is set to 10 and the target utilization rate is set to 70 percent, the Autoscaler will create a new pod when the average number of concurrent requests across all existing pods reaches 7. It takes some time for pods to be created and become ready. By reducing the target utilization rate, you can scale up pods ahead of demand, reducing the response latency caused by cold starts.
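To see how much headroom a lower target utilization buys, the following sketch compares the pod count at 100 percent utilization with the pod count at a lower target. The helper names are hypothetical and the arithmetic simply follows the example in the text.

```python
import math


def scale_up_threshold(container_concurrency: float,
                       target_utilization_percentage: float) -> float:
    """Average per-pod concurrency at which the Autoscaler adds pods."""
    return container_concurrency * target_utilization_percentage / 100


def extra_pods_from_headroom(total_concurrency: float,
                             container_concurrency: float,
                             target_utilization_percentage: float) -> int:
    """Pods created ahead of need compared with a 100 percent target."""
    at_full = math.ceil(total_concurrency / container_concurrency)
    threshold = scale_up_threshold(container_concurrency,
                                   target_utilization_percentage)
    with_headroom = math.ceil(total_concurrency / threshold)
    return with_headroom - at_full


print(scale_up_threshold(10, 70))             # 7.0
print(extra_pods_from_headroom(100, 10, 70))  # 5
```

In this example, 100 concurrent requests need 10 pods at full utilization but 15 pods at a 70 percent target, so 5 pods are already warm when traffic keeps growing.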

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: helloworld-go
      namespace: default
    spec:
      template:
        metadata:
          annotations:
            autoscaling.knative.dev/target-utilization-percentage: "70"
        spec:
          containers:
            - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/helloworld-go:73fbdd56

After understanding the working mechanism of the Knative Pod Autoscaler, let's now explore how to configure KPA. Knative provides two methods for configuring KPA: global mode and revision mode.

In global mode, you can modify the ConfigMap: config-autoscaler in the Kubernetes. Run the following command to view the config-autoscaler:

kubectl -n knative-serving get cm config-autoscalerapiVersion: v1

kind: ConfigMap

metadata:

name: config-autoscaler

namespace: knative-serving

data:

container-concurrency-target-default: "100"

container-concurrency-target-percentage: "70"

requests-per-second-target-default: "200"

target-burst-capacity: "211"

stable-window: "60s"

panic-window-percentage: "10.0"

panic-threshold-percentage: "200.0"

max-scale-up-rate: "1000.0"

max-scale-down-rate: "2.0"

enable-scale-to-zero: "true"

scale-to-zero-grace-period: "30s"

scale-to-zero-pod-retention-period: "0s"

pod-autoscaler-class: "kpa.autoscaling.knative.dev"

activator-capacity: "100.0"

initial-scale: "1"

allow-zero-initial-scale: "false"

min-scale: "0"

max-scale: "0"

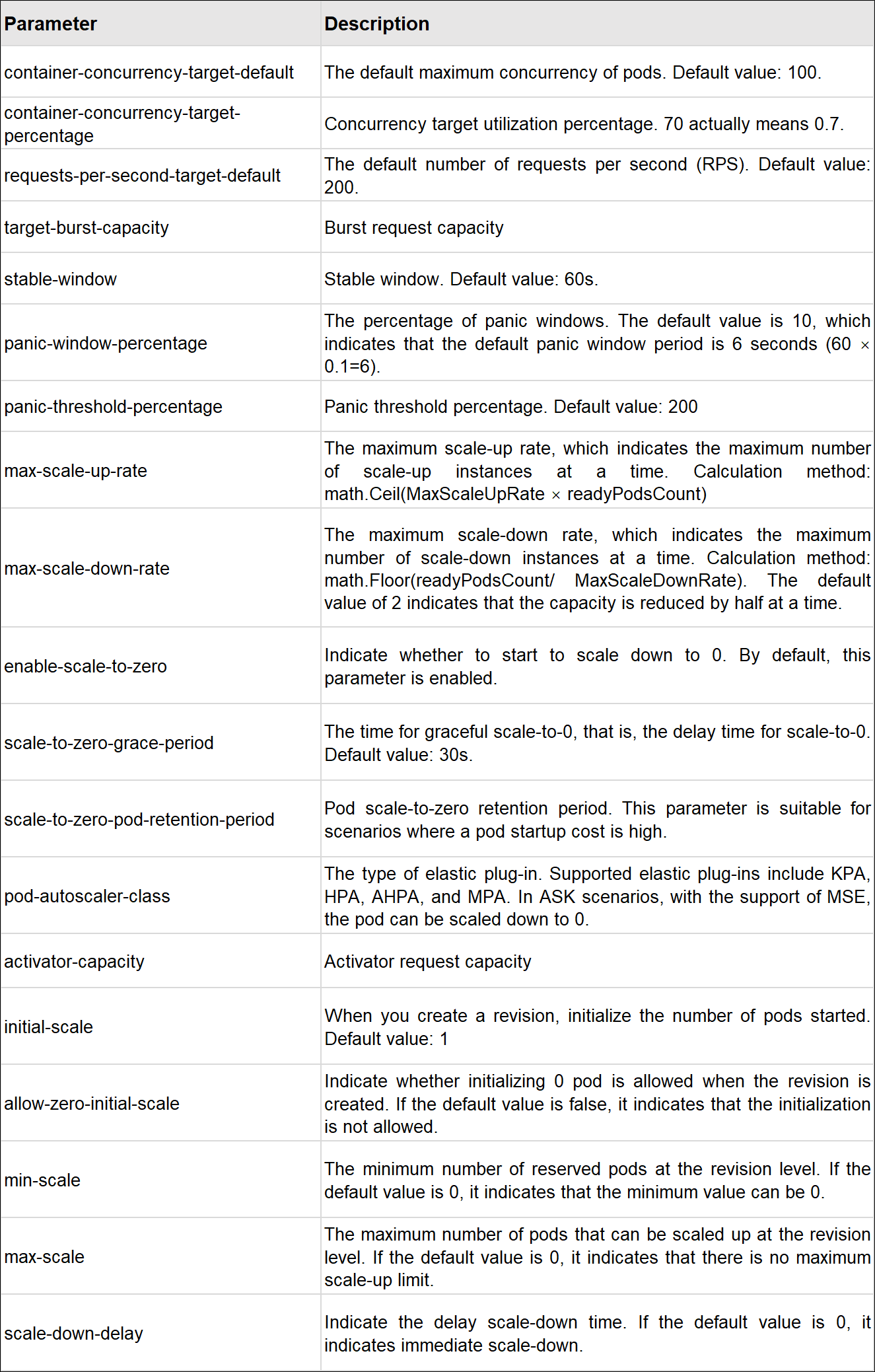

scale-down-delay: "0s"Parameter description:
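Several of these values feed directly into the calculations described earlier. The sketch below derives the panic window, the panic threshold, and the effective per-pod concurrency target from a subset of the ConfigMap data. The dictionary and the helper names are made up for illustration, and the duration parser is an assumption that only handles plain second values such as "60s".

```python
# Subset of the ConfigMap data shown above, as plain strings.
CONFIG = {
    "container-concurrency-target-default": "100",
    "container-concurrency-target-percentage": "70",
    "stable-window": "60s",
    "panic-window-percentage": "10.0",
    "panic-threshold-percentage": "200.0",
}


def parse_seconds(value: str) -> float:
    """Parse durations like '60s' (assumption: seconds only, no '1m5s')."""
    return float(value.rstrip("s"))


def derived_settings(data: dict) -> dict:
    stable_window = parse_seconds(data["stable-window"])
    panic_pct = float(data["panic-window-percentage"])
    threshold_pct = float(data["panic-threshold-percentage"])
    target = float(data["container-concurrency-target-default"])
    utilization_pct = float(data["container-concurrency-target-percentage"])
    return {
        # 60s x 10 / 100 = 6s
        "panic_window_s": stable_window * panic_pct / 100,
        # 200 / 100 = 2
        "panic_threshold": threshold_pct / 100,
        # 100 x 70 / 100 = 70 concurrent requests per pod
        "effective_target": target * utilization_pct / 100,
    }


print(derived_settings(CONFIG))
```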

In Knative, you can configure elasticity metrics for each revision. Some configuration parameters are shown as follows:

• Metric type: autoscaling.knative.dev/metric

• Target threshold: autoscaling.knative.dev/target

• Pod scale-to-0 retention period: autoscaling.knative.dev/scale-to-zero-pod-retention-period

• Target utilization percentage: autoscaling.knative.dev/target-utilization-percentage

Examples:

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: helloworld-go
      namespace: default
    spec:
      template:
        metadata:
          annotations:
            autoscaling.knative.dev/metric: "concurrency"
            autoscaling.knative.dev/target: "50"
            autoscaling.knative.dev/scale-to-zero-pod-retention-period: "1m5s"
            autoscaling.knative.dev/target-utilization-percentage: "80"

Knative also natively supports configuring the Kubernetes HPA. You can use automatic elasticity based on CPU or memory in Knative.

• CPU-based elastic configuration

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: helloworld-go
      namespace: default
    spec:
      template:
        metadata:
          annotations:
            autoscaling.knative.dev/class: "hpa.autoscaling.knative.dev"
            autoscaling.knative.dev/metric: "cpu"

• Memory-based elastic configuration

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: helloworld-go
      namespace: default
    spec:
      template:
        metadata:
          annotations:
            autoscaling.knative.dev/class: "hpa.autoscaling.knative.dev"
            autoscaling.knative.dev/metric: "memory"

Knative offers a flexible plugin mechanism (pod-autoscaler-class) to support different elasticity policies. Alibaba Cloud Container Service Knative supports the following elastic plugins: KPA, HPA, MPA, and AHPA with predictive capability.

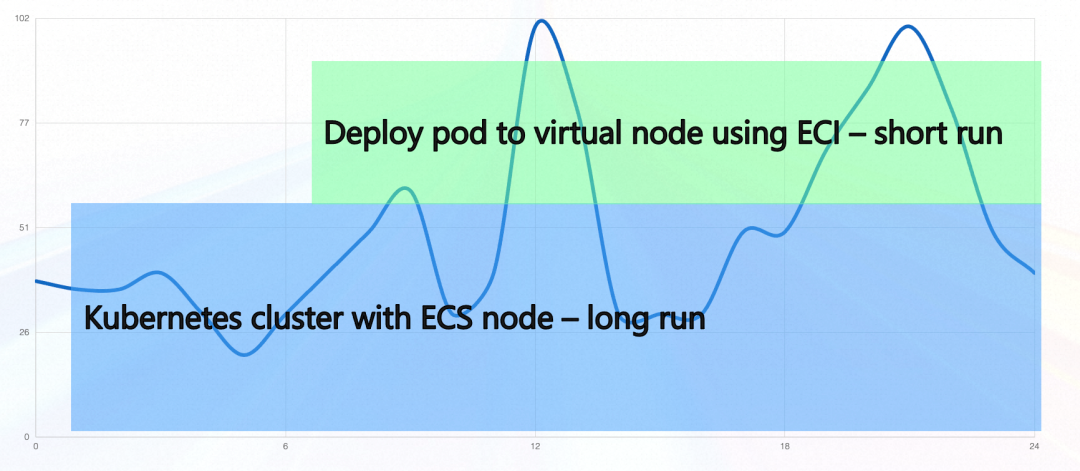

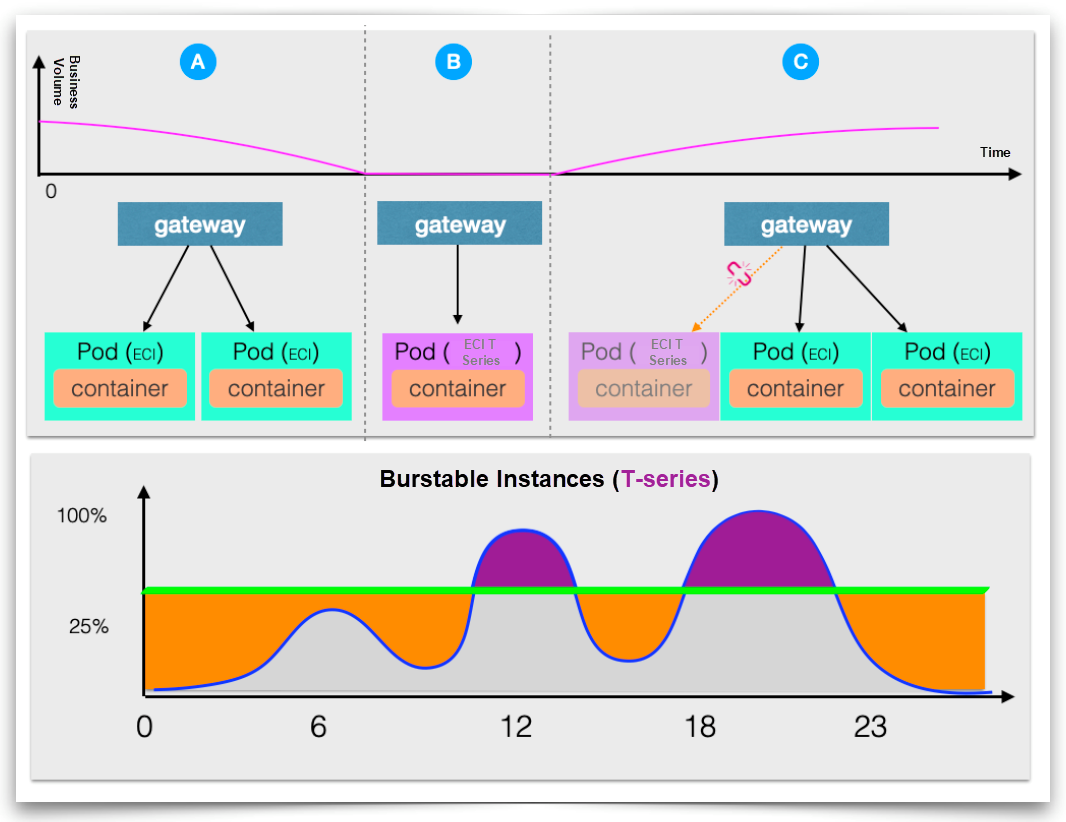

In addition to the native capabilities of KPA, we provide the ability to reserve resource pools. This feature can be used in the following scenarios:

• Mixing ECS and ECI: If you want to use ECS resources under normal conditions and switch to ECI resources during burst traffic, you can reserve resource pools. For example, if a single pod can handle 10 concurrent requests and the number of pods in the reserved resource pool is 5, ECS resources can handle up to 50 concurrent requests under normal conditions. If the number of concurrent requests exceeds 50, Knative will scale up the number of pods to meet the demand, using ECI resources for the newly scaled-up pods.

• Resource preheating: In scenarios that run entirely on ECI, you can also preheat resources by reserving resource pools. During a business trough, the default-specification instance is replaced by a reserved instance. When the first request arrives, the reserved instance serves it, and a scale-up of default-specification instances is triggered. After the default-specification instances are created, all new requests are forwarded to them. The reserved instance is released after it finishes processing the requests already sent to it. This seamless switchover mechanism reduces instance costs and the latency caused by cold starts.
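The ECS and ECI mixing example above comes down to simple arithmetic, sketched below. The function name and the assumption that every pod beyond the reserved pool lands on ECI are illustrative; target utilization is ignored to match the numbers in the text.

```python
import math


def eci_pods_needed(total_concurrency: float,
                    per_pod_concurrency: float,
                    reserved_pool_size: int) -> int:
    """Pods that must be scheduled on ECI once the reserved pool is full."""
    if total_concurrency <= 0:
        return 0
    needed = math.ceil(total_concurrency / per_pod_concurrency)
    # The reserved pool (ECS) absorbs the first `reserved_pool_size` pods.
    return max(0, needed - reserved_pool_size)


print(eci_pods_needed(50, 10, 5))  # 0: the reserved pool is enough
print(eci_pods_needed(80, 10, 5))  # 3: burst pods go to ECI
```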

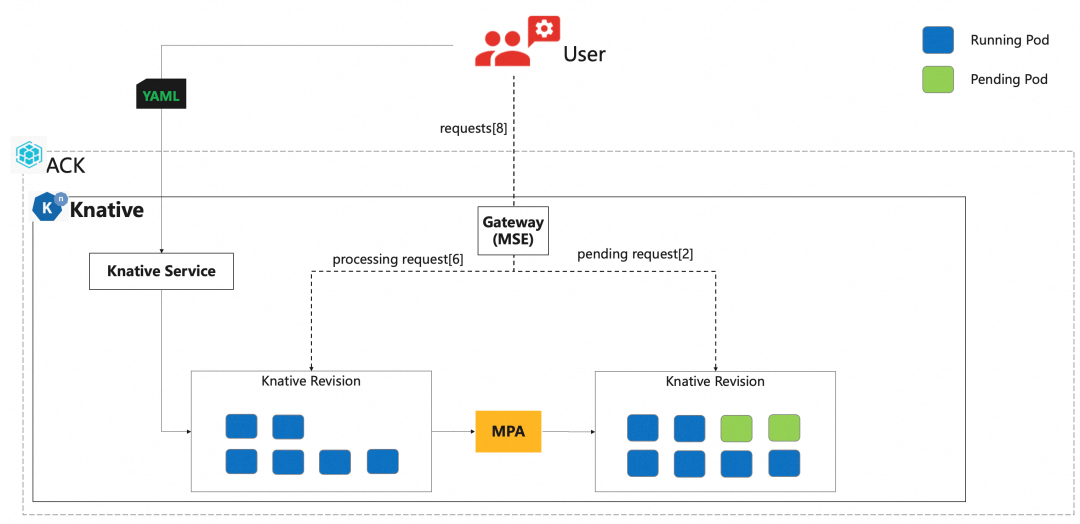

A single pod can process only a limited number of requests at a time. If too many requests are forwarded to the same pod, the server can become overloaded. Therefore, it is important to accurately control the number of concurrent requests processed by a single pod. In some AIGC scenarios, for example, a single request may consume a large amount of GPU resources, so the number of requests that each pod processes concurrently must be strictly limited.

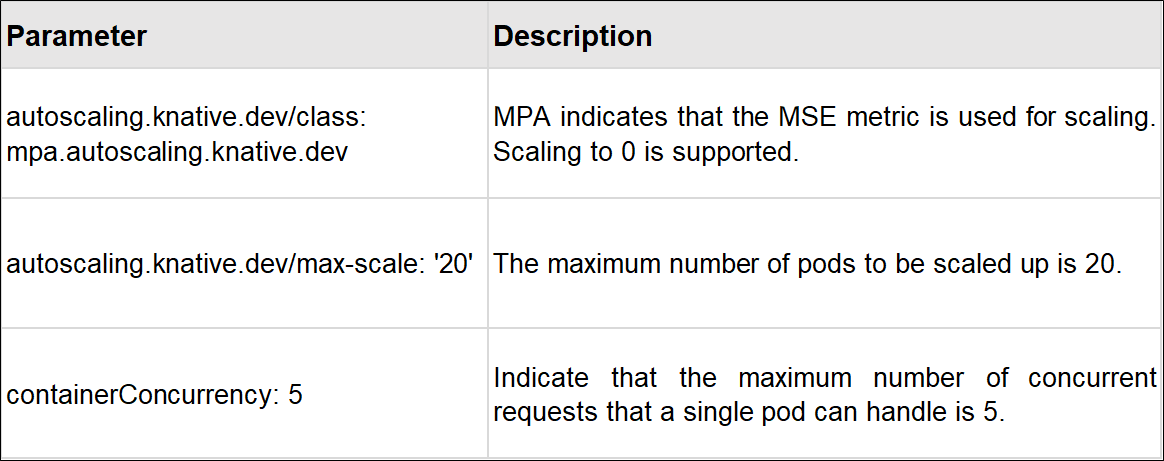

Knative, in combination with the MSE cloud-native gateway, provides the MPA elastic plugin to achieve precise control of elasticity based on concurrency.

MPA obtains concurrency from the MSE gateway and calculates the required number of pods for scaling. The MSE gateway can then route each request precisely, so that a pod does not receive more concurrent requests than it is configured to handle.

Examples:

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: helloworld-go
    spec:
      template:
        metadata:
          annotations:
            autoscaling.knative.dev/class: mpa.autoscaling.knative.dev
            autoscaling.knative.dev/max-scale: '20'
        spec:
          containerConcurrency: 5
          containers:
            - image: registry-vpc.cn-beijing.aliyuncs.com/knative-sample/helloworld-go:73fbdd56
              env:
                - name: TARGET
                  value: "Knative"

Parameter description:

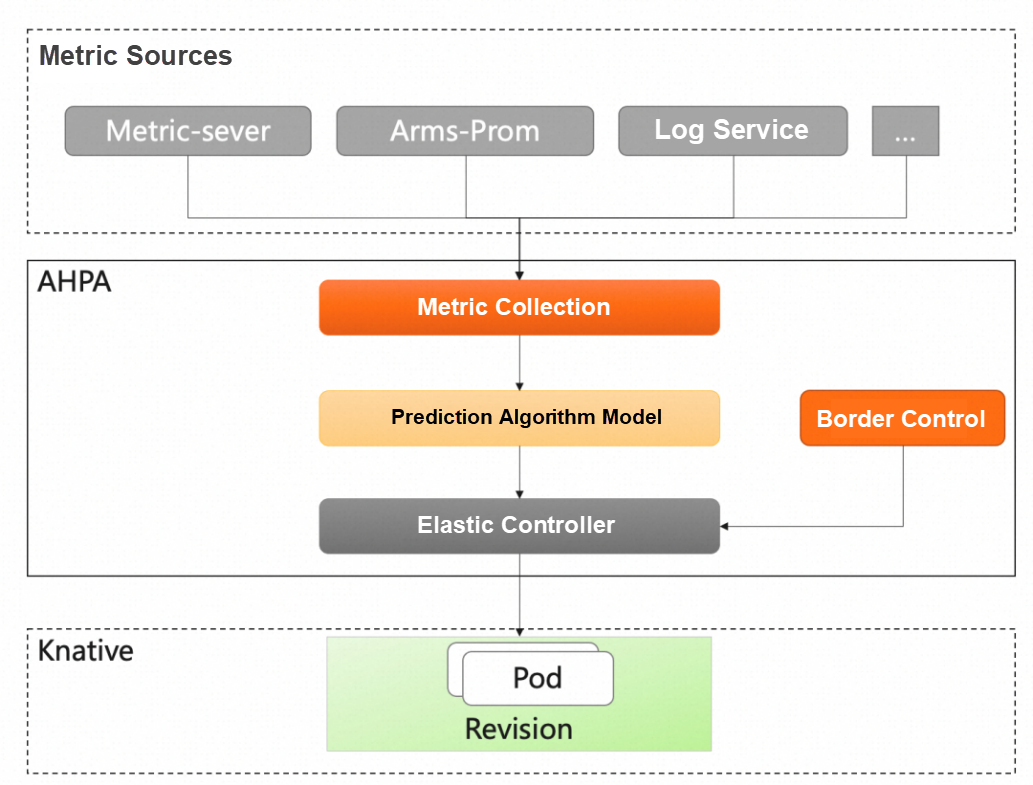

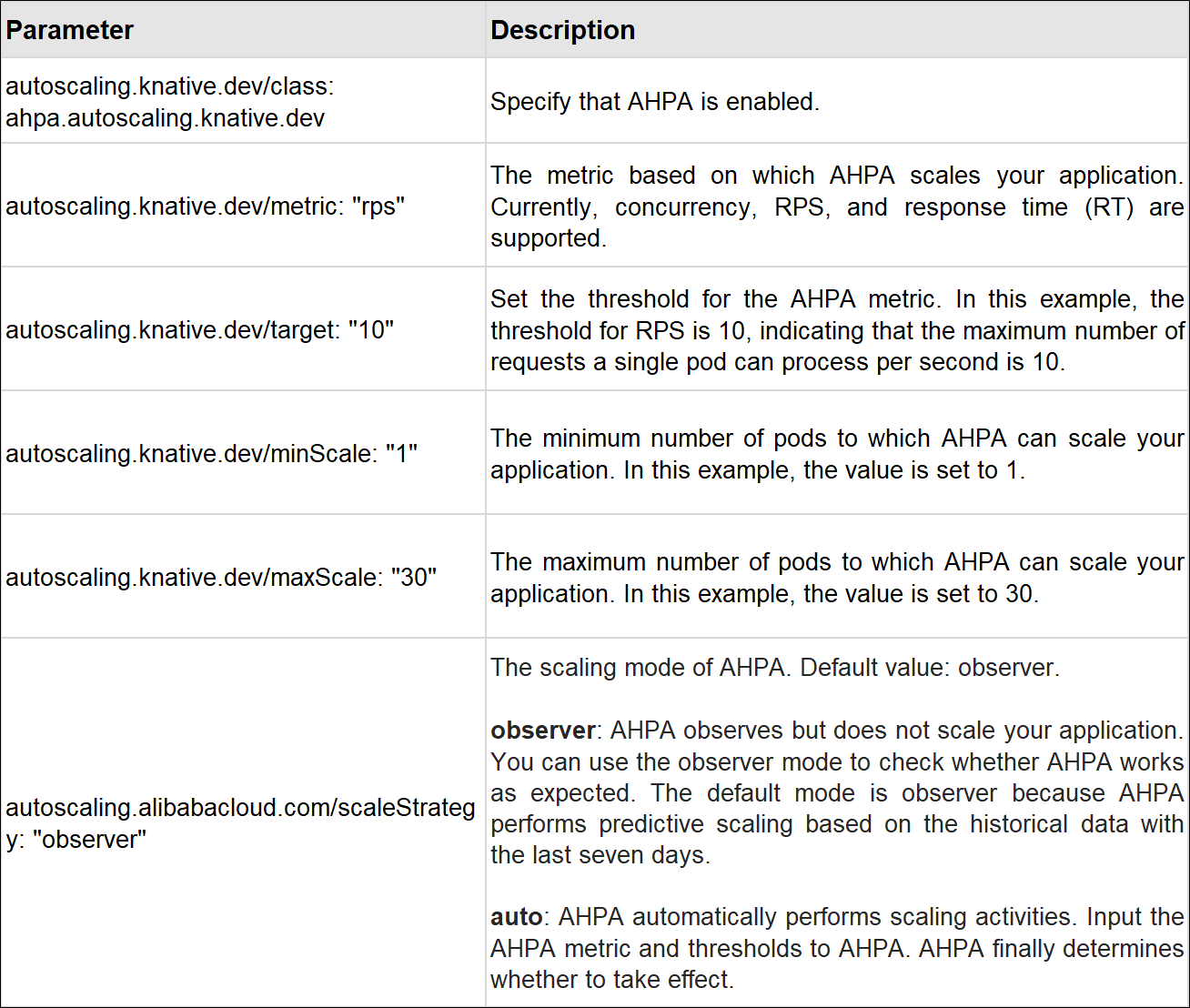
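For the MPA example above, with containerConcurrency set to 5 and max-scale set to 20, the arithmetic can be sketched as follows. This only illustrates the concurrency limit and the scale cap; it is not MPA's actual algorithm, and the function name is hypothetical.

```python
import math


def mpa_pods(concurrency: float,
             container_concurrency: int = 5,
             max_scale: int = 20) -> int:
    """Pods needed so that no pod exceeds its hard concurrency limit."""
    if concurrency <= 0:
        return 0
    needed = math.ceil(concurrency / container_concurrency)
    # The max-scale annotation caps the number of pods.
    return min(needed, max_scale)


print(mpa_pods(23))   # 5 pods, at most 5 concurrent requests each
print(mpa_pods(500))  # 20: capped by max-scale
```

With these settings the service can process at most 20 × 5 = 100 requests concurrently; anything beyond that has to wait.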

The Advanced Horizontal Pod Autoscaler (AHPA) in Container Service can automatically identify elastic cycles and predict capacity based on historical business metrics, which solves the problem of elastic lag.

Knative supports AHPA elasticity. When requests are periodic, you can use elastic prediction to preheat resources. Compared with lowering the threshold for resource preheating, AHPA can maximize resource utilization.

In addition, because AHPA supports custom metric configuration, the combination of Knative and AHPA can achieve automatic elasticity based on message queues and response latency.
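To make prediction-based preheating concrete, here is a deliberately naive sketch. It is not AHPA's algorithm: it assumes a daily cycle, forecasts each time slot as the maximum RPS seen in that slot on previous days, and converts the forecast into a pod count clamped between a minimum and a maximum. All names and sample numbers are hypothetical.

```python
import math


def forecast_rps(history: list) -> list:
    """Per-slot forecast: the highest RPS seen in that slot on any past day."""
    return [max(slot) for slot in zip(*history)]


def planned_pods(history: list,
                 target_rps_per_pod: float = 10,
                 min_scale: int = 1,
                 max_scale: int = 30) -> list:
    """Pods to have ready in each slot, clamped to [min_scale, max_scale]."""
    plan = []
    for rps in forecast_rps(history):
        needed = math.ceil(rps / target_rps_per_pod)
        plan.append(max(min_scale, min(needed, max_scale)))
    return plan


# Two days of RPS samples for three time slots (made-up data).
history = [
    [5, 50, 120],
    [8, 60, 100],
]
print(planned_pods(history))  # [1, 6, 12]
```

Because the pods for a busy slot are planned from history, they can be started before the traffic arrives, instead of keeping a permanently low utilization threshold.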

The following code shows how to configure AHPA based on RPS:

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: autoscale-go
      namespace: default
    spec:
      template:
        metadata:
          labels:
            app: autoscale-go
          annotations:
            autoscaling.knative.dev/class: ahpa.autoscaling.knative.dev
            autoscaling.knative.dev/target: "10"
            autoscaling.knative.dev/metric: "rps"
            autoscaling.knative.dev/minScale: "1"
            autoscaling.knative.dev/maxScale: "30"
            autoscaling.alibabacloud.com/scaleStrategy: "observer"
        spec:
          containers:
            - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/autoscale-go:0.1

This article introduces KPA, the typical elasticity implementation in Knative, including how it achieves request-based automatic elasticity, scales down to 0, and deals with burst traffic. It also covers the extensions and enhancements to Knative's elasticity functions, such as reserved resource pools, precise elasticity, and elastic prediction capabilities.
