Recently, Alibaba Cloud's research on GPU pooling for multi-model serving was accepted at the prestigious ACM Symposium on Operating Systems Principles (SOSP 2025). The paper introduces Aegaeon, a multi-model hybrid serving system that significantly improves GPU resource utilization. The core technologies behind Aegaeon have already been deployed in Alibaba Cloud Model Studio.

SOSP, organized by ACM SIGOPS, is one of the top-tier conferences in computer systems. Often called the "Oscars of operating systems research," SOSP accepts only a few dozen papers each year, and those papers represent the most significant advances in operating systems and systems software. This year, SOSP accepted just 66 papers, and the integration of systems software with large AI models was a prominent trend among them.

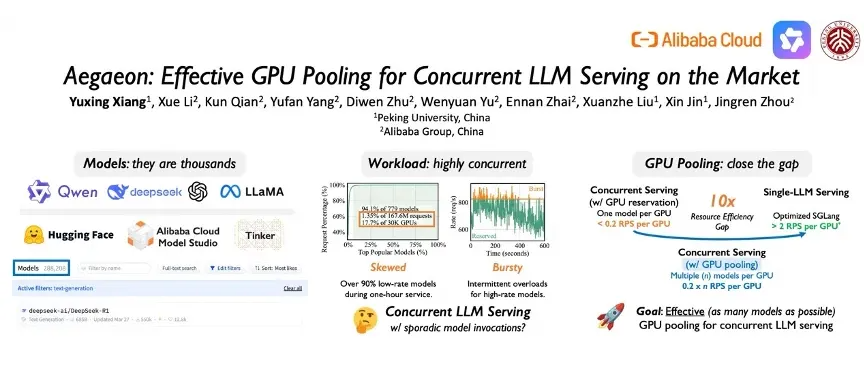

The number of AI models worldwide continues to grow: Hugging Face alone now hosts over one million models. In real-world deployments, however, a small subset of popular models receives most inference requests, while over 90% of models are used only infrequently. The current de facto approach reserves at least one inference instance per model, which leaves a large share of GPU resources underutilized.

In the paper, the research team presents Aegaeon, a multi-model hybrid serving system that schedules work at the token level, achieving significantly higher GPU utilization when many large language models (LLMs) are served concurrently.

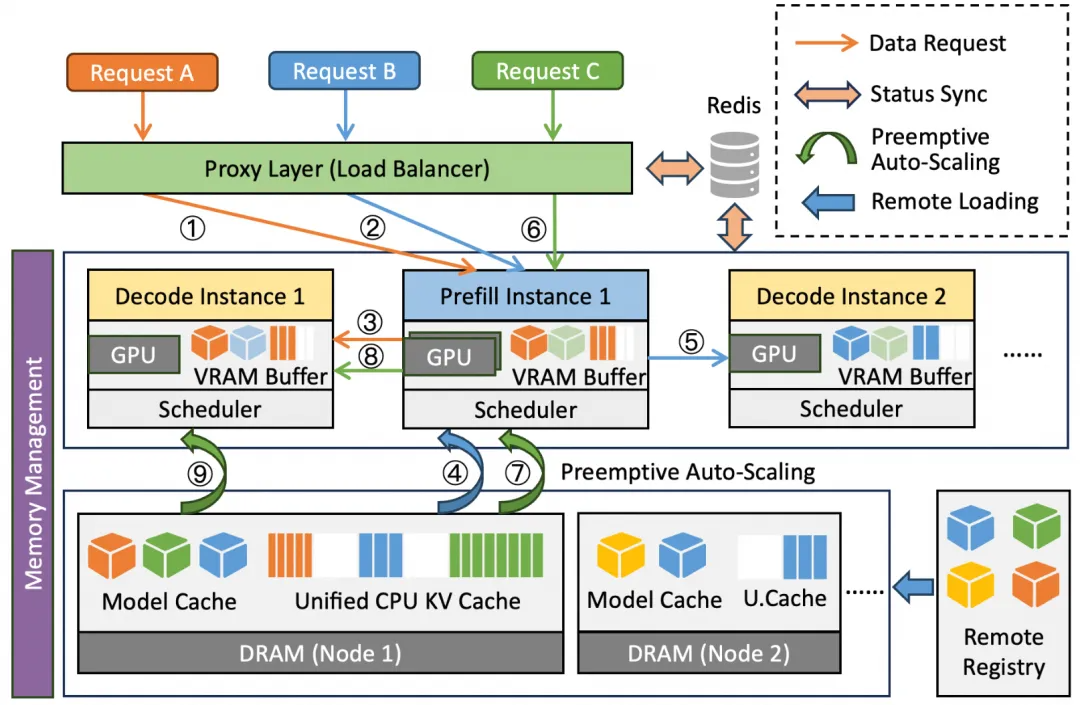

Aegaeon's serving architecture is designed around three key components: a proxy layer, a GPU pool, and a memory manager.

Image | Research framework

Proxy layer: This layer receives and dispatches inference requests, ensuring load balancing and fault tolerance. State is synchronized through a shared in-memory store such as Redis. Because Aegaeon can direct requests for different models to the same instance, it gains more flexibility in resource sharing and scheduling.
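
The dispatch behavior described above can be illustrated with a minimal Python sketch. It assumes a simple least-loaded policy and uses a plain in-process dictionary in place of Redis; the class and method names (`Proxy`, `dispatch`, `mark_unhealthy`) are hypothetical and are not Aegaeon's actual interfaces. The point it shows is that routing ignores the model name, so requests for different models can end up on the same instance.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class InstanceState:
    """Per-instance record the proxy keeps in shared state (hypothetical)."""
    instance_id: str
    healthy: bool = True
    active_requests: int = 0
    assigned: List[Tuple[str, str]] = field(default_factory=list)  # (model, request)


class Proxy:
    """Least-loaded, model-agnostic dispatcher; a dict stands in for Redis."""

    def __init__(self, instance_ids: List[str]):
        self.state: Dict[str, InstanceState] = {
            iid: InstanceState(iid) for iid in instance_ids
        }

    def mark_unhealthy(self, instance_id: str) -> None:
        # Fault tolerance: a failed instance receives no further requests.
        self.state[instance_id].healthy = False

    def dispatch(self, model: str, request_id: str) -> str:
        # Any healthy instance may take any model, so requests for
        # different models can be co-located on the same instance.
        candidates = [s for s in self.state.values() if s.healthy]
        if not candidates:
            raise RuntimeError("no healthy instance available")
        # Least-loaded first; ties broken by instance id for determinism.
        target = min(candidates, key=lambda s: (s.active_requests, s.instance_id))
        target.active_requests += 1
        target.assigned.append((model, request_id))
        return target.instance_id

    def complete(self, instance_id: str) -> None:
        # Called when a request finishes on an instance.
        self.state[instance_id].active_requests -= 1


proxy = Proxy(["gpu-0", "gpu-1"])
print(proxy.dispatch("model-a", "r1"))  # gpu-0
print(proxy.dispatch("model-b", "r2"))  # gpu-1
proxy.mark_unhealthy("gpu-1")
print(proxy.dispatch("model-c", "r3"))  # gpu-0, now serving model-a and model-c
```

A real proxy would also need retries and request migration when an instance fails mid-request; the sketch only stops routing new work to it.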

GPU pool: This is a centralized pool of virtualized GPU instances provided by cloud vendors. Each instance may consist of one or more GPUs hosted on a single physical machine. Within Aegaeon, each instance performs either prefill or decoding and, guided by a token-level scheduler, serves requests from multiple models concurrently. This makes model switching a critical operation.
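
To see why model switching becomes critical once instances are shared, the following sketch models one prefill instance and one decode instance that each serve whichever model the next request needs. The `GPUInstance` class and `serve` function are hypothetical, the KV cache handoff between the two phases is omitted, and switches are only counted rather than timed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GPUInstance:
    """Hypothetical pooled instance (one or more GPUs on one host) with one role."""
    name: str
    role: str                          # "prefill" or "decode"
    loaded_model: Optional[str] = None
    switches: int = 0                  # times a resident model was replaced

    def run(self, model: str) -> None:
        # Serving a model other than the resident one forces a switch;
        # the very first load is not counted as a switch.
        if self.loaded_model != model:
            if self.loaded_model is not None:
                self.switches += 1
            self.loaded_model = model


def serve(model: str, prefill: GPUInstance, decode: GPUInstance) -> None:
    """Run one request: prefill on one instance, then decode on another."""
    if prefill.role != "prefill" or decode.role != "decode":
        raise ValueError("instances must have prefill and decode roles")
    prefill.run(model)
    decode.run(model)  # KV cache transfer between instances omitted


p0 = GPUInstance("p0", "prefill")
d0 = GPUInstance("d0", "decode")
for m in ["model-a", "model-a", "model-b", "model-a"]:
    serve(m, p0, d0)
print(p0.switches, d0.switches)  # 2 2
```

Even this short, interleaved request stream forces two switches on each instance, which is why the cost of a single switch largely determines whether sharing pays off.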

Memory manager: This component coordinates host and GPU memory resources across nodes in the serving cluster through two main mechanisms:

1) QuickLoader caches model weights in available memory, significantly reducing the latency of loading models from remote repositories.

2) The GPU-CPU key-value (KV) management mechanism provides unified storage and management for KV caches.
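
The article does not specify QuickLoader's caching policy. As an illustration only, the sketch below assumes a least-recently-used (LRU) policy over a fixed host-memory budget, with a caller-supplied `fetch_remote` function standing in for the slow path to a remote model repository. `WeightCache` and all other names are hypothetical.

```python
from collections import OrderedDict
from typing import Callable


class WeightCache:
    """Host-memory cache of model weights with LRU eviction (assumed policy)."""

    def __init__(self, capacity_bytes: int, fetch_remote: Callable[[str], bytes]):
        self.capacity = capacity_bytes
        self.fetch_remote = fetch_remote   # hypothetical slow path to remote storage
        self.entries: "OrderedDict[str, bytes]" = OrderedDict()
        self.used = 0
        self.remote_fetches = 0

    def get(self, model: str) -> bytes:
        if model in self.entries:
            self.entries.move_to_end(model)    # mark as most recently used
            return self.entries[model]
        weights = self.fetch_remote(model)     # cache miss: slow remote load
        self.remote_fetches += 1
        if len(weights) > self.capacity:
            return weights                     # too large to cache at all
        # Evict least recently used models until the new weights fit.
        while self.used + len(weights) > self.capacity:
            _, evicted = self.entries.popitem(last=False)
            self.used -= len(evicted)
        self.entries[model] = weights
        self.used += len(weights)
        return weights


sizes = {"model-a": 4, "model-b": 4, "model-c": 4}   # toy sizes in bytes
cache = WeightCache(8, lambda name: bytes(sizes[name]))
for name in ["model-a", "model-b", "model-a", "model-c"]:
    cache.get(name)
print(list(cache.entries), cache.remote_fetches)  # ['model-a', 'model-c'] 3
```

Because `model-a` was touched again before `model-c` arrived, `model-b` is the one evicted, and a later request for `model-a` is served from host memory instead of the remote repository.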

Aegaeon pioneers token-level scheduling: after each generated token, it can decide whether to switch to a different model. By combining accurate execution-time prediction with a novel token-level scheduling algorithm, Aegaeon determines precisely when a switch is needed, so it can serve multiple models concurrently while still meeting latency requirements.
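
The exact scheduling algorithm is described in the paper and is not reproduced here. As a stand-in, the sketch below uses a simple lazy-switching rule: after every token, it stays on the resident model only if the most urgent other model could still switch in and produce its next token before its deadline. Step times are assumed to be predicted exactly, and all names, times (in milliseconds), and the time-between-tokens target `tbt_slo_ms` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ModelQueue:
    """Pending decode work for one model (hypothetical structure)."""
    name: str
    step_ms: int          # predicted time of one decode step (assumed accurate)
    next_deadline: int    # absolute time (ms) by which the next token is due
    remaining_tokens: int


def pick_next(queues: List[ModelQueue], current: Optional[str],
              clock: int, switch_ms: int) -> ModelQueue:
    ready = [q for q in queues if q.remaining_tokens > 0]
    cur = next((q for q in ready if q.name == current), None)
    others = [q for q in ready if q.name != current]
    if cur is None:
        # Nothing left for the resident model: switch to the most urgent one.
        return min(others, key=lambda q: q.next_deadline)
    if not others:
        return cur
    urgent = min(others, key=lambda q: q.next_deadline)
    # Stay for one more token only if the most urgent other model could
    # still switch in and emit its next token before its deadline.
    finish_if_stay = clock + cur.step_ms + switch_ms + urgent.step_ms
    return cur if finish_if_stay <= urgent.next_deadline else urgent


def simulate(queues: List[ModelQueue], switch_ms: int,
             tbt_slo_ms: int) -> Tuple[List[str], int]:
    clock, current, trace, misses = 0, None, [], 0
    while any(q.remaining_tokens > 0 for q in queues):
        q = pick_next(queues, current, clock, switch_ms)  # decided per token
        if q.name != current:
            clock += switch_ms                             # pay switch overhead
            current = q.name
        clock += q.step_ms
        misses += int(clock > q.next_deadline)
        q.remaining_tokens -= 1
        q.next_deadline = clock + tbt_slo_ms
        trace.append(q.name)
    return trace, misses


a = ModelQueue("A", step_ms=50, next_deadline=400, remaining_tokens=6)
b = ModelQueue("B", step_ms=50, next_deadline=480, remaining_tokens=2)
trace, misses = simulate([a, b], switch_ms=100, tbt_slo_ms=400)
print(trace, misses)  # ['A', 'A', 'A', 'A', 'B', 'B', 'A', 'A'] 0
```

With these hypothetical numbers, the scheduler runs four tokens of model A, switches to B just in time to meet B's deadline, and then returns to A, paying three switches (including the initial load) for eight tokens without missing a deadline.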

Furthermore, through component reuse, fine-grained GPU memory management, and optimized KV cache synchronization, Aegaeon reduces model switching overhead by up to 97%. The resulting sub-second switching latency makes real-time token-level scheduling practical.
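
A back-of-the-envelope model shows why cutting switching cost matters for token-level scheduling. If a model is swapped in once every k decode steps, the fraction of GPU time spent switching is s / (s + k·t), where s is the switch latency and t is the per-step time. The numbers below (a 10 s baseline switch, 50 ms steps, 20 tokens per turn) are hypothetical and chosen only for illustration, and the sketch ignores any overlap between switching and computation.

```python
def switch_overhead(switch_s: float, tokens_per_turn: int, step_s: float) -> float:
    """Fraction of time spent switching when a model is swapped in once per
    `tokens_per_turn` decode steps (simplified: no compute/switch overlap)."""
    busy_s = tokens_per_turn * step_s
    return switch_s / (switch_s + busy_s)


def reduce_cost(switch_s: float, reduction: float) -> float:
    """A reduction of 0.97 (97%) leaves 3% of the original switch latency."""
    return switch_s * (1.0 - reduction)


baseline_s = 10.0                      # hypothetical baseline switch latency
optimized_s = reduce_cost(baseline_s, 0.97)
print(round(optimized_s, 2))                             # 0.3
print(round(switch_overhead(baseline_s, 20, 0.05), 3))   # 0.909
print(round(switch_overhead(optimized_s, 20, 0.05), 3))  # 0.231
```

Under these assumed numbers, switching overhead drops from about 91% of GPU time to about 23%.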

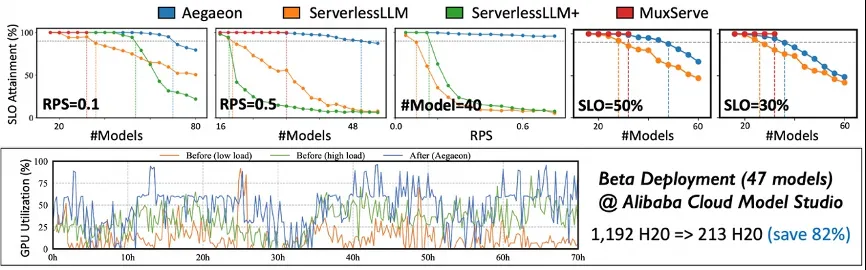

Aegaeon supports concurrent serving of up to seven different models on a single GPU, achieving 1.5× to 9× higher effective throughput and 2× to 2.5× greater request handling capacity compared to existing mainstream systems.

Image | Aegaeon significantly boosts GPU utilization

Aegaeon's core technologies have been deployed in Alibaba Cloud Model Studio, supporting inference for dozens of models while reducing GPU consumption by 82%. To date, Alibaba Cloud Model Studio has launched more than 200 industry-leading models, including Qwen, Wan, and DeepSeek, and has seen a fifteen-fold increase in model invocations over the past year.
