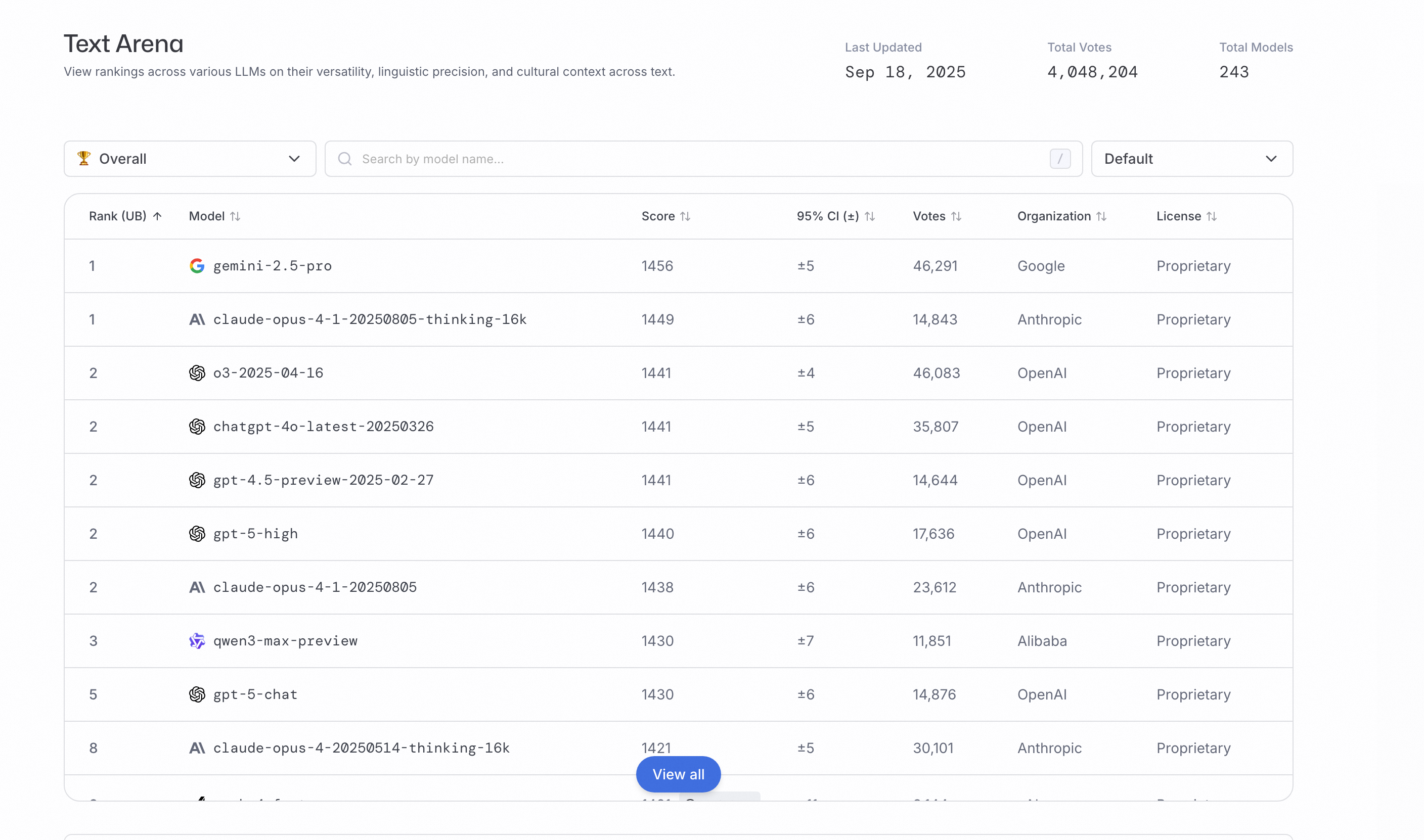

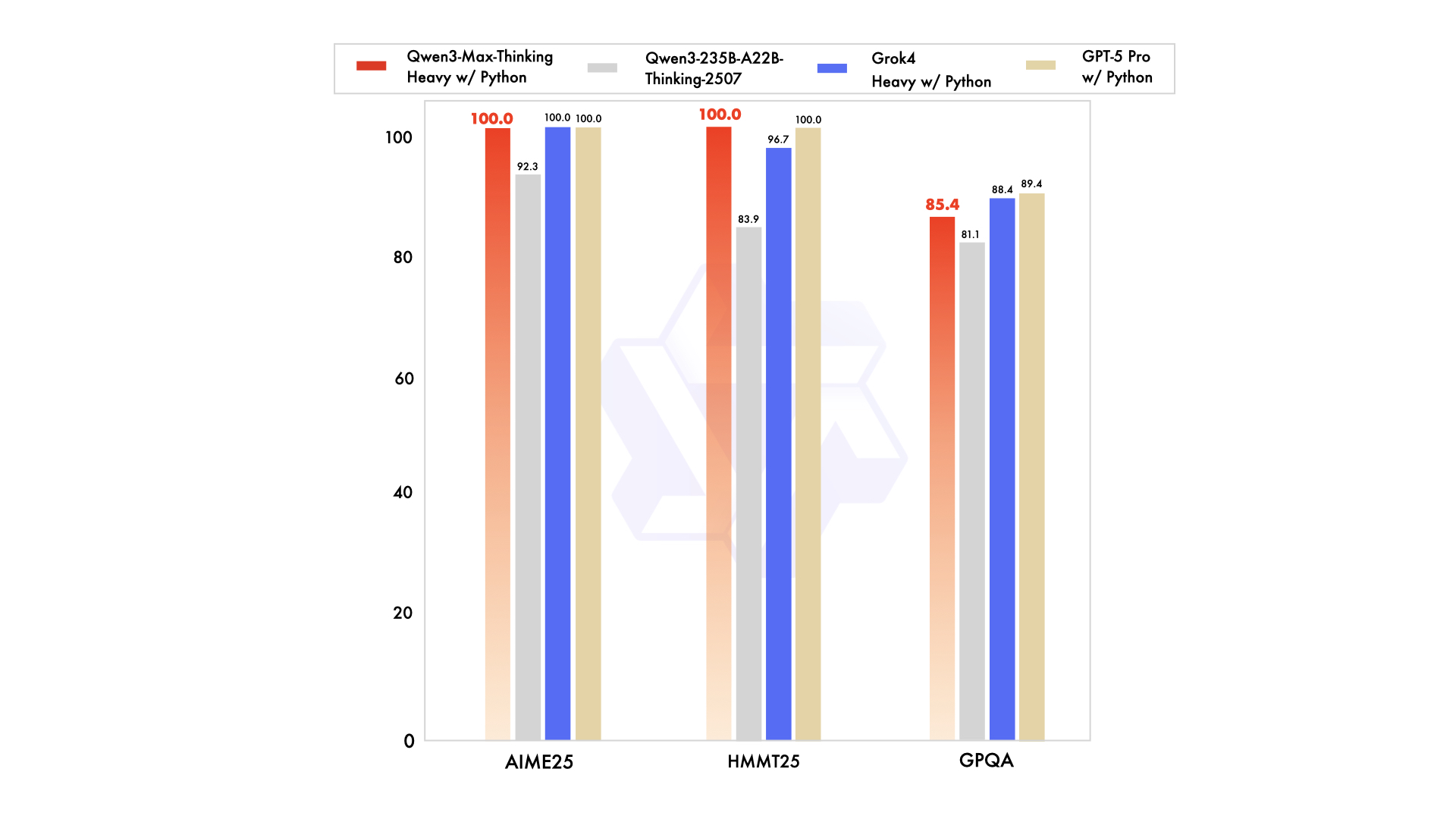

Following the release of the Qwen3-2507 series, we are thrilled to introduce Qwen3-Max, our largest and most capable model to date. The preview version of Qwen3-Max-Instruct currently ranks third on the Text Arena leaderboard, ahead of GPT-5-Chat. The official release further improves coding and agent capabilities and achieves state-of-the-art results across a comprehensive suite of benchmarks covering knowledge, reasoning, coding, instruction following, human preference alignment, agent tasks, and multilingual understanding. We invite you to try Qwen3-Max-Instruct via its API on Alibaba Cloud or explore it directly on Qwen Chat. Meanwhile, Qwen3-Max-Thinking, which is still under active training, is already showing remarkable potential: when augmented with tool use and scaled test-time compute, it has achieved 100% on challenging reasoning benchmarks such as AIME 25 and HMMT. We look forward to releasing it publicly in the near future.

The Qwen3-Max model has over 1 trillion parameters and was pretrained on 36 trillion tokens. Its architecture follows the design paradigm of the Qwen3 series, incorporating our proposed global-batch load balancing loss.
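The post names the global-batch load balancing loss without spelling it out. The sketch below is a simplified, pure-Python illustration, assuming the common Switch-Transformer-style form L = N_E · Σ f_i · P_i, where f_i is the fraction of routed assignments sent to expert i and P_i is the mean gate probability for expert i. The idea it shows is that computing these statistics over the whole global batch, instead of inside each micro-batch, stops a micro-batch dominated by one domain from being penalized for routing most of its tokens to the same experts. All function names are hypothetical, and for simplicity this sketch pools both f and P; it is not Qwen's training code.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def routing_stats(tokens, top_k):
    """Per-expert routed-token counts and summed gate probabilities.

    `tokens` is a list of router-logit vectors, one per token.
    """
    n_experts = len(tokens[0])
    counts = [0] * n_experts
    prob_sums = [0.0] * n_experts
    for logits in tokens:
        probs = softmax(logits)
        chosen = sorted(range(n_experts), key=lambda i: probs[i], reverse=True)[:top_k]
        for i in chosen:
            counts[i] += 1
        for i, p in enumerate(probs):
            prob_sums[i] += p
    return counts, prob_sums, len(tokens)


def lbl(counts, prob_sums, n_tokens, top_k):
    """Switch-style load-balancing loss: N_E * sum_i f_i * P_i."""
    n_experts = len(counts)
    f = [c / (n_tokens * top_k) for c in counts]  # fraction of routed assignments
    p = [s / n_tokens for s in prob_sums]  # mean gate probability
    return n_experts * sum(fi * pi for fi, pi in zip(f, p))


def micro_batch_lbl(micro_batches, top_k=1):
    """Balance enforced inside every micro-batch, then averaged."""
    losses = [lbl(*routing_stats(mb, top_k), top_k) for mb in micro_batches]
    return sum(losses) / len(losses)


def global_batch_lbl(micro_batches, top_k=1):
    """Routing statistics pooled over the whole global batch first.

    In a real data-parallel run, this pooling would be an all-reduce across
    ranks and gradient-accumulation steps.
    """
    n_experts = len(micro_batches[0][0])
    counts, prob_sums, n_tokens = [0] * n_experts, [0.0] * n_experts, 0
    for mb in micro_batches:
        c, s, n = routing_stats(mb, top_k)
        counts = [a + b for a, b in zip(counts, c)]
        prob_sums = [a + b for a, b in zip(prob_sums, s)]
        n_tokens += n
    return lbl(counts, prob_sums, n_tokens, top_k)
```

For example, if micro-batch A (one domain) always prefers expert 0 via logits `[2.0, 0.0]` and micro-batch B (another domain) always prefers expert 1 via `[0.0, 2.0]`, `micro_batch_lbl` reports about 1.76 because each micro-batch looks imbalanced, while `global_batch_lbl` reports 1.0 because the experts are evenly used across the global batch.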

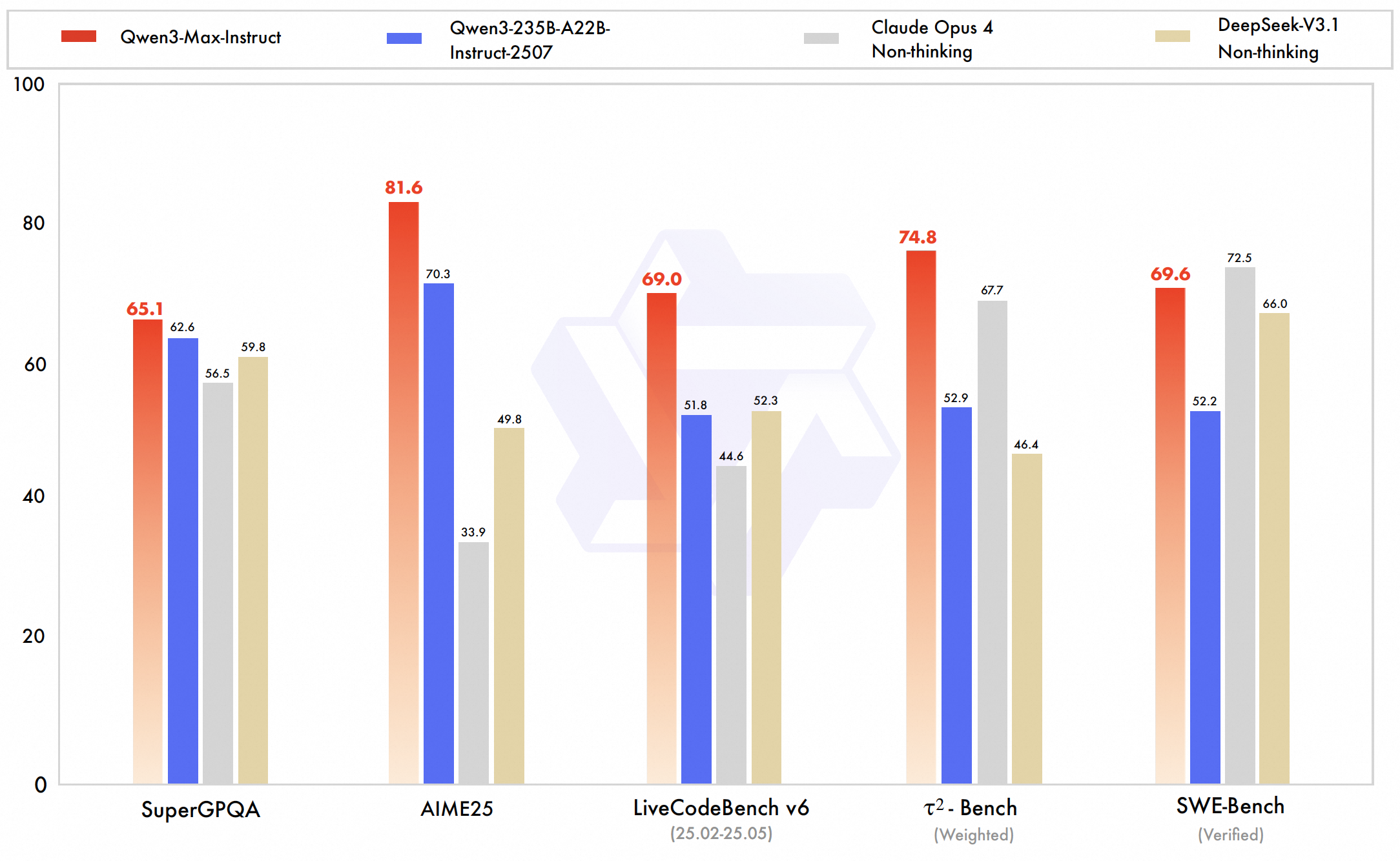

The preview version of Qwen3-Max-Instruct has secured a top-three global ranking on the LMArena text leaderboard. The official release further improves its capabilities, particularly in coding and agent tasks. On SWE-Bench Verified, a benchmark focused on solving real-world coding challenges, Qwen3-Max-Instruct scores 69.6, placing it among the world's top-performing models. On Tau2-Bench, a rigorous evaluation of agent tool-calling proficiency, it scores 74.8, surpassing both Claude Opus 4 and DeepSeek V3.1.

The reasoning variant of Qwen3-Max, named Qwen3-Max-Thinking, is demonstrating extraordinary performance. By integrating a code interpreter and leveraging parallel test-time compute, it reaches unprecedented reasoning capability, most notably perfect 100-point scores on the challenging mathematical reasoning benchmarks AIME 25 and HMMT. We are still training Qwen3-Max-Thinking intensively and look forward to delivering it to you soon.
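The post does not say how Qwen3-Max-Thinking combines its parallel attempts. One widely used form of parallel test-time compute is self-consistency: sample several independent solutions concurrently and return the most frequent final answer. The sketch below illustrates only that voting idea. `noisy_solver` is a hypothetical stand-in for one sampled reasoning path (in a real system, one model call, possibly using a code interpreter), and nothing here reflects Qwen's actual pipeline.

```python
import random
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def noisy_solver(seed):
    """Hypothetical stand-in for one sampled reasoning path.

    Returns the correct answer (42) about 60% of the time and a random
    integer otherwise. A real system would call the model here instead.
    """
    rng = random.Random(seed)
    return 42 if rng.random() < 0.6 else rng.randint(0, 99)


def majority_vote(answers):
    """Return the most common answer and how many samples agreed on it."""
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes


def parallel_answer(solver, n_samples, max_workers=8):
    """Run `solver` on n_samples independent seeds concurrently, then vote."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        answers = list(pool.map(solver, range(n_samples)))
    return majority_vote(answers)
```

For example, `parallel_answer(noisy_solver, 32)` should usually return 42 with a large vote count, even though any single call is wrong roughly 40% of the time. The trade-off is explicit: more samples cost more compute but make the final answer more reliable.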

Qwen3-Max-Instruct is now available in Qwen Chat, where you can chat with the model directly. Its API is also available under the model name `qwen3-max`. To use it, first register an Alibaba Cloud account and activate the Alibaba Cloud Model Studio service, then go to the console and create an API key.
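Because the endpoint follows the OpenAI chat-completions convention, an SDK call boils down to a JSON POST to `<base_url>/chat/completions` with the API key sent as a Bearer token. For readers who prefer to avoid a third-party dependency, here is a minimal standard-library sketch under that assumption. The helper names `build_chat_request` and `send` are hypothetical; the base URL and model name are the ones used in the SDK example in this post.

```python
import json
import urllib.request

# Base URL taken from the SDK example; the /chat/completions path follows
# the OpenAI-compatible convention (assumption for this sketch).
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"


def build_chat_request(api_key, prompt, model="qwen3-max"):
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Usage: `send(build_chat_request(os.environ["API_KEY"], "Give me a short introduction to large language models."))`. Building the request separately from sending it lets you inspect the payload without making a network call.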

Since the Qwen APIs are compatible with the OpenAI API, you can call them the same way you would call OpenAI's APIs, for example with the `openai` Python SDK. Below is an example of using Qwen3-Max-Instruct in Python:

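```python
import os

from openai import OpenAI

# Read the Model Studio API key from the API_KEY environment variable.
client = OpenAI(
    api_key=os.getenv("API_KEY"),
    # OpenAI-compatible endpoint of Alibaba Cloud Model Studio.
    base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
)

completion = client.chat.completions.create(
    model="qwen3-max",
    messages=[
        {"role": "user", "content": "Give me a short introduction to large language models."}
    ],
)

# Print the full message object; use .content to get only the reply text.
print(completion.choices[0].message)
```

Feel free to cite the following article if you find Qwen3-Max helpful.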

```bibtex
@misc{qwen3max,
  title = {Qwen3-Max: Just Scale it},
  author = {Qwen Team},
  month = {September},
  year = {2025}
}
```

Original source: https://qwen.ai/blog?id=241398b9cd6353de490b0f82806c7848c5d2777d&from=research.latest-advancements-list
