By Linbo He (Technical Expert at Alibaba Cloud), Shaoqiang Chen (Senior Software Architect at Intel), and Lin Yu (Senior Software Engineer at Intel)

OpenYurt v1.2.0 was released on January 30, 2023. It implements several of the features most requested by the community. OpenYurt's distinctive features include Kubernetes non-intrusion, cloud-edge-terminal collaboration, programmable resource access control, and declarative cloud-native device management.

v1.2 focuses on node pool governance and aims to strengthen the capabilities that set OpenYurt apart. Its main features are lower cloud-edge communication traffic costs, enhanced edge autonomy, and smoother cross-region communication.

In cloud-edge collaboration scenarios, edge nodes connect to the cloud over the public network. When many business Pods and microservice systems are deployed in the cluster (introducing a large number of Services and EndpointSlices), communication between the edge nodes and the cloud consumes a lot of bandwidth, which drives up the user's public network traffic costs.

The community has long wanted a way to reduce cloud-edge communication traffic. How can this be achieved without intruding on or modifying Kubernetes? One option is to add a sync component to the node pool that synchronizes cloud data in real time and then distributes it to each component in the pool. However, this approach faces considerable challenges. First, data access requests are initiated by the edge toward the cloud, so how can the sync component intercept these requests and distribute data? Second, if the sync component fails, requests from the edge are interrupted, and ensuring the high availability of the sync component is quite difficult.

The OpenYurt community pioneered a cloud-edge traffic reuse mechanism based on pool-coordinator+YurtHub. This mechanism integrates seamlessly with the cloud-edge communication links of native Kubernetes, keeps those links highly available through YurtHub Leader election, and reduces the cost of cloud-edge communication.
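The text does not spell out how YurtHub Leader election works. As a minimal sketch, assuming a lease-style record (similar in spirit to a Kubernetes Lease) that every YurtHub in the pool can read and update, election reduces to "take the lease if it is free, expired, or already yours". `Lease`, `try_acquire_or_renew`, and the 15-second duration are hypothetical illustrations, not OpenYurt code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """Hypothetical shared lease record that all YurtHubs in a pool can read and write."""
    holder: Optional[str] = None
    renew_time: float = 0.0
    duration: float = 15.0  # seconds; illustrative value only


def try_acquire_or_renew(lease: Lease, candidate: str, now: float) -> bool:
    """Return True if `candidate` holds the lease (is the leader) after this attempt."""
    expired = lease.holder is None or now - lease.renew_time > lease.duration
    if lease.holder == candidate or expired:
        # Free, expired, or already ours: take or renew the lease.
        # A real implementation must do this check-and-update atomically.
        lease.holder = candidate
        lease.renew_time = now
        return True
    # Another YurtHub holds a live lease: stay a follower.
    return False


lease = Lease()
print(try_acquire_or_renew(lease, "hub-a", now=0.0))   # True: hub-a becomes leader
print(try_acquire_or_renew(lease, "hub-b", now=5.0))   # False: hub-b stays follower
print(try_acquire_or_renew(lease, "hub-b", now=20.0))  # True: hub-a stopped renewing
```

If the leader stops renewing (for example, because its node fails), another YurtHub takes over once the lease expires, which is what keeps the shared link available. A production version would make the read-check-write step atomic, for example with a compare-and-swap on the stored record; this sketch omits that.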

In a node pool, the data that nodes obtain from the cloud can be divided into two types:

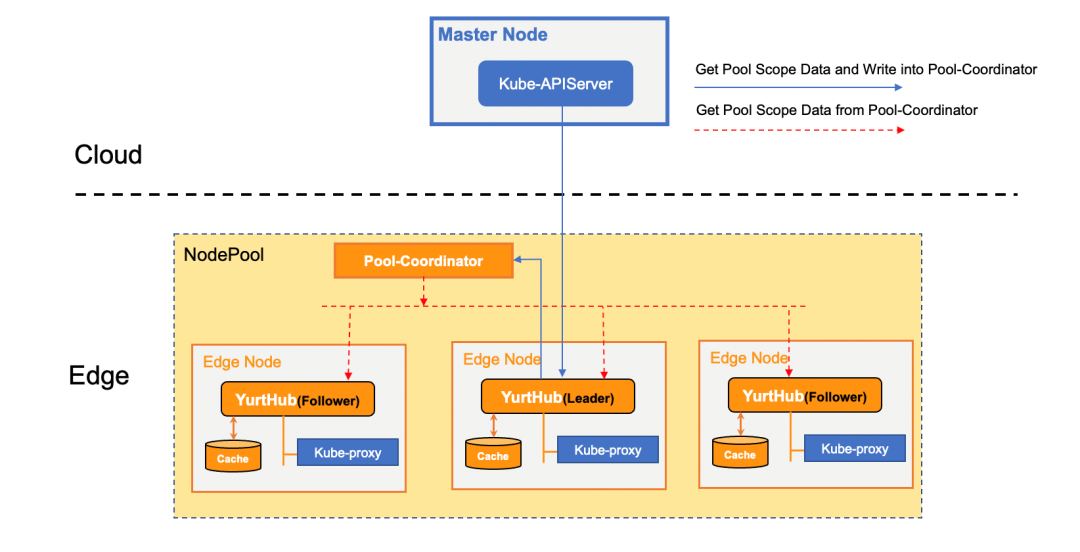

Testing [1] showed that the data consuming most of the cloud-edge communication bandwidth is pool scope data. OpenYurt v1.2 significantly reduces the traffic cost of cloud-edge communication by reusing pool scope data within the node pool, as shown in the following figure:

Through the cooperation between Pool-Coordinator and YurtHub, pool scope data crosses the cloud-edge link only once per node pool instead of once per node. This significantly reduces the volume of cloud-edge communication data and lowers the peak of cloud outbound traffic by 90% compared with native Kubernetes.
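A back-of-the-envelope model shows why reusing pool scope data pays off as the pool grows. The sketch below assumes each node needs its own node scope data, while pool scope data (assumed here to include the Services and EndpointSlices mentioned earlier) is identical for every node. Without reuse every node pulls its own copy; with reuse the data crosses the cloud-edge link once per pool. The function name and all numbers are hypothetical and are not results from the test [1].

```python
def cloud_egress(nodes: int, node_scope_per_node: float, pool_scope: float, reuse: bool) -> float:
    """Data sent from the cloud to one node pool in a simplified model.

    Node scope data differs per node, so every node always fetches its own.
    Pool scope data is identical across the pool: without reuse each node
    fetches a copy; with reuse it crosses the cloud-edge link only once.
    """
    pool_copies = 1 if reuse else nodes
    return nodes * node_scope_per_node + pool_copies * pool_scope


# Hypothetical numbers: 100 nodes, 1 unit of node scope data each, 50 units of pool scope data.
native = cloud_egress(100, 1.0, 50.0, reuse=False)  # 5100.0
pooled = cloud_egress(100, 1.0, 50.0, reuse=True)   # 150.0
print(f"reduction: {1 - pooled / native:.1%}")
```

The actual saving depends on how large pool scope data is relative to node scope data and on the number of nodes in the pool; the model only shows that the pool scope term stops scaling with node count.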

This also brings a useful capability: through traffic reuse of EndpointSlices, edge nodes can still perceive the service topology of the cluster in real time even when they are disconnected from the cloud network.

Edge autonomy ensures that edge businesses can keep providing services without interruption in cloud-edge collaboration scenarios. It includes data caching on the edge side, so that services can recover when a node restarts while the cloud-edge network is disconnected. It also includes an enhanced Pod eviction policy on the control side (cloud controller).
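The edge-side caching described above can be sketched as a read-through cache that serves the last known copy when the cloud is unreachable. This is a minimal illustration, assuming one JSON file per object and a `ConnectionError` to signal a disconnected cloud; `EdgeCache` and `fetch_from_cloud` are hypothetical names, not YurtHub's actual cache implementation.

```python
import json
from pathlib import Path
from typing import Callable


class EdgeCache:
    """Hypothetical read-through cache kept on the edge node's local disk."""

    def __init__(self, root: Path):
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def _path(self, key: str) -> Path:
        # Flatten a resource key such as "pods/web-0" into a file name.
        return self.root / (key.replace("/", "_") + ".json")

    def get(self, key: str, fetch_from_cloud: Callable[[str], dict]) -> dict:
        try:
            obj = fetch_from_cloud(key)
        except ConnectionError:
            # Cloud unreachable (e.g. after a restart during disconnection):
            # serve the last cached copy. Raises FileNotFoundError if none exists.
            return json.loads(self._path(key).read_text())
        # Cloud reachable: refresh the local copy before returning.
        self._path(key).write_text(json.dumps(obj))
        return obj
```

Because the cache lives on disk, a new `EdgeCache` pointed at the same directory after a node restart can still answer requests while the cloud stays unreachable.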

In native Kubernetes, if an edge node does not report its heartbeat for a certain period, the cloud controller evicts the Pods on the node (deletes them and rebuilds them on a healthy node).
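The native behavior is driven purely by heartbeat age, as the simplified sketch below illustrates. It assumes common defaults: the node controller treats a node as unreachable after `--node-monitor-grace-period` (40 seconds in many releases), and Pods by default tolerate the resulting unreachable taint for 300 seconds before they are evicted. `native_pod_action` is a hypothetical helper; both values are configurable and vary by Kubernetes version.

```python
# Illustrative values; both are configurable and defaults vary by Kubernetes version.
NODE_MONITOR_GRACE_PERIOD = 40.0    # seconds without a heartbeat before the node is unreachable
DEFAULT_TOLERATION_SECONDS = 300.0  # how long Pods tolerate the unreachable taint


def native_pod_action(seconds_since_heartbeat: float) -> str:
    """Simplified native outcome for Pods on a node, based only on heartbeat age."""
    if seconds_since_heartbeat <= NODE_MONITOR_GRACE_PERIOD:
        return "keep"
    if seconds_since_heartbeat <= NODE_MONITOR_GRACE_PERIOD + DEFAULT_TOLERATION_SECONDS:
        return "node-unreachable"  # node tainted, Pods still running
    return "evict"  # Pods deleted and rebuilt on a healthy node


print(native_pod_action(400.0))  # evict, whether the node crashed or only lost its link
```

Nothing in this decision distinguishes a broken cloud-edge link from a failed node, which is the gap edge businesses care about.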

Edge businesses have different requirements in cloud-edge collaboration scenarios. When the heartbeat cannot be reported because the cloud-edge network is disconnected (while the node itself is healthy), the Pods should stay in place with no eviction; Pods should be migrated and rebuilt only when the node itself fails.

Compared with the complex public network between the cloud and the edge, the network between nodes in the same node pool is usually in good condition, because they sit on the same local area network. A common solution in the industry is to have edge nodes probe each other over the network, forming a distributed node health detection mechanism. However, this mutual probing generates a large amount of east-west traffic and consumes some computing resources on every node, and detection becomes harder to sustain as the node pool grows.
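A rough message count shows how the two approaches scale. The sketch assumes a full-mesh scheme in which every node probes every other node each round, versus a centralized scheme in which each node reports once per round to a single point in the pool. Real distributed detectors may probe only a subset of peers, so treat these figures as reference points rather than measurements. Both function names are hypothetical.

```python
def mutual_probe_count(nodes: int) -> int:
    """Probes per round if every node probes every other node (full mesh)."""
    return nodes * (nodes - 1)


def centralized_report_count(nodes: int) -> int:
    """Messages per round if each node reports once to a single point in the pool."""
    return nodes


for n in (10, 100, 500):
    print(n, mutual_probe_count(n), centralized_report_count(n))
```

For 100 nodes this is 9900 probes versus 100 reports per round: full-mesh probing grows quadratically with pool size, while centralized reporting grows linearly.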

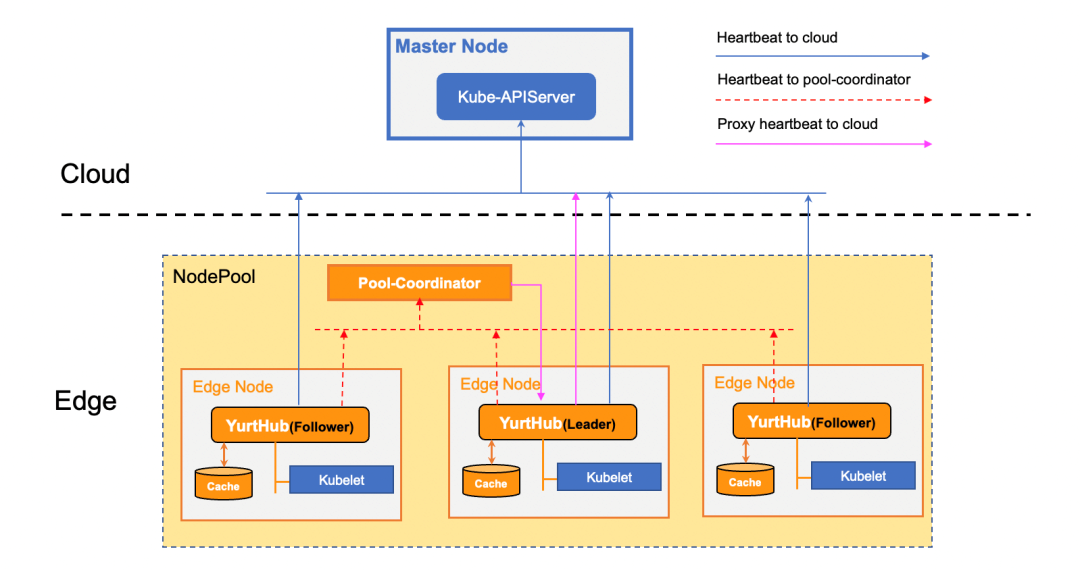

OpenYurt v1.2 provides a centralized heartbeat proxy mechanism based on pool-coordinator+YurtHub. This mechanism reduces east-west traffic within node pools and the overall computing requirements, and keeps Pods in place when the cloud-edge network is disconnected, as shown in the following figure:

The heartbeat proxy mechanism, implemented jointly by Pool-Coordinator and YurtHub, ensures that a node's heartbeat continues to be reported to the cloud even when that node's cloud-edge network is disconnected, so the business Pods on the node are not evicted. At the same time, any node whose heartbeat is being reported by the proxy (the Leader YurtHub) is given a special taint in real time to prevent new Pods from being scheduled onto it.
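One round of the heartbeat proxy can be sketched as follows. This assumes the Leader YurtHub (which itself still reaches the cloud) can see, through the Pool-Coordinator, each node's latest heartbeat time and whether that node can reach the cloud directly. It proxies heartbeats only for nodes that are alive but cut off, which keeps their Pods from being evicted, and taints those nodes against new scheduling. A node that stops heartbeating entirely is not proxied, so normal eviction still applies to a genuinely failed node. `Node`, `leader_round`, the 10-second freshness window, and the `PROXY_TAINT` key are hypothetical; OpenYurt's real taint key and data paths may differ.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Hypothetical taint key; OpenYurt's actual key may differ.
PROXY_TAINT = "example.openyurt.io/heartbeat-proxied:NoSchedule"


@dataclass
class Node:
    name: str
    cloud_reachable: bool  # can this node's own YurtHub reach the cloud?
    taints: Set[str] = field(default_factory=set)


def leader_round(pool_heartbeats: Dict[str, float], nodes: Dict[str, Node],
                 now: float, fresh: float = 10.0) -> List[str]:
    """One round on the Leader YurtHub; returns the nodes whose heartbeats it proxied."""
    proxied = []
    for name, node in nodes.items():
        # Alive = reported a heartbeat to the pool recently.
        alive = now - pool_heartbeats.get(name, float("-inf")) <= fresh
        if alive and not node.cloud_reachable:
            proxied.append(name)          # report this node's heartbeat to the cloud
            node.taints.add(PROXY_TAINT)  # keep new Pods off the node
        else:
            # Reconnected nodes report for themselves; dead nodes are not proxied,
            # so native eviction still applies to them.
            node.taints.discard(PROXY_TAINT)
    return proxied
```

This combines the two requirements stated earlier: Pods stay put during a network disconnection, and Pods are still rebuilt elsewhere when the node itself fails.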

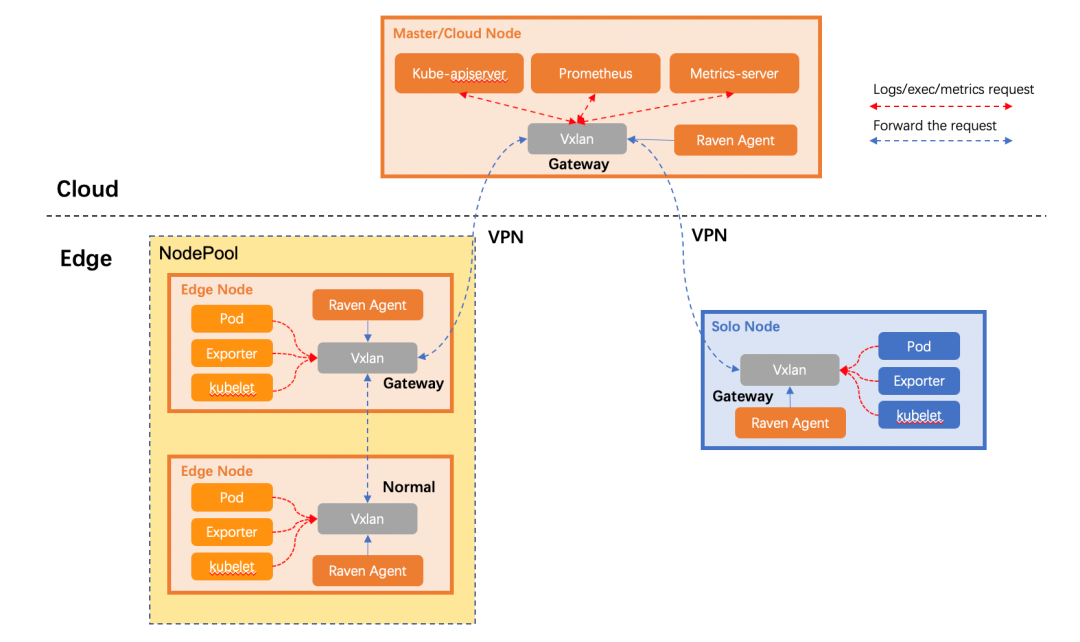

The Raven project of the OpenYurt community has evolved to v0.3. It mainly provides layer-3 cross-region communication. Compared with Yurt-Tunnel, which only supports layer-7 traffic forwarding from the cloud to the edge, Raven provides more general cross-region communication (cloud-edge interconnection and edge-to-edge interconnection), as shown in the following figure:

Raven is seamlessly compatible with native CNI solutions, and cross-region traffic forwarding is transparent to applications. As a result, in both cloud-edge and edge-to-edge cross-region communication, applications in OpenYurt can access each other with the same experience as in native Kubernetes. Starting from OpenYurt v1.2, we recommend using Raven instead of Yurt-Tunnel.
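From an application's perspective only Pod IPs matter, which is why the forwarding can stay transparent. The sketch below illustrates a simplified next-hop choice, assuming each region is a node pool with a known Pod CIDR and one gateway node: traffic to the local region uses the native CNI path, and traffic to another region is tunneled between gateways. `next_hop`, the CIDRs, and the gateway names are hypothetical; Raven's actual routing is configured at the network layer rather than decided in application code.

```python
import ipaddress
from typing import Dict


def next_hop(src_pool: str, dst_ip: str,
             pool_cidrs: Dict[str, str], gateways: Dict[str, str]) -> str:
    """Pick a path for traffic from a Pod in `src_pool` to the Pod IP `dst_ip`."""
    dst = ipaddress.ip_address(dst_ip)
    for pool, cidr in pool_cidrs.items():
        if dst in ipaddress.ip_network(cidr):
            if pool == src_pool:
                return "cni"  # same region: plain native CNI path
            # Different region: go via the local gateway, tunnel to the remote one.
            return f"tunnel:{gateways[src_pool]}->{gateways[pool]}"
    return "cni"  # not a known Pod CIDR: leave it to the default path


cidrs = {"cloud": "10.244.0.0/24", "edge-a": "10.244.1.0/24"}
gws = {"cloud": "gw-cloud", "edge-a": "gw-edge-a"}
print(next_hop("edge-a", "10.244.0.9", cidrs, gws))  # tunnel:gw-edge-a->gw-cloud
```

The application simply connects to a Pod IP in either case; only the path underneath differs.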

Development of OpenYurt v1.3 is in progress. If you are interested, you are welcome to join the community in building what could become the de facto standard for a stable, reliable, and non-intrusive cloud-native edge computing platform.
