CNCF OpenYurt, an intelligent open-source platform for cloud-native edge computing, recently released version 1.2. OpenYurt is the industry's first non-invasive edge computing platform built on cloud-native technology, and it integrates cloud, edge, and device capabilities. It enables efficient delivery, O&M, and management of large-scale edge computing services and heterogeneous computing power.

In version 1.2, OpenYurt builds on the node pool governance concept proposed by the community and introduces the Pool-Coordinator component, which provides a set of edge governance solutions for cloud-edge scenarios covering resources, networks, applications, and monitoring. This article focuses on how Pool-Coordinator optimizes the network in cloud-edge scenarios.

The community proposed the concept of node pool governance as early as the beginning of 2022. A node pool is a concept specific to the OpenYurt ecosystem: it represents a group of edge machine nodes in a cluster that can communicate with each other. In cloud-edge collaboration scenarios, edge IoT devices and computing power often depend on several machine nodes working together. The node pool gives OpenYurt a new level of granularity for controlling edge computing power. Node pool governance focuses on the resources, network, lifecycle, and observability metrics of edge machines, and it offers an edge-side perspective on how to manage them.

Pool-Coordinator is the community's first implementation of this concept. It provides highly available edge governance for cloud-edge scenarios across resources, networks, applications, and monitoring.

The way basic edge components depend on the cloud-edge network pushes OpenYurt to offer developers a better-optimized solution. Three factors are involved.

The first factor is the available cloud-edge network bandwidth. In cloud-native edge computing scenarios, this bandwidth is often constrained by machine resources, physical distance, and cost. As a result, some vendors and users have low bandwidth, and node pools in certain environments have extremely low bandwidth.

The second factor is the volume of cluster resource data. Edge clusters often span many regions, machine nodes, and physical devices. Compared with ordinary clusters, they cover a wider area at a larger scale, so the cluster holds more resources overall. Some basic cluster components (such as proxy, CNI, and DNS components, and even some business components such as device drivers and edge infrastructure middleware) use the Kubernetes List/Watch mechanism to pull the full set of resources at startup and then watch for changes. This process demands good network conditions.

The third factor is the importance of basic edge components. When a new node joins an edge cluster, the initialization program brings up a series of OpenYurt basic edge components and configurations. Business programs are scheduled to the node only after initialization succeeds. If a basic edge component fails to pull the resources it depends on because of bandwidth problems, the business applications on the edge machine cannot start, and the expansion of edge computing power fails. Likewise, if a running basic edge component repeatedly fails to pull resources, its network, proxy, and storage state may fall out of sync with the central components, which puts the business at risk.

Pool-Coordinator provides an edge-side cache for Pool-Scope resources. This relieves the cloud-edge bandwidth pressure caused by a large number of list/watch requests for these resources.

In the deployment phase, developers install the Pool-Coordinator component into the cluster through its Chart package. The installation uses the YurtAppDaemon resource of the OpenYurt ecosystem to deploy the component to every edge node pool, with one instance per node pool.

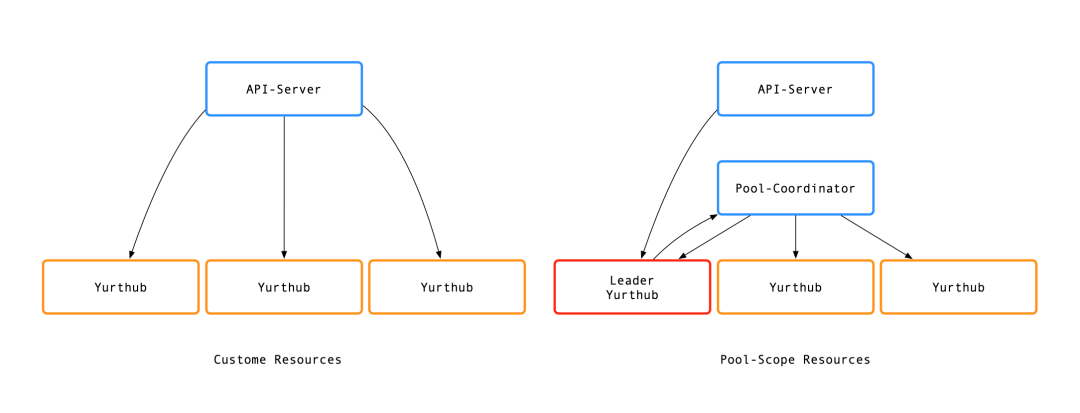

After the Pool-Coordinator instance starts, the Leader YurtHub, chosen through the leader election mechanism, pulls Pool-Scope resources (such as endpoints and endpointslices) to the edge, synchronizes them to Pool-Coordinator, and caches them there for use by all nodes in the node pool.

The YurtHub instance on every node in the node pool proxies read requests for Pool-Scope resources to Pool-Coordinator. In theory, a node pool then needs only one persistent cloud-edge connection per resource type, because Pool-Coordinator serves all read requests for that resource inside the node pool. This reduces cloud-edge network consumption. Pool-Coordinator absorbs the burst of requests caused by the startup or rebuild of basic edge containers and reduces the volume of resources delivered from cloud to edge at runtime, which matters most in weak network scenarios where bandwidth is scarce.
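The saving can be made concrete with a back-of-the-envelope model. The sketch below assumes, for illustration only, that every node would otherwise hold one long-lived watch per Pool-Scope resource; the function name and the node count are hypothetical, and real traffic depends on how many components on each node issue list/watch requests.

```python
def cloud_edge_watches(nodes_in_pool: int, pool_scope_resources: int,
                       use_coordinator: bool) -> int:
    """Estimate long-lived cloud-edge watch connections for one node pool.

    Illustrative model only: assumes one watch per resource per node when
    every node talks to the cloud directly.
    """
    if use_coordinator:
        # Only the Leader YurtHub keeps one cloud-edge watch per resource.
        return pool_scope_resources
    return nodes_in_pool * pool_scope_resources


# Hypothetical pool: 100 nodes, the two default Pool-Scope resources.
direct = cloud_edge_watches(100, 2, use_coordinator=False)
pooled = cloud_edge_watches(100, 2, use_coordinator=True)
print(direct, pooled)
```

Under these assumptions the model gives 200 connections without Pool-Coordinator and 2 with it.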

By default, Pool-Scope resources consist of two resource types: endpoints and endpointslices. These two types account for a relatively high share of YurtHub's cloud-edge traffic, and the effect is more pronounced in large-scale clusters. Pool-Scope resources must also be common to the whole node pool, meaning their content does not depend on which node the caller belongs to; both default types satisfy this condition. Users can extend the Pool-Scope set with other resources (such as Pods, Nodes, and custom resources) to optimize network usage in scenarios dominated by a specific resource type.
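The routing rule described so far can be sketched as a small decision function. This is a minimal illustration, not YurtHub's actual code: the function name, the string labels for the two backends, and the `coordinator_ready` flag are all assumptions made for the example.

```python
# Default Pool-Scope set named in the article; users may extend it.
DEFAULT_POOL_SCOPE = frozenset({"endpoints", "endpointslices"})
READ_VERBS = frozenset({"get", "list", "watch"})


def choose_backend(verb: str, resource: str, coordinator_ready: bool,
                   pool_scope: frozenset = DEFAULT_POOL_SCOPE) -> str:
    """Pick where a request is proxied (hypothetical helper).

    Only reads of Pool-Scope resources go to the edge cache, and only
    while Pool-Coordinator is available; everything else goes to the cloud.
    """
    if coordinator_ready and verb in READ_VERBS and resource in pool_scope:
        return "pool-coordinator"
    return "cloud"


print(choose_backend("list", "endpoints", coordinator_ready=True))
print(choose_backend("list", "pods", coordinator_ready=True))
# Extending the Pool-Scope set changes where Pod reads are served.
print(choose_backend("list", "pods", True, DEFAULT_POOL_SCOPE | {"pods"}))
```

Keeping the Pool-Scope set as a parameter mirrors the point that the set is configurable rather than fixed.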

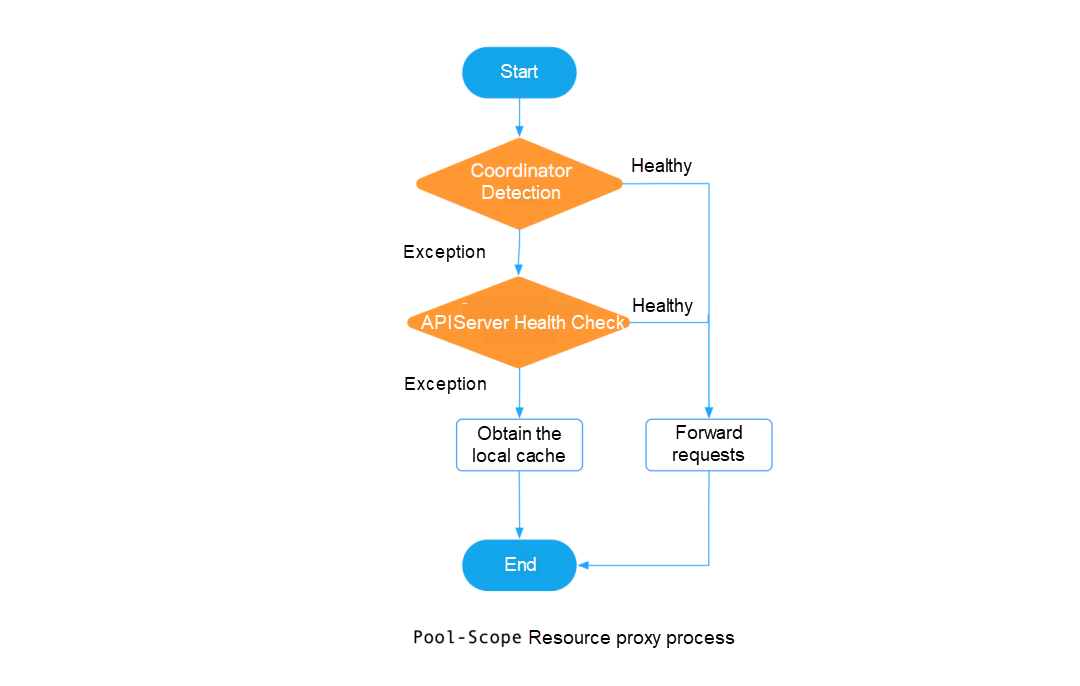

When a new node pool is created and computing resources are added to it, YurtHub starts first, as the underlying process on each edge machine node. It initially connects to the APIServer directly and runs the normal initialization logic. After a node has joined the cluster, the Yurt-App-Manager component of OpenYurt schedules the node pool component, Pool-Coordinator, to a machine as needed. Once Pool-Coordinator has started successfully, YurtHub detects that it is available and begins to interact with it. YurtHub provides the --enable-coordinator startup parameter. If this parameter is set to true, YurtHub probes for the Pool-Coordinator component, which allows flexible traffic switching and on-demand startup within the node pool.

If every YurtHub instance in a node pool wrote to the cache, reads and writes would conflict. The YurtHub instances therefore elect a Leader through a distributed lock in Pool-Coordinator, and only the Leader YurtHub writes cached resources. The other instances, the Followers, continuously watch the Leader Lease resource stored in Pool-Coordinator to determine whether the Leader is alive. Only a live Leader can guarantee that cached resources are correct and up to date. If the Leader YurtHub exits for any reason (such as losing its connection to the cloud or failing on its own), a new Leader is elected from the Followers so that the edge cache remains available.
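The lease-based election can be illustrated with a small in-memory model. This is a sketch under stated assumptions, not the OpenYurt implementation: the `Lease` class, the `try_acquire_or_renew` helper, the instance names, and the 15-second duration are hypothetical, and a real distributed lock also needs an atomic compare-and-swap on the stored Lease, which this single-process example omits.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """Hypothetical in-memory stand-in for the Leader Lease."""
    holder: Optional[str] = None
    renew_time: float = 0.0
    duration: float = 15.0  # seconds; illustrative, not an OpenYurt default

    def expired(self, now: float) -> bool:
        # A lease with no holder, or one not renewed in time, is up for grabs.
        return self.holder is None or now - self.renew_time > self.duration


def try_acquire_or_renew(lease: Lease, candidate: str, now: float) -> bool:
    """Return True if `candidate` is the Leader after this attempt."""
    if lease.holder == candidate or lease.expired(now):
        lease.holder = candidate
        lease.renew_time = now
        return True
    return False


lease = Lease()
print(try_acquire_or_renew(lease, "yurthub-a", now=0.0))   # a takes the free lease
print(try_acquire_or_renew(lease, "yurthub-b", now=5.0))   # a is still alive
print(try_acquire_or_renew(lease, "yurthub-b", now=30.0))  # a stopped renewing
```

A Follower that sees the Lease expire simply retries the same call, which is how a new Leader emerges after the old one exits.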

This process includes a Grace Period mechanism. When the Leader YurtHub becomes unhealthy because of a short-lived cloud-edge network failure, traffic in the edge node pool is switched to the cloud only after a specified period has elapsed. This prevents network jitter from causing a large number of nodes to switch traffic at once and saturate the bandwidth.
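The Grace Period check amounts to a simple time comparison. The sketch below is illustrative: the function name, its parameters, and the 60-second value are assumptions, since the article does not state the actual period or how YurtHub tracks Leader health.

```python
from typing import Optional


def should_switch_to_cloud(unhealthy_since: Optional[float], now: float,
                           grace_period: float) -> bool:
    """Decide whether node-pool traffic should fall back to the cloud.

    `unhealthy_since` is the time the Leader was first seen unhealthy,
    or None while it is healthy (hypothetical bookkeeping).
    """
    if unhealthy_since is None:
        return False
    # Brief outages shorter than the grace period do not trigger a switch.
    return now - unhealthy_since >= grace_period


GRACE = 60.0  # seconds; illustrative value only
print(should_switch_to_cloud(None, now=100.0, grace_period=GRACE))
print(should_switch_to_cloud(90.0, now=100.0, grace_period=GRACE))
print(should_switch_to_cloud(30.0, now=100.0, grace_period=GRACE))
```

If the Leader recovers within the period, `unhealthy_since` returns to `None` and no node ever switches, which is the jitter protection the mechanism is meant to give.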

When Pool-Coordinator exits abnormally, the edge YurtHub instances detect this and forward the proxied requests to the cloud so that callers still receive correct responses. Once Pool-Coordinator has been rebuilt, leader election runs again and the edge cache is repopulated, which brings cloud-edge bandwidth consumption back down.

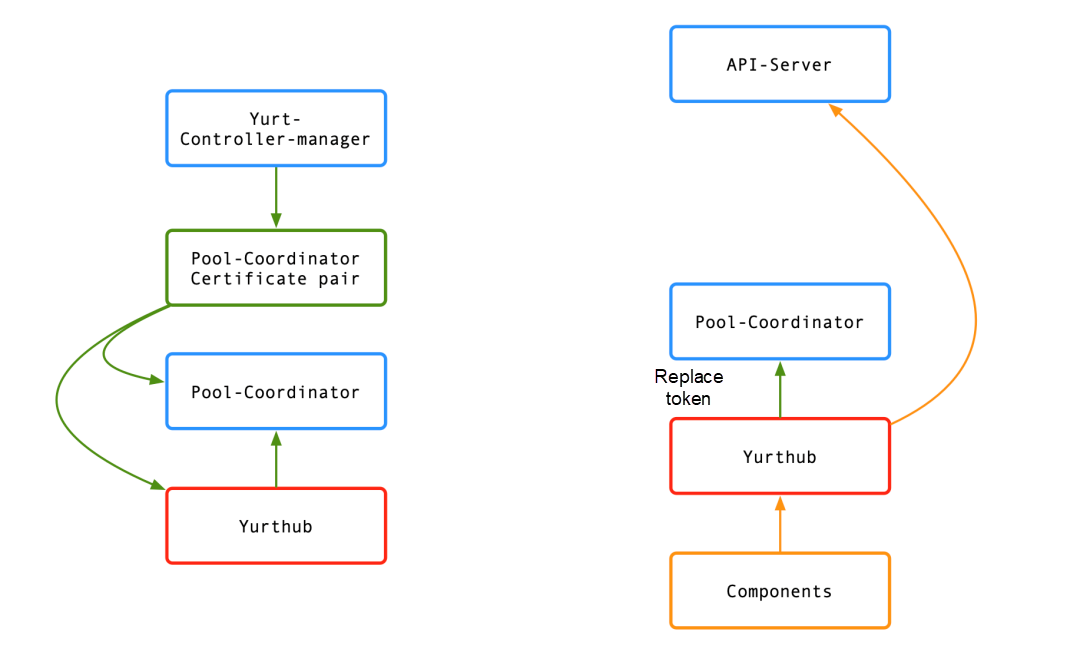

Pool-Coordinator provides a complete certificate management mechanism and ensures correct permissions during traffic forwarding.

During the system initialization phase, Yurt-Controller-Manager issues a certificate. YurtHub and Pool-Coordinator obtain this certificate so that YurtHub has the required permissions and can read and write the cache securely.

When a Pool-Scope resource request is forwarded to the cache, its Token is replaced so that the request carries the read and write permissions required by the cache.
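Token replacement can be pictured as rewriting the request's credentials before it reaches the cache. The sketch below is an assumption-laden illustration: the article does not describe how YurtHub performs the replacement, so the helper name, the token values, and the use of an `Authorization: Bearer` header (the usual way Kubernetes clients present a token) are all hypothetical.

```python
def rewrite_for_cache(headers: dict, coordinator_token: str) -> dict:
    """Return a copy of `headers` carrying the cache's token (hypothetical).

    The caller's own credentials are dropped; the original dict is not
    modified, so the request can still be sent to the cloud unchanged.
    """
    rewritten = {k: v for k, v in headers.items()
                 if k.lower() != "authorization"}
    rewritten["Authorization"] = f"Bearer {coordinator_token}"
    return rewritten


original = {"Authorization": "Bearer component-token", "Accept": "application/json"}
forwarded = rewrite_for_cache(original, "cache-token")
print(forwarded["Authorization"])
print(original["Authorization"])
```

Returning a copy rather than mutating the request keeps the fallback path to the cloud intact if Pool-Coordinator becomes unavailable.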

Following the release of v1.2, work on Pool-Coordinator will continue to focus on stability and on its surrounding ecosystem components. This includes observability, more robust handling of network jitter, autonomy when the network is disconnected, and O&M capabilities for the Pool-Coordinator container. Pool-Coordinator will also be rolled out at scale in industrial production and, like other components in the OpenYurt ecosystem, will be iterated on and optimized in production to improve system stability.

We welcome your participation in the development of Pool-Coordinator!

Zhixin Li (OpenYurt Member and Apache Dubbo PMC) focuses on system architecture and solutions for cloud-native edge computing.

Yifei Zhang (OpenYurt Member and SEL Lab of Zhejiang University)
