By Yunyi

In certain business situations, there is a need to convert a string into an integer while ensuring that it remains unique globally, such as in the scenario of calculating BitMap dictionary indices. A common approach involves sorting the dataset by the strings that require global mapping using ORDER BY, and then using the sorted numbers as the reshaped mapping of the strings. While this method can achieve the desired outcome, processing a large amount of data (in the billions or more) at once may lead to significant time consumption or even failure to run.

This article introduces a method that leverages distributed computing resources to calculate the global dictionary index, thus addressing the performance bottleneck caused by the previous method of distributing all data to a single reducer for standalone sorting in scenarios involving large data volumes.

The ID in the sort_data dataset has already been unique with the data level of 0.93 billion which directly opens the global window and sorts the dataset as follows:

INSERT OVERWRITE TABLE test_data_result

SELECT id

,ROW_NUMBER() OVER (ORDER BY id ASC ) AS rn

FROM test_data

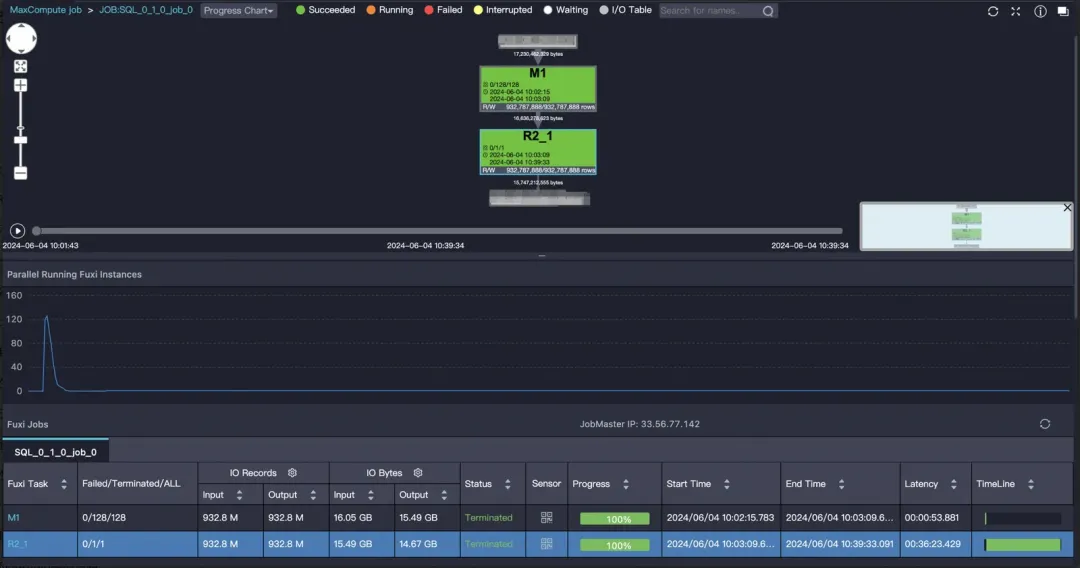

;DAG:

It takes 30 minutes with only one reducer. Setting set odps.sql.reducer.instances=256 here does not work. In this mode, only one reducer can be guaranteed to be globally unique.

In a scenario of computing under large data volume, we should try to prevent it from evolving into standalone computing. The better situation is to run for a long time because the data volume may not be able to run normally. How do we take full advantage of horizontal scaling of computing resources to solve this problem? The idea is to perform distributed local sorting by bucket, and then obtain a global index based on the bucket size. Similar to the base address addressing of operating system instruction addressing, we can do absolute address mapping for each ID and use a base address plus a relative address to obtain an absolute address.

The sample code is shown as follows:

-- Step 1

WITH hash_bucket AS

(

SELECT id

,ROW_NUMBER() OVER (PARTITION BY bucket_no ORDER BY id ASC ) AS bucket_rel_index

,COUNT(1) OVER (PARTITION BY bucket_no ) AS bucket_size

,bucket_no

FROM (

SELECT id

,ABS(HASH(id)) % 100000 AS bucket_no

FROM test_data

)

)

-- Step 2

,bucket_base AS

(

SELECT bucket_no

,SUM(bucket_size) OVER (ORDER BY bucket_no ASC ) - bucket_size AS bucket_base

FROM (

SELECT DISTINCT bucket_no

,bucket_size

FROM hash_bucket

)

)

-- Step 3

INSERT OVERWRITE TABLE sort_data_result_1

SELECT /*+ MAPJOIN(t2) */

t1.id

,t2.bucket_base + bucket_rel_index AS id_index

FROM hash_bucket t1

JOIN bucket_base t2

ON t1.bucket_no = t2.bucket_no

;

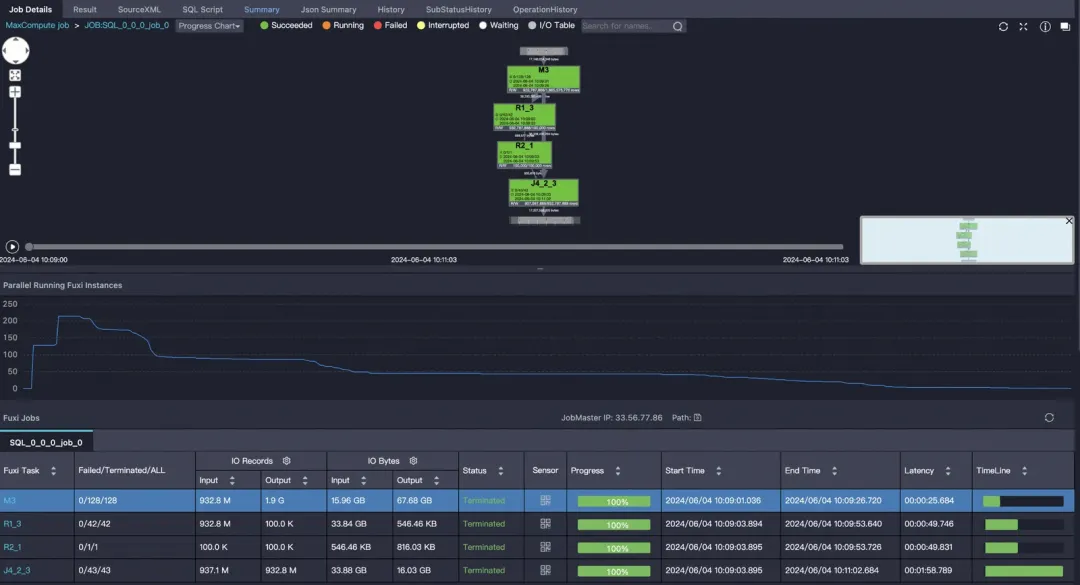

After optimization, standalone computing is avoided, which takes 2 minutes.

Daily optimization records may not be the best solution. Welcome discussions about other ways.

1,347 posts | 477 followers

FollowAlibaba Cloud MaxCompute - February 18, 2024

digoal - March 20, 2019

ApsaraDB - January 28, 2026

Alibaba Cloud MaxCompute - April 25, 2019

ApsaraDB - May 7, 2021

Hologres - July 1, 2021

1,347 posts | 477 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn More Financial Services Solutions

Financial Services Solutions

Alibaba Cloud equips financial services providers with professional solutions with high scalability and high availability features.

Learn MoreMore Posts by Alibaba Cloud Community