Reinforcement learning (RL) has become a core ingredient in advancing the reasoning capabilities of large language models (LLMs). Modern RL pipelines enable models to solve harder mathematical problems, write complex code, and reason over multimodal inputs. In practice, group‑based policy optimization—where multiple responses are sampled per prompt and their rewards are normalized within the group—has emerged as a dominant training paradigm for LLMs. However, despite its empirical success, stable and performant policy optimization remains challenging. A critical challenge lies in the variance of token‑level importance ratios, especially in large Mixture‑of‑Experts (MoE) models. These ratios quantify how far the current policy deviates from the behavior policy used to generate the training samples. When ratios fluctuate excessively (as they often do with expert routing or long autoregressive outputs), policy updates become noisy and unstable.
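As a minimal sketch of the two quantities named above, the snippet below computes group-normalized advantages and token-level importance ratios in plain Python. The function names, the use of the population standard deviation, and the `eps` stabilizer are assumptions made for illustration; they are not details given in this post.

```python
import math
from statistics import mean, pstdev


def group_normalized_advantages(rewards, eps=1e-6):
    """Normalize the rewards of one prompt's sampled responses within the group.

    `eps` (an assumption) avoids division by zero when all rewards are equal.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def token_importance_ratios(logp_new, logp_old):
    """Per-token ratio pi_theta / pi_theta_old, computed from log-probabilities."""
    return [math.exp(new - old) for new, old in zip(logp_new, logp_old)]


# Four responses to one prompt with binary rewards (made-up numbers).
advantages = group_normalized_advantages([1.0, 0.0, 1.0, 0.0])

# Per-token log-probabilities of one response under the current and old policy.
ratios = token_importance_ratios([-1.0, -0.4, -2.1], [-1.0, -0.5, -2.0])
```

A ratio of 1 means the token is exactly on-policy; values far from 1 are the off-policy tokens that clipping or gating is meant to control.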

Existing solutions such as GRPO (token‑level clipping) and GSPO (sequence‑level clipping) attempt to control this instability by enforcing hard clipping: whenever the importance ratio falls outside a fixed band, gradients are truncated. While this reduces catastrophic updates, it introduces two inherent limitations:

As a result, GRPO and GSPO often struggle to strike a balance between stability, sample efficiency, and consistent learning progress. To address these limitations, we propose Soft Adaptive Policy Optimization (SAPO), an RL method designed for stable and performant optimization of LLMs. SAPO replaces hard clipping with a smooth, temperature‑controlled gating function that adaptively down‑weights off‑policy updates while preserving useful gradients. Unlike existing methods, SAPO offers:

This unified design allows SAPO to achieve stable and effective learning.

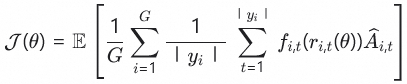

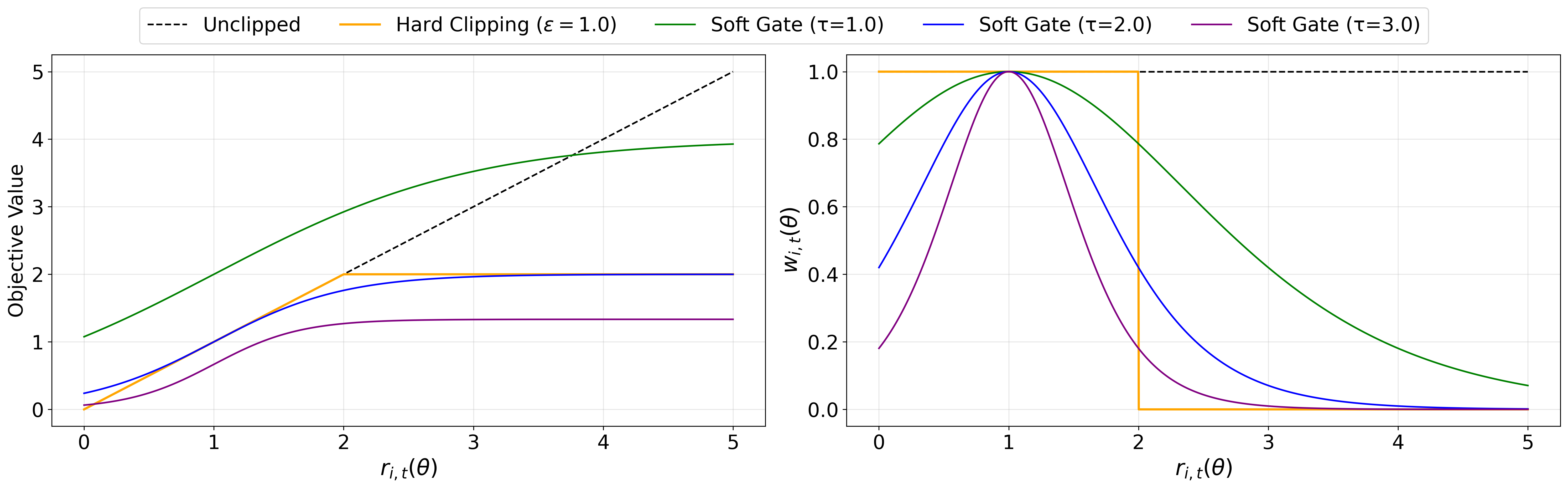

SAPO optimizes the following surrogate objective:

where

is the token‑level importance ratio

is the token‑level importance ratio is the group‑normalized advantage

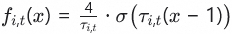

is the group‑normalized advantage is a smooth gating function defined as

is a smooth gating function defined as  , with different temperatures

, with different temperatures  and

and  for positive and negative advantages, respectively.

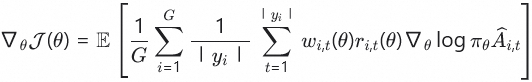

for positive and negative advantages, respectively.The gradient takes the form

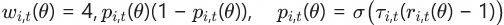

where the weight is

This weight peaks at  and decays smoothly on both sides.

and decays smoothly on both sides.
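The gate and its gradient weight can be sketched in a few lines of plain Python. This is an illustration only: it assumes the gate has the form f(r) = σ(τ(r − 1)) · 4/τ, so that its derivative with respect to the ratio is the weight 4p(1 − p) with p = σ(τ(r − 1)). The function names and the default temperatures `tau_pos=1.0` and `tau_neg=1.05` are placeholder assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def pick_tau(advantage, tau_pos, tau_neg):
    """Use tau_pos for positive advantages and tau_neg otherwise."""
    return tau_pos if advantage > 0 else tau_neg


def sapo_gate(ratio, advantage, tau_pos=1.0, tau_neg=1.05):
    """Smooth gate f(r) = sigmoid(tau * (r - 1)) * 4 / tau (assumed form)."""
    tau = pick_tau(advantage, tau_pos, tau_neg)
    return sigmoid(tau * (ratio - 1.0)) * 4.0 / tau


def sapo_weight(ratio, advantage, tau_pos=1.0, tau_neg=1.05):
    """Derivative of the gate w.r.t. the ratio: 4 p (1 - p)."""
    tau = pick_tau(advantage, tau_pos, tau_neg)
    p = sigmoid(tau * (ratio - 1.0))
    return 4.0 * p * (1.0 - p)


def sapo_surrogate(ratios_per_response, advantages, tau_pos=1.0, tau_neg=1.05):
    """Average over responses of the length-normalized sum of f(r) * A."""
    total = 0.0
    for ratios, adv in zip(ratios_per_response, advantages):
        gated = [sapo_gate(r, adv, tau_pos, tau_neg) * adv for r in ratios]
        total += sum(gated) / len(ratios)
    return total / len(ratios_per_response)


# Two responses with per-token ratios and one advantage each (made-up numbers).
objective = sapo_surrogate([[1.0, 1.1, 0.9], [1.3, 0.7]], [0.8, -0.8])
```

The factor 4/τ in the gate is what makes the weight equal exactly 1 at a ratio of 1, so on-policy tokens receive the same gradient they would get from the unclipped objective.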

Let $s_i(\theta)$ be the length‑normalized sequence‑level importance ratio:

$$
s_i(\theta) = \left( \frac{\pi_\theta(y_i \mid q)}{\pi_{\theta_{\text{old}}}(y_i \mid q)} \right)^{1/|y_i|} = \exp\left( \frac{1}{|y_i|} \sum_{t=1}^{|y_i|} \log r_{i,t}(\theta) \right)
$$

If the policy updates are small and the token log‑ratios within a sequence have low variance (two assumptions that empirically hold for most sequences), then the average SAPO token gate becomes approximately a sequence‑level gate of the form $g_{\tau_i}(\log s_i(\theta)) = \operatorname{sech}^2\big(\tfrac{\tau_i}{2} \log s_i(\theta)\big)$. This means SAPO behaves like GSPO at the sequence level but with a continuous trust region instead of hard clipping.
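A quick numerical check can make this approximation concrete. The sketch below assumes the token weight is sech²(τ/2 · (r − 1)), which is the same quantity as 4p(1 − p) with p = σ(τ(r − 1)), and that the sequence-level gate is sech²(τ/2 · log s). All function names and the sample ratios are made up for illustration.

```python
import math


def sech2(x):
    return 1.0 / math.cosh(x) ** 2


def token_weight(ratio, tau):
    """Token gate weight: sech^2(tau/2 * (r - 1)), equal to 4 p (1 - p)."""
    return sech2(0.5 * tau * (ratio - 1.0))


def sequence_ratio(ratios):
    """Length-normalized sequence ratio: geometric mean of the token ratios."""
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


def mean_token_gate(ratios, tau):
    return sum(token_weight(r, tau) for r in ratios) / len(ratios)


def sequence_gate(ratios, tau):
    """Assumed sequence-level form: sech^2(tau/2 * log s)."""
    return sech2(0.5 * tau * math.log(sequence_ratio(ratios)))


# Small, low-variance deviations from 1: the two gates should nearly agree.
near_on_policy = [1.02, 1.03, 1.01, 1.04]
gap = abs(mean_token_gate(near_on_policy, 1.0) - sequence_gate(near_on_policy, 1.0))
print(f"gap between mean token gate and sequence gate: {gap:.2e}")
```

The agreement relies on r − 1 ≈ log r for ratios close to 1 and on the token log-ratios being close to their mean; it degrades when either assumption fails.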

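Key advantage over GSPO: If a few tokens in a sequence are very off‑policy, GSPO's sequence‑level clipping suppresses the gradient for the entire sequence, whereas SAPO down‑weights only the offending tokens and keeps the learning signal from the near‑on‑policy ones.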

This improves sample efficiency.
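To illustrate the contrast between a sequence-level hard clip and a token-level soft gate, the sketch below feeds both a sequence with a single strongly off-policy token. The clipping range `eps=0.2`, the temperature `tau=1.0`, and the function names are illustrative assumptions, not values from this post; the sequence-level rule is modeled as a simple keep-or-drop decision on the length-normalized ratio.

```python
import math


def sequence_ratio(ratios):
    """Length-normalized sequence ratio: geometric mean of the token ratios."""
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


def sequence_clip_weight(ratios, advantage, eps=0.2):
    """Hard sequence-level gate: 0.0 drops every token, 1.0 keeps every token."""
    s = sequence_ratio(ratios)
    clipped = (advantage > 0 and s > 1.0 + eps) or (advantage < 0 and s < 1.0 - eps)
    return 0.0 if clipped else 1.0


def soft_token_weights(ratios, tau=1.0):
    """Soft token-level weights 4 p (1 - p), p = sigmoid(tau * (r - 1))."""
    weights = []
    for r in ratios:
        p = 1.0 / (1.0 + math.exp(-tau * (r - 1.0)))
        weights.append(4.0 * p * (1.0 - p))
    return weights


# Three on-policy tokens and one strongly off-policy token (made-up numbers).
ratios = [1.0, 1.0, 1.0, 3.0]
hard = sequence_clip_weight(ratios, advantage=1.0)
soft = soft_token_weights(ratios)
print("sequence-level hard gate:", hard)
print("token-level soft weights:", [round(w, 3) for w in soft])
```

In this toy case the hard gate discards all four tokens, while the soft gate keeps full weight on the three on-policy tokens and only shrinks the outlier.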

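GRPO uses hard clipping. Each token contributes the clipped term

$$
\min\Big( r_{i,t}(\theta)\, \hat{A}_{i,t},\ \operatorname{clip}\big(r_{i,t}(\theta),\, 1 - \varepsilon,\, 1 + \varepsilon\big)\, \hat{A}_{i,t} \Big)
$$

so its gradient drops to exactly zero once the ratio leaves the clipping band in the direction favored by its advantage. This creates brittle, discontinuous optimization behavior.

SAPO replaces the hard cutoff with a smooth decay:

$$
w_{i,t}(\theta) = 4\, p_{i,t}(\theta)\big(1 - p_{i,t}(\theta)\big) = \operatorname{sech}^2\Big( \tfrac{\tau_{i,t}}{2}\big(r_{i,t}(\theta) - 1\big) \Big), \qquad p_{i,t}(\theta) = \sigma\big(\tau_{i,t}(r_{i,t}(\theta) - 1)\big)
$$

This allows SAPO to provide a more balanced way to retain useful learning signals while preventing unstable policy shifts.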
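The difference is easiest to see by comparing the per-token gradient weight of each scheme around the edge of the clipping band. The sketch below derives the hard weight from the standard clipped surrogate min(r·A, clip(r, 1 − ε, 1 + ε)·A) and uses the smooth weight 4p(1 − p) with p = σ(τ(r − 1)); `eps=0.2`, `tau=1.0`, and the function names are illustrative assumptions.

```python
import math


def hard_clip_weight(ratio, advantage, eps=0.2):
    """Gradient weight implied by min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    For A > 0 the gradient vanishes once r > 1 + eps;
    for A < 0 it vanishes once r < 1 - eps.
    """
    if advantage > 0:
        return 1.0 if ratio <= 1.0 + eps else 0.0
    return 1.0 if ratio >= 1.0 - eps else 0.0


def smooth_weight(ratio, tau=1.0):
    """Soft gate weight 4 p (1 - p) with p = sigmoid(tau * (r - 1))."""
    p = 1.0 / (1.0 + math.exp(-tau * (ratio - 1.0)))
    return 4.0 * p * (1.0 - p)


# Sweep the ratio across the upper edge of the clipping band, positive advantage.
for ratio in (1.00, 1.19, 1.21, 1.50, 2.00):
    hard = hard_clip_weight(ratio, advantage=1.0)
    soft = smooth_weight(ratio)
    print(f"r={ratio:.2f}  hard={hard:.0f}  soft={soft:.3f}")
```

The hard weight jumps from 1 to 0 between two nearly identical ratios, whereas the smooth weight changes only slightly over the same interval.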

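Updates from negative advantages are more likely to destabilize training: pushing down the sampled token's logit raises the logits of many other, often inappropriate, tokens, especially in large vocabularies.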

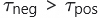

SAPO uses a higher temperature for negative tokens ($\tau_{\text{neg}} > \tau_{\text{pos}}$), which causes negative contributions to decay faster when off‑policy. Empirically, this simple asymmetry significantly improves RL training stability and performance.
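The effect of the asymmetry can be seen by evaluating the gate weight at the same ratio under two temperatures. The sketch below assumes the weight 4p(1 − p) with p = σ(τ(r − 1)); the values `TAU_POS = 1.0` and `TAU_NEG = 2.0` are exaggerated placeholders chosen only to make the gap visible, not settings from this post.

```python
import math


def sapo_weight(ratio, tau):
    """Soft gate weight 4 p (1 - p) with p = sigmoid(tau * (r - 1))."""
    p = 1.0 / (1.0 + math.exp(-tau * (ratio - 1.0)))
    return 4.0 * p * (1.0 - p)


TAU_POS = 1.0  # illustrative temperature for positive advantages
TAU_NEG = 2.0  # illustrative, larger temperature for negative advantages

for ratio in (0.5, 0.8, 1.0, 1.2, 1.5):
    w_pos = sapo_weight(ratio, TAU_POS)
    w_neg = sapo_weight(ratio, TAU_NEG)
    print(f"r={ratio:.1f}  w_pos={w_pos:.3f}  w_neg={w_neg:.3f}")
```

Both weights equal 1 on-policy, but the higher-temperature curve falls off faster on either side, so off-policy tokens with negative advantages contribute less to the update.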

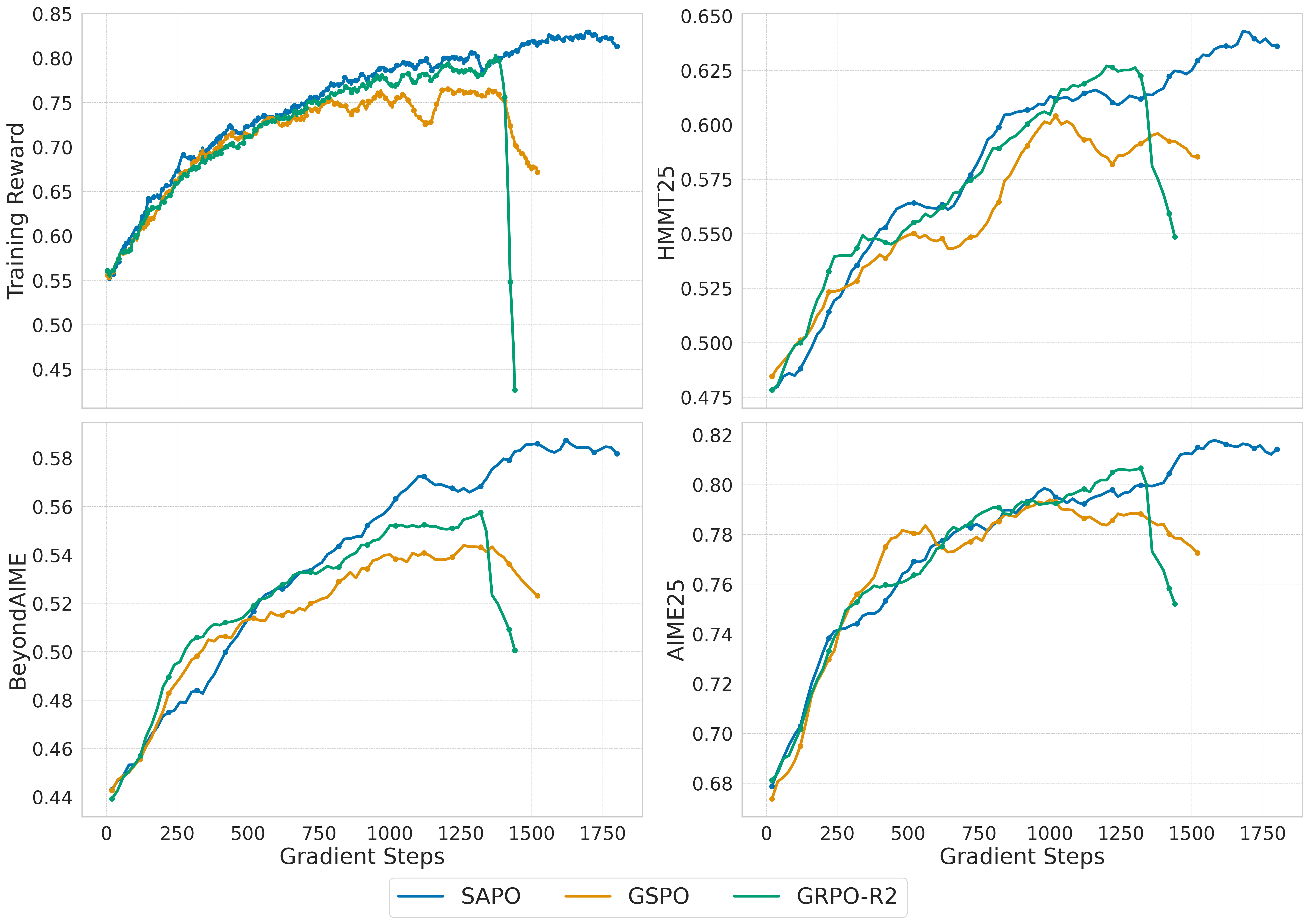

We compare SAPO against GSPO and GRPO‑R2 (GRPO with routing replay) using a cold‑start model fine-tuned from Qwen3-30B-A3B-Base.

Findings:

Temperature ablations confirm that $\tau_{\text{neg}} > \tau_{\text{pos}}$ provides the most stable training.

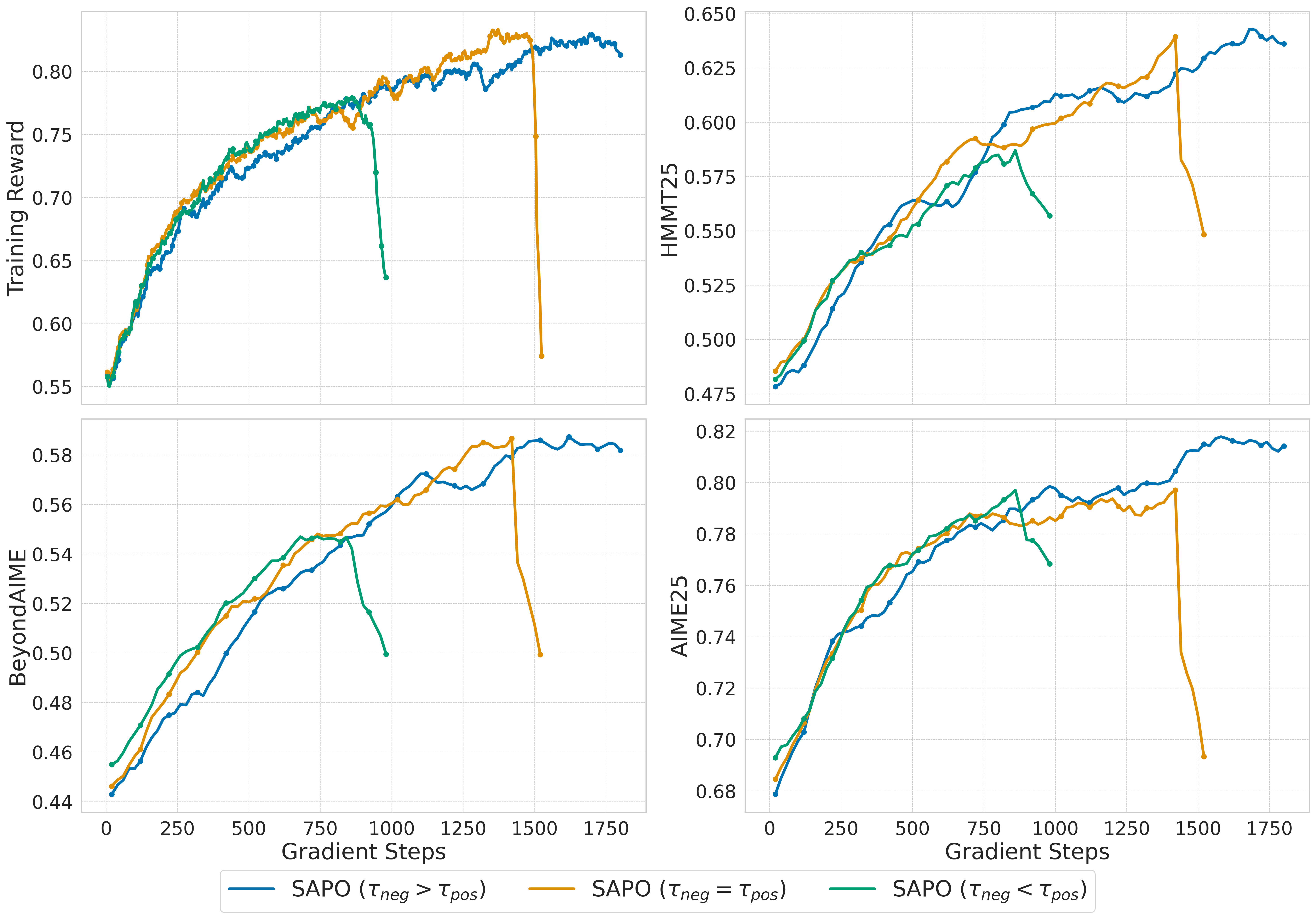

SAPO consistently improves performance across models of varying sizes and across both MoE and dense architectures. For comparison, we train a preliminary cold-start checkpoint of Qwen3‑VL‑30B‑A3B on a mixture of math, coding, logic, and multimodal tasks. Evaluation benchmarks include:

Results: SAPO consistently outperforms both GSPO and GRPO‑R2 under the same compute budget.

SAPO offers a practical way to stabilize and enhance RL training for LLMs:

As RL continues to drive frontier LLM capabilities, we expect that SAPO will become a foundational component of RL training pipelines.

For full technical details, theoretical analysis, and extensive experiments, please refer to our paper:

Soft Adaptive Policy Optimization

If you find our work helpful, feel free to cite it.

@article{sapo,
  title={Soft Adaptive Policy Optimization},
  author={Gao, Chang and Zheng, Chujie and Chen, Xiong-Hui and Dang, Kai and Liu, Shixuan and Yu, Bowen and Yang, An and Bai, Shuai and Zhou, Jingren and Lin, Junyang},
  journal={arXiv preprint arXiv:2511.20347},
  year={2025}
}
