By Yemo

Through 15 years of exponential traffic growth from both Double 11 and Alibaba Cloud, we built LoongCollector, an observability agent that delivers 10x higher throughput and 80% lower resource usage than open-source alternatives, proving that extreme performance and enterprise reliability can coexist under the most demanding production loads.

Back in the early 2010s, Alibaba’s infrastructure was facing a tidal wave: every Singles’ Day (11.11), traffic would surge to record-breaking levels, pushing our systems to their absolute limits. Our observability stack, tasked with collecting logs, metrics, and traces from millions of servers, was devouring CPU and memory just to keep up. At that time, there were no lightweight, high-performance agents on the market: Fluent Bit hadn’t been invented, Vector was still a distant idea, and Logstash was a memory-hungry beast.

The math was brutal: Just a 1% efficiency gain in data collection would save us millions across our massive infrastructure. When you’re processing petabytes of observability data every day, performance isn’t optional—it’s mission-critical.

So, in 2013, we set out to build our own: a lightweight, high-performance, and rock-solid data collector. Over the next decade, iLogtail (now LoongCollector) was battle-tested by the world’s largest e-commerce events, the migration of Alibaba Group to the cloud, and the rise of containerized infrastructure. By 2022, we had open-sourced a collector that could run anywhere—on bare metal, virtual machines, or Kubernetes clusters—capable of handling everything from file logs and container output to metrics, all while using minimal resources.

Today, LoongCollector powers tens of millions of deployments, reliably collecting hundreds of petabytes of observability data every day for Alibaba, Ant Group, and thousands of enterprise customers. The result? Massive cost savings, a unified data collection layer, and a new standard for performance in the observability world.

When processing petabytes of observability data costs you millions, every performance improvement directly impacts your bottom line. A 1% efficiency improvement translates to millions in infrastructure savings across large-scale deployments.

That's when we knew we had to share these numbers with the world.

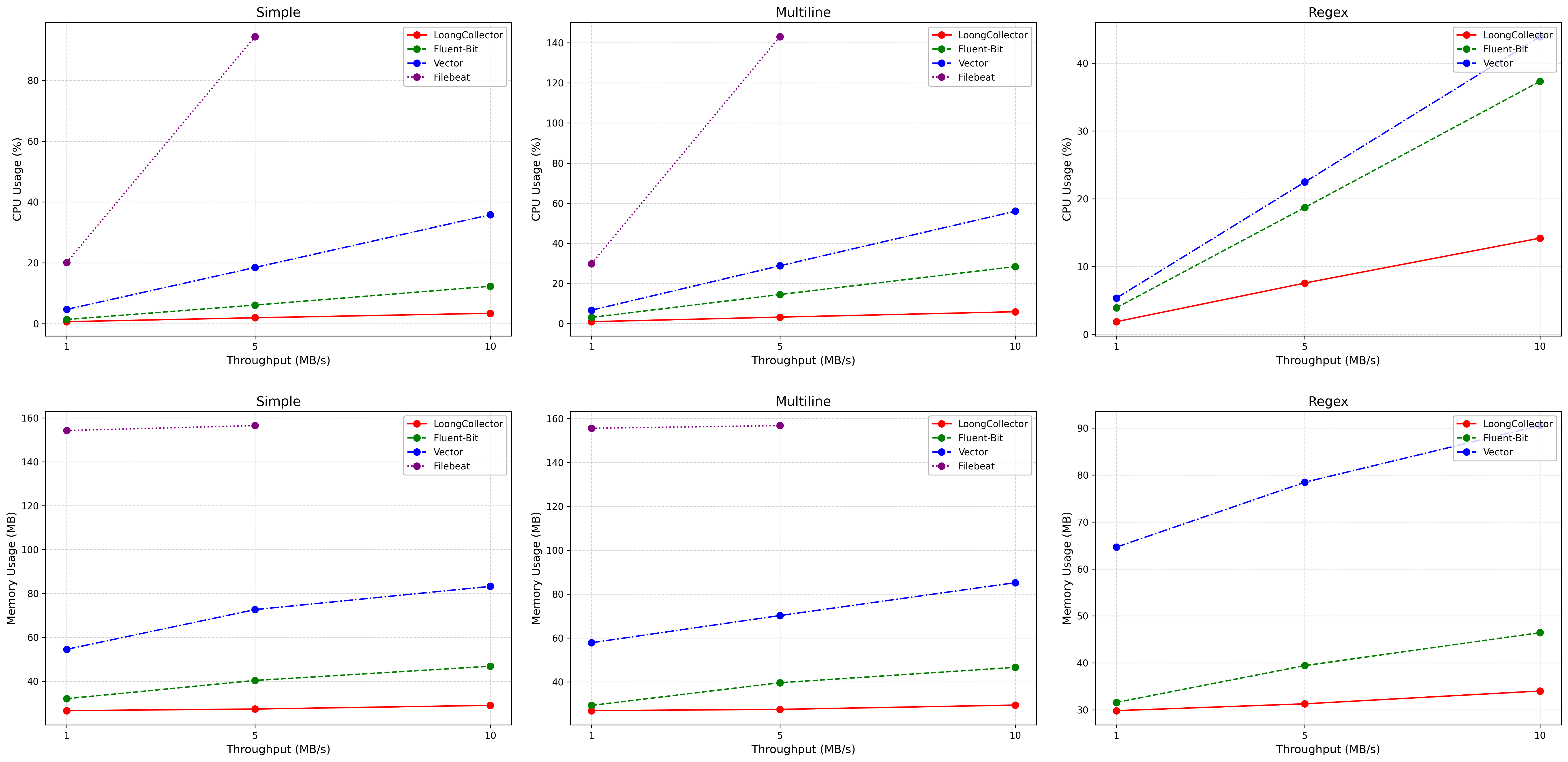

We ran LoongCollector against every major open-source alternative in controlled, reproducible benchmarks. The results weren't just impressive—they were game-changing.

| Log Type | LoongCollector | FluentBit | Vector | Filebeat |
|---|---|---|---|---|
| Single Line | 546 MB/s | 36 MB/s | 38 MB/s | 9 MB/s |
| Multi-line | 238 MB/s | 24 MB/s | 22 MB/s | 6 MB/s |
| Regex Parsing | 68 MB/s | 19 MB/s | 12 MB/s | Not Supported |

📈 Breaking Point Analysis: While competitors hit CPU saturation at ~40 MB/s, LoongCollector maintains linear scaling up to 546 MB/s on a single processing thread—the theoretical maximum of our test environment.

The real story isn't just raw throughput—it's doing more with dramatically less. At identical 10 MB/s processing loads:

| Scenario (10 MB/s load) | LoongCollector | FluentBit | Vector | Filebeat |
|---|---|---|---|---|
| Simple Line (512B) | 3.40% CPU, 29.01 MB RAM | 12.29% CPU (+261%), 46.84 MB RAM (+61%) | 35.80% CPU (+952%), 83.24 MB RAM (+186%) | Performance Insufficient |
| Multi-line (512B) | 5.82% CPU, 29.39 MB RAM | 28.35% CPU (+387%), 46.39 MB RAM (+57%) | 55.99% CPU (+862%), 85.17 MB RAM (+189%) | Performance Insufficient |
| Regex (512B) | 14.20% CPU, 34.02 MB RAM | 37.32% CPU (+162%), 46.44 MB RAM (+36%) | 43.90% CPU (+209%), 90.51 MB RAM (+166%) | Not Supported |

1. 10x Higher Maximum Throughput - Process 10x more data on identical hardware

2. 80% Lower Resource Usage - Reduce infrastructure costs immediately

3. Linear Scaling - Performance grows predictably with resources

4. Zero Data Loss Guarantee - Maintain reliability while achieving breakthrough performance

5. Native Multi-Protocol Support - Seamlessly handle logs, metrics, and traces on a single platform without any impact on performance

Traditional log agents create multiple string copies during parsing. Each extracted field requires a separate memory allocation, and the original log content is duplicated multiple times across different processing stages. This approach leads to excessive memory allocations and CPU overhead, especially when processing high-volume logs with complex parsing requirements.

LoongCollector introduces a shared memory pool (SourceBuffer) for each PipelineEventGroup, where all string data is stored once. Instead of copying extracted fields, LoongCollector uses string_view references that point to specific segments of the original data.

Pipeline Event Group

├── Shared Memory Pool (SourceBuffer)

│ └── "2025-01-01 10:00:00 [INFO] Processing user request from 192.168.1.100"

├── String Views (zero-copy references: offset, length)

│ ├── timestamp: string_view(0, 19) // "2025-01-01 10:00:00"

│ ├── level: string_view(21, 4) // "INFO"

│ ├── message: string_view(27, 23) // "Processing user request"

│ └── ip: string_view(56, 13) // "192.168.1.100"

└── Events referencing original data

| Component | Traditional | LoongCollector | Improvement |
|---|---|---|---|
| String Operations | 4 copies | 0 copies | 100% reduction |
| Memory Allocations | Per field | Per group | 80% reduction |
| Regex Extraction | 4 field copies | 4 string_view refs | 100% elimination |
| CPU Overhead | High | Minimal | 15% improvement |
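The zero-copy idea fits in a few lines of C++17. This is a minimal sketch, assuming a simplified `<date> <time> [LEVEL] <message> from <ip>` line format and hypothetical names (`SourceBuffer::Store`, `LogEvent`, `ParseLine`); it is not LoongCollector's actual `PipelineEventGroup`/`SourceBuffer` API. Each raw line is copied into the buffer once, and every parsed field is a `std::string_view` into that single copy.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <optional>
#include <string>
#include <string_view>

// Hypothetical per-group buffer: each raw line is copied in exactly once.
// std::deque::emplace_back never relocates existing elements, so views into
// previously stored lines stay valid as more lines arrive.
class SourceBuffer {
public:
    std::string_view Store(std::string_view raw) {
        return lines_.emplace_back(raw);
    }

private:
    std::deque<std::string> lines_;
};

// Parsed fields are views into the stored line, not copies.
struct LogEvent {
    std::string_view timestamp;
    std::string_view level;
    std::string_view message;
    std::string_view ip;
};

// Parses "<date> <time> [LEVEL] <message> from <ip>" without allocating.
std::optional<LogEvent> ParseLine(std::string_view line) {
    constexpr std::size_t kTimestampLen = 19;  // "YYYY-MM-DD hh:mm:ss"
    constexpr std::string_view kFrom = " from ";
    const std::size_t lb = line.find('[', kTimestampLen);
    if (lb == std::string_view::npos) return std::nullopt;
    const std::size_t rb = line.find(']', lb);
    const std::size_t from = line.rfind(kFrom);
    if (rb == std::string_view::npos || from == std::string_view::npos || from < rb + 2) {
        return std::nullopt;
    }
    LogEvent ev;
    ev.timestamp = line.substr(0, kTimestampLen);
    ev.level = line.substr(lb + 1, rb - lb - 1);
    ev.message = line.substr(rb + 2, from - (rb + 2));
    ev.ip = line.substr(from + kFrom.size());
    return ev;
}
```

Because the views borrow from the buffer, the buffer (and thus the group) must outlive every event that references it; that lifetime coupling is the price of skipping the per-field copies.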

Traditional log agents create and destroy PipelineEvent objects for every log entry, leading to frequent memory allocations and deallocations. This approach causes significant CPU overhead (10% of total processing time) and creates memory fragmentation. Simple global object pools introduce lock contention in multi-threaded environments, while thread-local pools fail to handle cross-thread scenarios effectively.

LoongCollector implements intelligent object pooling with thread-aware allocation strategies that eliminate lock contention while handling complex multi-threaded scenarios. The system uses different pooling strategies based on whether events are allocated and deallocated in the same thread or across different threads.

┌──────────────────┐

│ Processor Thread │──── [Lock-free Pool] ──── Direct Reuse

└──────────────────┘

When events are created and destroyed within the same Processor Runner thread, each thread maintains its own lock-free event pool. Since only one thread accesses each pool, no synchronization overhead is required.
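A same-thread pool needs no locking because each thread owns its free list. The sketch below assumes a hypothetical `Event` type with a `Reset()` method, a hypothetical `ThreadLocalEventPool` class, and an arbitrary cap of 1024 pooled objects per thread; it illustrates the technique rather than reproducing LoongCollector's code.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical event type; Reset() clears state so the object can be reused.
struct Event {
    std::string content;
    long timestamp = 0;
    void Reset() {
        content.clear();  // keeps the string's capacity for the next event
        timestamp = 0;
    }
};

// Each thread owns a private free list, so Acquire/Release never lock.
class ThreadLocalEventPool {
public:
    static std::unique_ptr<Event> Acquire() {
        auto& free_list = FreeList();
        if (free_list.empty()) {
            return std::make_unique<Event>();  // pool empty: fall back to a fresh allocation
        }
        std::unique_ptr<Event> ev = std::move(free_list.back());
        free_list.pop_back();
        return ev;
    }

    // Same-thread case: called by the thread that acquired the object.
    static void Release(std::unique_ptr<Event> ev) {
        auto& free_list = FreeList();
        if (free_list.size() >= kMaxPooled) {
            return;  // pool full: unique_ptr frees the object here
        }
        ev->Reset();
        free_list.push_back(std::move(ev));
    }

private:
    static constexpr std::size_t kMaxPooled = 1024;  // hypothetical cap

    static std::vector<std::unique_ptr<Event>>& FreeList() {
        thread_local std::vector<std::unique_ptr<Event>> free_list;
        return free_list;
    }
};
```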

┌────────────────┐ ┌─────────────────┐

│ Input Thread │────▶│ Processor Thread│

└────────────────┘ └─────────────────┘

│ │

└── [Double Buffer Pool] ──┘

For events created in Input Runner threads but consumed in Processor Runner threads, we implement a double-buffer strategy.
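One common way to build a cross-thread pool is to give the producing thread a private free list and let the consuming thread hand objects back through a second, mutex-guarded list; the producer takes the lock only when its private list runs dry, and then swaps the two. The sketch below assumes that design, a hypothetical `Event` type, and a hypothetical `DoubleBufferEventPool` class; the article does not detail LoongCollector's exact double-buffer implementation, so treat this as an illustration of the technique.

```cpp
#include <cassert>
#include <memory>
#include <mutex>
#include <string>
#include <utility>
#include <vector>

// Hypothetical event type; Reset() clears it for reuse.
struct Event {
    std::string content;
    void Reset() { content.clear(); }
};

// Cross-thread pool: the input (producer) thread allocates from `local_`
// without locking; the processor (consumer) thread returns objects into
// `returned_` under a mutex. When `local_` runs dry, the producer swaps the
// two buffers in O(1), so it locks once per refill, not once per event.
class DoubleBufferEventPool {
public:
    // Producer thread only.
    std::unique_ptr<Event> Acquire() {
        if (local_.empty()) {
            std::lock_guard<std::mutex> lock(mu_);
            local_.swap(returned_);
        }
        if (local_.empty()) {
            return std::make_unique<Event>();  // nothing returned yet: allocate
        }
        std::unique_ptr<Event> ev = std::move(local_.back());
        local_.pop_back();
        return ev;
    }

    // Consumer thread, once it has finished with an event.
    void Release(std::unique_ptr<Event> ev) {
        ev->Reset();  // reset outside the lock to keep the critical section short
        std::lock_guard<std::mutex> lock(mu_);
        returned_.push_back(std::move(ev));
    }

private:
    std::vector<std::unique_ptr<Event>> local_;     // touched only by the producer
    std::mutex mu_;
    std::vector<std::unique_ptr<Event>> returned_;  // guarded by mu_
};
```

In this simplified version the consumer still takes a brief lock per release; batching releases on the consumer side would cut that further.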

| Aspect | Traditional | LoongCollector | Improvement |
|---|---|---|---|
| Object creation | Per event | Pool reuse | 90% reduction |
| Memory fragmentation | High | Minimal | 80% reduction |

Standard serialization involves creating intermediate Protobuf objects before converting to network bytes. This two-step process requires additional memory allocations and CPU cycles for object construction and serialization, leading to unnecessary overhead in high-throughput scenarios.

LoongCollector bypasses intermediate object creation by directly serializing PipelineEventGroup data according to Protobuf wire format. This eliminates the temporary object allocation and reduces memory pressure during serialization.

Traditional: PipelineEventGroup → ProtoBuf Object → Serialized Bytes → Network

LoongCollector: PipelineEventGroup → Serialized Bytes → Network

| Metric | Traditional | LoongCollector | Improvement |
|---|---|---|---|
| Serialization CPU | 12.5% | 5.8% | 54% reduction |
| Memory allocations | 3 copies | 1 copy | 67% reduction |
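Protobuf's wire format is simple enough to emit by hand: each field is a varint key `(field_number << 3) | wire_type` followed by its payload, and length-delimited fields (wire type 2) carry a varint byte length. The sketch below writes a hypothetical two-level schema (shown in the comment) straight from `std::string_view` fields into one output buffer, computing nested message lengths up front so no intermediate message object is built. The schema and all function names are assumptions for illustration, not LoongCollector's serializer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <string_view>
#include <utility>
#include <vector>

// Append `value` as a base-128 varint: 7 bits per byte, high bit set on every
// byte except the last.
void AppendVarint(std::string& out, std::uint64_t value) {
    while (value >= 0x80) {
        out.push_back(static_cast<char>((value & 0x7F) | 0x80));
        value >>= 7;
    }
    out.push_back(static_cast<char>(value));
}

std::size_t VarintSize(std::uint64_t value) {
    std::size_t n = 1;
    while (value >= 0x80) {
        value >>= 7;
        ++n;
    }
    return n;
}

constexpr std::uint64_t kWireVarint = 0;
constexpr std::uint64_t kWireLengthDelimited = 2;

std::uint64_t Key(std::uint32_t field, std::uint64_t wire_type) {
    return (static_cast<std::uint64_t>(field) << 3) | wire_type;
}

// Write a length-delimited (string/bytes) field directly from a view.
void AppendBytesField(std::string& out, std::uint32_t field, std::string_view bytes) {
    AppendVarint(out, Key(field, kWireLengthDelimited));
    AppendVarint(out, bytes.size());
    out.append(bytes.data(), bytes.size());
}

std::size_t BytesFieldSize(std::uint32_t field, std::size_t len) {
    return VarintSize(Key(field, kWireLengthDelimited)) + VarintSize(len) + len;
}

using Field = std::pair<std::string_view, std::string_view>;

// Hypothetical schema, for illustration only:
//   message Content { string key = 1; string value = 2; }
//   message Log     { uint32 time = 1; repeated Content contents = 2; }
// Bytes go straight from the views into `out`; nested lengths are computed
// up front, so no temporary Content or Log object is ever constructed.
void SerializeLog(std::string& out, std::uint32_t time, const std::vector<Field>& fields) {
    AppendVarint(out, Key(1, kWireVarint));
    AppendVarint(out, time);
    for (const auto& [key, value] : fields) {
        const std::size_t inner = BytesFieldSize(1, key.size()) + BytesFieldSize(2, value.size());
        AppendVarint(out, Key(2, kWireLengthDelimited));
        AppendVarint(out, inner);
        AppendBytesField(out, 1, key);
        AppendBytesField(out, 2, value);
    }
}
```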

While LoongCollector demonstrates impressive performance advantages, its reliability architecture is equally noteworthy. The following sections detail how LoongCollector achieves enterprise-grade stability and fault tolerance while maintaining its performance edge.

LoongCollector's multi-tenant architecture ensures isolation between different pipelines while maintaining optimal resource utilization. The system implements a high-low watermark feedback queue mechanism that prevents any single pipeline from affecting others.
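A high-low watermark works like a thermostat with hysteresis: once a queue reaches the high mark it refuses new data, and it reopens only after draining to the low mark, so upstream producers are not toggled on and off by every push and pop near a single limit. A minimal single-threaded sketch, assuming a hypothetical `WatermarkQueue` class and a simple recovery callback standing in for the downstream feedback path; it omits the urgent-data exception and the locking a real multi-threaded queue needs.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <utility>

template <typename T>
class WatermarkQueue {
public:
    WatermarkQueue(std::size_t low, std::size_t high) : low_(low), high_(high) {
        assert(low < high);
    }

    // Hypothetical feedback hook: invoked when the queue drains back to `low`.
    void SetFeedback(std::function<void()> on_recover) { on_recover_ = std::move(on_recover); }

    // Upstream checks this before writing.
    bool IsValidToPush() const { return valid_to_push_; }

    bool Push(T item) {
        if (!valid_to_push_) return false;
        items_.push_back(std::move(item));
        if (items_.size() >= high_) valid_to_push_ = false;  // high watermark reached
        return true;
    }

    bool Pop(T& out) {
        if (items_.empty()) return false;
        out = std::move(items_.front());
        items_.pop_front();
        if (!valid_to_push_ && items_.size() <= low_) {
            valid_to_push_ = true;           // recovered: accept data again
            if (on_recover_) on_recover_();  // tell upstream it may resume
        }
        return true;
    }

    std::size_t Size() const { return items_.size(); }

private:
    std::size_t low_;
    std::size_t high_;
    bool valid_to_push_ = true;
    std::deque<T> items_;
    std::function<void()> on_recover_;
};
```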

┌─ LoongCollector Multi-Tenant Pipeline Architecture ───────────────────┐

│ │

│ ┌─ Pipeline A ─┐ ┌─ Pipeline B ─┐ ┌─ Pipeline C ─┐ │

│ │ │ │ │ │ │ │

│ │ Input Plugin │ │ Input Plugin │ │ Input Plugin │ │

│ │ ↓ │ │ ↓ │ │ ↓ │ │

│ │ Process Queue│ │ Process Queue│ │ Process Queue│ │

│ │ ↓ │ │ ↓ │ │ ↓ │ │

│ │ Sender Queue │ │ Sender Queue │ │ Sender Queue │ │

│ │ ↓ │ │ ↓ │ │ ↓ │ │

│ │ Flusher │ │ Flusher │ │ Flusher │ │

│ └──────────────┘ └──────────────┘ └──────────────┘ │

│ │ │ │ │

│ └───────────────────┼─────────────────┘ │

│ │ │

│ ┌─ Shared Runners ────────────────────────────────────────────────┐ │

│ │ │ │

│ │ ┌─ Input Runners ─┐ ┌─ Processor Runners ┐ ┌─ Flusher Runners ─┐ │ │

│ │ │ • Pipeline │ │ • Priority-based │ │ • Watermark-based │ │ │

│ │ │ isolation │ │ scheduling │ │ throttling │ │ │

│ │ │ • Independent │ │ • Fair resource │ │ • Back-pressure │ │ │

│ │ │ event pools │ │ allocation │ │ control │ │ │

│ │ └─────────────────┘ └────────────────────┘ └───────────────────┘ │ │

│ └──────────────────────────────────────────────────────────────────┘ │

└───────────────────────────────────────────────────────────────────────┘

┌─ High-Low Watermark Feedback System ─────────────────────┐

│ │

│ ┌─ Queue State Management ─┐ ┌─ Feedback Mechanism ──┐ │

│ │ │ │ │ │

│ │ ┌─── Normal State ───┐ │ │ ┌──── Upstream ────┐ │ │

│ │ │ Size < Low │ │ │ │ Check │ │ │

│ │ │ Accept all data │ │ │ │ Before Write │ │ │

│ │ └────────────────────┘ │ │ └──────────────────┘ │ │

│ │ │ │ │ │ │

│ │ ▼ │ │ │ │

│ │ ┌── High Watermark ──┐ │ │ │ │

│ │ │ Size >= High │ │ │ ┌──── Downstream ──┐ │ │

│ │ │ Stop accepting │ │ │ │ Feedback Enabled │ │ │

│ │ │ non-urgent data │ │ │ └──────────────────┘ │ │

│ │ └────────────────────┘ │ │ │ │

│ │ │ │ │ │ │

│ │ ▼ │ │ │ │

│ │ ┌─ Recovery State ──┐ │ │ │ │

│ │ │ Size <= Low │ │ │ │ │

│ │ │ Resume accepting data │ │ │ │

│ │ └───────────────────┘ │ │ │ │

│ └──────────────────────────┘ └───────────────────────┘ │

└──────────────────────────────────────────────────────────┘

Enterprise environments run multiple pipelines with different criticality levels. LoongCollector's priority-aware round-robin scheduler enforces strict priority between levels while scheduling pipelines fairly, in round-robin order, within each level. It follows four rules:

1. Priority Enforcement: Higher priority pipelines are always processed before lower priority ones

2. Fair Round-Robin: Within the same priority level, pipelines are processed in round-robin order

3. Continuity: If the last processed pipeline was in the current level, continue from the next pipeline in that level

4. Resource Yielding: Lower priority pipelines yield resources to higher priority ones when resources are constrained
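The four rules can be sketched as a scheduler that scans priority levels from highest to lowest and keeps a resume cursor per level, so the next pick within a level starts just after the pipeline served last there. Pipeline IDs, the `has_data` callback, and the `PriorityRoundRobin` class are hypothetical; the article does not show LoongCollector's scheduler code, and in this sketch resource yielding falls out of the strict scan order rather than an explicit mechanism.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical scheduler: levels_[0] is the highest priority level, and each
// level lists pipeline IDs in round-robin order.
class PriorityRoundRobin {
public:
    explicit PriorityRoundRobin(std::vector<std::vector<int>> levels)
        : levels_(std::move(levels)), last_(levels_.size()) {}

    // Returns the next pipeline that has pending data, or std::nullopt if none do.
    std::optional<int> Next(const std::function<bool(int)>& has_data) {
        // Rule 1: scan levels strictly from highest to lowest priority.
        for (std::size_t lv = 0; lv < levels_.size(); ++lv) {
            const std::vector<int>& ids = levels_[lv];
            const std::size_t n = ids.size();
            if (n == 0) continue;
            // Rule 3: resume just after the pipeline served last in this level.
            const std::size_t start = last_[lv] ? (*last_[lv] + 1) % n : 0;
            // Rule 2: round-robin over the level, wrapping around once.
            for (std::size_t k = 0; k < n; ++k) {
                const std::size_t i = (start + k) % n;
                if (has_data(ids[i])) {
                    last_[lv] = i;
                    return ids[i];
                }
            }
            // Rule 4: lower levels are reached only when this level has no data.
        }
        return std::nullopt;
    }

private:
    std::vector<std::vector<int>> levels_;
    std::vector<std::optional<std::size_t>> last_;  // per-level resume cursor
};
```

With the layout from the diagram (Pipeline1 high; Pipeline2 to Pipeline4 medium; Pipeline5 and Pipeline6 low), a medium level whose last pick was Pipeline3 resumes at Pipeline4, and the low level is served only while the higher levels are idle.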

┌─ High Priority ────────────────────────────────────────────────────┐

│ ┌───────────┐ │

│ │ Pipeline1 │ ◄─── Always processed first │

│ └───────────┘ │

│ │ │

│ ▼ (Priority transition) │

└────────────────────────────────────────────────────────────────────┘

┌─ Medium Priority (Round-robin cycle) ──────────────────────────────┐

│ ┌───────────┐ ┌─────────────────┐ ┌────────────┐ │

│ │ Pipeline2 │───▶│ Pipeline3(Last) │───▶│ Pipeline 4 │ │

│ └───────────┘ └─────────────────┘ └────────────┘ │

│ ▲ │ │

│ └────────────────────────────────────────┘ │

│ │

│ Note: Last processed was Pipeline3, so next starts from Pipeline4 │

│ │ │

│ ▼ (Priority transition) │

└────────────────────────────────────────────────────────────────────┘

┌─ Low Priority (Round-robin cycle) ─────────────────────────────────┐

│ ┌───────────┐ ┌───────────┐ │

│ │ Pipeline5 │───▶│ Pipeline6 │ │

│ └───────────┘ └───────────┘ │

│ ▲ │ │

│ └───────────────────┘ │

│ │

│ Note: Processed only when higher priority pipelines have no data │

└────────────────────────────────────────────────────────────────────┘

When one destination fails, traditional agents often let that failure affect all pipelines. LoongCollector instead applies adaptive concurrency limiting per destination.

┌─ ConcurrencyLimiter Configuration ───────────────────────────────────────┐

│ │

│ ┌─ Failure Rate Thresholds ────────────────────────────────────────────┐ │

│ │ │ │

│ │ ┌─ No Fallback Zone ─┐ ┌─ Slow Fallback Zone ─┐ ┌─ Fast Fallback ──┐ │ │

│ │ │ │ │ │ │ │ │ │

│ │ │ 0% ─────────── 10% │ │ 10% ──────────── 40% │ │ 40% ─────── 100% │ │ │

│ │ │ │ │ │ │ │ │ │

│ │ │ Maintain Current │ │ Multiply by 0.8 │ │ Multiply by 0.5 │ │ │

│ │ │ Concurrency │ │ (Slow Decrease) │ │ (Fast Decrease) │ │ │

│ │ └────────────────────┘ └──────────────────────┘ └──────────────────┘ │ │

│ └──────────────────────────────────────────────────────────────────────┘ │

│ │

│ ┌─ Recovery Mechanism ─┐ │

│ │ • Additive Increase │ ← +1 when success rate = 100% │

│ │ • Gradual Recovery │ ← Linear scaling back to max │

│ └──────────────────────┘ │

└──────────────────────────────────────────────────────────────────────────┘

Each concurrency limiter uses an adaptive algorithm inspired by AIMD (Additive Increase, Multiplicative Decrease) network congestion control. When sends fail, concurrency is cut quickly; when sends succeed, it grows gradually. To avoid overreacting to network jitter, statistics are collected over a time window or batch of data rather than per request, which prevents rapid oscillation of the concurrency limit.

With this strategy, when network anomalies occur at one destination, the number of data packets allowed for that destination decays quickly, minimizing the impact on other destinations. During network interruptions, the sleep period approach cuts unnecessary sends as far as possible while ensuring that transmission resumes within a bounded time once the network is restored.
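The thresholds in the ConcurrencyLimiter diagram translate into a small per-destination limiter. This sketch assumes a count-based window (the article mentions a time window or batch), a floor of one in-flight request, a limit that starts at its maximum, and that exactly 10% and 40% fall into the lower zone; the `ConcurrencyLimiter` class and its methods are hypothetical. Integer arithmetic (`current * 4 / 5`, `current / 2`) applies the 0.8 and 0.5 factors with rounding down and avoids floating-point comparisons at the zone boundaries.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

constexpr std::uint32_t kMinConcurrency = 1;  // assumed floor

// AIMD-style limiter for one destination.
class ConcurrencyLimiter {
public:
    ConcurrencyLimiter(std::uint32_t max_concurrency, std::uint32_t window)
        : max_(max_concurrency), current_(max_concurrency), window_(window) {
        assert(max_concurrency >= kMinConcurrency && window >= 1);
    }

    std::uint32_t Limit() const { return current_; }
    bool CanSend(std::uint32_t in_flight) const { return in_flight < current_; }

    // Record one send result; the limit is adjusted once per full window.
    void OnResult(bool success) {
        ++total_;
        if (!success) ++failures_;
        if (total_ < window_) return;

        if (failures_ == 0) {
            current_ = std::min<std::uint32_t>(max_, current_ + 1);  // additive increase
        } else if (failures_ * 10 <= total_) {
            // failure rate <= 10%: no fallback, keep current concurrency
        } else if (failures_ * 10 <= total_ * 4) {
            current_ = std::max<std::uint32_t>(kMinConcurrency, current_ * 4 / 5);  // <= 40%: x0.8
        } else {
            current_ = std::max<std::uint32_t>(kMinConcurrency, current_ / 2);  // > 40%: x0.5
        }
        total_ = 0;
        failures_ = 0;
    }

private:
    std::uint32_t max_;
    std::uint32_t current_;
    std::uint32_t window_;
    std::uint32_t total_ = 0;
    std::uint32_t failures_ = 0;
};
```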

LoongCollector has been validated in some of the world's most demanding production environments, processing real-world workloads that would break most observability systems. As the core data collection engine powering Alibaba Cloud SLS (Simple Log Service)—one of the world's largest cloud-native observability platforms—LoongCollector processes observability data for tens of millions of applications across Alibaba's global infrastructure.

Scalability

Network Resilience

Chaos Engineering

LoongCollector represents more than just performance optimization—it's a fundamental rethinking of how observability data should be collected, processed, and delivered at scale. By open-sourcing this technology, we're democratizing access to enterprise-grade performance that was previously available only to the largest tech companies.

🚀 GitHub Repository: https://github.com/alibaba/loongcollector

📊 Benchmark Suite: Clone our complete benchmark tests and reproduce these results in your environment

📖 Documentation: Comprehensive guides for migration, optimization, and advanced configurations

💬 Community Discussion: Join our Discord for technical discussions and architecture deep-dives

Challenge us: If you're running Filebeat, FluentBit, or Vector in production, we're confident LoongCollector will deliver significant improvements in your environment. Run our benchmark suite and let the data speak.

Contribute: LoongCollector is built by engineers, for engineers. Whether it's performance optimizations, new data source integrations, or reliability improvements—every contribution shapes the future of observability infrastructure.

How does this compare to your current observability stack?

What performance bottlenecks are you experiencing?

What additional optimizations would you like to see?

We're confident in our numbers, but we want to see yours. Run our benchmark suite against your current setup and share the results. If you can beat our performance, we'll feature your optimizations in our next release.

The next time your log collection agent consumes more resources than your actual application, remember: there's a better way. LoongCollector proves that high performance and enterprise reliability aren't mutually exclusive—they're the foundation of modern observability infrastructure.

Built with ❤ by the Alibaba Cloud Observability Team. Battle-tested across hundreds of petabytes of daily production data and tens of millions of instances.
