By Shantanu Kaushik

Cloud-native applications are built from lightweight, independent, loosely coupled services that are designed to deliver higher business value. A cloud-native approach accelerates application development and builds in observability, metric monitoring, and feedback collection, which keeps the work focused on business value.

Organizations have to change how they deliver solutions and how they expand their businesses. Fast-paced, application-centric models are now necessary to keep up with ever-evolving industry standards. The primary objective of cloud-native infrastructure is to make full use of the cloud computing architecture and to build applications with the latest techniques and technologies.

When you develop for the cloud, you assume a set of standard methods and protocols that let applications behave consistently. The application is flexible enough to be sent to production across different cloud environments. These environments can include multi-cloud, hybrid cloud, and your default cloud environment.

Organizations have shifted toward the cloud to make their solutions more flexible and reliable. The shift started at a steady pace and has grown rapidly over the last few years. In most cases, strategists have been wise to avoid a plug-and-play move to the cloud and to adopt a hybrid cloud setup instead. If you use some public cloud services while keeping some applications on-premises, you already have a hybrid setup.

The most noteworthy benefit of the cloud is the ability to provision resources on demand. Automating complex steps raises productivity and improves resource management, which keeps the system stable and elastic. Application lifecycle automation with a DevOps pipeline is another benefit associated with the cloud.

The question is, “How will you utilize all the benefits associated with the cloud?” The answer is cloud-native. Cloud-native development builds applications for continuous integration, so that new features and security upgrades can be delivered as they are needed. The main aim is to accelerate the complete application lifecycle while improving fault tolerance.

Collaboration gained a new meaning with DevOps. Organizations are working continuously to automate the entire application lifecycle and introduce more automation points to extract better value out of a solution. Automation is the key to seamless collaboration between teams. There is continuous evolution in the industry towards a more optimized solution with new methods, such as DataOps, AIOps, and DevSecOps.

While building cloud-native applications, keep in mind the core concepts below. They are the practical goals of going cloud-native.

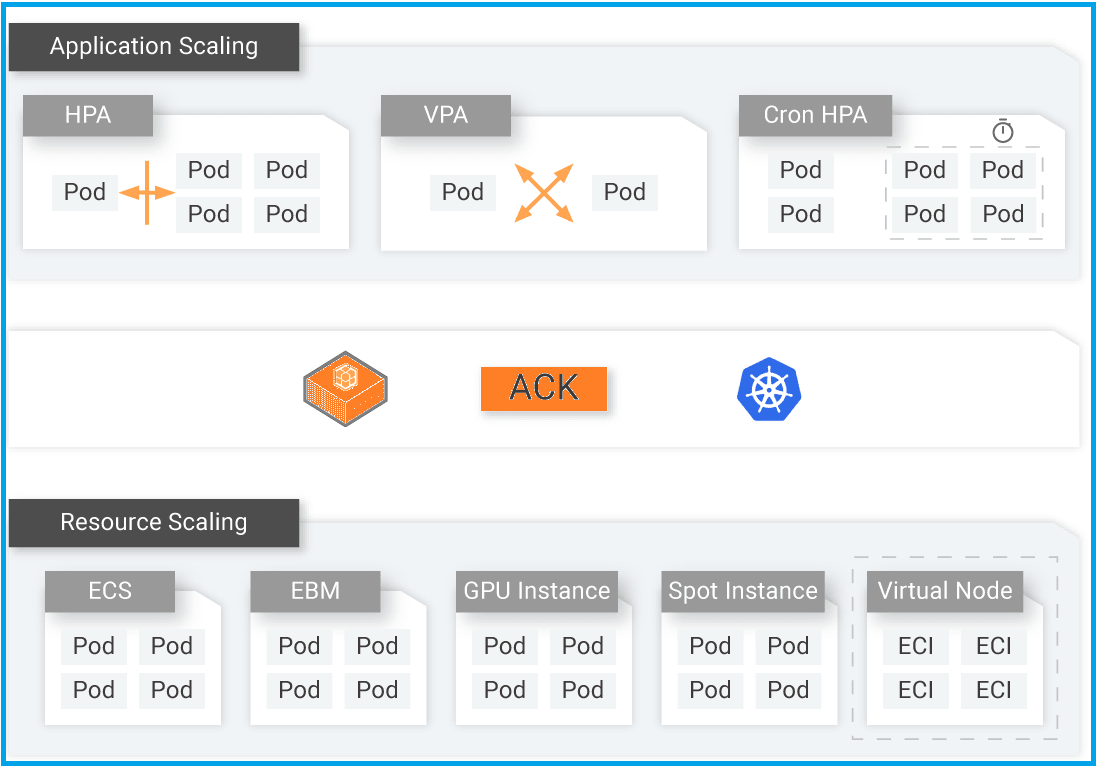

Services don’t always have steady workloads. Demand and traffic change continually. Alibaba Cloud Auto Scaling adjusts the required resources accordingly, without human intervention. Manual adjustments are error-prone.

Cloud-native on Alibaba Cloud is built on Kubernetes. Container Service for Kubernetes (ACK) uses the Auto Scaling functionality to adjust the number of containers depending on usage. You can control how Auto Scaling responds to service requests using policies and rules.
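To make the idea of a scaling policy concrete, here is a minimal sketch of a proportional scaling rule. It is modeled on the formula used by the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)) and is not the actual implementation of ACK or Auto Scaling. The function name, the default bounds, and the sample CPU figures are assumptions chosen for illustration.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Return the replica count a proportional scaling rule would request.

    Hypothetical helper: the name and defaults are illustrative only.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")

    ratio = current_metric / target_metric

    # Inside the tolerance band, keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        wanted = current_replicas
    else:
        wanted = math.ceil(current_replicas * ratio)

    # Policy bounds: never go below the floor or above the ceiling.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 containers averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90.0, target_metric=60.0))  # 6
```

The minimum and maximum bounds play the role of the policies and rules mentioned above: the metric decides the direction and size of the change, while the bounds limit how far the system may scale.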

Alibaba Cloud ACK uses Application Real-Time Monitoring Service (ARMS), Application High Availability Service (AHAS), and Log Service to analyze resource usage and collect service metrics.

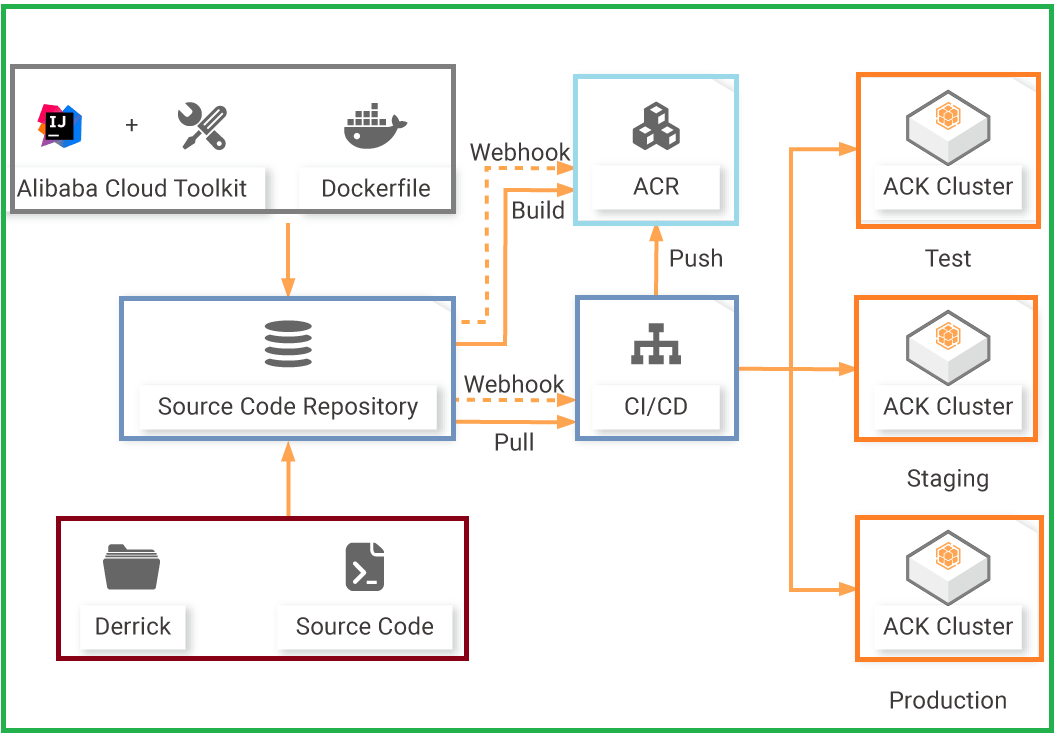

With the cloud-native architecture at your disposal, you can set up an effective DevOps pipeline to improve the efficiency of your software development lifecycle (SDLC) and accelerate application development. An effective DevOps pipeline includes a code repository, an image registry such as Container Registry (ACR), and an automation service that administers continuous integration. Jenkins is a popular choice, with extension support from Alibaba Cloud. Working with Kubernetes, the registry replicates the application image to any location in its directory around the globe. The entire process is automated, with a channeled approach for build, packaging, and delivery.
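The build, packaging, and delivery flow can be sketched as a chain of stages that share one context and stop at the first failure. This is a toy model under stated assumptions, not how Jenkins or any Alibaba Cloud service works internally: the stage functions, the context keys, and the `registry.example.com` address are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# A stage reads and updates the shared context and returns True on success.
Context = Dict[str, str]
Stage = Callable[[Context], bool]


def build(ctx: Context) -> bool:
    # Pretend to compile the code and record the resulting artifact name.
    ctx["artifact"] = f"{ctx['app']}-{ctx['commit']}.tar"
    return True


def package(ctx: Context) -> bool:
    # Tag the image with the commit so every build is traceable.
    ctx["image"] = f"{ctx['registry']}/{ctx['app']}:{ctx['commit']}"
    # Packaging only succeeds if the build stage produced an artifact.
    return "artifact" in ctx


def deliver(ctx: Context) -> bool:
    # Record which image was rolled out.
    ctx["deployed"] = ctx["image"]
    return True


def run_pipeline(stages: List[Tuple[str, Stage]], ctx: Context) -> List[str]:
    """Run stages in order and return the names of those that succeeded."""
    completed: List[str] = []
    for name, stage in stages:
        if not stage(ctx):
            break  # Later stages must not run after a failure.
        completed.append(name)
    return completed


if __name__ == "__main__":
    context = {"app": "shop", "commit": "abc123",
               "registry": "registry.example.com"}  # hypothetical values
    stages = [("build", build), ("package", package), ("deliver", deliver)]
    print(run_pipeline(stages, context))
    print(context["deployed"])
```

Stopping at the first failed stage is the key design choice: a broken build never reaches packaging, and an unpackaged build never reaches delivery.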

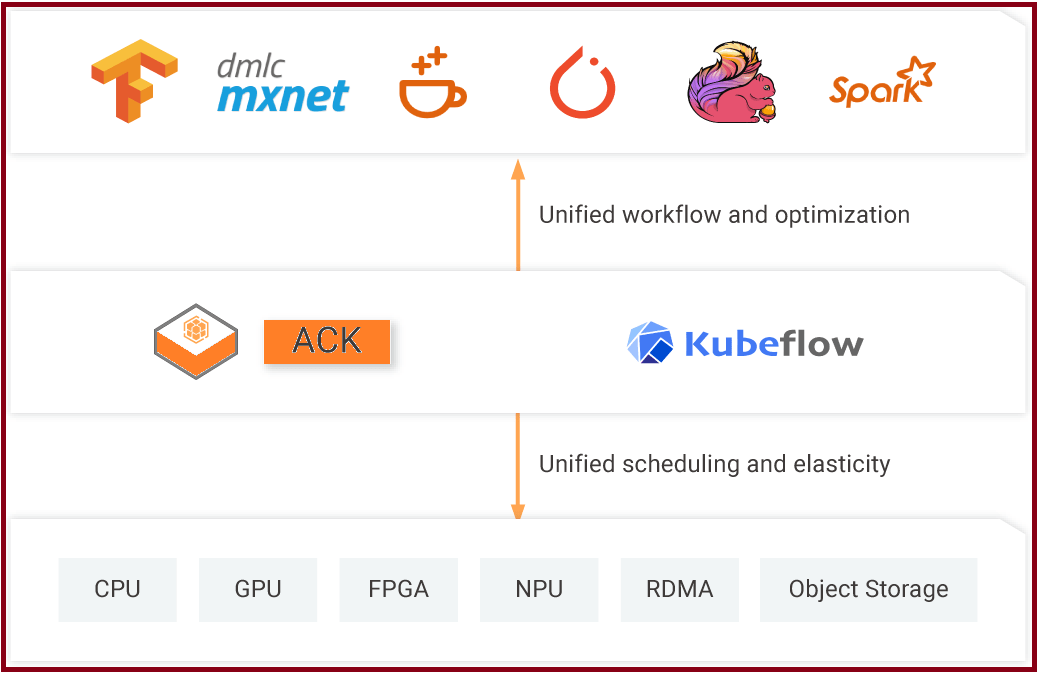

Artificial Intelligence (AI) workloads are resource-intensive because training is a repetitive process. The computing capacity they require calls for an extensively managed service with carefully allocated resources.

AI workloads include data analysis cycles and training to develop models at a very large scale. The cloud-native architecture supports deep-learning development and inference platforms that work with AI frameworks like TensorFlow, PyTorch, and MXNet.

Alibaba Cloud solutions with cloud-native architecture support Kubeflow, an open-source toolkit that makes it much easier to deploy machine learning and AI workflows on Kubernetes. Solutions developed on Alibaba Cloud (using the frameworks mentioned above) can be sent to production as images. These images can be integrated with deep-learning tools like Arena. You can use resources, such as Elastic Compute Service (ECS), Alibaba Cloud e-HPC, Object Storage Service (OSS), Server Load Balancer (SLB), and E-MapReduce, for data preparation, model development and training, predictions, and evaluation, among other tasks.

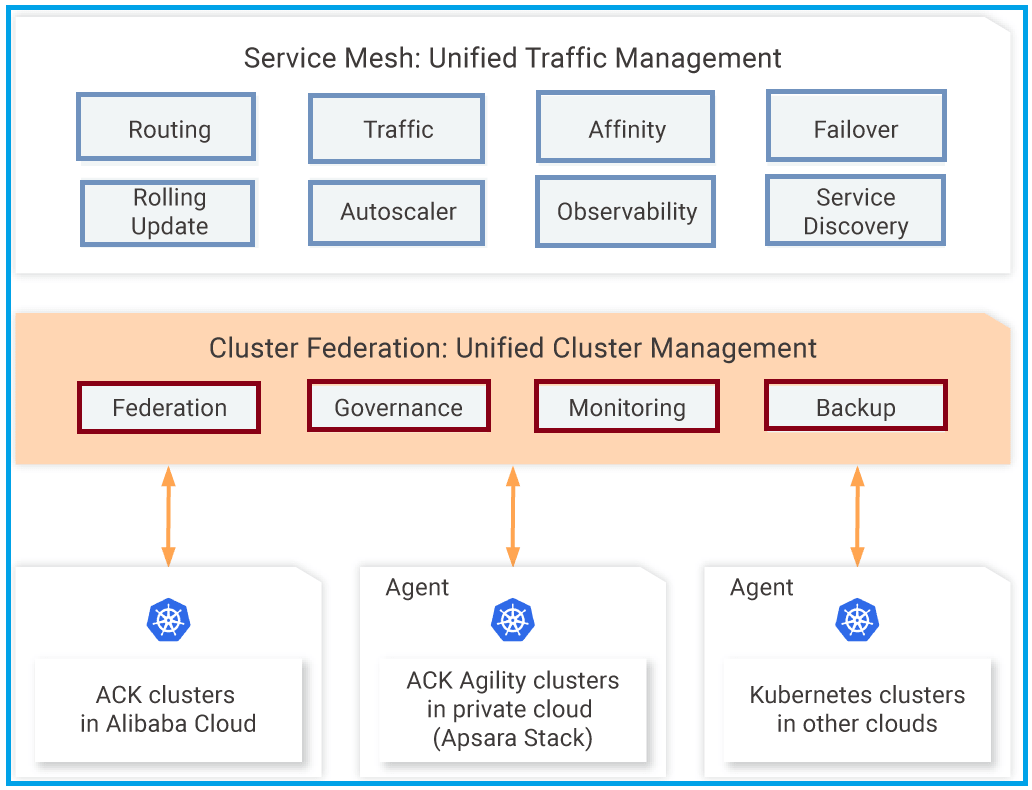

Compared to multi-cloud, a single cloud is much more exposed to service outages and disaster scenarios. Multi-cloud can offer enormous benefits over a single-cloud architecture since you can leverage high availability across multiple regions. Hybrid cloud offers the same advantage as multi-cloud for high-availability scenarios that handle critical workloads.
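A short calculation shows why redundancy helps. The sketch below assumes that deployments fail independently, which is an idealization, since real outages can be correlated. The 99.9% figure is an illustrative number, not a service level quoted by any provider, and both function names are hypothetical.

```python
from typing import Iterable

HOURS_PER_YEAR = 365 * 24


def combined_availability(availabilities: Iterable[float]) -> float:
    """Probability that at least one deployment is up.

    Assumes failures are independent, so the chance that every
    deployment is down at once is the product of the individual
    downtime fractions.
    """
    all_down = 1.0
    for a in availabilities:
        if not 0.0 <= a <= 1.0:
            raise ValueError("availability must be between 0 and 1")
        all_down *= (1.0 - a)
    return 1.0 - all_down


def expected_downtime_hours(availability: float) -> float:
    """Expected hours of downtime per year for a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    single = combined_availability([0.999])
    dual = combined_availability([0.999, 0.999])
    print(f"single: {single:.6f}, {expected_downtime_hours(single):.2f} h/year")
    print(f"dual:   {dual:.6f}, {expected_downtime_hours(dual):.4f} h/year")
```

Under these assumptions, one deployment at 99.9% is down for roughly 8.76 hours a year, while two independent deployments are both down for well under a minute a year. Any correlation between failures narrows that gap.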

Alibaba Cloud Container Service for Kubernetes (ACK) provides a highly scalable architecture. It works like an operating system for the cloud, with Kubernetes driving the distributed architecture and enabling a wide range of features for a flexible solution.

Cloud-native development leverages the modularity of an architecture. Other factors matter too, including the loose coupling of services and the independence that microservices offer. Microservices communicate through APIs, and each one provides a distinct business capability. Containerization helps applications run independently. Communication between microservices can be managed with a service mesh.
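As a rough illustration of loose coupling through an API, the sketch below runs a tiny inventory service over HTTP and has a separate piece of order logic call it. It uses only the Python standard library. The service names, the `/stock/<sku>` route, and the stock data are hypothetical, and a real deployment would put each service in its own container rather than in one process.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory data owned by the inventory service.
STOCK = {"sku-1": 12, "sku-2": 0}


class InventoryHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Route: GET /stock/<sku>
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "stock" and parts[1] in STOCK:
            self._send(200, {"sku": parts[1], "quantity": STOCK[parts[1]]})
        else:
            self._send(404, {"error": "not found"})

    def _send(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # Keep the example output quiet.


def start_inventory_service() -> HTTPServer:
    # Port 0 asks the operating system for any free port.
    server = HTTPServer(("127.0.0.1", 0), InventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def can_fulfil(host: str, port: int, sku: str, wanted: int) -> bool:
    """Order-side logic: it knows only the inventory service's HTTP API."""
    conn = HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", f"/stock/{sku}")
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            return False
        return json.loads(body)["quantity"] >= wanted
    finally:
        conn.close()


if __name__ == "__main__":
    server = start_inventory_service()
    host, port = server.server_address[0], server.server_address[1]
    print(can_fulfil(host, port, "sku-1", 5))
    print(can_fulfil(host, port, "sku-2", 1))
    server.shutdown()
    server.server_close()
```

The order logic never touches `STOCK` directly. Because the only contract is the HTTP route and the JSON shape, the inventory service could be rewritten, rescaled, or moved without changing its caller.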

You don’t have to start by implementing a microservices architecture to accelerate application delivery with cloud-native. DevOps practices, such as continuous integration and continuous deployment (CI/CD), fully automated deployment operations, and standardized development environments, also support legacy applications.
