By Li Yuqian from Alibaba Cloud ECS

As enterprises digitalize and migrate fully to the cloud, more of them will grow on the cloud. Cloud computing's cluster management and scheduling technology supports all on-cloud business applications. Engineers today should understand cluster management and scheduling the way they once needed to understand the operating system. In the cloud era, a better understanding of the cloud is what lets engineers make the most of it.

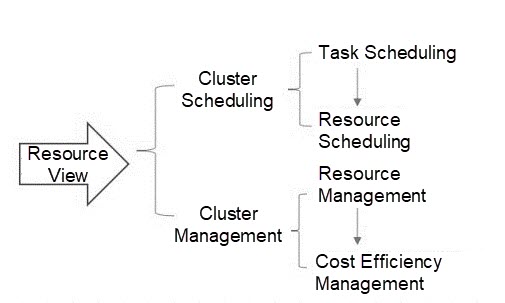

Figure 1: Cluster Scheduling and Management in Resource View

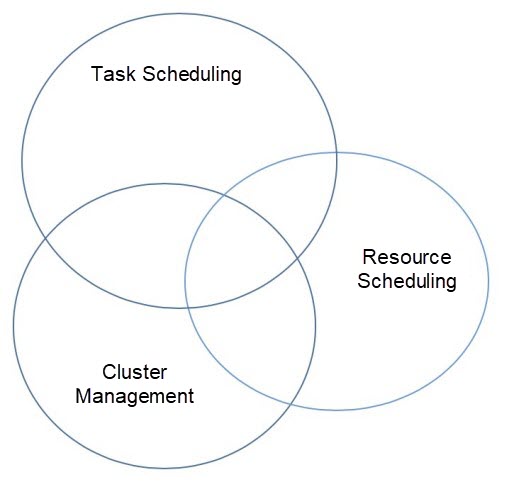

As shown in Figure 1, cluster scheduling includes task scheduling and resource scheduling, and cluster management includes resource management and cost efficiency management. These four are sometimes simplified into task scheduling, resource scheduling, and resource management, which downplays cost efficiency management. In practice, however, following best practices in task scheduling, resource scheduling, and resource management is itself the practice of cost efficiency management, as the relationships in Figure 2 show.

Figure 2: Relationship between Job Scheduling, Resource Scheduling, and Cluster Management

For example, when a user places an order to purchase resources through the Alibaba Cloud console or OpenAPI, that order is equivalent to a task. When many users purchase ECS computing resources at the same time, their requests amount to multiple tasks. Each request is queued and goes through task scheduling, such as routing. After receiving a specific task, the backend scheduling system selects the most suitable physical machine in the physical data center, allocates resources, and creates the computing instance. This process is called resource scheduling. Supplying the physical resources to be scheduled involves server procurement, software and hardware initialization, service launch, and fault maintenance. This process of resource supply management represents cluster management.
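The split between task scheduling and resource scheduling described above can be sketched in a few lines. This is only an illustration under stated assumptions, not how ECS works internally: the `Host` and `Request` types, the FIFO queue, and the best-fit rule in `pick_host` are all hypothetical choices made for the example.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    free_cpu: int
    free_mem_gb: int


@dataclass
class Request:
    user: str
    cpu: int
    mem_gb: int


def pick_host(hosts, req):
    """Resource scheduling: keep hosts that fit, then choose one of them."""
    candidates = [h for h in hosts
                  if h.free_cpu >= req.cpu and h.free_mem_gb >= req.mem_gb]
    if not candidates:
        return None
    # Hypothetical policy: best fit, i.e. the host left with the least spare capacity.
    return min(candidates,
               key=lambda h: (h.free_cpu - req.cpu, h.free_mem_gb - req.mem_gb))


def schedule(queue, hosts):
    """Task scheduling: drain queued requests in FIFO order and allocate resources."""
    placements = {}
    while queue:
        req = queue.popleft()
        host = pick_host(hosts, req)
        if host is None:
            placements[req.user] = None  # no host has enough stock
            continue
        host.free_cpu -= req.cpu
        host.free_mem_gb -= req.mem_gb
        placements[req.user] = host.name
    return placements


hosts = [Host("pm-1", 8, 32), Host("pm-2", 4, 16)]
queue = deque([Request("alice", 4, 16), Request("bob", 4, 8), Request("carol", 8, 16)])
result = schedule(queue, hosts)
```

Here the queue stands in for task scheduling and `pick_host` for resource scheduling. Keeping the hosts stocked and healthy, which is the cluster management part, is outside the sketch.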

Cluster scheduling and management in this article can be simplified and understood as cluster resource scheduling and management.

Today, as enterprises pursue digital transformation and cloud migration, understanding how cloud providers deliver resource services helps them connect to and use the cloud while managing and optimizing their own resources.

I have been engaged in cluster resource scheduling and management for many years. Based on my experience, I think large-scale cluster scheduling and management started with the idea of the datacenter as one computer. For a time, systems such as Borg (billed as the nuclear weapon of Internet companies) were not shared with the outside world. With the evolution of technology, especially the rapid development of cloud computing over the past decade, cluster scheduling and management technologies have once again come into public view, typically represented by Kubernetes, the cluster scheduling and management system open-sourced by Google and incubated by CNCF. Kubernetes is often called a distributed operating system, the counterpart of Linux as a stand-alone operating system. The relevant descriptions are listed below:

From a commercial perspective, Kubernetes provides only the kernel space of a distributed operating system. The user-space content needed for commercialization is left to the community, which is drawing in programmers from around the world to build it together. From both a technical and a commercial perspective, there is no doubt that Kubernetes, as operating-system-level system software, lays the foundation with its business abstraction, architecture design, and interface definition. This design also strengthens its vitality, especially the growth of its ecosystem and the likelihood of commercial returns.

Today, cluster scheduling and management systems represented by Kubernetes are no longer mysterious, just like search engine technology. If cluster scheduling and management serve to cut costs within an enterprise, then search engine advertising technology serves to bring in revenue. An enterprise must do both, bringing in revenue and cutting costs, to survive.

For a long time to come, cluster scheduling and management, especially at large scale, will remain a necessary infrastructure capability for large digital enterprises. The development of this field deserves continuous exploration. Scheduling and managing cluster resources touches a great many things, which makes it the foundation of IT services. A system of this kind depends heavily on its up-front design, because once the design goes wrong, the cost of later correction is huge.

There are three basic principles for architecture design at the IaaS layer:

1. Simplification

This principle is mainly about keeping the active link and dependent components of a resource scheduling and management system as simple as possible. For example, performance collection should stay simple and should not take on data analysis or alerting. Those tasks should be handed to the data system for centralized processing.

2. Stability

Server resources are the cornerstone of IT systems, with businesses running on them. When a customer's business runs on the cloud, it means the cloud platform is fully responsible for the customer, and the cloud platform must ensure the stability of its servers. Therefore, the technical design of resource scheduling and management must give priority to stability. For example, customer resources are scheduled by scattering the customer instances across different physical machines as much as possible. The purpose is to prevent the failure of a physical machine from affecting multiple instances of the same customer. Scattering requires more physical server resources, and cloud platforms have to pay more for these resources.

3. Exception-Oriented Design

Hardware failures are a fact of life, and software bugs are also unavoidable. Therefore, exception-oriented design is necessary: the architecture itself provides support for handling exceptions. For example, once exception events are defined, whenever one occurs anywhere in the system, the exception subscription processing module can detect it and handle it quickly.
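Two of the principles above lend themselves to a short sketch: scattering a customer's instances across physical machines (Stability) and subscribing to defined exception events (Exception-Oriented Design). The code below is a minimal illustration, not a production design. The names `place_scattered` and `ExceptionBus`, the `host_down` event, and the choice to ignore host capacity are assumptions made for brevity.

```python
from collections import Counter, defaultdict


def place_scattered(customer, count, hosts, placements):
    """Place `count` instances, always choosing the host that currently holds
    the fewest instances of this customer (ties broken by host name).
    Host capacity is deliberately ignored in this sketch."""
    chosen = []
    for _ in range(count):
        per_host = Counter(h for h, c in placements if c == customer)
        host = min(hosts, key=lambda h: (per_host[h], h))
        placements.append((host, customer))
        chosen.append(host)
    return chosen


class ExceptionBus:
    """A tiny in-process publish/subscribe hub for defined exception events."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        # Call every handler subscribed to this event type and collect the results.
        return [handler(payload) for handler in self._handlers.get(event_type, [])]


placements = []  # list of (host, customer) pairs
chosen = place_scattered("cust-a", 4, ["pm-1", "pm-2", "pm-3"], placements)

bus = ExceptionBus()


def on_host_down(host):
    # Hypothetical handler: report which customers have instances on the failed host.
    return sorted({c for h, c in placements if h == host})


bus.subscribe("host_down", on_host_down)
affected = bus.publish("host_down", "pm-2")
```

Because the four instances are spread over three machines, the failure of `pm-2` touches only one of them, and the subscriber learns about it through the event rather than by polling.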

Architecture design is a broad topic. In my opinion, we must design and make trade-offs based on the specific business. The main factors are listed below:

1. Business Design

Business design aims to sort out and define the customers, problems, and value, along with the relationships and boundaries with upstream and downstream systems, so that appropriate trade-offs can be made during system architecture design.

2. Data Design

Data design covers, for example, data consistency, timeliness, security, storage, and access models.

3. Design of the Active Link or High-Frequency Link

Every system has its own core service scenario. The requests and processing in this scenario constitute the active link, or high-frequency link. Over time, this core link tends to accumulate extra services without anyone noticing. Trimming services from it is therefore recommended to keep the main scenario stable and controllable.

4. Stability Design

The overall design before, during, and after an incident needs to be considered comprehensively. This includes sufficient prevention in advance, rapid fault location, stop-loss, and recovery during the incident, and post-incident review and fault drills afterwards.

5. Exception-Oriented Design

Assume from the beginning that exceptions will occur in the system. This significantly reduces the complexity of troubleshooting later faults.

6. Large-Scale Design

The importance of scale depends on the stage of the business. Generally, when the business is in its initial stage, there is no need to pay attention to the impact of scale. However, working some scalable design ideas in from the beginning may help the system serve large-scale requests later.

7. Organization Design

There is a saying that organization determines architecture. During project R&D, the influence of organizational structure also needs to be considered.

Of course, this is not an exhaustive list. Depending on your organization, you may have other factors affecting architecture design, including drills, tests, throttling, microservices, and capacity planning.

First of all, any architecture must be able to solve the problem. In the infrastructure field, architecture design must put scale and stability first and cost-effectiveness second. In a vertical business link, infrastructure sits at the bottom, and the lower the layer, the simpler it should be. For infrastructure, trade-offs are therefore the key. A cluster scheduling architecture should be as simple as possible while still solving the problem, and it should support large scale with stability as the priority.

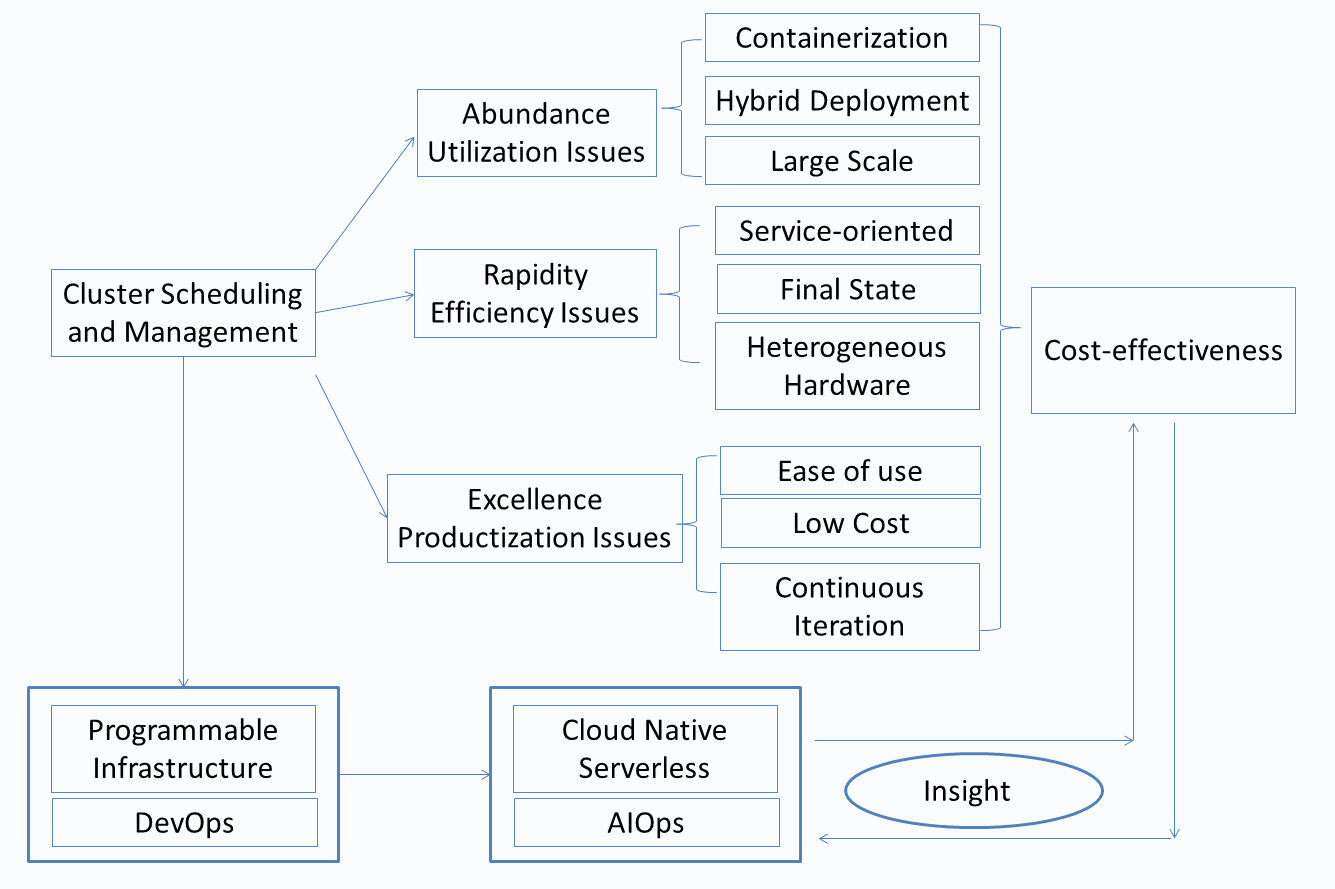

The major issues that need to be addressed in cluster scheduling and management can be summed up in four words, as shown in Figure 3.

Figure 3: "Abundance, Rapidity, Excellence, and Cost-Effectiveness" in Cluster Scheduling and Management

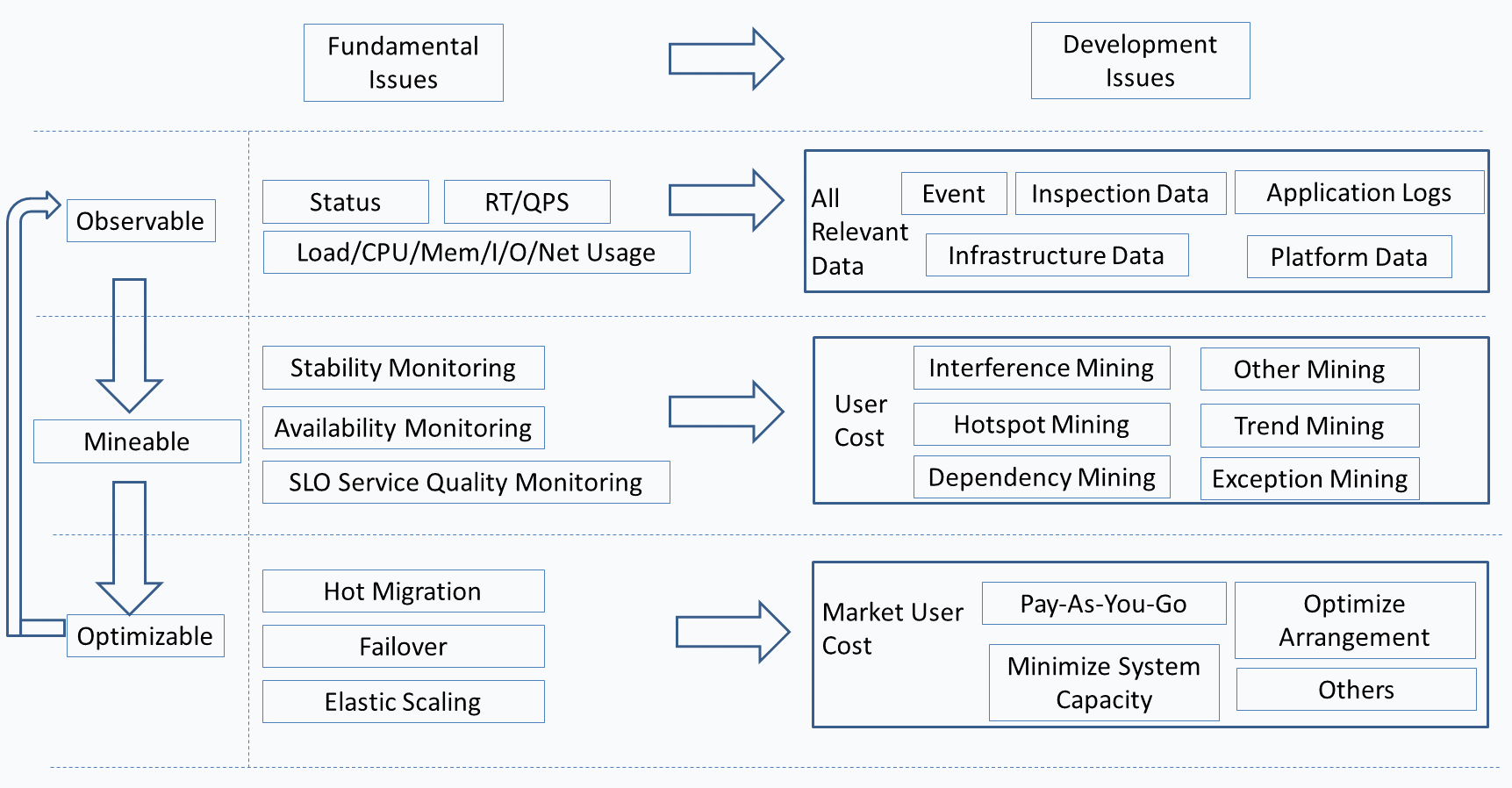

At the macro level, the cluster scheduling architecture supports utilization, efficiency, and productization. It also supports the evolution from programmable infrastructure + DevOps to cloud-native Serverless + AIOps. This evolution requires a systematic Insight capability. Figure 4 breaks Insight down further and shows the focus of each stage of its development.

Figure 4: Breaking Down Insight and the Focus of its Different Stages of Development

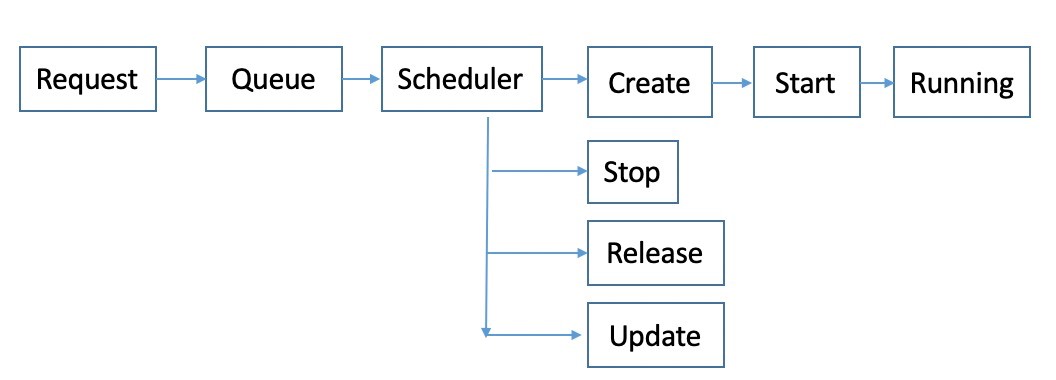

A resource request is processed as shown in Figure 5. First, the request is submitted. If it is accepted, it enters the allocation queue for scheduling. Instance resources are allocated next, and finally the instance starts and runs. If the request is denied, the reason could be a quota or permission restriction, incorrect parameters, or insufficient resource stock.
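The flow above can be sketched as an admission check followed by an allocation queue. This is a simplified illustration, not the actual ECS pipeline. The request fields, the `Denied` enum, and the instance type name `small` are hypothetical, and the four denial reasons simply mirror the ones listed in the text.

```python
from collections import deque
from enum import Enum


class Denied(Enum):
    QUOTA = "quota restriction"
    PERMISSION = "permission restriction"
    BAD_PARAMS = "incorrect parameters"
    NO_STOCK = "insufficient resource stock"


def admit(req, quota_left, allowed_users, stock):
    """Return None when the request passes, otherwise the denial reason."""
    if req.get("count", 0) <= 0 or "instance_type" not in req:
        return Denied.BAD_PARAMS
    if req.get("user") not in allowed_users:
        return Denied.PERMISSION
    if req["count"] > quota_left.get(req["user"], 0):
        return Denied.QUOTA
    if req["count"] > stock.get(req["instance_type"], 0):
        return Denied.NO_STOCK
    return None


def process(requests, quota_left, allowed_users, stock):
    """Submit -> admit -> allocation queue -> allocate -> run."""
    queue, outcomes = deque(), []
    for req in requests:
        reason = admit(req, quota_left, allowed_users, stock)
        if reason is None:
            queue.append(req)
        else:
            outcomes.append((req.get("user"), "denied", reason))
    while queue:
        req = queue.popleft()
        # Re-check at allocation time: earlier queued requests may have used up
        # quota or stock since this request was admitted.
        reason = admit(req, quota_left, allowed_users, stock)
        if reason is not None:
            outcomes.append((req["user"], "denied", reason))
            continue
        stock[req["instance_type"]] -= req["count"]
        quota_left[req["user"]] -= req["count"]
        outcomes.append((req["user"], "running", None))
    return outcomes


requests = [
    {"user": "alice", "instance_type": "small", "count": 2},
    {"user": "bob", "instance_type": "small", "count": 5},
    {"user": "mallory", "instance_type": "small", "count": 1},
    {"user": "alice", "instance_type": "small", "count": 0},
]
quota_left = {"alice": 4, "bob": 3}
stock = {"small": 10}
outcomes = process(requests, quota_left, {"alice", "bob"}, stock)
```

Only the first request reaches the running state. The other three are denied for quota, permission, and parameter reasons respectively, before they ever enter the allocation queue.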

Figure 5: Resource Request Processing

A process-oriented scheduling architecture lets users see key information throughout the entire process, but users must also actively watch for exception results and handle them. For R&D, it is necessary to check the status returned by downstream APIs properly and clean up resources to avoid resource leaks. Process-oriented scheduling is also a relative notion. A business requirement usually consists of several steps, and viewed from any single step, the scheduling behind it can be regarded as service-oriented.
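The point about checking downstream status and cleaning up can be illustrated with a small compensation pattern: each step carries an undo action, and a failure rolls back whatever was already allocated. This is a minimal sketch. The step names and the in-memory `resources` set are hypothetical stand-ins for real downstream API calls.

```python
def run_steps(steps):
    """Run (name, do, undo) steps in order. If a step reports failure or raises,
    undo the completed steps in reverse order so nothing leaks."""
    done = []
    for name, do, undo in steps:
        try:
            ok = do()
        except Exception:
            ok = False
        if not ok:
            for _, prev_undo in reversed(done):
                prev_undo()
            return f"failed at {name}"
        done.append((name, undo))
    return "ok"


resources = set()  # stands in for resources held in downstream systems


def allocate(name):
    def do():
        resources.add(name)
        return True  # downstream call reported success
    return do


def release(name):
    return lambda: resources.discard(name)


steps = [
    ("allocate_disk", allocate("disk"), release("disk")),
    ("allocate_ip", allocate("ip"), release("ip")),
    ("start_instance", lambda: False, lambda: None),  # simulated downstream failure
]
status = run_steps(steps)
```

After the simulated failure at `start_instance`, the disk and IP allocated by the earlier steps have been released again, which is the resource-leak protection the paragraph above calls for.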

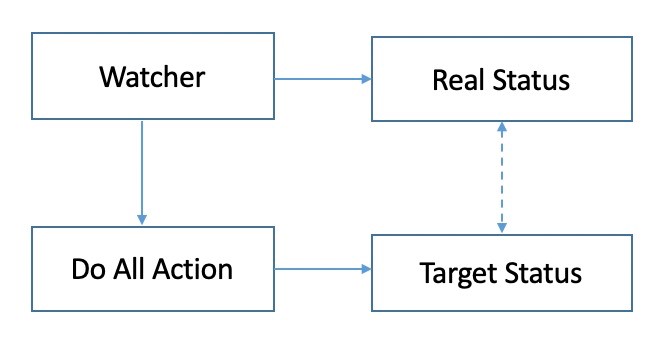

The abstraction of the final-state-oriented scheduling architecture is shown in Figure 6. Compared with process-oriented scheduling, final-state-oriented scheduling hides the process details, so failures along the way are imperceptible to users. As a result, the infrastructure becomes programmable: the final state for each scenario and requirement can be programmed, which amounts to programming the entire infrastructure.

Figure 6: Final State-Oriented Abstraction

A final state means that the target state must be maintained continuously rather than reached once. The final state represents an idea or requirement, and the specific failures encountered while realizing it are made transparent to the user. The final state is therefore closer to how people naturally think and behave: what you think, or what you see, is what you get. To achieve the final state from the business perspective, one possible strategy is to shift the requirement for business consistency and final state onto storage consistency. In other words, rely on storage consistency to simplify service consistency.

For example, a workflow-based state change mechanism splits a requirement into subtasks that are re-entrant and can run concurrently, changing a set of states continuously to drive the business towards the target state. Likewise, a consistent resource view is implemented through distributed storage, locks, and message subscriptions. The Paxos protocol, the Raft protocol, and their improved versions reduce the complexity of the business significantly.
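The idea of continuously driving the system towards a target state can be sketched as a re-entrant reconcile pass. This is a minimal, single-process illustration that assumes the desired and actual states are plain dictionaries of replica counts. A real system would read and write these through consistent storage, which is where protocols such as Paxos or Raft come in.

```python
def reconcile(desired, actual):
    """One idempotent pass: compute the actions needed to move `actual` to
    `desired`, apply them to `actual`, and return them. It is safe to call
    repeatedly; when the states already match, it returns an empty list."""
    actions = []
    for name, want in desired.items():
        have = actual.get(name, 0)
        if have < want:
            actions.append(("create", name, want - have))
        elif have > want:
            actions.append(("delete", name, have - want))
        actual[name] = want
    for name in list(actual):
        if name not in desired:
            actions.append(("delete", name, actual.pop(name)))
    return actions


desired = {"web": 3}
actual = {"web": 1, "old": 2}

first = reconcile(desired, actual)   # drives towards the final state
second = reconcile(desired, actual)  # nothing left to do

actual["web"] = 2                    # simulate an instance failing later
third = reconcile(desired, actual)   # the final state is restored, not just reached once
```

The third pass is the part that distinguishes a final state from a one-off process: the user never re-submits anything, yet the lost instance is recreated.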

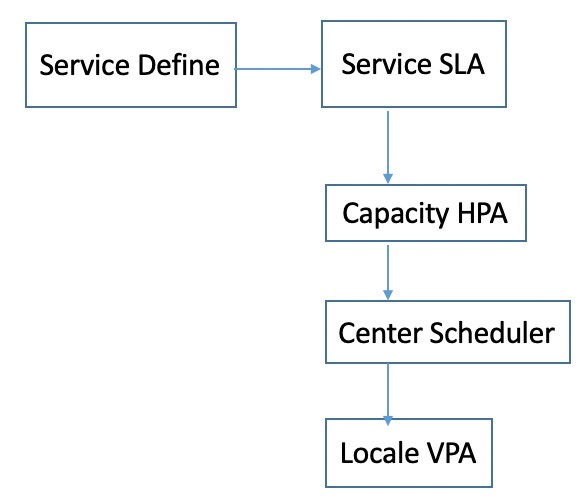

The abstraction of the service-oriented scheduling architecture is shown in Figure 7. Users only need to pay attention to the business logic. The infrastructure manages the server resources required by the business, the server delivery process, and server orchestration and scheduling. What is delivered is a service, or abstracted computing power.

Figure 7: Service-Oriented Abstraction

Hadoop, YARN scheduling, and big data computing services are all moving towards service-oriented scheduling. In particular, as cloud computing gradually converges, the common, basic infrastructure work is being shielded from users step by step. For ordinary users, cluster scheduling will gradually fade from view, and cluster management will be handled by tools from third-party ISVs. In the future, more users will focus on business development, and innovation will no longer be limited by the R&D, O&M, and management of infrastructure resources.

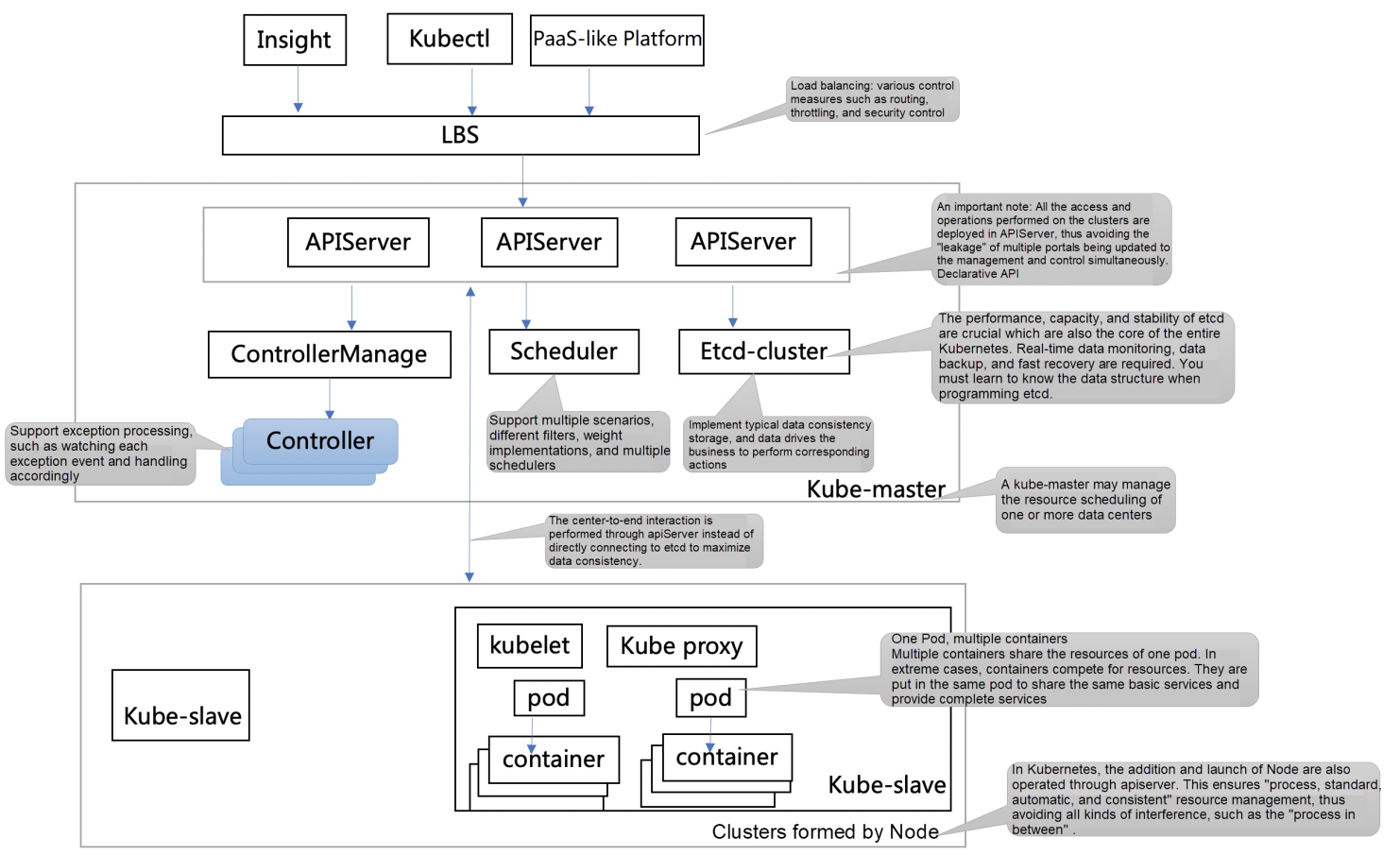

Figure 8 shows an architecture design case based on Kubernetes. The core of this design is still the Kubernetes-native architecture. The figure includes modules, such as Insight and LBS, which are conducive to achieving stability. Insight can observe the entire system, identify potential risks, give early warning, and intervene in a timely manner. Kubernetes is designed for final states, so it needs a way to intervene manually and terminate some final-state-oriented mechanisms with one click. This allows system modules to lock accordingly and unlock when the problem is solved. LBS checks ensure load balancing and traffic control. The annotations on the relevant components in the figure reflect the differences from other scheduling architectures.
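The manual intervention described above can be pictured as a switch on a final-state controller: while the switch is on, the controller stops driving the system, and it resumes once the switch is released. The sketch below is a hypothetical illustration only. `PausableController` is not a Kubernetes API, and the state is reduced to a single replica count.

```python
class PausableController:
    """A toy final-state controller with a one-click lock."""

    def __init__(self, desired):
        self.desired = desired
        self.locked = False

    def lock(self):
        self.locked = True

    def unlock(self):
        self.locked = False

    def step(self, actual):
        """Move one unit towards the desired count unless the controller is locked."""
        if self.locked or actual == self.desired:
            return actual
        return actual + 1 if actual < self.desired else actual - 1


controller = PausableController(desired=3)
replicas = controller.step(1)          # normal operation: 1 -> 2

controller.lock()                      # an operator intervenes
frozen = controller.step(replicas)     # no change while locked

controller.unlock()                    # the problem is solved
resumed = controller.step(frozen)      # reconciliation continues: 2 -> 3
```

The design point is that the lock sits outside the reconcile logic, so an operator can stop an automated mechanism that is making things worse without changing the desired state itself.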

Figure 8: Architecture Design Case Based on Kubernetes

Kubernetes has shown strong ecosystem influence, and there are many cases of Kubernetes application and optimization. This is only one interpretation, and I hope it helps you understand final-state-oriented design.

In general, business development should aim for the most suitable architecture rather than the optimal one. The architecture should first support business development at the right time and then be iterated and improved in depth as it serves at large scale. Large data centers need to manage servers at large scale and serve as the underlying infrastructure of businesses, so they place extremely high requirements on architecture, stability, and performance.

I hope this article will be helpful to those engaged in related work to avoid making mistakes as much as possible in real-world practices.

Li Yuqian is an Alibaba Cloud Elastic Compute Technical Expert with five years of practical, comprehensive experience in managing and scheduling large-scale cluster resources and in long-running service and co-location scheduling. He also specializes in stability-first cluster scheduling strategy, stability architecture design, global stability data analysis, and the Java and Go programming languages. He has submitted over ten related invention patents.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
