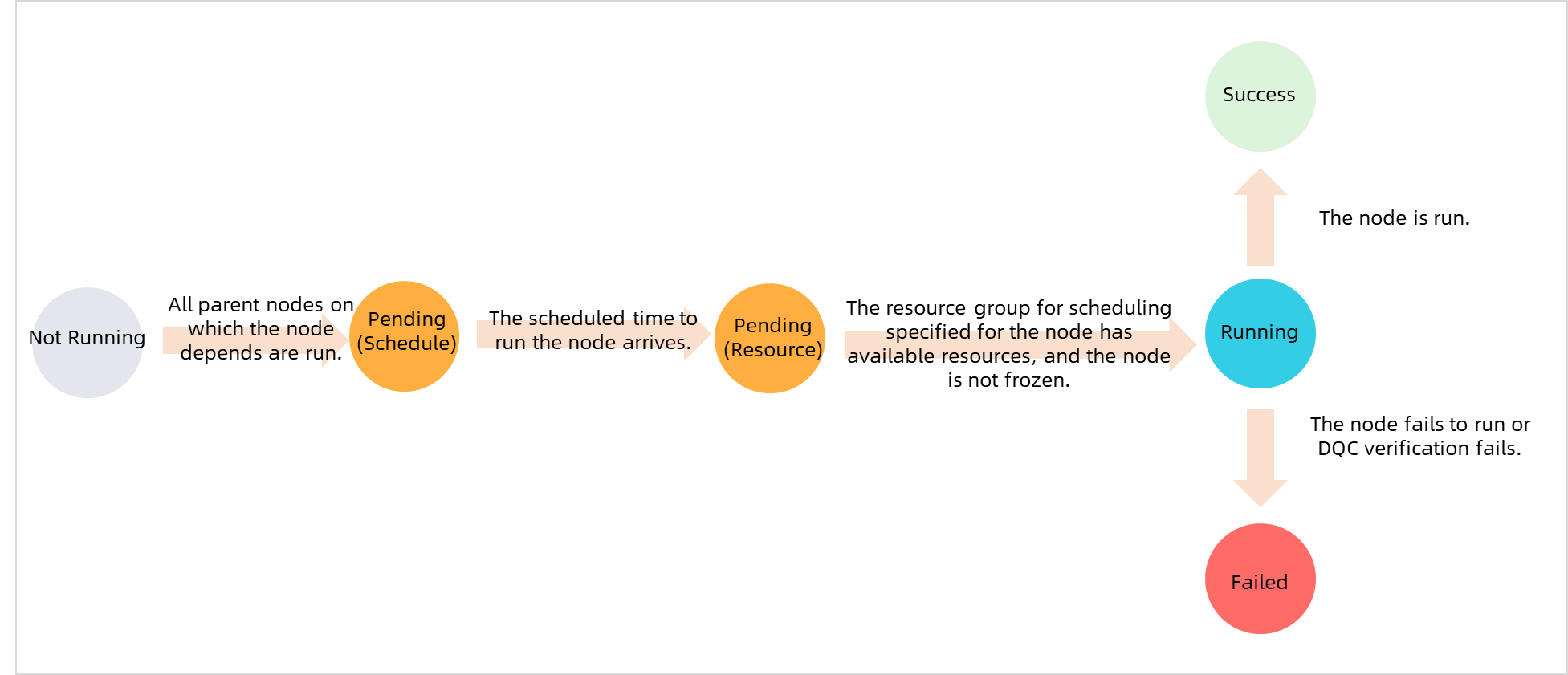

Whether an instance generated for a node runs as expected depends on several factors: the scheduling time of the node, the scheduling time of its ancestor nodes, the time at which the ancestor instances finish running, and the remaining resources in the resource group that is used to run the instance. The scheduling time of a node is specified in DataStudio. This topic describes how to use the Intelligent Diagnosis feature to quickly identify why an instance does not run as expected.

Prerequisites

Auto triggered instances are generated for nodes. After you commit and deploy an auto triggered node to the scheduling system, DataWorks generates instances for the auto triggered node based on the value of the Instance Generation Mode parameter that you configured in DataStudio.

Background information

In Operation Center, you can view the status, color, or status icon of an instance to determine the stage of the instance or identify the reason why the instance is not run as expected. For more information, see View log data.

Color and status icon: In Operation Center, instances in different states are represented by different colors and status icons. The following table describes the mappings between the status icons and the states of instances.

Status: You can also view the status of an instance by performing the following operations: Open the directed acyclic graph (DAG) of the instance, right-click the instance, and select More from the shortcut menu. On the General tab, view the value of the Node Status parameter.

No. | Status | Icon | Flowchart |
1 | Run successfully | | |
2 | Not run | | |
3 | Failed to run | | |
4 | Running | | |
5 | Waiting | | |
6 | Frozen | | |

If the ancestor instances of the current instance remain in the Running state for a long period of time, handle the issue based on the type of node that generates the ancestor instances:

If the ancestor instances are generated by non-batch synchronization nodes, you can perform the following operations to view the cause.

If the ancestor instances are generated by batch synchronization nodes, one possible cause is that the ancestor instances have been waiting for resources for a long period of time. Another possible cause is that some of the code logic is processed slowly while the node runs. For more information, see How to troubleshoot the issue that the execution duration of a batch synchronization node is long?

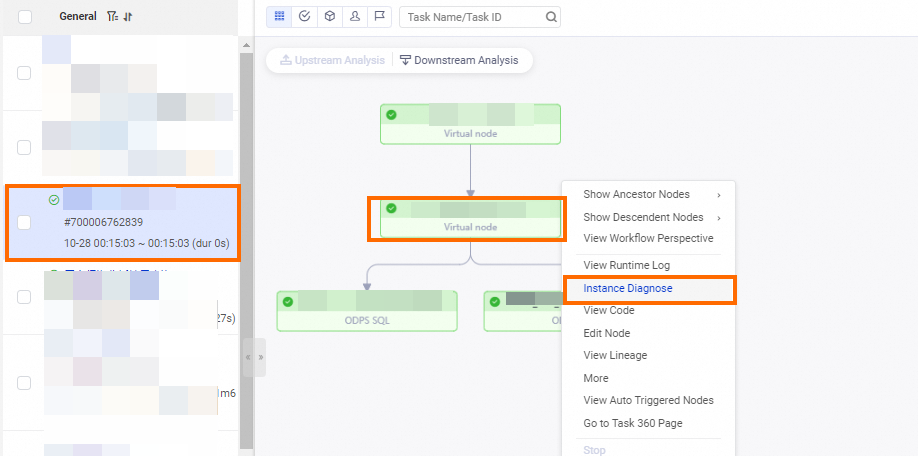

Go to the Intelligent Diagnosis page

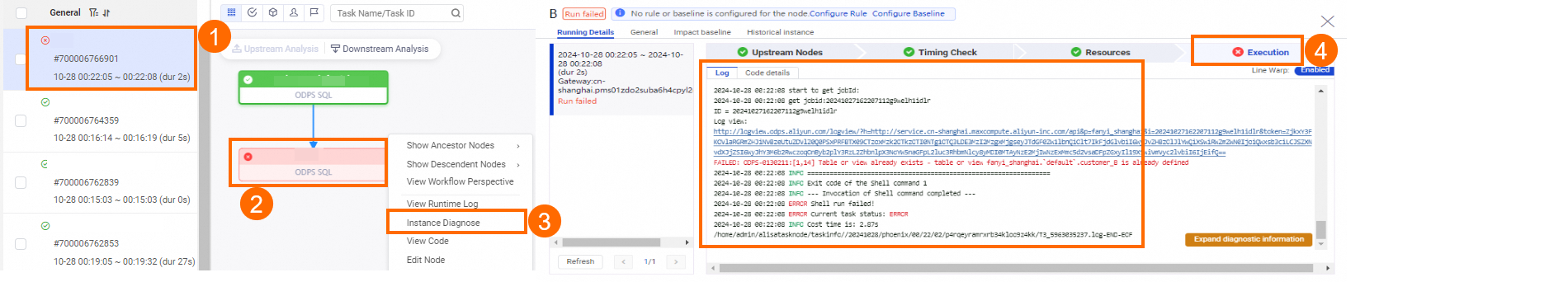

If an instance is not run as expected, you can locate the instance in Operation Center and go to the diagnosis page of the instance to identify the reason. The instance can be an auto triggered node instance, a data backfill instance, or a test instance. The following figure shows how to go to the diagnosis page of an instance.

Diagnosis procedure

Whether an instance runs successfully depends on multiple factors, such as upstream dependencies, the scheduling time, resource usage in the resource group that is used to run the instance, and the running details of the instance. If an instance still has not run long after its scheduling time, or if the instance fails to run, you can use the Intelligent Diagnosis feature of DataWorks to identify the reason.

On the Intelligent Diagnosis page, you can perform the operations that are described in the following table.

Procedure | Description |
Check the status of ancestor instances | A node for which dependencies are configured can run only after all its ancestor nodes finish running. In the Upstream Nodes step on the Running Details tab of the diagnosis page, you can view the status of the ancestor instances of the current instance. If an ancestor instance fails to run, click Instance Diagnose in the Operation column of that ancestor instance to identify the cause of the failure. |
Check the scheduling time | The scheduling time specified in DataStudio for the node is the time at which the instance is expected to start to run. In the Timing Check step on the Running Details tab of the diagnosis page, you can check whether the scheduling time of the current instance has arrived. The scheduling time is checked automatically only after all ancestor instances of the current instance run successfully, which ensures that the data required by the current instance has been generated. If the scheduling time has arrived at that point, the current instance runs immediately. |
Check the scheduling resources | In most cases, an instance can start to run after its ancestor instances finish running and its scheduling time arrives. However, scheduling resources are limited. If the resource group for scheduling that is used by the current instance does not have enough remaining resources, the current instance waits for resources. In the Resources step on the Running Details tab of the diagnosis page, you can view the resource usage. |
View the running details | If all conditions for the current instance to run are met, DataWorks issues the current instance to the corresponding compute engine instance or server. If the current instance fails to run, you can identify the cause of the failure in the Execution step on the Running Details tab of the diagnosis page. |
View the monitoring details | For an instance for which monitoring rules or baselines are configured, you can view the status of the monitoring rules or baselines on the General, Impact baseline, and Historical instance tabs of the diagnosis page. |
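To make the order of these checks concrete, the following Python sketch models how the first blocking step could be determined for an instance. It is a simplified, hypothetical model, not the DataWorks implementation: the `InstanceSnapshot` fields, the `SUCCESS` status string, and the `free_slots` counter are assumptions made for illustration, and monitoring rules and baselines are not modeled.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass
class InstanceSnapshot:
    """Hypothetical snapshot of one instance; the fields are illustrative, not a DataWorks API."""
    ancestor_statuses: List[str] = field(default_factory=list)  # e.g. "SUCCESS", "RUNNING"
    scheduled_at: datetime = datetime.min  # scheduling time configured in DataStudio
    free_slots: int = 0  # remaining slots in the resource group for scheduling
    execution_error: Optional[str] = None  # error reported by the compute engine, if any


def first_blocking_step(inst: InstanceSnapshot, now: datetime) -> str:
    """Walk the diagnosis steps in order and return the first step that blocks the instance."""
    if any(status != "SUCCESS" for status in inst.ancestor_statuses):
        return "Upstream Nodes"  # at least one ancestor instance has not run successfully
    if now < inst.scheduled_at:
        return "Timing Check"  # ancestors are done, but the scheduling time has not arrived
    if inst.free_slots <= 0:
        return "Resources"  # the instance is waiting for scheduling resources
    if inst.execution_error is not None:
        return "Execution"  # the instance was issued but failed to run
    return "No blocking step found"


snapshot = InstanceSnapshot(["SUCCESS", "RUNNING"], datetime(2024, 1, 1, 1, 0), free_slots=3)
print(first_blocking_step(snapshot, datetime(2024, 1, 1, 2, 0)))  # Upstream Nodes
```

The checks are ordered the same way as the rows of the table: a later step is only meaningful once every earlier condition is satisfied.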

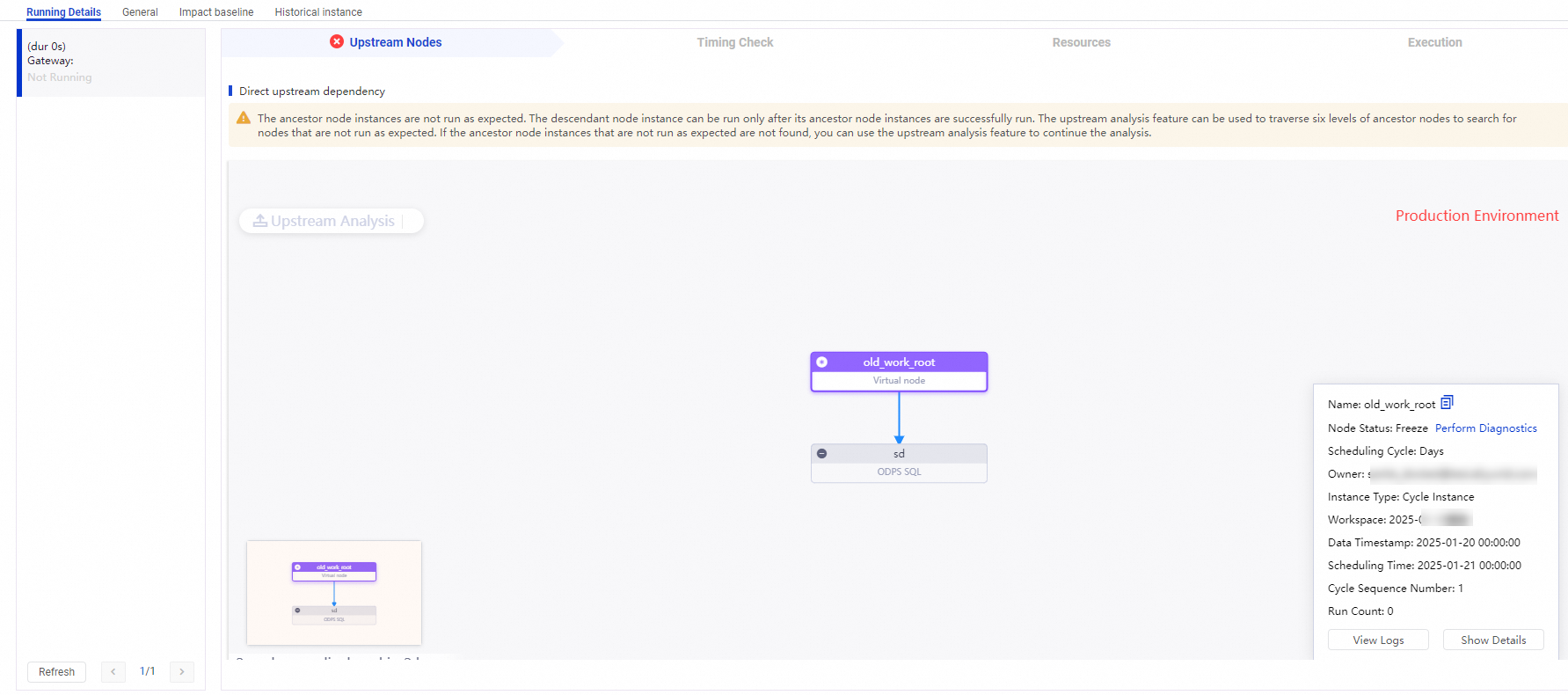

Check the status of ancestor instances

You can check the status of ancestor instances of the current instance to identify the key ancestor instances that block the running of the current instance.

Impact of ancestor instances on the running of the current instance

A node for which dependencies are configured can start to run only after all its ancestor nodes finish running. Ancestor instances affect the running of the current instance in the following ways:

Whether the current instance can run depends on whether its ancestor instances run successfully.

After you configure scheduling dependencies in DataWorks, data dependencies between the instances are established by default. If the ancestor instances have not run, the data on which the current instance depends has not been generated, and running the current instance would cause data quality issues. Therefore, the current instance can run only after its scheduling time arrives and all its ancestor instances finish running.

The earliest time at which the current instance can start to run depends on the scheduling time of its ancestor instances.

An ancestor instance can start to run only after its own scheduling time arrives. If the scheduling time of the current instance is earlier than that of its ancestor instances, the current instance cannot start when its own scheduling time arrives. Instead, it must wait until its ancestor instances finish running. Therefore, the earliest time at which the current instance can run depends on the scheduling time of its ancestor instances. For more information, see Impacts of dependencies between tasks on the running of the tasks.
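This rule can be written as simple arithmetic. The following Python sketch assumes that all ancestor instances eventually run successfully and that no resource waits occur; the function name `earliest_start` is hypothetical. Because an ancestor instance cannot finish before its own scheduling time, the earliest start time of the current instance is the later of its own scheduling time and the latest ancestor finish time.

```python
from datetime import datetime
from typing import Iterable


def earliest_start(own_scheduled_at: datetime, ancestor_finish_times: Iterable[datetime]) -> datetime:
    """Return the later of the instance's own scheduling time and the latest ancestor
    finish time. Resource waits are ignored in this simplified model."""
    return max([own_scheduled_at, *ancestor_finish_times])


# The current instance is scheduled at 01:00, but its ancestors are scheduled later
# and finish at 02:30 and 03:15, so it cannot start before 03:15.
start = earliest_start(
    datetime(2024, 1, 1, 1, 0),
    [datetime(2024, 1, 1, 2, 30), datetime(2024, 1, 1, 3, 15)],
)
print(start)  # 2024-01-01 03:15:00
```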

Locate ancestor instances that are not run

If the status icon of the current instance indicates the Pending (Ancestor) state, the current instance is waiting for its ancestor instances. To view the ancestor instances that have not run, open the DAG of the current instance, right-click the current instance, and select Instance Diagnose from the shortcut menu. Then, click Upstream Nodes on the Running Details tab of the diagnosis page.

By default, the diagnosis traverses up to six levels of ancestor instances. If no blocking ancestor instance is found within these levels, click Upstream Analysis in the DAG of the current instance to continue the analysis.
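The six-level traversal can be pictured as a depth-limited breadth-first search over the dependency graph. The sketch below is a hypothetical illustration, not the algorithm that DataWorks uses; the `parents` and `status` dictionaries, the status strings, and the node names are made up for the example.

```python
from collections import deque
from typing import Dict, List, Tuple


def find_blocking_ancestors(
    instance: str,
    parents: Dict[str, List[str]],
    status: Dict[str, str],
    max_levels: int = 6,
) -> List[Tuple[str, int]]:
    """Breadth-first walk up the dependency graph, at most max_levels levels deep,
    collecting ancestors whose status is not SUCCESS together with their level."""
    blocking: List[Tuple[str, int]] = []
    seen = {instance}
    queue = deque([(instance, 0)])
    while queue:
        node, level = queue.popleft()
        if level == max_levels:
            continue  # do not look beyond the level limit
        for parent in parents.get(node, []):
            if parent in seen:
                continue  # an ancestor shared by several paths is reported once
            seen.add(parent)
            if status.get(parent) != "SUCCESS":
                blocking.append((parent, level + 1))
            queue.append((parent, level + 1))
    return blocking


parents = {"report": ["clean"], "clean": ["ods_sync"], "ods_sync": []}
status = {"clean": "NOT_RUN", "ods_sync": "FAILED"}
print(find_blocking_ancestors("report", parents, status))
# [('clean', 1), ('ods_sync', 2)]
```

In this example, the deepest reported ancestor (`ods_sync`, which failed) is the one to diagnose first, because `clean` is only waiting for it. Anything beyond the level limit would require a further step such as Upstream Analysis.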

Special cases:

Isolated nodes: A node that does not depend on any ancestor node is an isolated node. For more information, see Scenario: Isolated node. An isolated node cannot run as scheduled. If the node for which the current instance is generated is an isolated node, configure ancestor nodes for it as soon as possible.

Frozen ancestor instances: If ancestor instances of the current instance are frozen, the current instance is also blocked. In this case, contact the owner of the ancestor instances to find out why they are frozen, and adjust your business as soon as possible.
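Both special cases can be checked mechanically on a dependency map. In this hypothetical sketch, the graph is assumed to have a single start node (named `root` here) that is allowed to have no ancestors; every other node without ancestors is reported as isolated, and frozen ancestors are collected at any depth. The node names and the `FROZEN` status string are illustrative assumptions, not DataWorks identifiers.

```python
from typing import Dict, List, Set


def isolated_nodes(parents: Dict[str, List[str]], root: str) -> List[str]:
    """Nodes other than the assumed start node that declare no ancestor node."""
    return sorted(n for n, ps in parents.items() if n != root and not ps)


def frozen_ancestors(node: str, parents: Dict[str, List[str]], status: Dict[str, str]) -> Set[str]:
    """All ancestors of node, at any depth, whose instance status is FROZEN."""
    found: Set[str] = set()
    stack, seen = [node], {node}
    while stack:
        for p in parents.get(stack.pop(), []):
            if p not in seen:
                seen.add(p)
                stack.append(p)  # keep walking upstream through this ancestor
                if status.get(p) == "FROZEN":
                    found.add(p)
    return found


parents = {"root": [], "a": ["root"], "b": ["a"], "orphan": []}
status = {"a": "FROZEN"}
print(isolated_nodes(parents, "root"))  # ['orphan']
print(frozen_ancestors("b", parents, status))  # {'a'}
```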

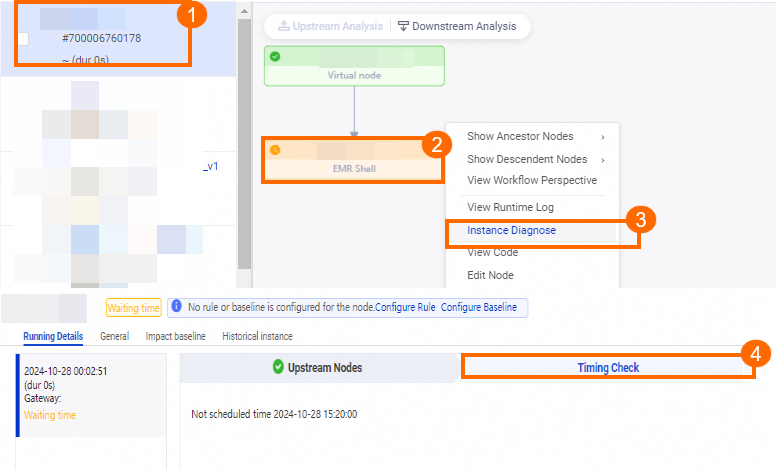

Check the scheduling time

The scheduling time defined for a node in DataStudio is the expected running time of the instances that are generated for the node. The scheduling time of the current instance is checked automatically only after all ancestor instances of the current instance run successfully, which ensures that the data required by the current instance has been generated. In most cases, a manual check returns one of the following results:

The scheduling time of the current instance has arrived, but its ancestor instances are still running.

In this scenario, the current instance runs immediately after its ancestor instances finish running, provided that sufficient scheduling resources are available.

Ancestor instances of the current instance have finished running, but the scheduling time of the current instance has not arrived.

In this scenario, the current instance can start to run only after its scheduling time arrives. If the status icon of the current instance indicates the Pending (Schedule) state, you can view detailed information about the current instance in the Timing Check step on the End-to-end Diagnostics tab of the Intelligent Diagnosis page.

Check the scheduling resources

The current instance can start to run after its ancestor instances finish running and its scheduling time arrives. However, scheduling resources are limited. If the resource group for scheduling that is used by the current instance does not have enough remaining resources, the current instance waits for resources.

In most cases, scheduling resources are different from the resources that are used to run instances. A resource group for scheduling only issues the node for which the current instance is generated to the specified compute engine instance. After the node is issued, it can still be blocked by insufficient compute engine resources if other nodes occupy the compute engine instance for a long period of time. For more information, see Overview.

Locate instances that occupy resources

If the status icon of an instance indicates the Pending (Resources) state, you can view the instances that occupy resources in the Resources step on the End-to-end Diagnostics tab of the Intelligent Diagnosis page, and adjust your business as soon as possible.

Scenarios in which the current instance may enter the Pending (Resources) state

If a node has been running as expected for a long period of time but one of its instances suddenly starts to wait for resources, check whether any of the scenarios described in the following table applies.

Scenario | Solution |
Instances occupy resources for a long period of time and do not release them in a timely manner, which blocks the current instance. | Check whether such instances exist in the Resources step on the Running Details tab of the diagnosis page. Then, view their run logs to identify why they occupy resources for so long. |
The number of instances that run on the resource group used by the current instance increases. | Adjust the priority of the current instance or change the resource group that is used to run it. |
Instances that occupy a large amount of memory exist. | Check whether Shell nodes or PyODPS nodes that consume a large amount of memory exist in the exclusive resource group. |
The shared resource group for scheduling is shared by tenants in DataWorks. Nodes that run on it during peak hours compete for scheduling resources, so their execution timeliness cannot be ensured. In most cases, peak hours range from 00:00 to 09:00. | If a node that uses the shared resource group for scheduling waits for resources, we recommend that you migrate the node to an exclusive resource group for scheduling. The maximum number of nodes that can run in parallel on an exclusive resource group for scheduling depends on the specifications of the resource group. For more information, see Exclusive resource groups for scheduling. |
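The effect of adjusting priorities can be illustrated with a small, hypothetical model of a resource group that has a fixed number of free slots. This is an assumption for illustration, not the documented DataWorks scheduling algorithm: waiting instances receive slots in descending order of priority, ties are broken by arrival order, and a larger number means a higher priority. The instance names and priority values are made up.

```python
import heapq
from typing import List, Tuple


def admit(waiting: List[Tuple[int, str]], free_slots: int) -> Tuple[List[str], List[str]]:
    """Hand free slots to waiting instances, highest priority first.
    waiting holds (priority, name) pairs in arrival order."""
    # Negate the priority so that heapq (a min-heap) pops the highest priority first;
    # the arrival index keeps first-come, first-served order among equal priorities.
    heap = [(-priority, order, name) for order, (priority, name) in enumerate(waiting)]
    heapq.heapify(heap)
    started: List[str] = []
    while heap and free_slots > 0:
        _, _, name = heapq.heappop(heap)
        started.append(name)
        free_slots -= 1
    still_waiting = [name for _, _, name in sorted(heap)]
    return started, still_waiting


waiting = [(1, "daily_report"), (8, "core_etl"), (1, "adhoc_sync")]
print(admit(waiting, 1))  # (['core_etl'], ['daily_report', 'adhoc_sync'])
```

In this model, raising the priority of an instance moves it ahead of instances that arrived earlier, while changing the resource group corresponds to moving it to a queue with more free slots.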

View the running details

If the conditions for the current instance to run are met, DataWorks issues the current instance to the resource group or the compute engine instance that is used to run the current instance. For more information about the issuing mechanism of DataWorks, see Overview.

If the status icon of the current instance indicates the Failed state, you can identify the reason why the current instance fails to run in the Execution step on the Running Details tab of the diagnosis page.

In most cases, an instance fails to run in the following scenarios:

The code of the instance fails to run, which means that the data synchronization logic or data processing logic fails to execute.

Table data generated by the instance does not meet the configured data quality monitoring rules.

The instance is frozen.

View the code details of SQL nodes

For SQL nodes, you can view detailed log data of the instances in the Execution step on the Running Details tab of the diagnosis page. DataWorks issues SQL nodes to the corresponding compute engine instances, so if the SQL statements fail to execute, refer to the documentation of the corresponding compute engine to identify the cause of the failure.

View the running details of synchronization nodes

When a synchronization node starts to run, this only means that the DataWorks scheduling system has started to schedule it. Whether data synchronization has actually started can be determined only from the details of the log data. For more information, see Analyze run logs generated for a batch synchronization task. The following problems may occur during data synchronization:

WAIT is displayed in log data for a long period of time during data synchronization.

If WAIT is displayed in the log data for a long period of time, the DataWorks scheduling system has already issued the synchronization node, but the resource group that is used to run the node does not have enough resources, so the node is waiting for resources.

For example, an exclusive resource group for Data Integration with the specifications of 4 vCPUs and 8 GiB of memory supports a maximum of eight parallel threads. Three synchronization nodes are configured to run on this resource group, and each node is configured with three parallel threads. If two of the nodes run in parallel, they use six threads, so the resource group can support only two more parallel threads. The remaining node requires three threads, so it has to wait for resources, and its logs show that it is in the WAIT state. In this case, you can go to the Data Integration tab in the Execution step on the Running Details tab of the diagnosis page to view the instances that were running on the resource group for Data Integration while the current instance was waiting for resources, and the amount of resources used by each instance.

Note: Each synchronization node occupies one scheduling resource. If a synchronization node does not run as expected for a long period of time, the running of other nodes may be blocked.

If the resource usage is high but no nodes are running, or if the number of nodes running on a resource group has not reached the upper limit but the current node still cannot run, join the DataWorks DingTalk group to contact pre-sales and after-sales personnel. You can consult the intelligent chatbot or the on-duty personnel.

Note: The maximum number of parallel threads supported by an exclusive resource group for Data Integration varies based on the specifications of the resource group. For more information, see Exclusive resource groups for Data Integration.
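The thread arithmetic in the WAIT example can be written out as a short Python check. The function `can_start` is hypothetical; it only compares the parallel threads requested by a node with the threads left over in the resource group, and it ignores other limits such as memory.

```python
from typing import List


def can_start(max_threads: int, running_threads: List[int], requested: int) -> bool:
    """True if the resource group has enough free parallel threads for the new node."""
    free = max_threads - sum(running_threads)  # threads not used by running nodes
    return requested <= free


# Example from the text: a 4 vCPU / 8 GiB group supports 8 threads, two nodes
# already run with 3 threads each, and the third node needs 3 threads.
print(can_start(8, [3, 3], 3))  # False: only 2 threads remain, so the node shows WAIT
```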

Data synchronization fails.

If a synchronization node fails to run, you can identify the cause of the failure based on the error message and specific plug-in descriptions. For more information, see FAQ about network connectivity and operations on resource groups.

View the monitoring details

For an instance for which monitoring rules or baselines are configured, you can view the monitoring rules or baselines to which the instance belongs and their status: Go to the Intelligent Diagnosis page of the instance and click View Details next to the prompt message on the Running Details tab.

You can view monitoring details only when monitoring rules are configured. For more information, see View alert information.