This topic describes how to create a Log Service sink connector to synchronize data from a topic in your ApsaraMQ for Kafka instance to Log Service by using EventBridge.

Prerequisites

- ApsaraMQ for Kafka

- The connector feature is enabled for your ApsaraMQ for Kafka instance. For more information, see Enable the connector feature.

- A topic is created in the ApsaraMQ for Kafka instance. For more information, see Step 1: Create a topic.

- EventBridge

- EventBridge is activated and permissions are granted to a RAM user. For more information, see Activate EventBridge and grant permissions to a RAM user.

- A RAM role whose trusted entity is an Alibaba Cloud service is created, and the permissions that you need to export and synchronize data are granted to the RAM role. If you want full Log Service permissions, attach the AliyunLogFullAccess system policy to the RAM role. The following code describes how to configure the trust policy:

  {
    "Statement": [
      {
        "Action": "sts:AssumeRole",
        "Effect": "Allow",
        "Principal": {
          "Service": [
            "eventbridge.aliyuncs.com"
          ]
        }
      }
    ],
    "Version": "1"
  }

  Note: If you want to use a custom policy to add specific permissions to the RAM role, see Step 2: Grant permissions to the RAM user.

- Log Service

- A Log Service project is created. For more information, see Create a project.

- A Log Service Logstore is created. For more information, see Create a Logstore.
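
The trust policy listed in the EventBridge prerequisite can be sanity-checked before you attach it to the RAM role. The following is a minimal Python sketch, not an official tool. Only the policy document itself comes from this topic. The helper name `trusts_service` is hypothetical and was introduced for illustration.

```python
import json

# Trust policy copied from the EventBridge prerequisite in this topic.
# It allows the EventBridge service to assume the RAM role.
TRUST_POLICY = {
    "Statement": [
        {
            "Action": "sts:AssumeRole",
            "Effect": "Allow",
            "Principal": {"Service": ["eventbridge.aliyuncs.com"]},
        }
    ],
    "Version": "1",
}


def trusts_service(policy: dict, service: str) -> bool:
    """Return True if an Allow statement lets `service` call sts:AssumeRole.

    Hypothetical helper: it only inspects the JSON document and does not
    call any Alibaba Cloud API.
    """
    for stmt in policy.get("Statement", []):
        if stmt.get("Effect") != "Allow":
            continue
        # Action and Service may each be a single string or a list.
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        services = stmt.get("Principal", {}).get("Service", [])
        if isinstance(services, str):
            services = [services]
        if "sts:AssumeRole" in actions and service in services:
            return True
    return False


# Serialize the policy so that it can be pasted into the RAM console.
policy_json = json.dumps(TRUST_POLICY, indent=2)
print(policy_json)
print(trusts_service(TRUST_POLICY, "eventbridge.aliyuncs.com"))
```

A check like this catches a mistyped service name before the connector fails to assume the role at deployment time.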

Background information

You can create a data synchronization task in the ApsaraMQ for Kafka console to synchronize data from a topic in ApsaraMQ for Kafka to the Log Service Logstore. This task is completed by using an event stream in EventBridge. For more information about event streams in EventBridge, see Overview.

Usage notes

- You can synchronize data from a topic in an ApsaraMQ for Kafka instance only to a Log Service Logstore that resides in the same region as the ApsaraMQ for Kafka instance. For more information about the limits on connectors, see Limits.

- When you create a connector, ApsaraMQ for Kafka creates a service-linked role for you.

- If no service-linked role is available, ApsaraMQ for Kafka creates a role for you to export data from a topic in the ApsaraMQ for Kafka instance to a Logstore.

- If a service-linked role is available, ApsaraMQ for Kafka does not create a new role.

Create and deploy a Log Service sink connector

This section describes how to create and deploy a Log Service sink connector to synchronize data from ApsaraMQ for Kafka to Log Service.

- Log on to the ApsaraMQ for Kafka console. In the Resource Distribution section of the Overview page, click the name of the region where your instance is deployed.

- In the left-side navigation pane, click Connectors. Select the instance to which the connector belongs from the Select Instance drop-down list, and click Create Sink Connector (Export from Kafka).

- Perform the following operations to complete the Create Connector wizard.

- Go to the Connectors page, find the connector that you created, and then click Deploy in the Actions column.

After you create and deploy the Log Service sink connector, an event stream is created in EventBridge in your account. The event stream has the same name and is in the same region as the Log Service sink connector.

Send messages

After you deploy the Log Service sink connector, you can send a message to the source topic in ApsaraMQ for Kafka to test whether messages can be synchronized to the Log Service Logstore.

- On the Connectors page, find the connector that you want to use and click Test in the Actions column.

- In the Send Message panel, configure the following parameters to send a test message.

| Parameter | Description | Example |
| --- | --- | --- |
| Message Key | The key of the test message that you want to send. | demo |
| Message Content | The content of the test message that you want to send. | {"key": "test"} |
| Send to Specified Partition | Yes: Enter the ID of the partition to which you want to send the test message in the Partition ID text box. For information about how to query a partition ID, see View partition status. No: No partition is specified for the test message. | No |
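
The parameters in the Send Message panel correspond to the key, value, and optional partition of a Kafka record. The following is a minimal Python sketch of how the example values could be prepared if you send the test message from your own client instead of the console. The function `build_test_message` and the dictionary field names are hypothetical. The sketch does not connect to an instance.

```python
from typing import Optional


def build_test_message(key: str, content: str,
                       partition: Optional[int] = None) -> dict:
    """Prepare a test record that mirrors the Send Message panel.

    Hypothetical helper: `partition=None` corresponds to selecting
    "No" for Send to Specified Partition.
    """
    if partition is not None and partition < 0:
        raise ValueError("partition ID must be a non-negative integer")
    return {
        # Kafka records carry keys and values as bytes.
        "key": key.encode("utf-8"),
        "value": content.encode("utf-8"),
        "partition": partition,
    }


# Example values taken from the parameter table in this section.
message = build_test_message("demo", '{"key": "test"}')
print(message)
```

Leaving the partition unset lets the producer choose a partition, which matches the No option in the panel.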

View logs

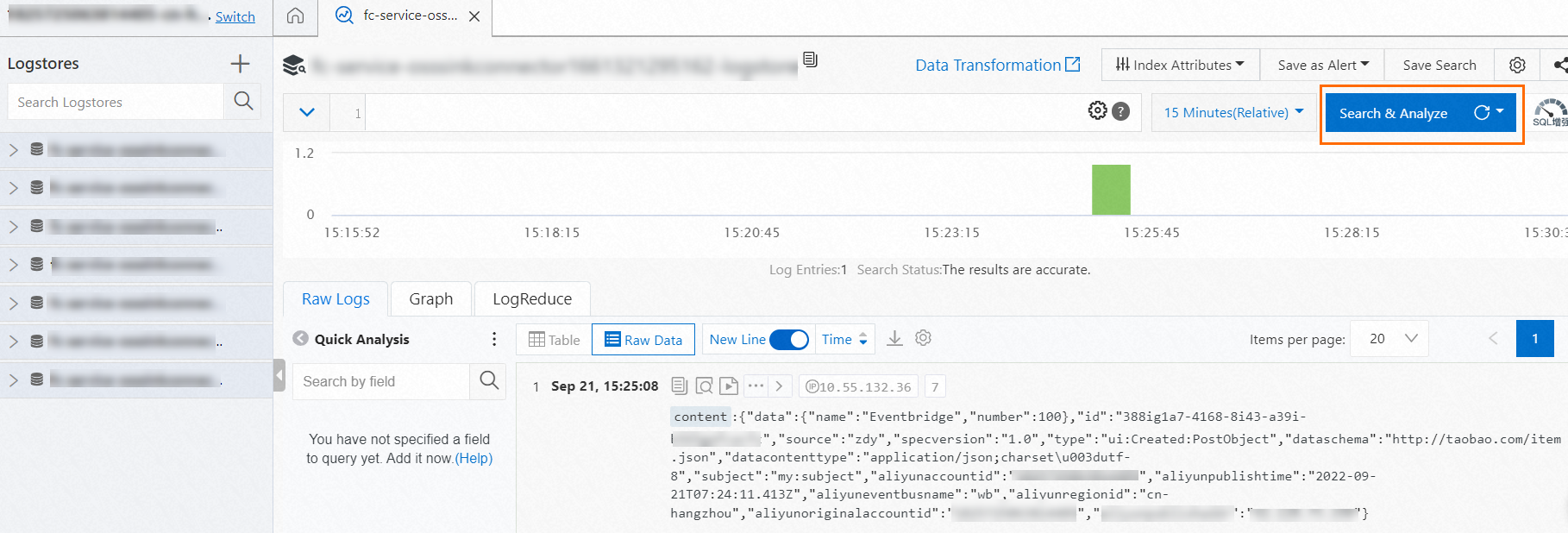

After you send a test message to the source topic in ApsaraMQ for Kafka, you can view logs in the Log Service console to check whether the message you sent is received.

- Log on to the Log Service console. In the Projects section, click the project that you want to view.

- On the Logstores page, click the Logstore that you want to manage.

- Click Search & Analyze to view the query and analysis results.

Check the synchronization progress

On the Connectors page, find the deployed synchronization task that is in the Running state. Click Consumption Progress in the Actions column to view the data synchronization status of the task.