You can collect container text logs from a Knative Service in DaemonSet mode. In DaemonSet mode, each node runs a logging agent to improve O&M efficiency. Container Service for Kubernetes (ACK) clusters are compatible with Simple Log Service (SLS) and support non-intrusive log collection. You can install a log collection component, which deploys a log collector pod on each node. This way, the component can collect logs from all containers on each node. You can analyze and manage containers based on the collected logs.

Prerequisites

Knative is deployed in your cluster. For more information, see Deploy and manage Knative.

A Knative Service is created. For more information, see Quickly deploy a Knative application.

Step 1: Install a log collection component

Install LoongCollector

Currently, LoongCollector is in canary release. Before you install LoongCollector, check the supported regions.

LoongCollector-based data collection: LoongCollector is a new-generation log collection agent that is provided by Simple Log Service. LoongCollector is an upgraded version of Logtail. LoongCollector is expected to integrate the capabilities of specific collection agents of Application Real-Time Monitoring Service (ARMS), such as Managed Service for Prometheus-based data collection and Extended Berkeley Packet Filter (eBPF) technology-based non-intrusive data collection.

Install the loongcollector component in an existing ACK cluster

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, click the cluster that you want to manage. In the left-side navigation pane, choose Operations > Add-ons.

On the Logs and Monitoring tab of the Add-ons page, find the loongcollector component and click Install.

Note: You cannot install the loongcollector component and the logtail-ds component at the same time. If the logtail-ds component is installed in your cluster, you cannot directly upgrade the logtail-ds component to the loongcollector component. An upgrade solution will be available soon.

After the LoongCollector components are installed, Simple Log Service automatically generates a project named k8s-log-${your_k8s_cluster_id} and resources in the project. You can log on to the Simple Log Service console to view the resources. The following table describes the resources.

Resource type | Resource name | Description | Example |

Machine group | k8s-group- | The machine group of loongcollector-ds, which is used in log collection scenarios. | k8s-group-my-cluster-123 |

Machine group | k8s-group- | The machine group of loongcollector-cluster, which is used in metric collection scenarios. | k8s-group-my-cluster-123-cluster |

Machine group | k8s-group- | The machine group of a single instance, which is used to create a LoongCollector configuration for the single instance. | k8s-group-my-cluster-123-singleton |

Logstore | config-operation-log | The Logstore is used to collect and store loongcollector-operator logs. Important Do not delete the | config-operation-log |
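The naming scheme in the table above can be sketched in a few lines of Python. This is an illustrative sketch only: the helper name loongcollector_resource_names is hypothetical, and the assumption that the machine group prefix is followed by the cluster ID plus a suffix is inferred from the Example column, not taken from an API. Compare the output with what you see in the Simple Log Service console.

```python
def loongcollector_resource_names(cluster_id: str) -> dict:
    """Derive the resource names listed in the table (hypothetical helper).

    Assumption: machine groups are named k8s-group-<cluster_id> plus a
    suffix, as suggested by the Example column.
    """
    group = f"k8s-group-{cluster_id}"
    return {
        "project": f"k8s-log-{cluster_id}",
        "machine_group_ds": group,
        "machine_group_cluster": f"{group}-cluster",
        "machine_group_singleton": f"{group}-singleton",
        "logstore_operator": "config-operation-log",
    }


# "my-cluster-123" is the sample cluster ID used in the Example column.
names = loongcollector_resource_names("my-cluster-123")
for kind, name in names.items():
    print(f"{kind}: {name}")
```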

Install Logtail

Logtail-based data collection: Logtail is a log collection agent that is provided by Simple Log Service. You can use Logtail to collect logs from multiple data sources, such as Alibaba Cloud Elastic Compute Service (ECS) instances, servers in data centers, and servers from third-party cloud service providers. Logtail supports non-intrusive log collection based on log files. You do not need to modify your application code, and log collection does not affect the operation of your applications.

Install Logtail components in an existing ACK cluster

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane, choose Operations > Add-ons.

On the Logs and Monitoring tab of the Add-ons page, find the logtail-ds component and click Install.

Install Logtail components when you create an ACK cluster

Log on to the ACK console. In the left-side navigation pane, click Clusters.

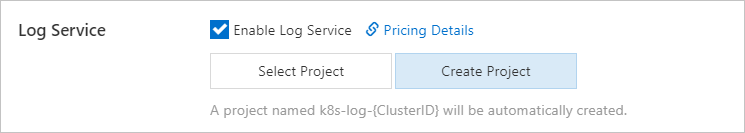

On the Clusters page, click Create Kubernetes Cluster. In the Component Configurations step of the wizard, select Enable Log Service.

This topic describes only the settings related to Simple Log Service. For more information about other settings, see Create an ACK managed cluster.

After you select Enable Log Service, the system prompts you to create a Simple Log Service project. You can use one of the following methods to create a project:

Select Project

You can select an existing project to manage the collected container logs.

Create Project

Simple Log Service automatically creates a project to manage the collected container logs.

ClusterID indicates the unique identifier of the created Kubernetes cluster.

In the Component Configurations step of the wizard, Enable is selected for the Control Plane Component Logs parameter by default. If Enable is selected, the system automatically configures collection settings and collects logs from the control plane components of a cluster, and you are charged for the collected logs based on the pay-as-you-go billing method. You can determine whether to select Enable based on your business requirements. For more information, see Collect logs of control plane components in ACK managed clusters.

After the Logtail components are installed, Simple Log Service automatically generates a project named k8s-log-<YOUR_CLUSTER_ID> and resources in the project. You can log on to the Simple Log Service console to view the resources. The following table describes the resources.

Resource type | Resource name | Description | Example |

Machine group | k8s-group- | The machine group of logtail-daemonset, which is used in log collection scenarios. | k8s-group-my-cluster-123 |

Machine group | k8s-group- | The machine group of logtail-statefulset, which is used in metric collection scenarios. | k8s-group-my-cluster-123-statefulset |

Machine group | k8s-group- | The machine group of a single instance, which is used to create a Logtail configuration for the single instance. | k8s-group-my-cluster-123-singleton |

Logstore | config-operation-log | The Logstore is used to store logs of the alibaba-log-controller component. We recommend that you do not create a Logtail configuration for the Logstore. You can delete the Logstore. After the Logstore is deleted, the system no longer collects the operational logs of the alibaba-log-controller component. You are charged for the Logstore in the same manner as you are charged for regular Logstores. For more information, see Billable items of pay-by-ingested-data. | None |

Step 2: Create a collection configuration

This section describes four methods that you can use to create a collection configuration. We recommend that you use only one method to manage a collection configuration.

Configuration method | Configuration description | Scenario |

CRD - AliyunPipelineConfig (recommended) | You can use the AliyunPipelineConfig Custom Resource Definition (CRD), which is a Kubernetes CRD, to manage a Logtail configuration. | This method is suitable for scenarios that require complex collection and processing, and version consistency between the Logtail configuration and the Logtail container in an ACK cluster. Note The logtail-ds component installed on an ACK cluster must be later than V1.8.10. For more information about how to update Logtail, see Update Logtail to the latest version. |

Simple Log Service console | You can manage a Logtail configuration in the GUI based on quick deployment and configuration. | This method is suitable for scenarios in which simple settings are required to manage a Logtail configuration. If you use this method to manage a Logtail configuration, specific advanced features and custom settings cannot be used. |

Environment variable | You can use environment variables to configure parameters used to manage a Logtail configuration in an efficient manner. | You can use environment variables only to configure simple settings. Complex processing logic is not supported. Only single-line text logs are supported. You can use environment variables to create a Logtail configuration that can meet the following requirements:

|

CRD - AliyunLogConfig | You can use the AliyunLogConfig CRD, which is an old version CRD, to manage a Logtail configuration. | This method is suitable for known scenarios in which you can use the old version CRD to manage Logtail configurations. You must gradually replace the AliyunLogConfig CRD with the AliyunPipelineConfig CRD to obtain better extensibility and stability. For more information about the differences between the two CRDs, see CRDs. |

(Recommended) CRD - AliyunPipelineConfig

Create a Logtail configuration

Only the Logtail components V0.5.1 or later support AliyunPipelineConfig.

To create a Logtail configuration, you only need to create a CR from the AliyunPipelineConfig CRD. After the Logtail configuration is created, it is automatically applied. If you want to modify a Logtail configuration that is created based on a CR, you must modify the CR.

Obtain the kubeconfig file of a cluster and use kubectl to connect to the cluster.

Run the following command to create a YAML file. In the command, cube.yaml is a sample file name. You can specify a different file name based on your business requirements.

    vim cube.yaml

Enter the following script in the YAML file and configure the parameters based on your business requirements.

Important: The value of the configName parameter must be unique in the Simple Log Service project that you use to install the Logtail components.

You must configure a CR for each Logtail configuration. If multiple CRs are associated with the same Logtail configuration, the CRs other than the first CR do not take effect.

For more information about the parameters related to the AliyunPipelineConfig CRD, see (Recommended) Use AliyunPipelineConfig to manage a Logtail configuration. In this example, the Logtail configuration includes settings for text log collection. For more information, see CreateLogtailPipelineConfig.

Make sure that the Logstore specified by the config.flushers.Logstore parameter exists. You can configure the spec.logstores parameter to automatically create a Logstore.

For the values of the Endpoint and Region parameters, see Endpoints. The Region parameter indicates the region ID, such as cn-hangzhou.

Collect single-line text logs from specific containers

In this example, a Logtail configuration named example-k8s-file is created to collect single-line text logs from the containers whose names contain app in a cluster. The file is test.LOG, and the path is /data/logs/app_1. The collected logs are stored in a Logstore named k8s-file, which belongs to a project named k8s-log-test.

    apiVersion: telemetry.alibabacloud.com/v1alpha1
    # Create a CR from the ClusterAliyunPipelineConfig CRD.
    kind: ClusterAliyunPipelineConfig
    metadata:
      # Specify the name of the resource. The name must be unique in the current Kubernetes cluster. The name is the same as the name of the Logtail configuration that is created.
      name: example-k8s-file
    spec:
      # Specify the project to which logs are collected.
      project:
        name: k8s-log-test
      # Create a Logstore to store logs.
      logstores:
        - name: k8s-file
      # Configure the parameters for the Logtail configuration.
      config:
        # Configure the Logtail input plug-ins.
        inputs:
          # Use the input_file plug-in to collect text logs from containers.
          - Type: input_file
            # Specify the file path in the containers.
            FilePaths:
              - /data/logs/app_1/**/test.LOG
            # Enable the container discovery feature.
            EnableContainerDiscovery: true
            # Add conditions to filter containers. Multiple conditions are evaluated by using a logical AND.
            ContainerFilters:
              # Specify the namespace of the pod to which the required containers belong. Regular expression matching is supported.
              K8sNamespaceRegex: default
              # Specify the name of the required containers. Regular expression matching is supported.
              K8sContainerRegex: ^(.*app.*)$
        # Configure the Logtail output plug-ins.
        flushers:
          # Use the flusher_sls plug-in to send logs to a specific Logstore.
          - Type: flusher_sls
            # Make sure that the Logstore exists.
            Logstore: k8s-file
            # Make sure that the endpoint is valid. For the Region field, enter the region ID.
            Endpoint: cn-hangzhou.log.aliyuncs.com
            Region: cn-hangzhou
            TelemetryType: logs

Collect multi-line text logs from all containers and use regular expressions to parse the logs

In this example, a Logtail configuration named example-k8s-file is created to collect multi-line text logs from all containers in a cluster. The file is test.LOG, and the path is /data/logs/app_1. The collected logs are parsed by using a regular expression and stored in a Logstore named k8s-file, which belongs to a project named k8s-log-test.

The sample log provided in the following example is read by the input_file plug-in in the {"content": "2024-06-19 16:35:00 INFO test log\nline-1\nline-2\nend"} format. Then, the log is parsed based on a regular expression into {"time": "2024-06-19 16:35:00", "level": "INFO", "msg": "test log\nline-1\nline-2\nend"}.

    apiVersion: telemetry.alibabacloud.com/v1alpha1
    # Create a CR from the ClusterAliyunPipelineConfig CRD.
    kind: ClusterAliyunPipelineConfig
    metadata:
      # Specify the name of the resource. The name must be unique in the current Kubernetes cluster. The name is the same as the name of the Logtail configuration that is created.
      name: example-k8s-file
    spec:
      # Specify the project to which logs are collected.
      project:
        name: k8s-log-test
      # Create a Logstore to store logs.
      logstores:
        - name: k8s-file
      # Configure the parameters for the Logtail configuration.
      config:
        # Specify the sample log. You can leave this parameter empty.
        sample: |
          2024-06-19 16:35:00 INFO test log
          line-1
          line-2
          end
        # Configure the Logtail input plug-ins.
        inputs:
          # Use the input_file plug-in to collect multi-line text logs from containers.
          - Type: input_file
            # Specify the file path in the containers.
            FilePaths:
              - /data/logs/app_1/**/test.LOG
            # Enable the container discovery feature.
            EnableContainerDiscovery: true
            # Enable multi-line log collection.
            Multiline:
              # Specify the custom mode to match the beginning of the first line of a log based on a regular expression.
              Mode: custom
              # Specify the regular expression that is used to match the beginning of the first line of a log.
              StartPattern: \d+-\d+-\d+.*
        # Specify the Logtail processing plug-ins.
        processors:
          # Use the processor_parse_regex_native plug-in to parse logs based on the specified regular expression.
          - Type: processor_parse_regex_native
            # Specify the name of the input field.
            SourceKey: content
            # Specify the regular expression that is used for the parsing. Use capturing groups to extract fields.
            Regex: (\d+-\d+-\d+\s*\d+:\d+:\d+)\s*(\S+)\s*(.*)
            # Specify the fields that you want to extract.
            Keys: ["time", "level", "msg"]
        # Configure the Logtail output plug-ins.
        flushers:
          # Use the flusher_sls plug-in to send logs to a specific Logstore.
          - Type: flusher_sls
            # Make sure that the Logstore exists.
            Logstore: k8s-file
            # Make sure that the endpoint is valid.
            Endpoint: cn-hangzhou.log.aliyuncs.com
            Region: cn-hangzhou
            TelemetryType: logs

Run the following command to apply the Logtail configuration. After the Logtail configuration is applied, Logtail starts to collect text logs from the specified containers and send the logs to Simple Log Service. In the command, cube.yaml is a sample file name. You can specify a different file name based on your business requirements.

    kubectl apply -f cube.yaml

Important: After logs are collected, you must create indexes. Then, you can query and analyze the logs in the Logstore. For more information, see Create indexes.
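Before you apply a multi-line configuration, it can help to try the StartPattern and Regex values against a sample log on your own machine. The following Python sketch does that for the multi-line example above. It is an approximation under stated assumptions: the helper names group_multiline and parse_record are hypothetical, Python's re module is not the regular expression engine that Logtail uses, and re.DOTALL is set here so that the last capturing group can span continuation lines. Lines that appear before the first matching start line are simply kept as their own record in this sketch, which may differ from how Logtail treats unmatched content.

```python
import re

# Values copied from the multi-line example above.
START_PATTERN = r"\d+-\d+-\d+.*"
REGEX = r"(\d+-\d+-\d+\s*\d+:\d+:\d+)\s*(\S+)\s*(.*)"
KEYS = ["time", "level", "msg"]


def group_multiline(lines, start_pattern=START_PATTERN):
    """Join continuation lines onto the preceding line that matches start_pattern."""
    start = re.compile(start_pattern)
    records = []
    for line in lines:
        if not records or start.fullmatch(line):
            records.append(line)            # a new log entry begins here
        else:
            records[-1] += "\n" + line      # continuation of the current entry
    return records


def parse_record(content, regex=REGEX, keys=KEYS):
    """Map the capturing groups of regex onto keys, or return None if there is no match."""
    match = re.fullmatch(regex, content, flags=re.DOTALL)
    if match is None:
        return None
    return dict(zip(keys, match.groups()))


raw_lines = [
    "2024-06-19 16:35:00 INFO test log",
    "line-1",
    "line-2",
    "end",
]
records = group_multiline(raw_lines)
parsed = [parse_record(record) for record in records]
print(parsed)
```

With the sample log from the example, the four lines are grouped into one record, and the record is split into the time, level, and msg fields described above.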

CRD - AliyunLogConfig

To create a Logtail configuration, you only need to create a CR from the AliyunLogConfig CRD. After the Logtail configuration is created, it is automatically applied. If you want to modify a Logtail configuration that is created based on a CR, you must modify the CR.

Obtain the kubeconfig file of a cluster and use kubectl to connect to the cluster.

Run the following command to create a YAML file. In the command, cube.yaml is a sample file name. You can specify a different file name based on your business requirements.

    vim cube.yaml

Enter the following script in the YAML file and configure the parameters based on your business requirements.

Important: The value of the configName parameter must be unique in the Simple Log Service project that you use to install the Logtail components.

If multiple CRs are associated with the same Logtail configuration, the Logtail configuration is affected when you delete or modify one of the CRs. After a CR is deleted or modified, the status of other associated CRs becomes inconsistent with the status of the Logtail configuration in Simple Log Service.

For more information about CR parameters, see Use AliyunLogConfig to manage a Logtail configuration. In this example, the Logtail configuration includes settings for text log collection. For more information, see CreateConfig.

Collect single-line text logs from specific containers

In this example, a Logtail configuration named example-k8s-file is created to collect single-line text logs from the containers of all the pods whose names begin with app in the cluster. The file is test.LOG, and the path is /data/logs/app_1. The collected logs are stored in a Logstore named k8s-file, which belongs to a project named k8s-log-test.

    apiVersion: log.alibabacloud.com/v1alpha1
    kind: AliyunLogConfig
    metadata:
      # Specify the name of the resource. The name must be unique in the current Kubernetes cluster.
      name: example-k8s-file
      namespace: kube-system
    spec:
      # Specify the name of the project. If you leave this parameter empty, the project named k8s-log-<your_cluster_id> is used.
      project: k8s-log-test
      # Specify the name of the Logstore. If the specified Logstore does not exist, Simple Log Service automatically creates a Logstore.
      logstore: k8s-file
      # Configure the parameters for the Logtail configuration.
      logtailConfig:
        # Specify the type of the data source. If you want to collect text logs, set the value to file.
        inputType: file
        # Specify the name of the Logtail configuration.
        configName: example-k8s-file
        inputDetail:
          # Specify the simple mode to collect text logs.
          logType: common_reg_log
          # Specify the log file path.
          logPath: /data/logs/app_1
          # Specify the log file name. You can use wildcard characters (* and ?) when you specify the log file name. Example: log_*.log.
          filePattern: test.LOG
          # Set the value to true if you want to collect text logs from containers.
          dockerFile: true
          # Specify conditions to filter containers.
          advanced:
            k8s:
              K8sPodRegex: '^(app.*)$'

Run the following command to apply the Logtail configuration. After the Logtail configuration is applied, Logtail starts to collect text logs from the specified containers and send the logs to Simple Log Service. In the command, cube.yaml is a sample file name. You can specify a different file name based on your business requirements.

    kubectl apply -f cube.yaml

Important: After logs are collected, you must create indexes. Then, you can query and analyze the logs in the Logstore. For more information, see Create indexes.
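The two CRD examples filter containers with slightly different regular expressions: the AliyunPipelineConfig example matches container names that contain app, whereas the AliyunLogConfig example matches pod names that begin with app. The following Python sketch shows the difference on a few made-up names. The helper name matches and the candidate names are hypothetical, and Python's re module is used only as a stand-in for the engine that Logtail uses.

```python
import re

# Patterns copied from the two CRD examples in this topic.
CONTAINER_REGEX = r"^(.*app.*)$"  # K8sContainerRegex: name contains "app"
POD_REGEX = r"^(app.*)$"          # K8sPodRegex: name begins with "app"


def matches(pattern: str, name: str) -> bool:
    """Return True if the whole name matches the pattern."""
    return re.fullmatch(pattern, name) is not None


# Made-up names for illustration only.
candidates = ["app", "app-server", "my-app", "web"]
for name in candidates:
    print(f"{name}: contains={matches(CONTAINER_REGEX, name)}, "
          f"begins_with={matches(POD_REGEX, name)}")
```

A name such as my-app passes the first filter but not the second, so check which anchor you need before you reuse a pattern from one CRD in the other.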

Console

Log on to the Simple Log Service console.

In the Quick Data Import section, click Import Data. In the Import Data dialog box, click the Kubernetes - File card.

Select the required project and Logstore. Then, click Next. In this example, select the project that you use to install the Logtail components and the Logstore that you create.

In the Machine Group Configurations step, perform the following operations. For more information, see Machine groups.

Use one of the following settings based on your business requirements:

Important: Subsequent settings vary based on the preceding settings.

Confirm that the required machine groups are added to the Applied Server Groups section. Then, click Next. After you install Logtail components in a Container Service for Kubernetes (ACK) cluster, Simple Log Service automatically creates a machine group named k8s-group-${your_k8s_cluster_id}. You can directly use this machine group.

Important: If you want to create a machine group, click Create Machine Group. In the panel that appears, configure the parameters to create a machine group. For more information, see Collect container logs from ACK clusters.

If the heartbeat status of a machine group is FAIL, click Automatic Retry. If the issue persists, see How do I troubleshoot an error that is related to a Logtail machine group in a host environment?

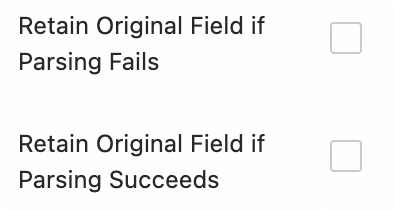

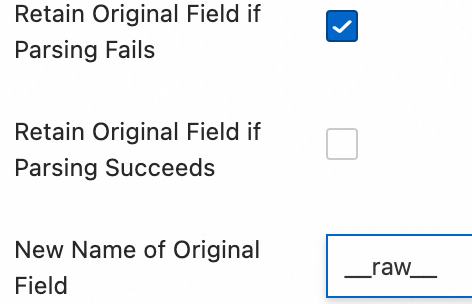

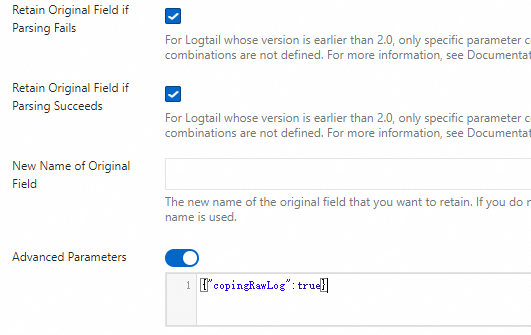

Create a Logtail configuration and click Next. Simple Log Service starts to collect logs after the Logtail configuration is created.

Note: A Logtail configuration requires up to 3 minutes to take effect.

Create indexes and preview data. Then, click Next. By default, full-text indexing is enabled in Simple Log Service. You can also configure field indexes based on collected logs in manual mode or automatic mode. To configure field indexes in automatic mode, click Automatic Index Generation. This way, Simple Log Service automatically creates field indexes. For more information, see Create indexes.

Important: If you want to query all fields in logs, we recommend that you use full-text indexes. If you want to query only specific fields, we recommend that you use field indexes. This helps reduce index traffic. If you want to analyze fields, you must create field indexes. You must include a SELECT statement in your query statement for analysis.

Click Query Log. Then, you are redirected to the query and analysis page of your Logstore.

You must wait approximately 1 minute for the indexes to take effect. Then, you can view the collected logs on the Raw Logs tab. For more information, see Guide to log query and analysis.

Environment variables

1. Enable SLS when you create a Knative Service

You can enable log collection based on the following YAML template when you create a Knative service.

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane, choose .

Click the Services tab, select a namespace, and then click Create from Template. On the page that appears, select Custom in the Sample Template section. Use the following YAML file and follow the instructions on the page to create a Service.

YAML templates comply with the Kubernetes syntax. You can use env to define log collection configurations and custom tags. You must also set the volumeMounts and volumes parameters. The following example shows how to configure Log Service in a pod:

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: helloworld-go-log
    spec:
      template:
        spec:
          containers:
          - name: my-demo-app
            image: 'registry.cn-hangzhou.aliyuncs.com/log-service/docker-log-test:latest'
            env:
            # Specify environment variables.
            - name: aliyun_logs_log-stdout
              value: stdout
            - name: aliyun_logs_log-varlog
              value: /var/demo/*.log
            - name: aliyun_logs_mytag1_tags
              value: tag1=v1
            # Configure volume mounting.
            volumeMounts:
            - name: volumn-sls-mydemo
              mountPath: /var/demo
            # If the pod is repetitively restarted, you can add a sleep command to the startup parameters of the pod.
            command: ["sh", "-c"]  # Run commands in the shell.
            args: ["sleep 3600"]  # Set the sleep time to 1 hour (3600 seconds).
          volumes:
          - name: volumn-sls-mydemo
            emptyDir: {}

Perform the following steps in sequence based on your business requirements:

Note: If you have other log collection requirements, see (Optional) 2. Use environment variables to configure advanced settings.

Add log collection configurations and custom tags by using environment variables. All environment variables related to log collection must use aliyun_logs_ as the prefix.

Add environment variables in the following format:

    - name: aliyun_logs_log-stdout
      value: stdout
    - name: aliyun_logs_log-varlog
      value: /var/demo/*.log

In the preceding example, two environment variables in the aliyun_logs_{key} format are added to the log collection configuration. The {key} values of the environment variables are log-stdout and log-varlog.

The aliyun_logs_log-stdout environment variable indicates that a Logstore named log-stdout is created to store the stdout collected from containers. The name of the collection configuration is log-stdout. This way, the stdout of containers is collected to the Logstore named log-stdout.

The aliyun_logs_log-varlog environment variable indicates that a Logstore named log-varlog is created to store the /var/demo/*.log files collected from containers. The name of the collection configuration is log-varlog. This way, the /var/demo/*.log files are collected to the Logstore named log-varlog.

Add custom tags in the following format:

    - name: aliyun_logs_mytag1_tags
      value: tag1=v1

After a tag is added, the tag is automatically appended to the log data that is collected from the container. mytag1 specifies the tag name without underscores (_).

If you specify a log path to collect log files other than stdout, you must set the volumeMounts parameter. In the preceding YAML template, the mountPath field in volumeMounts is set to /var/demo. This allows Logtail to collect log data from the /var/demo/*.log files.

After you modify the YAML template, click Create to submit the configurations.
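The naming convention described in the preceding steps can be sketched in Python as follows. This is a reading aid, not Logtail's actual parsing logic: the helper name split_variable is hypothetical, and the sketch assumes that {key} contains no underscores, so the first underscore after the prefix separates the key from an optional suffix such as tags.

```python
PREFIX = "aliyun_logs_"


def split_variable(name):
    """Split aliyun_logs_{key}[_{option}] into (key, option).

    Returns None for names without the prefix. Assumes {key} has no underscores.
    """
    if not name.startswith(PREFIX):
        return None
    key, _, option = name[len(PREFIX):].partition("_")
    return key, option or None


# The three variables from the YAML template in this section.
env = [
    {"name": "aliyun_logs_log-stdout", "value": "stdout"},
    {"name": "aliyun_logs_log-varlog", "value": "/var/demo/*.log"},
    {"name": "aliyun_logs_mytag1_tags", "value": "tag1=v1"},
]

for item in env:
    key, option = split_variable(item["name"])
    if option is None:
        # No suffix: the value is either stdout or a log file path.
        source = "container stdout" if item["value"] == "stdout" else f"files {item['value']}"
        print(f"configuration and Logstore '{key}' collect {source}")
    elif option == "tags":
        tag_key, _, tag_value = item["value"].partition("=")
        print(f"tag defined under '{key}': {tag_key}={tag_value}")
```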

(Optional) 2. Use environment variables to configure advanced settings

Environment variable-based Logtail configuration supports various parameters. You can use environment variables to configure advanced settings to meet your log collection requirements.

You cannot use environment variables to configure log collection in edge computing scenarios.

Variable | Description | Example | Usage note |

aliyun_logs_{key} | | | |

aliyun_logs_{key}_tags | Optional. The variable is used to add tags to logs. The value must be in the {tag-key}={tag-value} format. | | N/A. |

aliyun_logs_{key}_project | Optional. The variable specifies a Simple Log Service project. The default project is the one that is generated after Logtail is installed. | | The project must be deployed in the same region as Logtail. |

aliyun_logs_{key}_logstore | Optional. The variable specifies a Simple Log Service Logstore. Default value: {key}. | | N/A. |

aliyun_logs_{key}_shard | Optional. The variable specifies the number of shards of the Logstore. Valid values: 1 to 10. Default value: 2. Note If the Logstore that you specify already exists, this variable does not take effect. | | N/A. |

aliyun_logs_{key}_ttl | Optional. The variable specifies the log retention period. Valid values: 1 to 3650. Unit: days. Note If the Logstore that you specify already exists, this variable does not take effect. | | N/A. |

aliyun_logs_{key}_machinegroup | Optional. The variable specifies the machine group in which the application is deployed. The default machine group is the one in which Logtail is deployed. For more information about how to use this variable, see Collect container logs from an ACK cluster. | | N/A. |

aliyun_logs_{key}_logstoremode | Optional. The variable specifies the type of Logstore. Default value: standard. Valid values: standard and query. Note If the Logstore that you specify already exists, this variable does not take effect. | | To use this variable, make sure that the image version of the logtail-ds component is 1.3.1 or later. |
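The value constraints in the table above can be checked before you submit a template. The following Python sketch encodes only the ranges and formats stated in the table. The helper name check_option is hypothetical, and the checks are a local convenience under that assumption rather than validation performed by Simple Log Service.

```python
def check_option(option: str, value: str):
    """Return an error message if value violates the table above, else None.

    option is the suffix after aliyun_logs_{key}_ (for example "shard").
    Environment variable values are strings, so value is always a str.
    """
    if option == "shard":
        if not (value.isdecimal() and 1 <= int(value) <= 10):
            return "shard must be an integer from 1 to 10"
    elif option == "ttl":
        if not (value.isdecimal() and 1 <= int(value) <= 3650):
            return "ttl must be an integer from 1 to 3650"
    elif option == "logstoremode":
        if value not in ("standard", "query"):
            return "logstoremode must be standard or query"
    elif option == "tags":
        tag_key, sep, tag_value = value.partition("=")
        if not (tag_key and sep and tag_value):
            return "tags must use the {tag-key}={tag-value} format"
    # Other suffixes (project, logstore, machinegroup) are not range-checked here.
    return None


for option, value in [("shard", "2"), ("shard", "20"), ("ttl", "90"),
                      ("logstoremode", "query"), ("tags", "tag1")]:
    print(option, value, "->", check_option(option, value) or "ok")
```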

Step 3: Query and analyze logs

Log on to the Simple Log Service console.

In the Projects section, click the project that you want to manage to go to the details page of the project.

In the left-side navigation pane, click the icon of the Logstore that you want to manage. In the drop-down list, select Search & Analysis to view the logs that are collected from your Kubernetes cluster.


Default fields in container text logs

The following table describes the fields that are included by default in each container text log.

Field name | Description |

__tag__:__hostname__ | The name of the container host. |

__tag__:__path__ | The log file path in the container. |

__tag__:_container_ip_ | The IP address of the container. |

__tag__:_image_name_ | The name of the image that is used by the container. |

__tag__:_pod_name_ | The name of the pod. |

__tag__:_namespace_ | The namespace to which the pod belongs. |

__tag__:_pod_uid_ | The unique identifier (UID) of the pod. |
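If you export collected logs and process them outside the console, the default fields in the table above can be used as ordinary keys. The following Python sketch counts logs per pod in one namespace. It assumes that each log is available as a dictionary keyed by field name. The records, the content field values, and the helper name count_by_pod are made up for illustration, and only the field names come from the table.

```python
from collections import Counter

# Made-up records for illustration; only the __tag__ field names come from the table.
logs = [
    {"__tag__:_namespace_": "default", "__tag__:_pod_name_": "pod-a", "content": "started"},
    {"__tag__:_namespace_": "default", "__tag__:_pod_name_": "pod-a", "content": "ready"},
    {"__tag__:_namespace_": "kube-system", "__tag__:_pod_name_": "pod-b", "content": "sync"},
]


def count_by_pod(records, namespace):
    """Count records per pod name, restricted to one namespace."""
    return Counter(
        record["__tag__:_pod_name_"]
        for record in records
        if record.get("__tag__:_namespace_") == namespace
    )


print(count_by_pod(logs, "default"))
```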

References

To troubleshoot log collection errors, see How do I view Logtail collection errors? and What do I do if errors occur when I collect logs from containers?

To deploy Logtail through DaemonSet to collect text logs from ACK clusters, see Collect container logs from ACK clusters.

You can view the Knative monitoring dashboard. For more information, see View the Knative monitoring dashboard.

Simple Log Service allows you to configure monitoring alerts. For more information, see Configure alerting for Knative Services.