ACK clusters are compatible with both Alibaba Cloud Prometheus and open source Prometheus by default. If the pre-configured Prometheus metrics do not meet your business needs, you can use custom Prometheus Query Language (PromQL) to create alert rules. These rules help you monitor the health of resources such as cluster nodes, hosts, container replicas, and workloads. An alert rule can trigger an alert and send a notification when a specified data metric reaches a threshold or a condition is met.

Prerequisites

Prometheus monitoring is enabled for your ACK cluster. For more information, see Use Alibaba Cloud Prometheus Monitoring (recommended) or Use open source Prometheus monitoring.

Configure Prometheus alert rules using custom PromQL

ACK clusters are compatible with both Alibaba Cloud Prometheus and open source Prometheus by default. You can use custom PromQL to configure alert rules based on Prometheus monitoring data. When the conditions of an alert rule are met, the system generates a corresponding alert event and sends a notification.

Alibaba Cloud Prometheus Monitoring

For more information about how to configure alert rules using custom PromQL in Alibaba Cloud Prometheus Monitoring, see Create a Prometheus alert rule.

Open source Prometheus monitoring

Configure an alert notification policy.

Open source Prometheus monitoring supports notification methods such as webhooks, DingTalk robots, and email. You can set the notification method for Prometheus alerts by configuring the

receiverparameter in the ack-prometheus-operator application. For more information, see Alerting configuration.Create an alert rule.

Deploy the PrometheusRule Custom Resource Definition (CRD) in the cluster to define alert rules. For more information, see Deploying Prometheus Rules.

apiVersion: monitoring.coreos.com/v1 kind: PrometheusRule metadata: labels: # The labels must be consistent with ruleSelector.matchLabels of the Prometheus CRD. prometheus: example role: alert-rules name: prometheus-example-rules spec: groups: - name: example.rules rules: - alert: ExampleAlert # expr specifies the PromQL query and trigger condition. For this parameter, you can refer to the PromQL configuration column in the alert rule descriptions in this topic. expr: 100 - (avg by(instance) (rate(node_cpu_seconds_total{mode="idle"}[2m])) * 100) > 90Check whether the alert rule is in effect.

Run the following command to map the Prometheus service in the cluster to port 9090 on your local machine.

kubectl port-forward svc/ack-prometheus-operator-prometheus 9090:9090 -n monitoringIn your browser, enter localhost:9090 to view the Prometheus server console.

At the top of the open source Prometheus page, choose .

On the Rules page, you can view the alert rules. If the target alert rule is displayed, the rule is in effect.

Alert rule descriptions

Based on extensive operations and maintenance (O&M) experience with clusters and applications, ACK provides the following recommended Prometheus alert rule configurations. These rules cover various aspects such as cluster stability, node anomalies, node resource usage, application container replica anomalies, workload anomalies, storage exceptions, and network exceptions.

The alert rules, which cover issues such as container replica anomalies and workload anomalies, are categorized into the following severity levels.

Critical: The issue affects the cluster, application, or even your business. It requires immediate attention.

Warning: The issue affects the cluster, application, or even your business. It requires investigation as soon as possible.

Normal: The alert is related to an important feature change.

The operation entry point mentioned in the Rule Description column is the Alert Rules tab on the Alerts page. To update the alert rules, log on to the Container Service for Kubernetes (ACK) console. In the Clusters list, click the name of the target cluster. In the navigation pane on the left, choose . On the Alerts page, click the Alert Rules tab to update the corresponding alert rules.

Abnormal container replicas

Description | Severity | PromQL configuration | Rule description | Common troubleshooting procedure |

Abnormal pod status | Critical | min_over_time(sum by (namespace, pod, phase) (kube_pod_status_phase{phase=~"Pending|Unknown|Failed"})[5m:1m]) > 0 | Triggers an alert if a pod has an abnormal status within the last 5 minutes. In the operation entry point, click Alert Rule Set for Pod Exceptions and configure the Pod anomaly alert rule. For more information, see Manage alerts in ACK. | For more information about how to troubleshoot abnormal pod statuses, see Troubleshoot pod exceptions. |

Pod startup failed | Critical | sum_over_time(increase(kube_pod_container_status_restarts_total{}[1m])[5m:1m]) > 3 | Triggers an alert if a pod fails to start more than 3 times within the last 5 minutes. In the operation entry point, click Alert Rule Set for Pod Exceptions and configure the Pod startup failures alert rule. For more information, see Manage alerts in ACK. | For more information about how to troubleshoot pod startup failures, see Troubleshoot pod exceptions. |

More than 1,000 pods failed to be scheduled | Critical | sum(sum(max_over_time(kube_pod_status_phase{ phase=~"Pending"}[5m])) by (pod)) > 1000 | Triggers an alert if a total of 1,000 pods are in the Pending state due to scheduling failures within the last 5 minutes. | This issue may be caused by excessive task pressure in a large-scale cluster scheduling scenario. ACK managed cluster Pro Edition provides enhanced core features such as cluster scheduling and offers a Service-level agreement (SLA). We recommend that you use ACK managed cluster Pro Edition. For more information, see Overview of ACK managed cluster Pro Edition. |

Frequent container CPU throttling | Warning | rate(container_cpu_cfs_throttled_seconds_total[3m]) * 100 > 25 | Triggers an alert if the container CPU is frequently throttled. This occurs when the throttled CPU time accounts for more than 25% of the total CPU time within the last 3 minutes. | CPU throttling reduces the CPU time slices allocated to processes in a container. This can increase the runtime of these processes and may slow down the business logic of the containerized application. In this case, check whether the CPU resource limit for the pod is set too low. We recommend that you use the CPU Burst policy to optimize CPU throttling. For more information, see Enable the CPU Burst policy. If the cluster nodes are multi-core servers, we recommend that you use CPU topology-aware scheduling to maximize the use of fragmented CPU resources. For more information, see Enable CPU topology-aware scheduling. |

CPU usage of a container replica pod is higher than 85% | Warning | (sum(irate(container_cpu_usage_seconds_total{pod=~"{{PodName}}.*",namespace=~"{{Namespace}}.*",container!="",container!="POD"}[1m])) by (namespace,pod) / sum(container_spec_cpu_quota{pod=~"{{PodName}}.*",namespace=~"{{Namespace}}.*",container!="",container!="POD"}/100000) by (namespace,pod) * 100 <= 100 or on() vector(0)) >= 85 | Triggers an alert when the CPU usage of a container replica pod exceeds 85% of its pod limit in a specified namespace or for a specified pod. If the pod does not have a limit configured, this alert rule does not take effect. The default threshold of 85% is a recommended value. You can adjust it as needed. To filter data for a specific pod or namespace, replace | High CPU usage in a pod can lead to CPU throttling and insufficient CPU time slice allocation, which affect the execution of processes in the pod. In this case, check whether the CPU |

Memory usage of a container replica pod is higher than 85% | Warning | ((sum(container_memory_working_set_bytes{pod=~"{{PodName}}.*",namespace=~"{{Namespace}}.*",container !="",container!="POD"}) by (pod,namespace)/ sum(container_spec_memory_limit_bytes{pod=~"{{PodName}}.*",namespace=~"{{Namespace}}.*",container !="",container!="POD"}) by (pod, namespace) * 100) <= 100 or on() vector(0)) >= 85 | Triggers an alert when the memory usage of a container replica pod is higher than 85% of its pod limit. If the pod does not have a limit configured, this alert rule does not take effect. The default threshold of 85% is a recommended value. You can adjust it as needed. To filter data for a specific pod or namespace, replace | High memory usage in a pod can cause the pod to be terminated by an out-of-memory (OOM) killer, leading to a pod restart. In this case, check whether the memory |

Abnormal workloads

Description | Severity | PromQL configuration | Rule description | Common troubleshooting procedure |

Abnormal status of available Deployment replicas | Critical | kube_deployment_spec_replicas{} != kube_deployment_status_replicas_available{} | Triggers an alert when the number of available replicas for a Deployment does not match the desired number. In the operation entry point, click Alert Rule Set for Workload Exceptions and set the Deployment pod anomaly alert rule. For more information, see Manage alerts in ACK. | If a pod fails to start or has an abnormal status, see Troubleshoot pod exceptions. |

Abnormal status of DaemonSet replicas | Critical | ((100 - kube_daemonset_status_number_ready{} / kube_daemonset_status_desired_number_scheduled{} * 100) or (kube_daemonset_status_desired_number_scheduled{} - kube_daemonset_status_current_number_scheduled{})) > 0 | Triggers an alert when the number of available replicas for a DaemonSet does not match the desired number. In the operation entry point, click Alert Rule Set for Workload Exceptions and set the DaemonSet pod anomaly alert rule. For more information, see Manage alerts in ACK. | If a pod fails to start or has an abnormal status, see Troubleshoot pod exceptions. |

Abnormal scheduling of DaemonSet replicas | Critical | kube_daemonset_status_number_misscheduled{job} > 0 | Triggers an alert when a DaemonSet replica is scheduled abnormally. In the operation entry point, click Alert Rule Set for Workload Exceptions and set the DaemonSet pod scheduling errors alert rule. For more information, see Manage alerts in ACK. | If a pod fails to start or has an abnormal status, see Troubleshoot pod exceptions. |

Job failed | Critical | kube_job_status_failed{} > 0 | Triggers an alert when a Job fails to execute. In the operation entry point, click Alert Rule Set for Workload Exceptions and set the Job execution failures alert rule. For more information, see Manage alerts in ACK. |

|

Storage exceptions

Description | Severity | PromQL configuration | Rule description | Common troubleshooting procedure |

Abnormal PersistentVolume status | Critical | kube_persistentvolume_status_phase{phase=~"Failed|Pending"} > 0 | Triggers an alert when a persistent volume (PV) has an abnormal status. In the operation entry point, click Alert Rule Set for Storage Exceptions and set the PV anomaly alert rule. For more information, see Manage alerts in ACK. | For more information about how to troubleshoot abnormal PV statuses, see the disk mounting section in FAQ about disk PVs. |

Host disk usage is higher than 85% | Critical | (100 - node_filesystem_avail_bytes / node_filesystem_size_bytes * 100 ) >= 85 | Triggers an alert when the free space on a disk block device of a node is less than 10%. In the operation entry point, click Alert Rule Set for Resource Exceptions and set the Node - Disk usage ≥ 85% alert rule. For more information, see Manage alerts in ACK. | We recommend that you scale out the node or expand its disk. For more information, see the disk mounting section in FAQ about disk PVs. |

Abnormal node status

Description | Severity | PromQL configuration | Rule description | Common troubleshooting procedure |

Node remains in NotReady status for 3 minutes | Critical | (sum(max_over_time(kube_node_status_condition{condition="Ready",status="true"}[3m]) <= 0) by (node)) or (absent(kube_node_status_condition{condition="Ready",status="true"})) > 0 | Triggers an alert when a cluster node remains in the NotReady status for 3 minutes. In the operation entry point, click Alert Rule Set for Node Exceptions and set the Node changes to the unschedulable state alert rule. For more information, see Manage alerts in ACK. |

|

Abnormal host resource usage

The following section describes the difference between host resource metrics and node resource metrics:

Host resource metrics measure the resources of the physical machine or virtual machine on which the node runs.

In the usage formula, the numerator is the resource usage of all processes on the host, and the denominator is the maximum capacity of the host.

Description | Severity | PromQL configuration | Rule description | Common troubleshooting procedure |

Host memory usage is higher than 85% | Warning | (100 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes * 100) >= 85 | Triggers an alert when the host memory usage of the cluster is higher than 85%. In the operation entry point, click Alert Rule Set for Resource Exceptions and configure the Node - Memory usage ≥ 85% alert rule. For more information, see Manage alerts in ACK. Note The rules in the ACK alert configuration are provided by Cloud Monitor, and their metrics are consistent with the metrics of the corresponding Prometheus rules. The default threshold of 85% is a recommended value. You can adjust it as needed. |

|

Host memory usage is higher than 90% | Critical | (100 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes * 100) >= 90 | The memory usage of hosts in the cluster exceeds 90%. |

|

Host CPU usage is higher than 85% | Warning | 100 - (avg by(instance) (rate(node_cpu_seconds_total{mode="idle"}[2m])) * 100) >= 85 | Triggers an alert when the host CPU usage of the cluster is higher than 85%. In the operation entry point, click Alert Rule Set for Resource Exceptions and configure the Node - CPU usage ≥ 85% alert rule. Note The rule in the ACK alerting configuration is provided by CloudMonitor ECS monitoring. The metric in the rule is equivalent to the metric in this Prometheus rule. The default threshold of 85% is a recommended value. You can adjust it as needed. For more information, see Manage alerts in ACK. |

|

Host CPU usage is higher than 90% | Critical | 100 - (avg by(instance) (rate(node_cpu_seconds_total{mode="idle"}[2m])) * 100) >= 90 | Triggers an alert when the host CPU usage of the cluster is higher than 90%. |

|

Abnormal node resources

The following section describes the difference between node resource metrics and host resource metrics:

Node resource metrics measure the consumption of resources on a node relative to its allocatable capacity. The metric is the ratio of the resources consumed by containers on the node (numerator) to the allocatable resources on the node (denominator).

Take memory as an example:

Consumed resources: The total memory resources used by a node. This includes the working set memory of all running containers on the node. The working set memory includes the allocated and used memory of containers, the page cache allocated to containers, and more.

Allocatable resources: The amount of resources that can be allocated to containers. This excludes the resources consumed by the container engine layer on the host, which are the resources reserved for nodes in ACK. For more information, see Node resource reservation policy.

In the usage formula, the numerator is the resource usage of all containers on the node, and the denominator is the amount of resources that the node can allocate to containers (Allocatable).

Pod scheduling is based on resource requests, not actual usage.

Description | Severity | PromQL configuration | Rule description | Common troubleshooting procedure |

Node CPU usage is higher than 85% | Warning | sum(irate(container_cpu_usage_seconds_total{pod!=""}[1m])) by (node) / sum(kube_node_status_allocatable{resource="cpu"}) by (node) * 100 >= 85 | Triggers an alert when the CPU usage of a cluster node is higher than 85%. The formula is

|

|

Node CPU resource allocation rate is higher than 85% | Normal | (sum(sum(kube_pod_container_resource_requests{resource="cpu"}) by (pod, node) * on (pod) group_left max(kube_pod_status_ready{condition="true"}) by (pod, node)) by (node)) / sum(kube_node_status_allocatable{resource="cpu"}) by (node) * 100 >= 85 | Triggers an alert when the CPU resource allocation rate of a cluster node is higher than 85%. The formula is |

|

Node CPU oversold rate is higher than 300% | Warning | (sum(sum(kube_pod_container_resource_limits{resource="cpu"}) by (pod, node) * on (pod) group_left max(kube_pod_status_ready{condition="true"}) by (pod, node)) by (node)) / sum(kube_node_status_allocatable{resource="cpu"}) by (node) * 100 >= 300 | Triggers an alert when the CPU oversold rate of a cluster node is higher than 300%. The formula is The default threshold of 300% is a recommended value. You can adjust it as needed. |

|

Node memory usage is higher than 85% | Warning | sum(container_memory_working_set_bytes{pod!=""}) by (node) / sum(kube_node_status_allocatable{resource="memory"}) by (node) * 100 >= 85 | Triggers an alert when the memory usage of a cluster node is higher than 85%. The formula is

|

|

Node memory resource allocation rate is higher than 85% | Normal | (sum(sum(kube_pod_container_resource_requests{resource="memory"}) by (pod, node) * on (pod) group_left max(kube_pod_status_ready{condition="true"}) by (pod, node)) by (node)) / sum(kube_node_status_allocatable{resource="memory"}) by (node) * 100 >= 85 | Triggers an alert when the memory resource allocation rate of a cluster node is higher than 85%. The formula is |

|

Node memory oversold rate is higher than 300% | Warning | (sum(sum(kube_pod_container_resource_limits{resource="memory"}) by (pod, node) * on (pod) group_left max(kube_pod_status_ready{condition="true"}) by (pod, node)) by (node)) / sum(kube_node_status_allocatable{resource="memory"}) by (node) * 100 >= 300 | Triggers an alert when the memory oversold rate of a cluster node is higher than 300%. The formula is The default threshold of 300% is a recommended value. You can adjust it as needed. |

|

Network exceptions

Description | Severity | PromQL configuration | Rule description | Common troubleshooting procedure |

Abnormal cluster CoreDNS availability - request count drops to zero | Critical | (sum(rate(coredns_dns_request_count_total{}[1m]))by(server,zone)<=0) or (sum(rate(coredns_dns_requests_total{}[1m]))by(server,zone)<=0) | This exception can be detected only in ACK managed clusters (Pro and Basic editions). | Check whether the CoreDNS pods in the cluster are normal. |

Abnormal cluster CoreDNS availability - panic exception | Critical | sum(rate(coredns_panic_count_total{}[3m])) > 0 | This exception can be detected only in ACK managed clusters (Pro and Basic editions). | Check whether the CoreDNS pods in the cluster are normal. |

Cluster Ingress controller certificate is about to expire | Warning | ((nginx_ingress_controller_ssl_expire_time_seconds - time()) / 24 / 3600) < 14 | You must install and deploy the ACK Ingress controller component and enable the Ingress feature. | Reissue the Ingress controller certificate. |

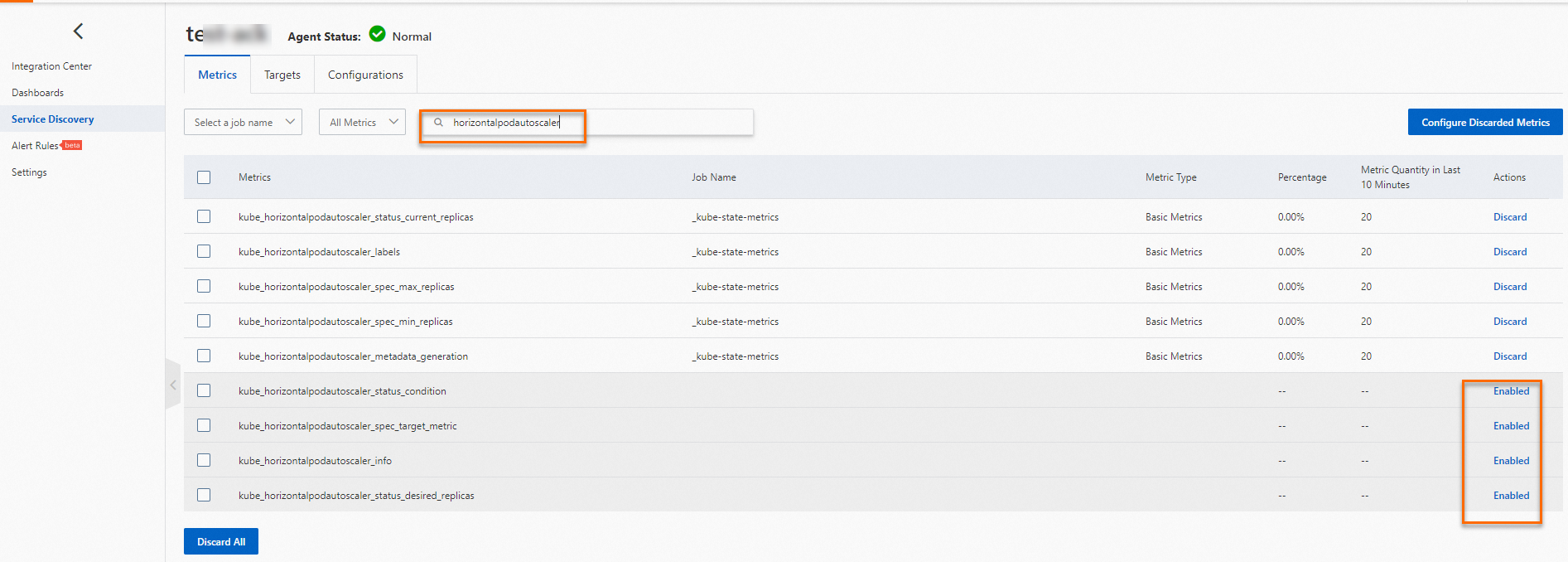

Auto Scaling exceptions

Description | Severity | PromQL configuration | Rule description | Common troubleshooting procedure |

HPA current replica count has reached the maximum | Warning | max(kube_horizontalpodautoscaler_spec_max_replicas) by (namespace, horizontalpodautoscaler) - max(kube_horizontalpodautoscaler_status_current_replicas) by (namespace, horizontalpodautoscaler) <= 0 | You must enable the | Check whether the Horizontal Pod Autoscaler (HPA) policy meets your expectations. If the business workload remains high, you may need to increase the maxReplicas value of the HPA or optimize application performance |

References

For more information about how to query Prometheus monitoring data from the console or using an API, see Query Prometheus monitoring data using PromQL.

You can use ACK Net Exporter to quickly discover and locate container network issues. For more information, see Use KubeSkoop to locate network issues.

For more information about common issues and solutions when you use Alibaba Cloud Prometheus, see Observability FAQ.