OpenKruise is a Kubernetes-based extension suite that focuses on automating cloud-native applications, including deployment, release, O&M, and availability protection. This article explores how OpenKruise can be used to implement end-to-end canary release, enabling automated O&M.

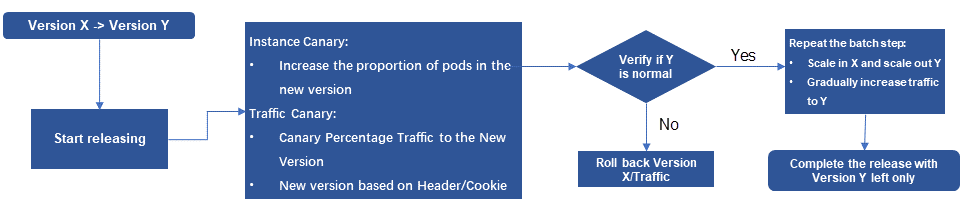

During application deployment, a common practice is to first route a small amount of specific traffic to a new version to verify that it works correctly, which protects overall stability. This practice is known as canary release. A canary release widens the release scope gradually, so the new version's stability can be verified thoroughly at each stage. If an issue arises, it is detected early and its impact stays limited to the current scope.

Key features of canary release include:

• Gradual increase in release scope, avoiding simultaneous full release.

• A phased process in which the new version's stability is verified carefully at each phase before the scope is widened.

• Support for suspending, rolling back, and resuming the release, plus automated transitions between stages, giving flexible control over the release process and ensuring stability.
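As an illustration of these features, the stages of a canary release can be written declaratively. The sketch below uses the canary `steps` of the Kruise Rollout API (`rollouts.kruise.io/v1alpha1`) that this article introduces later; the weights and the pause duration are illustrative assumptions, not recommended values.

```yaml
# Sketch only: a fragment of a Kruise Rollout spec (rollouts.kruise.io/v1alpha1).
# The weights and the pause duration are illustrative assumptions.
spec:
  strategy:
    canary:
      steps:
        # Stage 1: send 5% of traffic to the new version, then suspend until
        # the release is approved, e.g. with `kubectl-kruise rollout approve`.
        - weight: 5
          pause: {}
        # Stage 2: widen the scope to 50% and resume automatically after 600 seconds.
        - weight: 50
          pause:
            duration: 600
        # Stage 3: shift all traffic to the new version.
        - weight: 100
```

Each step widens the scope only after the previous one has been verified, which is the gradual, phased, suspendable flow described in the list above.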

According to survey data, 70% of online issues are caused by changes. Canary release, observability, and rollback are often referred to as the three key elements of safe production, as they help control risks and mitigate the impact of changes. By implementing canary release, organizations can release new versions more robustly and prevent losses caused by issues during the release process.

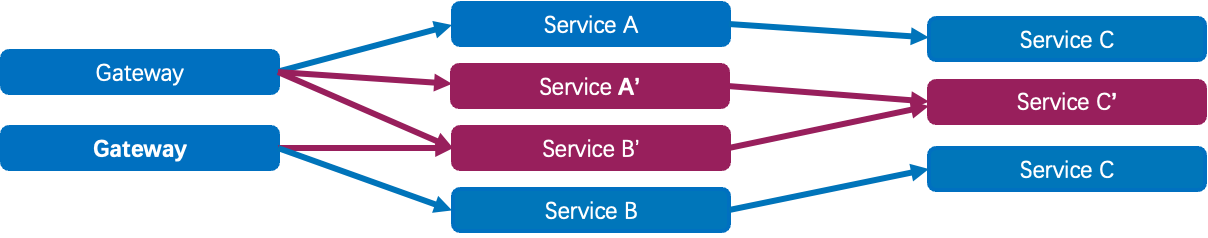

In microservices architectures, the traditional canary release mode often cannot meet the complex and diverse delivery requirements of microservices, for the following reasons:

• Call traces are long and complex. Services in a microservices architecture call each other through long traces, so a change to one service can affect the entire trace and, in turn, the stability of the whole application.

• A canary release may involve multiple modules, and the entire trace must call the new versions. Because services depend on each other, modifying one service may require adjusting others, so the new versions of several services must be called together during the canary release. This increases the complexity and uncertainty of the release.

• Parallel projects require multiple environments, which are inflexible and costly to build. Microservice teams commonly develop several projects in parallel, each needing its own environment; this raises the difficulty and cost of environment construction and makes releases less efficient.

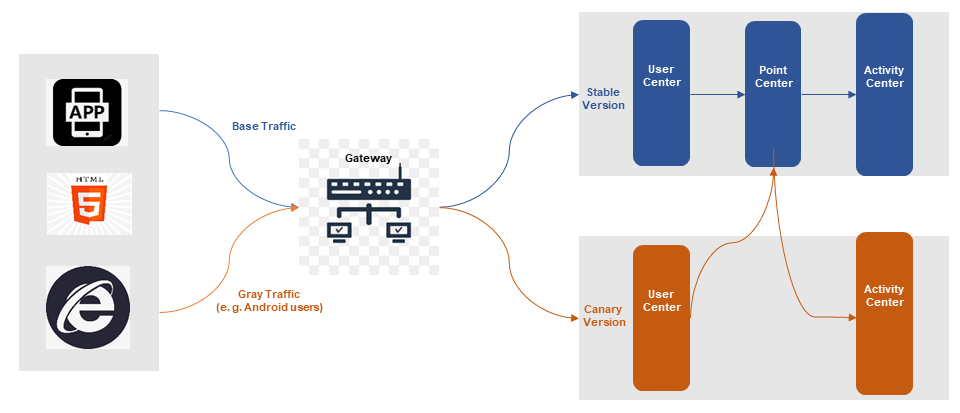

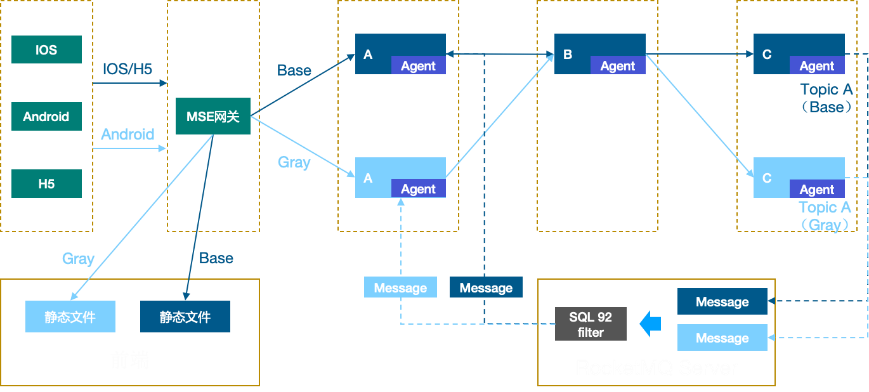

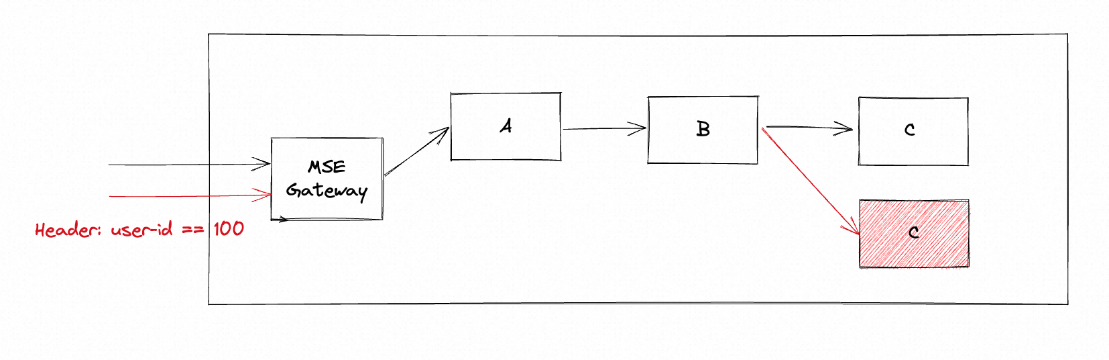

To address these challenges, microservice scenarios need a more flexible and controllable release method, which gave rise to end-to-end canary release. Typically, each microservice application deploys a canary environment (or canary group) to receive canary traffic. The goal is that traffic entering an upstream canary environment also enters the downstream canary environments, so that a canary request always passes through canary environments, forming a "traffic swim lane". Even if some applications in the swim lane have no canary environment, the request is served by their baseline version and is still routed to the canary environment of the next downstream application.
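To make the swim-lane idea concrete, the manifest below sketches what one canary group might look like in Kubernetes: a separate Deployment whose pods carry the `alicloud.service.tag: gray` label, the same label this article later patches onto canary pods so that MSE treats them as the gray version. The Deployment name, the `app` label, the container name, and the replica count are hypothetical, and the MSE agent configuration that tag-based routing relies on is not shown.

```yaml
# Hypothetical canary group for application C in the "gray" swim lane.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spring-cloud-c-gray              # hypothetical name
spec:
  replicas: 1                            # assumption: one canary instance
  selector:
    matchLabels:
      app: spring-cloud-c-gray           # hypothetical label, distinct from the baseline's selector
  template:
    metadata:
      labels:
        app: spring-cloud-c-gray
        alicloud.service.tag: gray       # marks these pods as members of the gray swim lane
    spec:
      containers:
        - name: spring-cloud-c           # hypothetical container name
          image: registry.cn-hangzhou.aliyuncs.com/mse-demo-hz/spring-cloud-c:mse-2.0.0
```

Giving the canary Deployment its own selector label avoids overlapping with the baseline Deployment's pods, while the `alicloud.service.tag` label is what identifies the instances as gray.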

End-to-end canary release safeguards microservice releases

The end-to-end canary release enables independent release and traffic control for individual services based on their specific needs. It also allows simultaneous release and modification of multiple services to ensure the stability of the entire system. Additionally, the use of automated deployment methods facilitates faster and more reliable releases, ultimately improving release efficiency and stability.

Implementing end-to-end canary release of microservices in Kubernetes is a complex process that requires modifying and coordinating multiple components and configurations. Here are some specific steps and considerations:

• In a microservices architecture, the gateway is the entry point for services. To implement canary route matching and traffic tagging (such as header modification), the gateway configuration must be adjusted accordingly.

• To achieve end-to-end canary release, a canary application environment must be deployed and given a canary tag, so that canary traffic can be directed to it.

• Traffic must be verified during the canary release. If verification succeeds, the baseline environment is upgraded to the canary version, the canary environment is removed, and the gateway configuration is restored so that traffic flows normally.

• For exceptions such as service crashes or traffic anomalies, a pre-designed rollback plan is needed so that the release can be rolled back quickly before significant losses occur.
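For comparison, the gateway adjustment in the first step above, done by hand, might look like the sketch below. It uses the canary annotations of the NGINX Ingress controller to send requests carrying `x-user-id: 100` to a canary Service, and it assumes an ingress controller that honors these annotations. The host and path come from the demo later in this article; the Ingress name, the ingress class, the Service name, and the port are hypothetical.

```yaml
# Sketch of a manually created canary Ingress (NGINX Ingress canary annotations).
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: spring-cloud-a-canary                  # hypothetical name
  annotations:
    # Mark this Ingress as the canary counterpart of the main Ingress for the same host and path.
    nginx.ingress.kubernetes.io/canary: "true"
    # Route only requests whose x-user-id header equals "100" to the canary backend.
    nginx.ingress.kubernetes.io/canary-by-header: x-user-id
    nginx.ingress.kubernetes.io/canary-by-header-value: "100"
spec:
  ingressClassName: mse                        # assumption: name of the gateway's IngressClass
  rules:
    - host: example.com
      http:
        paths:
          - path: /a
            pathType: Prefix
            backend:
              service:
                name: spring-cloud-a-canary    # hypothetical canary Service
                port:
                  number: 80                   # hypothetical port
```

These annotations only select traffic; they do not add a tag header such as `x-mse-tag`, and restoring the gateway configuration after the release amounts to deleting this Ingress.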

In addition, production traffic follows an end-to-end path, so traffic flow must be controlled across the frontend, gateway, backend microservices, and other components. Besides RPC/HTTP traffic, asynchronous traffic such as MQ messages must also follow the end-to-end "swim lane" routing rules, which makes traffic control across the whole process even more complex.

To simplify end-to-end canary release for microservices, tools and products such as Microservices Engine (MSE) and Kruise Rollout can be used. They make end-to-end canary release easier to implement, improving release efficiency and stability.


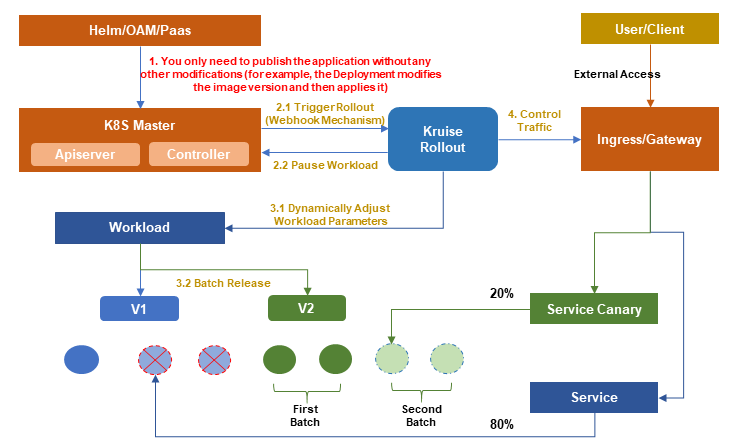

Kruise Rollout is a progressive delivery framework developed by the OpenKruise community. It provides a standard set of bypass Kubernetes release components that combine traffic release with instance canary release. Kruise Rollout supports release methods such as canary, blue-green, and A/B testing, and it can drive the release process automatically based on custom metrics such as Prometheus metrics, which makes it extensible. Its key features include:

• Non-intrusive: Kruise Rollout does not interfere with the user's application delivery configuration. It extends progressive delivery capabilities through bypass mode, offering a plug-and-play effect.

• Scalability: Kruise Rollout supports multiple workloads, including Deployment, StatefulSet, CloneSet, and custom CRD workloads. For traffic scheduling, it uses Lua scripts to support multiple traffic scheduling solutions, such as NGINX, ALB, MSE, and the Gateway API.
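The bypass model shows in the shape of a Rollout object: it only points at an existing workload through `workloadRef` and names the traffic components to adjust, while the workload manifest itself stays untouched. The sketch below attaches a Rollout to an OpenKruise CloneSet behind an NGINX Ingress; all resource names are hypothetical and the step values are illustrative.

```yaml
# Sketch: a Rollout that attaches to an existing CloneSet without modifying its manifest.
apiVersion: rollouts.kruise.io/v1alpha1
kind: Rollout
metadata:
  name: rollout-demo                   # hypothetical name
spec:
  objectRef:
    workloadRef:                       # a reference only; the CloneSet manifest is unchanged
      apiVersion: apps.kruise.io/v1alpha1
      kind: CloneSet
      name: demo-cloneset              # hypothetical workload
  strategy:
    canary:
      steps:
        - replicas: 1                  # one canary instance ...
          weight: 10                   # ... receiving 10% of the traffic
          pause: {}                    # wait for manual approval
      trafficRoutings:
        - service: demo-service        # hypothetical Service in front of the CloneSet
          ingress:
            classType: nginx
            name: demo-ingress         # hypothetical Ingress
```

Switching to another workload type or gateway changes only `workloadRef` and `trafficRoutings`, which is the plug-and-play property described above.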

Kruise Rollout provides canary, A/B testing, and blue-green release capabilities. Its release model aligns well with MSE end-to-end canary release, so combining Kruise Rollout with MSE lets users implement MSE end-to-end canary release with little effort.

For more information about how to deploy the demo application, see Implement an End-to-end Canary Release Based on MSE Ingress Gateways.

```shell
➜ ~ kubectl get deployments
NAME             READY   UP-TO-DATE   AVAILABLE   AGE
demo-mysql       1/1     1            1           30h
nacos-server     1/1     1            1           46h
spring-cloud-a   2/2     2            2           30h
spring-cloud-b   2/2     2            2           30h
spring-cloud-c   2/2     2            2           30h
```

After the application is deployed, we must first distinguish between online traffic and canary traffic.

Create Rollout custom resources (instances of the Rollout CRD) to define the end-to-end canary release process.

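1. Create a Rollout configuration for each application involved in the trace. The Rollouts for B and C declare a dependency on rollout-a through the rollouts.kruise.io/dependency annotation.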

```yaml
# Rollout configuration for application A
---
apiVersion: rollouts.kruise.io/v1alpha1
kind: Rollout
metadata:
  name: rollout-a
spec:
  objectRef:
    workloadRef:
      apiVersion: apps/v1
      kind: Deployment
      name: spring-cloud-a
  ...
# Rollout configuration for application B
---
apiVersion: rollouts.kruise.io/v1alpha1
kind: Rollout
metadata:
  name: rollout-b
  annotations:
    rollouts.kruise.io/dependency: rollout-a
spec:
  objectRef:
    workloadRef:
      apiVersion: apps/v1
      kind: Deployment
      name: spring-cloud-b
  ...
# Rollout configuration for application C
---
apiVersion: rollouts.kruise.io/v1alpha1
kind: Rollout
metadata:
  name: rollout-c
  annotations:
    rollouts.kruise.io/dependency: rollout-a
spec:
  objectRef:
    workloadRef:
      apiVersion: apps/v1
      kind: Deployment
      name: spring-cloud-c
  ...
```

2. Next, to distinguish online traffic from canary traffic, configure canary traffic rules on the Ingress.

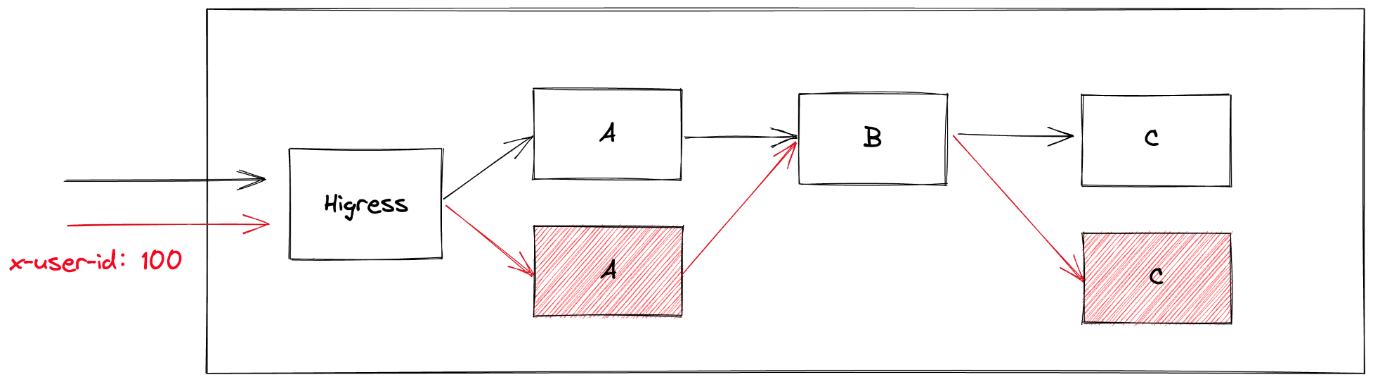

```yaml
canary:
  steps:
    - matches:
        - headers:
            - type: Exact
              name: x-user-id
              value: '100'
      requestHeaderModifier:
        set:
          - name: x-mse-tag
            value: gray
  trafficRoutings:
    - service: spring-cloud-a
      ingress:
        name: spring-cloud-a
        classType: mse
```

3. Kruise automatically marks the applications in the canary release as gray versions by patching the following label onto their canary instances:

```yaml
# Only supported for the canary deployment type
patchCanaryMetadata:
  labels:
    alicloud.service.tag: gray
```

After applying the Rollout resources, check their status:

```shell
➜ ~ kubectl get Rollout
NAME        STATUS    CANARY_STEP   CANARY_STATE   MESSAGE                            AGE
rollout-a   Healthy   1             Completed      workload deployment is completed   4s
rollout-b   Healthy   1             Completed      workload deployment is completed   4s
rollout-c   Healthy   1             Completed      workload deployment is completed   4s
```

We have now defined a set of end-to-end canary release rules. The release trace involves the MSE cloud-native gateway and applications A, B, and C, and requests carrying the header x-user-id: 100 are treated as canary traffic.
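For reference, the fragments from steps 1 to 3 can be combined into a single Rollout for Application A. This is a sketch under assumptions: the article does not show where `canary` and `patchCanaryMetadata` sit, so they are placed under `spec.strategy` and `spec.strategy.canary` respectively, and the fields the article elides with `...` are left out. Check the field names against the Rollout CRD version installed in your cluster.

```yaml
apiVersion: rollouts.kruise.io/v1alpha1
kind: Rollout
metadata:
  name: rollout-a
spec:
  objectRef:
    workloadRef:
      apiVersion: apps/v1
      kind: Deployment
      name: spring-cloud-a
  strategy:                              # assumption: canary is nested under spec.strategy
    canary:
      steps:
        # Requests whose x-user-id header is exactly "100" go to the canary version ...
        - matches:
            - headers:
                - type: Exact
                  name: x-user-id
                  value: '100'
          # ... and are tagged with x-mse-tag: gray so that downstream calls stay in the gray swim lane.
          requestHeaderModifier:
            set:
              - name: x-mse-tag
                value: gray
      trafficRoutings:
        - service: spring-cloud-a
          ingress:
            name: spring-cloud-a
            classType: mse
      # Assumption: placed under canary; labels the canary instances so that MSE treats them as gray.
      patchCanaryMetadata:
        labels:
          alicloud.service.tag: gray
```

The Rollouts for B and C differ in their names, their `workloadRef`, and the `rollouts.kruise.io/dependency: rollout-a` annotation from step 1.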

Next, let's quickly start a canary release and verification.

1. This release changes Applications A and C, so we edit their Deployment YAML directly, changing the image tag from mse-1.0.0 to mse-2.0.0. This triggers the release of Applications A and C and the MSE end-to-end canary release.

```yaml
# Application A
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spring-cloud-a
spec:
  ...
  template:
    ...
    spec:
      containers:
        # Modify mse-1.0.0 -> mse-2.0.0 to trigger the release of Application A and the end-to-end canary release of MSE.
        - image: registry.cn-hangzhou.aliyuncs.com/mse-demo-hz/spring-cloud-a:mse-2.0.0
          ...
# Application C
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spring-cloud-c
spec:
  ...
  template:
    ...
    spec:
      containers:
        # Modify mse-1.0.0 -> mse-2.0.0 to trigger the release of Application C and the end-to-end canary release of MSE.
        - image: registry.cn-hangzhou.aliyuncs.com/mse-demo-hz/spring-cloud-c:mse-2.0.0
          ...
```

2. After the changes, check the current state of the applications:

```shell
➜ ~ kubectl get deployments
NAME                   READY   UP-TO-DATE   AVAILABLE   AGE
demo-mysql             1/1     1            1           30h
nacos-server           1/1     1            1           46h
spring-cloud-a         2/2     0            2           30h
spring-cloud-a-84gcd   1/1     1            1           86s
spring-cloud-b         2/2     2            2           30h
spring-cloud-c         2/2     0            2           30h
```

Kruise Rollout does not roll the change out to the original Deployments directly (their UP-TO-DATE count stays at 0). Instead, it first creates two canary Deployments, spring-cloud-a-84gcd and spring-cloud-c-qzh9p.

3. When the canary applications are ready, verify normal online traffic and canary traffic separately.

a) Requests that do not match the canary release rules are routed to the baseline environment:

```shell
➜ ~ curl -H "Host: example.com" http://39.98.205.236/a
A[192.168.42.115][config=base] -> B[192.168.42.118] -> C[192.168.42.101]
```

b) Requests that match the canary release rules are routed to the canary release environment:

```shell
➜ ~ curl -H "Host: example.com" http://39.98.205.236/a -H "x-user-id: 100"
Agray[192.168.42.119][config=base] -> B[192.168.42.118] -> Cgray[192.168.42.116]
```

Application B has no canary version, so the canary request passes through the baseline B and is then routed to the canary version of C, as the swim-lane model describes.

4. If unexpected behavior occurs, how can we roll back quickly?

Let's roll back Application C. Simply restore the Deployment of Application C to its original configuration:

```yaml
# Application C
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spring-cloud-c
spec:
  ...
  template:
    ...
    spec:
      containers:
        # Modify mse-2.0.0 -> mse-1.0.0 to roll back Application C.
        - image: registry.cn-hangzhou.aliyuncs.com/mse-demo-hz/spring-cloud-c:mse-1.0.0
          ...
```

After the modification, the canary Deployment of Application C no longer exists:

```shell
➜ ~ kubectl get deployments
NAME                   READY   UP-TO-DATE   AVAILABLE   AGE
demo-mysql             1/1     1            1           30h
nacos-server           1/1     1            1           46h
spring-cloud-a         2/2     0            2           30h
spring-cloud-a-84gcd   1/1     1            1           186s
spring-cloud-b         2/2     2            2           30h
spring-cloud-c         2/2     0            2           30h
```

5. How do we complete the release? Run `kubectl-kruise rollout approve rollouts/rollout-a` to complete the application canary release.

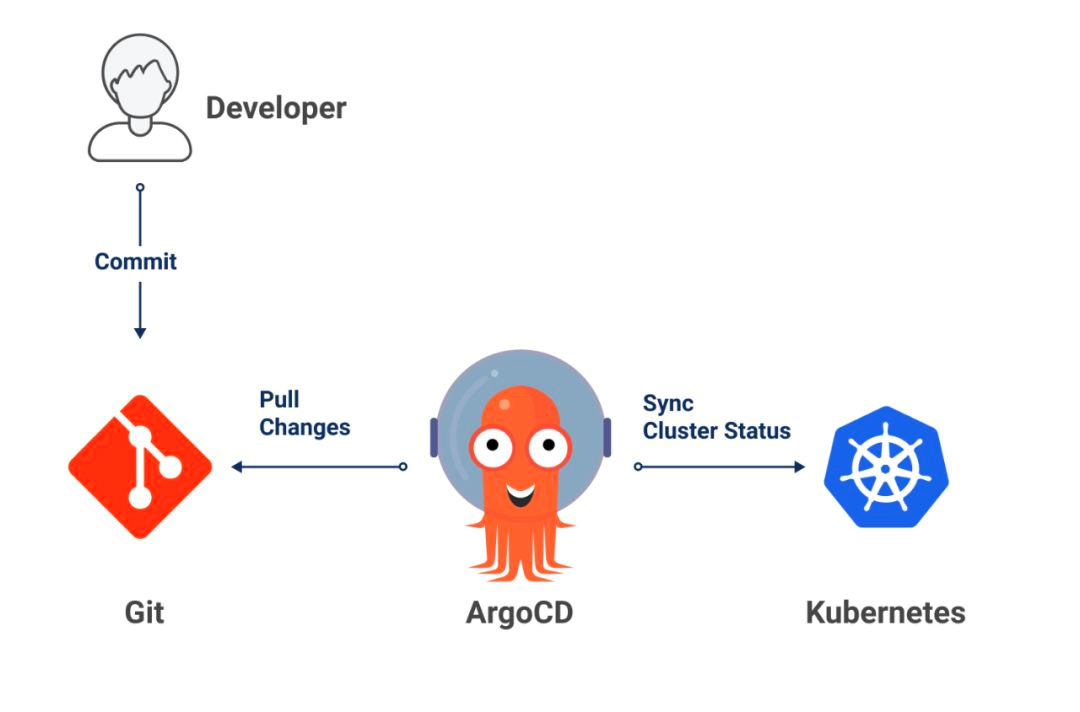

GitOps is an approach to continuous delivery whose core idea is to store the declarative definitions of an application system's infrastructure and applications in a Git repository. With Git at the core of the delivery pipeline, every developer can submit pull requests and use Git to speed up and simplify application deployment and O&M on Kubernetes. Using familiar tools such as Git, developers can focus on building new features instead of O&M tasks such as application installation, configuration, and migration.

Suppose that, as developers, we want the application definition (a Deployment written in YAML) that we commit to be released automatically to the canary environment first. Once the traffic has been fully verified and the new version shows no problems, we release it fully. How can this be done? The following demonstrates the end-to-end canary release capability achieved by integrating ArgoCD.

ArgoCD is a declarative GitOps continuous delivery tool for Kubernetes. Install ArgoCD by following the official ArgoCD documentation.

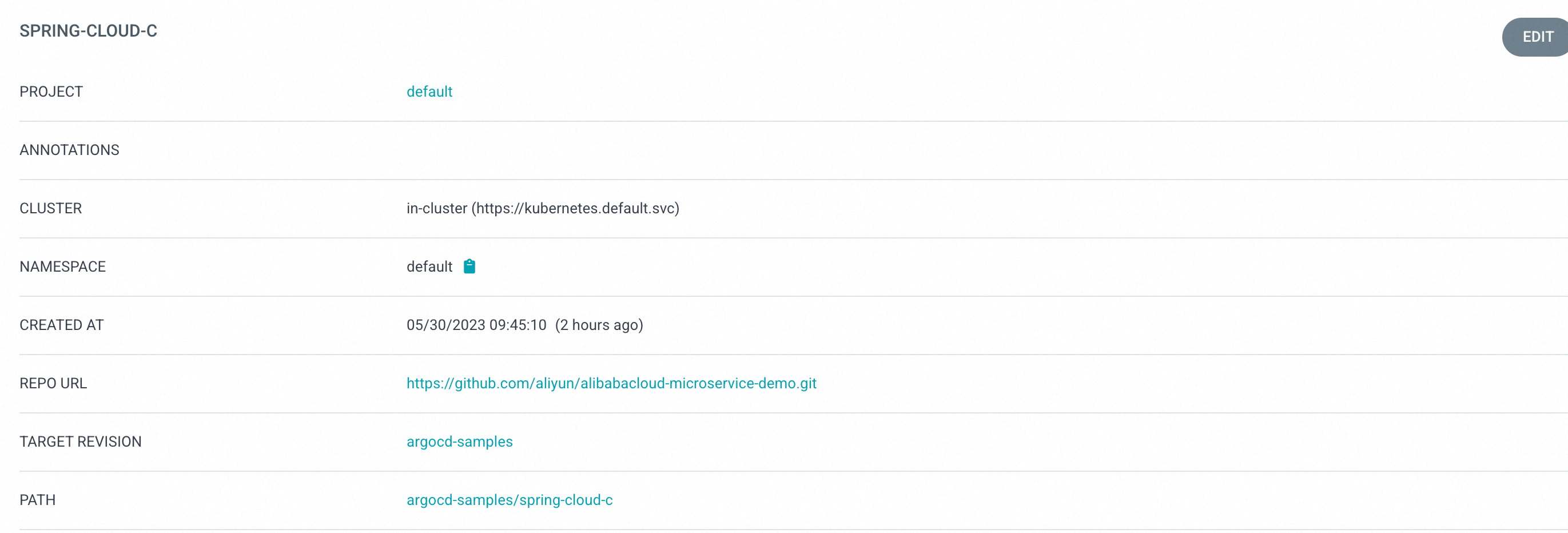

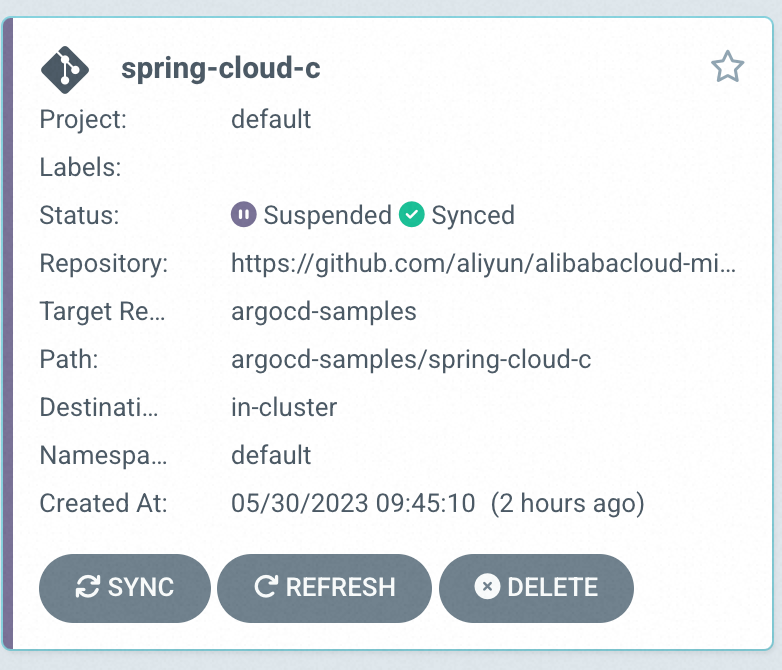

1. Create a spring-cloud-c application in ArgoCD.

2. On the ArgoCD Management page, click NEW APP and configure the following parameters.

a) In the GENERAL section, set Application to spring-cloud-c and Project to default.

b) In the SOURCE section, set Repository URL to https://github.com/aliyun/alibabacloud-microservice-demo.git, Revision to argocd-samples, and Path to argocd-samples/spring-cloud-c.

c) In the DESTINATION section, set the Cluster URL to https://kubernetes.default.svc and Namespace to default.

d) After the configuration, click CREATE in the upper part of the page.

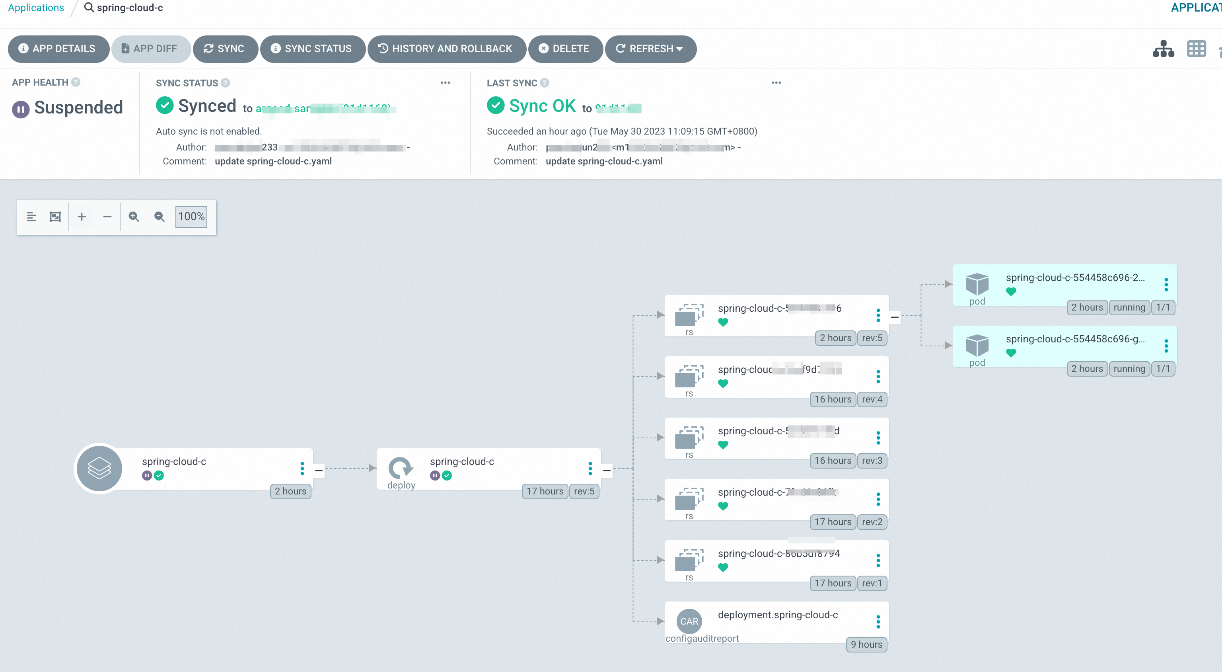

3. After the application is created, you can view the spring-cloud-c application status on the ArgoCD Management page.

4. Click the corresponding application to view the resource deployment status.

5. Modify spring-cloud-c.yaml in the argocd-samples/spring-cloud-c directory and commit the change to Git.

6. The canary version of the spring-cloud-c application is deployed automatically:

```shell
✗ kubectl get pods -o wide | grep spring-cloud
NAME                                   READY   STATUS    RESTARTS   AGE    IP              NODE                      NOMINATED NODE   READINESS GATES
spring-cloud-a-69d577cc9-g7sbc         1/1     Running   0          16h    192.168.0.191   us-west-1.192.168.0.187   <none>           <none>
spring-cloud-b-7bc9db878f-n7pzp        1/1     Running   0          16h    192.168.0.193   us-west-1.192.168.0.189   <none>           <none>
spring-cloud-c-554458c696-2vp74        1/1     Running   0          137m   192.168.0.200   us-west-1.192.168.0.145   <none>           <none>
spring-cloud-c-554458c696-g8vbg        1/1     Running   0          136m   192.168.0.192   us-west-1.192.168.0.188   <none>           <none>
spring-cloud-c-md42b-74858b7c4-qzdxz   1/1     Running   0          53m    192.168.0.165   us-west-1.192.168.0.147   <none>           <none>
```

The architecture diagram is as follows:

7. When the canary application is ready, verify normal online traffic and canary traffic separately.

a) Requests that do not match the canary release rules are routed to the baseline environment:

```shell
➜ ~ curl -H "Host: example.com" http://39.98.205.236/a
A[192.168.0.191][config=base] -> B[192.168.0.193] -> C[192.168.0.200]
```

b) Requests that match the canary release rules are routed to the canary release environment:

```shell
➜ ~ curl -H "Host: example.com" http://39.98.205.236/a -H "x-user-id: 100"
A[192.168.0.191][config=base] -> B[192.168.0.193] -> Cgray[192.168.0.165]
```

8. To roll back, simply revert the last commit to spring-cloud-c.yaml in argocd-samples/spring-cloud-c through Git.

9. Complete the application canary release by running `kubectl-kruise rollout approve rollouts/rollout-c`.
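For teams that prefer to keep the ArgoCD configuration itself in Git, the application created through the UI in steps 1 and 2 could also be declared as an ArgoCD `Application` resource. The sketch below mirrors the values from steps a) to c); it assumes that ArgoCD runs in the `argocd` namespace and that automatic sync is enabled, which the steps above do not state but which would let a Git commit be applied without a manual SYNC.

```yaml
# Sketch: declarative equivalent of the spring-cloud-c application created in the ArgoCD UI.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: spring-cloud-c
  namespace: argocd          # assumption: the namespace ArgoCD is installed in
spec:
  project: default
  source:
    repoURL: https://github.com/aliyun/alibabacloud-microservice-demo.git
    targetRevision: argocd-samples
    path: argocd-samples/spring-cloud-c
  destination:
    server: https://kubernetes.default.svc
    namespace: default
  syncPolicy:
    automated: {}            # assumption: apply new Git commits without a manual sync
```

Applying this manifest to the cluster where ArgoCD runs should produce the same application as the UI flow, while keeping its definition under version control.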

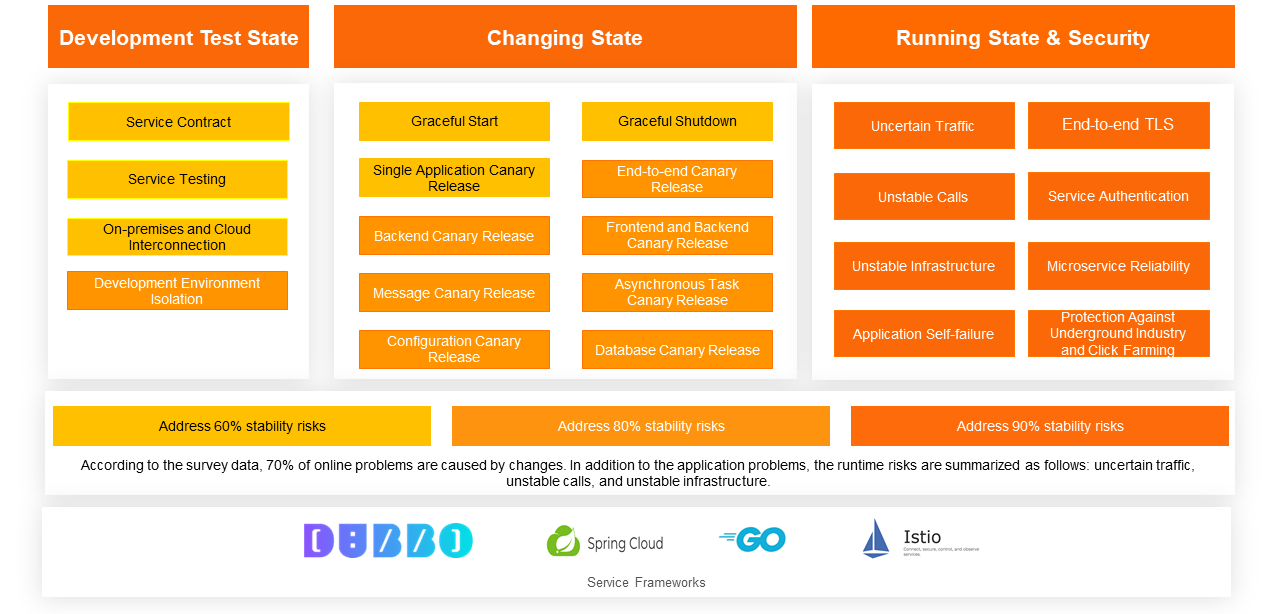

Kruise Rollout is the OpenKruise community's exploration of progressive delivery. Through collaboration with MSE, it has implemented canary release scenarios for microservices in the cloud-native domain. Going forward, Kruise Rollout will keep improving its scalability, including a Lua-script-based, extensible traffic scheduling solution that supports a wider range of community gateways and architectures, such as Istio and APISIX.

In a microservice governance architecture, end-to-end canary release provides traffic swim lanes, which makes quick verification during testing and release much easier. Precise traffic routing rules minimize the blast radius and help DevOps teams improve online stability.

The end-to-end canary capability of MSE continues to expand and iterate with the deepening of customer scenarios. In addition to ensuring the stability of the release state through MSE's end-to-end canary capability, MSE can also address risks of instability in the running state, such as traffic, dependencies, and infrastructure. The MSE microservice engine is dedicated to helping enterprises build always-on applications. We believe that only products continuously refined through customer scenarios will become increasingly durable.

If you find Higress helpful, please give it a star!
