By Mengyuan Pan

Kruise Rollout [1] is an open-source progressive delivery framework offered by the OpenKruise community. It enables canary releases, blue-green deployments, and A/B testing. With Kruise Rollout, you can control the traffic and pods for canary deployments, automate the release process in batches based on Prometheus metrics, and seamlessly integrate with various workloads such as Deployments, CloneSets, and DaemonSets.

Kruise Rollout now provides an extensible traffic scheduling capability for custom gateway resources, so that it can better support traffic scheduling in progressive delivery. This article introduces the solution proposed by Kruise Rollout.

Progressive delivery is a deployment and release strategy designed to gradually introduce new versions or features into the production environment. This approach mitigates risks and ensures system stability. Some common forms of progressive delivery include:

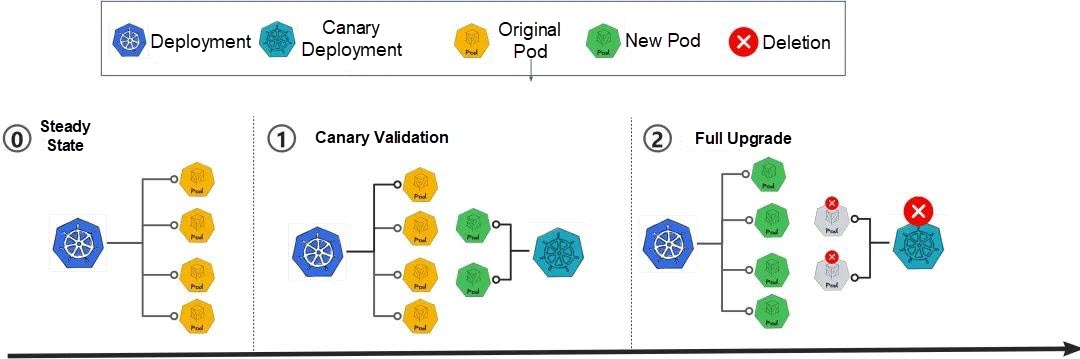

• Canary release: A canary deployment is created for verification when a new version is released. Once the deployment passes the verification, the system upgrades all workloads and removes the canary deployment.

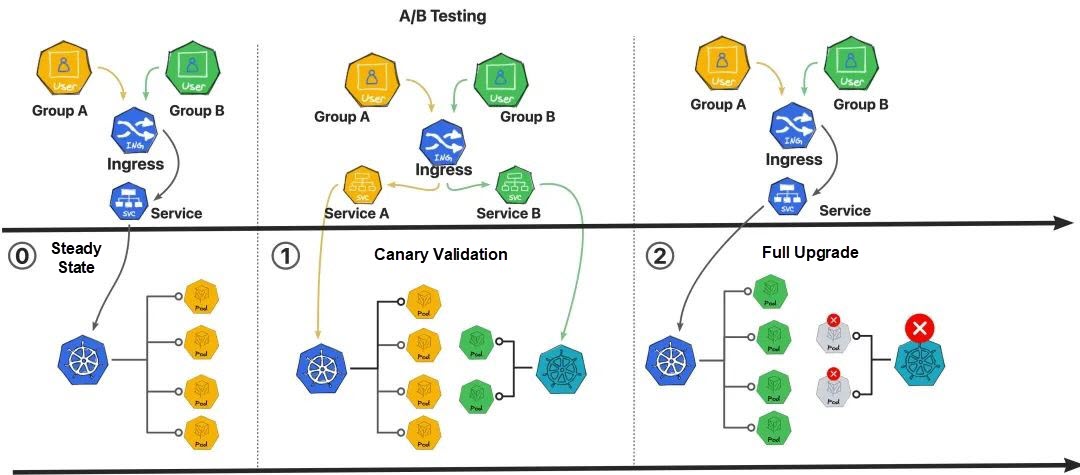

• A/B testing: This strategy divides user traffic into two distinct paths, A and B, based on predefined rules. Each path is served by pods of a different version, which makes it easier to observe and compare the behavior of the old and new versions.

Canary releases, A/B testing, and blue-green releases are all strategies for progressively testing and evaluating new features or changes. By choosing the deployment and testing strategy that fits the requirements and scenario, and by using techniques such as traffic-based canary routing, you can release and test new versions or features gradually.
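The difference between weight-based canary traffic and rule-based A/B traffic can be illustrated with a small routing sketch. Everything in it is hypothetical and exists only for this comparison: the function `pick_version`, the policy fields `canary_weight` and `header_rules`, and the per-request `bucket` number are not part of Kruise Rollout or of any gateway.

```lua
-- Hypothetical router used only to contrast the two strategies; none of these
-- names come from Kruise Rollout.
local function pick_version(request, policy)
  -- A/B testing: an explicit rule (here, an exact header match) selects the path.
  for name, value in pairs(policy.header_rules or {}) do
    if request.headers[name] == value then
      return "new"
    end
  end
  -- Canary release: a fixed percentage of the remaining traffic goes to the new version.
  -- `bucket` is assumed to be a per-request number in [0, 100), e.g. derived from a hash.
  if request.bucket < (policy.canary_weight or 0) then
    return "new"
  end
  return "old"
end

local canary_policy = { canary_weight = 20 }
local ab_policy = { header_rules = { version = "canary" } }

print(pick_version({ headers = {}, bucket = 5 }, canary_policy))
print(pick_version({ headers = {}, bucket = 57 }, canary_policy))
print(pick_version({ headers = { version = "canary" }, bucket = 57 }, ab_policy))
```

In a real deployment this decision is made by the gateway, not by application code; the sketch only shows which input (a weight or a rule) drives each strategy.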

Kruise Rollout has already provided support for the Gateway API, so why is it necessary to also support gateway resources from different vendors? Before addressing this question, let's briefly introduce the Gateway API.

Currently, different vendors have their own gateway resources and propose their own standards. To establish a unified gateway resource standard and build standardized APIs that are independent of vendors, Kubernetes introduced the Gateway API. Although the Gateway API is still in its development stage, many projects have expressed support or planned to support it. These projects include:

• Istio, one of the most popular service mesh projects, plans to introduce experimental Gateway API support in Istio 1.9. Users can configure Istio's Envoy proxy using the Gateway and HTTPRoute resources.

• Apache APISIX, a dynamic, real-time, high-performance API Gateway, currently supports the v1beta1 version of the Gateway API specification for its Apache APISIX Ingress Controller.

• Kong, an open-source API Gateway built for hybrid and multi-cloud environments, supports the Gateway API in both Kong Kubernetes Ingress Controller (KIC) and Kong Gateway Operator.

However, the Gateway API does not yet cover all the functions provided by vendors' gateway resources, and many users still rely on those vendor resources, so supporting the Gateway API alone is not sufficient. As the Gateway API's capabilities keep expanding, it is likely to become the recommended approach in the future. For now, although Kruise Rollout already supports the Gateway API, it is still important to address how to support the various gateway resources from existing vendors.

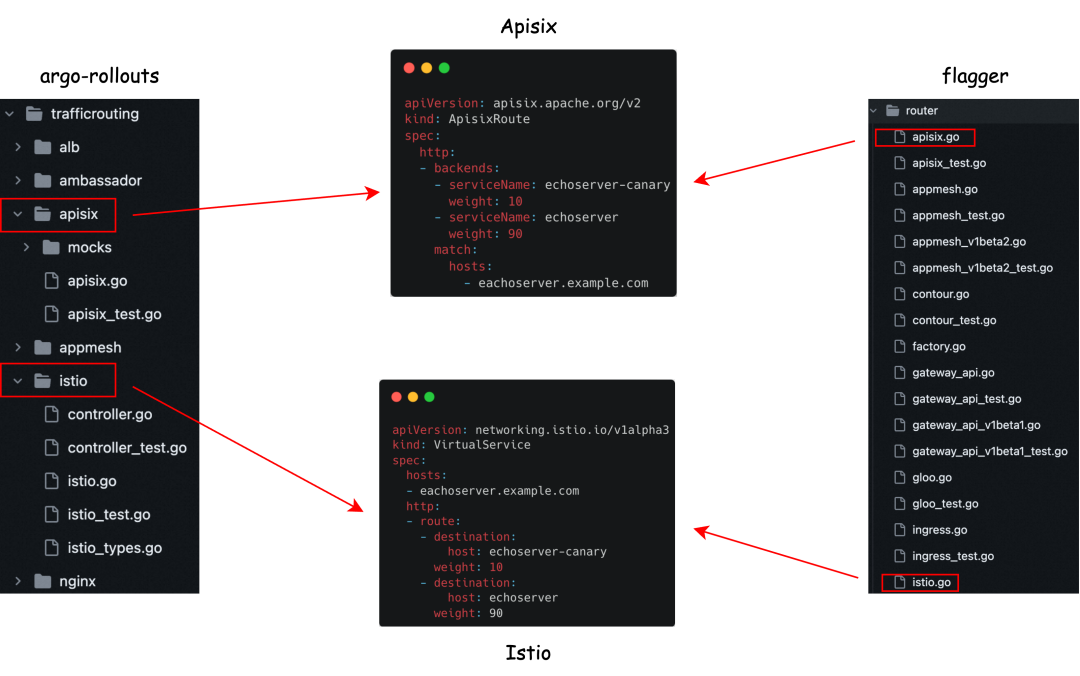

The community already offers many widely used vendor gateway resources, such as those of Istio, Kong, and APISIX. However, as mentioned earlier, the configuration of these resources is not standardized. As a result, no single, common piece of code can process all of them, which creates inconvenience and challenges for developers.

Argo Rollouts and Flagger Compatibility Scheme

To be compatible with more community gateway resources, existing projects such as Flagger and Argo Rollouts provide a dedicated code implementation for each gateway resource. This approach is relatively simple to implement, but it has several problems:

• With a large number of community gateway resources, implementing each one takes a lot of effort.

• Every new implementation requires a new release, and the result is hard to customize.

• In some environments, users rely on customized gateway resources, which are difficult to adapt.

• Each resource has different configuration rules, so configuration is complex.

• Each time a new gateway resource is added, a new interface must be implemented for it, which is difficult to maintain.

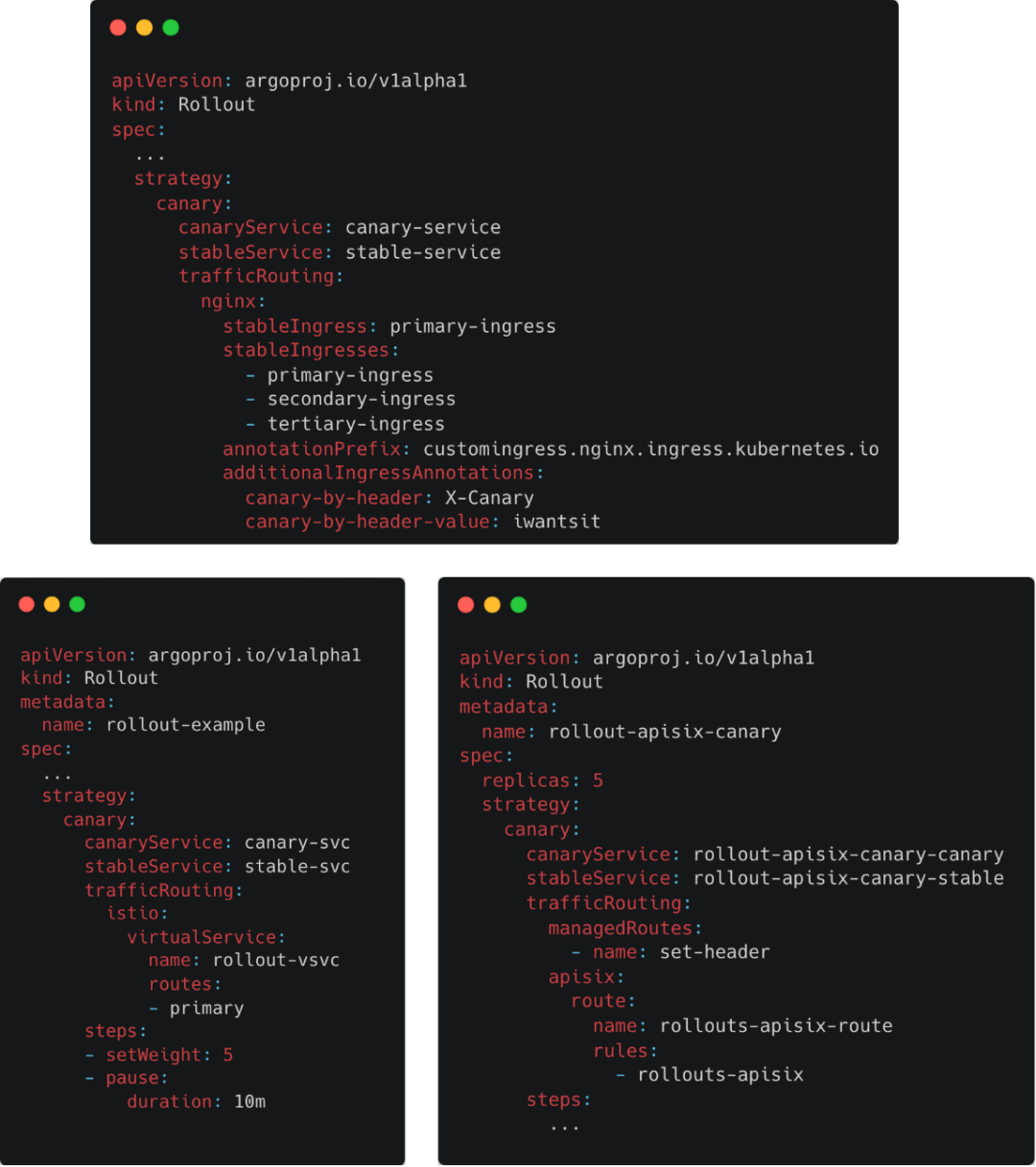

Different Resource Configurations of Argo Rollouts

Therefore, we need an implementation that supports user customization and can be plugged in and out flexibly, so that it adapts to the various gateway resources customized by the community and by users, meets their different needs, and improves the compatibility and extensibility of Kruise Rollout.

To this end, we propose an extensible traffic scheduling scheme for gateway resources based on Lua scripts.

Kruise Rollout uses a customized gateway resource scheme based on Lua scripts. In this scheme, Lua scripts are invoked to retrieve and update the desired operational status of resources based on the release policy and the original status of gateway resources. The status includes spec, labels, and annotations. This allows users to easily adapt and integrate different types of gateway resources without the need to modify existing code and configurations.

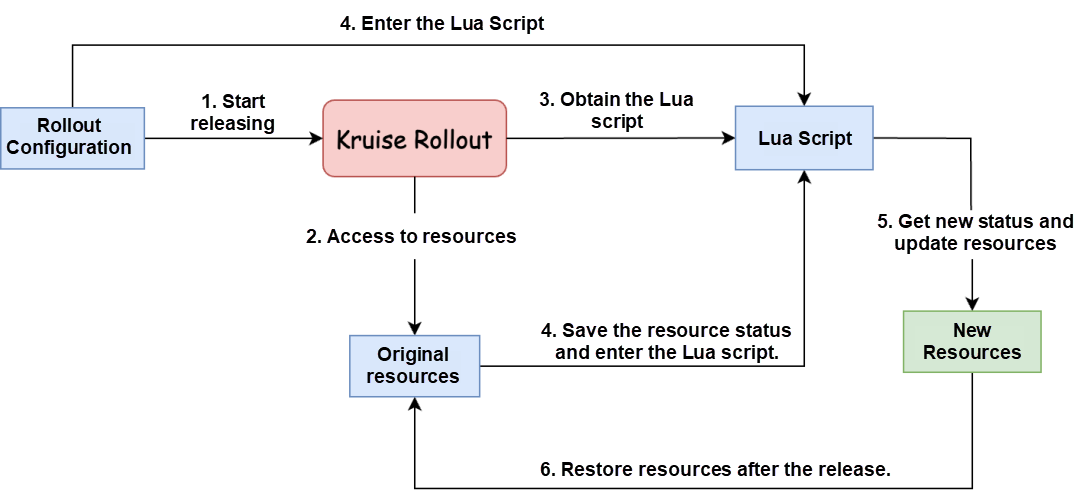

The diagram above illustrates how gateway resources are handled under this scheme.

By using Kruise Rollout, users can:

• Customize Lua scripts for processing gateway resources to freely implement resource processing logic and support a wider range of resources.

• Utilize a standardized set of Rollout configuration templates to configure different resources, reducing configuration complexity and facilitating user configuration.

Additionally, the approach adopted by Kruise Rollout requires only five new interfaces to support all kinds of gateway resources. In comparison, schemes like Argo Rollouts provide separate interfaces for each vendor's gateway resources: Argo Rollouts adds 14 new interfaces for Istio and 4 for APISIX. As the number of supported gateway resources increases, the number of interfaces in these schemes keeps growing. Kruise Rollout, in contrast, needs no new interfaces for a new gateway resource, which makes it leaner and easier to maintain. Furthermore, compared to developing Gateway APIs, writing Lua scripts to adapt gateway resources can significantly reduce the workload for developers.

The example below shows how to process an Istio DestinationRule using a Lua script.

1. First, define the rollout configuration file:

    apiVersion: rollouts.kruise.io/v1alpha1
    kind: Rollout
    ...
    spec:
      ...
      trafficRoutings:
      - service: mocka
        createCanaryService: false # Use the original service instead of creating a new canary service.
        networkRefs: # The gateway resources to be controlled.
        - apiVersion: networking.istio.io/v1alpha3
          kind: DestinationRule
          name: ds-demo
      patchPodTemplateMetadata:
        labels:
          version: canary # Label the new pod.

2. The Lua script for processing Istio DestinationRule:

    local spec = obj.data.spec -- Get the spec of the resource; obj.data is the state information of the resource.
    local canary = {} -- Initialize a canary routing rule that points to the new version.
    canary.labels = {} -- Initialize the labels of the canary routing rule.
    canary.name = "canary" -- Define the canary routing rule name.
    -- Loop over the pod labels of the new version defined in the rollout configuration.
    for k, v in pairs(obj.patchPodMetadata.labels) do
      canary.labels[k] = v -- Add pod labels to the canary rule.
    end
    table.insert(spec.subsets, canary) -- Insert the canary rule into the spec.subsets of the resource.
    return obj.data -- Return the resource status.

3. The processed DestinationRule:

    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    spec:
      ...
      subsets:
      - labels:            # -+
          version: canary  #  |- Newly inserted rule, added by the Lua script.
        name: canary       # -+
      - labels:
          version: base
        name: version-base

Next, we will introduce a specific case of using our proposed scheme to support Istio.
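To try the DestinationRule script from the example above outside a cluster, it can be wrapped in a function and fed a mock object. This is only a sketch: the helper names `new_mock_obj` and `add_canary_subset` are hypothetical, and the mock contains just the two fields the script reads (`obj.data.spec.subsets` and `obj.patchPodMetadata.labels`), not the full object that Kruise Rollout passes in.

```lua
-- Hypothetical stand-in for the object handed to the script.
-- Only the fields used by the DestinationRule example are mocked.
local function new_mock_obj()
  return {
    data = {
      spec = {
        subsets = {
          { name = "version-base", labels = { version = "base" } },
        },
      },
    },
    patchPodMetadata = { labels = { version = "canary" } },
  }
end

-- The script body from the example, wrapped in a function for local testing.
local function add_canary_subset(obj)
  local spec = obj.data.spec
  local canary = { name = "canary", labels = {} }
  for k, v in pairs(obj.patchPodMetadata.labels) do
    canary.labels[k] = v
  end
  table.insert(spec.subsets, canary)
  return obj.data
end

local result = add_canary_subset(new_mock_obj())
for i, subset in ipairs(result.spec.subsets) do
  print(i, subset.name, subset.labels.version)
end
```

Note that `table.insert` without a position argument appends, so in this mock the canary subset ends up after the existing one.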

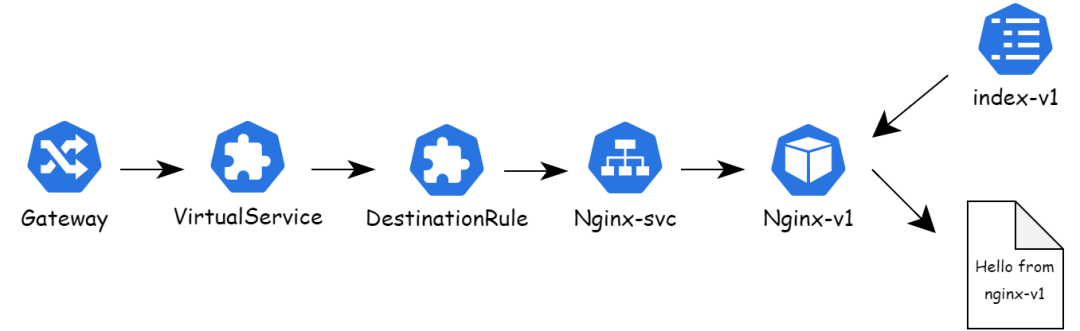

1. First, deploy the services shown in the following figure. The service consists of the following components:

o Use the Ingress Gateway as the gateway for external traffic.

o Use VirtualService and DestinationRule to schedule traffic to an nginx pod.

o Use ConfigMap as the home page for nginx pods.

The deployment of the nginx service is as follows:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
            version: base
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
            volumeMounts:
            - name: html-volume
              mountPath: /usr/share/nginx/html
          volumes:
          - name: html-volume
            configMap:
              name: nginx-configmap-base # Mount the ConfigMap as the index page.

2. Create a rollout resource and configure release rules. The rollout is released in two batches:

o The first batch forwards 20% of the traffic to the newly released pods.

o The second batch forwards traffic with header version = canary to pods in the new version.

    apiVersion: rollouts.kruise.io/v1alpha1
    kind: Rollout
    metadata:
      name: rollouts-demo
      annotations:
        rollouts.kruise.io/rolling-style: canary
    spec:
      disabled: false
      objectRef:
        workloadRef:
          apiVersion: apps/v1
          kind: Deployment
          name: nginx-deployment
      strategy:
        canary:
          steps:
          - weight: 20 # The first batch forwards 20% of the traffic to pods in the new version.
          - replicas: 1 # The second batch forwards traffic with header version=canary to pods in the new version.
            matches:
            - headers:
              - type: Exact
                name: version
                value: canary
          trafficRoutings:
          - service: nginx-service # The service used by pods in the previous version.
            createCanaryService: false # Do not create a new canary service. The new and old pods share the same service.
            networkRefs: # The gateway resources to be modified.
            - apiVersion: networking.istio.io/v1alpha3
              kind: VirtualService
              name: nginx-vs
            - apiVersion: networking.istio.io/v1beta1
              kind: DestinationRule
              name: nginx-dr
          patchPodTemplateMetadata: # Add the version=canary label to pods in the new version.
            labels:
              version: canary

3. Modify the ConfigMap mounted to the NGINX service deployment to start the canary release.

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deployment

spec:

...

volumes:

- name: html-volume

configMap:

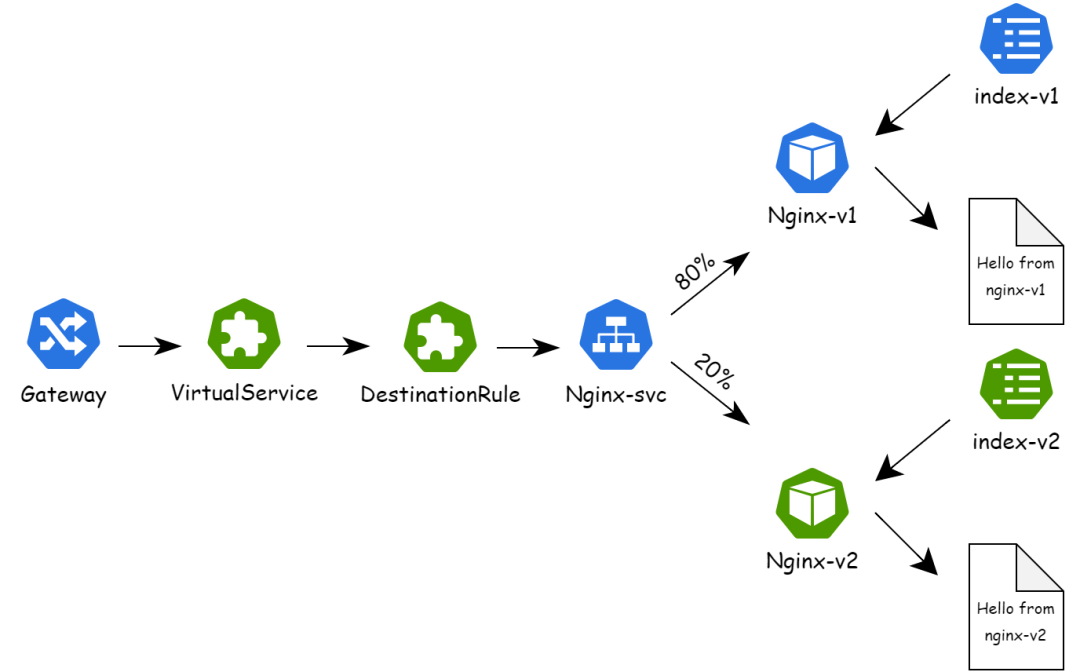

name: nginx-configmap-canary # Mount the new ConfigMap as an index.4. Start to release the first batch. Kruise Rollout automatically calls the defined Lua script to modify VirtualService and DestinationRule resources for traffic scheduling, and forwards 20% of the traffic to the pods in the new version. The following figure shows the traffic of the entire service:

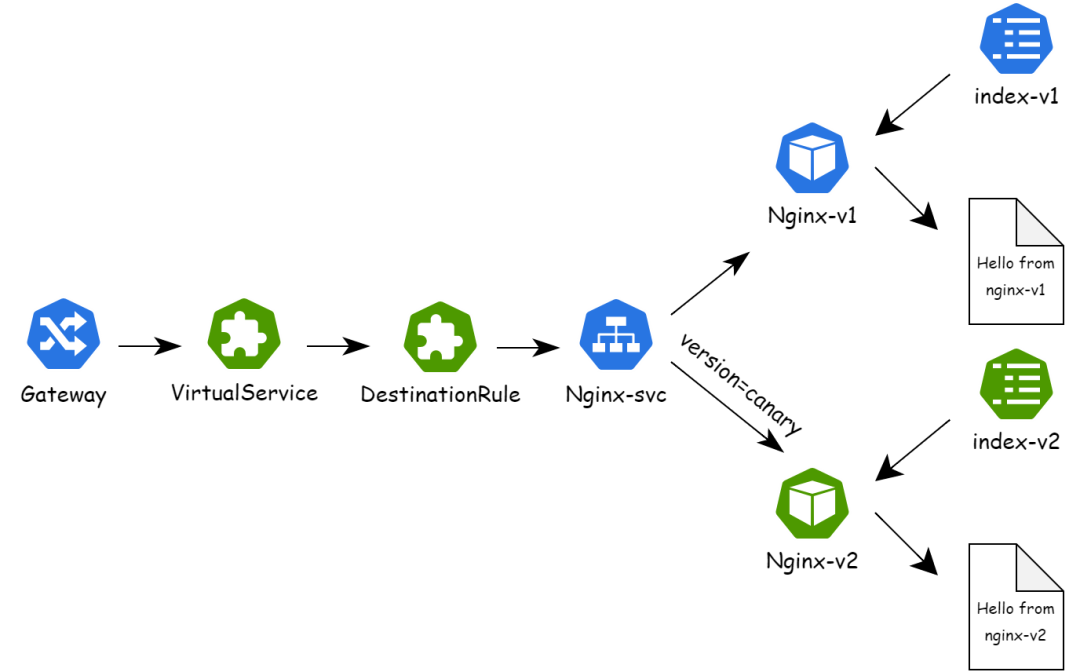

5. Run the kubectl-kruise rollout approve rollout/rollouts-demo command to release the second batch. Kruise Rollout automatically calls the defined Lua script to modify VirtualService and DestinationRule resources for traffic scheduling, and forwards the traffic with version=canary header to the pods in the new version. The following figure shows the traffic of the entire service:

6. Run the kubectl-kruise rollout approve rollout/rollouts-demo command. After the release ends, VirtualService and DestinationRule resources are restored to the pre-release state. All traffic is routed to pods in the new version.
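The VirtualService script behind steps 4 and 5 is not listed here. As a rough sketch of what such a script could look like, the function below rewrites a mock VirtualService for a weight-based step and for a header-based step. The fields `canaryWeight`, `stableWeight`, and `matches` on `obj`, the `canary` subset name, and the `version-base` subset in the mock are assumptions made for illustration; the scripts released with Kruise Rollout may be structured differently.

```lua
-- Sketch only: the field names on `obj` (canaryWeight, stableWeight, matches)
-- are assumptions for this example, not a confirmed Kruise Rollout contract.
local CANARY_SUBSET = "canary"

-- Convert Rollout-style header matches into Istio-style match entries.
local function to_istio_matches(matches)
  local out = {}
  for _, m in ipairs(matches) do
    local headers = {}
    for _, h in ipairs(m.headers or {}) do
      if h.type == "RegularExpression" then
        headers[h.name] = { regex = h.value }
      else
        headers[h.name] = { exact = h.value }
      end
    end
    table.insert(out, { headers = headers })
  end
  return out
end

local function route_virtual_service(obj)
  local spec = obj.data.spec
  if obj.matches and #obj.matches > 0 then
    -- Header-based step: prepend a route so matching requests hit the canary subset first.
    local base = spec.http[1].route[1].destination
    table.insert(spec.http, 1, {
      match = to_istio_matches(obj.matches),
      route = { { destination = { host = base.host, subset = CANARY_SUBSET } } },
    })
  else
    -- Weight-based step: split every existing route between stable and canary subsets.
    for _, http in ipairs(spec.http) do
      local stable = http.route[1].destination
      http.route = {
        { destination = stable, weight = obj.stableWeight },
        { destination = { host = stable.host, subset = CANARY_SUBSET }, weight = obj.canaryWeight },
      }
    end
  end
  return obj.data
end

-- Mock VirtualService with a single route to the stable subset.
local function new_vs()
  return { spec = { http = { { route = { {
    destination = { host = "nginx-service", subset = "version-base" },
  } } } } } }
end

local step1 = route_virtual_service({ data = new_vs(), canaryWeight = 20, stableWeight = 80 })
local step2 = route_virtual_service({
  data = new_vs(),
  matches = { { headers = { { type = "Exact", name = "version", value = "canary" } } } },
})
print(step1.spec.http[1].route[2].weight)
print(step2.spec.http[1].match[1].headers.version.exact)
```

The header-based route is inserted at the front of the route list on the assumption that routes are evaluated in order, so the more specific match has to come before the default route.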

Kruise Rollout supports two ways of supplying the Lua script that computes the new status of a resource:

• Custom Lua scripts: Lua scripts that are defined by users in the form of a ConfigMap and called in Rollout.

• Released Lua scripts: Community-based and stable Lua scripts that are packaged and released with Kruise Rollout.

By default, Kruise Rollout first checks whether a released Lua script for the resource exists locally. Released scripts are more stable because each one is expected to come with unit test cases that verify it. The format of a test case is shown below. Kruise Rollout uses the Lua script to process the original status of the resource according to the release policy defined in the rollout field, obtains the new status of the resource at each step of the release process, and compares it with the expected status defined in the expected field to verify that the script works as intended.

    rollout:
      # The rollout configuration.
    original:
      # The original status of the resource.
    expected:
      # The expected status of the resource during the release process.

If no released Lua script exists for a resource, you can still have Kruise Rollout process it by providing the Lua script in a ConfigMap.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kruise-rollout-configuration
      namespace: kruise-rollout
    data:
      # The key is named as lua.traffic.routing.Kind.CRDGroup.
      "lua.traffic.routing.DestinationRule.networking.istio.io": |
        -- Define a Lua script.
        local spec = obj.data.spec
        local canary = {}
        canary.labels = {}
        canary.name = "canary"
        for k, v in pairs(obj.patchPodMetadata.labels) do
          canary.labels[k] = v
        end
        table.insert(spec.subsets, canary)
        return obj.data

For more information about how to configure the Lua script, see Kruise Rollout official website [2].
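The ConfigMap key convention and the test case idea described above can be combined in one small sketch: look up a script by Kind and CRD group, run it against an original state, and compare the result with an expected state. The helpers `script_key`, `deep_equal`, and `run_case` and the in-memory `configmap_data` table are hypothetical; this is not how Kruise Rollout itself loads or tests scripts, and the lookup of released scripts is not modeled.

```lua
-- Sketch only: helper names are hypothetical. The key format follows the
-- ConfigMap comment above (lua.traffic.routing.<Kind>.<CRDGroup>).
local function script_key(kind, group)
  return "lua.traffic.routing." .. kind .. "." .. group
end

-- Stand-in for the `data` section of the kruise-rollout-configuration ConfigMap.
local configmap_data = {
  [script_key("DestinationRule", "networking.istio.io")] = [[
    local spec = obj.data.spec
    local canary = { name = "canary", labels = {} }
    for k, v in pairs(obj.patchPodMetadata.labels) do
      canary.labels[k] = v
    end
    table.insert(spec.subsets, canary)
    return obj.data
  ]],
}

-- Recursively compare two values (tables by content, everything else by ==).
local function deep_equal(a, b)
  if type(a) ~= "table" or type(b) ~= "table" then
    return a == b
  end
  for k, v in pairs(a) do
    if not deep_equal(v, b[k]) then return false end
  end
  for k in pairs(b) do
    if a[k] == nil then return false end
  end
  return true
end

-- Run the script registered for kind/group against `input` and compare with `expected`.
local function run_case(kind, group, input, expected)
  local source = configmap_data[script_key(kind, group)]
  if not source then
    return false, "no script for " .. script_key(kind, group)
  end
  local compile = loadstring or load -- Lua 5.1 uses loadstring, 5.2+ uses load
  local chunk = assert(compile(source))
  obj = input -- the script reads the global `obj`
  local got = chunk()
  obj = nil
  return deep_equal(got, expected)
end

local ok = run_case("DestinationRule", "networking.istio.io",
  { -- original state plus the release-policy field the script reads
    data = { spec = { subsets = { { name = "version-base", labels = { version = "base" } } } } },
    patchPodMetadata = { labels = { version = "canary" } },
  },
  { -- expected state after the step
    spec = { subsets = {
      { name = "version-base", labels = { version = "base" } },
      { name = "canary", labels = { version = "canary" } },
    } },
  })
print(ok)
```

Comparing whole tables rather than individual fields keeps a test case sensitive to unintended changes elsewhere in the resource.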

• More gateway protocol support: Kruise Rollout supports multiple types of gateway protocols through Lua script plugins. We will focus on investing more in this area in the future. However, the community maintainers alone are not enough to handle the wide range of protocol types. We hope that more community users will join us to continuously improve this aspect.

• End-to-end canary release support: End-to-end canary release represents a more granular and comprehensive canary release mode. It covers all services of an application, rather than just a single service, allowing for better simulation and testing of new services. Currently, you can configure this feature through community gateway resources such as Istio, but manual configuration often requires significant effort. We will explore this aspect to provide support for end-to-end canary release.

You are welcome to join us through GitHub or Slack and participate in the OpenKruise open source community.

• Slack channel [3]

• OpenKruise GitHub [4]

[1] Kruise Rollout

https://github.com/openkruise/rollouts

[2] Kruise Rollout official website

https://openkruise.io/rollouts/introduction

[3] Slack channel

https://kubernetes.slack.com/?redir=%2Farchives%2Fopenkruise

[4] OpenKruise GitHub

https://github.com/openkruise/kruise
