By Zhejian

To respond to failures efficiently, this article attempts to answer the following questions:

• Why does it take a long time for the service to resume after the database has been restarted and recovered from a crash?

• The service I depend on has undergone a high availability switchover. Why does it keep reporting errors?

• The service I depend on has removed a node. Why does it keep reporting errors?

• Why does a customer's service keep reporting errors after the customer changes configurations or upgrades the specifications of cloud service nodes?

Purpose: To minimize the duration of failures and enable quick recovery.

Let's first reproduce the problem.

Default configuration parameters for the OS:

#sysctl -a |grep -E "tcp_retries|keepalive"

net.ipv4.tcp_keepalive_intvl = 30

net.ipv4.tcp_keepalive_probes = 5

net.ipv4.tcp_keepalive_time = 10

net.ipv4.tcp_retries1 = 3

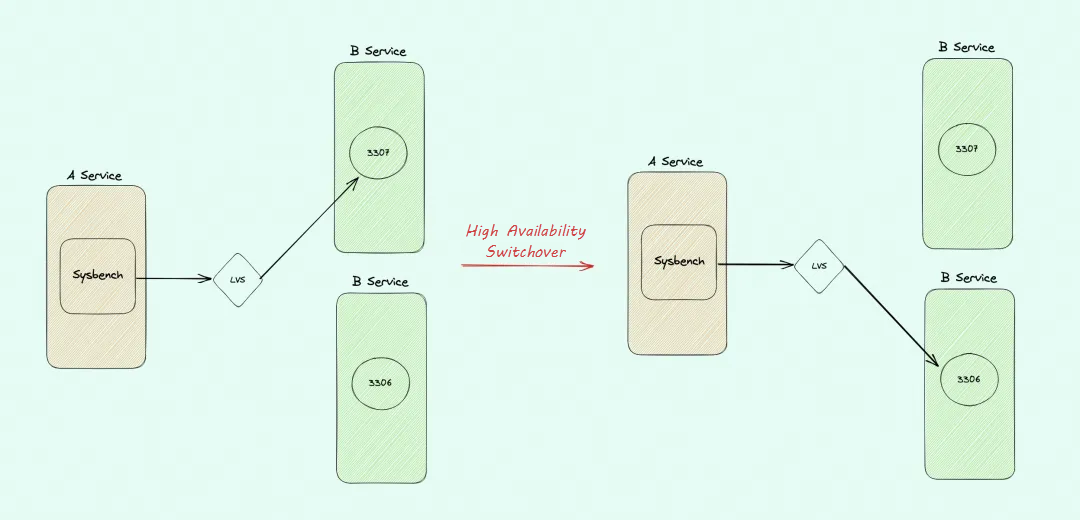

net.ipv4.tcp_retries2 = 15 // Key parameter, which is typically 15 by default (AliOS included)

In the test setup, the external service port of Linux Virtual Server (LVS) is 3001, and the backend 3307 is enabled on LVS. Assume that 3307 is the current Master instance and 3306 is the Slave instance. When 3307 is detected to be abnormal, 3307 is removed from LVS and 3306 is added, completing a high availability switchover.

The status of LVS before the switchover:

#ipvsadm -L --timeout

Timeout (tcp tcpfin udp): 900 120 300

#ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 127.0.0.1:3001 rr
  -> 127.0.0.1:3307 Masq 1 0 0

Sysbench ran a stress test to simulate user access. At 31 seconds, the simulated control system detected that the Master instance, 3307, could not be accessed, so it performed a primary/secondary switchover and promoted the Slave instance, 3306, to be the new Master. At the same time, 3307 was removed from LVS and 3306 was added.

The switch command is as follows:

#cat del3307.sh

ipvsadm -d -t 127.0.0.1:3001 -r 127.0.0.1:3307 ; ipvsadm -a -t 127.0.0.1:3001 -r 127.0.0.1:3306 -m

At this point, as expected, Sysbench showed TPS and QPS dropping to 0:

#/usr/local/bin/sysbench --debug=on --mysql-user='root' --mysql-password='123' --mysql-db='test' --mysql-host='127.0.0.1' --mysql-port='3001' --tables='16' --table-size='10000' --range-size='5' --db-ps-mode='disable' --skip-trx='on' --mysql-ignore-errors='all' --time='11080' --report-interval='1' --histogram='on' --threads=1 oltp_read_write run

sysbench 1.1.0 (using bundled LuaJIT 2.1.0-beta3)

Running the test with following options:

Number of threads: 1

Report intermediate results every 1 second(s)

Debug mode enabled.

Initializing random number generator from current time

Initializing worker threads...

DEBUG: Worker thread (#0) started

DEBUG: Reporting thread started

DEBUG: Worker thread (#0) initialized

Threads started!

[ 1s ] thds: 1 tps: 51.89 qps: 947.00 (r/w/o: 739.44/207.56/0.00) lat (ms,95%): 35.59 err/s 0.00 reconn/s: 0.00

[ 2s ] thds: 1 tps: 60.03 qps: 1084.54 (r/w/o: 841.42/243.12/0.00) lat (ms,95%): 22.28 err/s 0.00 reconn/s: 0.00

…

[ 29s ] thds: 1 tps: 68.00 qps: 1223.01 (r/w/o: 952.00/271.00/0.00) lat (ms,95%): 16.12 err/s 0.00 reconn/s: 0.00

[ 30s ] thds: 1 tps: 66.00 qps: 1188.00 (r/w/o: 924.00/264.00/0.00) lat (ms,95%): 16.71 err/s 0.00 reconn/s: 0.00

[ 31s ] thds: 1 tps: 67.00 qps: 1203.96 (r/w/o: 937.97/265.99/0.00) lat (ms,95%): 17.95 err/s 0.00 reconn/s: 0.00

[ 32s ] thds: 1 tps: 22.99 qps: 416.85 (r/w/o: 321.88/94.96/0.00) lat (ms,95%): 15.55 err/s 0.00 reconn/s: 0.00

[ 33s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 34s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 35s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

Five minutes later, a large number of failure reports flooded in. The customer complained that their service had been down for 10 minutes, with all errors indicating MySQL connection timeouts. The monitoring log clearly showed that the service had still not recovered after 10 minutes:

[ 601s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 602s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 603s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 604s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

At that point, someone suggested restarting the application to restore the system. The application was restarted within 5 seconds, and the service recovered. This strategy successfully prevented the failure from escalating, proving once again that restarting the application is an effective solution.

While the service/Sysbench QPS was at 0, 3307 had already been removed from LVS and replaced with 3306, but no new connection to 3306 had been established, so the service/Sysbench was still using its old connection to 3307:

#ipvsadm -L -n --stats -t 127.0.0.1:3001

Prot LocalAddress:Port Conns InPkts OutPkts InBytes OutBytes
  -> RemoteAddress:Port
TCP 127.0.0.1:3001 2 660294 661999 78202968 184940K
  -> 127.0.0.1:3306 0 0 0 0 0

#ipvsadm -Lcn | head -10

IPVS connection entries
pro expire state source virtual destination
TCP 13:11 ESTABLISHED 127.0.0.1:33864 127.0.0.1:3001 127.0.0.1:3307

#netstat -anto |grep -E "Recv|33864|3001|33077"

Proto Recv-Q Send-Q Local Address Foreign Address State Timer
tcp 0 248 127.0.0.1:33864 127.0.0.1:3001 ESTABLISHED probe (33.48/0/8)
tcp6 0 11 127.0.0.1:3307 127.0.0.1:33864 ESTABLISHED on (49.03/13/0)

After more than 900 seconds and 15 failed retransmission attempts, the OS returned a connection error to the service/Sysbench. This caused the service/Sysbench to release the broken connection and establish a new one, which pointed to the new Master instance, 3306. The service then returned to normal.

[ 957s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

DEBUG: Ignoring error 2013 Lost connection to MySQL server during query,

DEBUG: Reconnecting

DEBUG: Reconnected

[ 958s ] thds: 1 tps: 53.00 qps: 950.97 (r/w/o: 741.98/208.99/0.00) lat (ms,95%): 30.26 err/s 0.00 reconn/s: 1.00

[ 959s ] thds: 1 tps: 64.00 qps: 1154.03 (r/w/o: 896.02/258.01/0.00) lat (ms,95%): 22.69 err/s 0.00 reconn/s: 0.00

[ 960s ] thds: 1 tps: 66.00 qps: 1184.93 (r/w/o: 923.94/260.98/0.00) lat (ms,95%): 25.28 err/s 0.00 reconn/s: 0.00

This reproduces a problem often encountered in failure scenarios: the service takes more than 900 seconds to recover. This problem is known as the traffic blackhole of long-lived TCP connections.

We set the parameter net.ipv4.tcp_retries2 to 5 and repeated the experiment. We found that the service/Sysbench could be recovered in only 20 seconds. In other words, the traffic blackhole issue was reduced from over 900 seconds to 20 seconds:

[ 62s ] thds: 1 tps: 66.00 qps: 1191.00 (r/w/o: 924.00/267.00/0.00) lat (ms,95%): 17.63 err/s 0.00 reconn/s: 0.00

[ 63s ] thds: 1 tps: 63.00 qps: 1123.01 (r/w/o: 874.00/249.00/0.00) lat (ms,95%): 17.63 err/s 0.00 reconn/s: 0.00

[ 64s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 65s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 66s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 67s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 68s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 69s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 70s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 71s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 72s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 73s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 74s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 75s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 76s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 77s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 78s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 79s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 80s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 81s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

[ 82s ] thds: 1 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,95%): 0.00 err/s 0.00 reconn/s: 0.00

DEBUG: Ignoring error 2013 Lost connection to MySQL server during query,

DEBUG: Reconnecting

DEBUG: Reconnected

[ 83s ] thds: 1 tps: 26.00 qps: 457.01 (r/w/o: 357.01/100.00/0.00) lat (ms,95%): 16.41 err/s 0.00 reconn/s: 1.00

[ 84s ] thds: 1 tps: 60.00 qps: 1086.94 (r/w/o: 846.96/239.99/0.00) lat (ms,95%): 26.68 err/s 0.00 reconn/s: 0.00

[ 85s ] thds: 1 tps: 63.00 qps: 1134.02 (r/w/o: 882.01/252.00/0.00) lat (ms,95%): 23.10 err/s 0.00 reconn/s: 0.00

Reproduction of this problem with NGINX: https://asciinema.org/a/649890

This 3-minute screen recording demonstrates an LVS HA switchover with two NGINX instances behind LVS. After the first NGINX (Master) is disconnected, the second NGINX (Slave) is added to LVS and the first NGINX (Master) is removed from LVS, with the aim of quickly restoring the service. However, none of the existing long-lived connections recover, which results in a traffic blackhole.

If a long-lived TCP connection receives no acknowledgment after sending a packet, by default it retransmits 15 times (net.ipv4.tcp_retries2 = 15; the actual duration depends on the RTO), which adds up to about 924 seconds. This is why services often take about 15 minutes to recover. The problem affects all long-lived TCP connections (hardly any service still uses short-lived connections), and at its core it has nothing to do with LVS or Kubernetes Services.

LVS is used to reproduce the problem here only for convenience of demonstration.

At its core, the problem is this: if the server suddenly disappears (because of a crash or network disconnection, so that it cannot send an RST in time) while the client is sending data to it, the client keeps retransmitting according to TCP's retransmission logic. Without any acknowledgment from the server, it retries about 15 times over about 900 seconds. So LVS does not cause this problem; rather, in certain scenarios LVS can handle it more gracefully. For example, when a RealServer is deleted, LVS can easily detect this and reset all long-lived connections to it.

Why is this problem more evident in Kubernetes? Kubernetes treats services as unreliable, and Pods can be killed at any time (cutting off their network). It would be fine if the process were killed first (for example with kill -9, so that the kernel closes its connections and peers receive a FIN or RST) or if the service were shut down to close its connections before the Pod is killed. In most cases, however, no such steps are taken: Pods are forcibly killed or directly isolated, so clients see only TCP retransmissions.
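One way a server can make clients notice a shutdown immediately, instead of leaving them to retransmit into the void, is to reset its connections itself before its network is cut. The sketch below assumes Linux and plain POSIX sockets and uses the standard SO_LINGER setting (linger enabled with a zero timeout), which makes close() send an RST instead of a FIN. The names abort_connection and client_errno_after_server_abort are hypothetical; the second one is only a loopback demo. Note that this helps only if the process actually gets to run it, which is exactly what a forced Pod kill or network isolation prevents.

```c
#include <errno.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical helper: close a TCP socket abortively. With SO_LINGER on and
 * l_linger = 0, close() discards unsent data and sends an RST instead of a
 * FIN, so the peer's next recv()/send() fails at once. */
int abort_connection(int fd)
{
    struct linger lg = { .l_onoff = 1, .l_linger = 0 };
    int rc = setsockopt(fd, SOL_SOCKET, SO_LINGER, &lg, sizeof(lg));
    close(fd);
    return rc;
}

/* Loopback demo (minimal error handling): the "server" side aborts its
 * connection, and the client's blocking recv() fails. Returns the errno the
 * client observes, or 0 if recv() did not fail. */
int client_errno_after_server_abort(void)
{
    int lfd = socket(AF_INET, SOCK_STREAM, 0);
    struct sockaddr_in sa = {0};
    socklen_t sl = sizeof(sa);
    sa.sin_family = AF_INET;
    sa.sin_addr.s_addr = htonl(INADDR_LOOPBACK); /* port 0: kernel picks one */
    if (lfd < 0 || bind(lfd, (struct sockaddr *)&sa, sizeof(sa)) != 0 ||
        listen(lfd, 1) != 0 || getsockname(lfd, (struct sockaddr *)&sa, &sl) != 0)
        return -1;

    int cfd = socket(AF_INET, SOCK_STREAM, 0);
    if (cfd < 0 || connect(cfd, (struct sockaddr *)&sa, sizeof(sa)) != 0)
        return -1;
    int sfd = accept(lfd, NULL, NULL);
    if (sfd < 0)
        return -1;

    abort_connection(sfd); /* server resets instead of vanishing silently */

    char buf[16];
    int result = (recv(cfd, buf, sizeof(buf), 0) < 0) ? errno : 0;
    close(cfd);
    close(lfd);
    return result;
}
```

On Linux the client sees ECONNRESET immediately, so a connection pool can discard the socket and reconnect right away rather than waiting out tcp_retries2.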

Services should have effective control and fallback measures for request timeouts and must not allow a single request to hang indefinitely.

For example, a JDBC URL supports the SocketTimeout and ConnectTimeout parameters, and I believe other products have similar parameters. Services should configure them; otherwise, when the problem demonstrated above occurs, recovery can take more than 900 seconds.

Set SocketTimeout on connections whenever possible. Choose the value based on the longest slow query you are willing to accept; for analytical (AP) requests, it can be set higher.

**Most importantly, any service that uses long-lived TCP connections must be configured with an appropriate SocketTimeout value.** For example, Jedis uses a connection pool: when a timeout occurs at the underlying layer, the current connection is destroyed, and the next connection Jedis establishes goes to the new node, restoring service.
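SocketTimeout is a driver-level setting, but the idea underneath it, a bound on how long a blocking read may wait, can be illustrated with the standard socket option SO_RCVTIMEO. The C sketch below is an analogue under that assumption, not a description of how any particular JDBC driver or Jedis implements the parameter; the helper names set_recv_timeout_ms and recv_timed_out are hypothetical.

```c
#include <errno.h>
#include <sys/socket.h>
#include <sys/time.h>
#include <sys/types.h>

/* Hypothetical helper: bound how long a blocking recv() may wait, similar in
 * spirit to a driver's SocketTimeout. Returns 0 on success, -1 on error. */
int set_recv_timeout_ms(int fd, long timeout_ms)
{
    struct timeval tv;
    tv.tv_sec = timeout_ms / 1000;
    tv.tv_usec = (timeout_ms % 1000) * 1000; /* must stay below 1,000,000 */
    return setsockopt(fd, SOL_SOCKET, SO_RCVTIMEO, &tv, sizeof(tv));
}

/* Call right after recv() returned -1: was the failure the receive timeout
 * rather than a real connection error? Linux reports EAGAIN/EWOULDBLOCK. */
int recv_timed_out(void)
{
    return errno == EAGAIN || errno == EWOULDBLOCK;
}
```

Following the Jedis pattern described above, the caller's reaction to a timeout would be to destroy the connection and open a new one, not to retry on the same socket, because a new connection is what gets routed to the new node.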

The TCP_USER_TIMEOUT option is described in RFC 5482. It controls how long to wait before releasing network resources when data transmission on a TCP connection runs into problems, and Linux implements it as a socket option (see the corresponding commit in the Linux kernel).

TCP_USER_TIMEOUT is an integer value, in milliseconds, that specifies how long transmitted data may remain unacknowledged before the TCP connection is forcibly closed.

For example, with TCP_USER_TIMEOUT set to 30 seconds, if transmitted data has not been acknowledged within 30 seconds, the connection is considered broken and is closed instead of being retransmitted for many more minutes. This helps ensure connection quality and improves the user experience.

In Linux, you can use the setsockopt function to set TCP_USER_TIMEOUT on a specific socket:

#include <netinet/in.h>   // IPPROTO_TCP
#include <netinet/tcp.h>  // TCP_USER_TIMEOUT (Linux)
#include <sys/socket.h>   // setsockopt
unsigned int timeout = 30000; // 30 seconds, in milliseconds
setsockopt(sock, IPPROTO_TCP, TCP_USER_TIMEOUT, &timeout, sizeof(timeout)); // returns 0 on success

Here, sock is an established TCP socket whose TCP_USER_TIMEOUT is set to 30000 milliseconds, or 30 seconds. With this setting, if sent data remains unacknowledged for 30 seconds, the kernel closes the connection and subsequent calls on the socket fail with ETIMEDOUT.

TCP_USER_TIMEOUT allows more precise control than SocketTimeout because it does not affect slow queries: SocketTimeout cannot distinguish between waiting for a TCP acknowledgment and waiting for the response to a request, whereas TCP_USER_TIMEOUT only counts the time that sent data spends unacknowledged. However, TCP_USER_TIMEOUT requires support from both the underlying API and the OS. For example, the JDK does not provide the TCP_USER_TIMEOUT option, but the Netty framework uses native transports to allow TCP_USER_TIMEOUT and other OS parameters to be configured. Since Lettuce, a Java Redis client, is based on Netty, it also supports setting TCP_USER_TIMEOUT.
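To see why TCP_USER_TIMEOUT leaves slow queries alone, consider a healthy connection whose server is simply slow and has not replied yet: no sent data is waiting for an ACK, so the user timeout never fires. The Linux sketch below sets a deliberately short 200 ms TCP_USER_TIMEOUT plus a 600 ms SO_RCVTIMEO (standing in for an application-level SocketTimeout, as an assumption) on a loopback connection whose server never writes. The function name silent_peer_demo is hypothetical.

```c
#include <errno.h>
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <sys/socket.h>
#include <sys/time.h>
#include <unistd.h>

/* Demo (minimal error handling): on a healthy but silent connection, the
 * 200 ms TCP_USER_TIMEOUT does not fire because nothing awaits an ACK; the
 * read ends only when the 600 ms receive timeout expires.
 * Returns the errno seen by recv(), or 0 if recv() did not fail. */
int silent_peer_demo(void)
{
    int lfd = socket(AF_INET, SOCK_STREAM, 0);
    struct sockaddr_in sa = {0};
    socklen_t sl = sizeof(sa);
    sa.sin_family = AF_INET;
    sa.sin_addr.s_addr = htonl(INADDR_LOOPBACK); /* port 0: kernel picks one */
    if (lfd < 0 || bind(lfd, (struct sockaddr *)&sa, sizeof(sa)) != 0 ||
        listen(lfd, 1) != 0 || getsockname(lfd, (struct sockaddr *)&sa, &sl) != 0)
        return -1;

    int cfd = socket(AF_INET, SOCK_STREAM, 0);
    if (cfd < 0 || connect(cfd, (struct sockaddr *)&sa, sizeof(sa)) != 0)
        return -1;
    int sfd = accept(lfd, NULL, NULL); /* server side: never writes */

    unsigned int user_timeout_ms = 200;
    struct timeval rcv = { .tv_sec = 0, .tv_usec = 600 * 1000 };
    setsockopt(cfd, IPPROTO_TCP, TCP_USER_TIMEOUT, &user_timeout_ms, sizeof(user_timeout_ms));
    setsockopt(cfd, SOL_SOCKET, SO_RCVTIMEO, &rcv, sizeof(rcv));

    char buf[16];
    int result = (recv(cfd, buf, sizeof(buf), 0) < 0) ? errno : 0;
    close(sfd);
    close(cfd);
    close(lfd);
    return result; /* EAGAIN (receive timeout), not ETIMEDOUT (user timeout) */
}
```

If the peer had vanished while the client still had unacknowledged data in flight, the same socket would instead fail with ETIMEDOUT after roughly the user timeout.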

Originally, the idea was to add TCP_USER_TIMEOUT support to Druid so that this problem could be solved transparently for most services in the Group. However, it turned out that the JDK does not support this option, so Druid would have to bypass the JDK through native code, as Netty does, which is too heavy for Druid.

ConnectTimeout specifies the timeout for establishing new connections; 3-5 seconds is generally enough.
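At the socket level, a bounded connect is commonly built from a non-blocking connect() followed by poll(). The sketch below assumes IPv4 and Linux/POSIX sockets; connect_ipv4_with_timeout is a hypothetical name, and database drivers expose the same idea through their own ConnectTimeout settings rather than this function.

```c
#include <arpa/inet.h>
#include <errno.h>
#include <fcntl.h>
#include <netinet/in.h>
#include <poll.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical helper: connect to ip:port over TCP, giving up after
 * timeout_ms. Returns a connected blocking socket, or -1 with errno set
 * (ETIMEDOUT if the handshake did not complete in time). */
int connect_ipv4_with_timeout(const char *ip, unsigned short port, int timeout_ms)
{
    struct sockaddr_in addr = {0};
    addr.sin_family = AF_INET;
    addr.sin_port = htons(port);
    if (inet_pton(AF_INET, ip, &addr.sin_addr) != 1) {
        errno = EINVAL;
        return -1;
    }

    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0)
        return -1;

    /* Non-blocking connect so that we decide how long to wait. */
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0 || fcntl(fd, F_SETFL, flags | O_NONBLOCK) < 0)
        goto fail;

    if (connect(fd, (struct sockaddr *)&addr, sizeof(addr)) != 0) {
        if (errno != EINPROGRESS)
            goto fail;
        struct pollfd pfd = { .fd = fd, .events = POLLOUT };
        int n = poll(&pfd, 1, timeout_ms);
        if (n == 0)
            errno = ETIMEDOUT;
        if (n <= 0)
            goto fail;
        /* The handshake finished; SO_ERROR says whether it succeeded. */
        int err = 0;
        socklen_t len = sizeof(err);
        if (getsockopt(fd, SOL_SOCKET, SO_ERROR, &err, &len) != 0)
            goto fail;
        if (err != 0) {
            errno = err;
            goto fail;
        }
    }

    /* Restore blocking mode for normal use. */
    if (fcntl(fd, F_SETFL, flags) < 0)
        goto fail;
    return fd;

fail: {
        int saved = errno;
        close(fd);
        errno = saved;
        return -1;
    }
}
```

With a 3000 ms bound, a dead or blackholed address costs at most about 3 seconds per attempt instead of the several SYN retries governed by tcp_syn_retries.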

For more information, refer to Recommendations on the Configuration of Database Connection Pool. Many of its recommendations also apply to other services and applications; they may be easier to understand if you think of the database as an ordinary service.

By the way, if you use the Druid database connection pool, do not rely on it to set SocketTimeout: a bug makes the parameter appear to be set when it actually is not. The issue was fixed in release 1.2.22, dated March 16, 2024. For simplicity and clarity, it is highly recommended to hard-code SocketTimeout in the JDBC URL instead.

If the service runs AP queries or slow requests that can take half an hour, so that a small SocketTimeout would break normal queries, you can lower the following OS parameters as a fallback at the OS level:

net.ipv4.tcp_retries2 = 8

net.ipv4.tcp_syn_retries = 4

The default value of the keepalive time (net.ipv4.tcp_keepalive_time) is 7200 seconds, which is too long. We recommend changing it to 20 seconds. This can be fixed at the OS image level, after which each service can override it with its own value.
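A service can override the OS-wide keepalive defaults for its own sockets. The minimal Linux sketch below assumes the standard SO_KEEPALIVE, TCP_KEEPIDLE, TCP_KEEPINTVL and TCP_KEEPCNT socket options; the helper name enable_keepalive is hypothetical, and the values in the usage test are illustrative (the 20-second idle time follows the recommendation above).

```c
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <sys/socket.h>

/* Hypothetical helper: enable TCP keepalive on one socket, overriding the
 * sysctl defaults for that socket only (Linux; values in seconds).
 *   idle_s:  idle time before the first probe   (cf. tcp_keepalive_time)
 *   intvl_s: interval between probes            (cf. tcp_keepalive_intvl)
 *   cnt:     unanswered probes before giving up (cf. tcp_keepalive_probes)
 * Returns 0 on success, -1 on the first failing setsockopt(). */
int enable_keepalive(int fd, int idle_s, int intvl_s, int cnt)
{
    int on = 1;
    if (setsockopt(fd, SOL_SOCKET, SO_KEEPALIVE, &on, sizeof(on)) != 0)
        return -1;
    if (setsockopt(fd, IPPROTO_TCP, TCP_KEEPIDLE, &idle_s, sizeof(idle_s)) != 0)
        return -1;
    if (setsockopt(fd, IPPROTO_TCP, TCP_KEEPINTVL, &intvl_s, sizeof(intvl_s)) != 0)
        return -1;
    if (setsockopt(fd, IPPROTO_TCP, TCP_KEEPCNT, &cnt, sizeof(cnt)) != 0)
        return -1;
    return 0;
}
```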

Suppose LVS resets a connection after it has been idle for more than 900 seconds. If the keepalive time is set below 900 seconds, keepalive sends heartbeat packets even while the service is idle, so LVS does not reset the connection. This avoids discovering that a connection is unusable only when the service needs it. In that situation there is often a significant gap between when the service throws an error and when the connection was actually reset, which makes troubleshooting difficult.
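The reasoning above boils down to simple arithmetic. The sketch below uses a rough model (hypothetical helper names; it assumes an idle connection with no data in flight and ignores timer granularity): a dead peer is detected about idle + intvl * probes seconds after the connection went quiet, and keepalive keeps an LVS-style idle timer from expiring only if the first probe goes out before that timer does. With the values shown at the start of this article (10, 30, 5), detection takes about 160 seconds; with the common Linux defaults (7200, 75, 9), it takes about 7875 seconds, and the first probe comes far too late for a 900-second LVS timeout.

```c
/* Rough Linux keepalive timing model, in seconds (hypothetical helpers). */

/* Time from the connection going idle until a dead peer is detected:
 * the first probe after idle_s, then `probes` probes intvl_s apart. */
long keepalive_detect_s(long idle_s, long intvl_s, long probes)
{
    return idle_s + intvl_s * probes;
}

/* Keepalive prevents an idle-timeout reset by a middlebox such as LVS only if
 * the first probe is sent before the middlebox's idle timer expires. */
int keepalive_beats_idle_timeout(long idle_s, long lb_idle_timeout_s)
{
    return idle_s < lb_idle_timeout_s;
}
```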

Note that keepalive probing stops once TCP retransmission is triggered, so keepalive cannot shorten the retransmission blackhole described above.

If you have tried Aliyun Server Load Balancer (SLB), you may have noticed that when a backend node is removed, you can set a time after which SLB sends a reset to the still-connected clients to cut off their traffic, prompting them to create new connections and quickly recover from the failure. This is an instance-level parameter that is recommended for all cloud products, and administrators can set a default value when purchasing an SLB instance:

connection_drain_timeout

The traffic blackhole on long-lived connections described above generally recovers after 900+ seconds. Errors such as "CommunicationsException: Communications link failure" appearing after about 900 seconds are commonly seen in logs, and LVS is usually configured to reset a connection after it has been idle for 900 seconds:

#ipvsadm -L --timeout

Timeout (tcp tcpfin udp): 900 120 300

• For decades, engineers have not clearly understood this problem;

• The problem rarely occurred and could be temporarily solved simply by restarting the service;

• This problem does not exist for new connections;

• Some connection pools have a validation mechanism (which aborts a connection after a timeout of a few seconds);

• More microservices have emerged;

• LVS on the cloud is gaining popularity;

• Kubernetes services are thriving.

Simply put, any long-lived TCP connection could experience this problem.

Suppose client A accesses service B over a long-lived connection, and the network between A and B fails. If an HA event occurs on B, all connections on B are reset or aborted first (for example, if B is MySQL, it may kill all threads in its processlist or restart). However, because of the network failure, the RST and FIN packets cannot reach A, so B cannot clean up the abnormal state on A's side. As a result, A cannot detect that B is unavailable and keeps using the old connection for about 15 minutes.

In the worst case, service B has stopped responding while the OS hosting B still responds, so TCP acknowledgments keep arriving and, from A's perspective, the connection looks normal. A can then only handle this situation with an application-level timeout.

This problem is more visible when LVS is involved, but it actually has nothing to do with LVS: long-lived connections to any service can run into a traffic blackhole of about 900 seconds. First and foremost, the problem needs to be taken seriously at the service level; otherwise, the service has to be restarted whenever the database fails. If the problem is resolved at the service level, you can avoid both the 900-second blackhole and the service restart, and recover from failures more quickly.

Allow me to reiterate: this problem still occurs even when two services are directly connected without LVS, Kubernetes Services, or software load balancing, for example when a network cable is unplugged.

Summary of Best Practices:

• If your service supports setting SocketTimeout, make sure to do so. However, it may not suit analytical workloads, that is, requests that take a long time to return.

• The best practice is to configure the TCP_USER_TIMEOUT socket option, which makes the OS return an error when sent data goes unacknowledged for too long. However, the JDK does not support setting this option directly.

• If ALB/SLB is employed, the parameter connection_drain_timeout must be configured.

• At the OS image level, the tcp_retries2 parameter can also be set to 5-10 as a fallback;

• Make your timeouts manageable and predictable.

Related Failures and Information:

For detailed information, it is recommended to refer to this article: A Disaster Caused by Database Failure.

Explanation of the tcp_retries2 parameter:

tcp_retries1 - INTEGER
This value influences the time, after which TCP decides, that
something is wrong due to unacknowledged RTO retransmissions,
and reports this suspicion to the network layer.
See tcp_retries2 for more details.

RFC 1122 recommends at least 3 retransmissions, which is the
default.

tcp_retries2 - INTEGER
This value influences the timeout of an alive TCP connection,
when RTO retransmissions remain unacknowledged.
Given a value of N, a hypothetical TCP connection following
exponential backoff with an initial RTO of TCP_RTO_MIN would
retransmit N times before killing the connection at the (N+1)th RTO.

The default value of 15 yields a hypothetical timeout of 924.6
seconds and is a lower bound for the effective timeout.
TCP will effectively time out at the first RTO which exceeds the
hypothetical timeout.

RFC 1122 recommends at least 100 seconds for the timeout,
which corresponds to a value of at least 8.

The effective timeout of tcp_retries2 depends on the RTO. The default value of 15 corresponds to roughly 13 to 30 minutes (RFC 1122 requires the timeout to be at least 100 seconds). However, this default was set many years ago. Since network conditions are much better today, I think 5-10 is a more appropriate value.
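The documentation's model can be turned into a small calculator. The sketch below assumes Linux's TCP_RTO_MIN of 200 ms and TCP_RTO_MAX of 120 s, doubles the RTO after each unacknowledged retransmission, caps it at the maximum, and sums N + 1 RTOs. The function name is hypothetical, and the result is the documented lower bound, not a prediction for a particular connection.

```c
/* Assumed Linux kernel constants: TCP_RTO_MIN = 200 ms, TCP_RTO_MAX = 120 s. */
#define RTO_MIN_MS 200L
#define RTO_MAX_MS 120000L

/* Hypothetical timeout (ms) for tcp_retries2 = n: start at TCP_RTO_MIN,
 * double the RTO after every retransmission, cap it at TCP_RTO_MAX, and give
 * up when the (n+1)th RTO expires. Real connections with a larger RTO
 * (higher RTT) take longer, so this is a lower bound. */
long tcp_retries2_timeout_ms(int n)
{
    long total = 0;
    long rto = RTO_MIN_MS;
    for (int i = 0; i <= n; i++) { /* n retransmissions plus the final RTO */
        total += rto;
        rto = (rto * 2 > RTO_MAX_MS) ? RTO_MAX_MS : rto * 2;
    }
    return total;
}
```

Under these assumptions, the default of 15 gives 924,600 ms (924.6 seconds, matching the documentation), 8 gives 102,200 ms (just above the 100-second RFC 1122 floor), and 5 gives 12,600 ms. The experiment earlier in this article, with tcp_retries2 set to 5, recovered in about 20 seconds, which is consistent with this figure being a lower bound.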

A value of 3 is recommended for Oracle RAC: https://access.redhat.com/solutions/726753

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
