By Wang Chen

The release of DeepSeek-R1 and Qwen2.5-Max has led to an increasing demand for AI inference services in China. The focus of the model performance competition is thus shifting from training to inference.

Improving the performance of training and inference has always been the talk of the town. Why? Ask anyone with experience in web applications, and their answer will likely be that better performance brings two apparent benefits: lower compute costs and faster content generation on the client side.

Performance matters even more for AI inference, where models consume substantial compute resources and stream responses to the user.

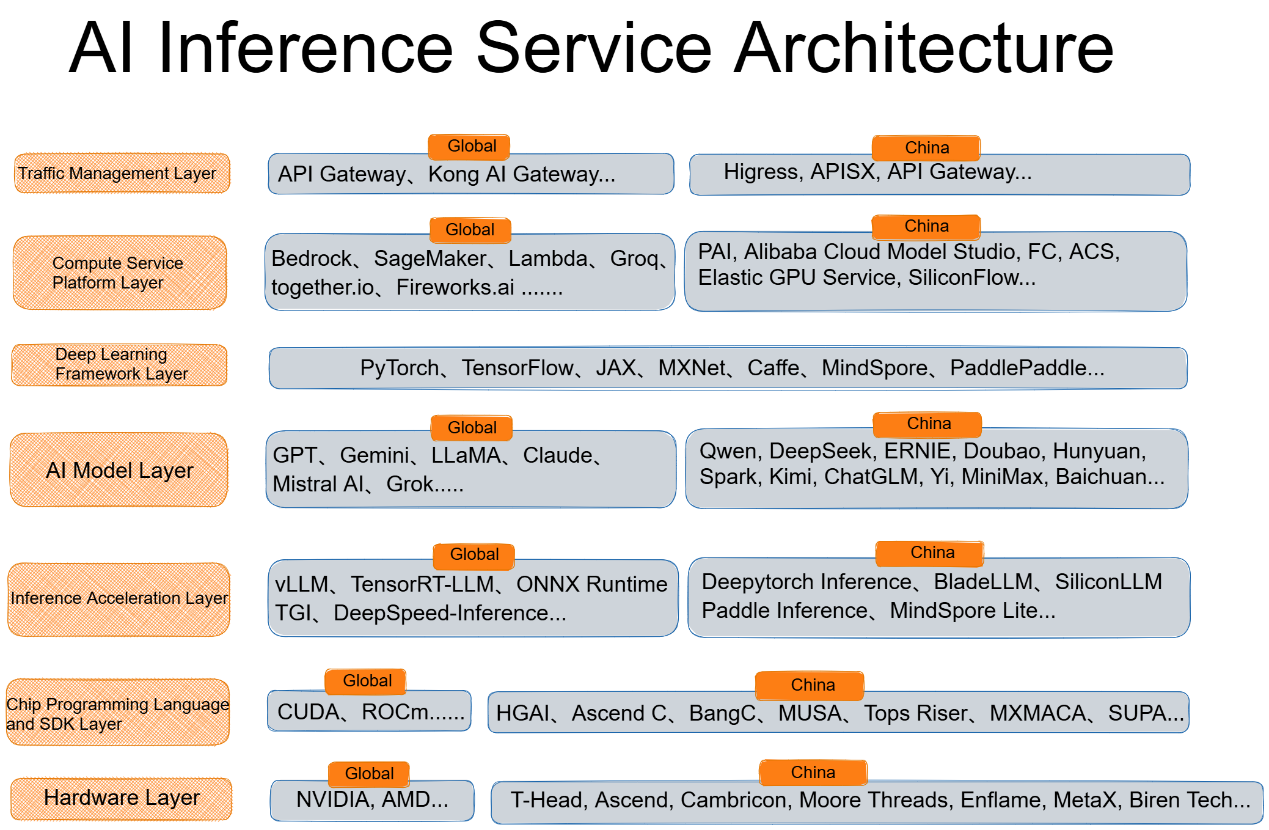

The architecture of an AI inference service involves the underlying hardware, AI models, and multiple middleware layers working in coordination. Therefore, adopting a bird's eye view of the architecture can facilitate the evaluation and selection of solutions to optimize AI inference performance.

Note: The listed vendors and items are not exhaustive. Some vendors are involved in multiple layers.

1. Hardware layer (Chips)

Serving as the physical foundation of the compute system, the hardware layer executes underlying arithmetic and logical operations. Chip design has a direct impact on the density, energy efficiency, and parallelism of computing power. Globally, leading players in this market include GPU companies like NVIDIA and AMD, as well as chip makers that deliver products specially optimized for AI inference, such as Groq. In China, prominent chip manufacturers include established companies like Alibaba's T-Head, Huawei's Ascend, and Cambricon, as well as emerging players like Moore Threads, Enflame, MetaX, and Biren Tech.

2. Chip programming languages and SDKs

Hardware programming serves as the interface between the underlying hardware and the software on top. Major considerations are resource orchestration efficiency, command mapping, and the balance between programming convenience and compute efficiency. Examples of this layer include programming languages such as NVIDIA CUDA, AMD ROCm, T-Head HGAI, Ascend C, Cambricon BANG C, Moore Threads MUSA, Enflame Tops Riser, MetaX MXMACA, and BIRENSUPA, along with the related libraries, toolkits, and documentation. However, the established ecosystem around CUDA makes it costly for programmers to switch to other languages.

3. Deep learning framework layer

This layer consists of fundamental tools and features that streamline the development, training, and deployment of AI models. Just like an experienced personal trainer helps you reach your fitness goal more quickly, a great framework can supercharge model performance. Popular frameworks are as follows, with PyTorch and TensorFlow being the top options:

• PyTorch: Originally developed by Facebook's AI Research lab, PyTorch features dynamic computational graphs that allow for on-the-fly modification during execution. Deeply integrated with Python, PyTorch offers intuitive APIs and a flexible programming experience. This framework is ideal for rapid prototype development, research, and experimentation, especially in scenarios involving frequent model modification and iteration.

• TensorFlow: Google's second-generation machine-learning system and the successor to DistBelief, TensorFlow supports multiple programming languages, such as JavaScript, C++, and Java. It's widely used for machine learning and deep learning tasks, including speech recognition and image recognition. Supported model architectures include CNNs, RNNs, and LSTMs.

• JAX: Google JAX is designed to enable researchers to effortlessly conduct algorithmic testing while fully leveraging the power of hardware accelerators. JAX excels in automatic differentiation and parallel computing.

• MindSpore: Huawei's open-source framework for deep learning training and inference, MindSpore is suitable for device-edge-cloud scenarios. It works natively with Ascend AI processors and delivers performance improvements through hardware-software co-design.

• PaddlePaddle: Baidu's PaddlePaddle is an open-source framework that enhances the efficiency of training deep learning models. It supports distributed training across multiple nodes with multiple GPUs, optimizing computing power utilization.

• Apache MXNet: MXNet is AWS's officially adopted deep learning framework, co-developed by Carlos Guestrin at the University of Washington. It supports various programming languages, including C++, Python, Java, Julia, MATLAB, JavaScript, Go, R, and Scala.

• Caffe: Caffe was developed by Berkeley AI Research and the Berkeley Vision and Learning Center, together with community contributors. It features a modular design that makes it easy to add new data formats, network layers, and loss functions.
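
The "dynamic computational graph" and "automatic differentiation" ideas mentioned above can be illustrated without any framework. The following is a toy sketch in plain Python, not the API of PyTorch, JAX, or any other library listed here; the `Value` class and `model` function are hypothetical names made up for illustration. It records operations as they execute, so ordinary `if` statements shape the graph at run time, and then applies the chain rule backward.

```python
class Value:
    """A scalar that records the operations applied to it (toy example)."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents      # Values this one was computed from
        self._grad_fns = grad_fns    # maps upstream grad to each parent's share

    def __add__(self, other):
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Topologically order the recorded graph, then apply the chain rule.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, fn in zip(node._parents, node._grad_fns):
                parent.grad += fn(node.grad)


def model(x, w):
    # Ordinary Python control flow decides the graph shape at run time.
    y = x * w
    if y.data > 0:
        y = y * y      # this branch is only recorded when taken
    return y + w


x, w = Value(3.0), Value(2.0)
out = model(x, w)      # (3 * 2) ** 2 + 2
out.backward()         # fills x.grad and w.grad
```

Real frameworks apply the same idea to tensors and run the recorded operations on accelerators; the sketch only shows why a graph built during execution is convenient for models that change often.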

4. Inference acceleration layer

This layer is designed for optimizing computing efficiency and resource utilization of AI model inference. It leverages technologies like compilation, quantization, and batch processing to reduce latency and costs. The inference acceleration field has a variety of players, such as cloud vendors, software providers, model communities, and research institutes. They offer open-source or proprietary solutions that connect to AI models accessible through API calls.
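
Of the techniques named above, quantization is the easiest to show in a few lines. The sketch below assumes the simplest variant, symmetric per-tensor int8 quantization over a plain list of floats; the function names are illustrative, and production engines use more elaborate schemes (per-channel scales, calibration, and so on).

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from the integer codes."""
    return [c * scale for c in codes]


weights = [0.02, -1.27, 0.5, 0.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each code fits in one byte instead of the four bytes of a float32 weight, which is where the memory and bandwidth savings come from; the price is the small reconstruction error measured by `max_error`.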

In essence, inference acceleration focuses on offering maximum compute power while minimizing GPU memory usage. An ideal solution for inference acceleration should require fewer resources while delivering higher performance. Here are some popular solutions:

• vLLM: An easy-to-use library for LLM inference and serving. Originally developed in the Sky Computing Lab at UC Berkeley, vLLM uses the PagedAttention mechanism to alleviate memory fragmentation and improve memory utilization. vLLM supports various LLMs, including Qwen and Llama, and integrates well with the Hugging Face ecosystem, allowing users to load model weights directly from the Hugging Face Hub.

• TensorRT-LLM: NVIDIA TensorRT-LLM is an open-source LLM library based on TensorRT. It harnesses the full potential of NVIDIA hardware features (e.g. Tensor Cores), and integrates seamlessly with other NVIDIA tools and libraries (CUDA, cuBLAS, etc.). It can be used with Triton for rapid inference execution on NVIDIA GPUs.

• ONNX Runtime: A lightweight, cross-platform, and high-performance inference engine developed and maintained by Microsoft. It's designed to bridge the gap between different deep learning frameworks, enabling AI models to run efficiently across various environments and enhancing model portability and deployment efficiency.

• Text Generation Inference (TGI): TGI is a toolkit that leverages the Hugging Face Transformers library to offer easy AI model deployment and serving. TGI supports distributed inference across multiple GPUs, enhancing its model serving capability.

• Deepytorch Inference: An AI model inference accelerator developed by Alibaba Cloud's Elastic GPU Service team, Deepytorch Inference is designed to offer high-performance inference acceleration for PyTorch models. Deepytorch Inference significantly improves the inference performance of PyTorch models through computational graph partitioning, implementation layer merging, and high-performance operator implementation.

• BladeLLM: BladeLLM is an inference engine optimized for LLMs. Developed by Alibaba Cloud's Platform for AI (PAI) team, BladeLLM enhances model computation, the generation engine, service framework, and application layer, delivering higher performance than mainstream open-source frameworks.

• TurboMind: Developed by Shanghai Artificial Intelligence Laboratory, TurboMind is an open-source inference acceleration engine for Llama LLMs. It supports dynamic batching and continuous batching.
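
To make the memory-fragmentation point in the vLLM entry more concrete, here is a toy sketch of paged allocation for a KV cache. It is written from the general idea only and is not vLLM's implementation; the class name, method names, and block sizes are assumptions chosen for illustration. Memory is split into fixed-size blocks, and each sequence receives a block only when its previous one is full, instead of reserving one large contiguous region up front.

```python
class PagedKVCache:
    """Toy block allocator: each sequence maps to a list of physical blocks."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # physical block ids
        self.block_tables = {}   # seq_id -> list of physical block ids
        self.lengths = {}        # seq_id -> number of tokens stored so far

    def append_token(self, seq_id):
        """Reserve room for one more token, allocating a block only when needed."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length == len(table) * self.block_size:  # last block full, or none yet
            if not self.free_blocks:
                raise MemoryError("no free KV cache blocks")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def free(self, seq_id):
        """Return a finished sequence's blocks to the pool for reuse."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedKVCache(num_blocks=8, block_size=4)
for _ in range(5):
    cache.append_token("a")     # 5 tokens need 2 blocks
for _ in range(3):
    cache.append_token("b")     # 3 tokens need 1 block
blocks_in_use = 8 - len(cache.free_blocks)
```

In this scheme a sequence wastes at most `block_size - 1` slots, and blocks freed by a finished request can be handed to any other request regardless of where they sit in memory.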

5. Model layer

• Top-tier models from non-Chinese developers: OpenAI GPT, Google Gemini, Meta Llama, Anthropic Claude, Mistral AI, and xAI Grok. Meta has open-sourced the core components of its Llama models, and Mistral AI has done the same. Other AI models contribute to the community through technical reports or partial open-source efforts.

• Top-tier models from China: Alibaba Cloud Qwen, DeepSeek, Baidu ERNIE, ByteDance Doubao, Tencent Hunyuan, iFlytek Spark, and Kimi. Qwen and DeepSeek have open-sourced their core components, while other AI models contribute to the community through technical reports or partial open-source efforts.

Open-source LLMs are no longer followers of their closed-source counterparts. Instead, they are starting to lead AI development, with DeepSeek and Qwen as the frontrunners among current open-source projects.

6. Compute service platform layer

This layer is heavily dependent on GPU resources, which are subject to US export controls. As a result, major players in China are predominantly public cloud vendors, such as Alibaba Cloud, which offers services such as PAI, Alibaba Cloud Model Studio, Function Compute (FC, which provides serverless GPU capabilities), Container Compute Service (ACS), and Elastic GPU Service. Globally, thanks to a full-fledged ecosystem around AI models and looser restrictions on GPU purchases, there is a wider variety of providers, including cloud vendors, chip makers, software providers, and more. Notable examples include Groq, Together AI, and Fireworks AI.

7. Application orchestration layer

This layer is crucial in putting models into actual business use cases. It integrates models, toolkits, data, and model serving, automates the creation and implementation of complex AI processes, and enhances generation performance.

• LangChain: LangChain is an open-source project created by Harrison Chase in 2022 that enables users to flexibly combine tools through three of its core components: chains, agents, and memory. It supports over 30 AI models, including those from OpenAI, and integrates with over 200 external services such as Wikipedia and Wolfram Alpha. Additionally, it provides built-in APIs for vector databases such as Chroma and Pinecone to facilitate real-time RAG.

• LlamaIndex: Focused on data processing and indexing, LlamaIndex is ideal for use cases that require effective management and utilization of a large volume of data, such as domain-specific Q&A.

Both LangChain and LlamaIndex are open-source projects for Python developers.

• Spring AI Alibaba: An open-source agent development framework for Spring and Java developers. It offers a general-purpose paradigm for AI agent development and is optimized for China's AI models in aspects ranging from basic capabilities (chatbots, prompt templates, function invocation, etc.) to high-level abstractions (agent orchestration, chat memory management, etc.). Additionally, Spring AI Alibaba provides best practices for application deployment and O&M, covering aspects such as gateway integration, configuration management, deployment, and observability.

LangChain, LlamaIndex, and Spring AI Alibaba are code-level, highly flexible frameworks for application development.
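
What "code-level" orchestration means can be sketched with two of the concepts named in the LangChain entry, chains and memory. The code below is plain Python and deliberately does not use the LangChain, LlamaIndex, or Spring AI Alibaba APIs; `Chain`, `ChatMemory`, and `fake_model` are hypothetical stand-ins, and `fake_model` replaces a real model call.

```python
from typing import Callable, List


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call; it only reports the prompt length."""
    return f"(model reply to {len(prompt)} chars of prompt)"


class Chain:
    """Runs steps in order, feeding each step's output to the next."""

    def __init__(self, steps: List[Callable[[str], str]]):
        self.steps = steps

    def run(self, text: str) -> str:
        for step in self.steps:
            text = step(text)
        return text


class ChatMemory:
    """Keeps prior turns so they can be prepended to the next prompt."""

    def __init__(self) -> None:
        self.turns: List[str] = []

    def with_history(self, user_input: str) -> str:
        return "\n".join(self.turns + [f"User: {user_input}"])

    def record(self, user_input: str, reply: str) -> None:
        self.turns += [f"User: {user_input}", f"Assistant: {reply}"]


memory = ChatMemory()
chain = Chain([memory.with_history, fake_model])

for question in ["What is an API gateway?", "Why does it matter for LLMs?"]:
    answer = chain.run(question)
    memory.record(question, answer)
```

The frameworks add what this sketch leaves out, such as model connectors, retrieval, tool calling, and agent loops, but the composition pattern is the same: small steps wired together in code.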

• Dify: Dify is an open-source LLM application development platform that supports AI agent building, AI workflow orchestration, RAG, and model management. It can help users easily build and operate GenAI applications.

• Alibaba Cloud Model Studio: This is a one-stop platform for AI model development and application building that empowers both developers and business professionals to design and create AI applications. It provides a no-code development option, enabling users to effortlessly build AI applications through simple mouse clicks in the user interface.

Moreover, with Alibaba Cloud's Cloud Application Platform and FC, users can leverage compute resources and orchestrate AI applications in a serverless manner.

Dify, Alibaba Cloud Model Studio, and the combination of Cloud Application Platform and FC are low-code AI platforms, with the latter two providing compute resources for inference.

8. Traffic management layer

As a vital middleware for AI model deployment and serving, this layer manages traffic, services, security, and APIs, ensuring stable, low-latency performance even during workload spikes. While traffic management may be optional in the AI model training architecture, it's essential in the AI model inference architecture, just as it is in the web application architecture.

Unlike traditional web applications, AI applications exhibit the following characteristics and requirements:

• Long-lived connections: WebSocket and SSE, commonly used in AI application serving, create long-lived connections. Therefore, gateway configuration updates must be non-disruptive to avoid impacting these connections or application services.

• High latency: The response time for LLM inference is significantly longer than that of other applications, making AI applications vulnerable to attacks, such as sustained slow request flooding. This type of attack is easy to carry out but can introduce substantial overhead on the server.

• High bandwidth: Because the LLM and the user exchange context over slow, long-running responses, AI applications consume more bandwidth than other applications. Therefore, the gateway should have strong stream processing capabilities and an effective memory reclamation mechanism to prevent sudden spikes in memory usage.

• Risks of service disruptions: AI applications require more time to deliver responses compared with web applications. Since conversational continuity is crucial to user experience, traffic management tools must prevent service disruptions caused by backend plugin updates.

• High resource consumption: Compared with web applications, AI applications consume many more resources on the server than on the client when processing requests. Therefore, a robust architecture must be in place to prevent low-cost client-side attacks from overwhelming the server.

• Grey market risk management: Many AI applications provide free-of-charge quotas to attract users. Measures need to be taken to stop people from trading the free quotas for money.

• Compliance and safety: Unlike web applications that generate results based on existing information, AI applications produce content through inference. Ensuring the compliance and safety of the generated content is essential.

• Compatibility: If an application accesses multiple AI models, it's necessary to effectively manage the differences in API calls and minimize compatibility costs.
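
Several of the requirements above (high resource consumption, low-cost client-side attacks, free-quota abuse) point toward limiting clients by the number of LLM tokens they consume rather than by request count. The following is a minimal sketch of that idea as a token bucket; the class name, parameters, and numbers are assumptions for illustration and do not describe how any particular gateway implements it. The current time is passed in explicitly so that the behavior is deterministic; a real deployment would more likely read a monotonic clock.

```python
class TokenBudgetLimiter:
    """Token bucket whose unit is LLM tokens rather than requests."""

    def __init__(self, capacity, refill_per_second):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.buckets = {}   # client_id -> (remaining tokens, last update time)

    def allow(self, client_id, requested_tokens, now):
        remaining, last = self.buckets.get(client_id, (self.capacity, now))
        # Refill in proportion to elapsed time, never above capacity.
        remaining = min(self.capacity,
                        remaining + (now - last) * self.refill_per_second)
        if requested_tokens > remaining:
            self.buckets[client_id] = (remaining, now)
            return False
        self.buckets[client_id] = (remaining - requested_tokens, now)
        return True


limiter = TokenBudgetLimiter(capacity=1000, refill_per_second=10)
first = limiter.allow("user-1", 800, now=0.0)    # fits within the budget
second = limiter.allow("user-1", 800, now=1.0)   # only 210 tokens available
third = limiter.allow("user-1", 800, now=60.0)   # refilled: 210 + 590 = 800
```

Counting tokens instead of requests makes one expensive prompt cost more than many cheap ones, which matches how the backend actually spends resources.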

NGINX, when used as an API gateway, cannot cope with these characteristics of AI applications and the new requirements that result from them. Consequently, open-source gateways powered by Envoy have gained popularity across the globe:

• Higress: Powered by Istio and Envoy and optimized to meet business demands, Higress is a cloud-native API gateway that can be deployed in web applications and AI applications. It has supported the Tongyi Qwen app, API calls on Alibaba Cloud Model Studio, PAI, FastGPT, and China United Property Insurance's AI application.

• Kong AI Gateway: Built on top of Kong Gateway, Kong AI Gateway is highly extensible, enabling developers to create custom plugins for various tasks, including traffic control, data conversion, and model framework scheduling.

• Alibaba Cloud's cloud-native API Gateway: This service offers full-lifecycle management of AI model APIs, and can be seamlessly integrated with other Alibaba Cloud products, including PAI and FC. Furthermore, it offers enhanced open-source capabilities through compatibility with Higress.
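
The compatibility requirement described earlier, managing differences in API calls across models, is typically handled at this layer by converting each backend's response into one shared shape. The sketch below shows the pattern only: both provider payload formats are hypothetical and do not correspond to any real vendor's API, and it is not taken from Higress or Kong.

```python
def from_provider_a(payload):
    # Hypothetical shape: {"choices": [{"text": ...}], "usage": {"total": ...}}
    return {"text": payload["choices"][0]["text"],
            "tokens": payload["usage"]["total"]}


def from_provider_b(payload):
    # Hypothetical shape: {"output": ..., "token_count": ...}
    return {"text": payload["output"], "tokens": payload["token_count"]}


ADAPTERS = {"provider-a": from_provider_a, "provider-b": from_provider_b}


def normalize(provider, payload):
    """Convert a provider-specific response into one shared shape."""
    try:
        adapter = ADAPTERS[provider]
    except KeyError:
        raise ValueError(f"no adapter registered for {provider!r}") from None
    return adapter(payload)


reply_a = normalize("provider-a",
                    {"choices": [{"text": "hello"}], "usage": {"total": 12}})
reply_b = normalize("provider-b", {"output": "hello", "token_count": 12})
```

With the differences confined to the adapters, the application code sees a single response format and can switch models without changes.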

There is another possible layer in the stack for AI inference service: the application implementation layer. Vendors on this layer, with their expertise in AI model infrastructure or their respective industry verticals, leverage open-source AI models and middleware to deliver integrated services and services that can be deployed on-premises. For brevity, those vendors are not detailed in this article, but they play a crucial role in the AI model landscape and the overall application ecosystem.
