Author: Chengtan

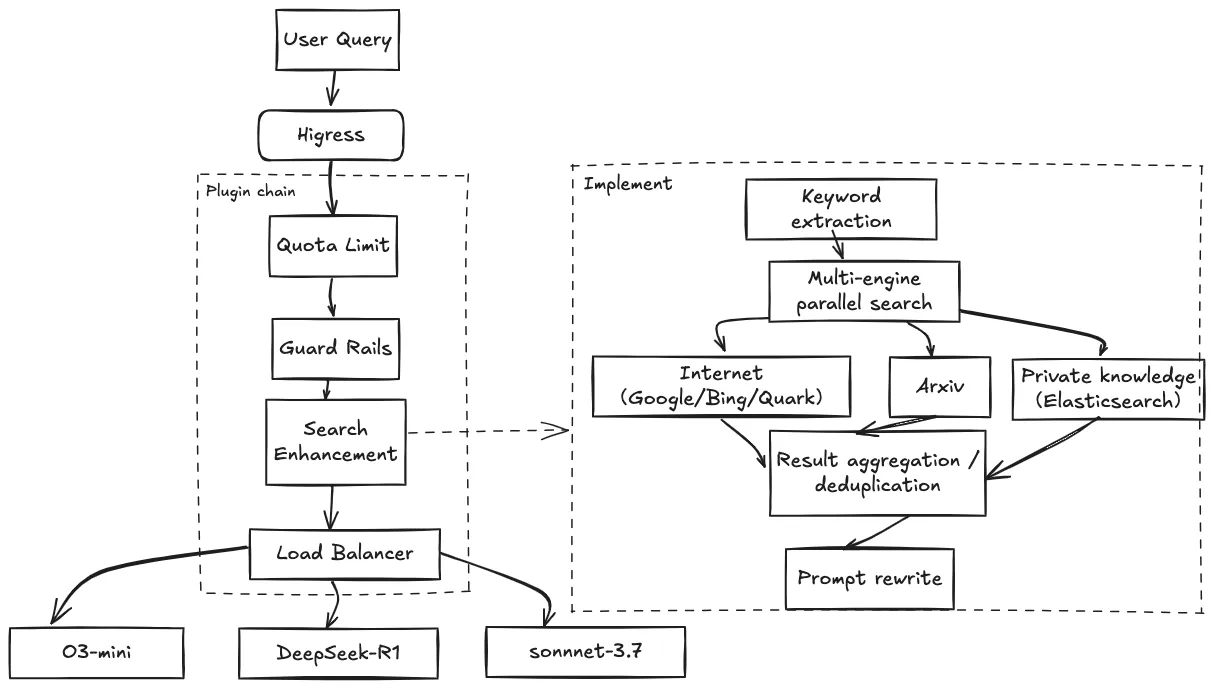

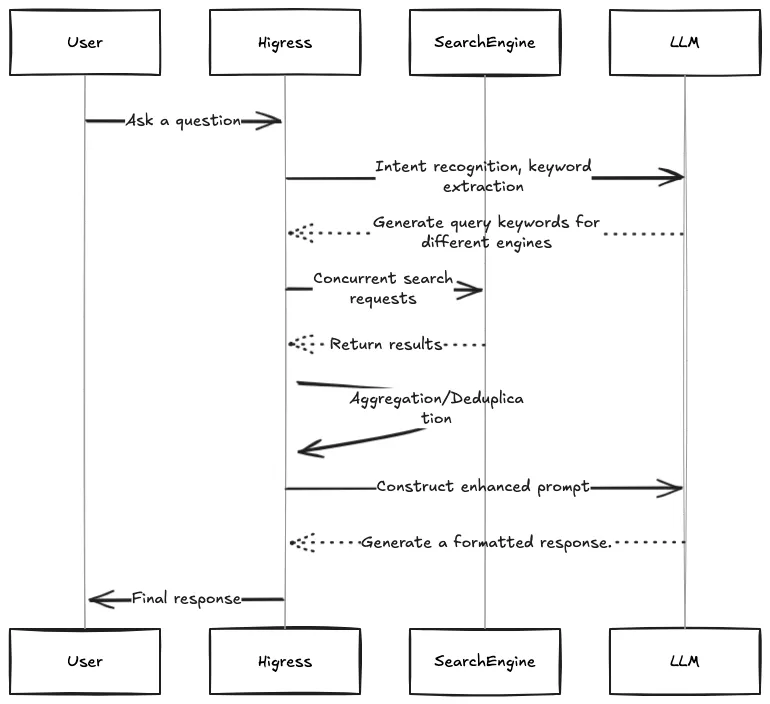

With the emergence of high-quality open-source large models such as DeepSeek, the cost for enterprises to build their own intelligent Q&A systems that do not share data externally has dropped by more than 90%. Models with 7B or 13B parameters can deliver commercial-grade responses on ordinary GPU servers. Combined with the AI capabilities of the open-source Higress AI gateway, developers can quickly build intelligent Q&A systems with real-time web search.

Higress, as a cloud-native API gateway, provides out-of-the-box AI enhancement capabilities through WebAssembly (WASM) plugins:

● Real-Time Web Search: Access the latest internet information

● Intelligent Routing: Multi-model load balancing and automatic fallback

● Security Protection: Sensitive word filtering and injection attack defense

● Performance Optimization: Request caching + token quota management

● Observability: Full-chain monitoring and audit logs

The code of the Higress AI search enhancement plugin is open source. Its documentation and code are available at https://github.com/alibaba/higress/blob/main/plugins/wasm-go/extensions/ai-search/README_EN.md

1. Multi-Engine Intelligent Traffic Distribution

● Public search engines (Google/Bing/Quark) for real-time information retrieval

● Academic search (Arxiv) for research scenarios

● Private search (Elasticsearch) for connecting enterprise or personal knowledge bases
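A minimal Python sketch of the fan-out idea follows. It is an illustration, not the plugin's implementation (ai-search is a Go WASM plugin): the `SearchResult` type and the engine callables are hypothetical stand-ins for real Google/Bing/Quark/Arxiv/Elasticsearch clients. One query goes to every engine concurrently, a failing engine is skipped, and duplicate URLs are dropped.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class SearchResult:
    """Hypothetical result record; real engine APIs return richer payloads."""
    engine: str
    title: str
    url: str
    snippet: str


# An "engine" here is any callable mapping a query string to a list of results.
Engine = Callable[[str], List[SearchResult]]


def fan_out_search(query: str, engines: Dict[str, Engine]) -> List[SearchResult]:
    """Send `query` to all engines in parallel and merge the results.

    Results keep the engines' dict order, so when two engines return the same
    URL, the copy from the earlier engine is kept.
    """
    if not engines:
        return []
    # Leaving the `with` block waits for every submitted call to finish.
    with ThreadPoolExecutor(max_workers=len(engines)) as pool:
        futures = [pool.submit(search, query) for search in engines.values()]

    merged: List[SearchResult] = []
    seen_urls = set()
    for future in futures:
        try:
            results = future.result()
        except Exception:
            # One unavailable engine should not fail the whole request.
            continue
        for result in results:
            if result.url not in seen_urls:
                seen_urls.add(result.url)
                merged.append(result)
    return merged
```

For example, `fan_out_search("gold price trend", {"quark": quark_search, "arxiv": arxiv_search})`, where `quark_search` and `arxiv_search` are your own (hypothetical) client functions. Threads suit this workload because each call spends most of its time waiting on network I/O.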

2. Core Ideas for Search Enhancement

● LLM Query Rewriting: An LLM identifies the user's intent and generates the search queries, which significantly improves search results.

● Keyword Refinement: Different engines require different prompts; for example, Arxiv primarily contains English papers, so keywords should be in English.

● Field Identification: Arxiv, for example, is divided into fields such as computer science, physics, mathematics, and biology. Specifying the field improves search accuracy.

● Long Query Splitting: Long queries can be split into multiple short queries to improve efficiency.

● High-Quality Data: Google, Bing, and Arxiv return only abstracts, whereas Quark search, integrated through Alibaba Cloud's information retrieval system, provides full texts, which improves the quality of LLM-generated content.
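The query-rewriting step can be pictured as the LLM emitting a small search plan that the gateway then parses. The plan format below (`engine[field]: query | query`) is invented for illustration and is not the plugin's actual rewrite prompt. The sketch shows how engine selection, an Arxiv-style field, and a long question already split into short queries could become individual search tasks.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Set

# Hypothetical plan format (an assumption, not the plugin's real prompt):
#   <engine>[<field>]: <query 1> | <query 2> | ...
# The optional "[<field>]" narrows engines such as Arxiv to a subject area.
_LINE = re.compile(
    r"^\s*(?P<engine>\w+)(?:\[(?P<field>[^\]]+)\])?\s*:\s*(?P<queries>.+)$"
)


@dataclass(frozen=True)
class SearchTask:
    engine: str
    field: Optional[str]
    query: str


def parse_rewrite_plan(plan: str, allowed_engines: Set[str]) -> List[SearchTask]:
    """Turn the rewrite LLM's text output into one task per short query.

    Lines that do not match the format, or that name an engine outside
    `allowed_engines`, are ignored instead of failing the request.
    """
    tasks: List[SearchTask] = []
    for line in plan.splitlines():
        m = _LINE.match(line)
        if not m or m.group("engine") not in allowed_engines:
            continue
        field = m.group("field").strip() if m.group("field") else None
        # A long question arrives already split into several short queries.
        for query in m.group("queries").split("|"):
            query = query.strip()
            if query:
                tasks.append(SearchTask(m.group("engine"), field, query))
    return tasks
```

Keeping the parser tolerant matters because LLM output is not guaranteed to follow the requested format; stray lines such as a preamble are simply skipped.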

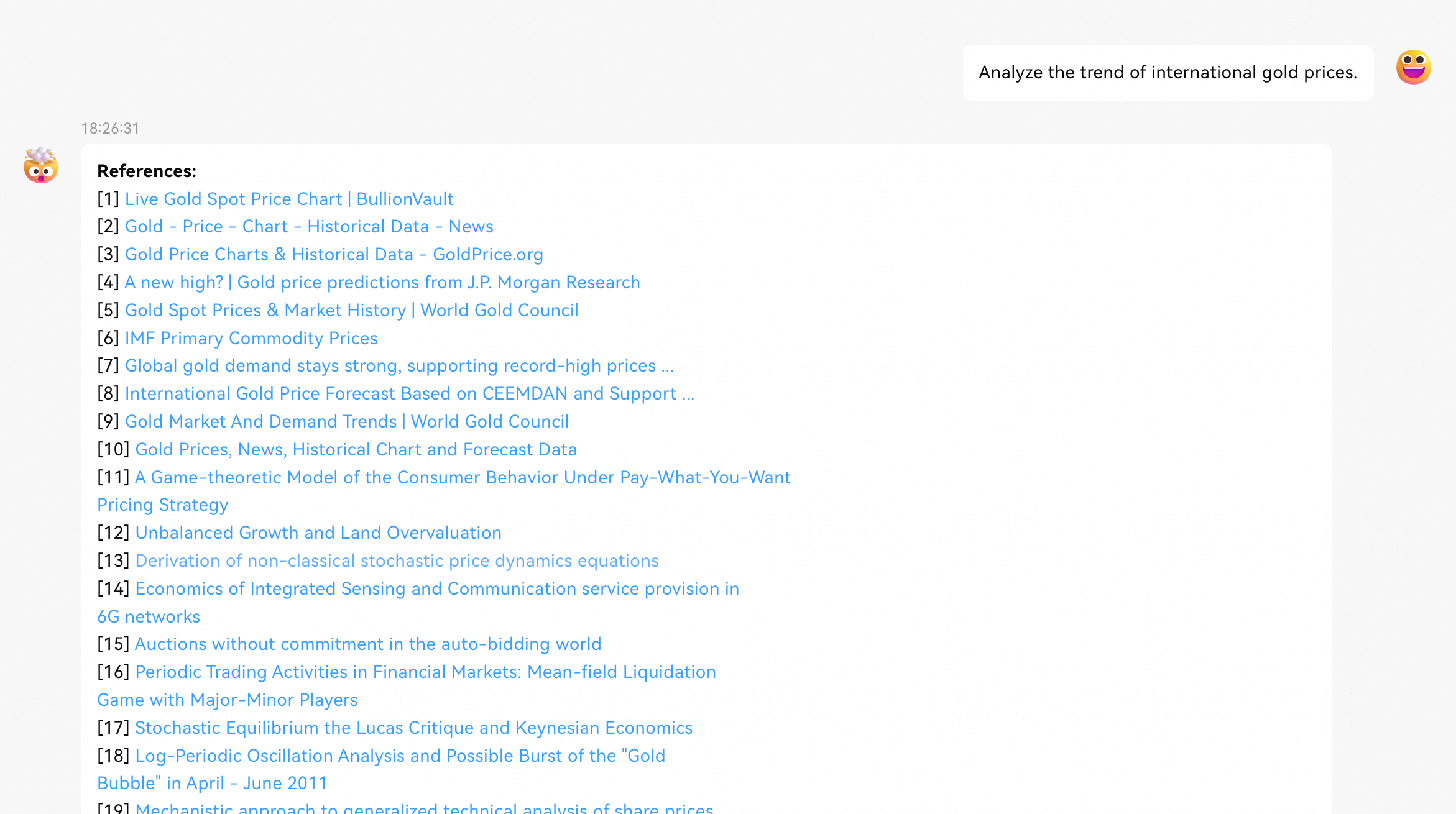

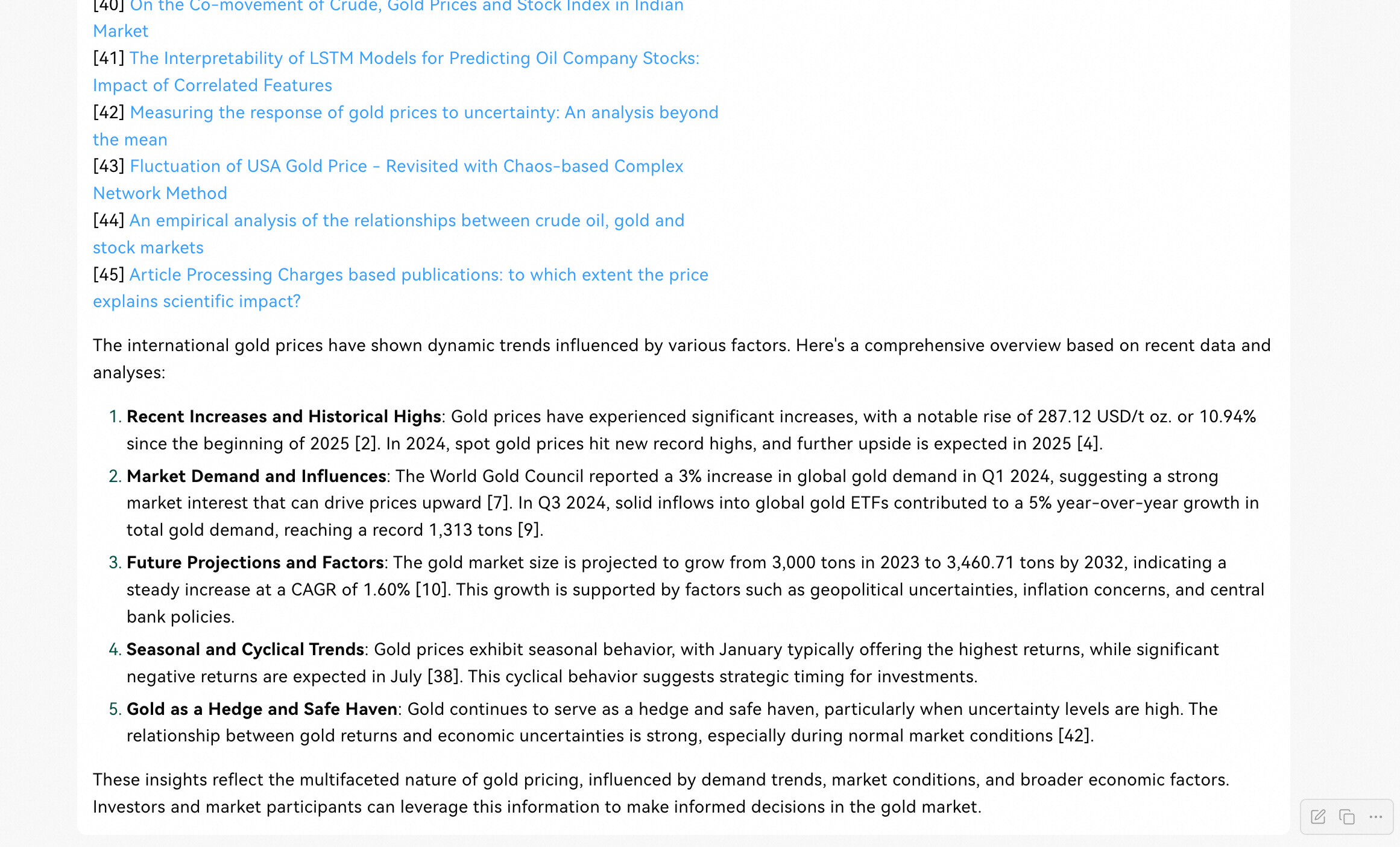

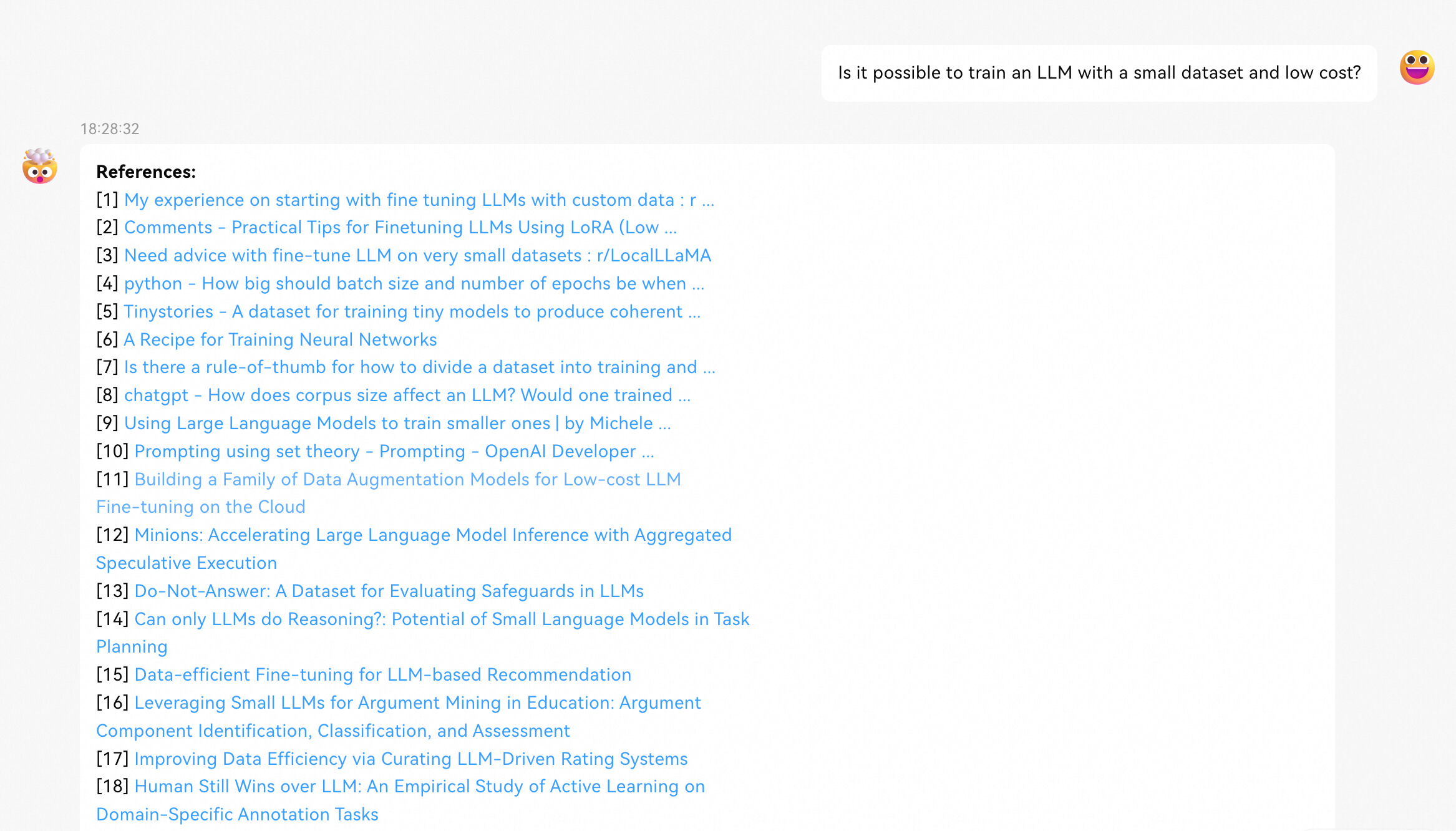

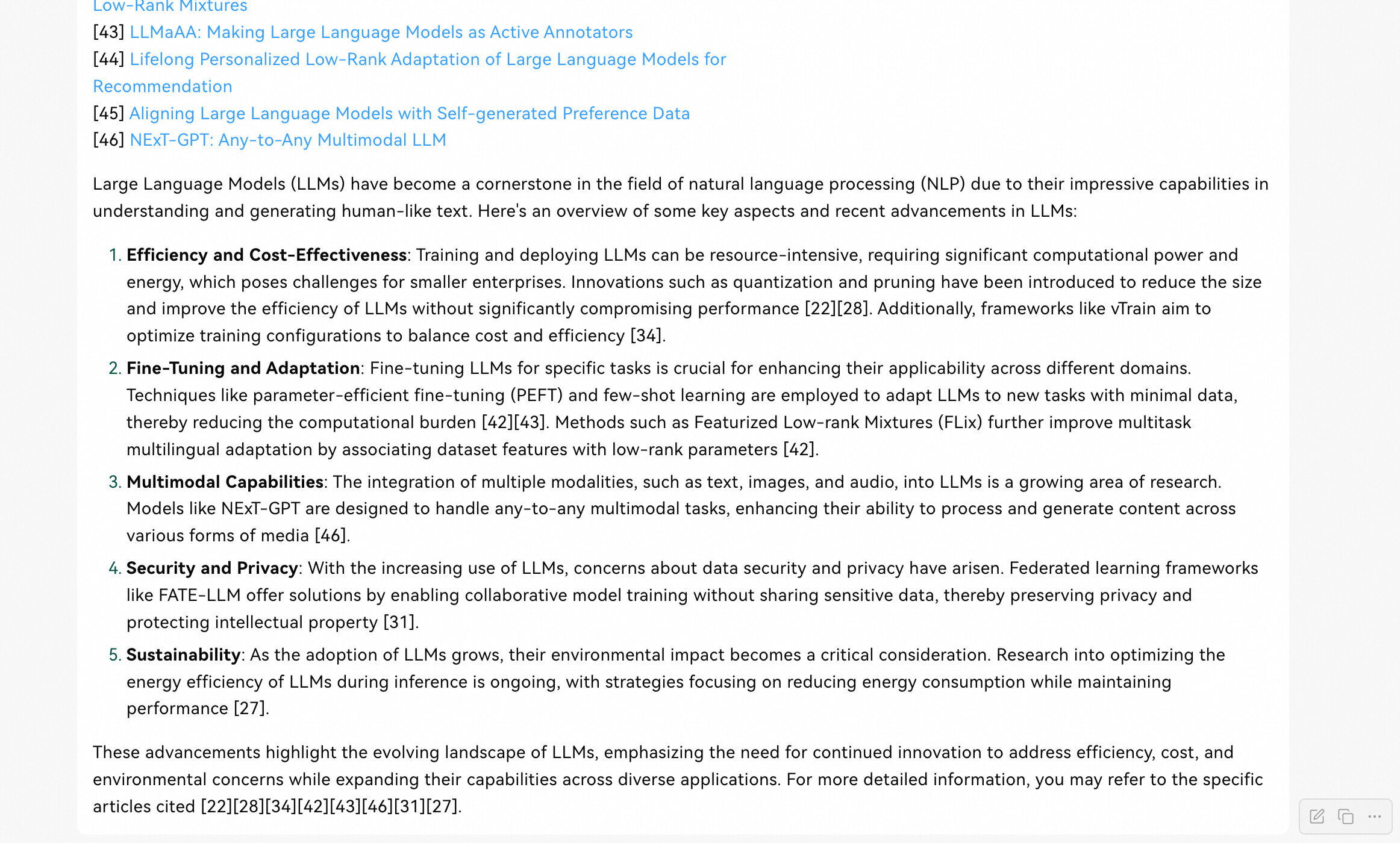

Financial information Q&A

Frontier technology exploration

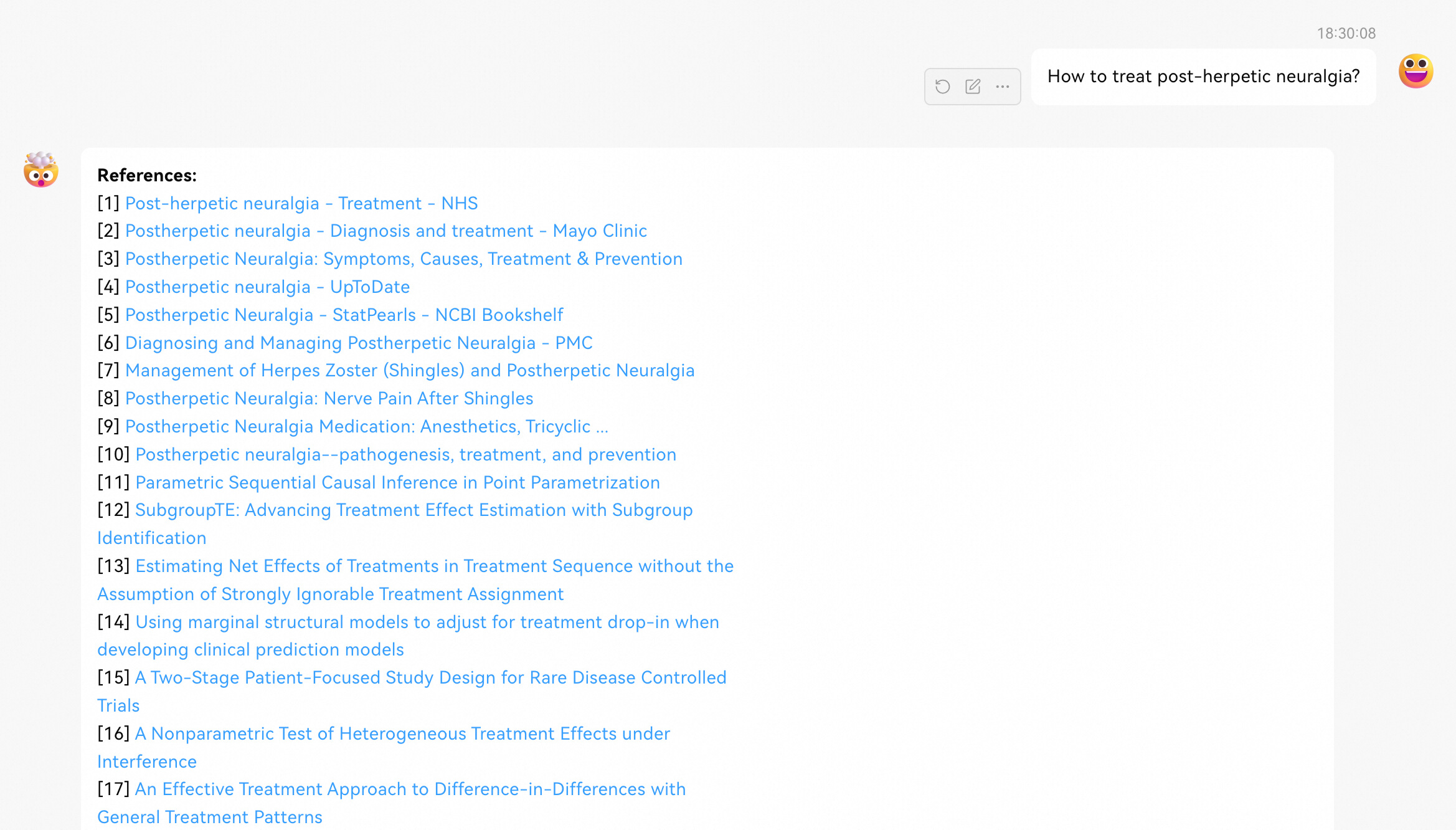

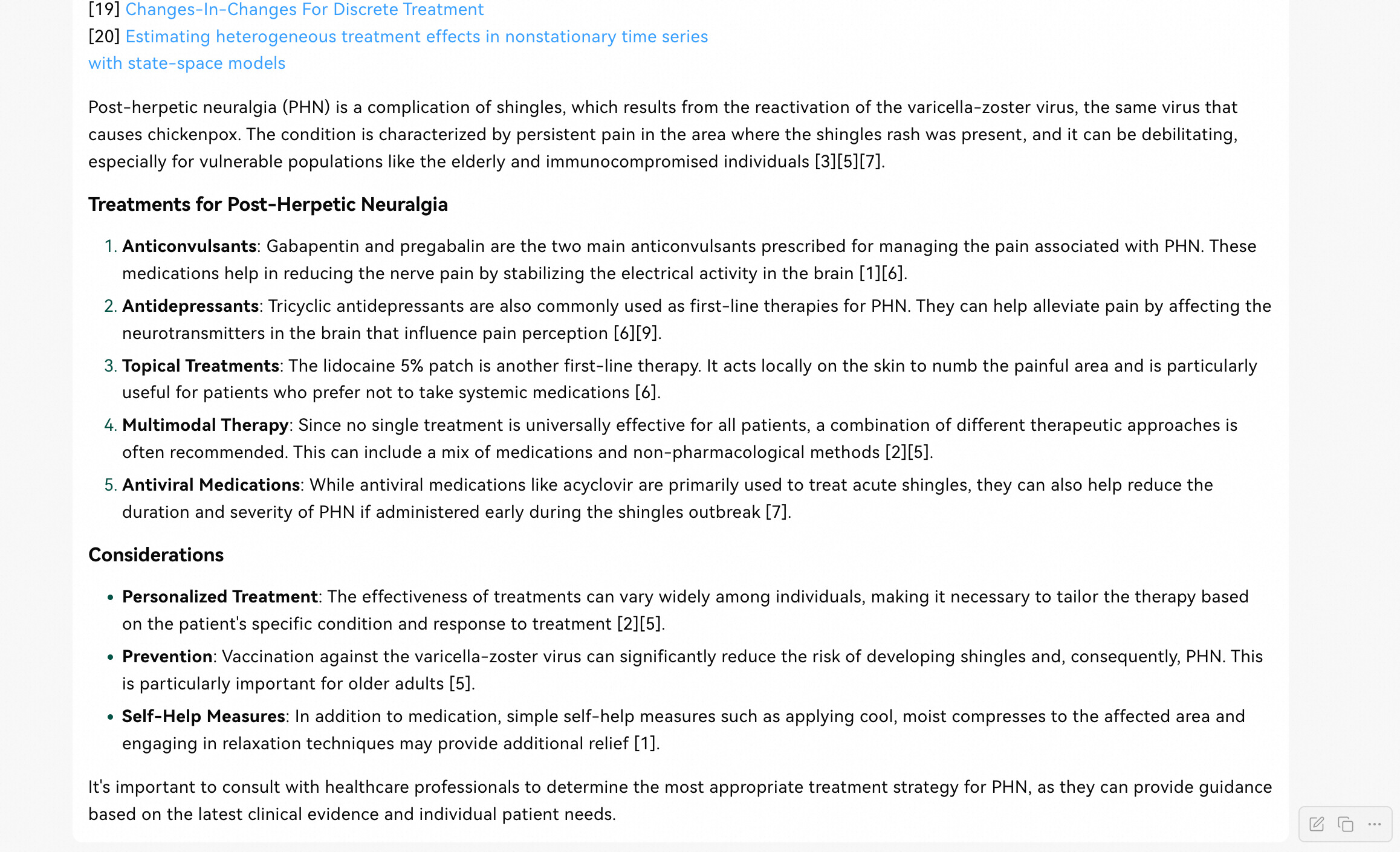

Medical question answering

```bash
# One command to install and start the Higress gateway
curl -sS https://higress.cn/ai-gateway/install.sh | bash

# Example deployment of DeepSeek-R1-Distill-Qwen-7B using vLLM
python3 -m vllm.entrypoints.openai.api_server \
  --model=deepseek-ai/DeepSeek-R1-Distill-Qwen-7B \
  --dtype=half \
  --tensor-parallel-size=4 \
  --enforce-eager
```

The Higress console is available at http://127.0.0.1:8001. In the console, add DNS-type service sources for the search engine APIs, using the following domains:

● google: customsearch.googleapis.com

● bing: api.bing.microsoft.com

● quark: iqs.cn-zhangjiakou.aliyuncs.com

● arxiv: export.arxiv.org
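Because each `searchFrom` entry in the plugin configuration points at one of these DNS service sources, a small pre-flight check can catch mismatches before deployment. This Python sketch is a hypothetical helper, not part of Higress. Its required-field table is inferred from the sample configuration that follows, and it assumes DNS service sources are referenced as `<name>.dns`, as in that sample.

```python
from typing import Dict, List, Tuple

# Search engine API domains from the list above, keyed by plugin `type`.
ENGINE_DOMAINS: Dict[str, str] = {
    "google": "customsearch.googleapis.com",
    "bing": "api.bing.microsoft.com",
    "quark": "iqs.cn-zhangjiakou.aliyuncs.com",
    "arxiv": "export.arxiv.org",
}

# Credential fields each engine uses in the sample config (Arxiv needs none).
REQUIRED_FIELDS: Dict[str, Tuple[str, ...]] = {
    "google": ("apiKey", "cx"),
    "bing": ("apiKey",),
    "quark": ("apiKey", "keySecret"),
    "arxiv": (),
}


def check_search_from(entries: List[dict]) -> List[str]:
    """Return problems found in parsed `searchFrom` entries (empty list if none)."""
    problems: List[str] = []
    for i, entry in enumerate(entries):
        engine = entry.get("type")
        if engine not in ENGINE_DOMAINS:
            problems.append(f"entry {i}: unknown engine type {engine!r}")
            continue
        # Assumption: DNS service sources are referenced as "<name>.dns".
        if not str(entry.get("serviceName", "")).endswith(".dns"):
            problems.append(
                f"entry {i} ({engine}): serviceName should reference a DNS "
                f"service source for {ENGINE_DOMAINS[engine]}"
            )
        for field in REQUIRED_FIELDS[engine]:
            if not entry.get(field):
                problems.append(f"entry {i} ({engine}): missing {field}")
    return problems
```

The entries would typically come from loading the plugin YAML, for example with PyYAML's `yaml.safe_load(text)["searchFrom"]`.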

Then configure the AI-search plugin as follows:

```yaml
promptTemplate: |
  # The following content is based on search results from the user-submitted query:
  {search_results}
  In the search results I provide, each result is formatted as [webpage X begin]...[webpage X end], where X is the index number of each article. Cite the context at the end of the relevant sentences where appropriate, using the citation format [X] in the corresponding parts of the answer. If a sentence is derived from multiple contexts, list all relevant citation numbers, such as [3][5]. Do not cluster the citations at the end; place them in the corresponding parts of the answer.
  When responding, please pay attention to the following:
  - Today’s date in Beijing time is: {cur_date}.
  - Not all content in the search results is closely related to the user's question. You need to discern and filter the search results based on the question.
  - For listing-type questions (e.g., listing all flight information), try to keep the answer within 10 points and tell the user they can check the search sources for complete information. Prioritize the most comprehensive and relevant items; unless necessary, do not volunteer information missing from the search results.
  - For creative questions (e.g., writing a paper), cite relevant references in the body paragraphs, such as [3][5], rather than only at the end of the article. Interpret and summarize the user's topic requirements, choose an appropriate format, make full use of the search results, extract the crucial information, and generate an answer that meets the user's requirements with depth, creativity, and professionalism. Make your response as long as possible: for each point, infer the user's intent and provide as many angles as possible, ensuring substantial information and detailed discussion.
  - If the response is lengthy, structure the summary into paragraphs. If responding with points, try to keep it within 5 points and consolidate related content.
  - For objective Q&A, if the answer is very short, you can add one or two related sentences to enrich the content.
  - Choose a suitable and aesthetically pleasing response format based on the user’s requirements and the answer content to ensure high readability.
  - Synthesize multiple relevant web pages in your answer rather than repeatedly quoting a single web page.
  - Unless the user requests otherwise, respond in the same language the question was asked in.
  # The user’s message is:
  {question}
needReference: true
searchFrom:
- type: quark
  apiKey: "your-aliyun-ak"
  keySecret: "your-aliyun-sk"
  serviceName: "aliyun-svc.dns"
  servicePort: 443
- type: google
  apiKey: "your-google-api-key"
  cx: "search-engine-id"
  serviceName: "google-svc.dns"
  servicePort: 443
- type: bing
  apiKey: "bing-key"
  serviceName: "bing-svc.dns"
  servicePort: 443
- type: arxiv
  serviceName: "arxiv-svc.dns"
  servicePort: 443
searchRewrite:
  llmServiceName: "llm-svc.dns"
  llmServicePort: 443
  llmApiKey: "your-llm-api-key"
  llmUrl: "https://api.example.com/v1/chat/completions"
  llmModelName: "deepseek-chat"
  timeoutMillisecond: 15000
```

With the OpenAI-compatible base URL http://127.0.0.1:8080/v1, you can chat through ChatBox, LobeChat, or any other tool that supports the OpenAI protocol.
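To see roughly what the model receives, the following Python sketch fills the `promptTemplate` placeholders (`{search_results}`, `{cur_date}`, `{question}`) in the way the template text describes. It is an illustration, not the plugin's Go code: how titles and contents are laid out inside each `[webpage X begin]...[webpage X end]` block, and the `YYYY-MM-DD` date format, are assumptions.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple

# Beijing time is UTC+8 and has no daylight saving time.
BEIJING = timezone(timedelta(hours=8))
_PLACEHOLDER = re.compile(r"\{(search_results|cur_date|question)\}")


def format_results(results: List[Tuple[str, str]]) -> str:
    """Number (title, content) pairs with [webpage X begin]...[webpage X end] markers."""
    return "\n".join(
        f"[webpage {i} begin]{title}\n{content}[webpage {i} end]"
        for i, (title, content) in enumerate(results, start=1)
    )


def render_prompt(template: str, results: List[Tuple[str, str]], question: str,
                  now: Optional[datetime] = None) -> str:
    """Fill the three promptTemplate placeholders in a single regex pass.

    One pass means text inserted for one placeholder (e.g. a search result
    that happens to contain "{question}") is never substituted again.
    """
    now = now or datetime.now(BEIJING)
    values = {
        "search_results": format_results(results),
        "cur_date": now.astimezone(BEIJING).strftime("%Y-%m-%d"),  # assumed format
        "question": question,
    }
    # A function replacement also avoids backslash-escape processing in the values.
    return _PLACEHOLDER.sub(lambda m: values[m.group(1)], template)
```

Numbering the results is what makes the `[X]` citations in the answer traceable back to individual sources.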

Additionally, you can integrate directly using OpenAI's SDK, as illustrated below:

```python
from openai import OpenAI

# Point the official OpenAI SDK at the Higress gateway instead of api.openai.com.
client = OpenAI(
    api_key="none",  # placeholder: the SDK requires a value even if the gateway ignores it
    base_url="http://localhost:8080/v1",
)

completion = client.chat.completions.create(
    model="deepseek-r1",  # should match a model name the gateway routes to your backend
    messages=[
        {"role": "user", "content": "Analyze the trend of international gold prices."}
    ],
    stream=False,
)

print(completion.choices[0].message.content)
```

By combining Higress and DeepSeek, enterprises can go from zero to a production-grade intelligent Q&A system within 24 hours, turning LLMs into a genuine engine of business growth.
