By Geng Jiangtao.

Spark on MaxCompute differs from Spark's native architecture. In this post, we show you how to set up Spark on MaxCompute in three environments: on an Alibaba Cloud ECS server, in DataWorks, and in a local IntelliJ IDEA test environment.

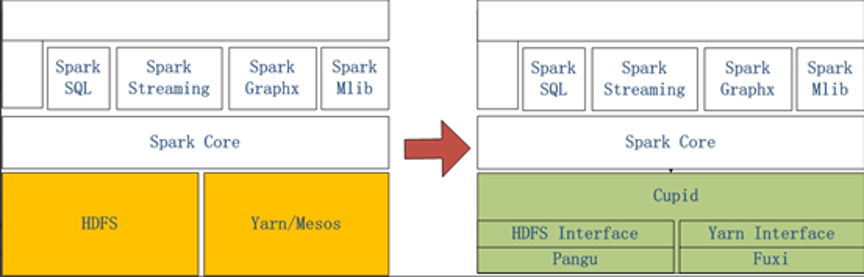

The underlying architecture of Spark on MaxCompute differs from that of native Spark, but it still fully supports native Spark. Consider the two architecture diagrams.

The diagram on the left shows the native Spark architecture, and the one on the right shows the architecture of Spark on MaxCompute, which runs on the Cupid platform. This architecture lets MaxCompute provide Spark computing services, with the Spark computing framework running on MaxCompute's unified system of computing resources and dataset permissions.

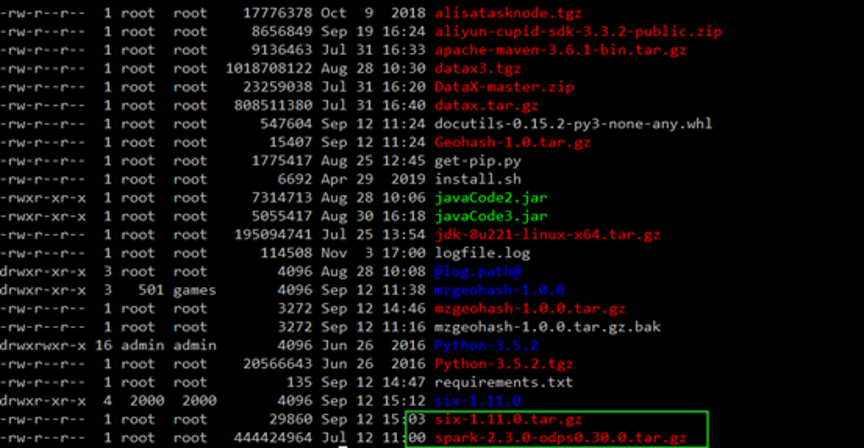

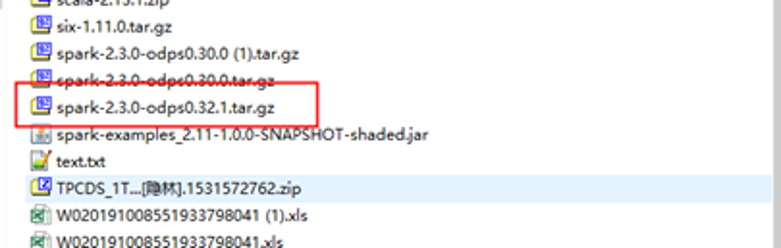

To set up Spark on MaxCompute on an ECS server, first download the client to your local device. You can download it from this link. After downloading it, upload the file to your Alibaba Cloud ECS server.

Next, decompress the file:

```bash
tar -zxvf spark-2.3.0-odps0.30.0.tar.gz
```

Then find spark-defaults.conf in the extracted files and configure it:

```
# spark-defaults.conf

# In general, you only need to add your MaxCompute account information to the default template to use Spark
spark.hadoop.odps.project.name =
spark.hadoop.odps.access.id =
spark.hadoop.odps.access.key =

# The other settings can usually keep their default values
spark.hadoop.odps.end.point = http://service.cn.maxcompute.aliyun.com/api
spark.hadoop.odps.runtime.end.point = http://service.cn.maxcompute.aliyun-inc.com/api
spark.sql.catalogImplementation=odps
spark.hadoop.odps.task.major.version = cupid_v2
spark.hadoop.odps.cupid.container.image.enable = true
spark.hadoop.odps.cupid.container.vm.engine.type = hyper
```

Next, download the corresponding code from GitHub, which you can find here. Upload the code to your ECS server and decompress it:

```bash
unzip MaxCompute-Spark-master.zip
```

Next, compile the code and package it into a JAR. Before you do this, make sure that Maven is installed.
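To confirm that Maven, and the JDK it depends on, are installed on the ECS server, you can check that both commands are on your PATH. The helper below is a minimal sketch, not part of the MaxCompute client; the name `require_tools` is made up for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report whether each named command is on PATH.
# Returns 0 if all are found, 1 if any is missing.
require_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Maven builds the examples, and Maven needs a JDK, so check for both.
require_tools java mvn || echo "Install the missing tools before running mvn clean package."
```

You can also run `mvn -v`, which prints the Maven version together with the Java version Maven is using.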

```bash
cd MaxCompute-Spark-master/spark-2.x
mvn clean package
```

Now check that the build succeeded by submitting the resulting JAR package and viewing the job's output.
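By default, spark-submit reads configuration options from spark-defaults.conf, so a blank account property left over from the template is an easy mistake to make before this first submission. The sketch below is a hypothetical helper (`check_odps_conf` is not part of the MaxCompute client) that assumes the `key = value` layout shown in the template and reports which of the three account properties are still empty.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the MaxCompute client): print each required
# MaxCompute account property that is missing or blank in a spark-defaults.conf
# file. Assumes "key = value" lines as in the template.
# Returns 1 if any property is unset, 0 otherwise.
check_odps_conf() {
  local conf="$1" status=0 key line value
  for key in spark.hadoop.odps.project.name \
             spark.hadoop.odps.access.id \
             spark.hadoop.odps.access.key; do
    # First line that starts with the key (commented-out lines do not match).
    line=$(grep -E "^[[:space:]]*${key}[[:space:]]*=" "$conf" 2>/dev/null | head -n 1) || true
    # Keep the text after the first "=" and drop whitespace, so "key =" counts as blank.
    value=$(printf '%s' "${line#*=}" | tr -d '[:space:]')
    if [ -z "$value" ]; then
      echo "not set: $key"
      status=1
    fi
  done
  return "$status"
}
```

For example, assuming the file sits in the client's conf directory, `check_odps_conf conf/spark-defaults.conf` prints nothing and returns 0 once all three properties have values.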

bin/spark-submit --master yarn-cluster --class com.aliyun.odps.spark.examples.SparkPi \

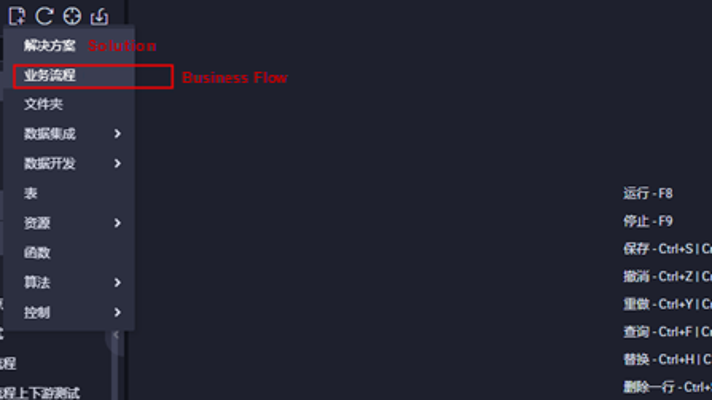

MaxCompute-Spark-master/spark-2.x/target/spark-examples_2.11-1.0.0-SNAPSHOT-shaded.jarLog on to the DataWorks console and click Business Flow.

Open a business flow and create an ODPS Spark node.

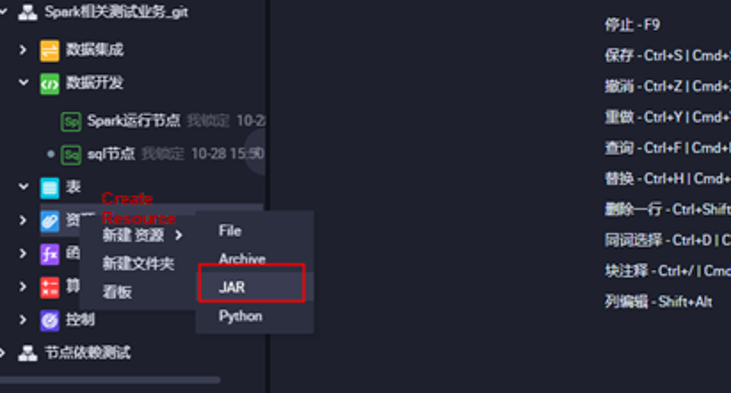

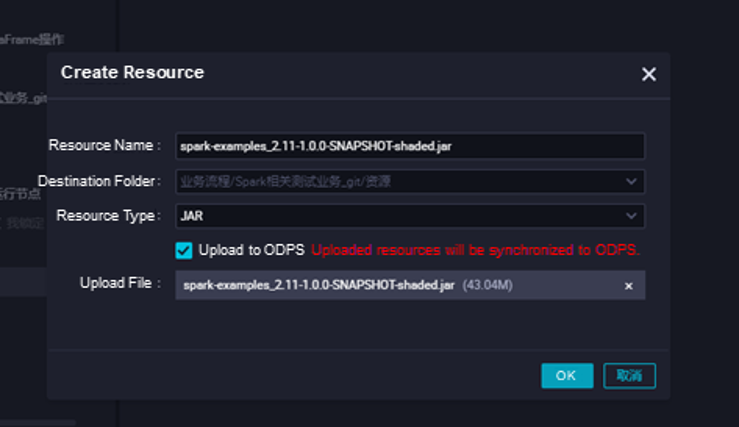

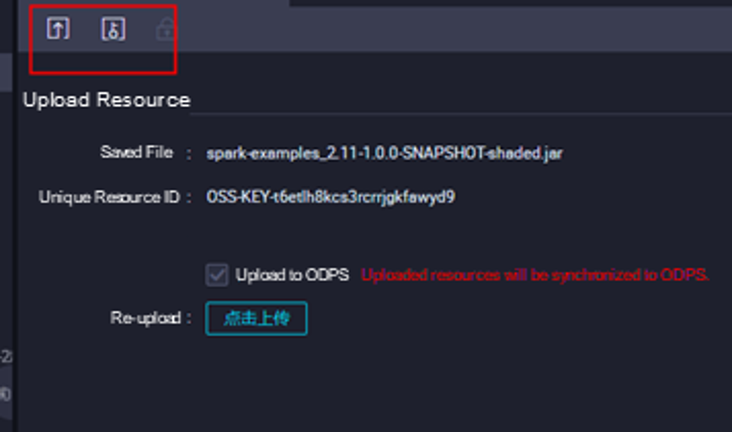

Upload the JAR package as a resource: select the JAR package to upload and submit it.

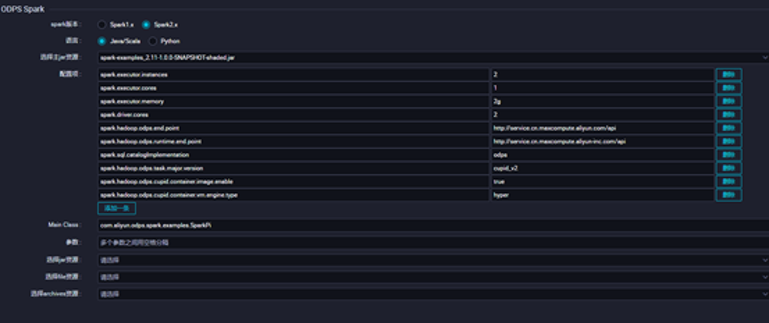

Configure the ODPS Spark node, then save and submit the configuration. Click Run to view its running status.

To set up a local test environment in IDEA, download and decompress the client and the template code. You can find the client here.

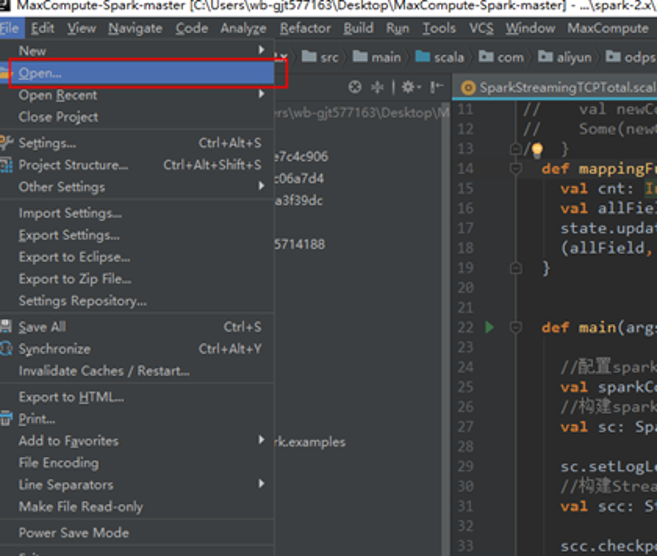

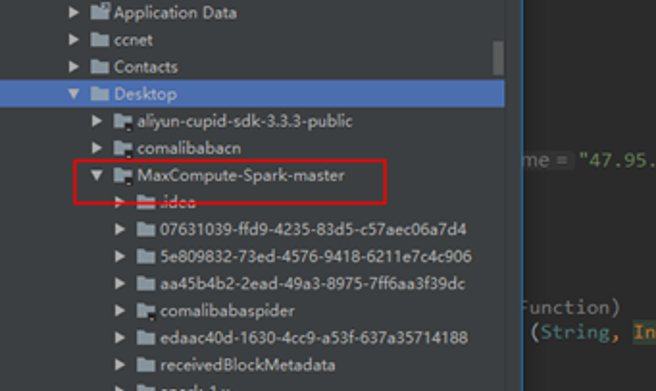

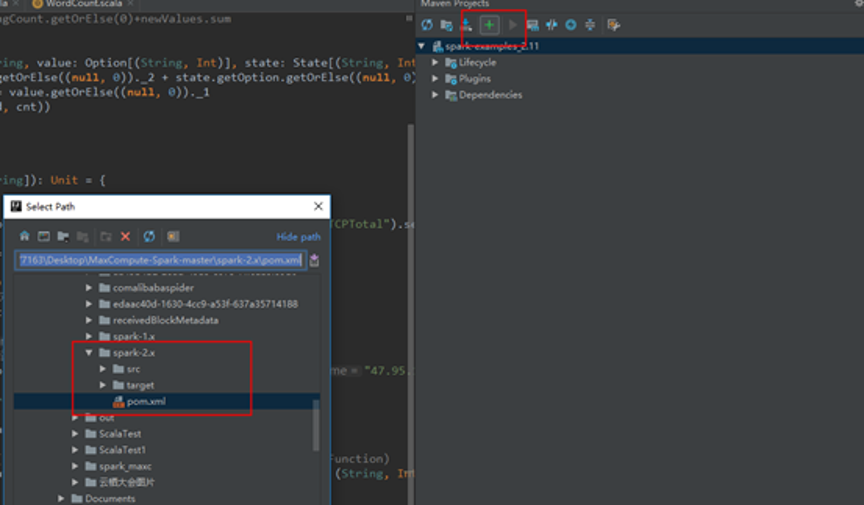

You'll also need the template code, which you can find on GitHub. After you've downloaded the code, open IDEA, choose File > Open…, and select the template code.

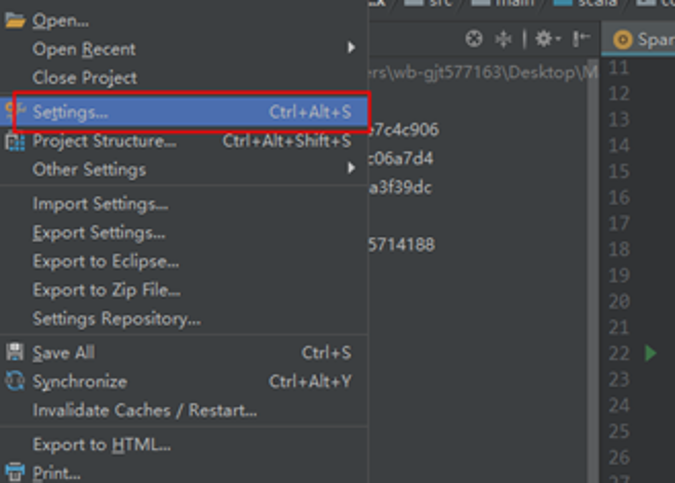

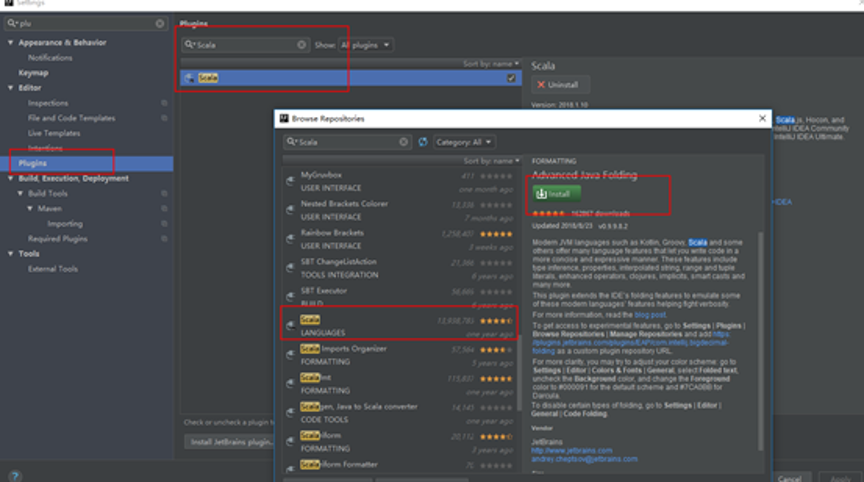

Install the Scala plugin.

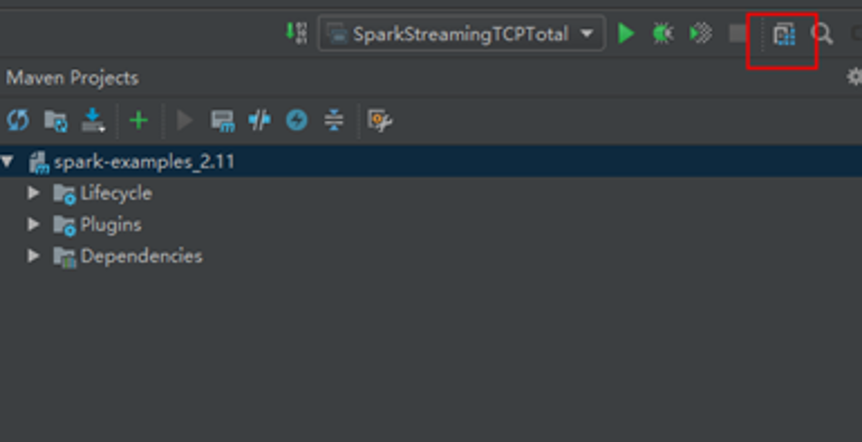

Configure Maven.

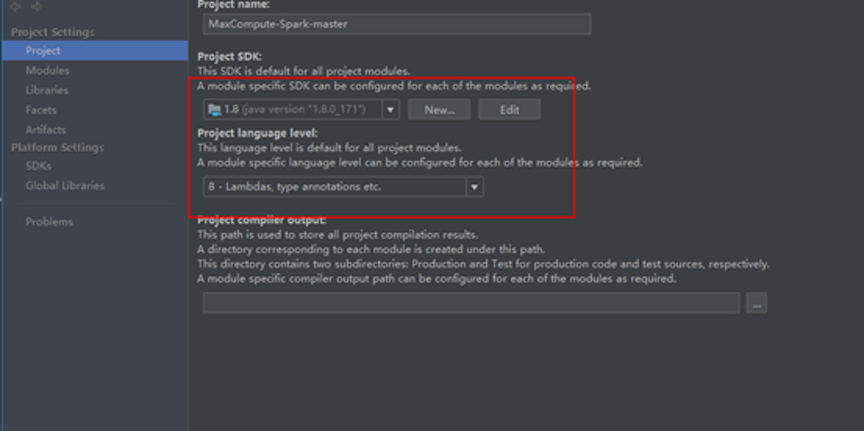

Finally, configure the JDK and related dependencies.
