By Aaron Handoko and M Rifandy Zulvan, Solution Architects Alibaba Cloud Indonesia

In this article, we will discuss how to quickly set up an ODPS (MaxCompute) Spark environment using a Docker image, and how to run PySpark jobs on ODPS from the command line.

Prerequisites

1). An active Alibaba Cloud account

2). A MaxCompute project that has already been created

3). Familiarity with Spark

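Setting Up the Environment

1). Create a new Dockerfile and paste the following content into it. The resulting image installs everything needed to run Spark on MaxCompute from Linux: JDK 8, Maven, the MaxCompute Spark client, Python 3.7, and the MaxCompute-Spark sample code.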

FROM centos:7.6.1810

# Install JDK 8
RUN yum install -y java-1.8.0-openjdk-devel.x86_64

RUN set -ex \
    && yum install wget -y \
    && yum install git -y

# Install Maven (older releases are served from archive.apache.org, not dlcdn.apache.org)
RUN set -ex \
    && wget https://archive.apache.org/dist/maven/maven-3/3.9.6/binaries/apache-maven-3.9.6-bin.tar.gz --no-check-certificate \
    && tar -zxvf apache-maven-3.9.6-bin.tar.gz

# Install the Spark MaxCompute client
RUN set -ex \
    && wget https://maxcompute-repo.oss-cn-hangzhou.aliyuncs.com/spark/2.4.5-odps0.33.2/spark-2.4.5-odps0.33.2.tar.gz \
    && tar -xzvf spark-2.4.5-odps0.33.2.tar.gz

# Build and install Python 3.7 from source
RUN set -ex \
    # Preinstall the required build components.
    && yum install -y wget tar libffi-devel zlib-devel bzip2-devel openssl-devel ncurses-devel sqlite-devel readline-devel tk-devel gcc make initscripts zip \
    && wget https://www.python.org/ftp/python/3.7.0/Python-3.7.0.tgz \
    && tar -zxvf Python-3.7.0.tgz \
    && cd Python-3.7.0 \
    && ./configure --prefix=/usr/local/python3 \
    && make \
    && make install \
    && make clean \
    && rm -rf /Python-3.7.0* \
    && yum install -y epel-release \
    && yum install -y python-pip

# Set the default Python version to Python 3
RUN set -ex \
    # Back up the Python 2.7 executables.
    && mv /usr/bin/python /usr/bin/python27 \
    && mv /usr/bin/pip /usr/bin/pip-python27 \
    # Point python and pip at Python 3.7.
    && ln -s /usr/local/python3/bin/python3.7 /usr/bin/python \
    && ln -s /usr/local/python3/bin/pip3 /usr/bin/pip

# yum only works with Python 2, so point its scripts back at Python 2.7
RUN set -ex \
    && sed -i "s#/usr/bin/python#/usr/bin/python27#" /usr/bin/yum \
    && sed -i "s#/usr/bin/python#/usr/bin/python27#" /usr/libexec/urlgrabber-ext-down \
    && yum install -y deltarpm

RUN pip install --upgrade pip

# Version-independent JDK symlink, so the path does not depend on the exact OpenJDK build yum installed
ENV JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk
ENV CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
ENV PATH=$JAVA_HOME/bin:$PATH
ENV SPARK_HOME=/spark-2.4.5-odps0.33.2
ENV PATH=$SPARK_HOME/bin:$PATH
ENV MAVEN_HOME=/apache-maven-3.9.6
ENV PATH=$MAVEN_HOME/bin:$PATH
ENV HADOOP_CONF_DIR=$SPARK_HOME/conf

WORKDIR /spark-job

# Clone the MaxCompute-Spark samples into /spark-job and build the Spark 2.x examples
RUN git clone https://github.com/aliyun/MaxCompute-Spark.git . \
    && cd ./spark-2.x \
    && mvn clean package

2). Run the following commands to build the image from the Dockerfile, then start a container from it and open a bash shell inside:

docker build -t spark-odps .

docker run -it spark-odps bash

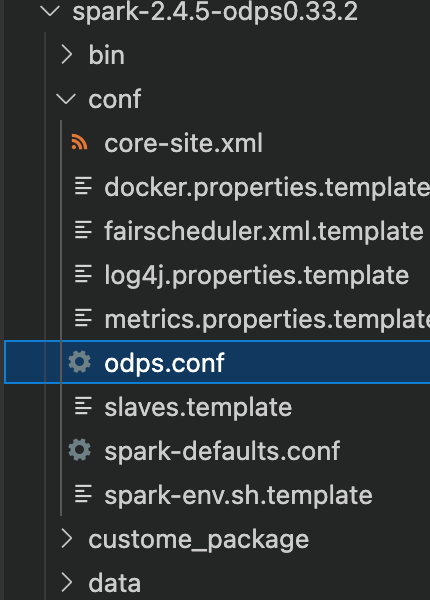
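Once the shell is open, it can be worth confirming that the ENV and PATH settings from the Dockerfile took effect before going further. The following Python script is a minimal sketch that is not part of the original setup; the tool and variable lists are assumptions drawn from the Dockerfile, and the file name is up to you (for example check_env.py, run with python inside the container).

```python
import os
import shutil

# Commands the Dockerfile is expected to put on PATH (assumed list).
REQUIRED_TOOLS = ["java", "mvn", "spark-submit", "git", "python"]

# Variables the Dockerfile sets with ENV (assumed list).
REQUIRED_VARS = ["JAVA_HOME", "SPARK_HOME", "MAVEN_HOME", "HADOOP_CONF_DIR"]


def check_environment(which=shutil.which, env=None):
    """Return (missing_tools, missing_vars) for the current environment.

    `which` and `env` can be replaced with fakes, which makes the check testable.
    """
    env = os.environ if env is None else env
    missing_tools = [tool for tool in REQUIRED_TOOLS if which(tool) is None]
    missing_vars = [name for name in REQUIRED_VARS if not env.get(name)]
    return missing_tools, missing_vars


def report(which=shutil.which, env=None):
    """Build a human-readable summary of the check."""
    missing_tools, missing_vars = check_environment(which, env)
    if not missing_tools and not missing_vars:
        return "Environment looks ready."
    lines = []
    if missing_tools:
        lines.append("Not on PATH: " + ", ".join(missing_tools))
    if missing_vars:
        lines.append("Unset variables: " + ", ".join(missing_vars))
    return "\n".join(lines)


if __name__ == "__main__":
    print(report())
```

The lookup function and environment are parameters only so the check can be exercised without a real container; in normal use you call report() with no arguments.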

3). Edit the Spark configuration file

cd $SPARK_HOME

vim conf/spark-defaults.conf

Below is an example of the configuration file:

spark.hadoop.odps.project.name = <YOUR MAXCOMPUTE PROJECT>

spark.hadoop.odps.access.id = <YOUR ACCESSKEY ID>

spark.hadoop.odps.access.key = <YOUR ACCESSKEY SECRET>

spark.hadoop.odps.end.point = <YOUR MAXCOMPUTE ENDPOINT>

# For Spark 2.3.0, set spark.sql.catalogImplementation to odps. For Spark 2.4.5, set spark.sql.catalogImplementation to hive.

spark.sql.catalogImplementation=hive

spark.sql.sources.default=hive

# Retain the following configurations:

spark.hadoop.odps.task.major.version = cupid_v2

spark.hadoop.odps.cupid.container.image.enable = true

spark.hadoop.odps.cupid.container.vm.engine.type = hyper

spark.hadoop.odps.cupid.webproxy.endpoint = http://service.cn.maxcompute.aliyun-inc.com/api

spark.hadoop.odps.moye.trackurl.host = http://jobview.odps.aliyun.com

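# Resources in the form <project name>.<resource name>; use your own project instead of the author's
spark.hadoop.odps.cupid.resources = <YOUR MAXCOMPUTE PROJECT>.hadoop-fs-oss-shaded.jar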

# Accessing OSS

spark.hadoop.fs.oss.accessKeyId = <YOUR ACCESSKEY ID>

spark.hadoop.fs.oss.accessKeySecret = <YOUR ACCESSKEY SECRET>

spark.hadoop.fs.oss.endpoint = <OSS ENDPOINT>

4). Create an odps.conf file in the conf folder of the Spark client ($SPARK_HOME/conf)

The odps.conf file requires the following settings:

odps.project.name = <MAXCOMPUTE PROJECT NAME>

odps.access.id = <ACCESSKEY ID>

odps.access.key = <ACCESSKEY SECRET>

odps.end.point = <MAXCOMPUTE ENDPOINT>
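Each odps.conf key is the matching spark.hadoop.odps.* key from spark-defaults.conf with the spark.hadoop. prefix removed, so you could generate odps.conf from the file edited in step 3 instead of typing the credentials twice. The Python below is a minimal sketch of that idea, not a tool shipped with the MaxCompute Spark client; the function names are hypothetical, and it assumes the simple key = value layout used in this article (spark-defaults.conf also accepts whitespace-separated keys and values, which this parser does not handle).

```python
from pathlib import Path

# Keys that odps.conf needs, as listed in this article.
ODPS_KEYS = ["odps.project.name", "odps.access.id", "odps.access.key", "odps.end.point"]
SPARK_PREFIX = "spark.hadoop."


def parse_conf(text):
    """Parse 'key = value' lines, skipping blank lines and # comments."""
    conf = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:  # lines without '=' are ignored by this sketch
            conf[key.strip()] = value.strip()
    return conf


def to_odps_conf(spark_defaults_text):
    """Return odps.conf content derived from spark-defaults.conf content."""
    conf = parse_conf(spark_defaults_text)
    missing = [SPARK_PREFIX + key for key in ODPS_KEYS if SPARK_PREFIX + key not in conf]
    if missing:
        raise ValueError("spark-defaults.conf is missing: " + ", ".join(missing))
    return "".join(f"{key} = {conf[SPARK_PREFIX + key]}\n" for key in ODPS_KEYS)


def write_odps_conf(conf_dir):
    """Read <conf_dir>/spark-defaults.conf and write <conf_dir>/odps.conf next to it."""
    conf_dir = Path(conf_dir)
    text = (conf_dir / "spark-defaults.conf").read_text()
    (conf_dir / "odps.conf").write_text(to_odps_conf(text))
```

Inside the container you would call write_odps_conf with the path $SPARK_HOME/conf. Failing loudly on a missing key is deliberate: a half-written odps.conf tends to surface later as a less obvious authentication error.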

5). You can run jobs in one of two modes: local mode and cluster mode. Both are launched from the Spark home directory:

cd $SPARK_HOME

Running in Local Mode (local engine)

a). Run the following command:

./bin/spark-submit --master local[4] /spark-job/spark-2.x/src/main/python/spark_sql.py
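To try local mode with your own job instead of the sample, the script below is a minimal PySpark sketch of that kind of job. It is not the contents of spark_sql.py from the MaxCompute-Spark repository; the table name spark_sql_sketch and the statements are hypothetical, and it assumes the spark.sql.catalogImplementation=hive setting from step 3 so that spark.sql resolves tables in your MaxCompute project. It drops and recreates that table, so pick a name that does not clash with existing data.

```python
import importlib.util

# Hypothetical table name; the job drops and recreates it.
TABLE = "spark_sql_sketch"


def build_statements(table):
    """Return the Spark SQL statements the job runs, in order."""
    return [
        f"DROP TABLE IF EXISTS {table}",
        f"CREATE TABLE {table} (name STRING, num BIGINT)",
        f"INSERT INTO {table} VALUES ('alice', 1), ('bob', 2)",
        f"SELECT name, num FROM {table} ORDER BY num",
    ]


def main():
    # Imported lazily so build_statements can be used without PySpark installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("spark_sql_sketch").getOrCreate()
    try:
        *setup, query = build_statements(TABLE)
        for statement in setup:
            spark.sql(statement)
        spark.sql(query).show()
    finally:
        spark.stop()


if __name__ == "__main__":
    # spark-submit makes pyspark importable; a plain `python` run may not.
    if importlib.util.find_spec("pyspark") is None:
        print("pyspark is not importable; launch this file with spark-submit")
    else:
        main()
```

You would submit it the same way as the sample, replacing the sample's path with wherever you saved the file.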

Running in Cluster Mode (ODPS engine)

a). Run the following command to set the HADOOP_CONF_DIR environment variable (the Dockerfile already sets it with ENV, so inside the container this simply confirms the value):

export HADOOP_CONF_DIR=$SPARK_HOME/conf

b). Run the following command to submit the job in cluster mode:

bin/spark-submit --master yarn-cluster /spark-job/spark-2.x/src/main/python/spark_sql.py
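If you submit jobs from a script rather than by hand, the two spark-submit invocations above can be wrapped in a small helper. This is a minimal Python sketch, assuming the SPARK_HOME layout from the Dockerfile; the function names are hypothetical. Passing the arguments as a list to subprocess.run means no shell is involved, so local[4] can never be expanded as a glob pattern.

```python
import os
import subprocess


def spark_submit_cmd(script, mode="local", cores=4, spark_home=None):
    """Build the spark-submit argument list for local or cluster mode."""
    if spark_home is None:
        spark_home = os.environ.get("SPARK_HOME", "")
    submit_bin = os.path.join(spark_home, "bin", "spark-submit")
    if mode == "local":
        master = f"local[{cores}]"  # run Spark inside the container with `cores` threads
    elif mode == "cluster":
        master = "yarn-cluster"  # submit to MaxCompute, as in the command above
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return [submit_bin, "--master", master, script]


def submit(script, mode="local", **kwargs):
    """Run spark-submit; raises CalledProcessError if it exits non-zero."""
    env = dict(os.environ)
    # Same default as the Dockerfile's ENV HADOOP_CONF_DIR=$SPARK_HOME/conf.
    env.setdefault("HADOOP_CONF_DIR", os.path.join(env.get("SPARK_HOME", ""), "conf"))
    return subprocess.run(spark_submit_cmd(script, mode, **kwargs), env=env, check=True)
```

For example, submit("/spark-job/spark-2.x/src/main/python/spark_sql.py", mode="cluster") would run the same command as step b).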
