By Haiqing

MaxCompute (formerly known as ODPS) is a leading distributed big data processing platform developed by Alibaba Cloud. It is widely utilized, especially within the Alibaba Group, and supports the core businesses of multiple business units (BUs). ODPS V2.0, continuously optimizing performance, aims to enhance the user experience and expression capabilities of SQL, as well as improve the productivity of ODPS developers.

Building upon the SQL engine of ODPS V2.0, MaxCompute simplifies SQL compilation and enhances the language's expressiveness. We present a series of articles titled Unleash the Power of MaxCompute to explore the capabilities of MaxCompute (ODPS V2.0).

The flexibility of MaxCompute self-defined functions in terms of parameters and return values is often mentioned as a limitation in the data development process. Unlike Hive's GenericUDF method, which allows users to determine the return value type based on the parameter type by calling user code, MaxCompute does not support this approach due to performance and security considerations. However, MaxCompute provides several alternative methods that allow you to customize functions flexibly.

• Scenario 1: If you need to implement a UDF (User-defined Function) that can accept input of any type, you will need to write an evaluate function for each type, as MaxCompute UDFs do not support generics.

• Scenario 2: UDAFs and UDTFs in MaxCompute use the @Resolve annotation to specify input and output types, and they cannot be overloaded. To implement a custom function that accepts multiple types, you will need to define different functions.

• Scenario 3: MaxCompute supports parameterized views, which allow common SQL statements to be encapsulated. However, the table-valued parameters of a parameterized view require the input table to have the exact number and type of columns as defined when creating the view. It is not possible to define a view that can accept different tables with similar characteristics.

This article explores the improvements made by MaxCompute to address these concerns.

A parameterized view is a type of view specifically designed by MaxCompute. It allows users to define parameters, greatly increasing code reusability. Many users utilize this feature to extract common SQL statements into views, forming a pool of reusable SQL code.

Parameterized views have certain limitations during the declaration process. The parameter type and length are fixed. In particular, table-valued parameters can be passed into parameterized views. These table-valued parameters require the formal parameter to be consistent with the actual parameter in terms of the number and type of columns. This limitation restricts many usage scenarios. Here's an example:

CREATE VIEW paramed_view (@a TABLE(key bigint)) AS SELECT @a.* FROM @a JOIN dim on a.key = dim.key;In this example, a section of logic is encapsulated that uses the "dim" table to filter input tables. Originally, this logic was designed to be generic, allowing any table containing the "key" column to be used as an input table. However, due to the limitations of view definition, the declared parameter type only includes the "key" column. As a result, the table parameter passed by the caller of the view must have only one column, and the returned data set also contains only one column. This deviates from the original intention of the view.

The latest version of MaxCompute has introduced improvements to parameterized views, enhancing their flexibility in definition.

Firstly, parameterized views now support the use of the ANY keyword, which represents any type. For example:

CREATE VIEW paramed_view (@a ANY) AS SELECT * FROM src WHERE case when @a is null then key1 else key2 end = key3;In this view, the first parameter can accept any type. However, please note that the ANY type cannot be used in operations that require explicit types, such as + or AND. The ANY type is commonly used in TABLE parameters as a PassThrough column. For example:

CREATE VIEW paramed_view (@a TABLE(name STRING, id ANY, age BIGINT)) AS SELECT * FROM @a WHRER name = 'foo' and age < 25;

-- Call example

SELECT * FROM param_view((SELECT name, id, age from students));In the above view, a table-valued parameter is accepted, while not considering the second column of the table. Therefore, this column can be defined as the ANY type. Each time a parameterized view is called, the return value type is recalculated based on the actual type of the input parameter. For instance, in the mentioned view, if the input table is TABLE(c1 STRING, c2 DOUBLE, c3 BIGINT), the second column of the output dataset will be automatically converted to DOUBLE type, allowing the caller of the view to perform operations on this column using any available DOUBLE type operation.

It is important to note that after creating a view using CREATE VIEW, the DESC command can be used to obtain the view's description, which includes information about the return type of the view. However, since the return type is recalculated during the view's invocation, the recalculated type may differ from the initial type calculated during view creation. This is particularly applicable to the ANY type.

Aside from the ANY type, table-valued parameters in parameterized views also support the use of *, which represents any number of columns. The * can have a specified type or use the ANY type. For example:

CREATE VIEW paramed_view (@a TABLE(key STRING, * ANY), @b TABLE(key STRING, * STRING)) AS SELECT a.* FROM @a JOIN @b ON a.key = b.key;

-- Call example

SELECT name, address FROM param_view((SELECT school, name, age, address FROM student), school) WHERE age < 20;The above view accepts two table-valued parameters. The first parameter has a string type for its first column, followed by any number of columns of any type. The second parameter has a string type for its first column, followed by any number of columns of the STRING type. There are a few points to note here:

• The variable-length part must be written at the end of the table-valued parameter definition. That is, no other columns are allowed after the *. This also indirectly results in a table-valued parameter having at most one variable-length column list.

• Since the variable-length part needs to be at the end, the columns of the input table may not necessarily be arranged in this order. In such cases, the columns of the input table need to be rearranged. A subquery can be used as a parameter (as shown in the example above), and the subquery needs to be enclosed in parentheses.

• The variable-length part of a table-valued parameter does not have a name, so you cannot reference or operate on this data during the view definition. This limitation is intentional. To operate on data within the variable-length part, you must declare the column in the fixed-length portion, and the compiler will check the parameters passed during the call.

• Although operations cannot be performed on the variable-length part, the SELECT * wildcard can still output the variable-length columns. For example, in the paramed_view above, all columns from @a are returned, including name, age, and address, which the compiler adds when the view is called, even though only the key column is present when the view is created. The view caller can then operate on these columns (such as using WHERE age < 20).

• The columns in the table specified by the TABLE parameter might not exactly match the fixed-length columns specified in the view. If the names differ, the compiler will rename them automatically; if the types differ, the compiler will attempt an implicit conversion (and will report an error if it's not possible).

The fourth point is particularly valuable as it ensures the flexibility of input parameters when calling the view, without reducing the information in the data. Using this wisely can significantly increase the reusability of common code.

The following is a call example. The view used in the example is:

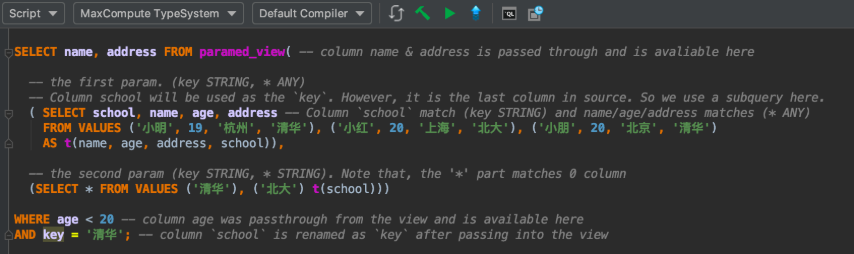

CREATE VIEW paramed_view (@a TABLE(key STRING, * ANY), @b TABLE(key STRING, * STRING)) AS SELECT a.* FROM @a JOIN @b ON a.key = b.key; If you call views in MaxCompute Studio, you can enjoy features such as syntax highlighting and error prompts. The call code of the execution is as follows:

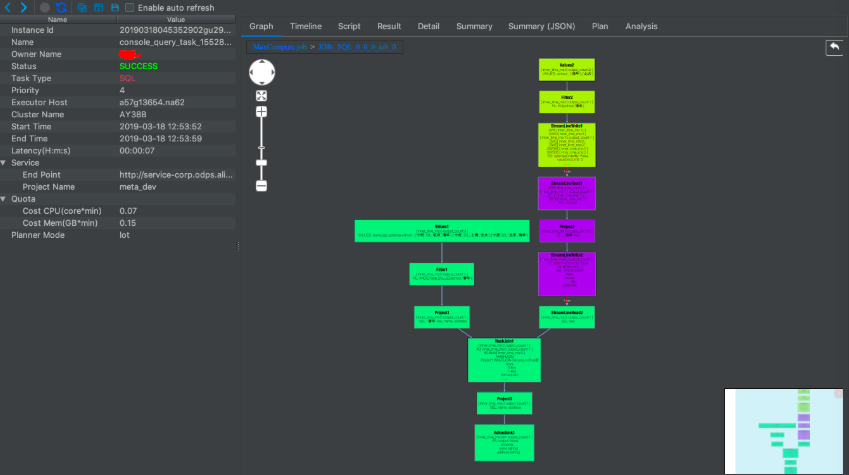

The state diagram of the execution is as follows:

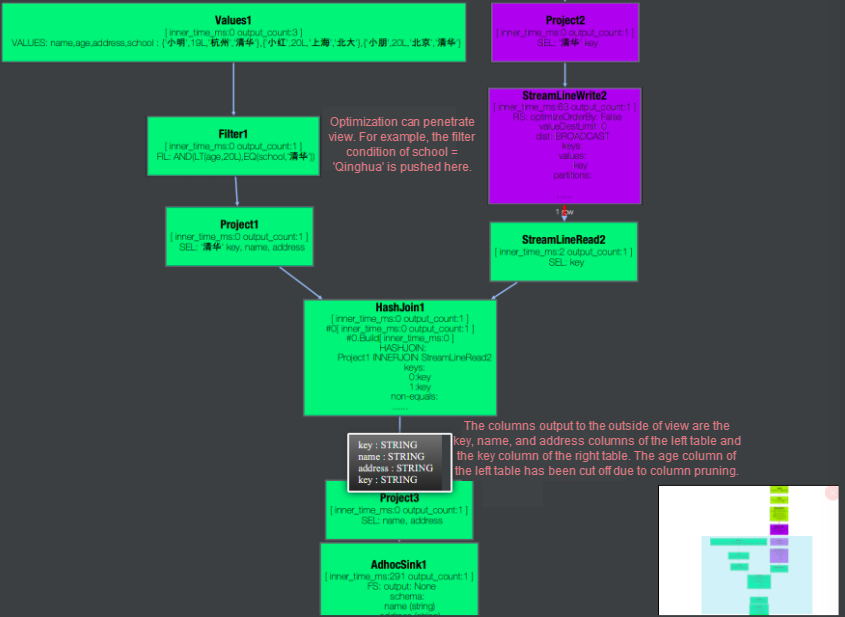

Zoom in on the execution process and observe carefully. You can find several interesting points in the diagram:

The output of the preceding execution is as follows:

+------+---------+

| name | address |

+------+---------+

| Xiaoming | Hangzhou |

+------+---------+Users often misuse parameterized views. For example, they use the parameters of the parameterized view as macro substitution parameters. Let me explain it here. A parameterized view is actually a function call, not a macro substitution. Here is an example:

CREATE VIEW paramed_view(@a TABLE(key STRING, value STRING), @b STRING)

AS SELECT * FROM @a ORDER BY @b;

-- Call example

select * from paramed_view(src, 'key');In the above example, the user expects that the ORDER BY @b is replaced by the macro with the ORDER BY key. That is, decide to sort by the key column according to the input parameters. However, the parameter @ b is actually passed as a value, and ORDER BY @b is equivalent to ORDER BY 'key'. That is, ORDER BY is a string constant ('key') instead of a column. To implement the function of "letting the caller decide the sorting column", you can consider the following approach.

CREATE VIEW orderByFirstCol(@a TABLE(columnForOrder ANY, * ANY)) AS SELECT `(columnForOrder)?+.+` FROM (SELECT * FROM @a ORDER BY columnForOrder) t;

-- Call example

select * from orderByFirstCol((select key, * from src));In the above example, the caller is required to put the column to be sorted in the first column, so the subquery is used to extract the columns to be sorted in src to the forefront during the call. The (columnForOrder)?+.+ returned by the view is a regular wildcard that matches all columns except columnForOrder. For more information on column expression, please see the document.

MaxCompute UDFs overload functions by overloading the evaluate method. For example, the following UDFs define two overloads. If the input is of the String type, the output is of the String type. If the input is of the BIGINT type, the output is of the DOUBLE type.

public UDFClass extends UDF {

public String evaluate(String input) { return input + "123"; }

public Double evaluate(Long input) { return input + 123.0; }

}Although this method can solve some problems, it has limitations. For example, if the genericsare not supported, you must write an evaluate function for each type to do a function that accepts any type. Sometimes, overloading is even impossible, such as ARRAY and ARRAY overloading.

public UDFClass extends UDF {

public String evaluate(List<Long> input) { return input.size(); }

// An error is reported here because the parameters of this function and String evaluate (List<Long> input) are the same after the Java type is erased.

public Double evaluate(List<Double> input) { input.size(); }

// UDFs do not support the following definition method.

public String evaluate(List<Object> input) { return input.size(); }

}When PYTHON UDF or UDTF does not provide the Resolve annotation, the input parameters are determined based on the number of parameters, and variable lengths are also supported. Therefore, PYTHON UDF or UDTF is very flexible. However, because they are too flexible, the compiler cannot find certain errors statically. For example:

class Substr(object):

def evaluate(self, a, b):

return a[b:];The preceding function accepts two parameters. From the implementation point of view, the first parameter needs to be of the STRING type, and the second parameter should be of the INTEGER type. Users need to decide which one to be used during a call. Even if the user passes the wrong parameter, the compiler cannot report the error. In addition, UDF return values defined in this way can only be of the STRING type, which is not flexible enough.

To solve the above problem, consider using UDT. UDTs are often used to call methods in the JDK, such as java.util.Objects.toString(x), which converts any object x to type STRING. It also has a good use in user-defined functions. UDTs support generics, class inheritance, variable length, and other functions, making it easier to define functions. Here is an example:

public class UDTClass {

// This function accepts a numeric type (which can be TINYINT, SMALLINT, INT, BIGINT, FLOAT, DOUBLE and any UDT whose base class is Number) and returns DOUBLE

public static Double doubleValue(Number input) {

return input.doubleValue();

}

// This method accepts a parameter of numeric type and a parameter of any type, and the return value type is the same as the type of the second parameter.

public static <T extends Number, R> R nullOrValue(T a, R b) {

return a.doubleValue() > 0 ? b : null;

}

// This method accepts an array or list of any element type and returns BIGINT

public static Long length(java.util.List<? extends Object> input) {

return input.size();

}

// Note that this parameter can only accept the java.util.Map object <Object, Object> object of UDT without forced conversion. If you need to pass in any map object, such as map<bigint,bigint>, you can consider:

// 1. Use java.util.Map when defining functions <?extends Object, ?extends Object>

// 2. Perform forced conversion during a call, such as UDTClass.mapSize(cast(mapObj as java.util.Map<Object, Object>)).

public static Long mapSize(java.util.Map<Object, Object> input) {

return input.size();

}

}UDTs can provide flexible ways to define functions. But sometimes UDFs need to obtain some context through com.aliyun.odps.udf.ExecutionContext (passed in the setup method). Now UDTs can also use com.aliyun.odps.udt.UDTExecutionContext.get() method or such an ExecutionContext object to obtain some context.

UDAFs and UDTFs of MaxCompute use the Resolve annotation to determine a function signature. For example, the following method defines a UDTF. The UDTF accepts a BIGINT parameter and returns a value of the DOUBLE type.

@com.aliyun.odps.udf.annotation.Resolve("BIGINT->DOUBLE")

public class UDTFClass extends UDTF {

...

}The limitation of this method is obvious: the input parameters and output parameters are fixed, which cannot be overloaded.

MaxCompute has expanded the syntax of the Resolve annotation, allowing for increased flexibility. Here are the key points:

• The parameter list can include an asterisk ('*') to accept input parameters of any length and type. For example, @Resolve('double,*->String') accepts a parameter list where the first parameter is of type DOUBLE, followed by any type and any number of parameters. The UDF author needs to handle the number and type of inputs within the code and perform the corresponding operations (similar to the printf function in the C programming language). It's important to note that when the asterisk is used in the return value list, it has a different meaning, as explained in the following third point.

• The ANY keyword can be used in the parameter list to indicate parameters of any type. For instance, @Resolve('double,any->string') accepts a parameter list where the first parameter is of type DOUBLE, and the second parameter can be of any type. It's worth mentioning that ANY cannot be used in the return value list and cannot be used for subtypes of complex types (e.g., ARRAY).

• UDTF's return value can contain an asterisk, indicating multiple STRING types being returned. However, it's important to note that the number of return values is not infinitely flexible but depends on the number of aliases given when the function is called. For example, in @Resolve("ANY,ANY->DOUBLE,*"), when calling the function as UDTF(x, y) as (a, b, c), three aliases (a, b, c) are given after "as". The compiler determines that alias "a" is of type DOUBLE (based on the type given for the first column in the annotation), and aliases "b" and "c" are of type STRING. Since three return values are specified, the UDTF must forward an array with a length of 3 during forwarding. Otherwise, a runtime error will occur. It's important to note that this error cannot be detected during compilation, so effective communication between the UDTF author and the caller is necessary. When the caller specifies the number of aliases in the SQL statement, it must be in accordance with the requirements of the UDTF. It's worth mentioning that the number of return values for an Aggregator is fixed at 1, so this functionality has no impact on UDAFs.

Use an example to illustrate. Take a UDTF as an example:

import com.aliyun.odps.udf.UDFException;

import com.aliyun.odps.udf.UDTF;

import com.aliyun.odps.udf.annotation.Resolve;

import org.json.JSONException;

import org.json.JSONObject;

@Resolve("STRING,*->STRING,*")

public class JsonTuple extends UDTF {

private Object[] result = null;

@Override

public void process(Object[] input) throws UDFException {

if (result == null) {

result = new Object[input.length];

}

try {

JSONObject obj = new JSONObject((String)input[0]);

for (int i = 1; i < input.length; i++) {

// The variable-length part of a return value must be of the STRING type.

result[i] = String.valueOf(obj.get((String)(input[i])));

}

result[0] = null;

} catch (JSONException ex) {

for (int i = 1; i < result.length; i++) {

result[i] = null;

}

result[0] = ex.getMessage();

}

forward(result);

}

}The number of return values of this UDTF is determined based on the number of input parameters. The first output parameter is a JSON text, followed by the key to be parsed from the JSON. The first return value is the error message during JSON parsing. If no error occurs, the content parsed from JSON is sequentially output based on the input key. For examples:

-- Customize the number of output aliases based on the number of input parameters.

SELECT my_json_tuple(json, 'a', 'b') as exceptions, a, b FROM jsons;

-- There can be no column of the variable-length part.

SELECT my_json_tuple(json) as exceptions, a, b FROM jsons;

-- The following SQL statement will occur a runtime error because the number of aliases does not match the actual output number.

-- Note that this error cannot be found at compile time.

SELECT my_json_tuple(json, 'a', 'b') as exceptions, a, b, c FROM jsons;Although many extensions have been made, they may not meet all the needs. In such cases, UDTs can still be used. UDTs can also be employed to implement Aggregators and UDTFs. For more information, please refer to the UDT examples.

The function prototype of MaxCompute user-defined functions may not offer sufficient flexibility, resulting in inconveniences during the data development process. This article has addressed the issues and provided solutions for various function definition methods. We hope that these solutions are helpful. Additionally, MaxCompute is constantly working to provide better services for you.

>> Next article

MaxCompute Unleashed - Part 7: Grouping Set, Cube and Rollup

MaxCompute Unleashed - Part 9: Script Mode and Parameter View

137 posts | 21 followers

FollowAlibaba Cloud MaxCompute - January 22, 2024

Alibaba Cloud MaxCompute - January 22, 2024

Alibaba Cloud MaxCompute - February 7, 2024

Alibaba Cloud MaxCompute - January 29, 2024

Alibaba Cloud MaxCompute - January 29, 2024

Alibaba Cloud MaxCompute - January 22, 2024

137 posts | 21 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn MoreMore Posts by Alibaba Cloud MaxCompute