
By Guan Tao.

The gross merchandise volume (GMV) of last year's Double 11 reached RMB 268.4 billion, setting yet another Double 11 record. This article describes the technologies that have allowed users from all over the world to shop through Alibaba. Specifically, it discusses the development of the big data platform that underlies Alibaba's e-commerce platforms.

During an exclusive big data session, Guan Tao, a researcher from the Alibaba Cloud Intelligent Computing Platform Division, spoke about the construction and considerations behind the Alibaba economy's big data platform.

The first thing to know about Double 11 is that it is Alibaba's largest online promotion. For consumers, Double 11 lasts only one day, but for Alibaba it is a long-term project that requires a year of dedication and planning, and it affects the lives of millions of merchants on the platform. From Alibaba's perspective, a great deal of thinking and preparation, on both the business and the technology side, goes into Double 11.

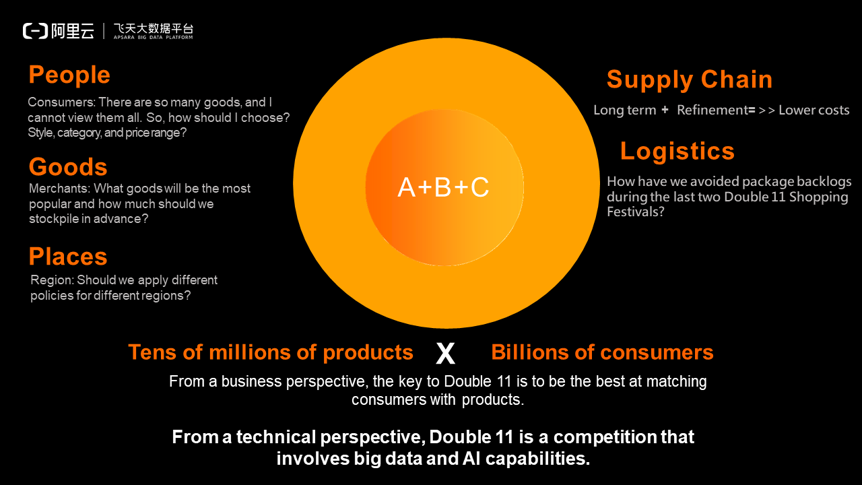

We view Double 11 in terms of the consumers, goods, and geography involved. For consumers, we need to understand what kinds of consumers will browse Alibaba's e-commerce platforms during Double 11, which goods they will see, and which goods they will be interested in buying. For goods, merchants need to know what will be popular during the event, because they must stock up far in advance. Geography matters most in logistics: for example, deciding where goods should be stored in advance to achieve optimal logistical efficiency.

Two things are deeply connected with these three aspects, and both are key to the competitiveness of Alibaba's e-commerce platform. The first is the supply chain. If the supply chain is laid out well in advance, with the necessary flexibility and refinement, it plays an important role in promoting brands and goods on the platform and in reducing operating costs. The second is logistics. A few years ago, every Double 11 pushed our warehouse system to the point of breakdown. In recent years, although purchase volume has risen steadily, no logistical breakdowns have occurred. Alibaba solved this problem by partnering with merchants to place products that consumers are likely to buy in local warehouses near those consumers. This is a process of matching tens of millions of products with billions of consumers.
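To make the idea of pre-positioning stock concrete, here is a minimal sketch, not Alibaba's method: it splits a fixed quantity of one product across regional warehouses in proportion to forecast regional demand. The region names, demand figures, the function `allocate_stock`, and the largest-remainder rounding rule are all hypothetical assumptions chosen for illustration.

```python
from math import floor


def allocate_stock(total_units: int, forecast: dict[str, float]) -> dict[str, int]:
    """Split total_units across regional warehouses in proportion to forecast demand.

    Hypothetical helper. Uses largest-remainder rounding so the allocation
    sums exactly to total_units.
    """
    total_demand = sum(forecast.values())
    if total_demand <= 0:
        raise ValueError("forecast demand must be positive")
    # Ideal fractional share of the stock for each region.
    shares = {r: total_units * d / total_demand for r, d in forecast.items()}
    # Round every share down, then give leftover units to the largest remainders.
    alloc = {r: floor(s) for r, s in shares.items()}
    leftover = total_units - sum(alloc.values())
    by_remainder = sorted(shares, key=lambda r: shares[r] - alloc[r], reverse=True)
    for r in by_remainder[:leftover]:
        alloc[r] += 1
    return alloc


# Hypothetical forecast of units each region is expected to buy.
forecast = {"east": 500.0, "south": 300.0, "north": 200.0}
print(allocate_stock(1000, forecast))  # {'east': 500, 'south': 300, 'north': 200}
```

A real system would also weigh warehouse capacity, transport cost, and forecast uncertainty; proportional allocation is only the simplest starting point.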

From a technology standpoint, Double 11 is a competition of big data and AI capabilities. To succeed, an enterprise must have the data, computing power, and algorithms required to predict the styles, categories, and price ranges of products that different types of consumers will purchase. Merchants must determine which goods are likely to sell well and how many of them to stock in advance. Meanwhile, the supply chain must determine the layout that achieves the best cost-performance ratio and then deploy goods to local warehouses, closer to customers. This is Alibaba's notion of A+B+C, where A refers to algorithms, B to big data, and C to computing. The key to success is having sufficient data volume, high-quality algorithms, and low-cost computing power.

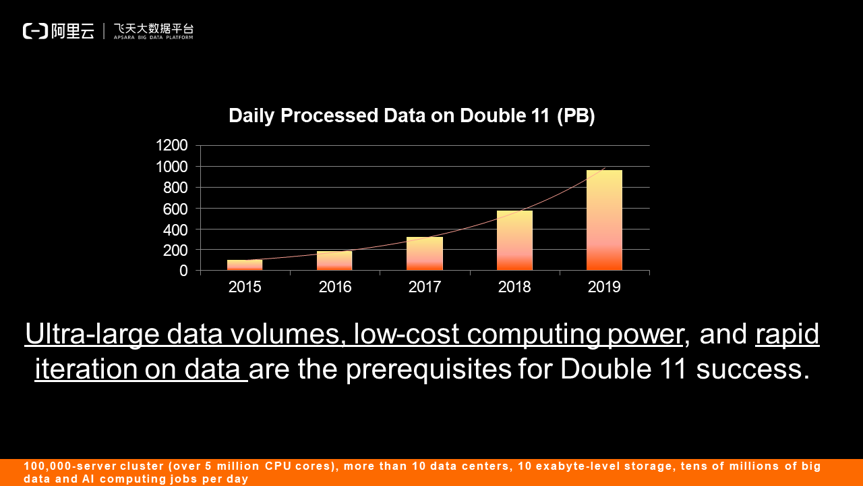

The following figure shows the daily data volumes processed during Double 11. From 2015 to 2019, the daily data processing volume of Double 11 grew by about 70% annually. However, this data is not processed only on the day of Double 11. From late September until Double 11, we process large volumes of data every day. Operations staff, analysts, and merchants run fine-grained algorithms on this data for data mining and information processing. These preparations allow us to achieve optimal matching on the day of Double 11 itself.
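As a quick check on what roughly 70% annual growth means cumulatively: 2015 to 2019 spans four year-over-year increases, so the 2019 volume is about 1.7^4, or roughly 8.35 times, the 2015 volume. The snippet below only does this arithmetic; the 70% rate is the article's approximate figure, and `cumulative_growth` is a hypothetical helper name.

```python
def cumulative_growth(annual_rate: float, years: int) -> float:
    """Return the overall growth factor after compounding annual_rate for `years` years."""
    return (1.0 + annual_rate) ** years


# 2015 -> 2019 is four year-over-year increases at roughly 70% each.
factor = cumulative_growth(0.70, 2019 - 2015)
print(f"2019 volume is about {factor:.2f}x the 2015 volume")  # about 8.35x
```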

In terms of technology, success in Double 11 requires three conditions: massive data volumes, low-cost computing power, and fast data iteration. The last condition deserves special attention: we must enable tens of thousands of algorithm engineers and data engineers to iterate quickly on the data. This is as critical as data volume and computing power. The ability to iterate quickly on massive data volumes essentially depends on the capabilities of the data mid-end and the data development platform.

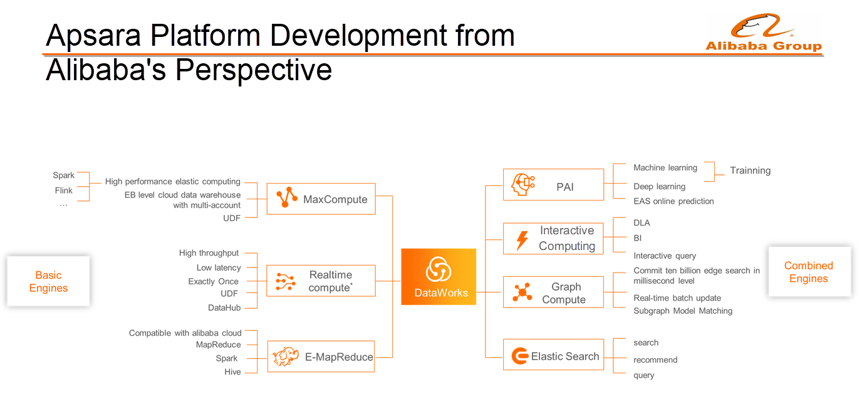

The following figure shows the overall layout of the Apsara big data platform. As shown on the left side, Alibaba's main data warehouses run on MaxCompute, which currently spans more than 100,000 servers in 10 data centers around the world. This is where almost all of Alibaba's data is stored. MaxCompute supports a proprietary SQL engine as well as open-source computing engines such as Spark. In addition to the primary data warehouses and big data computing, Alibaba has a Flink-based stream computing system, and a complete Hadoop-based E-MapReduce (EMR) solution is offered as a cloud service. In the figure, the three parts on the left are called Basic Engines. The engines on the right include Machine Learning Platform for AI (PAI). The engines on the left are shown separately from those on the right because they sit at different layers: PAI jobs can run on MaxCompute, Flink, and EMR. Besides PAI, Alibaba's big data platform also includes Hologres, Graph Compute, and Elasticsearch. Running through the middle is DataWorks, an integrated big data development platform that combines big data development and data pipeline construction in one system.

The following uses MaxCompute as an example to describe the construction and considerations of the Alibaba economy's big data platform.

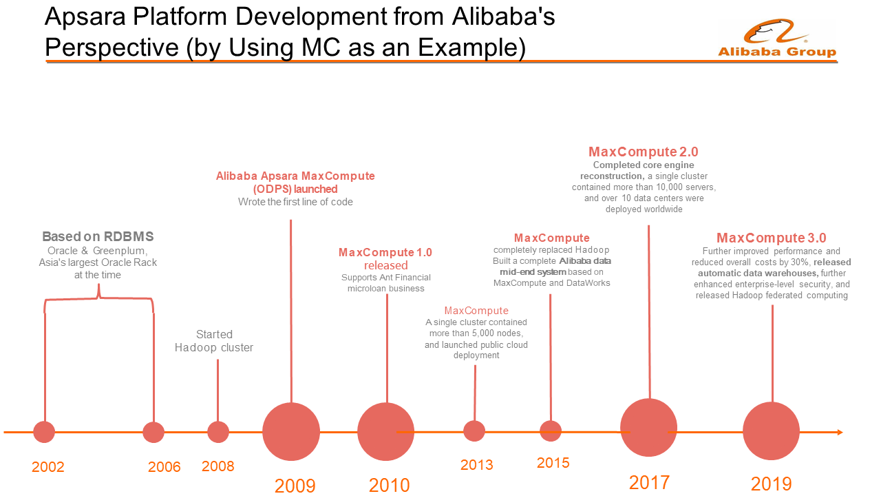

Starting in 2002, all Alibaba data was stored in Oracle databases. Because the e-commerce business was originally treated as an accounting business, all of its data lived in Oracle, and at the time Alibaba had the largest Oracle rack in Asia. After finding that Oracle could not provide enough computing power, Alibaba migrated to Greenplum, which uses a distributed architecture that can scale to the petabyte level. However, within a year it was clear that Greenplum could not keep up with the growth of Alibaba's business. Therefore, in 2008, Alibaba launched its Hadoop cluster, which marked Alibaba's first foray into big data.

Alibaba Cloud was founded in 2009 and established the Apsara brand. While considering whether to build the Apsara system ourselves, we launched a big data engine project, a distributed storage project, and a distributed scheduling project together. In 2010, Alibaba released MaxCompute 1.0 for storage and computing. Its underlying storage layer is Apsara Distributed File System, with Job Scheduler in the middle layer, and it initially supported the microloan business of Ant Financial. By 2013, the system had reached a significant scale of about 5,000 servers. At this key moment, Alibaba launched a critical internal project called "Moon Landing", which called for all Alibaba data to be centralized in one system. We debated whether to use Hadoop or our in-house Apsara Distributed File System + MaxCompute system, and finally chose the latter. The moon landing was completed between 2013 and 2015. In 2015, Alibaba used MaxCompute to completely replace Hadoop and built a complete Alibaba data mid-end based on MaxCompute and DataWorks. In 2017, Alibaba decided it was time to update its engine and released MaxCompute 2.0, a complete reconstruction of the core engine. At that time, a single cluster could scale to tens of thousands of servers, and we had more than 10 data centers around the world. Recently, Alibaba's work on MaxCompute has focused on performance improvements and the evolution of an automatic mid-end, or what we could call an automatic data warehouse.

To summarize the development of the Apsara platform: looking back on the past decade, we see a continuous pursuit of larger data scales and lower computing costs. We also see a transition from open-source to in-house solutions, and now from in-house solutions back toward open source, as shown by recent work on Flink. We have moved from databases to data warehouses, then to the data mid-end, and now to automatic data warehouses. Finally, for our data lake, we initially chose Hadoop, but now we must consider how to integrate data lakes with data warehouses.

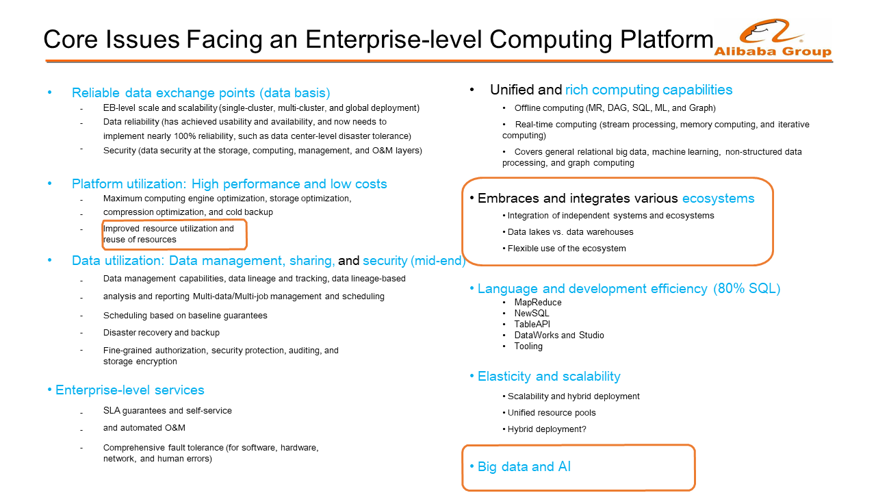

Enterprise-level computing platforms must resolve many issues. The first, which is relatively simple but very common, is the need for a reliable point of data exchange: reliability must be essentially 100%, and data must never be lost once it is stored in the data center. Second, we must strike a balance between high performance and low cost; beyond engine performance optimization, storage also needs to be considered. The third issue is how to ensure sound data management, sharing, and security. The fourth is enterprise-level capabilities, such as automated O&M. Fifth, we must support a wide range of unified computing capabilities, including batch processing, stream processing, machine learning, graph computing, and interactive computing. The sixth issue is how to embrace and integrate different ecosystems, including integrating in-house systems with open-source ecosystems and integrating data lakes with data warehouses. The seventh issue, also relatively simple, is development languages and development efficiency. Elasticity and scalability are the eighth issue. The final issue is how to improve big data systems' support for AI, and whether AI can in turn be used to optimize big data systems.

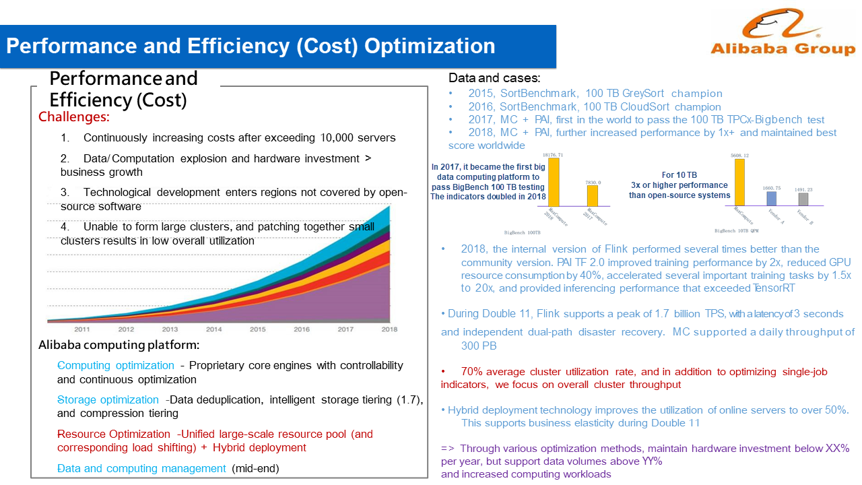

Alibaba faces four challenges related to performance and efficiency, which are summarized in the figure.

In response to these problems and challenges, we can optimize computing, storage, and resources. Here we focus on resource optimization, which in practice means hybrid deployment: co-locating different types of workloads on the same servers. Note that when Alibaba considers resource optimization, it does not look at the cost and efficiency of a single job but at improving the utilization of the entire cluster. Alibaba set a mandatory goal of raising cluster utilization to over 60%.
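The difference between per-job efficiency and cluster-wide utilization can be made concrete with a small calculation. This sketch assumes utilization is simply total busy cores divided by total cores across all nodes, and checks the result against the 60% goal; the node names and numbers are hypothetical, and real utilization metrics (sampling windows, CPU time versus allocation) are more involved.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpu_capacity: float  # cores available on the node
    cpu_used: float      # cores actually busy, averaged over some window


def cluster_utilization(nodes: list[Node]) -> float:
    """Cluster-wide utilization: total busy cores divided by total cores."""
    capacity = sum(n.cpu_capacity for n in nodes)
    used = sum(n.cpu_used for n in nodes)
    return used / capacity


TARGET = 0.60  # the utilization goal cited in the article

# Hypothetical snapshot: batch nodes are saturated while online nodes sit mostly idle.
nodes = [
    Node("online-01", 96, 30),
    Node("online-02", 96, 28),
    Node("batch-01", 96, 90),
]
util = cluster_utilization(nodes)
print(f"utilization = {util:.1%}, meets target: {util >= TARGET}")
```

Even though one node is nearly full, the cluster as a whole sits around 51%, which is why the goal is stated for the cluster rather than for individual jobs or machines.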

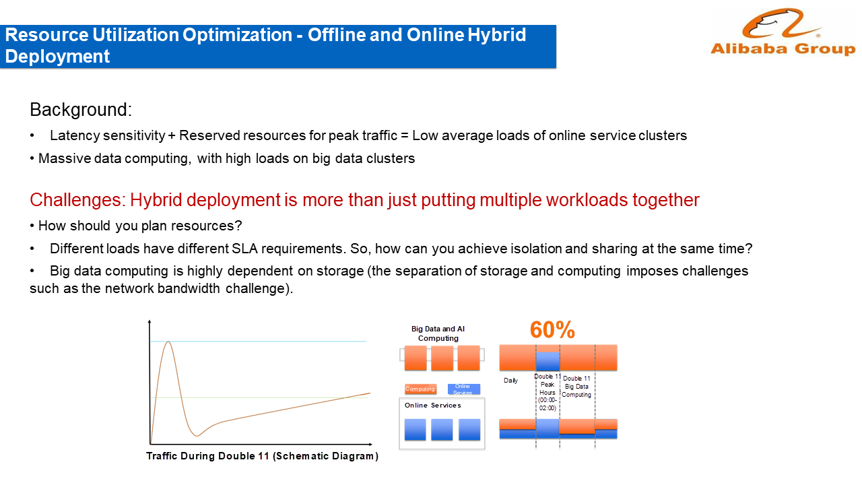

On the day of Double 11, traffic spikes the moment the event begins, a peak that may occur only once a year. By about 02:00, traffic falls to a low level because people go to bed after the first wave of shopping, and it rises again in the morning when they wake up. How many servers, then, does Alibaba need to have ready? If we plan for the peak, about half of our servers would be idle almost all the time. If we plan for the average, user requests may fail during peak hours, leading to a poor user experience during Double 11.
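The peak-versus-average trade-off can be shown with a small calculation over a 24-hour load curve. The hourly load values and the per-server capacity below are made-up assumptions for illustration, not Alibaba traffic data.

```python
import math

# Hypothetical hourly load (thousands of requests/s) on Double 11, hour 0 = midnight.
hourly_load = [100, 60, 20, 10, 8, 8, 12, 25, 40, 45, 50, 50,
               48, 45, 45, 45, 48, 50, 55, 60, 65, 70, 75, 80]
CAPACITY_PER_SERVER = 1.0  # thousand requests/s one server can handle (assumed)

average_load = sum(hourly_load) / len(hourly_load)
peak_servers = math.ceil(max(hourly_load) / CAPACITY_PER_SERVER)
avg_servers = math.ceil(average_load / CAPACITY_PER_SERVER)

# Sizing for the peak: fraction of capacity that sits idle on average over the day.
idle_fraction = 1 - average_load / (peak_servers * CAPACITY_PER_SERVER)
# Sizing for the average: hours in which demand exceeds capacity.
overloaded_hours = [h for h, load in enumerate(hourly_load)
                    if load > avg_servers * CAPACITY_PER_SERVER]

print(f"peak sizing: {peak_servers} servers, {idle_fraction:.0%} idle on average")
print(f"average sizing: {avg_servers} servers, overloaded in {len(overloaded_hours)} of 24 hours")
```

With this made-up curve, peak sizing leaves a little over half the fleet idle on average, while average sizing is overloaded for more than half the day, which is the gap hybrid deployment tries to close.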

To handle this, Alibaba has implemented two kinds of hybrid deployment. The first, offline-in-online hybrid deployment, runs offline big data and AI jobs on online e-commerce clusters; the second, online-in-offline hybrid deployment, runs online e-commerce services on big data clusters. Big data and AI workloads and e-commerce workloads have different resource requirements. E-commerce services may be idle during the day, while big data services are always busy. Therefore, on ordinary days some big data computing tasks can be sent to e-commerce clusters to use spare e-commerce CPU resources. During Double 11, these big data workloads must be rolled back quickly. This rollback happens on tens of thousands of servers, freeing up their resources to support the e-commerce traffic peak. Big data and AI computing use e-commerce resources through hybrid deployment every day, while e-commerce uses big data resources only on Double 11 and Double 12 each year; even so, the flexibility this provides on those dates saves Alibaba billions of RMB. Hybrid deployment is by no means solved by simply putting workloads together, and it raises several hard problems. The first is resource planning, not only for online clusters but for the entire Alibaba economy. Second, different workloads have different SLA requirements, so how can we guarantee isolation while still sharing resources? Third, big data computing depends heavily on storage, so we must use an architecture that separates storage and computing, and that separation in turn requires coping with extremely high network bandwidth demands.
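The daily lending and the Double 11 rollback can be modeled on a single shared node: offline (big data) jobs may use whatever CPU the online service is not reserving, and when the online reservation grows, offline jobs are evicted until the node fits again. This is a hypothetical model; `SharedNode` and its methods are invented for illustration and do not correspond to Sigma, Job Scheduler, or any Alibaba API.

```python
from dataclasses import dataclass, field


@dataclass
class SharedNode:
    capacity: float                   # total cores on the node
    online_reserved: float = 0.0      # cores reserved by the e-commerce service
    offline_jobs: list[tuple[str, float]] = field(default_factory=list)  # (job id, cores)

    def offline_used(self) -> float:
        return sum(cores for _, cores in self.offline_jobs)

    def try_admit_offline(self, job_id: str, cores: float) -> bool:
        """Admit a batch job only if it fits in the cores the online side is not reserving."""
        if self.online_reserved + self.offline_used() + cores <= self.capacity:
            self.offline_jobs.append((job_id, cores))
            return True
        return False

    def set_online_reservation(self, cores: float) -> list[str]:
        """Change the online reservation; evict offline jobs, newest first, until the node fits."""
        self.online_reserved = cores
        evicted = []
        while self.offline_jobs and self.online_reserved + self.offline_used() > self.capacity:
            job_id, _ = self.offline_jobs.pop()
            evicted.append(job_id)
        return evicted


node = SharedNode(capacity=64, online_reserved=16)
for i in range(4):
    node.try_admit_offline(f"etl-{i}", 10)    # off-peak: 40 cores lent to batch work
evicted = node.set_online_reservation(48)     # peak: online needs 48 cores back
print(evicted, node.offline_jobs)
```

Evicting the newest job first is an arbitrary choice in this sketch; a production system would also consider job priority, progress, and whether the job can be checkpointed and resumed elsewhere.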

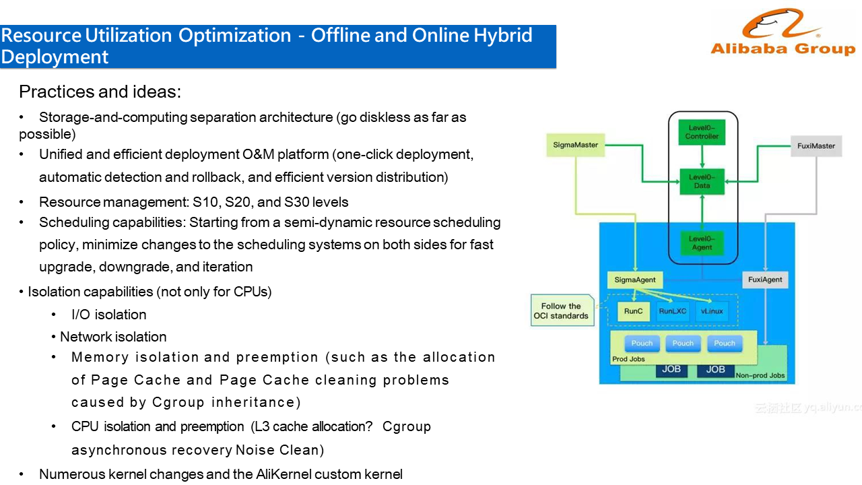

The following figure shows a resource scheduling simulation chart. Sigma is the online container scheduler: all online systems are containerized, and all containers are scheduled by Sigma. Job Scheduler is the scheduler for big data and AI workloads and schedules the primary computing power. Both schedulers share one resource view, so each can see which resources are idle and can be transferred in or out. At the underlying layer are two sets of agents, which request resources and then manage them on the nodes once they are obtained. Sigma may be extended to the Kubernetes platform in the future, but it is currently implemented with Docker. We are not trying to build one central scheduler for all tasks. Instead, online and offline tasks are scheduled separately. By starting from a semi-dynamic resource scheduling policy, we keep changes to the scheduling systems on both sides to a minimum, which allows fast upgrades, downgrades, and iteration.
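Two independent schedulers over one shared resource view, rather than a single central scheduler, can be modeled roughly like this. The sketch assumes each node's capacity is split by a boundary that is adjusted only occasionally (one possible reading of "semi-dynamic"), and each scheduler places work only within its own side of that boundary. `ResourceView`, `place`, and `move_boundary` are hypothetical names; this is not how Sigma or Job Scheduler are actually implemented.

```python
from collections import defaultdict
from typing import Optional


class ResourceView:
    """Shared view of per-node capacity, split between an online and an offline side."""

    def __init__(self, capacity: dict[str, int], online_share: dict[str, int]):
        self.capacity = dict(capacity)
        # Cores each side may use on each node; the split is the "boundary".
        self.quota = {"online": dict(online_share),
                      "offline": {n: capacity[n] - online_share[n] for n in capacity}}
        self.used = {"online": defaultdict(int), "offline": defaultdict(int)}

    def free(self, side: str, node: str) -> int:
        return self.quota[side][node] - self.used[side][node]

    def place(self, side: str, cores: int) -> Optional[str]:
        """One scheduler places a task on the node with the most free cores on its side."""
        node = max(self.capacity, key=lambda n: self.free(side, n))
        if self.free(side, node) < cores:
            return None
        self.used[side][node] += cores
        return node

    def move_boundary(self, node: str, cores_to_online: int) -> int:
        """Shift up to cores_to_online cores of quota from the offline side to the online side.

        Only cores the offline side is not currently using can move, so the shift
        is clamped; returns how many cores actually moved.
        """
        movable = min(cores_to_online, self.free("offline", node))
        self.quota["offline"][node] -= movable
        self.quota["online"][node] += movable
        return movable


view = ResourceView(capacity={"n1": 64, "n2": 64}, online_share={"n1": 32, "n2": 32})
first = view.place("offline", 20)      # ties go to the first node, so "n1"
second = view.place("online", 10)      # "n1"
moved = view.move_boundary("n1", 16)   # clamped to 12: offline already uses 20 of its 32
print(first, second, moved)
```

Because each scheduler only reads the shared view and stays inside its own quota, neither needs to understand the other's placement logic, which matches the goal of keeping changes on both sides small.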

Conceptually, this involves several key points. The first is the separation of storage and computing, which is essential if you want to use large-scale e-commerce resources to achieve elasticity. To this end, Alibaba launched a project called "Diskless World", which aims to separate all computing from storage so that computing does not rely on local storage. Because scheduling involves hundreds of thousands of servers, we also need a centralized and efficient deployment and O&M platform with one-click deployment, automatic detection and rollback, and efficient version distribution. At the resource management layer, resources are divided into the S10, S20, and S30 levels because different applications have different SLA requirements. Isolation covers not only CPU but also I/O, network, and memory. Because these requirements called for many kernel changes, Alibaba implemented a custom kernel called AliKernel that includes all of them. After addressing these key points, we had more or less achieved complete hybrid deployment for e-commerce.
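The article names three SLA levels, S10, S20, and S30, without defining them. Assuming, purely for illustration, that a lower number means a stricter SLA (S10 the most latency-sensitive), CPU contention on a node could be resolved by serving tiers in strict priority order. The function `allocate_by_tier` and the demand numbers are hypothetical, and this CPU-only toy ignores the I/O, network, and memory isolation the text mentions.

```python
TIER_ORDER = ["S10", "S20", "S30"]  # assumed: S10 is the strictest SLA, S30 the most tolerant


def allocate_by_tier(capacity: float, demand: dict[str, float]) -> dict[str, float]:
    """Grant each tier its full demand in priority order until capacity runs out.

    Lower-priority tiers absorb any shortfall; a tier granted less than it asked
    for is effectively throttled.
    """
    remaining = capacity
    grant = {}
    for tier in TIER_ORDER:
        grant[tier] = min(demand.get(tier, 0.0), remaining)
        remaining -= grant[tier]
    return grant


# Hypothetical peak-hour demand (cores) on a 64-core node.
print(allocate_by_tier(64, {"S10": 40, "S20": 20, "S30": 30}))
```

Strict priority is the simplest policy to reason about; weighted sharing or guaranteed minimums for lower tiers are common alternatives when starving batch work entirely is unacceptable.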

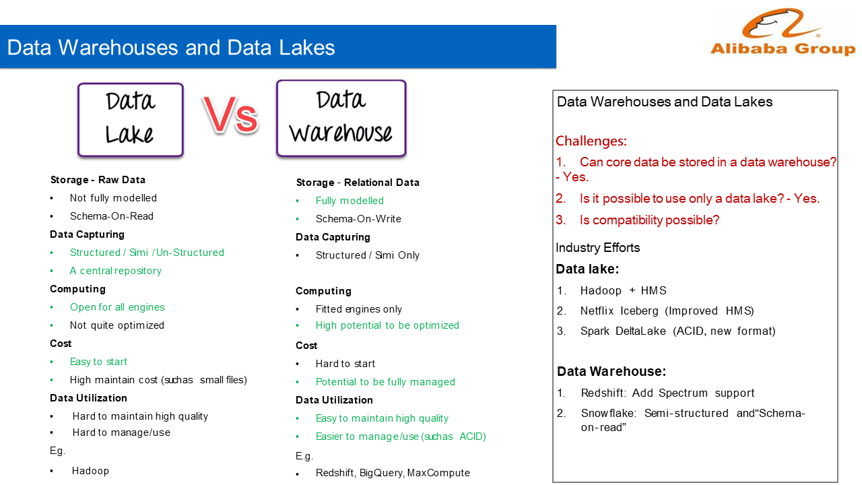

In fact, Hadoop itself is a data lake system: a unified storage architecture with multiple engines running on top of it. Hadoop meets more or less every definition of a data lake. For example, data stored in HDFS does not need to be fully modeled, and modeling can even be skipped entirely. Hadoop supports schema-on-read, meaning the schema is parsed dynamically when the data is read. Because the architecture is open, any engine can run on Hadoop. However, because storage is open, comprehensive storage optimization is difficult. In terms of cost, a data lake is relatively easy to create; the difficulty lies in its high maintenance cost. In terms of data usage, high quality and maintainability are often hard to achieve.

Many enterprises, including Alibaba, work with data warehouses instead. In a data warehouse, data is stored in a fully modeled form, so tables and data are defined in advance. Because of this, a data warehouse usually stores only structured and semi-structured data, which limits the flexibility of data storage. Data warehouses are also integrated systems, so not every engine fits this architecture. On the other hand, an integrated architecture is easier to optimize, including optimizing storage for upper-layer computing. The drawback is that a data warehouse can be difficult to build, because it places high demands on data writing and maintenance. Once built, however, it is easier to achieve fully managed data, and in most cases high data quality and usability can be achieved.
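The schema-on-read versus schema-on-write distinction can be shown with the standard library alone. In this sketch, the "lake" keeps raw JSON lines as they arrive and a reader applies its own schema at read time, while the "warehouse" checks every record against a predefined table schema at write time and rejects anything that does not fit. The record fields, `read_with_schema`, `write_with_schema`, and `WAREHOUSE_SCHEMA` are all hypothetical.

```python
import json

# Raw events as they might land in a data lake: nothing is enforced on write.
raw_lines = [
    '{"user": "u1", "amount": "19.90", "tags": ["promo"]}',
    '{"user": "u2", "amount": 5.0}',
    '{"user": "u3"}',
]


def read_with_schema(lines: list[str]) -> list[dict]:
    """Schema-on-read: interpret raw records at query time, tolerating messy input."""
    rows = []
    for line in lines:
        rec = json.loads(line)
        rows.append({"user": rec["user"], "amount": float(rec.get("amount", 0.0))})
    return rows


WAREHOUSE_SCHEMA = {"user": str, "amount": float}  # table defined before any data arrives


def write_with_schema(table: list[dict], rec: dict) -> bool:
    """Schema-on-write: only records that exactly match the table definition are stored."""
    if set(rec) != set(WAREHOUSE_SCHEMA):
        return False
    if not all(isinstance(rec[k], t) for k, t in WAREHOUSE_SCHEMA.items()):
        return False
    table.append(rec)
    return True


lake_rows = read_with_schema(raw_lines)
warehouse: list[dict] = []
accepted = [write_with_schema(warehouse, json.loads(line)) for line in raw_lines]
print(lake_rows)
print(accepted)  # only the record that matches the schema exactly is stored
```

The lake accepts everything and pushes the interpretation cost onto every reader, while the warehouse pays that cost once at write time, which mirrors the flexibility-versus-quality trade-off described above.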

Putting all core data in a data warehouse is therefore feasible; many enterprises, including Alibaba, do this. Using only a data lake is also feasible, and many companies take that approach. Can data warehouses and data lakes be combined? This is also feasible, and the industry has tried it many times from both directions. Users of data lake architectures have recognized the advantages of data warehouses and are evolving toward them; examples include Hadoop + Hive Metastore (HMS), Netflix Iceberg (which can be viewed as an enhanced version of HMS), and Delta Lake. Likewise, users of data warehouse systems have recognized the flexibility of data lakes and are evolving in that direction, as with Redshift and Snowflake. This evolution is happening at both ends.

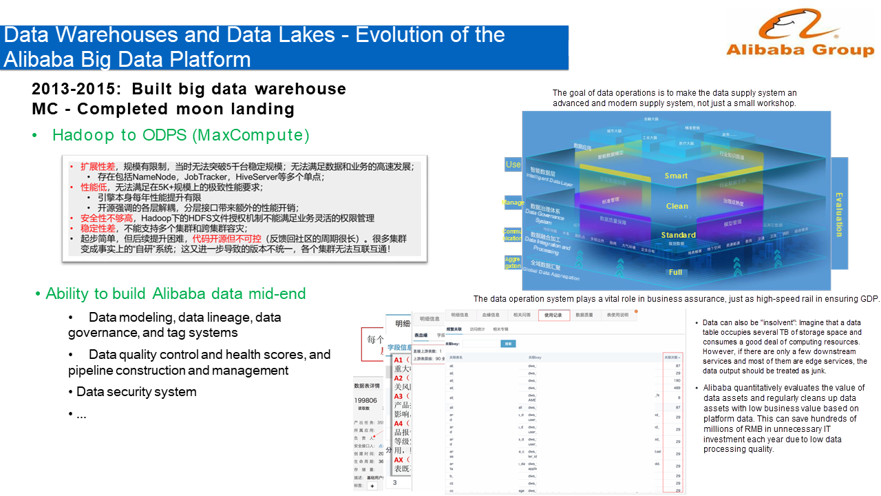

From 2013 to 2015, Alibaba ran into problems with the Hadoop system in terms of scalability, performance, security, stability, and code controllability. Therefore, Alibaba migrated its data from Hadoop to MaxCompute, which replaced the data lake as the main data warehouse. At that time, we began to build the Alibaba data mid-end, including data modeling, data lineage, data governance, and tag systems.

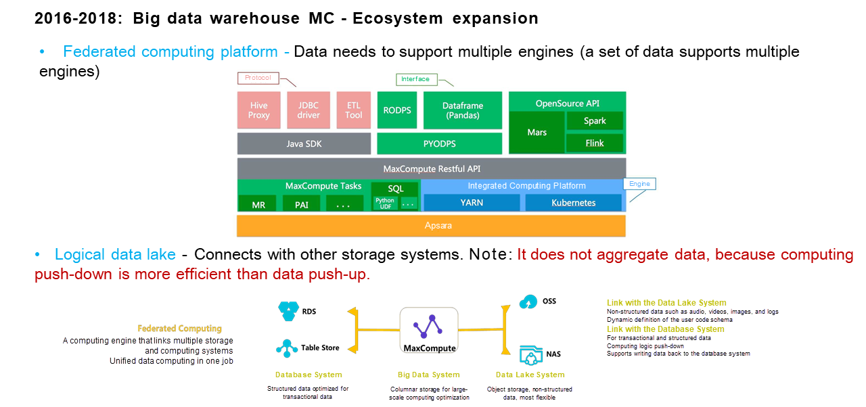

After building its data mid-end, from 2016 to 2018 Alibaba worked on two projects on its main data warehouse platform: a joint computing platform and a logical data lake. The joint computing platform lets the same data be processed by multiple engines. Cupid, built on the MaxCompute platform, encapsulates the resource layer behind YARN and Kubernetes interfaces, abstracts the data storage layer as a set of I/O interfaces, and abstracts the metadata system into a set of interfaces, so that open-source engines such as Spark and Flink can connect to it. With Cupid, Spark and Flink can process data using MaxCompute resources without code modifications; only some JAR packages need to be replaced. This effectively extends the range of engines supported on top of the data warehouse. The approach also provides external table capabilities. A "logical data lake" connects to other storage systems instead of aggregating their data, because pushing computation down to the data is more efficient than moving data up to the computation.
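The claim that pushing computation down beats moving data up comes down to how much data crosses the network. This sketch simulates an external storage system with a hypothetical `scan` function and counts the rows shipped to the compute engine with and without a pushed-down filter. The table, its contents, and the function names are invented; this is not MaxCompute's external table API.

```python
from typing import Callable, Iterator, Optional

# A hypothetical remote table living in another storage system.
REMOTE_ORDERS = [{"order_id": i, "region": "east" if i % 10 == 0 else "west", "amount": i * 1.5}
                 for i in range(10_000)]


def scan(predicate: Optional[Callable[[dict], bool]] = None) -> Iterator[dict]:
    """Storage-side scan; if a predicate is pushed down, filtering happens at the source."""
    for row in REMOTE_ORDERS:
        if predicate is None or predicate(row):
            yield row


def is_east(row: dict) -> bool:
    return row["region"] == "east"


# Move-up: ship every row to the compute engine, then filter there.
shipped_move_up = list(scan())
result_move_up = [r for r in shipped_move_up if is_east(r)]

# Push-down: send the filter to the storage side; only matching rows are shipped.
shipped_push_down = list(scan(is_east))

assert result_move_up == shipped_push_down  # same answer either way
print(len(shipped_move_up), len(shipped_push_down))  # 10000 1000
```

Both plans return the same rows, but push-down transfers a tenth of the data here; with selective filters over large external tables, that difference is what makes a logical data lake practical.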

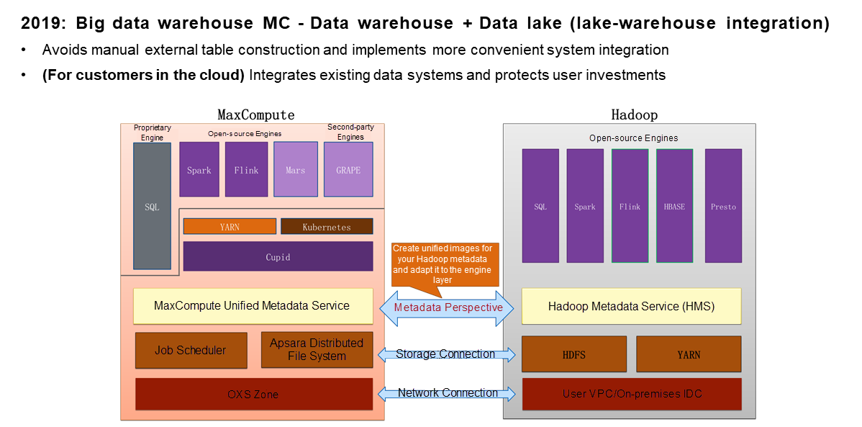

In 2019, Alibaba recognized the problems with a logical data lake: it can make things very difficult for users, especially when working with large volumes of data. In addition, cloud customers reported that they already had Hadoop systems with 200 servers and wanted to use Alibaba's data warehouse and mid-end architecture to improve their business capabilities. In other words, they wanted both systems to develop together. Therefore, Alibaba is working on "lake-warehouse integration", which fuses data warehouses and data lakes. Beyond network connectivity between the data lake and the data warehouse, Alibaba also implements metadata interconnection: when you want to join data from both sides, you do not need to create external tables. This architecture is currently in trial use.
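Metadata interconnection means one catalog can resolve table names on both sides, so a query can join lake and warehouse data without the user first declaring an external table. This sketch models that with a hypothetical `UnifiedCatalog` mapping qualified names to in-memory stand-ins for a lake table and a warehouse table, followed by a simple hash join. The catalog, table names, and data are invented for illustration and are not the actual MaxCompute lake-warehouse integration interface.

```python
from collections import defaultdict


class UnifiedCatalog:
    """One namespace over tables stored in a data lake and in a data warehouse."""

    def __init__(self):
        self._tables: dict[str, list[dict]] = {}

    def register(self, system: str, name: str, rows: list[dict]) -> None:
        # In a real system, metadata synchronization would populate this; here it is manual.
        self._tables[f"{system}.{name}"] = rows

    def resolve(self, qualified_name: str) -> list[dict]:
        return self._tables[qualified_name]


def hash_join(left: list[dict], right: list[dict], key: str) -> list[dict]:
    """Inner join on `key`: build a hash index on the right side, then probe it with the left."""
    index = defaultdict(list)
    for right_row in right:
        index[right_row[key]].append(right_row)
    return [{**left_row, **right_row}
            for left_row in left
            for right_row in index.get(left_row[key], [])]


catalog = UnifiedCatalog()
catalog.register("lake", "clickstream", [{"user_id": 1, "page": "item/42"},
                                          {"user_id": 2, "page": "cart"}])
catalog.register("warehouse", "users", [{"user_id": 1, "segment": "new"},
                                         {"user_id": 3, "segment": "vip"}])

# Both tables are addressed through the same catalog; no external table is declared.
joined = hash_join(catalog.resolve("lake.clickstream"), catalog.resolve("warehouse.users"), "user_id")
print(joined)
```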
