By Li Yongbin (Shuide)

A proper smart assistant can help you schedule meetings, handle daily office tasks, or call to remind you to pay your credit card. As users and consumers, we are growing accustomed to the wide range of services provided by dialog bots. However, it is difficult and costly for enterprises to build dialog bots with these services. Li Yongbin, a senior algorithm expert from Alibaba DAMO Academy's Alime Conversational AI team, shared his ideas about the Dialog Studio development platform with us, touching on the origin of the platform, its design philosophy, core technologies, and business applications. He also described how this platform enables all industries to develop their own dialog bots.

Why was it necessary to build a platform? To answer this question, I think we should start by looking at a specific task-based dialog example. In our daily work, we often have to schedule meetings. This is how our internal office assistant schedules a meeting: First, I say "Help me schedule a meeting", and it asks "What day would you like to hold the meeting?" I reply, "Three o'clock in the afternoon on the day after tomorrow." It then asks "Who will you meet with?", and I list the people I want to attend. Then, the assistant calls a backend service to obtain the schedules of all the participants and checks that everyone is available at the proposed time. If not, it recommends another time when they are all free. In this case, not everyone was free at three o'clock, so I had to change the meeting time.

So I say, "How about eleven o'clock in the morning?" The assistant then asks, "How long will the meeting be?", and I say, "One hour." Next, it asks, "What is the subject of the meeting?", and I tell it, "We will discuss the launch plan for next week." Now, the assistant has all the information it needs. Finally, it gives me a summary and asks me to confirm whether to send a meeting invitation. After I confirm, the assistant calls the backend email service to send the meeting invitations.

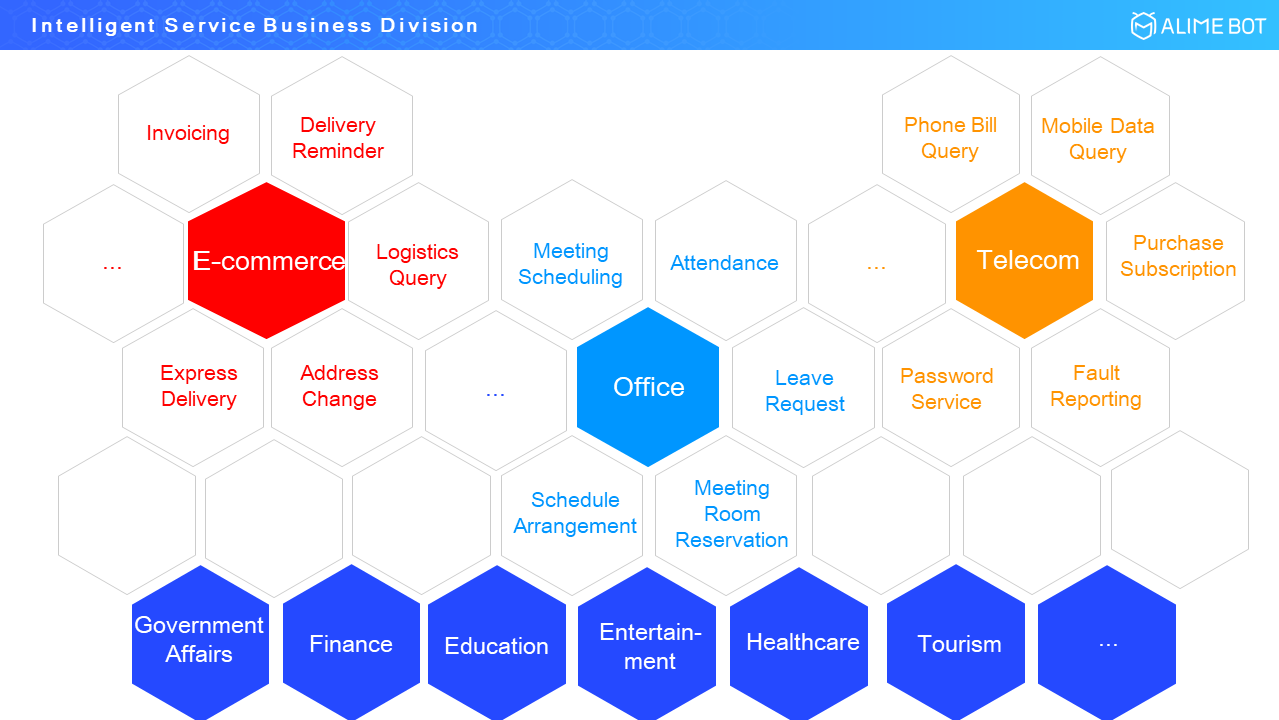

This is a typical example of task-based dialog, which satisfies two conditions. First, it has a clear goal. Second, it achieves this goal through multiple rounds of dialog and interaction. In the office management field, similar task-based dialogs are used for attendance, leave requests, reserving meeting rooms, arranging schedules, and many other similar tasks.

In the e-commerce industry, task-based dialogs are used in many scenarios, such as invoicing, delivery reminders, logistics queries, address changes, and express delivery reception. In the telecom industry, such scenarios include phone bill queries, mobile data queries, subscription purchases, fault reporting, and password changes. If we look for it, we can find this kind of task-based dialog in various scenarios throughout our daily lives, in fields such as government affairs, finance, education, entertainment, healthcare, and tourism. Task-based dialogs are necessary in basically all industries.

Alibaba intelligent robots, such as Alime, Alime Shop Assistant, and AlimeBot, can be used in these scenarios. However, as it is impossible for us to customize a dialog process for every scenario in every industry, we adopted the idea of Alibaba as a platform. With this idea, we created the Alibaba dialog development platform. Internally, we call this platform Dialog Studio.

In short, we developed Dialog Studio because we could not create a custom dialog bot for every scenario. Therefore, we wanted to create a platform where developers could develop bots for their own dialog scenarios.

Now, I want to talk about the core design concepts of Dialog Studio. I think the overall design philosophy can be summed up as follows: "one center and three principles". "One center" means that we view dialogs as the center of the platform.

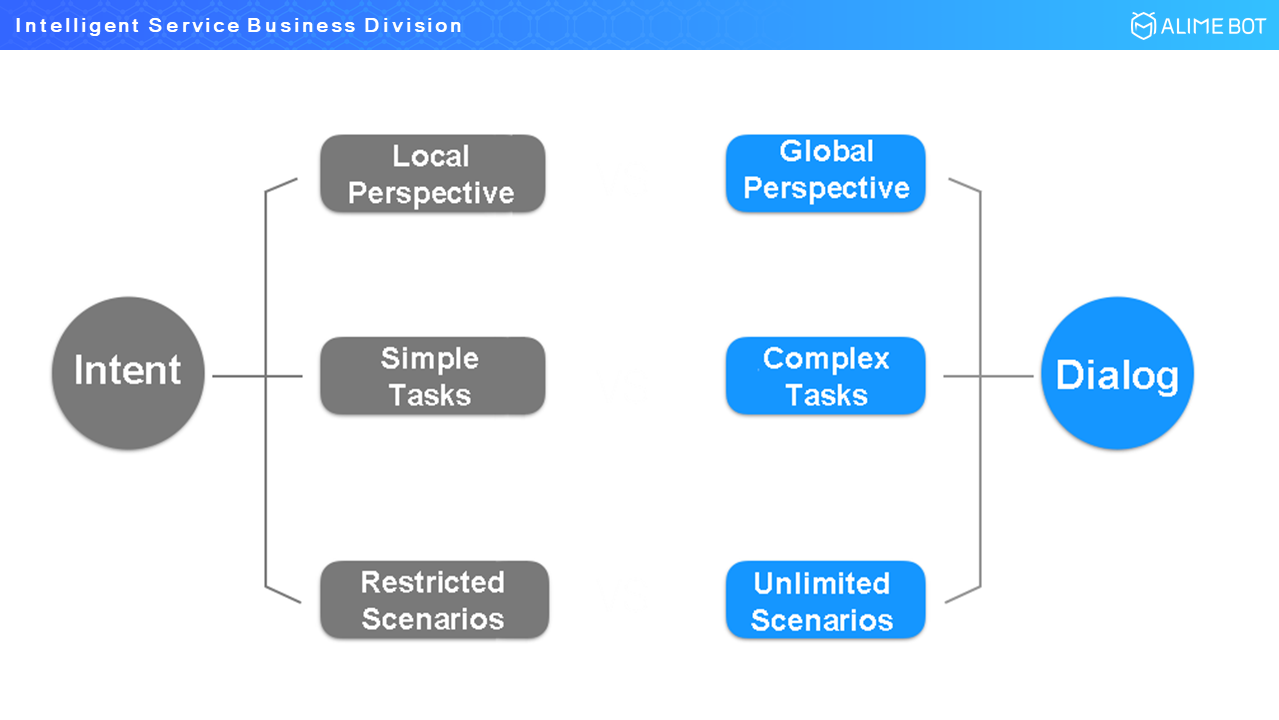

We emphasize this because, over the past few years, many of the major dialog platforms around the world have adopted designs centered on intents. For example, when you want to complete a task in the music field on these platforms, what you actually see is a list of intents, so the actual dialog is reduced to secondary importance.

In Dialog Studio, we take the opposite approach by placing dialogs at the center of our design. Therefore, our product interface does not show isolated intents, but an interconnected dialog process related to the business logic. In intent-centric designs, you only see a local perspective, so these systems can only complete certain simple tasks, such as controlling a light, telling a joke, or checking the weather. When performing complex tasks, such as issuing an invoice or activating a China Mobile subscription, the intent-centric approach increases the difficulty of use and maintenance. By taking a completely different approach and putting dialogs back at the center of our design philosophy, we have a global perspective and can perform complex tasks in any scenario.

As I just said, Dialog Studio is a platform, and to build a platform, you must overcome many challenges.

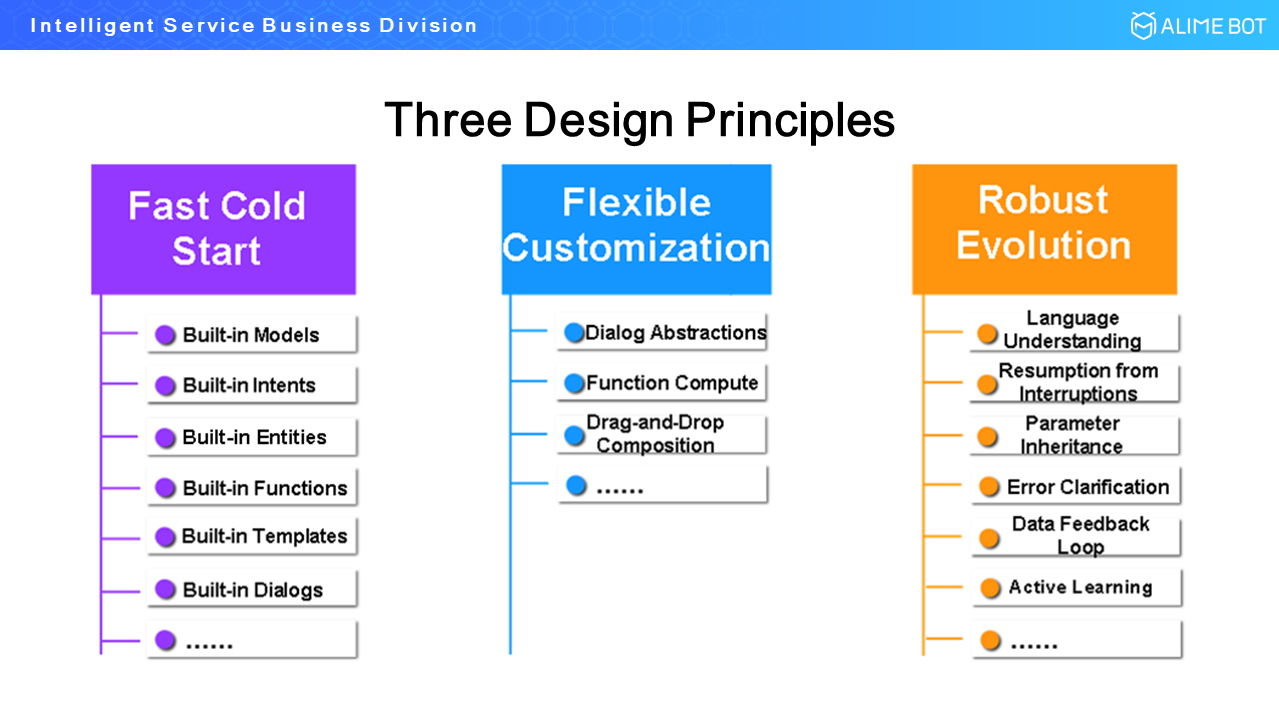

The first challenge was to provide users with greater ease of use. The second was to allow users to flexibly customize capabilities for different industries. The third was to allow bots and dialog systems to keep improving after launch. These were the three major challenges that faced our platform.

To address these challenges, we developed three principles to be followed throughout the design and implementation of the platform.

The first principle was that the cold start speed had to be fast to make the platform easier to use. The second principle was to provide users with flexible customization capabilities. This is the only way that the needs of various scenarios in various industries can be met. The third principle was to provide robust capabilities for evolution. That means, after a model goes online, it can continuously learn from various feedback data to constantly improve its performance.

To achieve a fast cold start, we turn the capabilities and data that users commonly need into preset capabilities. Simply put, the platform does more, so users have to do less. To allow flexible customization, we must abstract the basic elements of the entire dialog platform to a high degree. The greater the platform's degree of abstraction, the more adaptable it becomes. To enable robust evolution, we had to explore models and algorithms in depth. For example, we carefully designed language understanding models, dialog management models, and data feedback loops. These are our design concepts and principles. Next, I will discuss how these concepts are actually applied in the implementation process.

When creating a platform, we must deal with a wide range of technologies, including algorithms, engineering, frontend technology, and interaction technology. A closer look at the algorithms will give you a better understanding of how the platform was built.

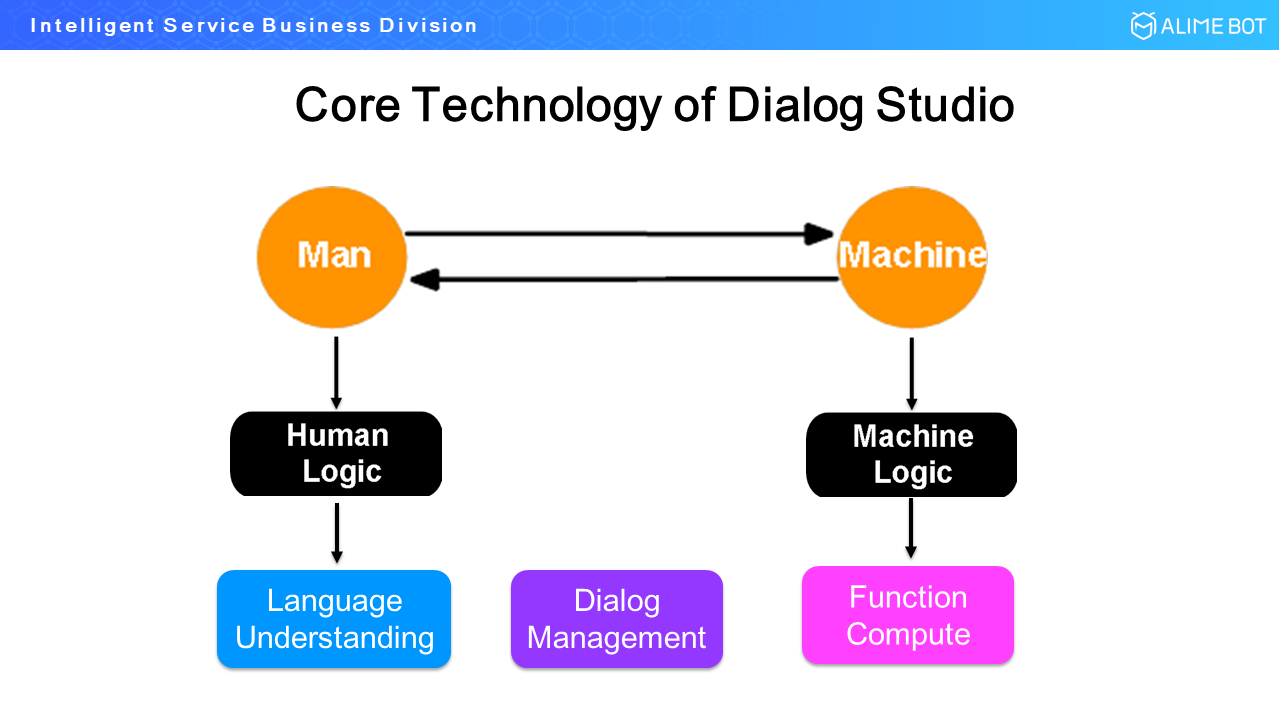

First, Dialog Studio is used for dialogs. A man-machine dialog involves two entities, a human and a machine. Humans use human logic, which requires us to express our ideas through a language. Therefore, we need a language understanding service to deal with input in natural language. Meanwhile, machines use machine logic, which expresses ideas as code. Because of this, a function compute service is required. The dialog process between a human and a machine must be effectively managed, so a dialog management module is also required. Therefore, the three core modules of Dialog Studio are language understanding, dialog management, and function compute.

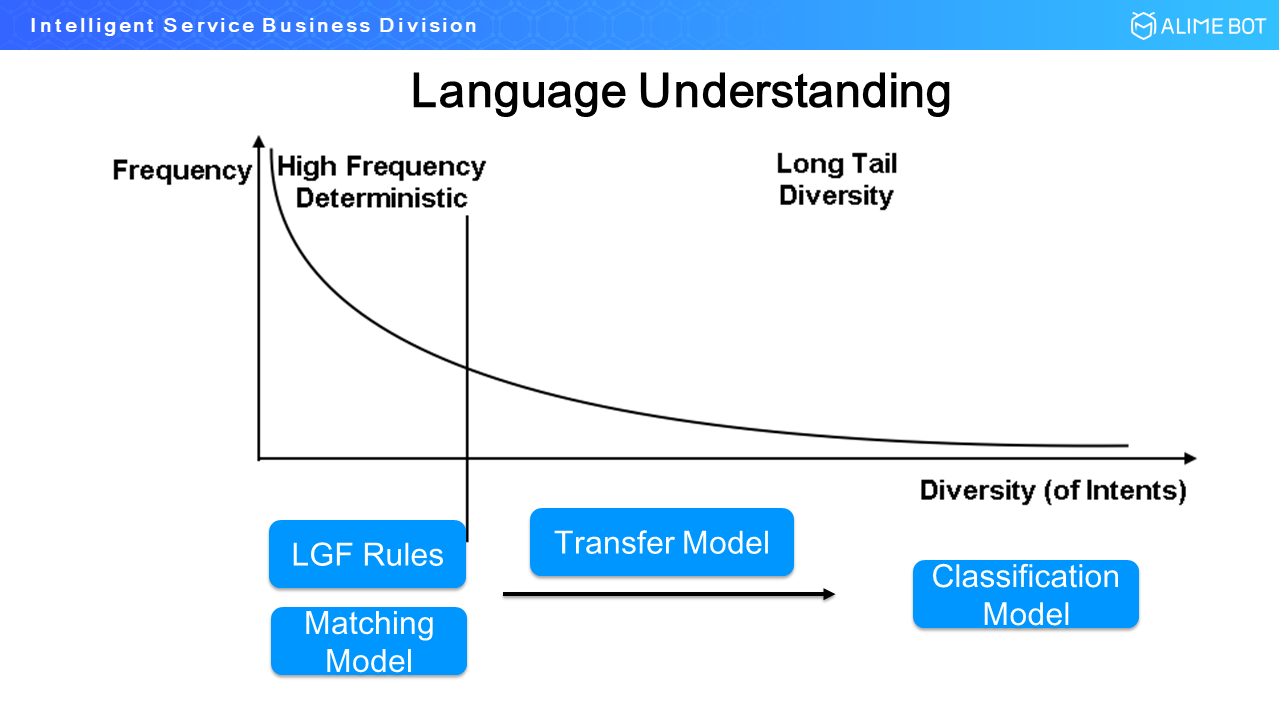

First, let's take a look at the preceding figure. The horizontal axis measures the diversity of the ways an intent is expressed, and the vertical axis indicates frequency. This is a bit abstract, so let's look at a specific example. Say I want to issue an invoice. This is an intent. If we sample the dialog input of 100,000 users with this same intent and measure the frequency of different word combinations used to express this intent, perhaps the most common phrase used will be "issue an invoice", which occurs, for example, 20,000 times. The second most common phrase may be "print an invoice", which occurs 8,000 times. These are both high-frequency phrases. The same intent can also be expressed by much longer phrases, such as "Yesterday I bought a red skirt in your shop. Could you help me issue an invoice?" Such sentences, which include a long explanation of the background, are used less frequently and make up the long tail in the preceding graph. Such a phrase may only appear once or twice in the sample set.

After compiling statistics, we can see that the diversity of intent statements follows a power-law distribution. This means we can implement an effectively targeted design through technical means. First, we can apply some rules to high-frequency statements, such as a context-free grammar. This will allow us to deal with high-frequency statements more effectively. However, a rule-based approach cannot be generalized. Therefore, we need to use a matching model and calculate similarity to assist the rules. The combination of these two approaches allows us to better handle the high-frequency part of the graph. For the long tail composed of low-frequency statements, the current approach is to use a supervised classification model. This means we have to collect or label lots of data. Between the rule component and the classification model, we use a transfer learning model. The following figure shows why we need to introduce the transfer learning model.
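The tiered approach described above (rules first, then similarity matching, then a classifier for the long tail) can be sketched roughly as follows. This is a minimal illustration, not Dialog Studio's implementation: the intent names, the regular expression, the example utterances, the 0.6 threshold, and the use of `difflib` string similarity in place of a learned matching model are all assumptions made for the example.

```python
import re
from difflib import SequenceMatcher

# Hypothetical rule patterns covering high-frequency phrasings of one intent.
RULES = {
    "issue_invoice": [re.compile(r"\b(issue|print)\s+(an?\s+)?invoice\b", re.I)],
}

# Hypothetical example utterances used by the similarity matcher.
EXAMPLES = {
    "issue_invoice": ["issue an invoice", "print an invoice"],
    "check_logistics": ["where is my package", "track my order"],
}


def match_by_rule(text):
    """Return the first intent whose rule pattern matches, else None."""
    for intent, patterns in RULES.items():
        if any(p.search(text) for p in patterns):
            return intent
    return None


def match_by_similarity(text, threshold=0.6):
    """Return the intent of the most similar example if it clears the threshold."""
    best_intent, best_score = None, 0.0
    for intent, examples in EXAMPLES.items():
        for example in examples:
            score = SequenceMatcher(None, text.lower(), example).ratio()
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None


def recognize(text, classifier=None):
    """Try rules, then similarity matching, then an optional long-tail classifier."""
    intent = match_by_rule(text)
    if intent:
        return intent, "rule"
    intent = match_by_similarity(text)
    if intent:
        return intent, "match"
    if classifier is not None:
        return classifier(text), "classifier"
    return None, "unknown"
```

Ordering the tiers this way keeps the cheap, precise components in front, so the trained classifier is only consulted for statements that the rules and the matcher do not cover.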

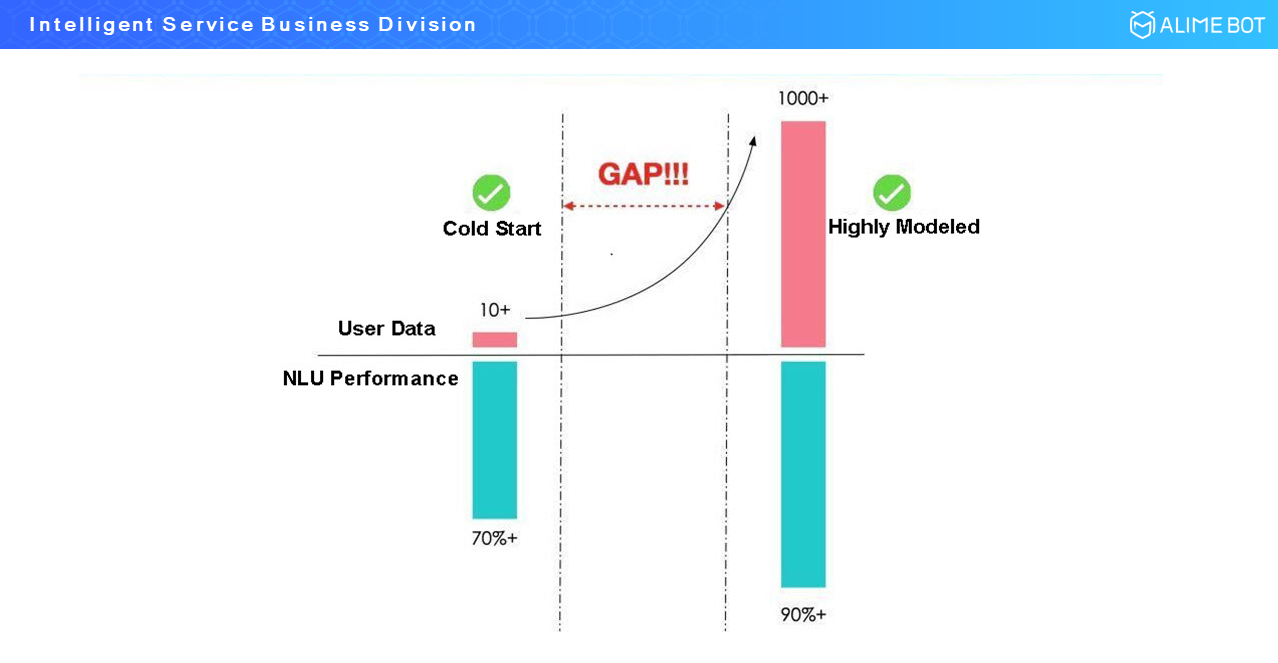

In the cold start phase, users do not enter many samples, perhaps a dozen to a few dozen. According to the power-law distribution and the Pareto principle, these samples can achieve an accuracy of a bit more than 70%, but no better. This is not good enough: a production model needs an accuracy in excess of 90%, which normally requires a complex model and a large amount of labeled data. There is a gap between the current performance and the expected performance, which is why we introduced the transfer learning model.

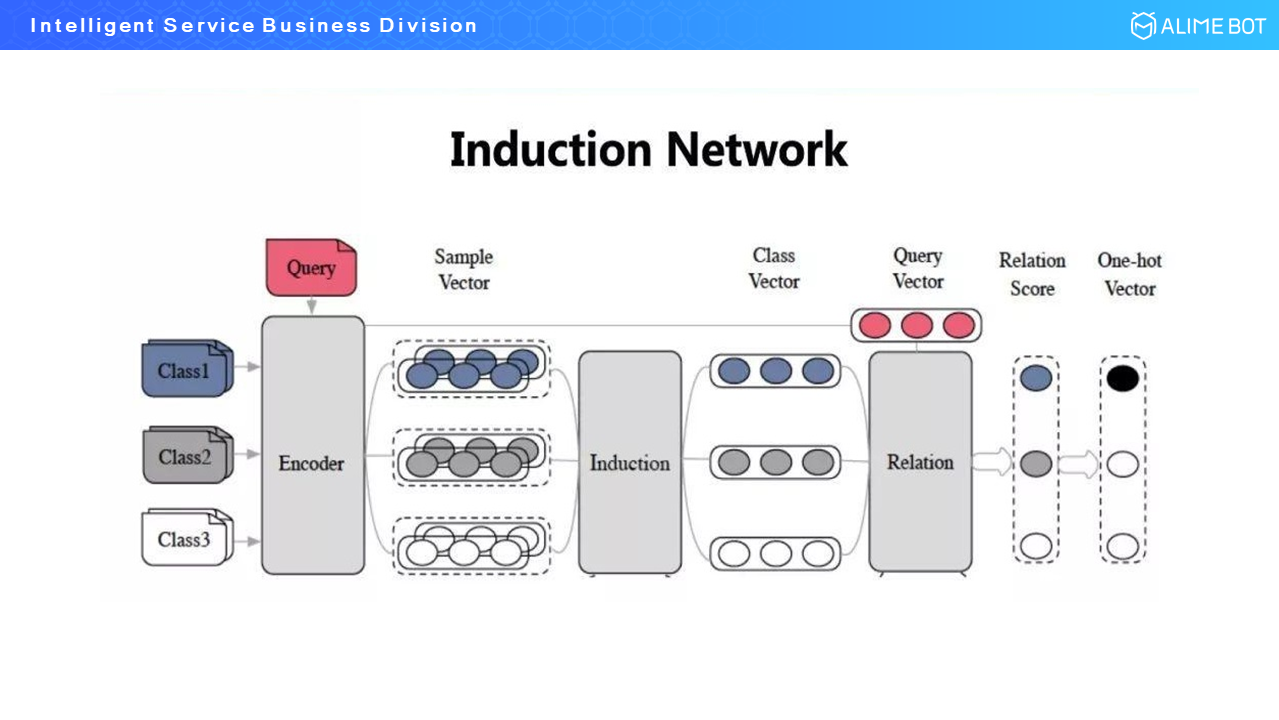

Specifically, we combined a capsule network with few-shot learning to create an induction network. This network has three layers: Encoder, Induction, and Relation.

The first layer, the Encoder layer, is responsible for encoding each sample of each class into a vector. The second layer, the Induction layer, is the most important layer of the three. It uses some capsule network methods to generalize multiple vectors of the same class into a single vector. The third layer, the Relation layer, calculates the relationship of newly input statements to the induction vector of each class and outputs their similarity scores. If we want a single classification result, we can output a one-hot vector. If we do not want a one-hot vector, we can output a relation score.
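To make the three layers more concrete, here is a heavily simplified sketch of the Encoder, Induction, and Relation steps in plain Python. It is an illustration under several assumptions rather than the actual network: the encoder is a normalized bag-of-words vector instead of a learned sentence encoder, the induction step is a bare dynamic-routing loop without learned transformations, and the relation step uses cosine similarity as a stand-in for a learned relation module. All function names and the sample data are hypothetical.

```python
import math


def build_vocab(support):
    """Map every word in the support set to a vector index."""
    words = sorted({w for texts in support.values() for t in texts for w in t.lower().split()})
    return {w: i for i, w in enumerate(words)}


def encode(text, vocab):
    """Encoder (toy): L2-normalized bag-of-words vector; unknown words are ignored."""
    vec = [0.0] * len(vocab)
    for word in text.lower().split():
        if word in vocab:
            vec[vocab[word]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def squash(vec):
    """Capsule-style squashing: keeps the direction, maps the norm into [0, 1)."""
    sq = sum(v * v for v in vec)
    if sq == 0.0:
        return vec[:]
    scale = sq / (1.0 + sq) / math.sqrt(sq)
    return [v * scale for v in vec]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def induce(sample_vecs, iterations=3):
    """Induction: dynamic routing merges the sample vectors of one class into one vector."""
    logits = [0.0] * len(sample_vecs)
    class_vec = [0.0] * len(sample_vecs[0])
    for _ in range(iterations):
        coupling = softmax(logits)
        weighted = [sum(c * s[d] for c, s in zip(coupling, sample_vecs))
                    for d in range(len(class_vec))]
        class_vec = squash(weighted)
        # Samples that agree with the current class vector receive more weight.
        logits = [b + sum(x * y for x, y in zip(s, class_vec))
                  for b, s in zip(logits, sample_vecs)]
    return class_vec


def cosine(a, b):
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb) if na and nb else 0.0


def classify(query, support):
    """Relation (toy): score the query against each class vector and pick the best."""
    vocab = build_vocab(support)
    q = encode(query, vocab)
    scores = {label: cosine(q, induce([encode(t, vocab) for t in texts]))
              for label, texts in support.items()}
    return max(scores, key=scores.get), scores


support = {
    "invoice": ["issue an invoice", "print an invoice", "I need an invoice"],
    "weather": ["what is the weather today", "weather forecast for tomorrow",
                "is it going to rain"],
}
label, scores = classify("please issue an invoice for me", support)
```

The returned `scores` dictionary plays the role of the relation scores, and `label` corresponds to collapsing them into a single classification result.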

After we proposed this induction network structure, we tested it on academic few-shot learning datasets. This network produced a significant performance improvement and currently provides the best performance on these datasets. At the same time, we deployed the network in our products.

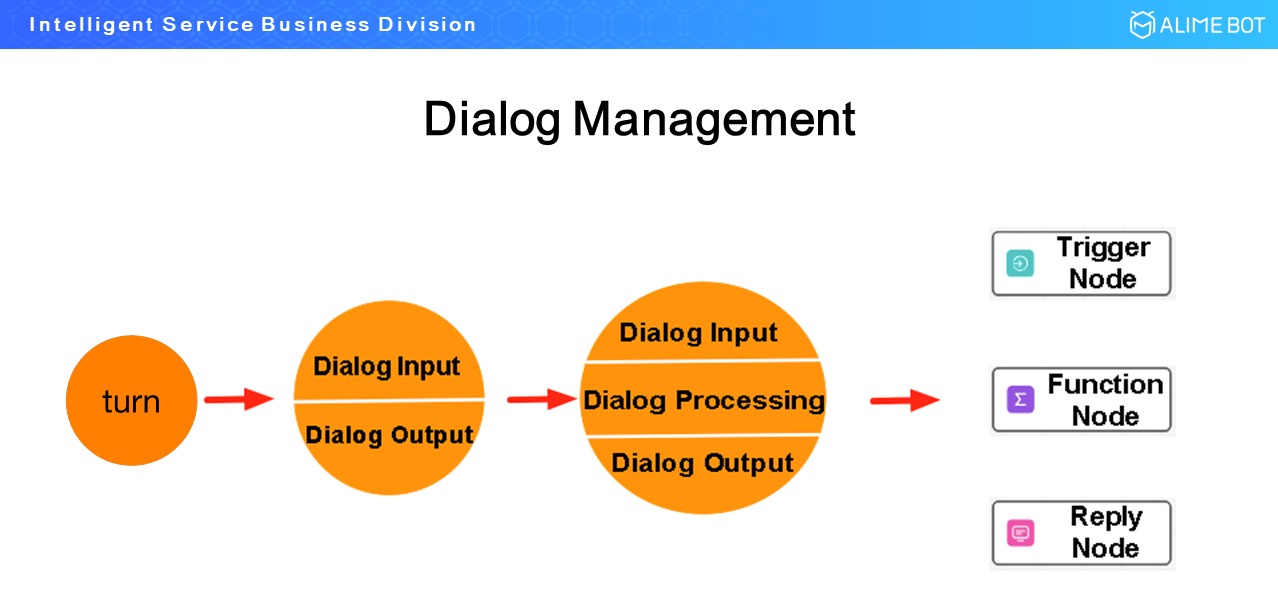

As I mentioned earlier, to give a platform sufficient adaptability, it must have good abstraction capabilities. Dialog management is where we apply this abstraction. The smallest unit of a dialog is a round. Each round can be divided into two parts, dialog input and dialog output. Between the input and output, there is a dialog processing procedure. The same applies to human communication. When I ask a question, you think about it and then answer. If you do not think about the question before answering it, your answer is meaningless. Therefore, this intermediate dialog processing procedure is essential to true dialogs.

By abstracting dialogs into these components, we were able to design a platform with three nodes: a trigger node, a function node, and a reply node.

The trigger node corresponds to the dialog input, the function node corresponds to the dialog processing procedure, and the reply node corresponds to the dialog output. Abstracted in this way, you can plot your business workflow by combining these three nodes regardless of your industry, scenario, or specific dialog process.
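As a rough illustration of this abstraction, the three node types can be modeled as small classes that are chained into a flow. The class names, the keyword-based trigger, and the mobile data lookup below are hypothetical and only sketch the idea; the lookup is a stub rather than a real backend call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class TriggerNode:
    """Dialog input: decides whether an utterance starts this flow."""
    keywords: List[str]

    def matches(self, utterance: str) -> bool:
        text = utterance.lower()
        return any(k in text for k in self.keywords)


@dataclass
class FunctionNode:
    """Dialog processing: runs business logic and returns an updated context."""
    run: Callable[[Dict], Dict]


@dataclass
class ReplyNode:
    """Dialog output: renders the context into a reply."""
    template: str

    def render(self, context: Dict) -> str:
        return self.template.format(**context)


@dataclass
class Flow:
    trigger: TriggerNode
    functions: List[FunctionNode]
    reply: ReplyNode

    def handle(self, utterance: str) -> Optional[str]:
        if not self.trigger.matches(utterance):
            return None
        context = {"utterance": utterance}
        for node in self.functions:
            context = node.run(context)
        return self.reply.render(context)


def lookup_balance(ctx):
    # Stub standing in for a backend service call.
    return {**ctx, "remaining_mb": 3584}


def to_gigabytes(ctx):
    return {**ctx, "remaining_gb": round(ctx["remaining_mb"] / 1024, 1)}


data_flow = Flow(
    trigger=TriggerNode(["mobile data", "data balance"]),
    functions=[FunctionNode(lookup_balance), FunctionNode(to_gigabytes)],
    reply=ReplyNode("You have {remaining_gb} GB of mobile data left this month."),
)
```

Calling `data_flow.handle("How much mobile data do I have left?")` walks the trigger, function, and reply nodes in order, while an unrelated utterance returns `None` so that another flow can pick it up.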

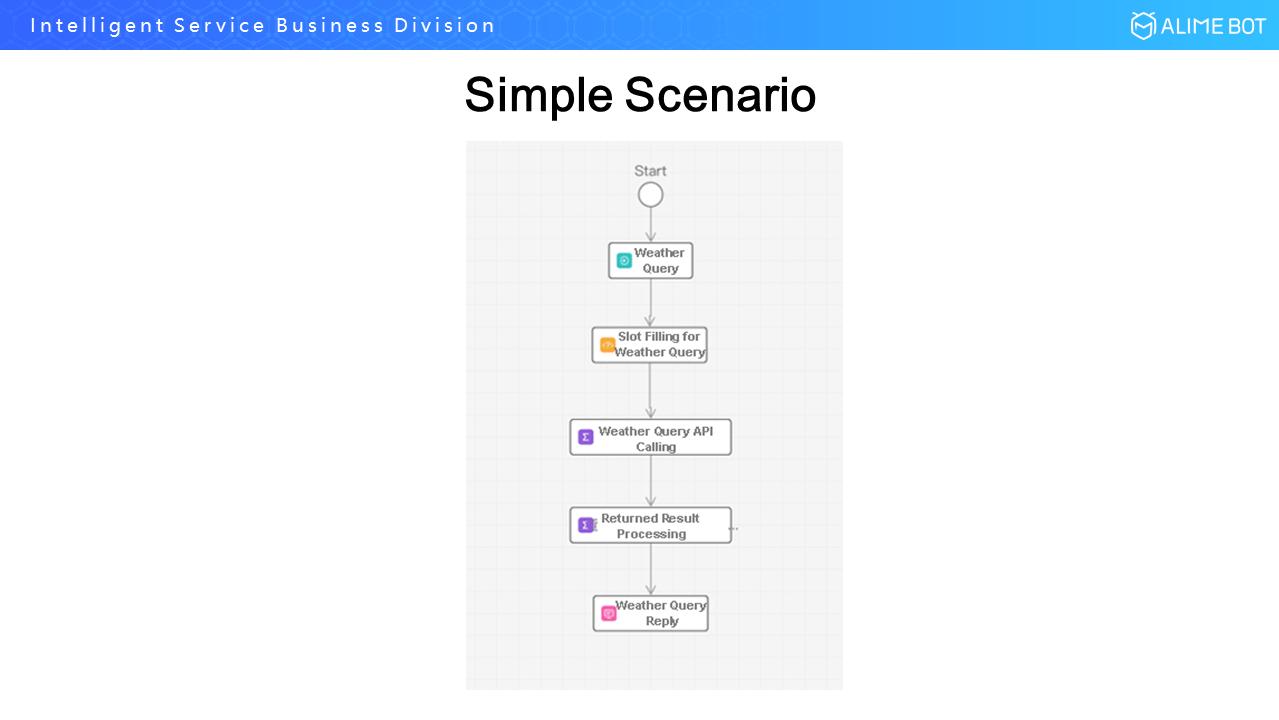

Let's look at a simple example. Say you need to check the weather. The process is very simple. First, the trigger node triggers the weather process. This process has two intermediate function nodes. One calls the API of the National Meteorological Center and returns the result. The other node parses and packages the result and returns the information to the user in a readable format through the reply node. Here, I should also briefly describe slot filling nodes. In task-based dialog scenarios, almost all tasks need to collect user information. For example, if you want to check the weather, the system must ask for the desired date, time, and location. This is called slot filling. Because slots are extremely common, we can preset them for cold start. To do this, we can use the three basic nodes to build a slot filling template. When we need to fill a slot, we can drag in a slot filling node and drop it at the desired position.
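A slot filling node of this kind might look roughly like the sketch below. The `Slot` and `SlotFillingNode` names, the prompts, and the keyword-based value extraction are assumptions made for illustration; a real system would rely on the language understanding service to extract slot values.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Slot:
    name: str
    prompt: str
    values: List[str]  # hypothetical closed vocabulary used for toy extraction

    def extract(self, utterance: str) -> Optional[str]:
        text = utterance.lower()
        for value in self.values:
            if value in text:
                return value
        return None


@dataclass
class SlotFillingNode:
    slots: List[Slot]
    filled: Dict[str, str] = field(default_factory=dict)

    def step(self, utterance: str) -> Optional[str]:
        """Consume one user turn; return the next prompt, or None when all slots are filled."""
        for slot in self.slots:
            if slot.name not in self.filled:
                value = slot.extract(utterance)
                if value is not None:
                    self.filled[slot.name] = value
        for slot in self.slots:
            if slot.name not in self.filled:
                return slot.prompt
        return None


def make_weather_slots() -> SlotFillingNode:
    """Build a fresh node that collects the date and location for a weather query."""
    return SlotFillingNode([
        Slot("date", "Which day?", ["today", "tomorrow"]),
        Slot("location", "Which city?", ["hangzhou", "beijing"]),
    ])
```

Because the node keeps asking until every slot has a value, a user can supply the date and the location in one sentence or across several turns.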

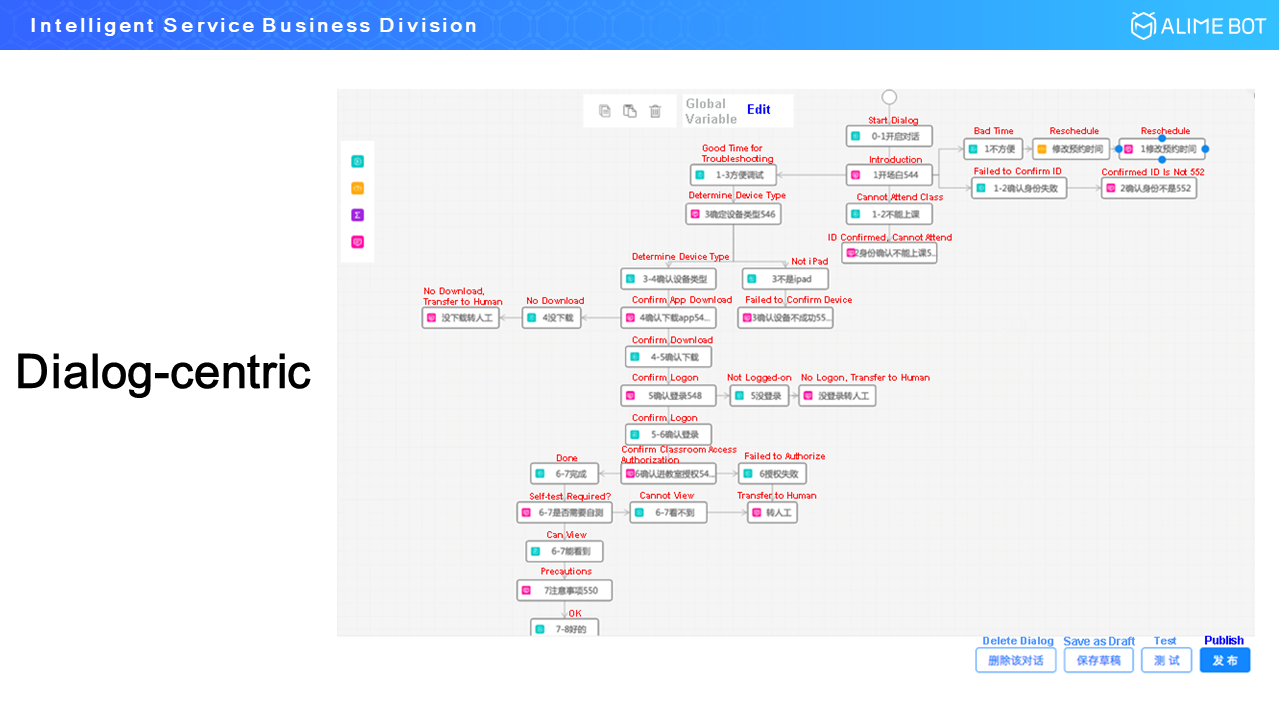

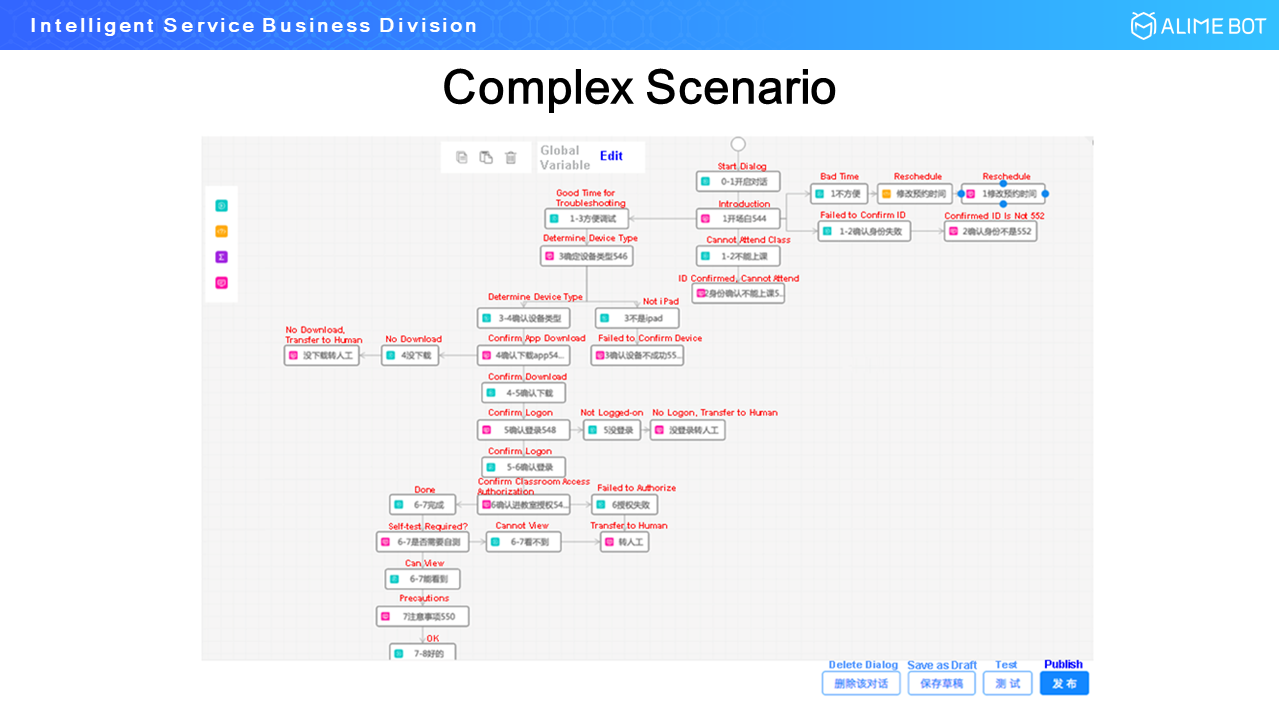

Look at the complicated scenario shown in the preceding figure. This is an outbound call scenario for online education. People with children will know that this is a common practice in the online education industry. Half an hour before a class, a robot will call users to instruct them how to download the class app, log on to the app, and enter the classroom.

These two examples show that the three abstract nodes can be used in both simple and complex scenarios.

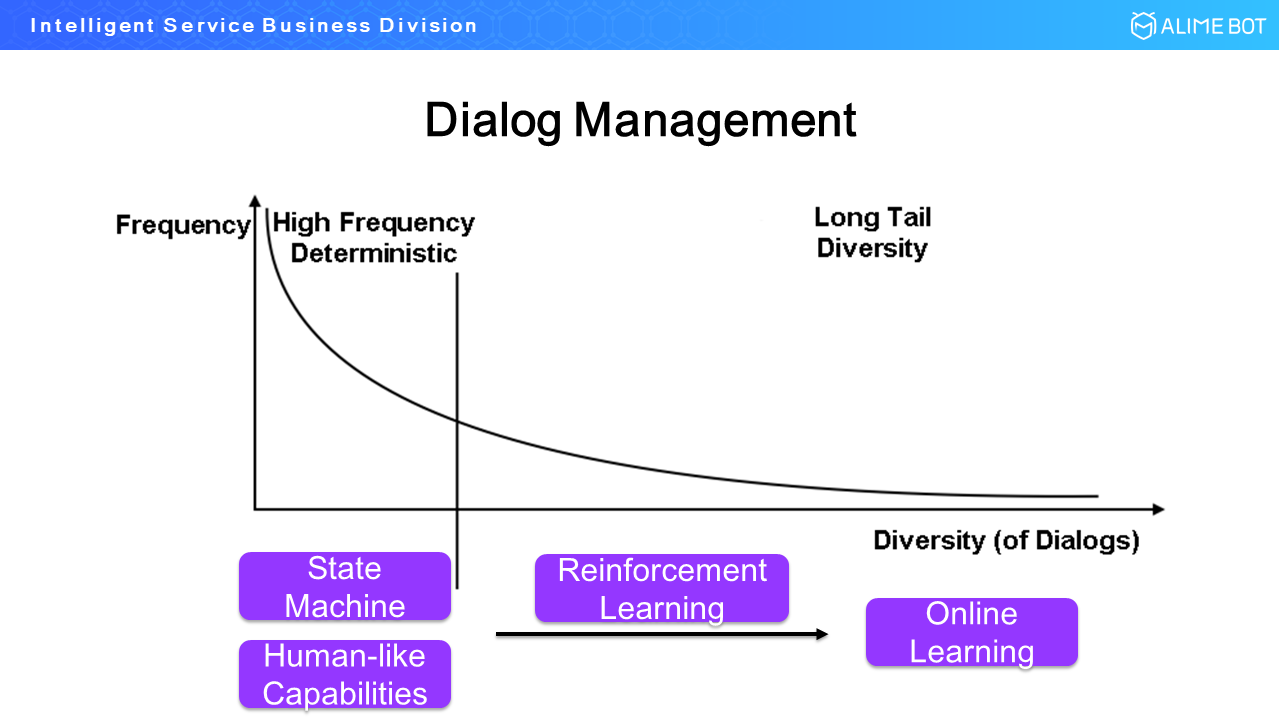

Now, let's look at specific dialog management techniques. In terms of implementation, the preceding figure is exactly the same as the one that we used when talking about language understanding. The only difference is that we are now considering dialogs instead of intents.

For example, if you collect 100,000 samples of weather query dialog inputs and count the frequency of the different input statements, they would form a curve like the one above. In a weather query process, we must first fill the time slot and then the location slot. Then, we can return the result to complete the process. This could be the process in 20,000 of the 100,000 samples. In other situations, the system may have to ask a question and receive an answer, and many different answers are possible. Therefore, the overall dialog structure itself also follows a power-law distribution.

We can handle the high-frequency part of the graph by using a state machine. However, the state machine cannot provide strong fault tolerance. This will cause problems if the system asks a question, but does not receive an understandable answer. In this case, it is necessary to introduce human-like capabilities to supplement the state machine. After adding human-like capabilities to the state machine, we can basically handle high-frequency dialogs. Dialogs on the long tail are currently a challenge for practitioners in both academia and industry. One good solution is to introduce online interactive learning after launch so that the model can continually learn from dialogs with users. However, there is a gap between what the state machine covers and what online interactive learning covers. We use reinforcement learning to fill this gap, because state machines cannot learn on their own. Now, I will discuss the work we are doing in human-like capabilities and reinforcement learning.

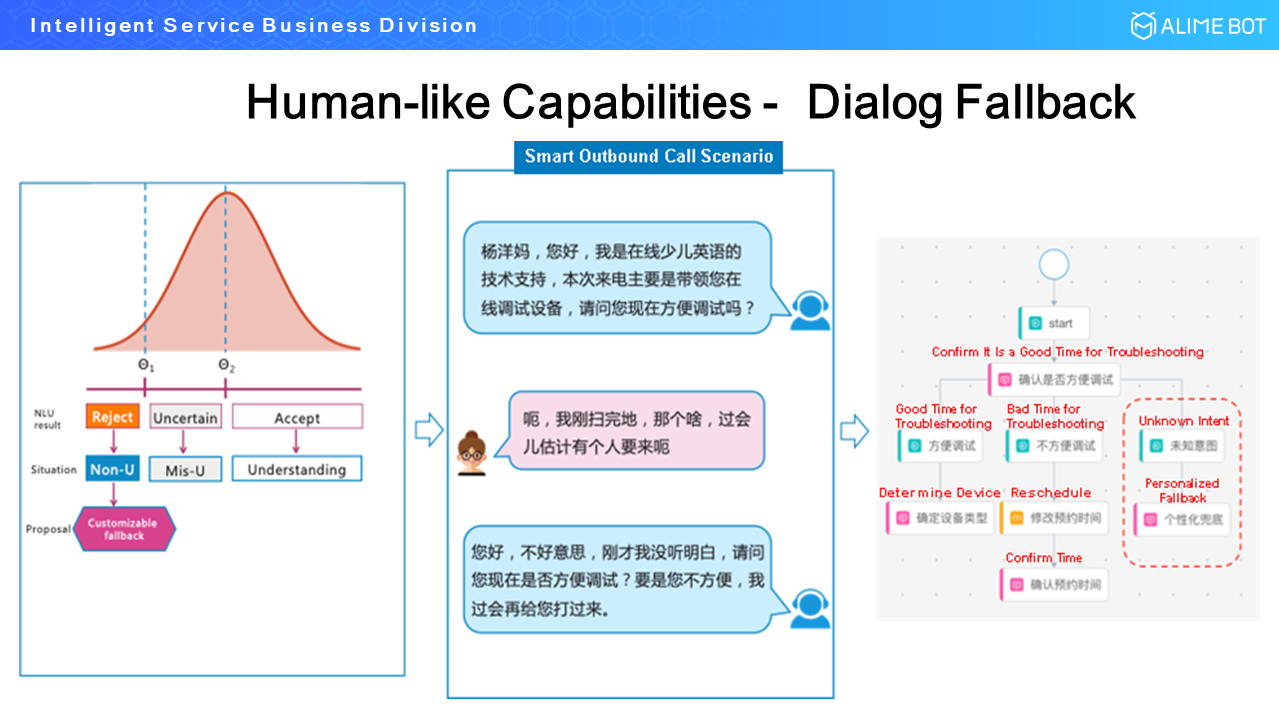

Let's take a look at human-like capabilities first. We can classify user input into three types. 1) Input that clearly expresses one and only one intent, which can be understood by a machine. 2) Unknown input, which cannot be understood by the machine at all. 3) Ambiguous (uncertain) input, where the machine can understand the user input to a certain extent. For example, the user might express multiple intents. State machines can capture and describe unambiguous (certain) input very well, so human-like capabilities are mainly used for unknown and uncertain input.

Let's consider an example of an unknown statement. Say a customer service bot calls a user to ask if it is a good time for the user to troubleshoot a device. After asking this question, the bot will expect an answer similar to "it is a good time" or "it is not a good time". However, if the bot receives an unexpected response like "I'm just here tidying up. The person you want should be back in a little while.", the language model will have a hard time grasping the semantic meaning. At this time, it is necessary to introduce a personalized fallback response for unknown inputs, such as "Sorry, I didn't understand your response. Is it convenient for you to troubleshoot the device now? If it's not convenient for you, I'll call you back later." This is a fallback solution such systems use to handle unknown input.
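The fallback behavior in this example can be sketched as a single state of a state machine that re-asks its question when the answer is not understood. The phrase sets, state names, and retry limit below are hypothetical; in particular, giving up and scheduling a callback after repeated failures is an assumption added for the sketch, not something the platform is stated to do.

```python
YES = {"yes", "sure", "ok", "it is a good time"}
NO = {"no", "not now", "later", "it is not a good time"}


def understand(utterance):
    """Toy understanding: return 'yes', 'no', or None for unknown input."""
    text = utterance.lower().strip(" .!?")
    if text in YES:
        return "yes"
    if text in NO:
        return "no"
    return None


class ConfirmTimeState:
    QUESTION = "Is it convenient for you to troubleshoot the device now?"
    FALLBACK = ("Sorry, I didn't understand your response. " + QUESTION +
                " If it's not convenient for you, I'll call you back later.")

    def __init__(self, max_retries=2):
        self.retries = 0
        self.max_retries = max_retries  # assumed limit before giving up

    def handle(self, utterance):
        """Return (next_state, reply) for one user turn."""
        answer = understand(utterance)
        if answer == "yes":
            return "troubleshoot", "Great, let's get started."
        if answer == "no":
            return "call_back", "No problem, I'll call you back later."
        self.retries += 1
        if self.retries > self.max_retries:
            return "call_back", "I'll call you back later."
        # Unknown input: stay in this state and re-ask with a tailored fallback.
        return "confirm_time", self.FALLBACK
```

The fallback reply is specific to the question this state asked, which is what makes it read as a personalized response rather than a generic "I don't understand".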

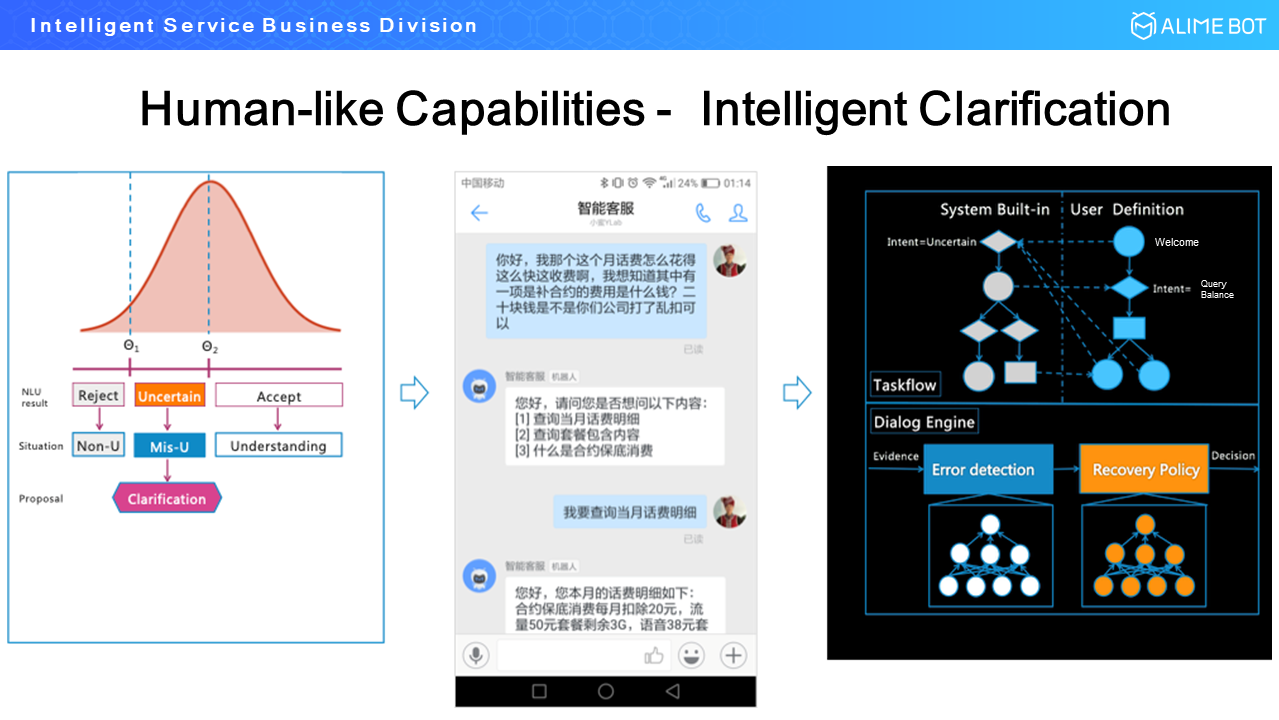

Next, let's take a look at how a bot can clarify user input when the original input is ambiguous. After analyzing a large amount of data, we found that ambiguity mainly occurs in two cases. The first is when a user expresses multiple intents in a single statement. The second is when a user gives a great deal of background information before expressing an intent. In these cases, our language model can output an intent probability distribution that the dialog management module can use to ask the user for clarification in another round of dialog. For example, the example from the telecom field in the preceding figure has three intents. The user wants to ask about his phone bill details, ask about his subscription, and ask about the minimum of a contract. In response, the system asks the user to clarify his or her intent from among these three questions.

The figure shows the technical details of the solution. The developer must clearly describe the dialog process with a flowchart, but the clarification process is a built-in capability of our system. When and how clarification happens is determined by two underlying engines. The first is the Error Detection engine, which detects whether clarification is required for the user input. If so, it hands the task over to the Recovery Policy engine. This second engine determines how to carry out the clarification and how to generate the clarification wording. This is how our clarification process works at present.
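The division of labor between the two engines can be illustrated with a small sketch that works on an intent probability distribution. The function names, the thresholds (0.7 and 0.2), and the wording of the clarification question are assumptions for illustration only and do not describe how the actual engines decide.

```python
def detect_error(intent_probs, accept=0.7, reject=0.2):
    """Error detection: label the understanding result as certain, ambiguous, or unknown."""
    top = max(intent_probs.values(), default=0.0)
    if top >= accept:
        return "certain"
    if top < reject:
        return "unknown"
    return "ambiguous"


def recover(intent_probs, descriptions, min_prob=0.2, max_options=3):
    """Recovery policy: build a clarification question from the plausible intents."""
    ranked = sorted(intent_probs.items(), key=lambda kv: kv[1], reverse=True)
    options = [intent for intent, p in ranked if p >= min_prob][:max_options]
    lines = [f"{i}. {descriptions[intent]}" for i, intent in enumerate(options, 1)]
    question = "Which of these would you like help with?\n" + "\n".join(lines)
    return options, question


# Hypothetical distribution for a telecom statement that mixes three intents.
probs = {"bill_details": 0.4, "subscription": 0.3, "contract_minimum": 0.25, "other": 0.05}
descriptions = {
    "bill_details": "Phone bill details",
    "subscription": "Your subscription",
    "contract_minimum": "The minimum of your contract",
    "other": "Something else",
}
status = detect_error(probs)
options, question = recover(probs, descriptions)
```

Keeping detection separate from recovery means the wording and the number of options offered can change without touching the logic that decides whether clarification is needed.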

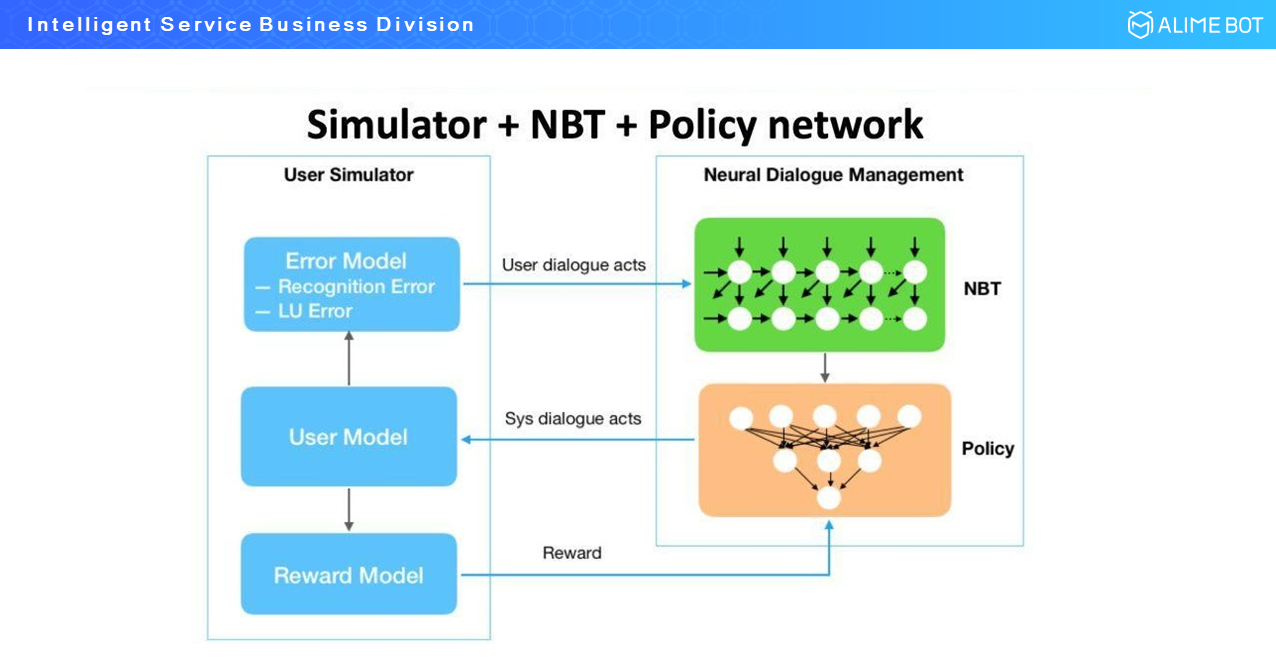

Now, let's look at where we are in reinforcement learning. The dialog management model is typically divided into two sub-modules: a neural belief tracker for tracking dialog states and a policy network for behavior decision-making. Looking at the overall framework, we can use two methods to train this network. One is end-to-end training, which applies reinforcement learning directly. However, this method generally converges slowly and produces poor results. The second method is to pre-train the sub-modules separately. Currently, pre-training through supervised learning provides good results. With this method, there is no need to train from scratch through reinforcement learning. Instead, we use reinforcement learning to tune the model that was pre-trained through supervised learning.

Whether we perform one-step end-to-end training or pre-training and then tuning, as long as reinforcement learning is involved, an external environment is always required. Therefore, in our implementation architecture, we introduce a user simulator. This simulator is composed of three major models. The first is the user model, which is used to simulate human behavior. The second is the error model. After the user model simulates human behavior, the error model introduces an error disturbance. The user model only outputs results with a probability of 1, which is not sufficient for network training. The error model disturbs this result and introduces several other results to it. It then recalculates the probability distribution to improve the scalability and generalization capability of the trained model. The third is the reward model, which provides a reward value. This is what we are currently working on in the field of reinforcement learning for dialog management.
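The interplay of the three models can be sketched as a tiny simulated environment. Everything in the sketch is an assumption made for illustration: the class names, the action format, the share of probability moved to alternative values (0.3), and the reward values (-1 per turn, +20 on success) are not taken from the actual simulator.

```python
class UserModel:
    """Simulates a user who answers slot requests according to a fixed goal."""

    def __init__(self, goal):
        self.goal = goal

    def respond(self, system_action):
        slot = system_action["request"]
        # Deterministic output: a single user action with probability 1.
        return {("inform", slot, self.goal[slot]): 1.0}


class ErrorModel:
    """Moves part of the probability mass to confusable values, then renormalizes."""

    def __init__(self, confusions, error_mass=0.3):
        self.confusions = confusions  # slot -> list of confusable values
        self.error_mass = error_mass

    def perturb(self, distribution):
        noisy = {}
        for (act, slot, value), p in distribution.items():
            alternatives = [v for v in self.confusions.get(slot, []) if v != value]
            if not alternatives:
                noisy[(act, slot, value)] = p
                continue
            noisy[(act, slot, value)] = p * (1 - self.error_mass)
            share = p * self.error_mass / len(alternatives)
            for alt in alternatives:
                noisy[(act, slot, alt)] = share
        total = sum(noisy.values())
        return {action: p / total for action, p in noisy.items()}


class RewardModel:
    """Small penalty per turn, larger reward when the goal is reached."""

    def score(self, filled, goal, done):
        return 20.0 if done and filled == goal else -1.0


class UserSimulator:
    def __init__(self, goal, confusions):
        self.goal = goal
        self.user = UserModel(goal)
        self.error = ErrorModel(confusions)
        self.reward = RewardModel()
        self.filled = {}

    def step(self, system_action):
        """Return (noisy user-action distribution, reward, done) for one system action."""
        observed = self.error.perturb(self.user.respond(system_action))
        # For simplicity, track the most likely value as the dialog state.
        (_, slot, value), _ = max(observed.items(), key=lambda kv: kv[1])
        self.filled[slot] = value
        done = set(self.filled) == set(self.goal)
        return observed, self.reward.score(self.filled, self.goal, done), done
```

A policy being trained would call `step` once per system action and receive a distribution over user actions rather than a single certain answer, which is the effect of the error model described above.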

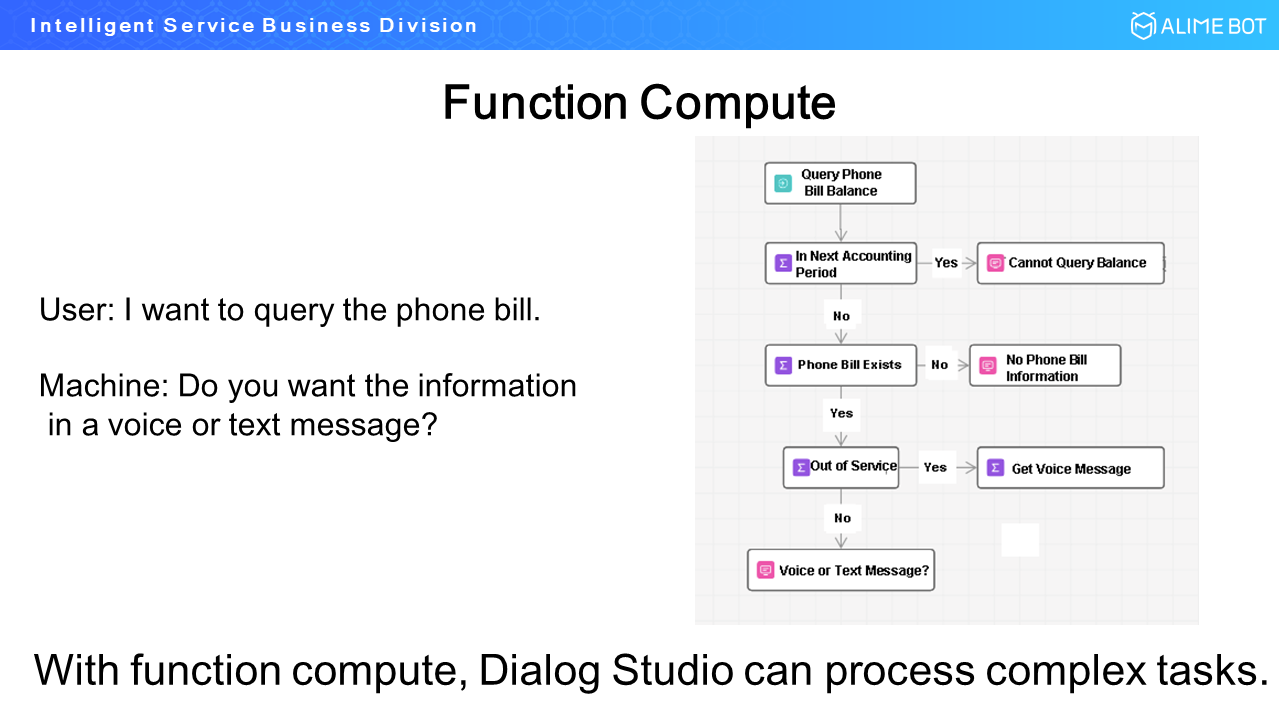

Let me provide an example to better explain function compute. Assume that a China Mobile user wants to check his phone bill. When the user gets through to customer service, the customer service bot replies by either text or voice message. On the surface, this seems to be a simple one-input and one-output process. In fact, however, it takes several rounds of service inquiries to obtain the final result. When the user requests phone bill details, function compute is used to obtain the current date. If the current date is in the next accounting period, for example on the last day of the month, the phone bill inquiry service is unavailable. If the date allows phone bill inquiry, the system must first determine if the user actually has a phone bill. If yes, the system makes a third call to check if the user's device is out of service. If yes, the system must send a voice message because the user will not be able to receive text messages. As you can see, this process is actually very complicated. Currently, all such processes are implemented on the bot's end by running code. Function compute allows Dialog Studio to process complex tasks.
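The chain of checks in this example could be written as a single function of the kind that function compute would run. This is a sketch under stated assumptions: the function names, the injected `bill_service` and `status_service` callables, the reply texts, and the simplification that inquiries are blocked only on the last day of the month are all hypothetical.

```python
import calendar
from datetime import date


def in_blocked_window(today: date) -> bool:
    """Assumption: bill inquiries are unavailable only on the last day of the month."""
    return today.day == calendar.monthrange(today.year, today.month)[1]


def handle_bill_query(user_id, today, bill_service, status_service):
    """Chain the backend checks and decide the reply text and its channel."""
    # Check 1: is the inquiry service available on this date?
    if in_blocked_window(today):
        return {"channel": "text", "message": "Bill inquiry is unavailable today."}
    # Check 2: does the user actually have a bill?
    bill = bill_service(user_id)
    if bill is None:
        return {"channel": "text", "message": "No bill was found for this account."}
    # Check 3: an out-of-service device cannot receive text messages.
    out_of_service = status_service(user_id) == "out_of_service"
    channel = "voice" if out_of_service else "text"
    return {"channel": channel, "message": f"Your bill this month is {bill:.2f} yuan."}


# Stub services standing in for real backend calls.
result = handle_bill_query(
    "user-1",
    date(2024, 1, 15),
    bill_service=lambda uid: 58.5,
    status_service=lambda uid: "out_of_service",
)
```

Passing the backend services in as parameters keeps the decision logic testable without calling any real system.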

To wrap up, let's consider some of Dialog Studio's business applications. In Zhejiang, a province in China, Dialog Studio is used for the 114 hotline. When someone parks illegally and blocks you in, you can call this hotline to have the system place an automatic call to ask the offender to move the car. A second example comes from the loan collections field. In addition, during the past Double 11 Shopping Festival, Dialog Studio was applied on a large scale in the e-commerce field. Its main applications in this field are Alime Shop Assistant and AlimeBot.

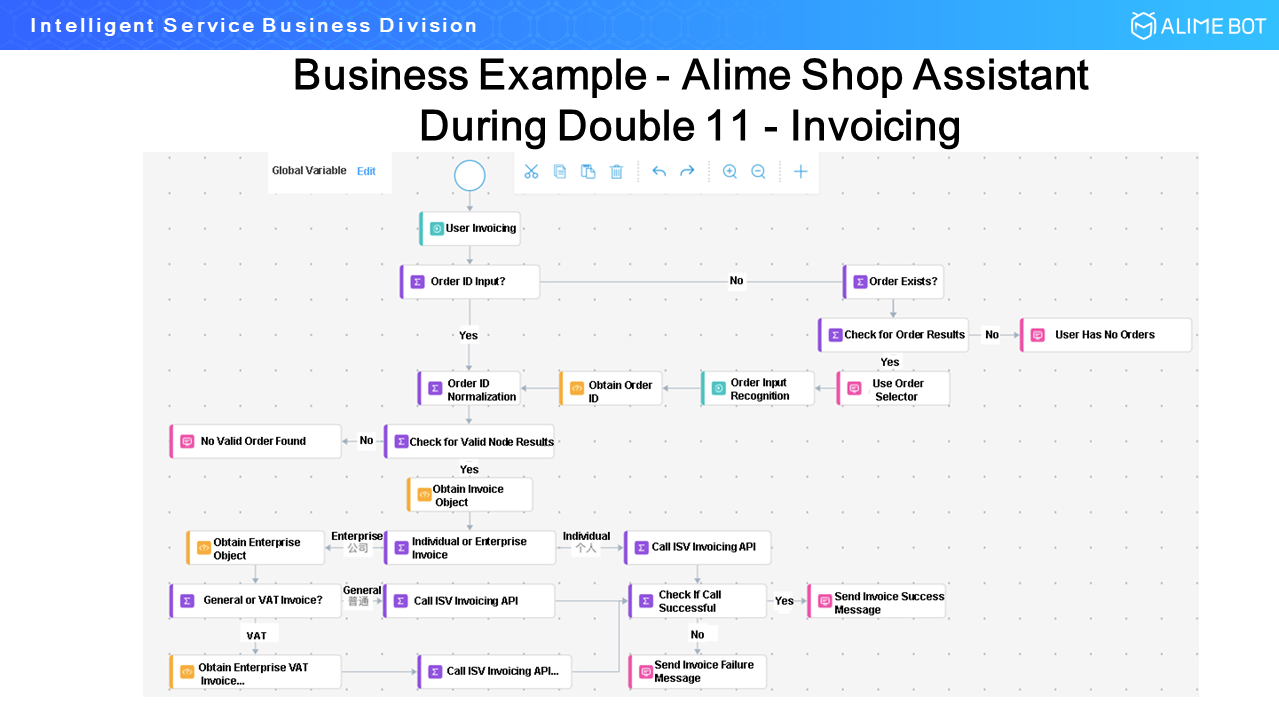

Alime Shop Assistant mainly handles invoicing, delivery reminders, address changes, and other similar processes. When a user requests an invoice, the system must perform a complicated process. First, it needs an order number. If the user has not already provided one, the system calls the backend order lookup system, which uses an order selector to select the appropriate order. Next, the system must determine whether the invoice is an individual or enterprise invoice, as this determines the subsequent process. Next, the system asks whether it is a general invoice or a VAT invoice. If it is a general invoice, the process proceeds. If it is a VAT invoice, the system must obtain the enterprise's VAT tax ID. Finally, the system summarizes the information and calls the backend invoicing system to issue the invoice. This is the invoicing process used during Double 11.

AlimeBot primarily serves the business units in Alibaba Group. For Taobao Mobile, our largest business, the AlimeBot customer service feature is provided on the user homepage. Just last month, we launched Youku AlimeBot for Youku. Starbucks, our largest new retail project, also uses AlimeBot in many scenarios.

With DingTalk smart work assistants, Dialog Studio provides smart attendance, smart HR, and other dialog services to millions of enterprises.

In all, Dialog Studio currently provides services to all the internal businesses of the Alibaba economy (such as Taobao, Youku, and Hema Fresh), the merchants on Taobao and Tmall, millions of companies that use DingTalk, companies that use our public cloud, and private cloud users in key industries such as government affairs, telecom, and finance. So far, we already serve customers around the world, including in Singapore, Indonesia, Vietnam, Thailand, the Philippines, and Malaysia. We are empowering developers in all industries by allowing them to create their own dialog bots.
