By Farruh

Previously, we prepared an ECS instance and set up a Docker environment on it (here and here). Now, it's time to run a machine learning model on Docker and get results. Let's take image classification as an example. Image classification tasks are well studied and offer considerable business value. Moreover, classification is a fundamental step toward understanding an entire image.

Image classification categorizes and labels groups of pixels or vectors within an image according to specific rules. These rules can be derived from one or more spectral or textural characteristics. The two general approaches to classification are supervised and unsupervised. The goal is to classify the image by assigning it a specific label. Typically, image classification deals with images in which only one object appears and is analyzed.
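To make the final labeling step concrete, here is a minimal Python sketch, assuming a supervised classifier that outputs one raw score (logit) per class. The class names `cat` and `dog` are assumptions based on the image name used later in this article, the scores are invented for illustration, and the helper names are hypothetical; the real model's output format may differ. Softmax turns the scores into probabilities, and the image receives the label with the highest probability.

```python
import math
from typing import Dict, List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    # Subtract the largest score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def assign_label(scores: List[float], labels: List[str]) -> Tuple[str, Dict[str, float]]:
    """Return the most probable label together with the per-label probabilities."""
    if len(scores) != len(labels):
        raise ValueError("need exactly one score per label")
    probs = dict(zip(labels, softmax(scores)))
    best = max(probs, key=probs.get)
    return best, probs


if __name__ == "__main__":
    # Hypothetical logits for a single image; "cat" and "dog" are assumed class names.
    label, probs = assign_label([2.0, 0.5], ["cat", "dog"])
    print(label, {k: round(v, 3) for k, v in probs.items()})
```

Only one label is returned per image, which matches the single-object setting described above.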

Inspired by nidolow, we will take a pre-trained image classification model and put it in Docker. To run a TensorFlow program developed on the host machine inside a container, mount the host directory and change the container's working directory (-v hostDir:containerDir -w workDir). In our example, the flags are -v $PWD:/classification -w /classification. $PWD is the current directory, so the code should be located in this directory.
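The same mount and working-directory flags can be assembled programmatically, for example when launching the container from a Python script. This is a sketch with a hypothetical helper, `docker_run_args`, that only builds the argument list; passing the list to `subprocess.run()` would actually start the container. `os.getcwd()` stands in for `$PWD`, and the defaults mirror this article's example.

```python
import os
import shlex
from typing import List, Optional


def docker_run_args(image: str, host_dir: Optional[str] = None,
                    container_dir: str = "/classification",
                    command: str = "bash") -> List[str]:
    """Build `docker run` arguments that bind-mount a host directory as the workdir."""
    # Equivalent of $PWD: fall back to the current directory, as an absolute path.
    host_dir = os.path.abspath(host_dir or os.getcwd())
    return [
        "docker", "run",
        "--gpus", "all",                      # expose all host GPUs (needs NVIDIA's container toolkit)
        "-it", "--rm",                        # interactive shell; delete the container on exit
        "-v", f"{host_dir}:{container_dir}",  # -v hostDir:containerDir
        "-w", container_dir,                  # -w workDir: start inside the mounted folder
        image,
        command,
    ]


if __name__ == "__main__":
    args = docker_run_args("tf.2.1.0-gpu-cat_dog:v0.0.1")
    # Show the equivalent shell command; subprocess.run(args) would start the container.
    print(" ".join(shlex.quote(a) for a in args))
```

Building an argument list rather than one command string avoids shell quoting problems when the host path contains spaces.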

docker run --gpus all -it --rm -v $PWD:/classification -w /classification tf.2.1.0-gpu-cat_dog:v0.0.1 bash

After running the command above, we are inside the container and can start preparing the Python environment.

pip install -r requirements.txt

Now, the environment is ready. We can run the pre-trained model to test it.

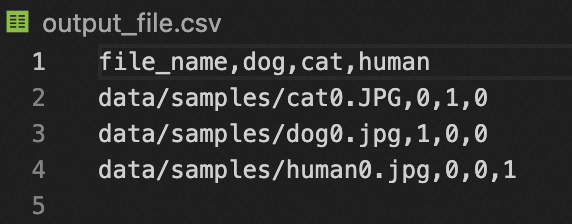

python src/predict.py -m models/model-2a05ce2.mdl -d data/samples/ -o output_file.csv

If you get a similar output_file.csv as a result, congratulations: you have successfully prepared the model.
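To check the result without opening the file by hand, a small sketch like the following prints the header, the number of rows, and a few sample rows. The column layout of output_file.csv is not described in this article, so the code assumes nothing beyond a header row; `summarize_predictions` is a hypothetical helper.

```python
import csv
from typing import Dict, List


def summarize_predictions(path: str, preview: int = 3) -> Dict[str, object]:
    """Return the header, row count, and first rows of a predictions CSV.

    No particular column layout is assumed; the header is read from the file.
    """
    with open(path, newline="") as f:
        reader = csv.reader(f)
        header = next(reader, [])  # empty list if the file is empty
        rows: List[List[str]] = [row for row in reader if row]  # skip blank lines
    return {"header": header, "n_rows": len(rows), "preview": rows[:preview]}


if __name__ == "__main__":
    summary = summarize_predictions("output_file.csv")
    print("columns:", summary["header"])
    print("rows:", summary["n_rows"])
    for row in summary["preview"]:
        print(row)
```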

The Docker image can now be prepared for upload to Docker Hub or Alibaba Cloud Container Registry. Container Registry lets you manage images throughout their lifecycle and provides an optimized solution for using Docker in the cloud.

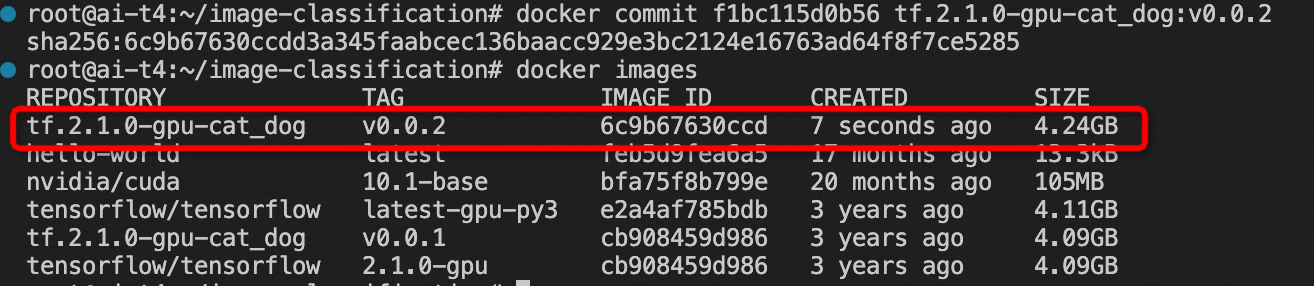

First, let's create an image from the container. The command syntax is: docker commit <Container ID or container name> [<Repository name>[:<Tag>]]. Here, we commit the running container to a new image with a simple name for testing and restoration purposes. A unique prefix of the container ID, as shown by docker ps, is enough to identify the container.

docker commit f1bc11 tf.2.1.0-gpu-cat_dog:v0.0.2
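The reference passed to docker commit (and later to docker tag and docker push) has the general form [registry/]repository[:tag], where the tag defaults to latest and a missing registry means Docker Hub. The simplified parser below uses hypothetical helper names and does not handle digests such as @sha256:... or full validation. It shows how the parts are told apart: a colon after the last slash starts the tag, and a first path component that contains a dot or a colon, or equals localhost, is treated as a registry host.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: Optional[str]  # None means the default registry, Docker Hub
    repository: str          # e.g. "tf.2.1.0-gpu-cat_dog" or "dtstack123/test"
    tag: str                 # "latest" when the reference has no tag


def parse_image_ref(ref: str) -> ImageRef:
    """Split '[registry/]repository[:tag]' into parts (digests are not handled)."""
    if not ref:
        raise ValueError("empty image reference")
    name, tag = ref, "latest"
    # A ':' after the last '/' starts the tag; an earlier ':' is a registry port.
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]
    registry: Optional[str] = None
    first, sep, rest = name.partition("/")
    # Treat the first component as a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, name = first, rest
    return ImageRef(registry, name, tag)


if __name__ == "__main__":
    for ref in ["tf.2.1.0-gpu-cat_dog:v0.0.2",
                "tensorflow/tensorflow:latest-py3",
                "registry.cn-shanghai.aliyuncs.com/dtstack123/test:v1"]:
        print(parse_image_ref(ref))
```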

Docker's documentation explains how to push an image to Docker Hub. In short, run the following command, replacing the placeholders with your own information:

docker push <hub-user>/<repo-name>:<tag>

Another way is to write a Dockerfile, which becomes part of the MLOps workflow and reduces repetitive work in the future. A later article will explain the whole ML production lifecycle with MLOps.

A Dockerfile is a plain text file containing the instructions used to build a Docker image. Let's start by preparing it. First, we create a file named Dockerfile in the working directory.
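Because a Dockerfile is plain text, its structure is easy to inspect. The following simplified sketch (hypothetical helper names; it ignores parser directives such as a custom escape character) splits a Dockerfile into instructions, joining lines that end with a backslash, and counts the instructions that add filesystem layers. According to Docker's best-practices documentation, only RUN, COPY, and ADD create layers, which is why related shell commands are usually chained into a single RUN.

```python
from typing import List, Tuple

# Instructions that add a filesystem layer; the others only change image metadata.
LAYER_INSTRUCTIONS = {"RUN", "COPY", "ADD"}


def _split_instruction(line: str) -> Tuple[str, str]:
    """Split 'KEYWORD args...' into an upper-cased keyword and its arguments."""
    parts = line.split(None, 1)
    keyword = parts[0].upper()
    args = parts[1].strip() if len(parts) > 1 else ""
    return keyword, args


def parse_dockerfile(text: str) -> List[Tuple[str, str]]:
    """Return (INSTRUCTION, arguments) pairs, joining backslash continuations."""
    instructions: List[Tuple[str, str]] = []
    pending = ""  # accumulates one logical line spread over several physical lines
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comment lines, including inside continuations.
        if not line or line.startswith("#"):
            continue
        if line.endswith("\\"):
            pending += line[:-1].strip() + " "
            continue
        instructions.append(_split_instruction(pending + line))
        pending = ""
    if pending.strip():  # the file ended in the middle of a continuation
        instructions.append(_split_instruction(pending))
    return instructions


def count_layers(text: str) -> int:
    """Count the instructions that create filesystem layers."""
    return sum(1 for keyword, _ in parse_dockerfile(text) if keyword in LAYER_INSTRUCTIONS)


if __name__ == "__main__":
    with open("Dockerfile") as f:
        content = f.read()
    for keyword, args in parse_dockerfile(content):
        print(f"{keyword:8} {args}")
    print("layer-creating instructions:", count_layers(content))
```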

vim Dockerfile

Press the i key to enter insert mode. Then, add the following content to the file:

#Declare a base image.
FROM tensorflow/tensorflow:latest-py3

#Update and upgrade apt packages, and install git, which the git clone step below needs.
RUN apt-get update && apt-get upgrade -y && apt-get install -y git

#Install the Python dependencies. Each RUN instruction adds an image layer and the number of layers is limited, so chain related commands with && (or move long command sequences into a script) instead of writing many separate RUN lines.
RUN pip install --upgrade pip && \
    pip install pandas==1.0.5 scikit_learn==0.23.1 pillow==8.4.0

#Clone the project code into the image.
RUN cd / && \
    git clone https://github.com/k-farruh/image-classification.git

#A cd inside RUN does not persist to later instructions, so set the working directory explicitly.
WORKDIR /image-classification

Then, press the Esc key, enter :wq, and press the Enter key to save and exit. Then, build the image:

docker build -t tf.2.1.0-gpu-cat_dog:v0.0.3 . #Build an image from the Dockerfile. The trailing . sets the build context to the current directory, which contains the Dockerfile, and must be provided.

docker images #Check whether the image was created.

Run the container and check its state:

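docker run -dit tf.2.1.0-gpu-cat_dog:v0.0.3 #Run the container in the background. -it keeps the default shell attached to a terminal so the container does not exit immediately.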

docker ps #Query the containers that are in the running state.

docker ps -a #Query all containers, including those in the stopped state.

docker logs <Container ID or container name> #If the container does not appear in the query results, check its startup log to troubleshoot the issue.

By default, images are pushed to Docker Hub. There, you log in, tag the image in the <Docker username>/<Image name>:<Tag> format, and then push it to the remote repository. To push to Alibaba Cloud Container Registry instead, log in to the registry and include its address and namespace in the tag:

docker login --username=dtstack_plus registry.cn-shanghai.aliyuncs.com #Enter the password of the image repository after you run this command.

docker tag [ImageId] registry.cn-shanghai.aliyuncs.com/dtstack123/test:[Tag]

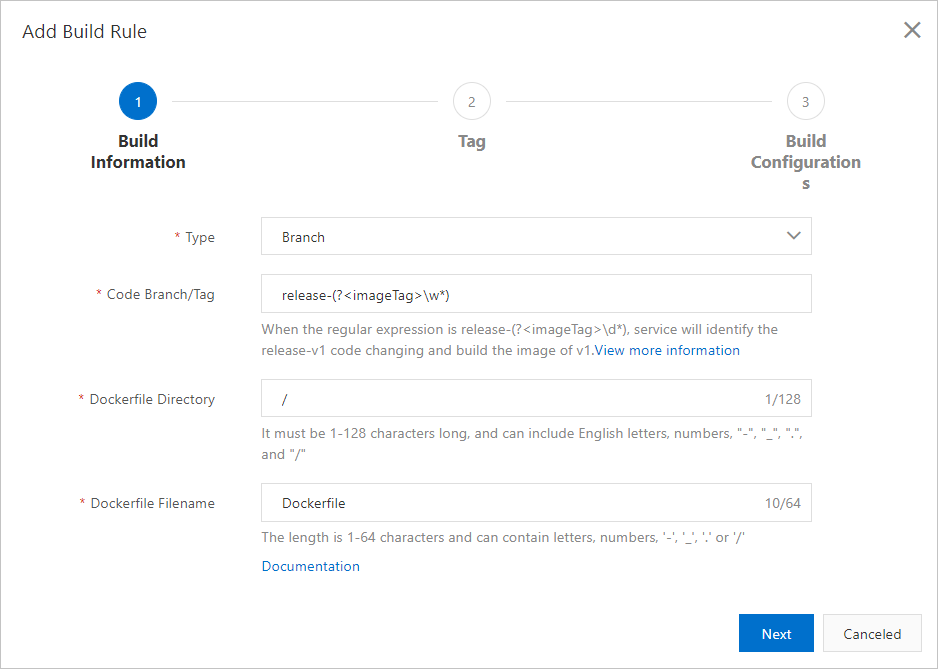

docker push registry.cn-shanghai.aliyuncs.com/dtstack123/test:[Tag]

As an alternative to Docker Hub, we recommend Alibaba Cloud Container Registry, which can also build the Docker image for you. This helpful article explains how to use Container Registry to build images. Since every step is already prepared, and a separate article will cover Container Registry, here we simply click the button to build the Docker image or wait until the scheduler builds it.

Our long-term goal is fully automated MLOps. MLOps is an emerging field that is rapidly gaining momentum among data scientists, ML engineers, and AI enthusiasts. We start the journey by preparing a Docker image for machine learning model inference. Step by step, we will get closer to that goal and make MLOps easy, minimizing the cost and time spent on the model lifecycle.
