By Zhou Kaibo (Baoniu) and compiled by Maohe

This article provides an overview of Apache Flink architecture and introduces the principles and practices of how Flink runs on YARN and Kubernetes, respectively.

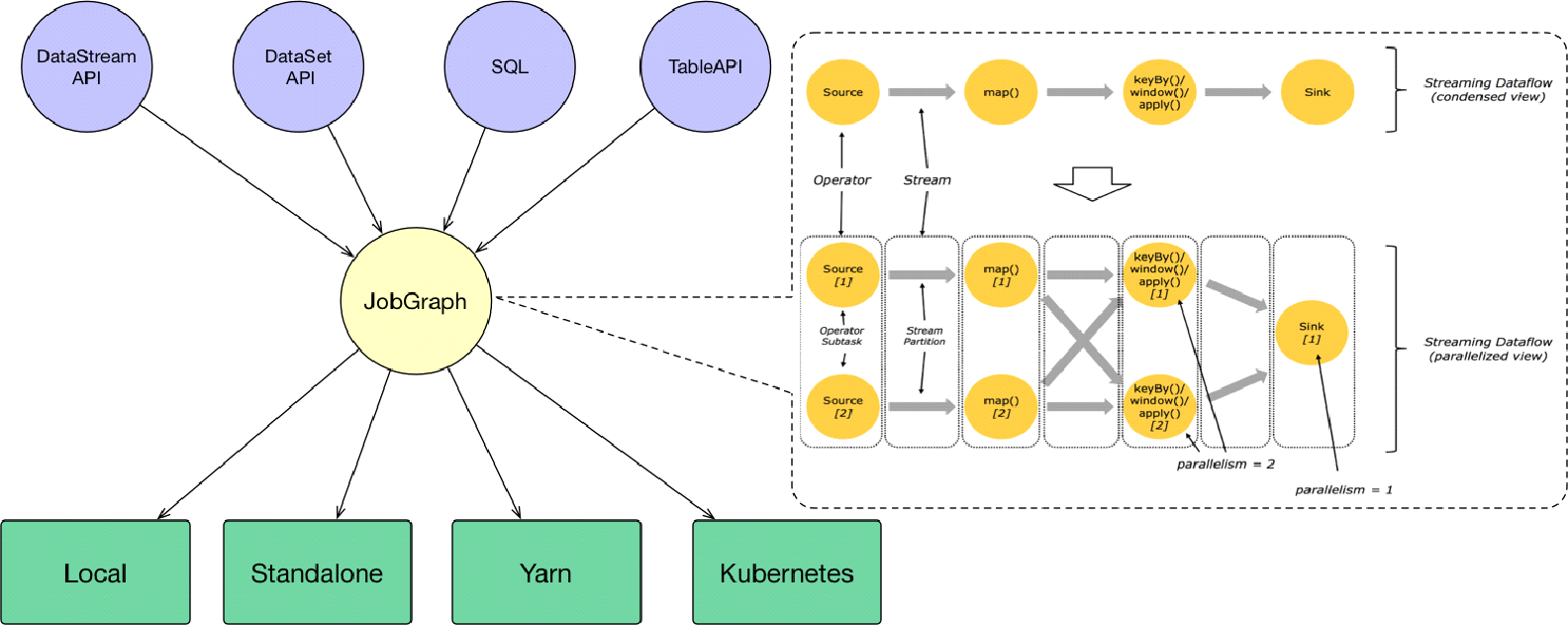

You can write a Flink job with the DataStream API, DataSet API, SQL statements, or Table API. The job is then compiled into a JobGraph, which is composed of operators such as source, map(), keyBy(), window(), apply(), and sink. After the JobGraph is submitted to a Flink cluster, it can be executed in Local, Standalone, YARN, or Kubernetes mode.
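To make the operator chain more concrete, here is a plain-Python analogy. It is not the Flink API. It only mimics the order source, map(), keyBy(), window(), apply(), and sink on a tiny in-memory input. The `(car_id, speed)` records, the count-based window of size 2, and the "maximum speed" window function are all assumptions made for illustration.

```python
from collections import defaultdict


def source():
    # Hypothetical input: (car_id, speed) readings.
    yield from [("car-1", 50), ("car-2", 70), ("car-1", 90), ("car-2", 60)]


def run_pipeline(window_size=2):
    windows = defaultdict(list)  # one buffer per key, as keyBy() would give
    results = []                 # stands in for the sink
    for car_id, speed in source():
        record = (car_id, float(speed))           # map(): convert speed to float
        windows[record[0]].append(record[1])      # keyBy() + window(): buffer per key
        if len(windows[car_id]) == window_size:   # the count window is full
            top_speed = max(windows.pop(car_id))  # apply(): the window function
            results.append((car_id, top_speed))   # sink: collect the result
    return results


if __name__ == "__main__":
    print(run_pipeline())
```

In a real job, each of these steps would be an operator in the JobGraph, and the runtime would decide where each one executes.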

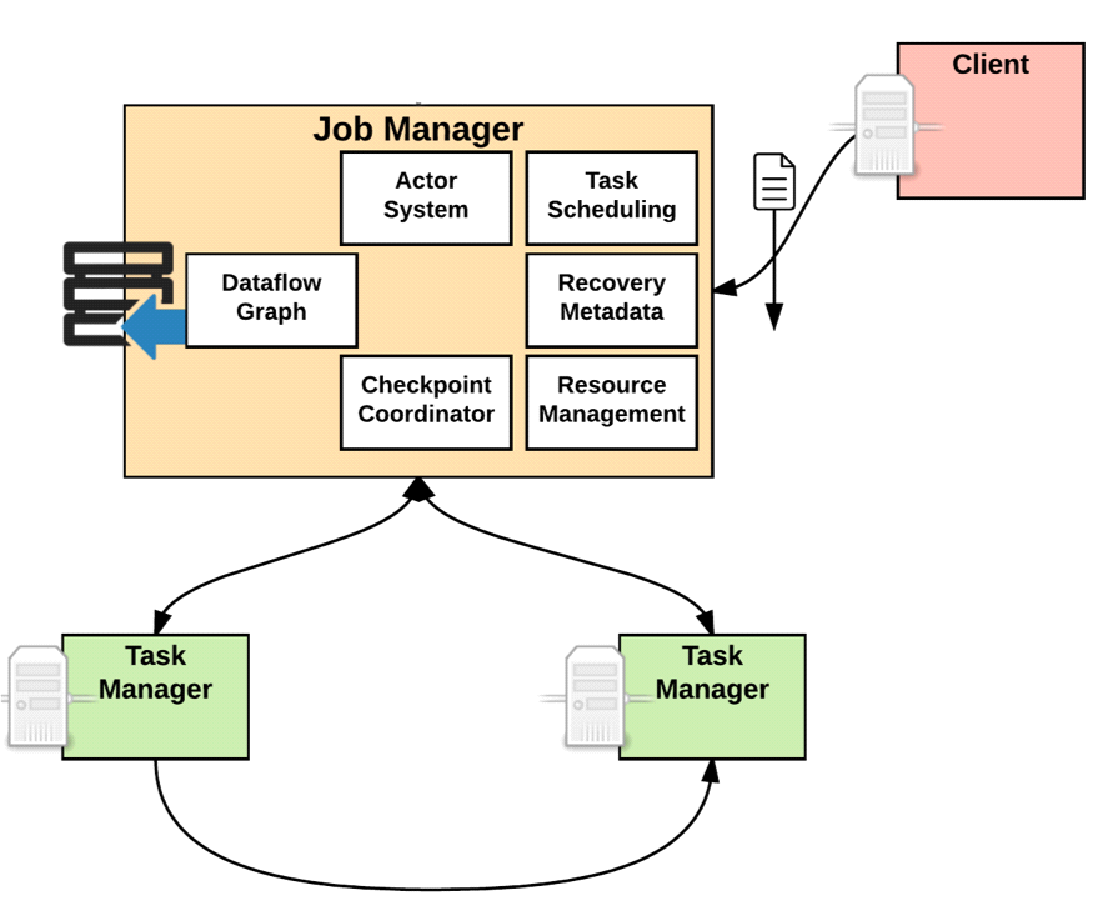

A JobManager provides the following functions:

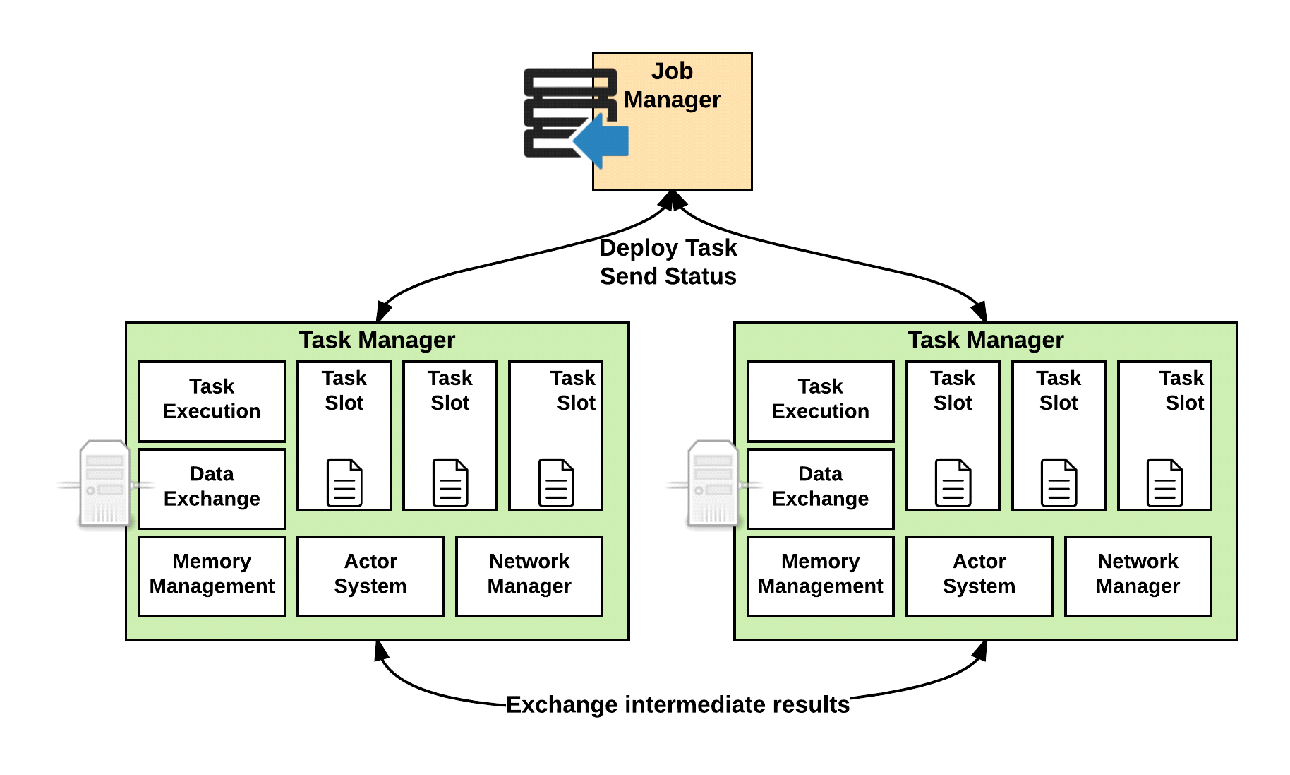

TaskManager is responsible for executing tasks. It is started after the JobManager applies for resources. It has the following components:

TaskManager is divided into multiple task slots. Each task runs within a task slot. A task slot is the smallest unit for resource scheduling.
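Because the task slot is the scheduling unit, the number of TaskManagers a job needs can be estimated from its parallelism. The sketch below assumes Flink's default slot sharing, under which a job needs as many slots as its highest operator parallelism. The function name and the sample numbers are hypothetical.

```python
import math


def task_managers_needed(parallelism: int, slots_per_task_manager: int) -> int:
    """Estimate how many TaskManagers are needed to provide enough slots.

    Assumes default slot sharing: required slots == job parallelism.
    """
    if parallelism < 1 or slots_per_task_manager < 1:
        raise ValueError("parallelism and slots per TaskManager must be >= 1")
    return math.ceil(parallelism / slots_per_task_manager)


if __name__ == "__main__":
    # A job with parallelism 5 on TaskManagers that offer 2 slots each.
    print(task_managers_needed(5, 2))
```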

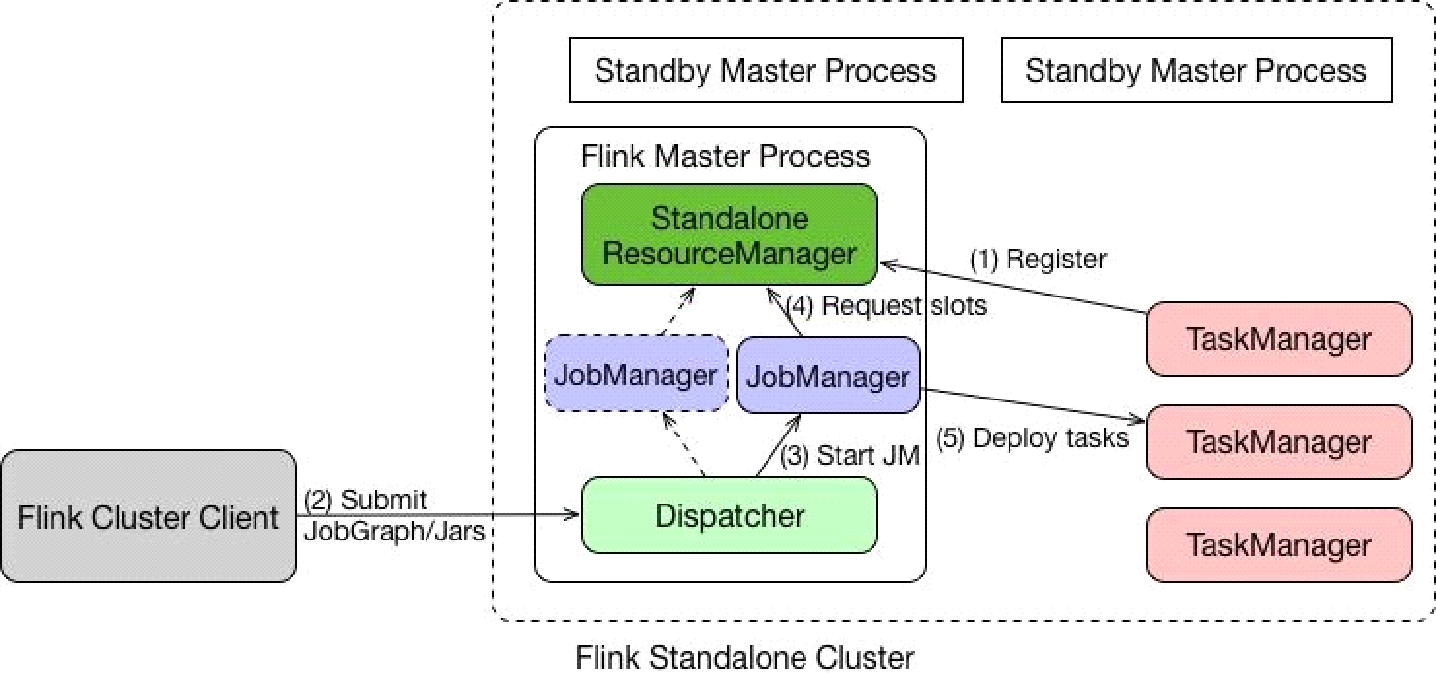

Let's have a look at Flink's Standalone mode to better understand the architectures of YARN and Kubernetes.

This section summarizes Flink's basic architecture and the components of Flink runtime.

YARN is widely used in the production environments of Chinese companies. This section introduces the YARN architecture to help you better understand how Flink runs on YARN.

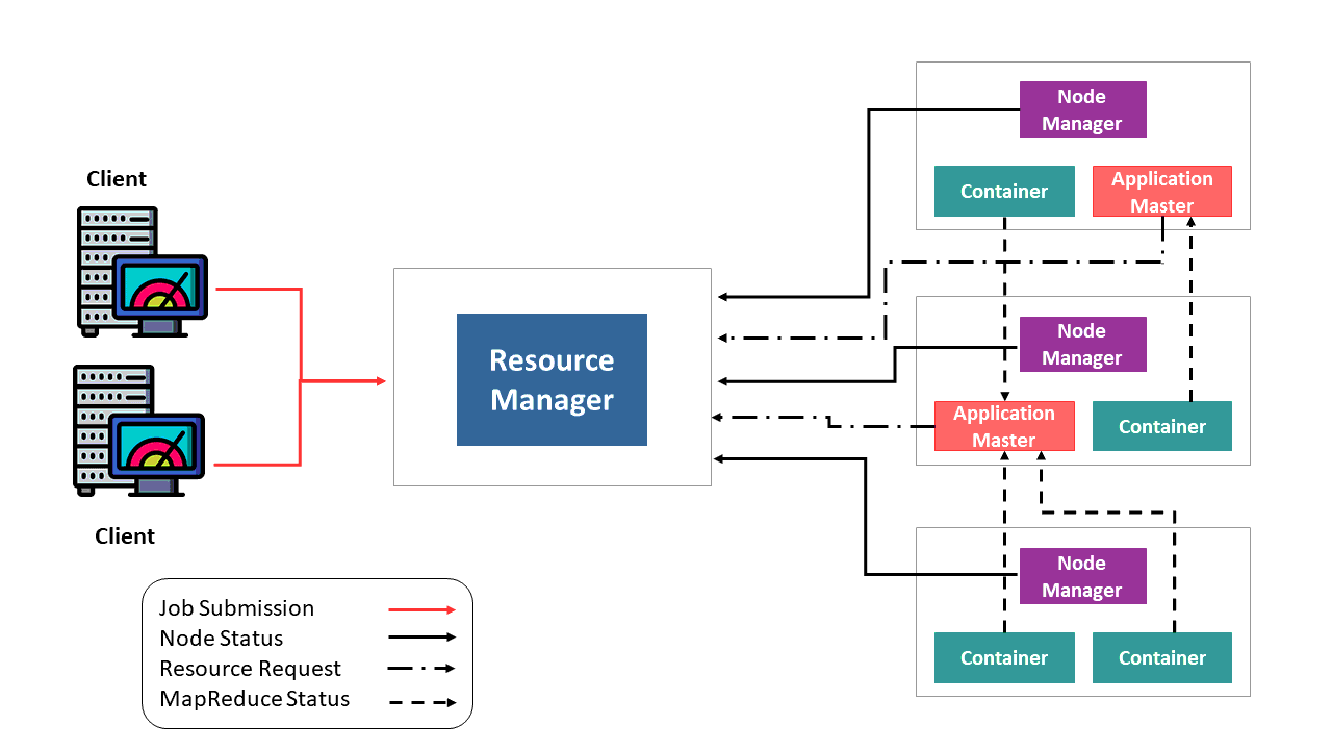

The preceding figure shows the YARN architecture. The ResourceManager assumes the core role and is responsible for resource management. The clients submit jobs to the ResourceManager.

After a client submits a job to the ResourceManager, the ResourceManager starts a container and then an ApplicationMaster, the two of which form a master node. After startup, the master node applies for resources from the ResourceManager, which then allocates resources to the ApplicationMaster. The ApplicationMaster schedules tasks for execution.

A YARN cluster consists of the following components:

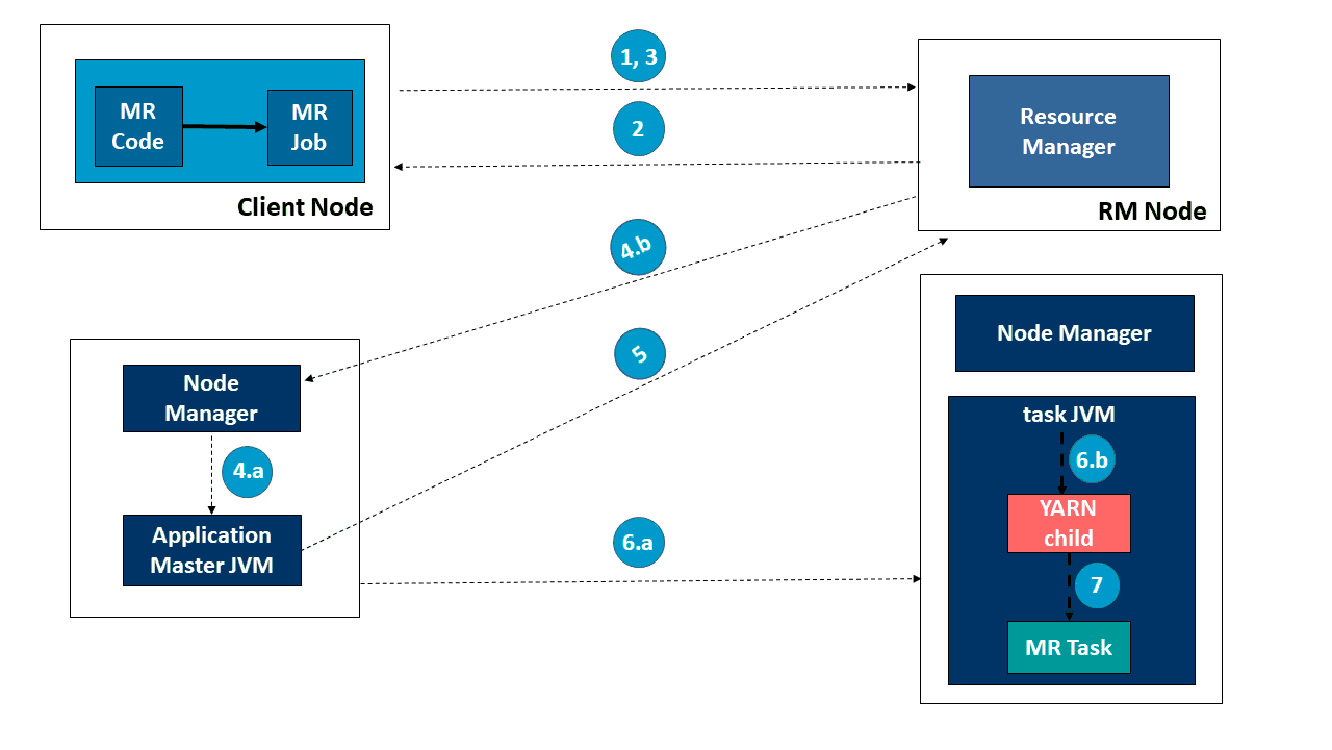

This section describes the interaction process in the YARN architecture using an example of running MapReduce tasks on YARN.

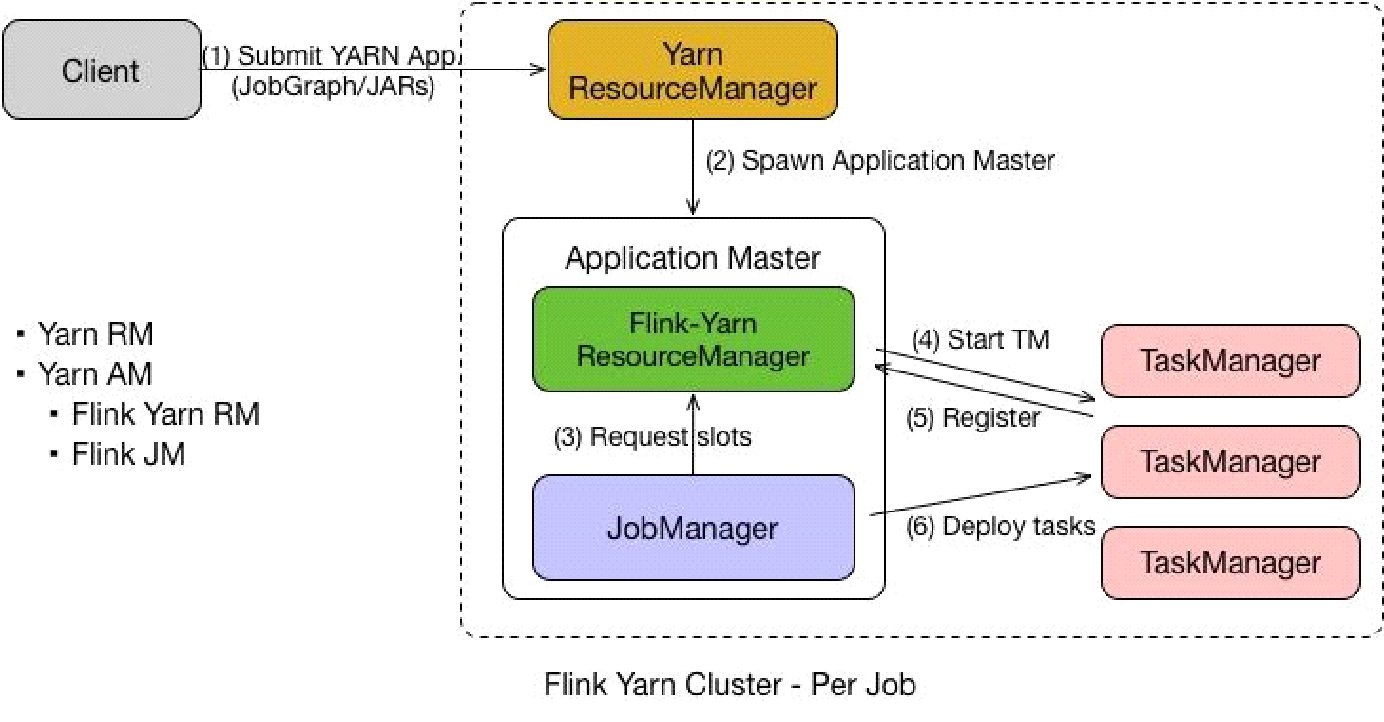

Flink on YARN supports the Per Job mode in which one job is submitted at a time and resources are released after the job is completed. The Per Job process is as follows:

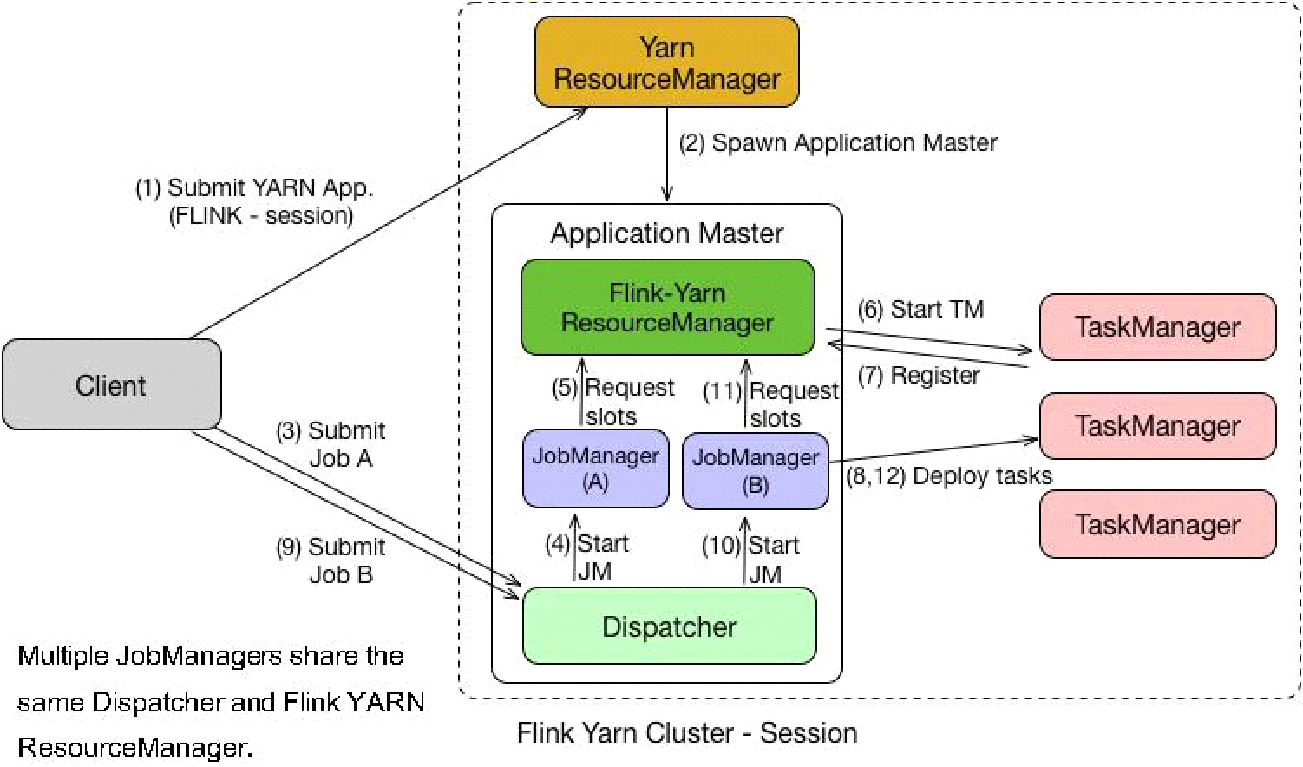

In Per Job mode, all resources, including the JobManager and TaskManager, are released after job completion. In Session mode, the Dispatcher and ResourceManager are reused by different jobs. In Session mode, after receiving a request, the Dispatcher starts JobManager (A), which starts the TaskManager. Then, the Dispatcher starts JobManager (B) and the corresponding TaskManager. Resources are not released after Job A and Job B are completed. The Session mode is also called the multithreading mode, in which resources are never released and multiple JobManagers share the same Dispatcher and Flink YARN ResourceManager.

The Session mode is used in different scenarios than the Per Job mode. The Per Job mode is suitable for long-running jobs that are insensitive to the startup time. The Session mode is suitable for jobs that take a short time to complete, especially batch jobs. Executing short jobs in Per Job mode results in frequent resource requests: resources must be released after each job is completed, and new resources must be requested to run the next job. The Session mode is therefore a better fit for scenarios where jobs are started frequently and are completed within a short time.
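The trade-off can be illustrated with a rough back-of-the-envelope model. The sketch below assumes that jobs run one after another, that Per Job mode pays a fixed cluster startup cost for every job, and that Session mode pays it once. The startup time and job runtimes are made-up numbers, not measurements.

```python
def total_time_per_job_mode(job_runtimes, startup_seconds):
    # Every job requests fresh resources, so every job pays the startup cost.
    return sum(startup_seconds + runtime for runtime in job_runtimes)


def total_time_session_mode(job_runtimes, startup_seconds):
    # The session cluster is started once and reused by all jobs.
    return startup_seconds + sum(job_runtimes)


if __name__ == "__main__":
    short_jobs = [5] * 10   # ten hypothetical 5-second batch jobs
    long_job = [3600]       # one hypothetical hour-long job
    startup = 30            # assumed startup cost in seconds

    print(total_time_per_job_mode(short_jobs, startup))
    print(total_time_session_mode(short_jobs, startup))
    print(total_time_per_job_mode(long_job, startup))
```

Under these assumptions, the startup cost dominates for many short jobs in Per Job mode, while it is negligible for a single long job.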

YARN has the following advantages:

Despite these advantages, YARN also has disadvantages, such as inflexible operations and expensive O&M and deployment.

Kubernetes is an open-source container cluster management system developed by Google. It supports application deployment, maintenance, and scaling, and makes it easy to manage containerized applications running on different machines. Compared with YARN, Kubernetes is essentially a next-generation resource management system, but its capabilities go far beyond resource management.

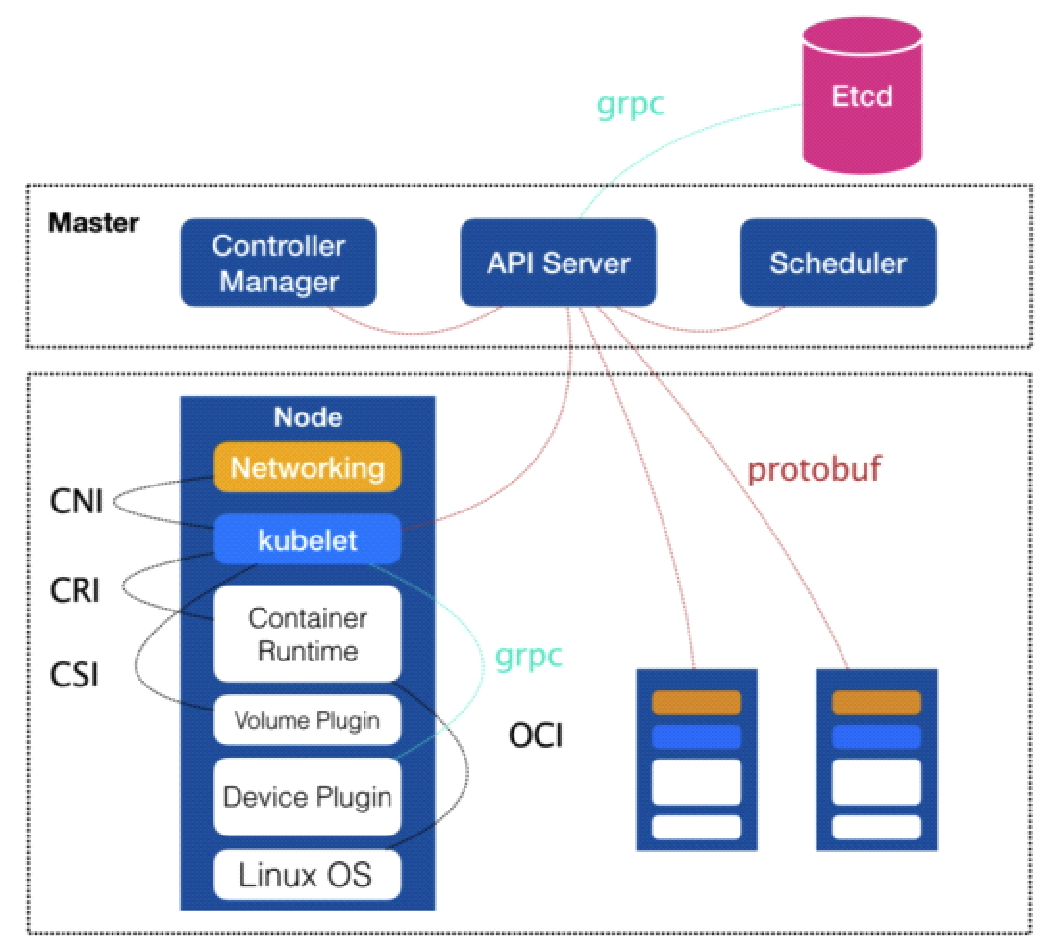

In Kubernetes, a master node is used to manage clusters. It contains an access portal for cluster resource data and etcd, a high-availability key-value store. The master node runs the API server, Controller Manager, and Scheduler.

A node is an operating unit of a cluster and also a host on which a pod runs. A node contains an agent process, which maintains all containers on the node and manages how these containers are created, started, and stopped. A node also provides kube-proxy, which is a server for service discovery, reverse proxy, and load balancing. A node provides the underlying Docker engine used to create and manage containers on the local machine.

A pod is the combination of several containers that run on a node. In Kubernetes, a pod is the smallest unit for creating, scheduling, and managing resources.

The preceding figure shows the architecture of Kubernetes and its entire running process.

Kubernetes involves the following core concepts:

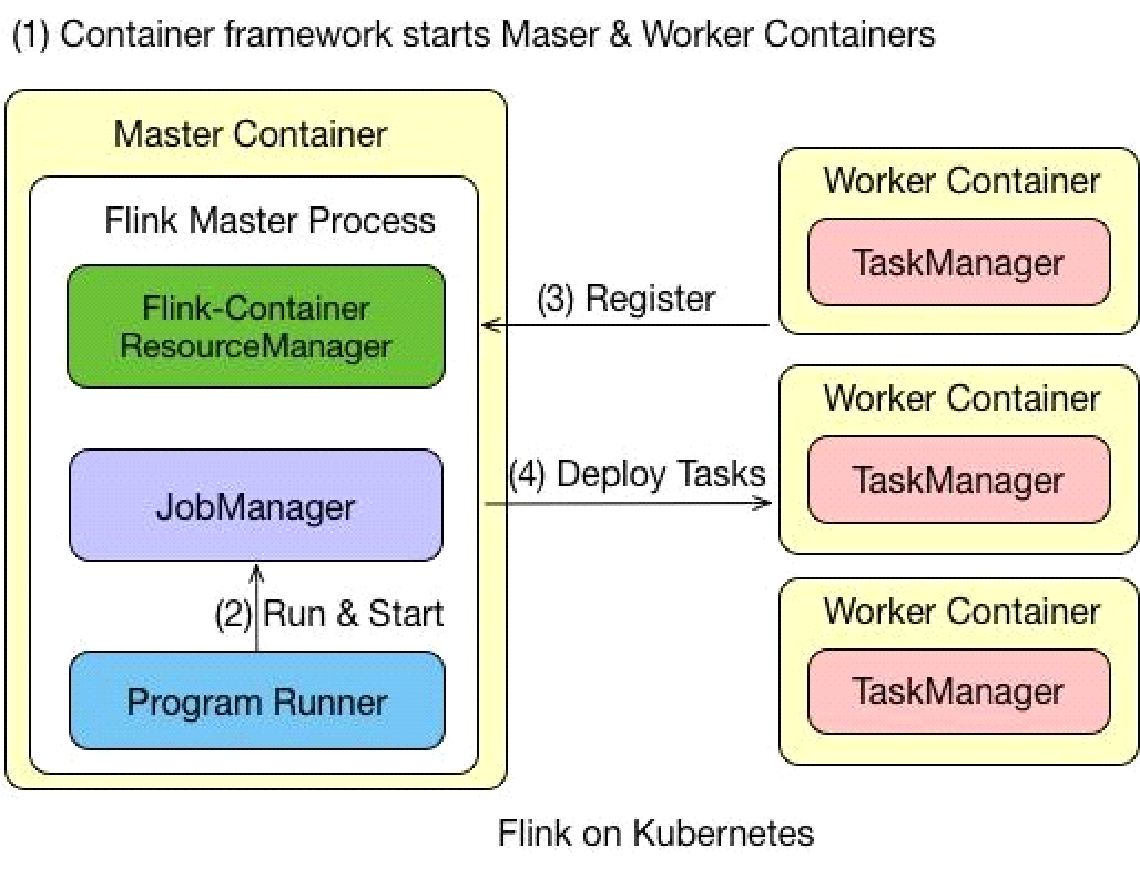

The preceding figure shows the architecture of Flink on Kubernetes. The process of running a Flink job on Kubernetes is as follows:

The execution process of a JobManager is divided into two steps:

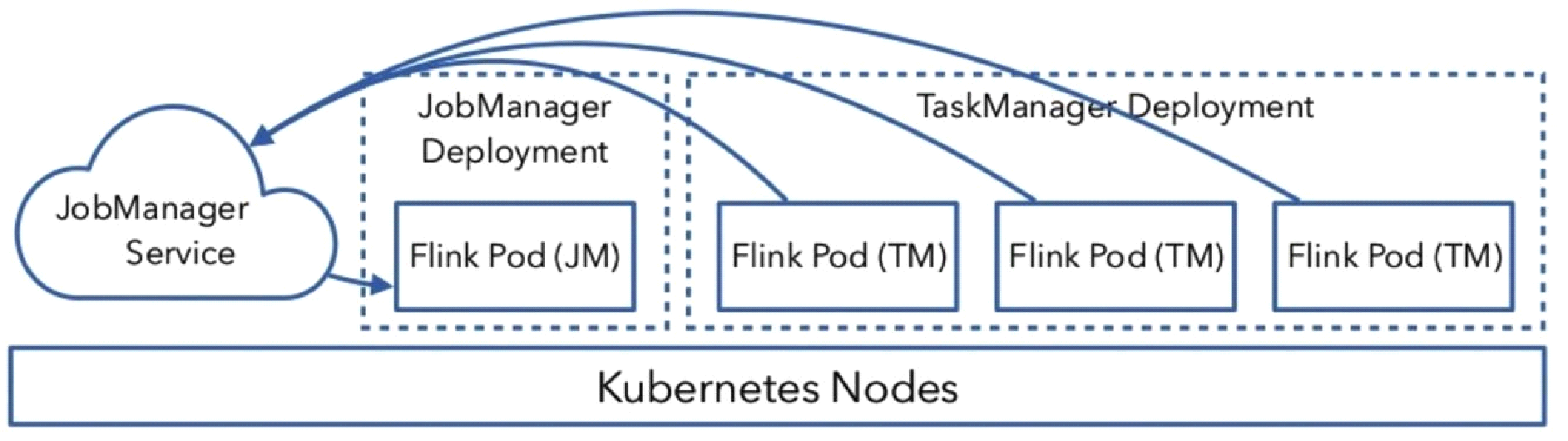

1) The JobManager is described by a Deployment to ensure that it runs in the container of a single replica. This JobManager is labeled as flink-jobmanager.

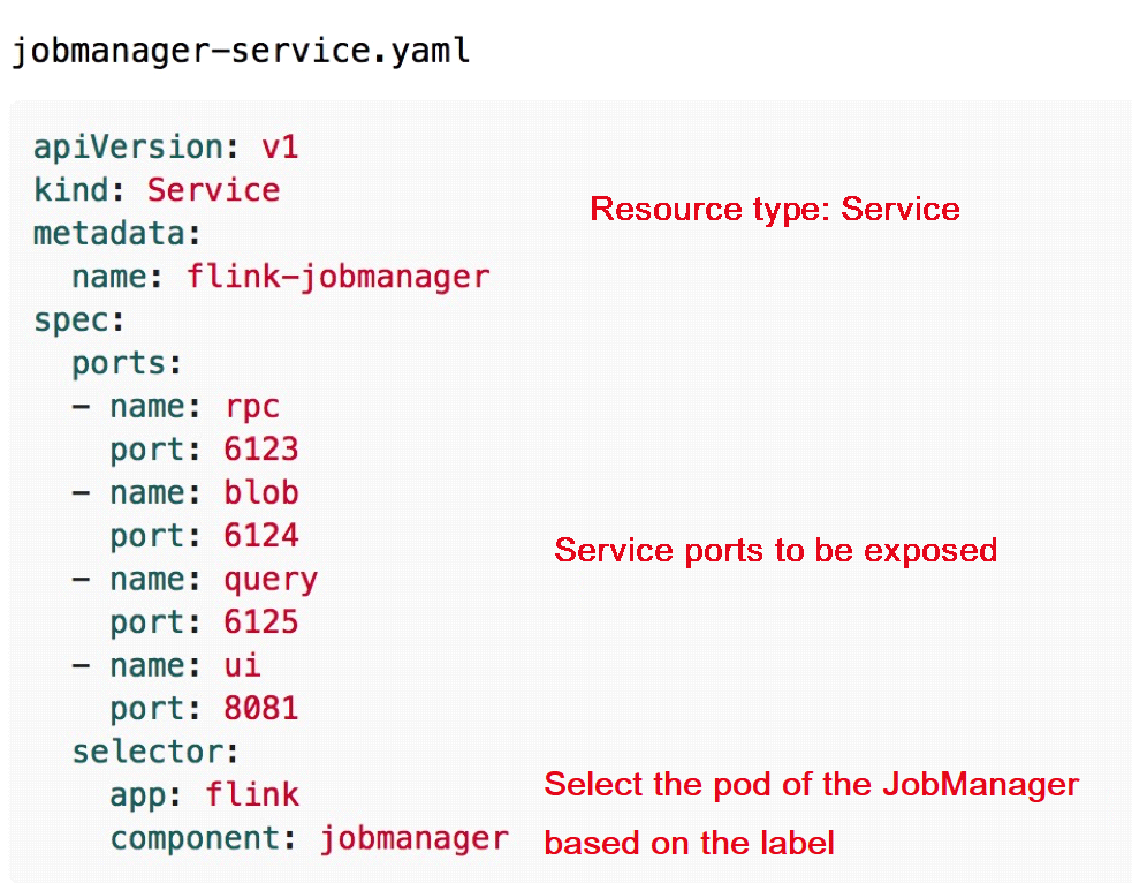

2) A JobManager Service is defined and exposed by using the service name and port number. Pods are selected based on the JobManager label.
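The label-based selection in step 2 can be sketched in a few lines. This is not Kubernetes code. It only models an equality-based selector, which matches a pod when every key-value pair in the selector appears in the pod's labels. The pod names and the `app`/`component` label keys are assumptions made for illustration.

```python
# Hypothetical pods with their labels.
PODS = [
    {"name": "jm-0", "labels": {"app": "flink", "component": "jobmanager"}},
    {"name": "tm-0", "labels": {"app": "flink", "component": "taskmanager"}},
    {"name": "tm-1", "labels": {"app": "flink", "component": "taskmanager"}},
]


def select_pods(pods, selector):
    """Return the names of pods whose labels contain every selector pair."""
    return [
        pod["name"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


if __name__ == "__main__":
    # A Service that selects only the JobManager pod.
    print(select_pods(PODS, {"app": "flink", "component": "jobmanager"}))
```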

A TaskManager is also described by a Deployment to ensure that it is executed by the containers of n replicas. A label, such as flink-taskmanager, is defined for this TaskManager.

The runtimes of the JobManager and TaskManager require configuration files, such as flink-conf.yaml, hdfs-site.xml, and core-site.xml. Define them as ConfigMaps in order to transfer and read configurations.

The entire interaction process is simple. You only need to submit defined resource description files, such as Deployment, ConfigMap, and Service description files, to the Kubernetes cluster. The Kubernetes cluster automatically completes the subsequent steps. The Kubernetes cluster starts pods and runs user programs based on the defined description files. The following components take part in the interaction process within the Kubernetes cluster:

This section describes how to run a job in Flink on Kubernetes.

Session Cluster

• Start

  • kubectl create -f jobmanager-service.yaml

  • kubectl create -f jobmanager-deployment.yaml

  • kubectl create -f taskmanager-deployment.yaml

• Submit job

  • kubectl port-forward service/flink-jobmanager 8081:8081

  • bin/flink run -d -m localhost:8081 ./examples/streaming/TopSpeedWindowing.jar

• Stop

  • kubectl delete -f jobmanager-deployment.yaml

  • kubectl delete -f taskmanager-deployment.yaml

  • kubectl delete -f jobmanager-service.yaml

To start the session cluster, run the three commands under Start. They create Flink's JobManager Service, JobManager Deployment, and TaskManager Deployment. Then, forward port 8081 of the JobManager Service to your local machine and submit a job through that port. To delete the cluster, run the kubectl delete commands under Stop.

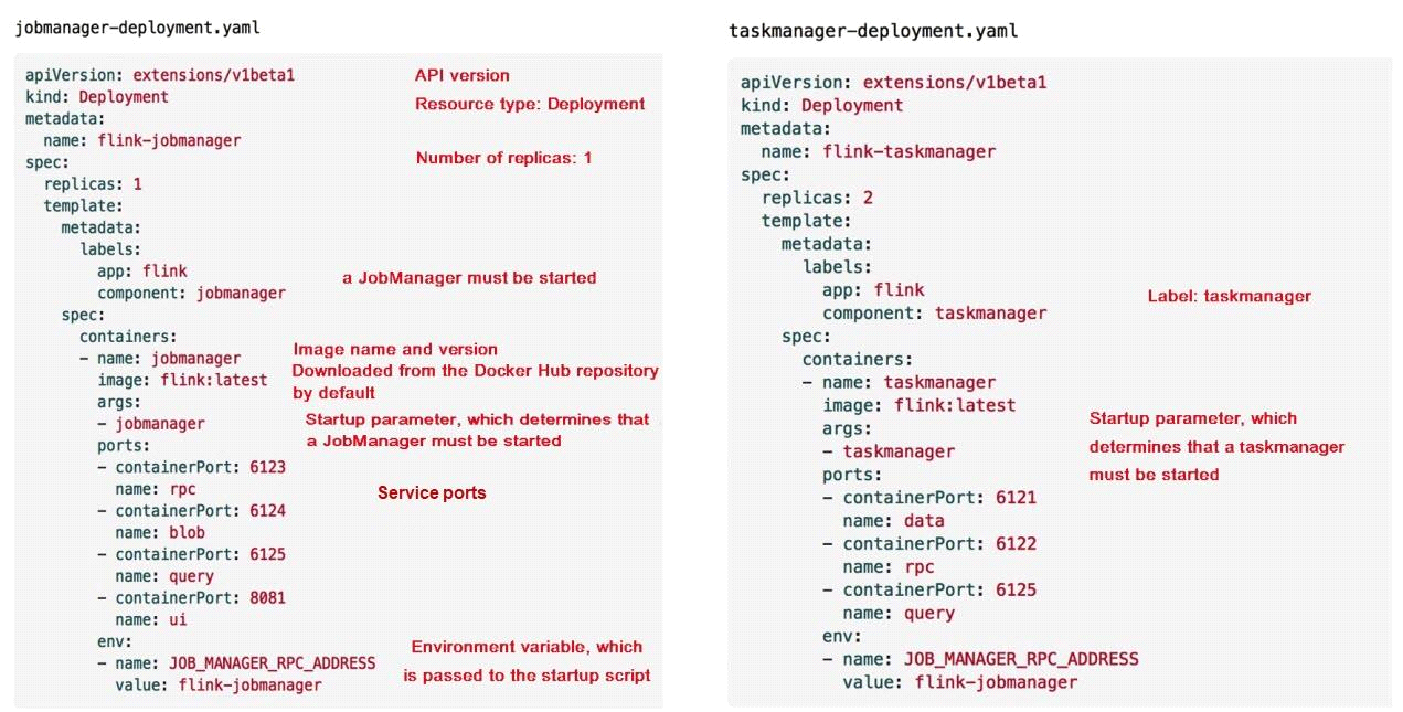

The preceding figure shows an example provided by Flink. The left part is the jobmanager-deployment.yaml configuration, and the right part is the taskmanager-deployment.yaml configuration.

In the jobmanager-deployment.yaml configuration, the first line of the code is apiVersion, which is set to extensions/v1beta1. The resource type is Deployment, and the metadata name is flink-jobmanager. Under spec, the number of replicas is 1, and labels are used for pod selection. The container is named jobmanager. Its image is downloaded from the public Docker repository, but an image from a private repository can also be used. The args startup parameter determines whether to start the JobManager or TaskManager. The ports parameter specifies the service ports to use. Port 8081 is a commonly used service port. The env parameter specifies an environment variable, which is passed to a specific startup script.

The taskmanager-deployment.yaml configuration is similar to the jobmanager-deployment.yaml configuration. However, in the former, the number of replicas is 2.
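Since the two Deployment files differ mainly in the component name and the number of replicas, they can be generated from one template. The sketch below builds such a manifest as a Python dictionary and prints it as JSON, which `kubectl create -f` also accepts. It is not the file shown in the figure: it assumes the `apps/v1` Deployment API (which requires an explicit `spec.selector`), the `flink:latest` image, `app`/`component` label keys, and a `JOB_MANAGER_RPC_ADDRESS` environment variable that points at the JobManager Service. Adjust these to match your own setup.

```python
import json


def build_deployment(component: str, replicas: int, ports=()) -> dict:
    """Build a Deployment manifest for 'jobmanager' or 'taskmanager'."""
    labels = {"app": "flink", "component": component}  # assumed label scheme
    container = {
        "name": component,
        "image": "flink:latest",  # assumed public image
        "args": [component],      # selects which process the image starts
        "ports": [{"containerPort": port} for port in ports],
        "env": [
            # Assumed variable read by the image's startup script.
            {"name": "JOB_MANAGER_RPC_ADDRESS", "value": "flink-jobmanager"}
        ],
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"flink-{component}"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_deployment("jobmanager", 1, ports=[8081]), indent=2))
    print(json.dumps(build_deployment("taskmanager", 2), indent=2))
```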

In the jobmanager-service.yaml configuration, the resource type is Service, which contains fewer configurations. Under spec, the service ports to expose are configured. The selector parameter specifies the pod of the JobManager based on a label.
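A Service manifest of this shape can be sketched the same way. The example below builds it as a Python dictionary and prints JSON that `kubectl create -f` can consume. The Service name flink-jobmanager and port 8081 come from the commands in this article. The port name `ui` and the `app`/`component` selector keys are assumptions and must match whatever labels your JobManager pods actually carry.

```python
import json


def build_jobmanager_service(ui_port: int = 8081) -> dict:
    """Build a Service manifest that exposes the JobManager pod."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "flink-jobmanager"},
        "spec": {
            # Ports exposed by the Service; only the web/REST port is shown here.
            "ports": [{"name": "ui", "port": ui_port}],
            # Assumed labels; the Service routes to pods that carry all of them.
            "selector": {"app": "flink", "component": "jobmanager"},
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_jobmanager_service(), indent=2))
```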

In addition to the Session mode, the Per Job mode is also supported. In Per Job mode, the user code is packaged into the image. The image must be rebuilt each time a change in the business logic modifies the JAR package. This process is complex, so the Per Job mode is rarely used in production environments.

The following uses the public Docker repository as an example to describe the execution process of the job cluster.

sh build.sh --from-release --flink-version 1.7.0 --hadoop-version 2.8 --scala-version 2.11 --job-jar ~/flink/flink-1.7.1/examples/streaming/TopSpeedWindowing.jar --image-name topspeed

docker tag topspeed zkb555/topspeedwindowing

docker push zkb555/topspeedwindowing

kubectl create -f job-cluster-service.yaml

FLINK_IMAGE_NAME=zkb555/topspeedwindowing:latest FLINK_JOB=org.apache.flink.streaming.examples.windowing.TopSpeedWindowing FLINK_JOB_PARALLELISM=3 envsubst < job-cluster-job.yaml.template | kubectl create -f -

FLINK_IMAGE_NAME=zkb555/topspeedwindowing:latest FLINK_JOB_PARALLELISM=4 envsubst < task-manager-deployment.yaml.template | kubectl create -f -

Q) Can I submit jobs to Flink on Kubernetes using operators?

Currently, Flink does not provide an operator implementation. Lyft provides an open-source operator implementation. For more information, check out this page.

Q) Can I use a high-availability (HA) solution other than ZooKeeper in a Kubernetes cluster?

etcd provides a high-availability key-value store similar to ZooKeeper. Currently, the Flink community is working on an etcd-based HA solution and a Kubernetes API-based solution.

Q) In Flink on Kubernetes, the number of TaskManagers must be specified upon task startup. Does Flink on Kubernetes support requesting resources dynamically as YARN does?

In Flink on Kubernetes, if too few TaskManagers are specified, tasks cannot be started. If too many are specified, resources are wasted. The Flink community is working on a way to request resources dynamically upon task startup, just as YARN does. The Active mode implements a Kubernetes-native integration with Flink, in which the ResourceManager can directly apply for resources from a Kubernetes cluster. For more information, see this link.
