By Ma Qingxiang and compiled by Maohe

This article is based on the live courses on Apache Flink conducted by Ma Qingxiang, an Apache Flink contributor and advanced data developer at 360 Total Security. It consists of four parts: (1) customizing a serialization framework for Flink; (2) best practices for Flink serialization; (3) Flink communication serialization; and (4) FAQs.

The big data ecosystem is quite popular nowadays. Most technical components run on Java virtual machines (JVMs), and Flink is no exception. JVM-based data analysis engines must hold large amounts of data in memory, which exposes JVM-related problems such as the low storage density of Java objects. The most common solution is explicit memory management: allocate and reclaim memory from a custom memory pool, and store serialized objects in memory blocks.

The Java ecosystem provides many serialization frameworks, such as Java serialization, Kryo, and Apache Avro. However, Flink still uses its own custom serialization framework, which offers the following two benefits:

1) Because full type information is available, type checking is completed early.

2) An appropriate serialization method can be chosen for each type to define the data layout, which saves storage space and enables direct operations on binary data.

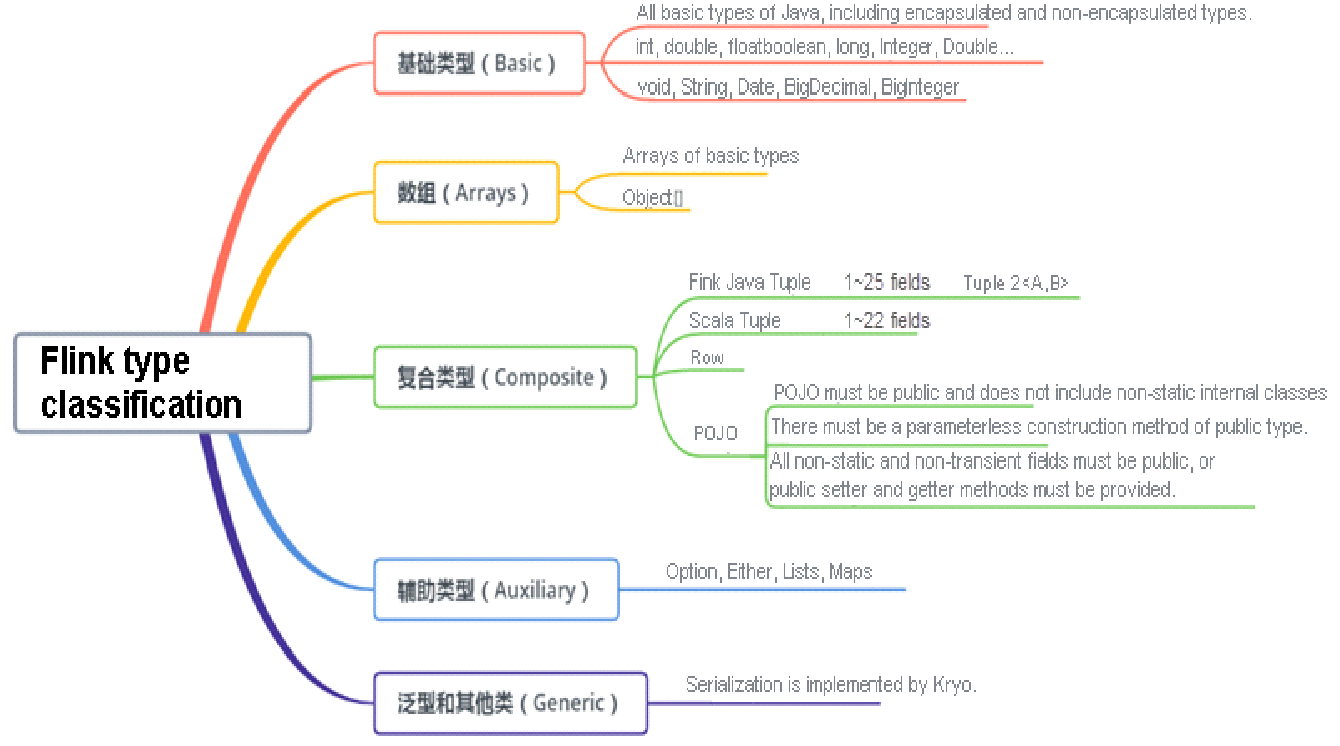

Flink has its own internal data type system. The preceding figure shows the data types currently supported by Flink, including the basic type, array type, composite type, auxiliary type, and generic type. Flink programs process data represented as arbitrary Java or Scala objects. Flink automatically recognizes data types, whereas Hadoop recognizes data types through the org.apache.hadoop.io.Writable interface.

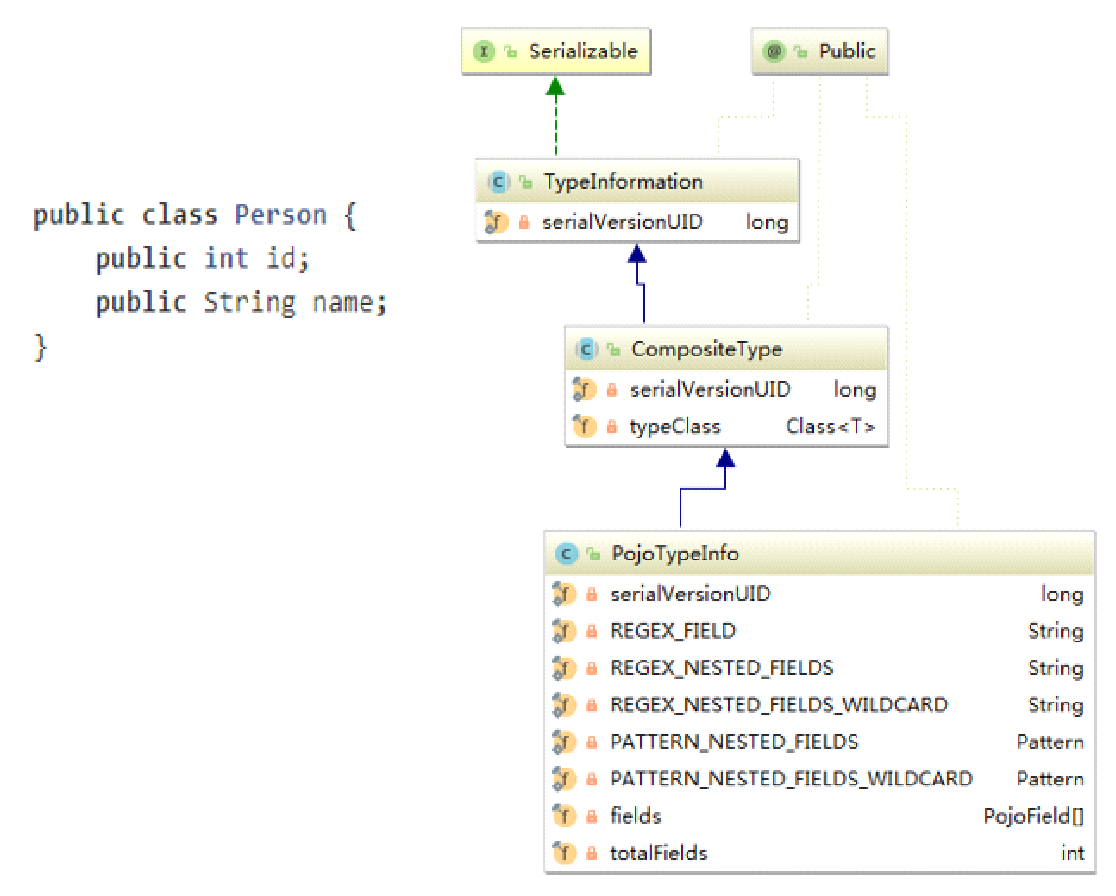

How are data types represented in Flink? The Person class shown in the figure is a composite-type POJO. Flink represents it as PojoTypeInfo, which inherits from TypeInformation. Flink uses TypeInformation as a type descriptor to represent every data type.

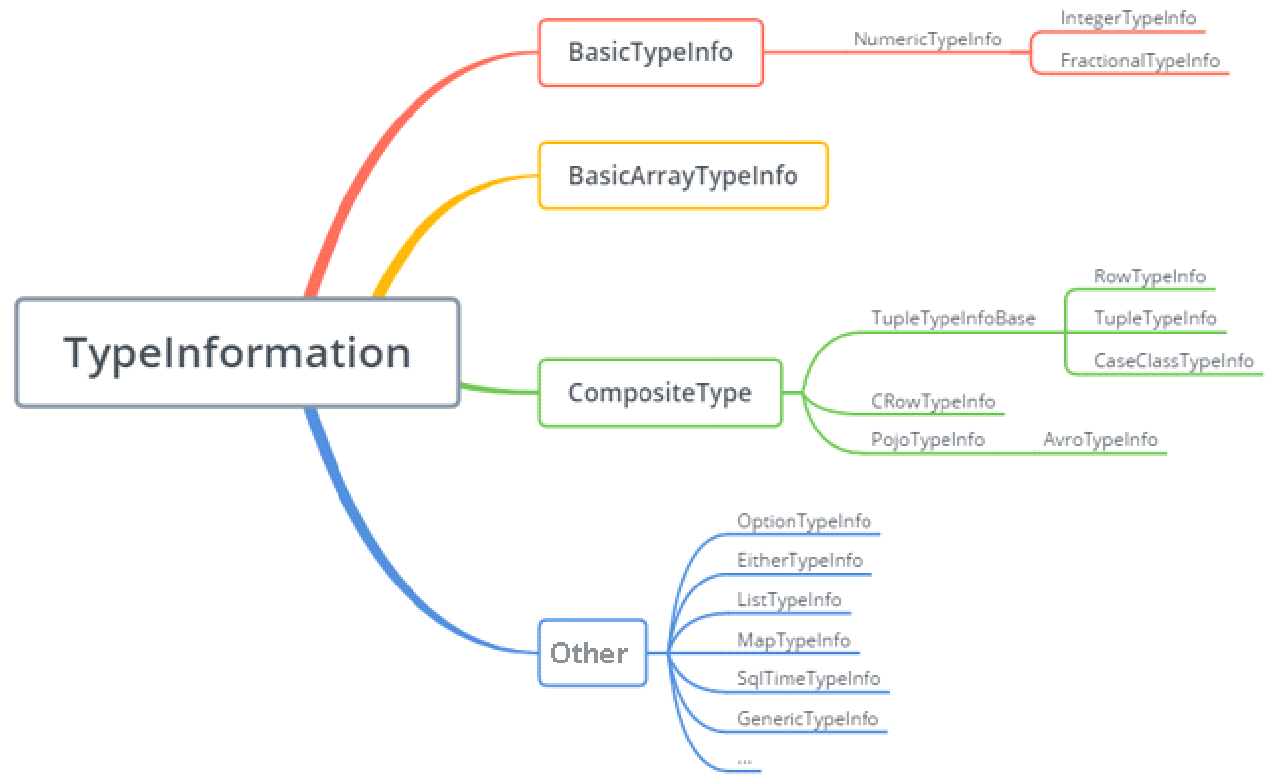

The preceding figure shows a mind map of TypeInformation. Each Flink data type corresponds to a specific TypeInformation subclass. For example, IntegerTypeInfo and FractionalTypeInfo under BasicTypeInfo each describe their own group of basic types, and BasicArrayTypeInfo, CompositeType, and the other categories each have a corresponding TypeInformation as well.

TypeInformation is the core class of the Flink type system. Flink uses TypeInformation to define the input and output types of each user-defined function (UDF). TypeInformation is also used to generate a TypeSerializer and to perform semantic checks. For example, it checks whether the fields used as keys for joining or grouping actually exist in the specified type.

The following section explains how to use TypeInformation.

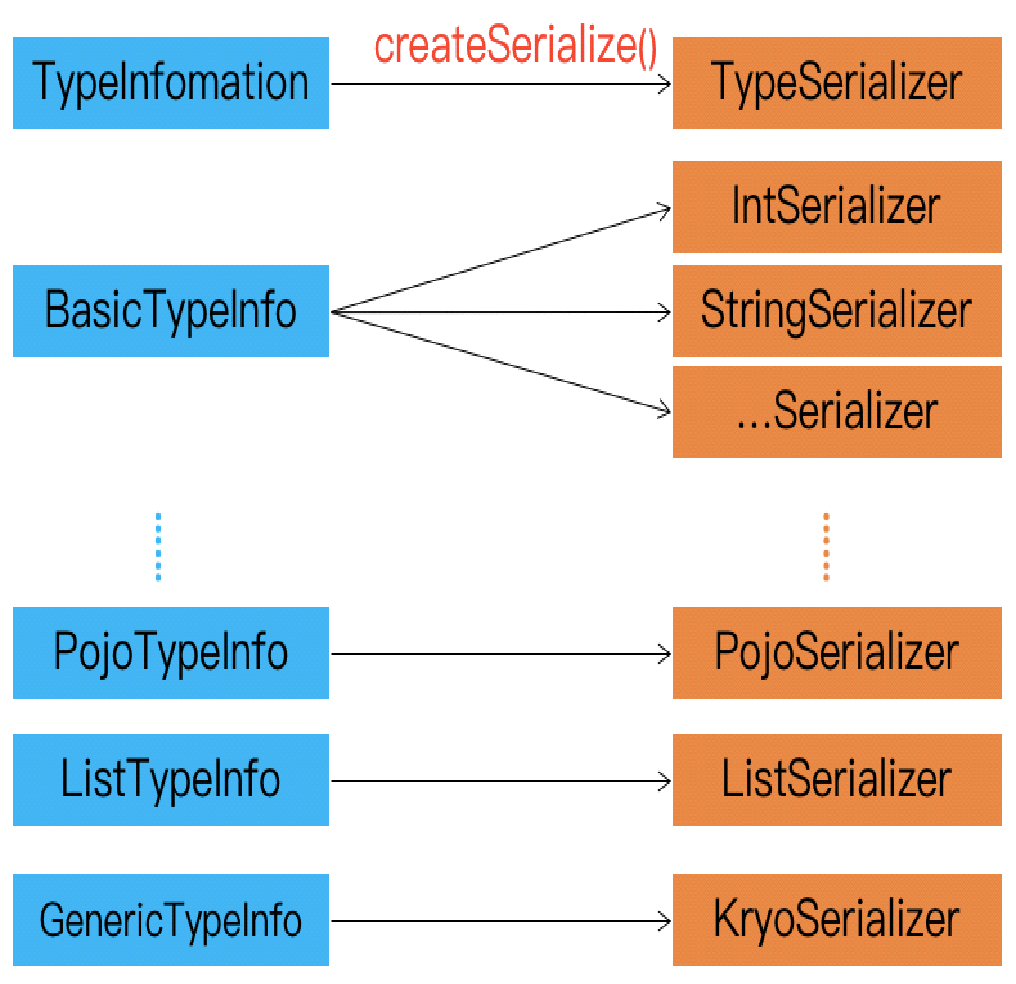

A serializer is required to implement serialization in Flink. Each data type corresponds to a specific TypeInformation, which provides the data type with a dedicated serializer. As shown in the preceding Flink serialization process diagram, TypeInformation provides the createSerializer() method, which returns a TypeSerializer that serializes and deserializes the corresponding data type.

Flink automatically generates serializers for most data types, such as BasicTypeInfo and WritableTypeInfo, to serialize and deserialize datasets efficiently. For GenericTypeInfo, Flink uses Kryo for serialization and deserialization. Tuple, POJO, and CaseClass are composite types and can nest one or more data types. These data types use composite-type serializers, which delegate the serialization of each nested field to the serializer of the corresponding type.
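The delegation described above can be sketched in plain Java. This is a minimal illustration, not Flink's implementation: `MiniSerializer`, `MiniIntSerializer`, `MiniStringSerializer`, `MiniPair`, and `MiniPairSerializer` are hypothetical names that only mimic the idea of a composite serializer handing each field to a field-specific serializer.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical interface, loosely inspired by the role of TypeSerializer.
interface MiniSerializer<T> {
    void serialize(T value, DataOutputStream out) throws IOException;
    T deserialize(DataInputStream in) throws IOException;
}

final class MiniIntSerializer implements MiniSerializer<Integer> {
    public void serialize(Integer value, DataOutputStream out) throws IOException {
        out.writeInt(value); // always 4 bytes
    }
    public Integer deserialize(DataInputStream in) throws IOException {
        return in.readInt();
    }
}

final class MiniStringSerializer implements MiniSerializer<String> {
    public void serialize(String value, DataOutputStream out) throws IOException {
        out.writeUTF(value); // 2-byte length prefix plus encoded characters
    }
    public String deserialize(DataInputStream in) throws IOException {
        return in.readUTF();
    }
}

// Hypothetical composite value with two nested fields.
final class MiniPair {
    final int id;
    final String name;
    MiniPair(int id, String name) { this.id = id; this.name = name; }
}

// The composite serializer writes nothing itself; it delegates field by field.
final class MiniPairSerializer implements MiniSerializer<MiniPair> {
    private final MiniSerializer<Integer> idSerializer = new MiniIntSerializer();
    private final MiniSerializer<String> nameSerializer = new MiniStringSerializer();

    public void serialize(MiniPair value, DataOutputStream out) throws IOException {
        idSerializer.serialize(value.id, out);
        nameSerializer.serialize(value.name, out);
    }
    public MiniPair deserialize(DataInputStream in) throws IOException {
        int id = idSerializer.deserialize(in);       // read in the same order as written
        String name = nameSerializer.deserialize(in);
        return new MiniPair(id, name);
    }
}

final class CompositeSerializerDemo {
    static byte[] toBytes(MiniPair pair) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try {
            new MiniPairSerializer().serialize(pair, new DataOutputStream(bytes));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    static MiniPair fromBytes(byte[] data) {
        try {
            return new MiniPairSerializer()
                    .deserialize(new DataInputStream(new ByteArrayInputStream(data)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] data = toBytes(new MiniPair(7, "Ann"));
        MiniPair copy = fromBytes(data);
        System.out.println(data.length + " bytes, id=" + copy.id + ", name=" + copy.name);
    }
}
```

Because the field types are fixed ahead of time, the byte stream carries no class names or field names, only the field values in a known order.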

The POJO serializer is used for serialization and deserialization only under the following conditions:

1) The class is public and includes a public parameterless constructor.

2) All the non-static and non-transient fields of this class and all superclasses are public and non-final, or public getter and setter methods are provided.

3) These methods follow the Java bean naming conventions for getter and setter methods.

When a custom data type is not recognized as a POJO type, it must be processed as GenericType and serialized by using Kryo.
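The three conditions can be approximated with a short reflection check. The sketch below is a simplification for illustration only, not the logic Flink's TypeExtractor actually runs. `PojoRuleCheck`, `looksLikePojo`, and the two sample classes are hypothetical names.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;

final class PojoRuleCheck {

    // Hypothetical sample that satisfies all three rules.
    public static class Person {
        public int id;          // public, non-final field
        private String name;    // private field with getter and setter
        public Person() {}
        public String getName() { return name; }
        public void setName(String name) { this.name = name; }
    }

    // Hypothetical sample that violates rule 1 (no public parameterless constructor).
    public static class NoDefaultCtor {
        public int id;
        public NoDefaultCtor(int id) { this.id = id; }
    }

    static boolean looksLikePojo(Class<?> type) {
        // Rule 1: public class with a public parameterless constructor.
        if (!Modifier.isPublic(type.getModifiers())) {
            return false;
        }
        try {
            type.getConstructor();
        } catch (NoSuchMethodException e) {
            return false;
        }
        // Rules 2 and 3: walk the class and its superclasses, field by field.
        for (Class<?> c = type; c != null && c != Object.class; c = c.getSuperclass()) {
            for (Field field : c.getDeclaredFields()) {
                int mods = field.getModifiers();
                if (Modifier.isStatic(mods) || Modifier.isTransient(mods) || field.isSynthetic()) {
                    continue; // static and transient fields are not serialized
                }
                boolean directlyAccessible = Modifier.isPublic(mods) && !Modifier.isFinal(mods);
                if (!directlyAccessible && !hasBeanAccessors(type, field)) {
                    return false;
                }
            }
        }
        return true;
    }

    // Looks for getX()/isX() and setX(T) following Java bean naming.
    private static boolean hasBeanAccessors(Class<?> type, Field field) {
        String name = field.getName();
        String suffix = Character.toUpperCase(name.charAt(0)) + name.substring(1);
        try {
            type.getMethod("set" + suffix, field.getType());
        } catch (NoSuchMethodException e) {
            return false;
        }
        try {
            type.getMethod("get" + suffix);
            return true;
        } catch (NoSuchMethodException e) {
            // fall through to the boolean "is" form
        }
        if (field.getType() == boolean.class) {
            try {
                type.getMethod("is" + suffix);
                return true;
            } catch (NoSuchMethodException e) {
                return false;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println("Person: " + looksLikePojo(Person.class));
        System.out.println("NoDefaultCtor: " + looksLikePojo(NoDefaultCtor.class));
    }
}
```

A class that fails such a check would be the kind of type that falls back to GenericType and Kryo.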

Flink comes with many TypeSerializer subclasses. In most cases, custom types are combinations of commonly used types, so the built-in serializers can be reused directly. If the built-in data types and serialization methods do not meet your needs, create your own data types by using Flink's type information system. Use TypeInformation, TypeSerializer, and TypeComparator to customize how a data type is serialized and compared, which can improve performance.

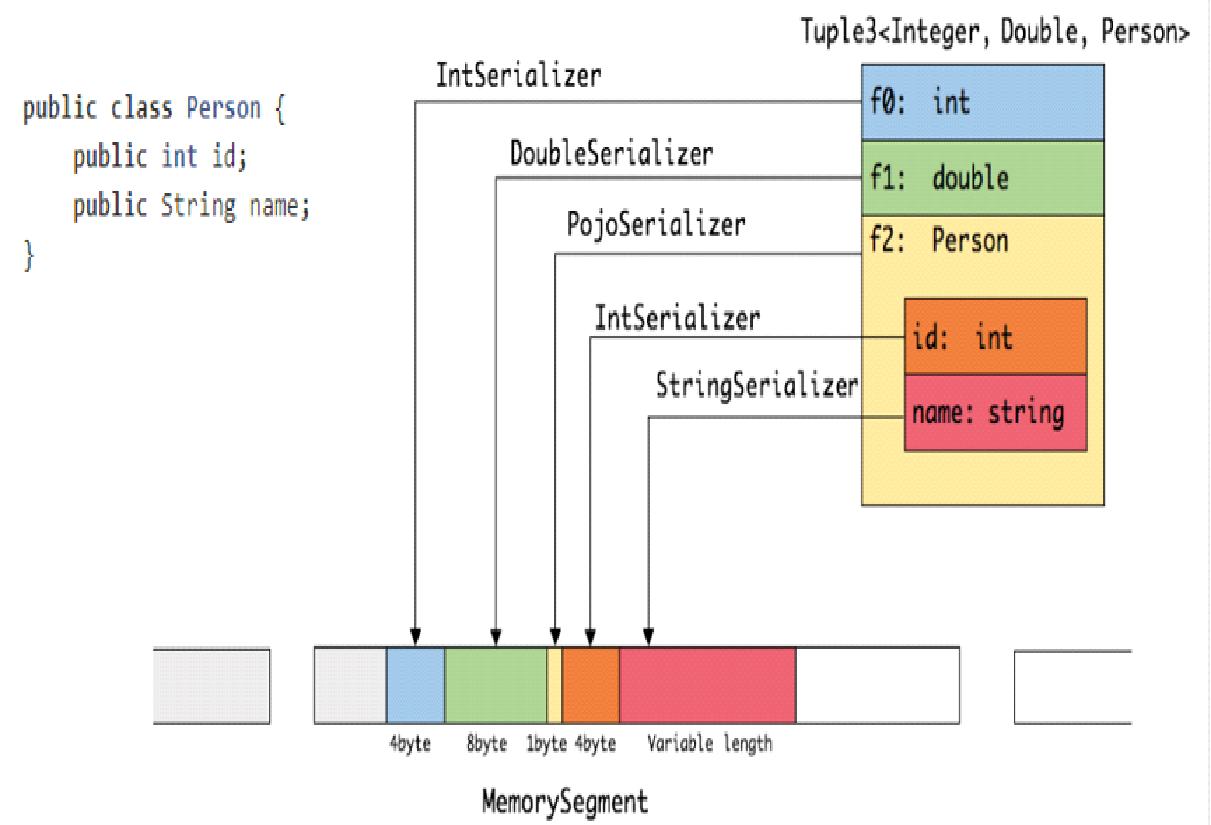

Serialization is the process of converting data structures or objects to a binary string. In Java, a binary string can be simply understood as a byte array. Deserialization is the reverse process of converting the binary string generated during serialization back to data structures or objects. The following describes the serialization process of a nested Tuple3 object.

Tuple3 has three fields: an int, a double, and a Person. Person in turn has two fields, an int-type ID field and a string-type name field, each of which is serialized by the serializer it is delegated to. As shown in the preceding figure, Tuple3 serializes the int-type field through IntSerializer, and this field occupies only four bytes. Because Flink knows the data types in advance, it can serialize and deserialize them more efficiently. Java serialization, by contrast, stores additional attribute information with the data, so a single value occupies considerably more space.
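The space difference can be observed with the JDK alone. The following sketch compares Java serialization with a hand-written, type-aware layout for an (int, double, Person) record. It is an approximation under stated assumptions: `LayoutSizeDemo` and its nested classes are hypothetical, the one-byte flag only imitates the small header mentioned for POJOs, and `writeUTF` stands in for a string encoding that may differ from the one Flink uses.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

final class LayoutSizeDemo {

    // Hypothetical nested POJO-like value.
    static final class Person implements Serializable {
        final int id;
        final String name;
        Person(int id, String name) { this.id = id; this.name = name; }
    }

    // Hypothetical stand-in for a three-field tuple of (int, double, Person).
    static final class Record implements Serializable {
        final int count;
        final double score;
        final Person person;
        Record(int count, double score, Person person) {
            this.count = count;
            this.score = score;
            this.person = person;
        }
    }

    // Java serialization: the stream also carries class descriptors and field metadata.
    static int javaSerializedSize(Record record) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(record);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.size();
    }

    // Type-aware layout: only field values, written in a fixed, known order.
    static int compactSize(Record record) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeInt(record.count);        // 4 bytes
            out.writeDouble(record.score);     // 8 bytes
            out.writeByte(0);                  // 1-byte flag for the nested object (assumption)
            out.writeInt(record.person.id);    // 4 bytes
            out.writeUTF(record.person.name);  // 2-byte length plus encoded characters
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.size();
    }

    public static void main(String[] args) {
        Record record = new Record(1, 2.0, new Person(42, "Tom"));
        System.out.println("Java serialization: " + javaSerializedSize(record) + " bytes");
        System.out.println("Type-aware layout:  " + compactSize(record) + " bytes");
    }
}
```

The type-aware layout is smaller because the reader already knows the field types and order, so none of that information has to travel with each record.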

The Person class is processed as a POJO object. PojoSerializer stores some attribute information in one byte, and each field is serialized by its dedicated serializer. All serialized data is backed by memory segments. What is the purpose of memory segments?

In Flink, a memory segment is a pre-allocated, fixed-length memory block, 32 KB by default, into which objects are serialized. A memory segment is the smallest unit of memory allocation in Flink and is equivalent to a byte array in Java. Each record is serialized and stored in one or more memory segments.
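How a record can span several fixed-size blocks is easy to sketch with plain byte arrays. The class below is a toy model under stated assumptions, not Flink's MemorySegment: `SegmentedBuffer` and its methods are hypothetical names, and segments here are allocated on demand rather than taken from a managed pool.

```java
import java.util.ArrayList;
import java.util.List;

final class SegmentedBuffer {
    // Fixed segment length, matching the 32 KB default mentioned above.
    static final int SEGMENT_SIZE = 32 * 1024;

    private final List<byte[]> segments = new ArrayList<>();
    // Starts "full" so that the first write allocates the first segment.
    private int positionInLast = SEGMENT_SIZE;

    // Appends a serialized record, spilling into new segments when one fills up.
    void write(byte[] record) {
        int offset = 0;
        while (offset < record.length) {
            if (positionInLast == SEGMENT_SIZE) {
                segments.add(new byte[SEGMENT_SIZE]);
                positionInLast = 0;
            }
            byte[] current = segments.get(segments.size() - 1);
            int chunk = Math.min(record.length - offset, SEGMENT_SIZE - positionInLast);
            System.arraycopy(record, offset, current, positionInLast, chunk);
            offset += chunk;
            positionInLast += chunk;
        }
    }

    int segmentCount() {
        return segments.size();
    }

    // Reads one byte by its absolute position across all segments.
    byte byteAt(int index) {
        return segments.get(index / SEGMENT_SIZE)[index % SEGMENT_SIZE];
    }

    public static void main(String[] args) {
        SegmentedBuffer buffer = new SegmentedBuffer();
        buffer.write(new byte[70_000]); // larger than two segments
        System.out.println("segments used: " + buffer.segmentCount());
    }
}
```

In this model, a 70,000-byte record needs three 32 KB segments, and a following small record continues in the unused tail of the last one.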

The following sections cover four common scenarios in which Flink serialization needs attention.

In most cases, you do not have to worry about the serialization framework or type registration. Flink provides many built-in serializers, so you do not need to define your own. However, additional processing is required in special scenarios.

During type declaration, use the TypeInformation.of() method to create a TypeInformation object.

```java
// Type information for a POJO class
PojoTypeInfo<Person> typeInfo = (PojoTypeInfo<Person>) TypeInformation.of(Person.class);
// Type information for a generic type, captured through a TypeHint
final TypeInformation<Tuple2<Integer,Integer>> resultType = TypeInformation.of(new TypeHint<Tuple2<Integer,Integer>>(){});
```

The BasicTypeInfo class defines shortcuts for common data types. These shortcuts can be used directly to declare basic data types, such as string, boolean, byte, short, integer, long, float, double, and char. Flink also provides the equivalent Types class (org.apache.flink.api.common.typeinfo.Types). The Flink Table module contains its own Types class (org.apache.flink.table.api.Types), which defines the module's internal data types and is used differently from the Types class above. Therefore, be careful when using your IDE's automatic import function.

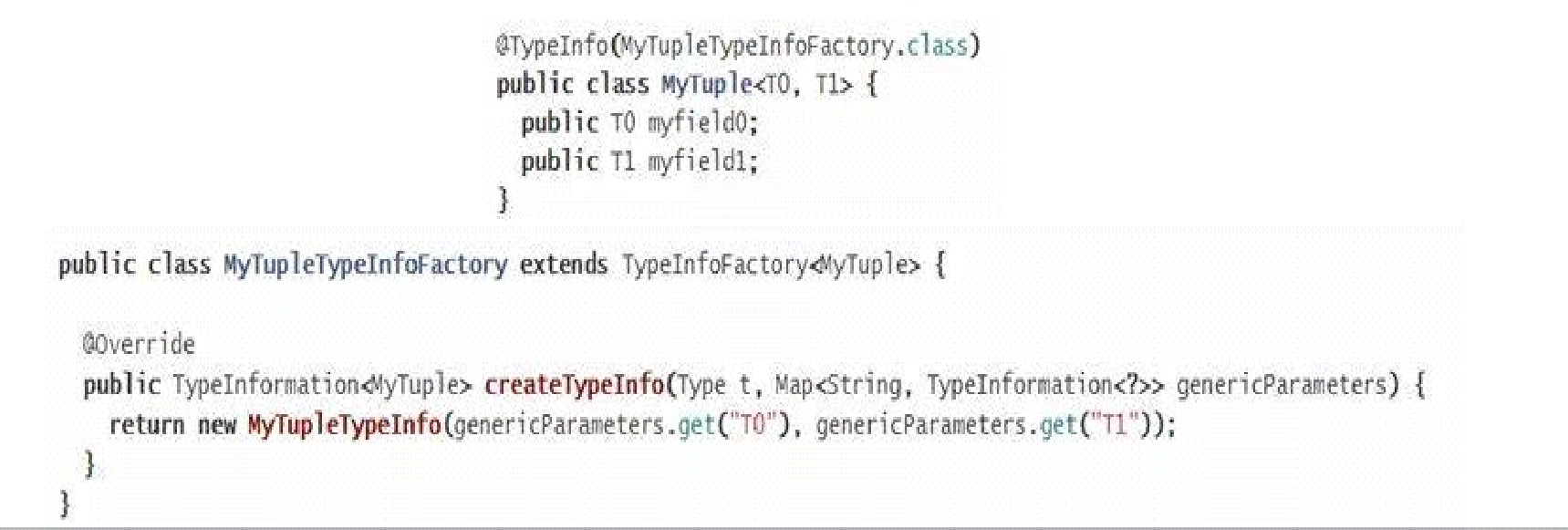

Customize TypeInfo so that any class is handled by Flink's native memory management instead of Kryo. This ensures compact storage and an efficient runtime. To do so, add the @TypeInfo annotation to the custom class and create the corresponding TypeInfoFactory, overriding its createTypeInfo() method.

Flink recognizes superclasses but does not necessarily recognize some unique features of subclasses. Therefore, subtypes must be registered separately.

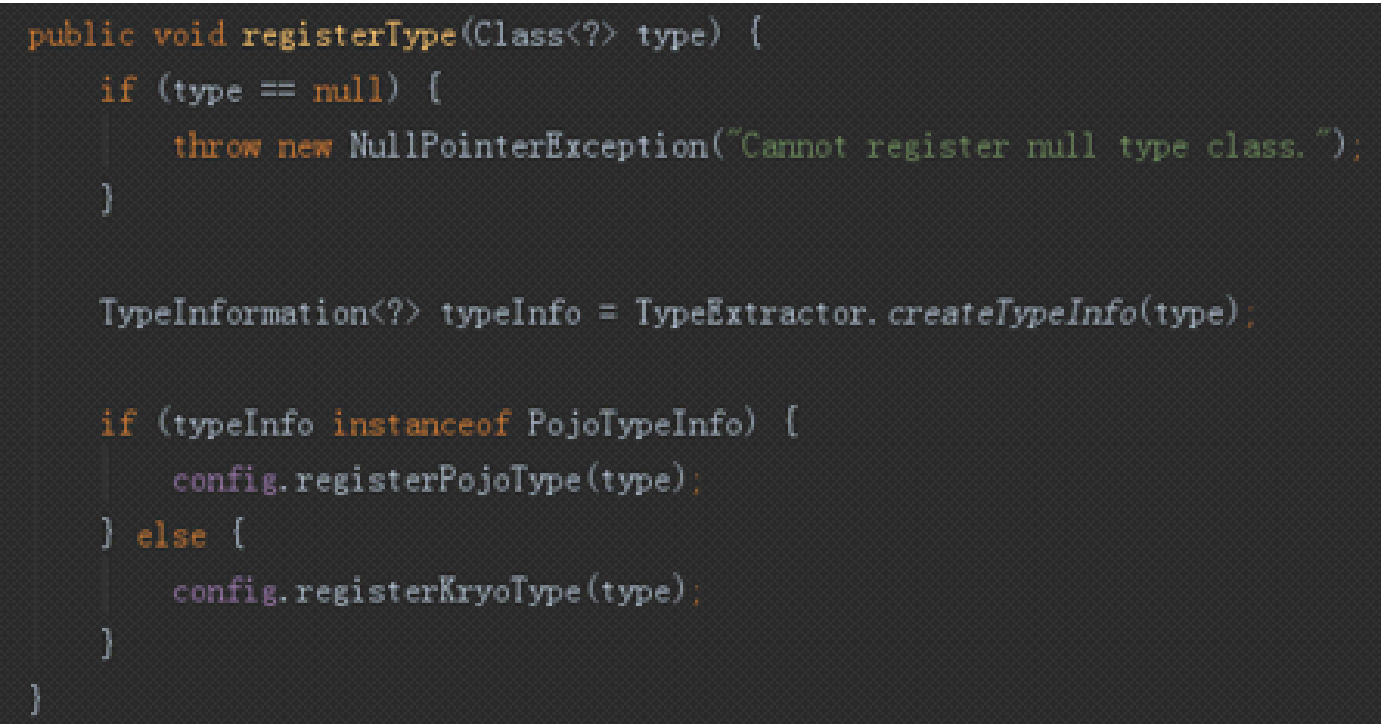

StreamExecutionEnvironment and ExecutionEnvironment provide the registerType() method to register subclasses to Flink.

```java
final ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();
env.registerType(typeClass);
```

The registerType() method uses TypeExtractor to extract type information, as shown in the preceding figure. If the extracted type information is a PojoTypeInfo, the class is registered as a POJO type. Otherwise, it is registered with Kryo and processed there without Flink's involvement, which may reduce performance.

Flink cannot serialize some data types itself, such as custom data types that are neither registered through registerType() nor described by a custom TypeInfo and TypeInfoFactory. These data types are processed by Kryo. For data types that Kryo cannot process either, such as classes from Guava, Thrift, Protobuf, and other third-party libraries, two solutions are available:

```java
// Solution 1: force Avro to be used instead of Kryo
env.getConfig().enableForceAvro();
// Solution 2: add a custom serializer for the class to Kryo
env.getConfig().addDefaultKryoSerializer(clazz, serializer);
```

Note: Call env.getConfig().disableGenericTypes() to disable Kryo so that all classes are processed by Flink's own serialization mechanism. However, an exception is thrown for any class that Flink's serialization mechanism cannot process, so this method is mainly useful for debugging.

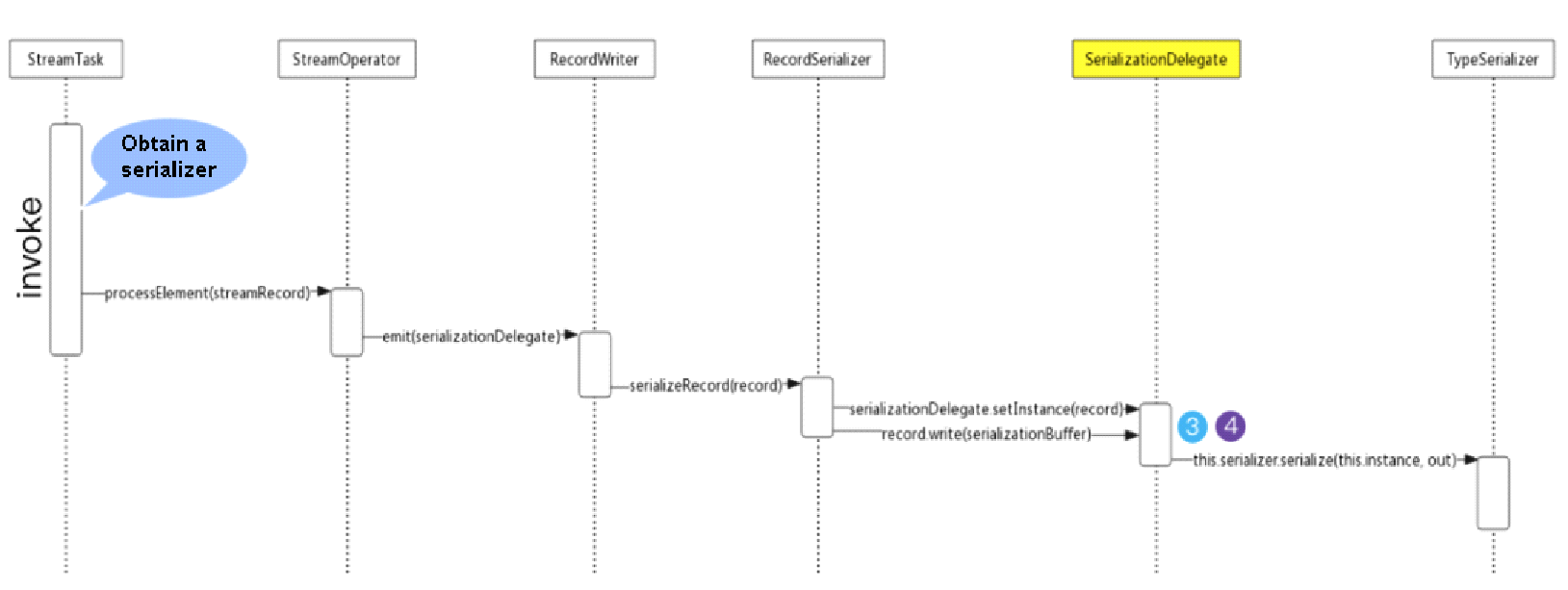

If Flink tasks need to transmit data records across the network, the data must be serialized and written to the network buffer pool. The downstream tasks then read the data, deserialize it, and run their processing logic on it.

To enable records and events to be written to buffers and then read from the buffers for consumption, Flink provides RecordSerializer, RecordDeserializer, and EventSerializer.

Data emitted by a function is wrapped in a SerializationDelegate, which exposes any element as an IOReadableWritable for serialization. The data to be serialized is passed in through setInstance().

Note the following points about Flink communication serialization:

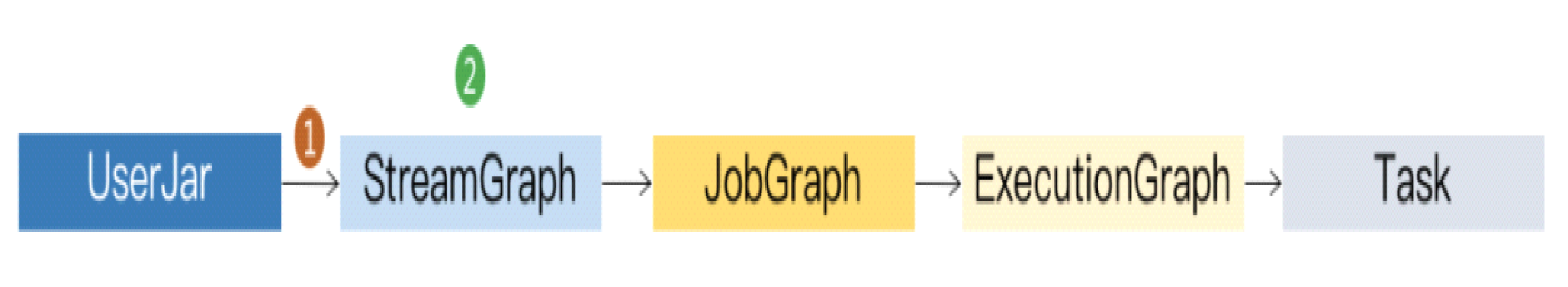

Use TypeExtractor to determine the input and output types of a function when creating a StreamTransformation. The TypeExtractor class automatically extracts or restores type information based on clues such as the method signature and subclass information.

When creating a StreamGraph, use the createSerializer() method of TypeInformation to obtain the TypeSerializer of the corresponding data type, and perform the setSerializers() operation during addOperator() to set the TYPE_SERIALIZER_IN_1, TYPE_SERIALIZER_IN_2, and TYPE_SERIALIZER_OUT_1 attributes of StreamConfig.

TaskManagers directly manage and schedule tasks. A task invokes a StreamTask, which encapsulates the operator processing logic. In the run() method, the deserialized data is wrapped in stream records and sent to the operator for processing, and the processing results are sent downstream through the collector. The SerializationDelegate is determined when the collector is created. The record writer writes the serialized data to DataOutput and hands the serialization operation to the SerializationDelegate. Serialization is actually completed by the serialize() method of TypeSerializer.
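The hand-off from the delegate to the serializer can be sketched in a few lines of plain Java. This is an illustration of the pattern only: `SketchSerializer`, `SketchWritable`, `SketchDelegate`, and `DelegateDemo` are hypothetical names that loosely mirror the roles of TypeSerializer, IOReadableWritable, and SerializationDelegate, and they do not reproduce Flink's actual signatures.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical counterpart of a type-specific serializer.
interface SketchSerializer<T> {
    void serialize(T record, DataOutput target) throws IOException;
}

// Hypothetical counterpart of a "writable" that a record writer can consume.
interface SketchWritable {
    void write(DataOutput out) throws IOException;
}

// Reusable wrapper: holds the current record and forwards write() to the serializer.
final class SketchDelegate<T> implements SketchWritable {
    private final SketchSerializer<T> serializer;
    private T instance;

    SketchDelegate(SketchSerializer<T> serializer) {
        this.serializer = serializer;
    }

    void setInstance(T instance) {
        this.instance = instance;
    }

    @Override
    public void write(DataOutput out) throws IOException {
        // The delegate does no encoding itself; the serializer does the real work.
        serializer.serialize(instance, out);
    }
}

final class DelegateDemo {
    // Plays the role of a record writer: set the record, then write the delegate.
    static byte[] emit(SketchDelegate<String> delegate, String record) {
        delegate.setInstance(record);
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try {
            delegate.write(new DataOutputStream(bytes));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    public static void main(String[] args) {
        SketchDelegate<String> delegate =
                new SketchDelegate<>((record, target) -> target.writeUTF(record));
        System.out.println(emit(delegate, "hello").length + " bytes written");
    }
}
```

One delegate is created once and reused for every record, so the writer only sees a uniform "writable" object regardless of the record type.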
