By Tang Yun (Chagan)

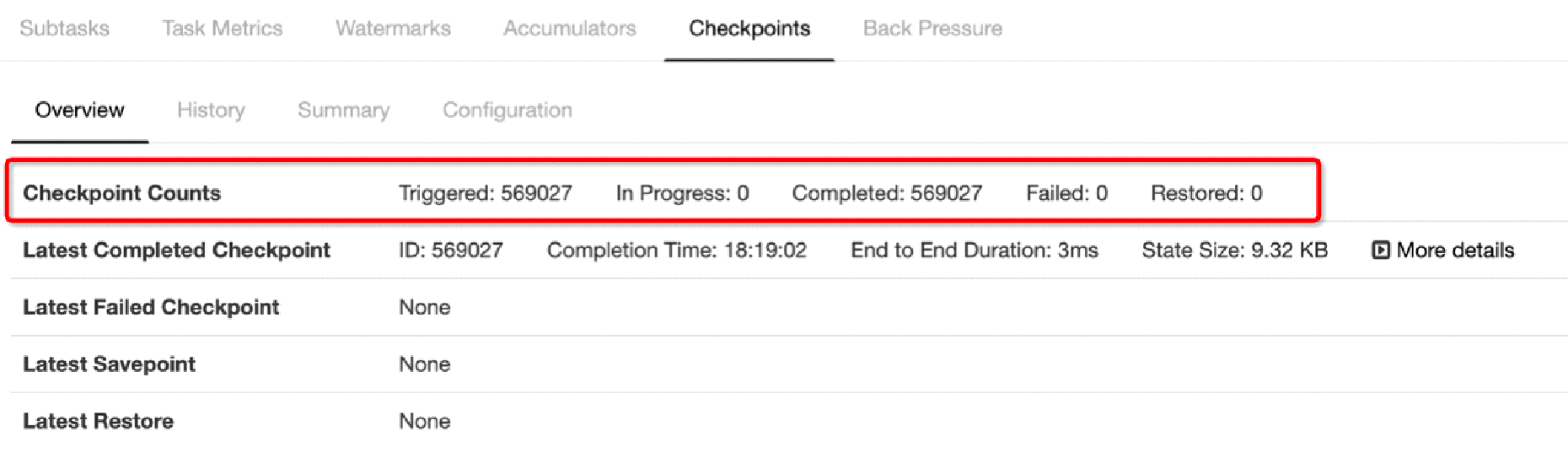

A checkpoint in Apache Flink is a global operation that is triggered at the source nodes and propagated to all downstream nodes. As shown in the red box in the following figure, a total of 569,027 checkpoints have been triggered, and all of them completed successfully.

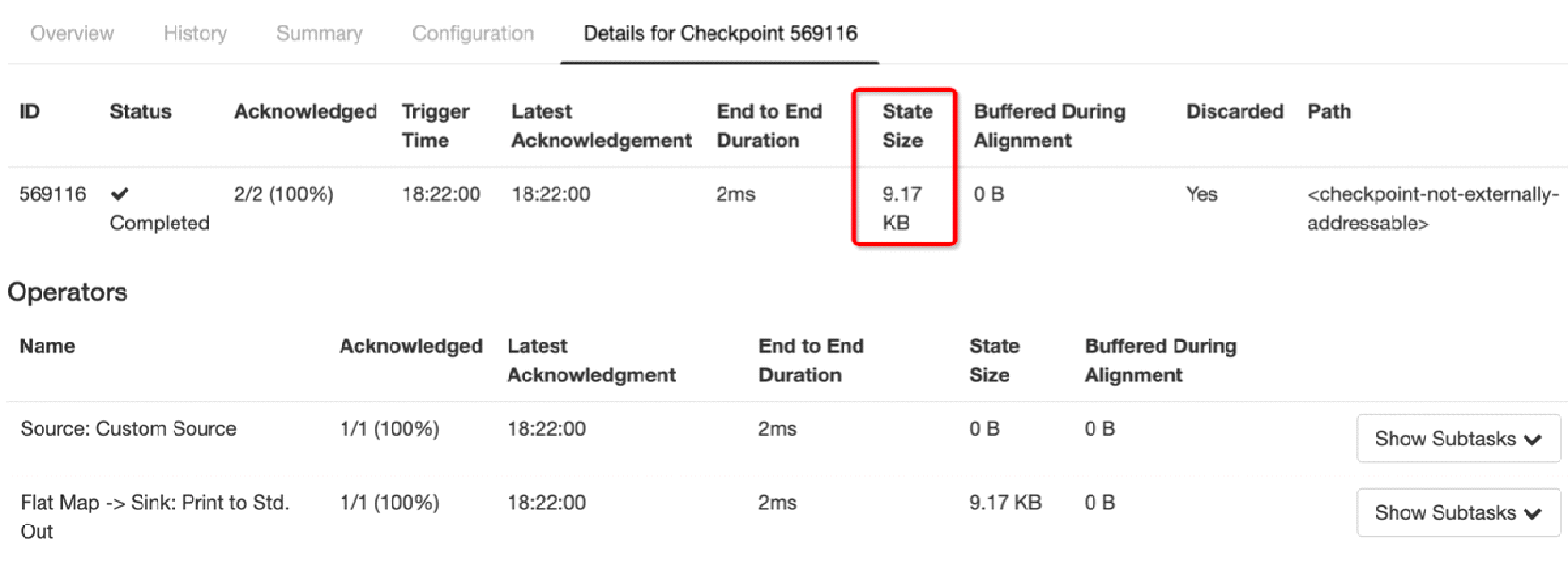

State is the data that a checkpoint backs up persistently. As shown in the red box in the following figure, the state size of Checkpoint 569116 is 9.17 KB.

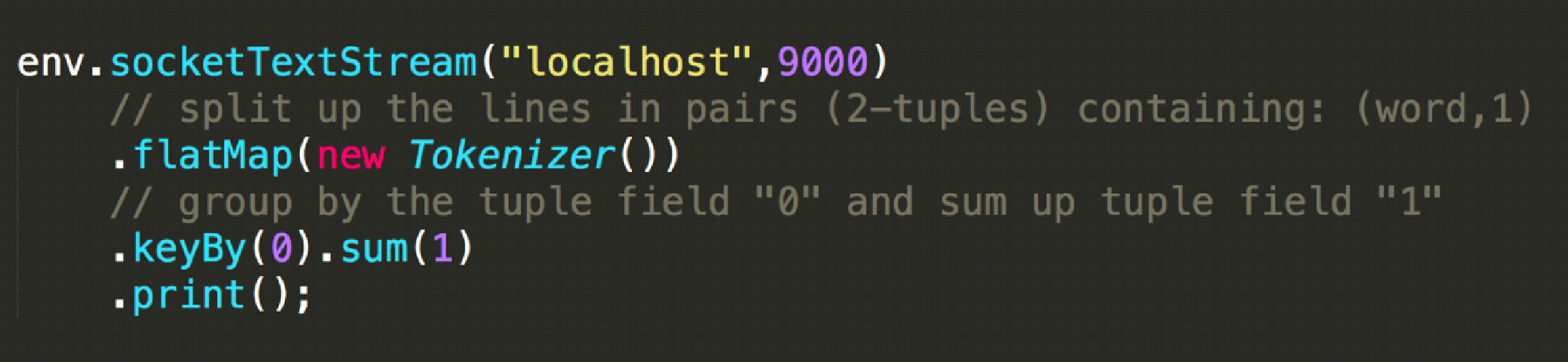

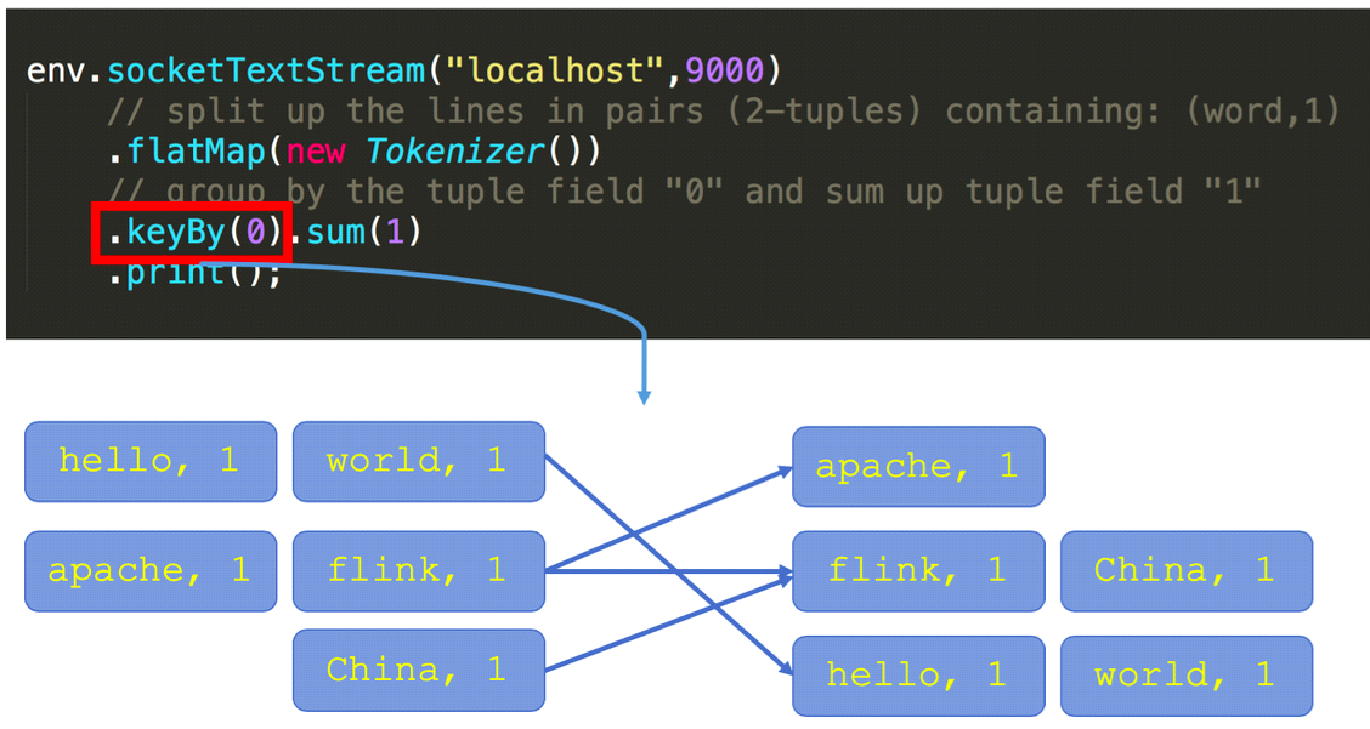

To understand what a state is, consider the typical word count code shown in the following figure. It listens on local port 9000 and counts the frequency of the words received on that port. If we run Netcat locally and enter "hello world" on the command-line interface (CLI), what output will we get?

The output includes (hello, 1) and (world, 1).

If we enter "hello world" on the CLI again, what kind of output will we get?

The output includes (hello, 2) and (world, 2). When "hello world" is entered for the second time, Flink knows that each of the two words has already appeared once because it checks a type of state called keyed state, which stores the previous word frequency statistics.
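The behavior described above can be imitated in plain Java without Flink. The following is only a minimal sketch: the class `KeyedWordCountSketch` and its `HashMap` are hypothetical stand-ins for the job and its keyed state, not Flink APIs.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class KeyedWordCountSketch {
    // Stand-in for keyed state: one running count per key (word).
    private final Map<String, Integer> countState = new HashMap<>();

    // Processes one input line and returns the (word, count) pairs emitted for it.
    List<String> processLine(String line) {
        List<String> output = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (word.isEmpty()) {
                continue; // blank input line
            }
            // Look up the previous count for this key and add 1 to it.
            int updated = countState.merge(word, 1, Integer::sum);
            output.add("(" + word + ", " + updated + ")");
        }
        return output;
    }

    public static void main(String[] args) {
        KeyedWordCountSketch job = new KeyedWordCountSketch();
        System.out.println(job.processLine("hello world")); // first input
        System.out.println(job.processLine("hello world")); // second input
    }
}
```

The second call returns higher counts only because the map survives between calls. That surviving per-key data is what the article calls keyed state.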

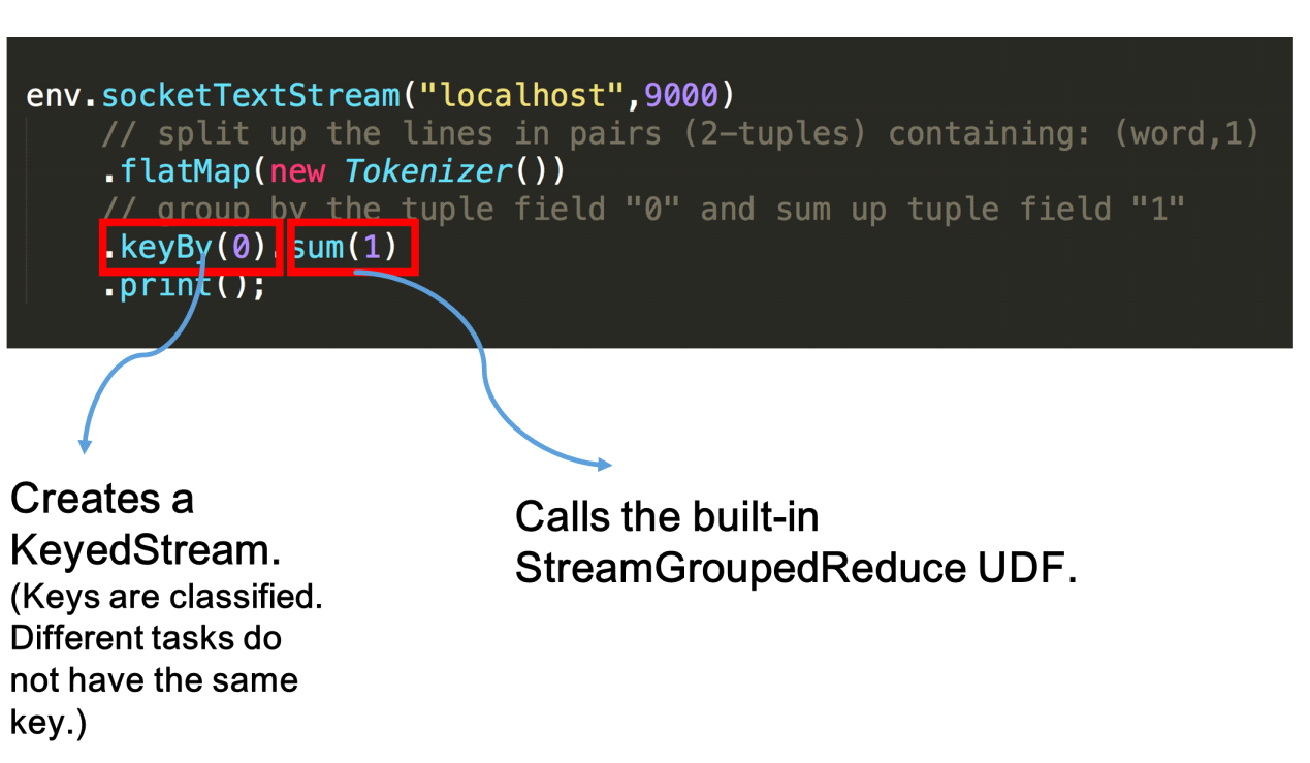

Let's review the word count code. Calling the keyBy interface creates a KeyedStream that partitions the stream by key, which is a prerequisite for using a keyed state. The sum method then calls the built-in StreamGroupedReduce, which is implemented with a keyed state.

A keyed state has two features:

The concept of partitioning is easier to understand by looking at the keyBy semantics. The following figure shows three parallel subtasks on the left and three on the right. The keyBy interface redistributes the incoming words from the subtasks on the left. For example, when we enter "hello world", the hash algorithm always routes the word "hello" to the subtask in the lower-right corner.
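The routing rule can be sketched in a few lines of Java. This is a simplified illustration under stated assumptions: the class and method names are hypothetical, and the key group is derived from `hashCode()` directly, whereas Flink, to our understanding, additionally scrambles the hash before taking the modulo. The `maxParallelism` value of 128 is likewise chosen only for the example.

```java
class KeyGroupRoutingSketch {
    // Maps a key to a key group in [0, maxParallelism).
    // Simplification: uses hashCode() directly (assumption, see lead-in).
    static int keyGroupFor(Object key, int maxParallelism) {
        return Math.floorMod(key.hashCode(), maxParallelism);
    }

    // Maps a key group to a subtask index in [0, parallelism).
    static int subtaskFor(int keyGroup, int parallelism, int maxParallelism) {
        return keyGroup * parallelism / maxParallelism;
    }

    // Full route: key -> key group -> subtask.
    static int route(Object key, int parallelism, int maxParallelism) {
        return subtaskFor(keyGroupFor(key, maxParallelism), parallelism, maxParallelism);
    }

    public static void main(String[] args) {
        int parallelism = 3;      // three downstream subtasks, as in the figure
        int maxParallelism = 128; // illustrative value
        for (String word : new String[] {"hello", "world", "hello"}) {
            System.out.println(word + " -> subtask " + route(word, parallelism, maxParallelism));
        }
    }
}
```

Because the route depends only on the key, both occurrences of "hello" land on the same subtask, so that subtask alone holds the count for "hello".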

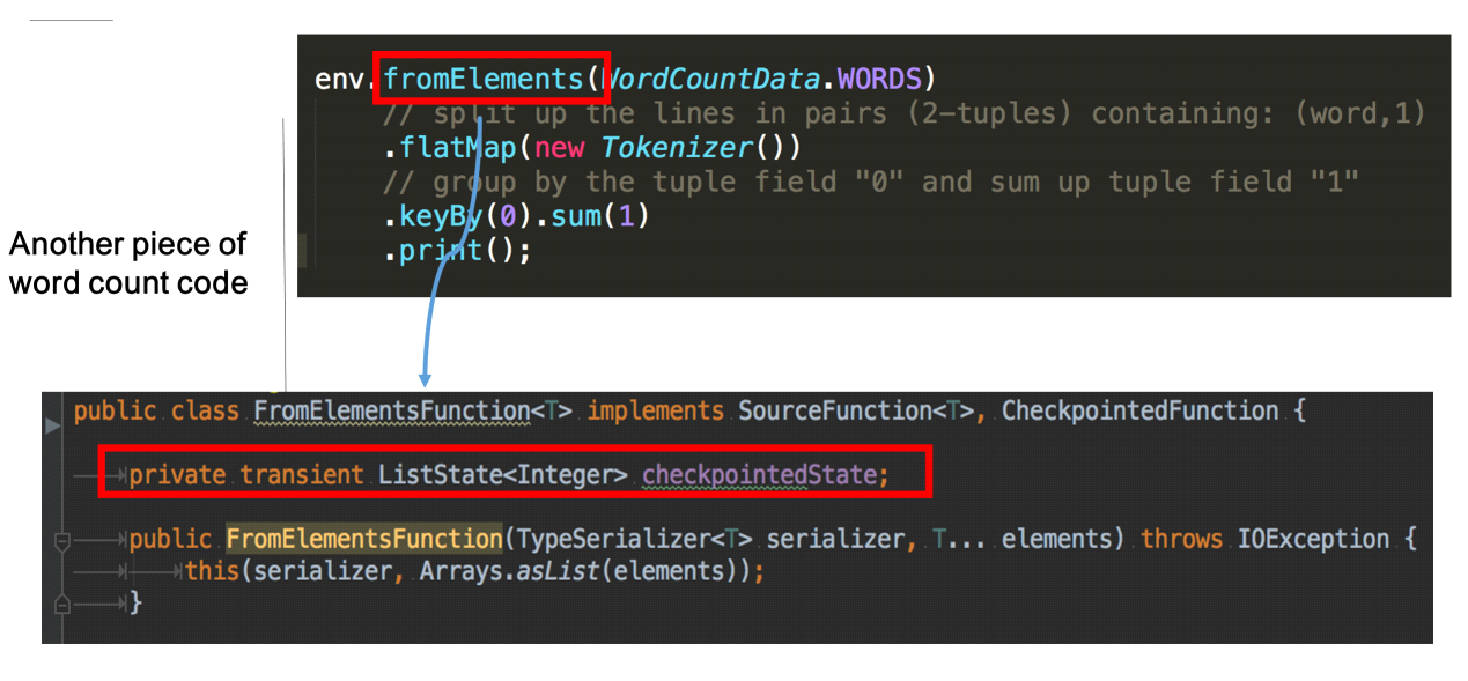

The following figure shows the word count code that uses an operator state.

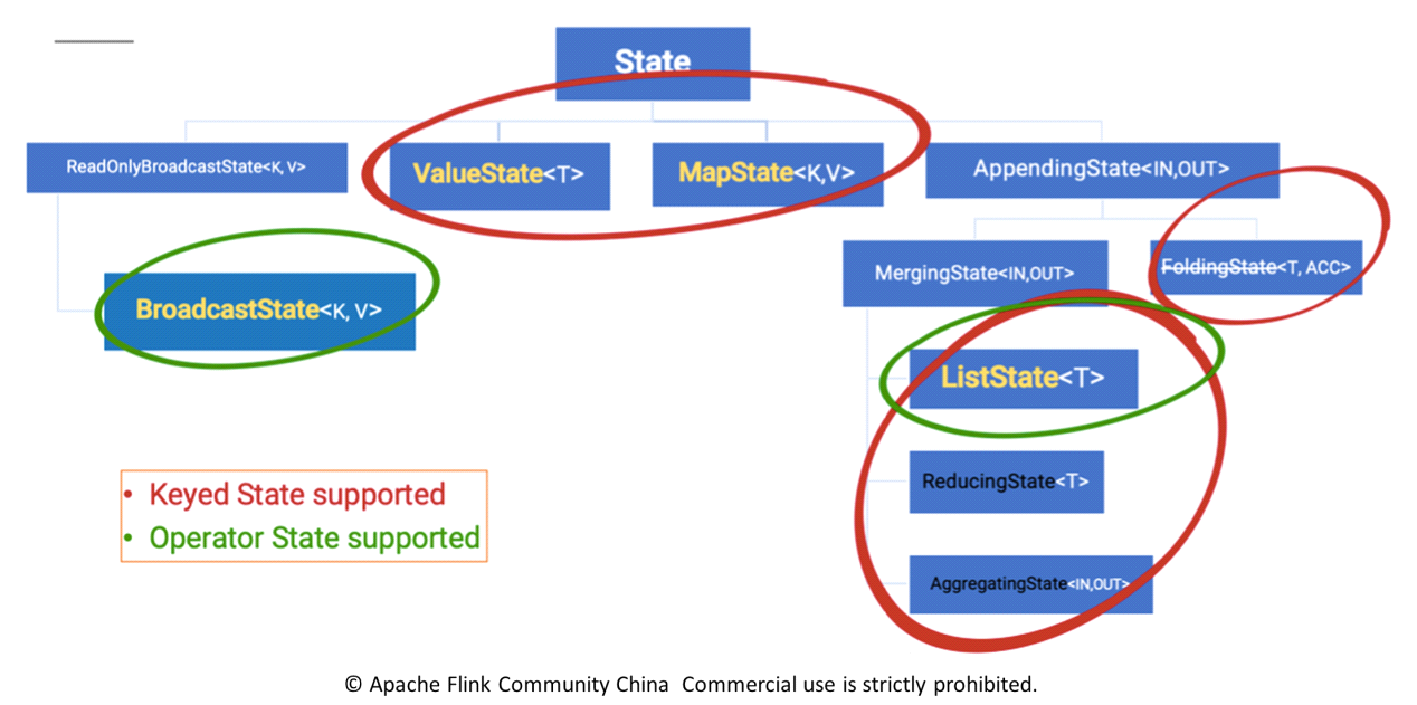

fromElements calls the FromElementsFunction class, which uses an operator state of the list state type. The following figure shows a classification of states by type.

The classification of states is also based on whether they are directly managed by Flink.

We recommend using managed states in production environments. They are the focus of this article.

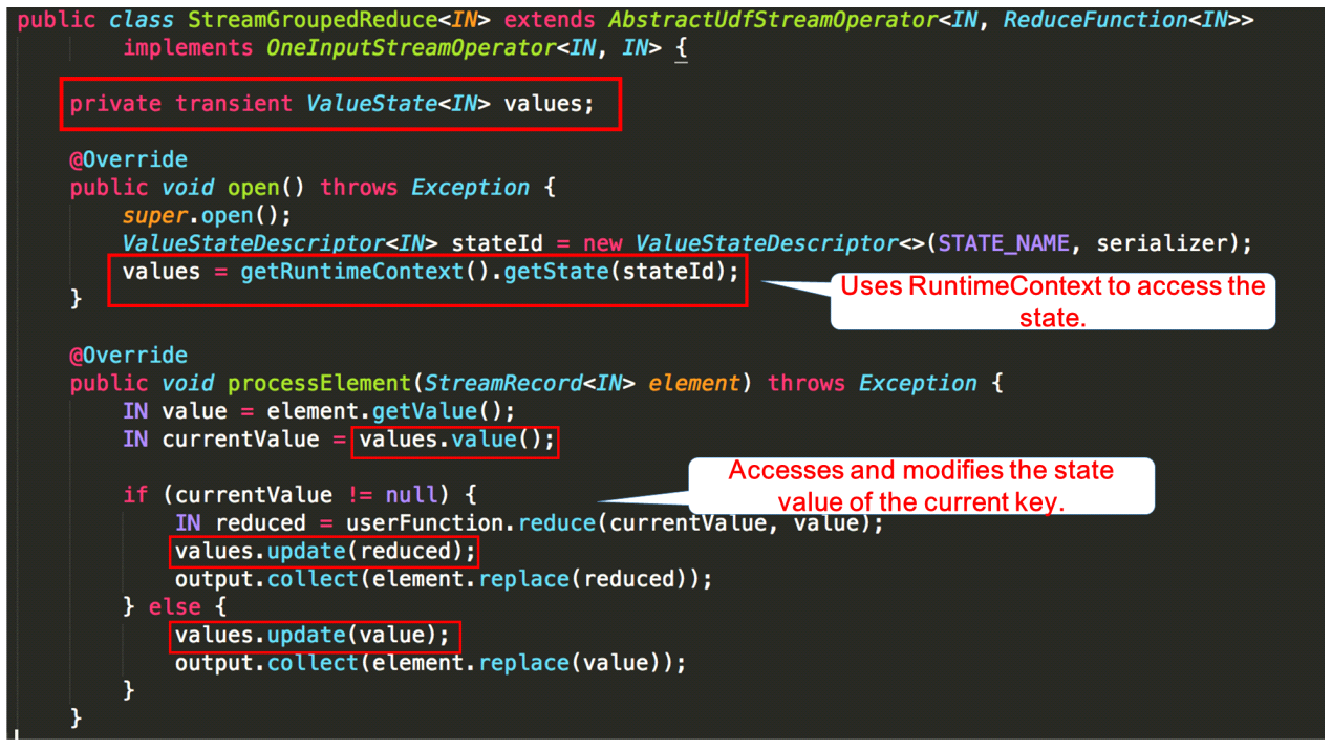

The following figure shows how to use the keyed state in code. Consider the StreamGroupedReduce class used by the sum method in the preceding word count code as an example.

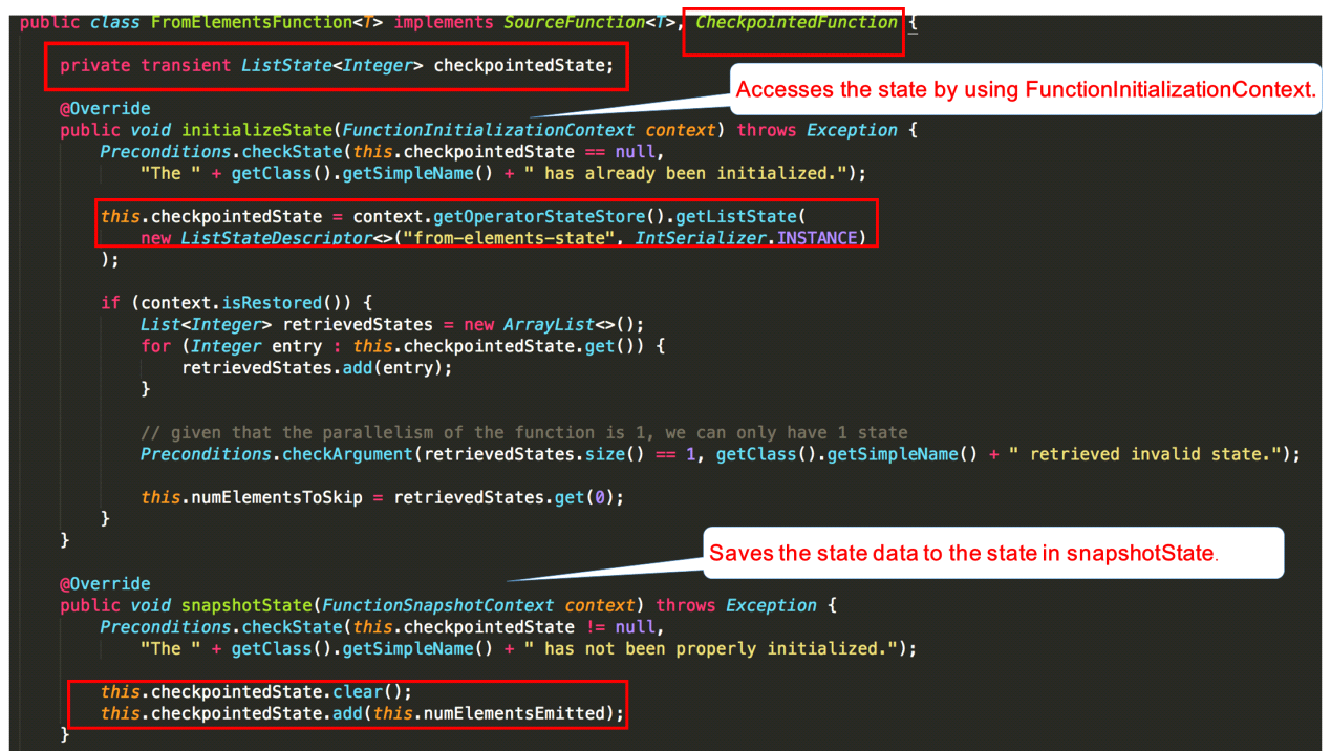

The following figure shows a detailed description of the FromElementsFunction class and illustrates how to use the operator state in code.
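As a rough text companion, the idea of a source that keeps its progress in a list-typed operator state can be sketched in plain Java. The class `ReplayableSourceSketch` and its methods are hypothetical and only mimic the pattern. The assumption here is that the state holds a single entry, the number of elements already emitted.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class ReplayableSourceSketch<T> {
    private final List<T> elements;
    private int numEmitted = 0; // progress that must survive a failure

    ReplayableSourceSketch(List<T> elements) {
        this.elements = new ArrayList<>(elements);
    }

    boolean hasNext() {
        return numEmitted < elements.size();
    }

    T next() {
        return elements.get(numEmitted++);
    }

    // Snapshot: the operator state is a list, here with one entry (the offset).
    List<Integer> snapshotState() {
        return Collections.singletonList(numEmitted);
    }

    // Restore: skip everything that was emitted before the snapshot was taken.
    void restoreState(List<Integer> state) {
        if (state.size() != 1) {
            throw new IllegalArgumentException("expected exactly one offset entry");
        }
        numEmitted = state.get(0);
    }

    public static void main(String[] args) {
        List<String> input = List.of("a", "b", "c");
        ReplayableSourceSketch<String> source = new ReplayableSourceSketch<>(input);
        source.next();
        source.next();
        List<Integer> checkpoint = source.snapshotState();

        // Simulated failure: a fresh instance resumes from the snapshot.
        ReplayableSourceSketch<String> restored = new ReplayableSourceSketch<>(input);
        restored.restoreState(checkpoint);
        System.out.println(restored.next());
    }
}
```

Unlike a keyed state, this state is not tied to any key. It belongs to the operator instance as a whole.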

Before introducing the checkpoint execution mechanism, we need to understand the storage of states because states are essential for the persistent backup of checkpoints.

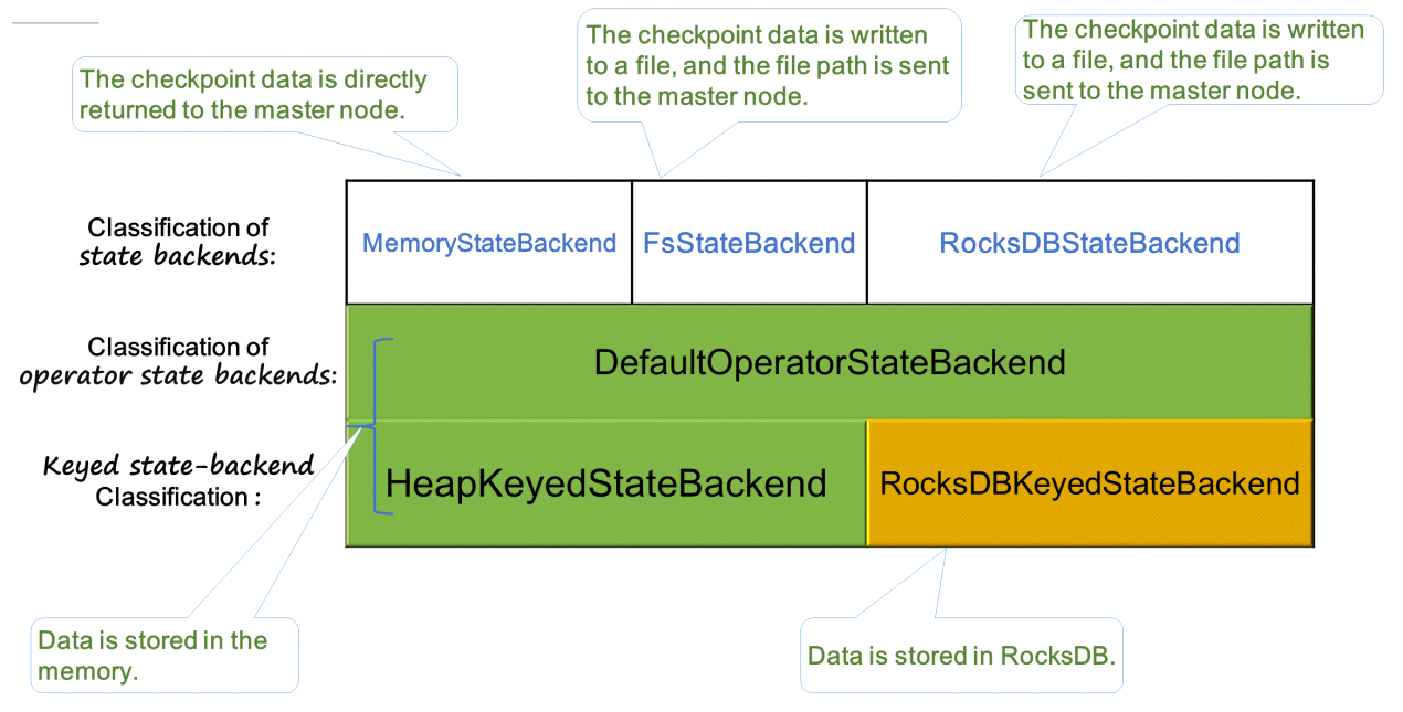

The following figure shows the three built-in state backends in Flink. MemoryStateBackend and FsStateBackend keep state on the Java heap at runtime. FsStateBackend writes the data as files to remote storage only when a checkpoint is executed. RocksDBStateBackend stores state in RocksDB, an LSM database that combines memory and disk.

Following are the ways to execute HeapKeyedStateBackend:

When HeapKeyedStateBackend is used with MemoryStateBackend, the maximum data volume that can be serialized during a checkpoint is 5 MB by default.

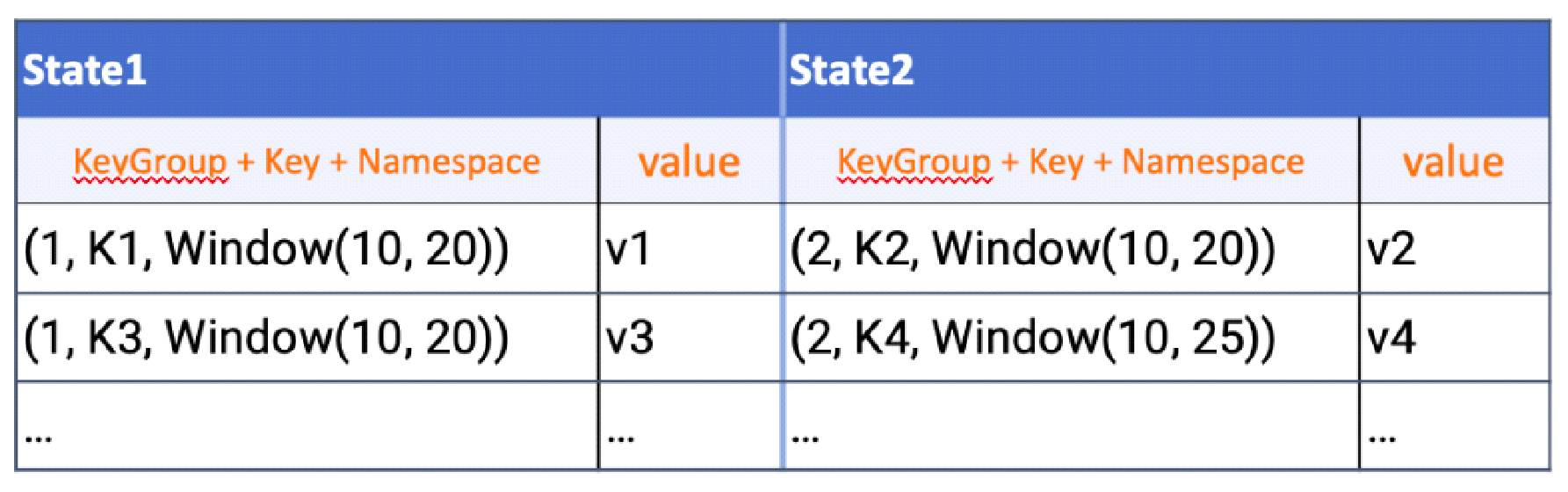

For RocksDBKeyedStateBackend, each state is stored in a separate column family. The keyGroup, key, and namespace are serialized together and stored in the database as the RocksDB key.
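The composite key can be pictured with a small Java sketch. The byte layout below (a 2-byte key group followed by length-prefixed strings) is an assumption made for illustration and is not Flink's actual serialization format. The class name and the sample values are hypothetical as well.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.Arrays;

class CompositeKeySketch {
    // Serializes keyGroup, key, and namespace, in that order, into one byte array.
    static byte[] compositeKey(int keyGroup, String key, String namespace) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeShort(keyGroup); // key group goes first, as a fixed-width prefix
            out.writeUTF(key);        // then the user key
            out.writeUTF(namespace);  // then the namespace (for example, a window)
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected for in-memory streams
        }
        return bytes.toByteArray();
    }

    public static void main(String[] args) {
        byte[] k1 = compositeKey(5, "hello", "window-1");
        byte[] k2 = compositeKey(5, "world", "window-1");
        System.out.println(Arrays.toString(k1));
        System.out.println(Arrays.toString(k2));
    }
}
```

In this sketch, entries of the same key group share the same leading bytes, while the value of the state would be stored separately as the RocksDB value.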

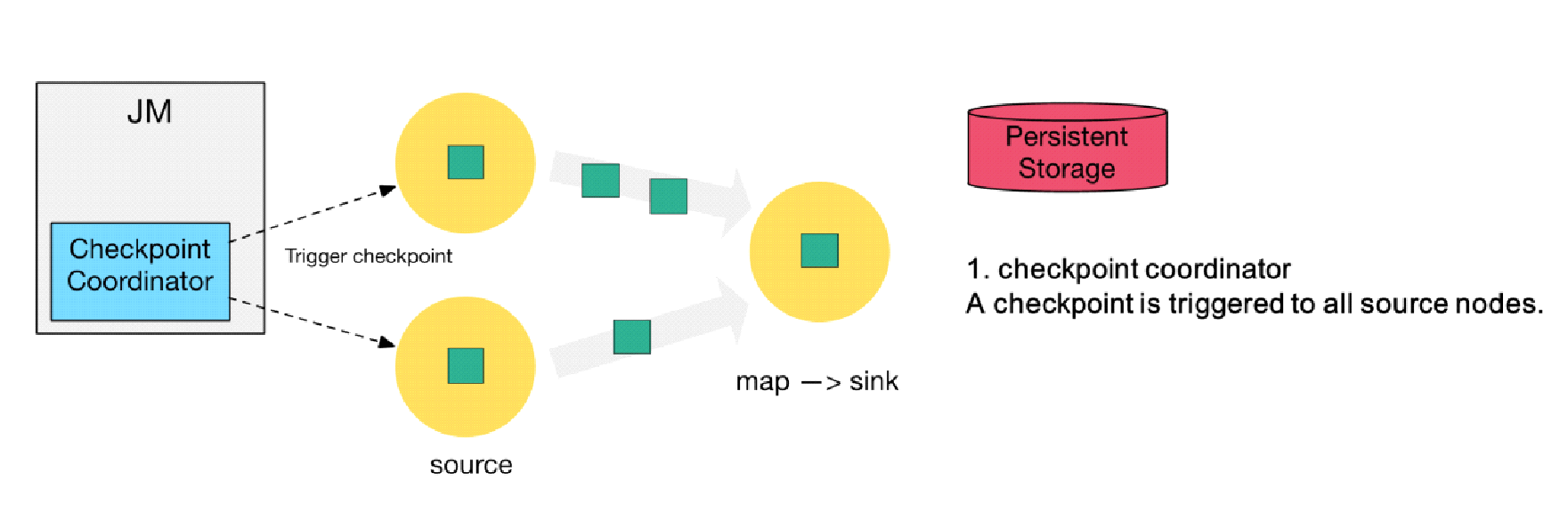

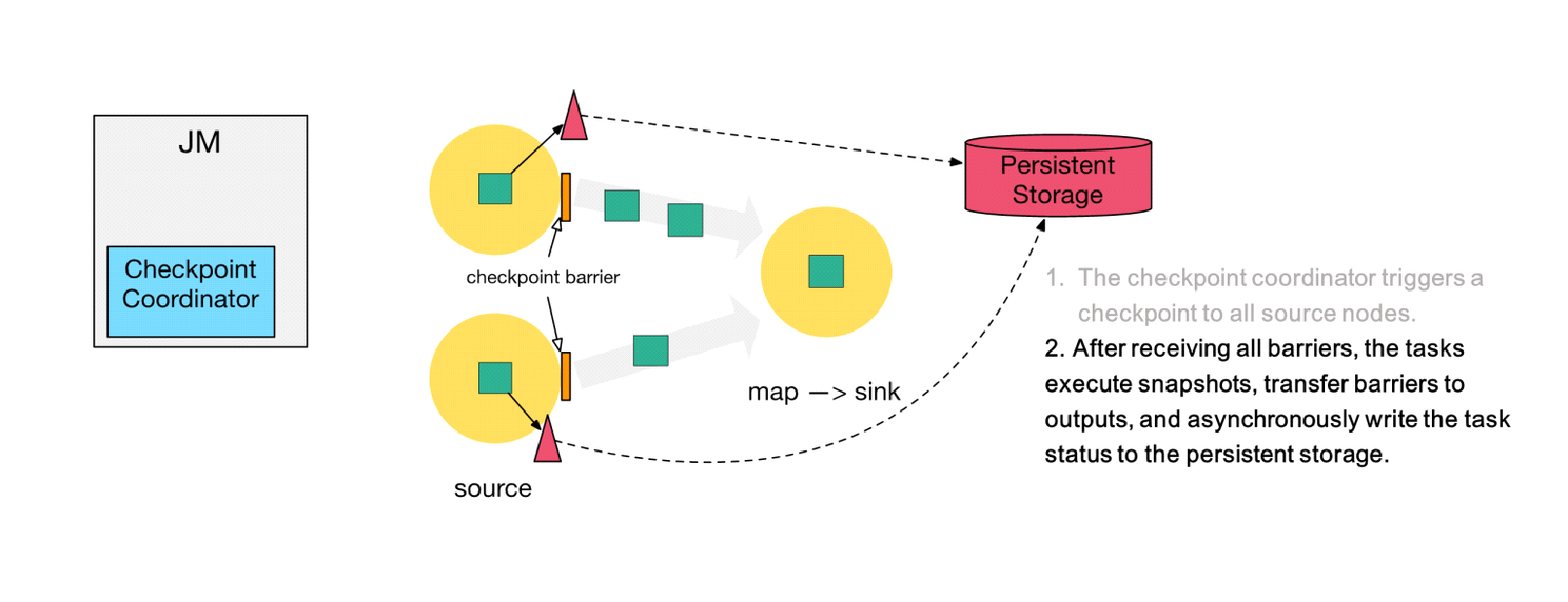

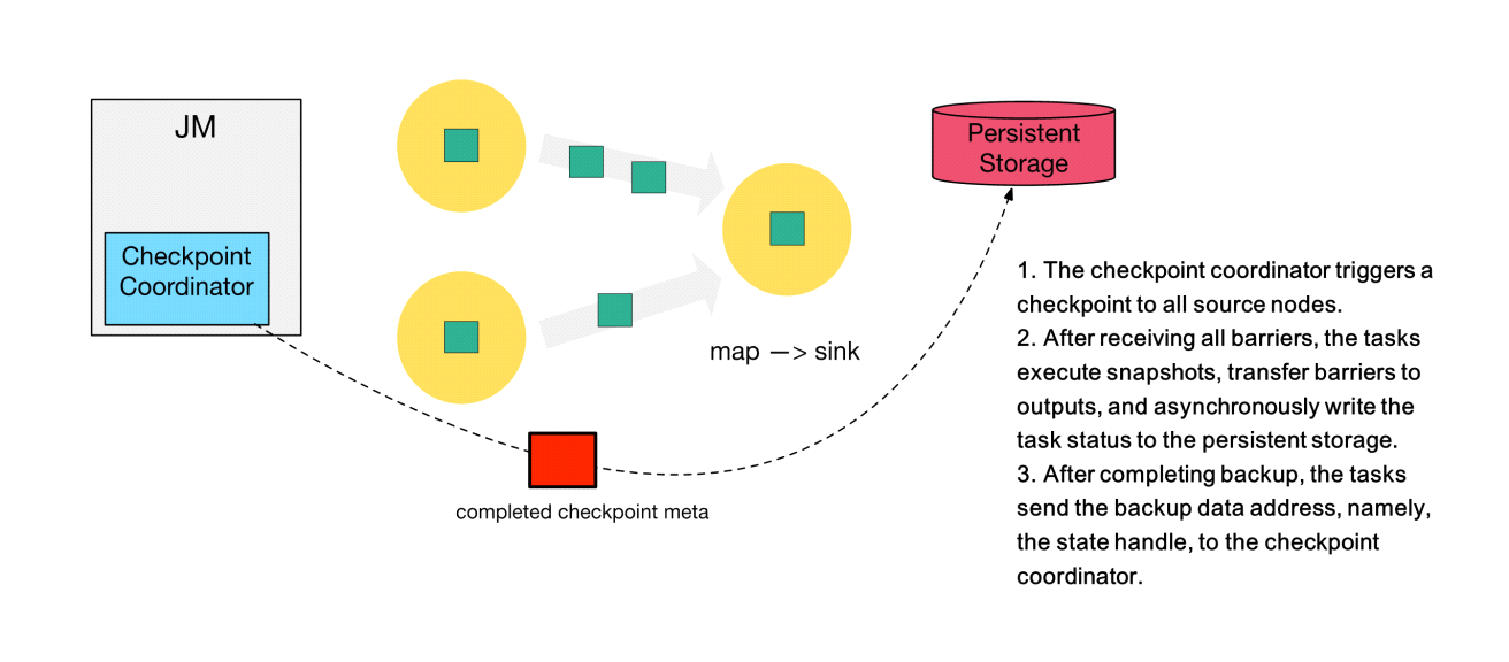

This section explains the checkpoint execution process step by step. As shown in the following figure, the checkpoint coordinator is on the left, a Flink job that consists of two source nodes and one sink node is in the middle, and persistent storage, which is provided by the Hadoop Distributed File System (HDFS) in most scenarios, is on the right.

Step 1) The checkpoint coordinator triggers a checkpoint to all source nodes.

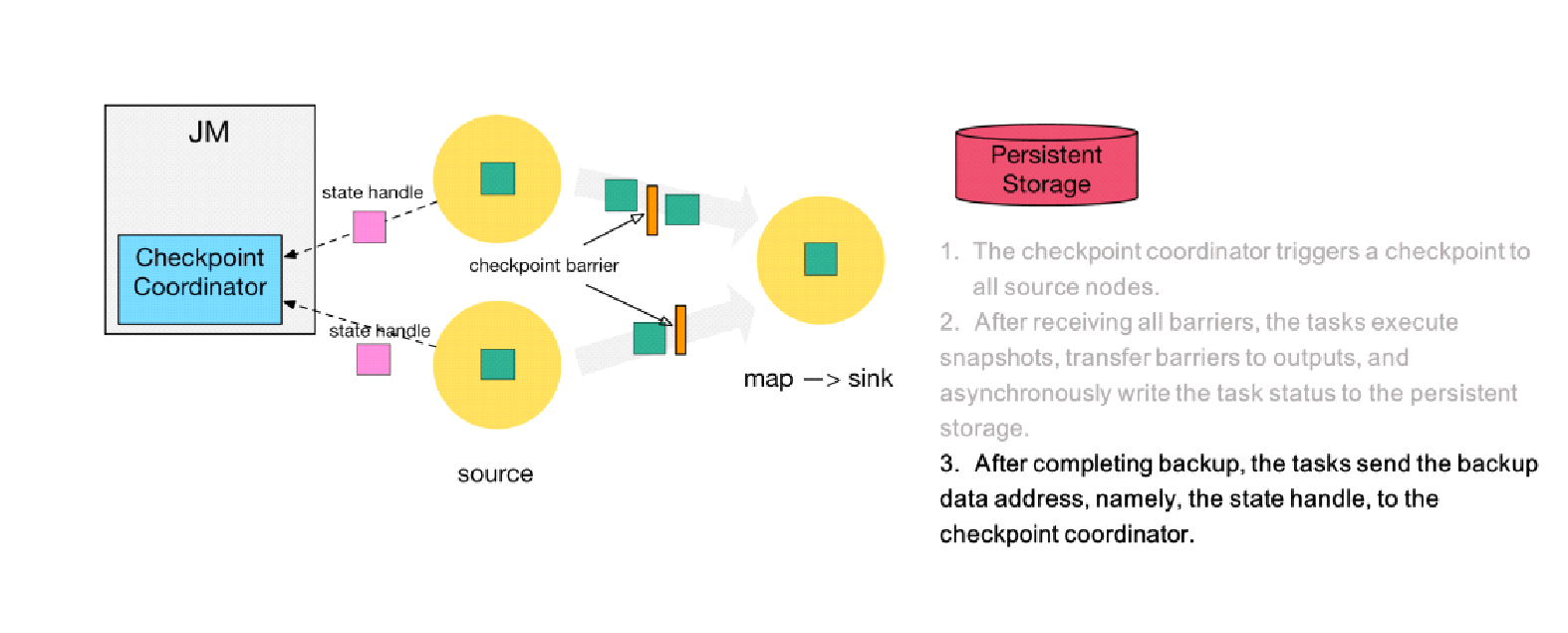

Step 2) The source nodes broadcast a barrier downstream. The barrier is at the core of the Chandy-Lamport distributed snapshot algorithm. The downstream tasks execute the checkpoint only after receiving the barriers of all inputs.

Step 3) After completing the state backup, each task sends the address of the backup data, namely the state handle, to the checkpoint coordinator.

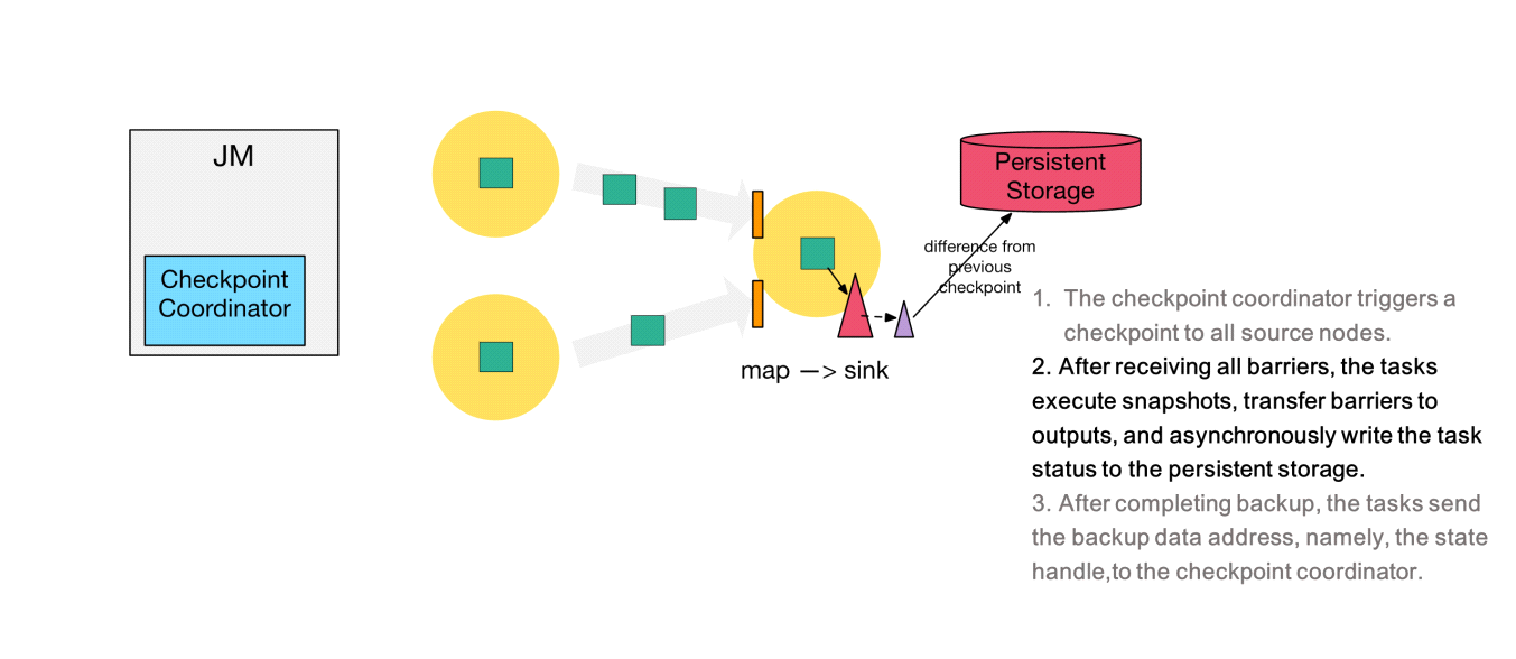

Step 4) After receiving the barriers of the two upstream inputs, the downstream sink node executes its local snapshot. The following figure shows the process of executing a RocksDB incremental checkpoint. First, RocksDB flushes all of its data to disk, as shown by the red triangle. Then, Flink persistently backs up the files that have not been uploaded yet, as shown by the purple triangle.

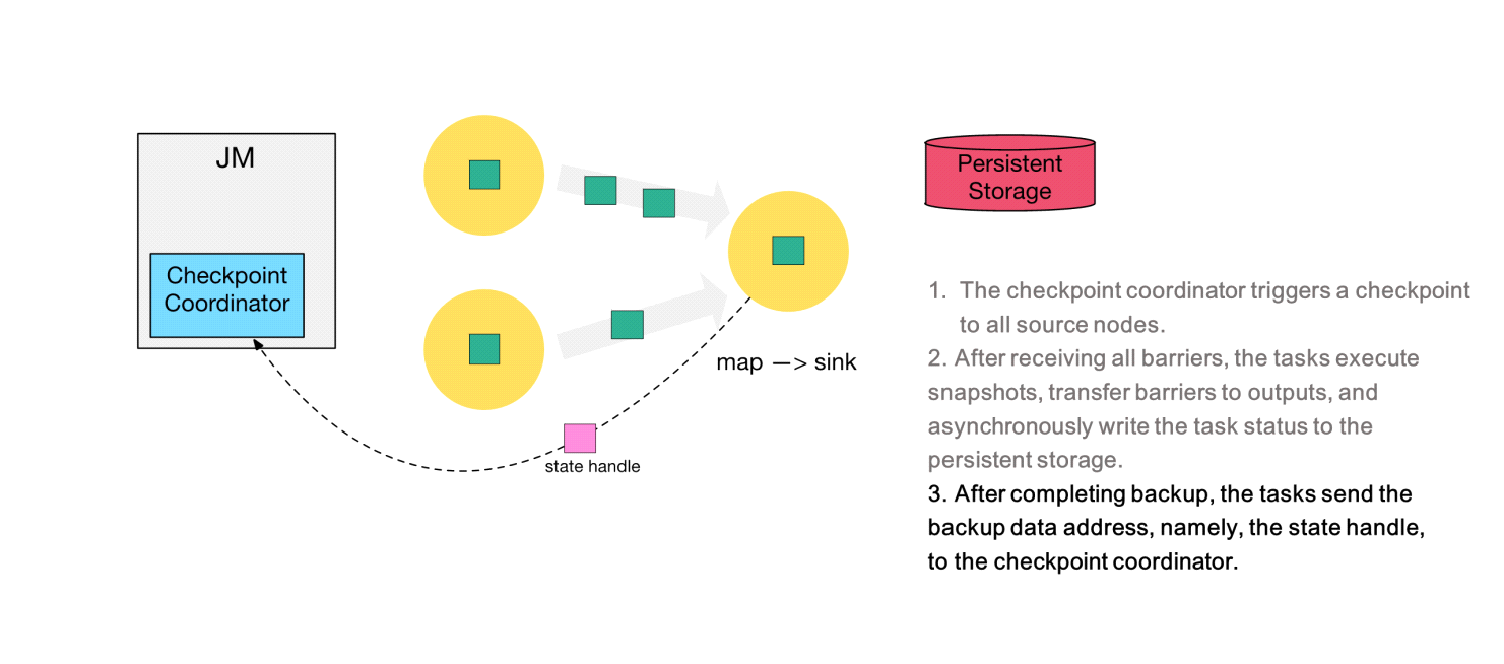

Step 5) After executing its checkpoint, the sink node returns the state handle to the checkpoint coordinator.

Step 6) After receiving the state handles of all tasks, the checkpoint coordinator determines that the checkpoint is globally completed and then backs up a checkpoint metafile to the persistent storage.
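The bookkeeping in Steps 3, 5, and 6 can be sketched as follows. This is a toy model rather than Flink's coordinator: the class `CheckpointAckSketch`, the task names, and the `hdfs:///...` paths are all hypothetical.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

class CheckpointAckSketch {
    private final long checkpointId;
    private final Set<String> notYetAcknowledged;
    // Sorted map so that the metadata text is deterministic.
    private final Map<String, String> stateHandles = new TreeMap<>();

    CheckpointAckSketch(long checkpointId, Set<String> tasks) {
        this.checkpointId = checkpointId;
        this.notYetAcknowledged = new HashSet<>(tasks);
    }

    // Records the state handle of one task.
    // Returns true only if this acknowledgment completes the checkpoint.
    boolean acknowledge(String task, String stateHandle) {
        if (!notYetAcknowledged.remove(task)) {
            return false; // unknown task or duplicate acknowledgment
        }
        stateHandles.put(task, stateHandle);
        return notYetAcknowledged.isEmpty();
    }

    boolean isComplete() {
        return notYetAcknowledged.isEmpty();
    }

    // Content of the metafile that would be written once all tasks have reported.
    String metadata() {
        if (!isComplete()) {
            throw new IllegalStateException("checkpoint " + checkpointId + " is still pending");
        }
        return "checkpoint " + checkpointId + ": " + stateHandles;
    }

    public static void main(String[] args) {
        CheckpointAckSketch cp =
                new CheckpointAckSketch(42L, Set.of("source-1", "source-2", "sink-1"));
        cp.acknowledge("source-1", "hdfs:///cp/42/source-1");
        cp.acknowledge("source-2", "hdfs:///cp/42/source-2");
        boolean done = cp.acknowledge("sink-1", "hdfs:///cp/42/sink-1");
        System.out.println(done + " " + cp.metadata());
    }
}
```

The checkpoint counts as globally completed only when the last outstanding task has reported its state handle. Until then, no metafile is produced.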

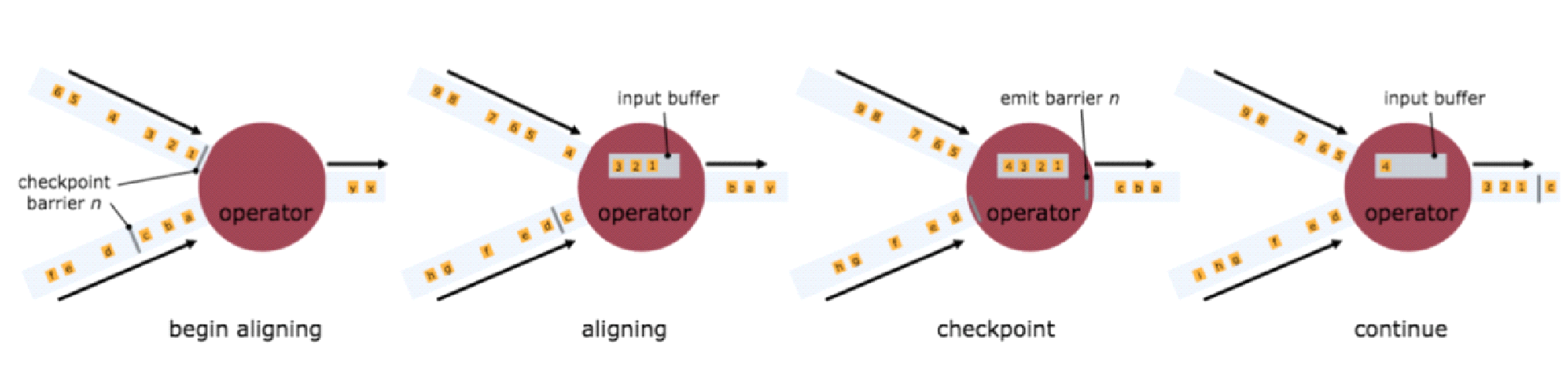

To implement EXACTLY ONCE semantics, Flink uses an input buffer to cache the data that arrives during alignment and processes it after alignment is completed. To implement AT LEAST ONCE semantics, Flink processes incoming data directly without caching it, so some data may be processed more than once after the job is restored. The following figure, taken from the official Flink documentation, illustrates checkpoint alignment.

The checkpoint mechanism only ensures EXACTLY ONCE for Flink computing. End-to-end EXACTLY ONCE requires the support of source and sink nodes.
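The difference between the two modes can be made concrete with a small simulation. This is a sketch under simplifying assumptions: one operator with two input channels whose state is a running sum, and a hypothetical class `BarrierAlignmentSketch` that is not part of Flink.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Queue;
import java.util.Set;

class BarrierAlignmentSketch {
    private final int numChannels;
    private final boolean exactlyOnce;
    private final Set<Integer> barrierSeen = new HashSet<>(); // channels whose barrier arrived
    private final Queue<Integer> buffered = new ArrayDeque<>(); // records held during alignment
    private final List<Long> snapshots = new ArrayList<>();
    private long sum = 0; // operator state

    BarrierAlignmentSketch(int numChannels, boolean exactlyOnce) {
        this.numChannels = numChannels;
        this.exactlyOnce = exactlyOnce;
    }

    void onRecord(int channel, int value) {
        if (exactlyOnce && barrierSeen.contains(channel)) {
            buffered.add(value); // belongs to the next checkpoint: hold it back
        } else {
            sum += value;        // process immediately
        }
    }

    void onBarrier(int channel) {
        barrierSeen.add(channel);
        if (barrierSeen.size() == numChannels) {
            snapshots.add(sum);  // barriers of all inputs received: take the snapshot
            barrierSeen.clear();
            while (!buffered.isEmpty()) {
                sum += buffered.poll(); // alignment done: process the cached records
            }
        }
    }

    List<Long> snapshots() {
        return snapshots;
    }

    long currentSum() {
        return sum;
    }

    // Replays the same event sequence in the given mode.
    static BarrierAlignmentSketch replay(boolean exactlyOnce) {
        BarrierAlignmentSketch op = new BarrierAlignmentSketch(2, exactlyOnce);
        op.onRecord(0, 1);
        op.onBarrier(0);    // channel 0 is ahead of channel 1
        op.onRecord(0, 10); // arrives after the barrier on channel 0
        op.onRecord(1, 2);
        op.onBarrier(1);
        return op;
    }

    public static void main(String[] args) {
        System.out.println("exactly once snapshot:  " + replay(true).snapshots());
        System.out.println("at least once snapshot: " + replay(false).snapshots());
    }
}
```

In exactly-once mode the snapshot holds 3, that is, only the records that arrived before the barriers. In at-least-once mode it holds 13, because the record 10 was processed before the snapshot even though it follows the barrier on its channel. If the job is restored from that snapshot and the source replays everything after the barrier, the record 10 is counted a second time.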

Savepoints and checkpoints are used to resume jobs. The following table lists the differences between them.

| Savepoint | Externalized Checkpoint |
|---|---|
| Savepoints are triggered manually by using commands and are created and deleted by the user. | After being completed, checkpoints are saved to the external persistent storage specified by the user. |
| Savepoints are stored in a standardized format, so the job version can be upgraded and its configuration can be changed. | When a job fails or is canceled, externally stored checkpoints are retained. |
| The user must provide the path of the savepoint used to restore a job state. | The user must provide the path of the checkpoint used to restore a job state. |
