Point of Interest (POI) data is commonly understood as location information. For general users, however, POI data contains not only basic information such as names and coordinates but also pictures, comments, business information, and other content, which is collectively referred to as in-depth information. As a direct reflection of the real world in the virtual world, POI information plays a vital role in users' travel decisions, and its richness, accuracy, and timeliness all matter. POIs also serve as the basis for many services offered by AMAP.

To enrich the in-depth information, we collect data through multiple channels. Each data access source is called a Content Provider (CP). In the beginning, there were only a few CPs, and each CP had its own application, independent storage, independent code, and a completely different technology stack.

However, as the number of accessed sources kept growing, this approach could not keep up with the demands of large-scale production, updates, operations and maintenance (O&M), and monitoring. It became a stumbling block to business iteration: more time and energy went into maintaining infrastructure than into iterating on the business. The following figures give an idea of the pace of growth.

A quarter has about 130 working days, but the number of newly accessed tasks exceeds 120. The total number of accessed tasks is now more than 100 times the number of R&D personnel, and about a billion data entries must be processed each day. Anticipating continued growth, the in-depth information team began to explore a platform-based architecture.

The idea of turning a system into a platform is clear, but there are many different choices in terms of specific design and implementation methods.

Most data access systems are designed with a relatively simple goal: obtain data and feed it into the business system. The remaining functions, such as data analysis and business processing, are handled by downstream systems that produce the actual business data. Such an access system is therefore stateless by design, and its understanding of the data is largely independent of the business.

However, given the particular nature of the AMAP in-depth information access service, we did not adopt this approach when building the platform and chose a more consolidated one instead: the access platform itself must fully understand the data. In addition to data access, the platform handles data parsing, dimension alignment, specification mapping, lifecycle maintenance, and other related concerns, directly integrating all the control logic involved in in-depth information processing.

Additionally, unlike common access systems, this platform has both R&D engineers and product managers (PMs) as its primary users. After learning a small set of technical constraints, PMs should be able to complete the whole process of data access, analysis, and debugging on their own. This places high demands on the platform's real-time "what you see is what you get" (WYSIWYG) design and debugging capabilities. The platform design had to overcome the following difficulties:

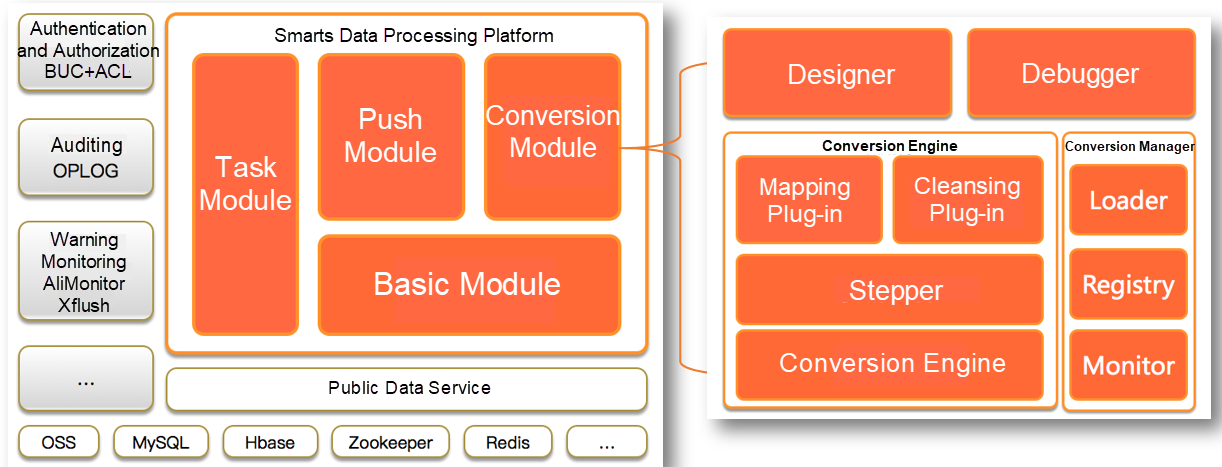

Finally, we designed the platform architecture as shown in the following figure. This architecture integrates four modules that work together to complete in-depth information access.

The platform is divided into the following modules:

The conversion module consists of four parts: the conversion engine, conversion manager, designer, and debugger. It supports all custom business rules in order to reduce the design complexity of the system. As the module with the highest degree of business freedom, the conversion module uses the same underlying layer to support pre-conversion, conversion, and data analysis for upper-layer businesses. It is a core component that allows the system to support various complex business scenarios. The conversion engine must support the most complex and variable parts of the configuration and debugging functions, such as data acquisition, dimension alignment, specification mapping, and cleaning.

The data acquisition capability must support various methods such as HTTP, OSS, MaxCompute, MTOP, MetaQ, and Push services, as well as the combination and stacking of these methods. For example, the system must be able to download a file from OSS, parse the rows in the file, and then call the HTTP service based on the parsed data.
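The OSS, then parse, then HTTP example above can be pictured as a chain of generators in which each acquisition step consumes the output of the previous one. This is a minimal sketch under assumptions: `fetch` and `call` are hypothetical stand-ins for real OSS and HTTP clients, and the `poi_id,name` line format is invented for illustration.

```python
from typing import Callable, Dict, Iterable, Iterator

Row = Dict[str, str]


def oss_file_source(fetch: Callable[[str], str], key: str) -> Iterator[str]:
    """Step 1: download one file. `fetch` stands in for a real OSS client (hypothetical)."""
    yield fetch(key)


def parse_lines(files: Iterable[str]) -> Iterator[Row]:
    """Step 2: split each downloaded file into rows in an assumed 'poi_id,name' format."""
    for content in files:
        for line in content.splitlines():
            if line.strip():
                poi_id, name = line.split(",", 1)
                yield {"poi_id": poi_id, "name": name}


def http_enrich(rows: Iterable[Row], call: Callable[[str], Row]) -> Iterator[Row]:
    """Step 3: call an HTTP service per parsed row. `call` stands in for an HTTP client."""
    for row in rows:
        yield {**row, **call(row["poi_id"])}


if __name__ == "__main__":
    fake_oss = {"cp/poi.csv": "B001,Cafe\nB002,Museum\n"}
    fake_http = lambda poi_id: {"rating": "4.5" if poi_id == "B001" else "4.8"}
    stacked = http_enrich(parse_lines(oss_file_source(fake_oss.__getitem__, "cp/poi.csv")), fake_http)
    for row in stacked:
        print(row)
```

Because each step only sees an iterable, another acquisition method can be stacked by wrapping one more generator around the chain.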

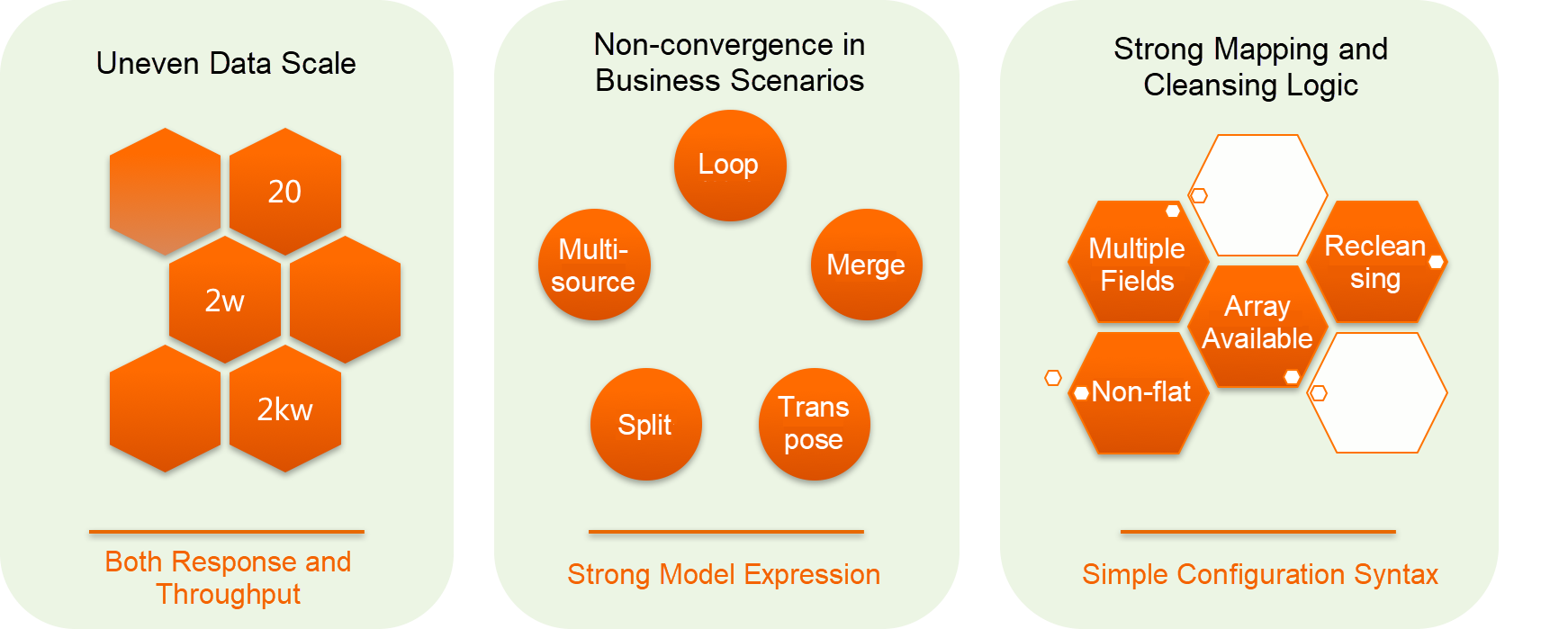

To support nearly unlimited service stacking and a WYSIWYG design experience, we studied various solutions inside and outside the Alibaba economy, such as the Blink and Stream platforms, but found no component that could directly meet our business needs. The main problems were:

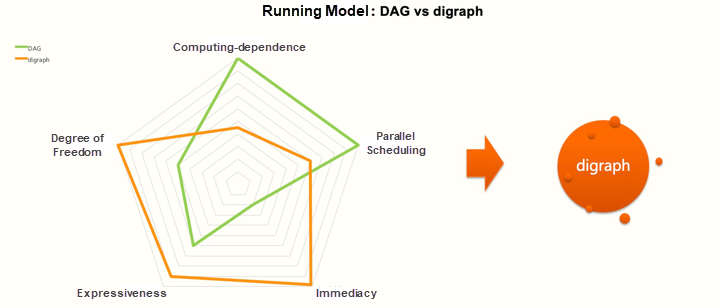

Based on this analysis, we decided not to perform secondary development on these platforms and instead built a custom engine for our own business scenarios. Although the existing engines could not be used directly, the step-by-step organization and driving mode of PDI (Pentaho Data Integration) suited our business scenarios well. For reasons of flexibility, expressiveness, and intuitiveness, the conversion engine does not use the directed acyclic graph (DAG) model, which makes dependency computation and parallel scheduling comparatively easy, and instead organizes steps as a directed graph (digraph), similar to PDI.

To let PMs describe their business scenarios as directly as possible, we adopted a PDI-style conversion engine design that uses the designed digraph itself to drive step execution. The design view is therefore consistent with the runtime logic, and the engine does not need complex components such as translators, optimizers, or executors.
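A minimal sketch of "the designed graph is the execution plan": rows are pushed along the hops exactly as drawn, with no translation or optimization pass. The `StepGraph` class, the conditional hops, and the pagination loop are hypothetical; the cycle is one assumed example of a flow a digraph can express but a DAG cannot. The sketch is single-threaded for clarity.

```python
from collections import defaultdict, deque
from typing import Callable, Dict, Iterable, List, Tuple

Row = dict
StepFn = Callable[[Row], Iterable[Row]]
Hop = Tuple[str, Callable[[Row], bool]]


class StepGraph:
    """Executes the designed step graph as-is (hypothetical sketch)."""

    def __init__(self) -> None:
        self.steps: Dict[str, StepFn] = {}
        self.hops: Dict[str, List[Hop]] = defaultdict(list)

    def add_step(self, name: str, fn: StepFn) -> "StepGraph":
        self.steps[name] = fn
        return self

    def add_hop(self, src: str, dst: str, when: Callable[[Row], bool] = lambda row: True) -> "StepGraph":
        # Hops may form cycles; the condition decides which rows follow this hop.
        self.hops[src].append((dst, when))
        return self

    def run(self, start: str, rows: Iterable[Row]) -> Dict[str, List[Row]]:
        """Push rows along the hops; rows emitted by steps without outgoing hops are collected."""
        results: Dict[str, List[Row]] = defaultdict(list)
        pending = deque((start, row) for row in rows)
        while pending:
            name, row = pending.popleft()
            for out in self.steps[name](row):
                hops = self.hops.get(name, [])
                if not hops:
                    results[name].append(out)
                for dst, when in hops:
                    if when(out):
                        pending.append((dst, out))
        return dict(results)


if __name__ == "__main__":
    PAGES = {1: ["a", "b"], 2: ["c"]}  # fake paginated API

    def fetch_page(row: Row) -> Iterable[Row]:
        for item in PAGES[row["page"]]:
            yield {"item": item}
        if row["page"] + 1 in PAGES:
            yield {"page": row["page"] + 1}  # loops back into this same step

    graph = (StepGraph()
             .add_step("fetch_page", fetch_page)
             .add_step("collect", lambda row: [row])
             .add_hop("fetch_page", "fetch_page", when=lambda r: "page" in r)
             .add_hop("fetch_page", "collect", when=lambda r: "item" in r))
    print(graph.run("fetch_page", [{"page": 1}]))
```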

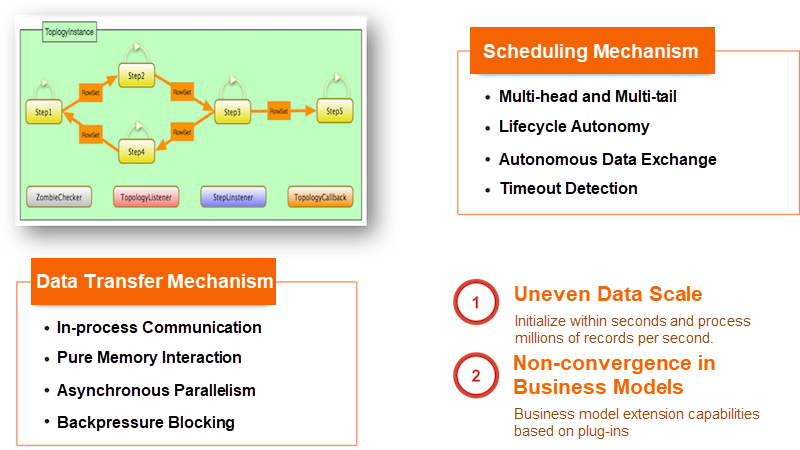

For execution efficiency and safety, data is never passed between steps across process boundaries: all data exchange happens in memory, and steps run asynchronously and in parallel. A backpressure mechanism propagates pressure from downstream steps back to upstream steps to balance differences in processing speed between steps.
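One common way to get in-process, asynchronous steps with backpressure is to link them with bounded queues, so that a full queue blocks the upstream step. The following is a minimal sketch of that technique, not the platform's engine: `run_pipeline` and `capacity` are hypothetical, and Python threads stand in for the engine's workers (under CPython's GIL this demonstrates flow control rather than CPU parallelism).

```python
import queue
import threading
from typing import Any, Callable, Iterable, List

_END = object()  # sentinel that marks the end of the stream


def run_pipeline(source: Iterable[Any], stages: List[Callable[[Any], Any]], capacity: int = 4) -> List[Any]:
    """Run each step in its own thread, linked by bounded in-memory queues.

    A full queue blocks the upstream put(), so a slow step throttles the steps
    before it (backpressure) instead of letting unprocessed rows pile up.
    Error handling is omitted: a step that raises would stall the pipeline.
    """
    links = [queue.Queue(maxsize=capacity) for _ in range(len(stages) + 1)]

    def produce() -> None:
        for item in source:
            links[0].put(item)  # blocks while the first step is behind
        links[0].put(_END)

    def work(fn: Callable[[Any], Any], inbox: queue.Queue, outbox: queue.Queue) -> None:
        while True:
            item = inbox.get()
            if item is _END:
                outbox.put(_END)  # propagate end-of-stream downstream
                return
            outbox.put(fn(item))  # blocks while the next step is behind

    threads = [threading.Thread(target=produce, daemon=True)]
    threads += [threading.Thread(target=work, args=(fn, links[i], links[i + 1]), daemon=True)
                for i, fn in enumerate(stages)]
    for t in threads:
        t.start()

    results = []
    while (item := links[-1].get()) is not _END:
        results.append(item)
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(run_pipeline(range(5), [lambda x: x + 1, lambda x: x * 2], capacity=2))
```

With one thread per step and FIFO queues, output order matches input order; at most `capacity` rows wait between any two steps.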

Dimension alignment is the process of integrating data from different sources and dimensions into a single dimension according to given business rules. For example, in-depth information usually needs to be aligned to the POI dimension. In theory, the engine's ability to express and stack services intuitively already covers dimension alignment. However, we also had to meet the real-time debugging requirements of data PMs: however complex a conversion is, it must be possible to debug it at any time and visually display the results so that data PMs can quickly analyze and troubleshoot problems.

Dimension alignment is common in extract, transform, load (ETL) processing, but in conventional businesses it mainly means joining two-dimensional data tables. Solutions such as PDI therefore generally handle it with a combination of SQL and temporary tables, with inputs and outputs bound at design time, so there is no essential difference between debugging and running. Alternatively, the configuration can be modified during debugging to force output into temporary storage. Either way, the conversion engine depends on a specific external environment, such as a particular database table, which causes data cross-pollution between debugging and running.

Our data is not naturally two-dimensional and cannot simply be placed in tables, so this approach was not feasible. In our approach, data processing depends only on the memory and disk of the local host. For example, the data expansion required by a flatten operation is done entirely in memory without intermediate storage, and local disks are used to assist full-data join operations such as merge join. No special external environment is needed for any of these functions. The resulting conversion engine has the following capabilities:

1) Pure Engine

2) Data Delivery

The output does not need to be specified when configuring a conversion; the data output step is mounted dynamically.

3) Multi-scenario Manager

In task scenarios, output is written to the database; in debugging scenarios, it is returned to the frontend page through WebSocket.
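The in-memory flatten and the disk-assisted merge join mentioned before this list can be sketched as follows. This is an illustration of the general techniques under assumptions, not the engine's implementation: the function names, the JSON-lines spill format, and `chunk_size` are hypothetical. `external_sort` writes sorted runs to local temporary files and merges them, so a full data set does not have to fit in memory before the join.

```python
import heapq
import json
import tempfile
from itertools import islice
from typing import Any, Dict, Iterable, Iterator

Row = Dict[str, Any]


def flatten(rows: Iterable[Row], field: str) -> Iterator[Row]:
    """Expand a list-valued field into one row per element, purely in memory."""
    for row in rows:
        for value in row.get(field) or []:
            yield {**row, field: value}


def external_sort(rows: Iterable[Row], key: str, chunk_size: int = 100_000) -> Iterator[Row]:
    """Sort by `key` using sorted runs spilled to local temp files, then a k-way merge."""
    runs = []
    it = iter(rows)
    while True:
        chunk = sorted(islice(it, chunk_size), key=lambda r: r[key])
        if not chunk:
            break
        run = tempfile.TemporaryFile(mode="w+")  # deleted automatically when closed
        run.writelines(json.dumps(r) + "\n" for r in chunk)
        run.seek(0)
        runs.append(run)
    try:
        readers = [(json.loads(line) for line in run) for run in runs]
        yield from heapq.merge(*readers, key=lambda r: r[key])
    finally:
        for run in runs:
            run.close()


def merge_join(left: Iterable[Row], right: Iterable[Row], key: str) -> Iterator[Row]:
    """Inner join of two inputs that are both sorted by `key`."""
    left_it, right_it = iter(left), iter(right)
    lrow, rrow = next(left_it, None), next(right_it, None)
    while lrow is not None and rrow is not None:
        if lrow[key] < rrow[key]:
            lrow = next(left_it, None)
        elif lrow[key] > rrow[key]:
            rrow = next(right_it, None)
        else:
            k = lrow[key]
            left_group, right_group = [], []
            while lrow is not None and lrow[key] == k:
                left_group.append(lrow)
                lrow = next(left_it, None)
            while rrow is not None and rrow[key] == k:
                right_group.append(rrow)
                rrow = next(right_it, None)
            for a in left_group:  # emit every pairing within the matching key group
                for b in right_group:
                    yield {**a, **b}
```

For example, aligning flattened review tags to the POI dimension would be `merge_join(external_sort(pois, "poi_id"), external_sort(flatten(reviews, "tag"), "poi_id"), "poi_id")`.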

Because of this engine design and the requirements of debugging scenarios, output destinations do not need to be specified when a conversion is designed. Instead, the scenario manager mounts them dynamically at runtime, depending on whether the conversion runs in a debugging scenario or a normal running scenario, which avoids data cross-pollution. The platform supports WYSIWYG debugging and analysis at nearly every level, covering pre-conversion, conversion, cleaning, pushing, and almost all other processes.
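A minimal sketch of an output step that is mounted at runtime rather than named in the conversion configuration. `ScenarioManager`, `mount_output`, `run_conversion`, and the `"task"`/`"debug"` scenario names are hypothetical; the database writer and WebSocket sender are passed in as plain callables so the same conversion can run unchanged in either scenario.

```python
from typing import Callable, Dict, Iterable, List, Optional

Row = Dict[str, object]
Sink = Callable[[Row], None]
Step = Callable[[Row], Optional[Row]]


class ScenarioManager:
    """Chooses the output step at runtime; conversions never name one (hypothetical)."""

    def __init__(self, write_db: Sink, send_ws: Callable[[str, Row], None]) -> None:
        self._write_db = write_db
        self._send_ws = send_ws

    def mount_output(self, scenario: str, session_id: Optional[str] = None) -> Sink:
        if scenario == "task":
            return self._write_db  # normal runs persist to the database
        if scenario == "debug":
            if session_id is None:
                raise ValueError("debug scenario needs a WebSocket session id")
            return lambda row: self._send_ws(session_id, row)  # debug runs go to the page
        raise ValueError(f"unknown scenario: {scenario}")


def run_conversion(steps: List[Step], rows: Iterable[Row], output: Sink) -> None:
    """Apply the configured steps; the caller supplies the output sink."""
    for row in rows:
        current: Optional[Row] = row
        for step in steps:
            current = step(current)
            if current is None:  # a step filtered the row out
                break
        if current is not None:
            output(current)
```

Since debug output never touches the database writer, a debugging session cannot pollute production data.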

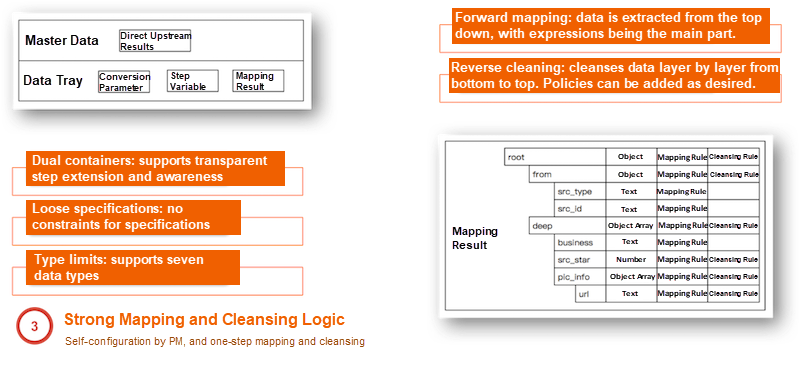

To support loose schema mapping and transparent extension, RowSchema is designed with an innovative dual-container structure.
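The article does not describe the two containers of RowSchema, so the following is only an assumed interpretation: one container holds the fields a conversion declares, in fixed positions, and the other holds any undeclared fields in a map, so that new fields from a CP pass through transparently without a schema change. `RowSchema` is reused as a name here, while `Row`, `fixed`, and `extra` are hypothetical.

```python
from typing import Any, Dict, List, Optional


class RowSchema:
    """Assumed schema object: the names of fields declared up front."""

    def __init__(self, declared: List[str]) -> None:
        self.declared = list(declared)
        self.index = {name: i for i, name in enumerate(self.declared)}


class Row:
    """Two containers (assumption): fixed slots for declared fields, a dict for the rest."""

    def __init__(self, schema: RowSchema) -> None:
        self.schema = schema
        self.fixed: List[Any] = [None] * len(schema.declared)
        self.extra: Dict[str, Any] = {}  # undeclared fields are carried along untouched

    def set(self, name: str, value: Any) -> None:
        slot = self.schema.index.get(name)
        if slot is None:
            self.extra[name] = value
        else:
            self.fixed[slot] = value

    def get(self, name: str, default: Optional[Any] = None) -> Any:
        slot = self.schema.index.get(name)
        value = self.extra.get(name) if slot is None else self.fixed[slot]
        return default if value is None else value

    def to_dict(self) -> Dict[str, Any]:
        return {**dict(zip(self.schema.declared, self.fixed)), **self.extra}
```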

Through mapping types, mapping rules, and cleaning parameters, the mapping step supports the integration of mapping and cleaning.

The conversion module processes data at three levels: step, mapping, and field cleaning. When using the conversion module, a PM simply needs to drag and drop the corresponding components on the web interface and enter some business parameters to complete the configuration.
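To make the combination of mapping types, mapping rules, and cleaning parameters concrete, here is a minimal sketch in which each field rule chooses a mapping type and lists the cleaning plug-ins, with their parameters, to apply in order. The rule format, the three mapping types, and the cleaner names are all hypothetical; they only illustrate how mapping and field cleaning can run in one pass.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

Cleaner = Callable[[Any, Dict[str, Any]], Any]

# Hypothetical cleaning plug-ins; each receives the value and its cleaning parameters.
CLEANERS: Dict[str, Cleaner] = {
    "strip": lambda v, p: v.strip() if isinstance(v, str) else v,
    "max_len": lambda v, p: v[: p["n"]] if isinstance(v, str) else v,
    "empty_to_none": lambda v, p: None if v in ("", []) else v,
}


def resolve(rule: Dict[str, Any], row: Dict[str, Any]) -> Any:
    """Mapping types: copy a source field, emit a constant, or translate via an enum table."""
    kind = rule["type"]
    if kind == "direct":
        return row.get(rule["source"])
    if kind == "const":
        return rule["value"]
    if kind == "enum":
        return rule["table"].get(row.get(rule["source"]), rule.get("default"))
    raise ValueError(f"unknown mapping type: {kind}")


def map_row(row: Dict[str, Any], rules: List[Dict[str, Any]]) -> Optional[Dict[str, Any]]:
    """Map one source row to the target specification, cleaning each field as it is mapped."""
    out: Dict[str, Any] = {}
    for rule in rules:
        value = resolve(rule, row)
        cleaning: List[Tuple[str, Dict[str, Any]]] = rule.get("cleaning", [])
        for name, params in cleaning:  # cleaners run in the configured order
            value = CLEANERS[name](value, params)
        if value is None and rule.get("required", False):
            return None  # a required field is missing: drop the whole row
        out[rule["target"]] = value
    return out
```

In a web designer, each rule would correspond to one dragged-in mapping component plus the business parameters a PM enters.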

To avoid black-box problems, the system design differs from the Stream platform: system components are backward compatible, and the step, mapping, and cleaning plug-ins do not involve the concept of versions. Custom business logic that the system does not support can be implemented as Groovy scripts in each system module. However, uploading binary packages is not allowed; the code must be expressed directly in the configuration to avoid later maintenance problems.

Over the data lifecycle, the system triggers add, update, and delete operations at the appropriate times. From the perspective of data access, deletion is a complex process; in business terms, it is called "taking data offline". To understand data deletion, we must first understand the in-depth information task model.

Currently, we support two models: batch processing and stream processing. In the batch processing model, the batch number is incremented each time a batch task, such as an ordinary scheduled task, is executed. In the stream processing model, a task runs continuously once started; data is generally received passively through channels such as MetaQ and Push, and there is no concept of batches.
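A minimal sketch of the batch model, assuming (hypothetically) that every record is stamped with the batch number of the run that last wrote it, that a retry reuses the number of the run it repeats instead of incrementing it, and that only a completed run may expire older records. `BatchTask` and its methods are invented for illustration; stream tasks would not use batch numbers at all.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class BatchTask:
    """Hypothetical batch-model task that stamps each record with a batch number."""
    current_batch: int = 0
    last_completed: int = 0
    store: Dict[str, dict] = field(default_factory=dict)

    def begin_run(self, retry: bool = False) -> int:
        # A normal scheduled run increments the batch number; a retry reuses
        # the number of the run it repeats (assumption).
        if not retry or self.current_batch == 0:
            self.current_batch += 1
        return self.current_batch

    def upsert(self, key: str, data: dict) -> None:
        # Every add or update records the batch that last touched the record.
        self.store[key] = {**data, "_batch": self.current_batch}

    def finish_run(self) -> None:
        self.last_completed = self.current_batch

    def stale_keys(self) -> List[str]:
        # Batch expiration candidates: records the last completed batch did not refresh.
        return sorted(k for k, v in self.store.items() if v["_batch"] < self.last_completed)
```

If a failed run's retry incremented the batch number instead, records written only by the failed attempt would look stale and could be taken offline by mistake.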

Three policies are supported to meet business needs: batch expiration, time expiration, and conditional offline, and these policies can be stacked. Each policy raised its own design issues. For batch expiration, we had to prevent batch numbers from being shared or incremented incorrectly during full scans of historical records and in retry scenarios. For time expiration, we had to avoid the rapid growth in the number of timers that would result from binding a timer to every record.
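A minimal sketch of the three policies as composable predicates, plus one way to avoid a timer per record: keep every deadline in a single min-heap and sweep it periodically. The names, the record fields `_batch` and `_updated_at`, and the "offline if any stacked policy matches" rule are assumptions for illustration, not the platform's design.

```python
import heapq
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]
Context = Dict[str, Any]
Policy = Callable[[Record, Context], bool]


def batch_expiration() -> Policy:
    # Offline if the last completed batch did not refresh the record.
    return lambda rec, ctx: rec["_batch"] < ctx["last_completed_batch"]


def time_expiration(ttl_seconds: float) -> Policy:
    # Offline if the record has not been updated within the TTL.
    return lambda rec, ctx: ctx["now"] - rec["_updated_at"] > ttl_seconds


def conditional_offline(predicate: Callable[[Record], bool]) -> Policy:
    # Offline if a business condition holds, e.g. the CP marks the POI as closed.
    return lambda rec, ctx: predicate(rec)


def stacked(*policies: Policy) -> Policy:
    """Stack policies: a record goes offline if any of them says so (assumption)."""
    return lambda rec, ctx: any(p(rec, ctx) for p in policies)


class ExpiryIndex:
    """A single min-heap of deadlines, swept periodically, instead of one timer per record."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, str]] = []
        self._deadline: Dict[str, float] = {}

    def touch(self, key: str, expires_at: float) -> None:
        # Re-touching a key leaves its old heap entry behind; pop_expired skips it.
        self._deadline[key] = expires_at
        heapq.heappush(self._heap, (expires_at, key))

    def pop_expired(self, now: float) -> List[str]:
        expired: List[str] = []
        while self._heap and self._heap[0][0] <= now:
            at, key = heapq.heappop(self._heap)
            if self._deadline.get(key) == at:  # ignore entries superseded by a later touch
                del self._deadline[key]
                expired.append(key)
        return expired
```

A sweep costs O(log n) per expired entry, and the number of timers stays at one regardless of how many records exist.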

Lifecycle management involves the design of many task modules, such as the task scheduling model, the multi-server sharding mechanism, the task alerting and lockdown logic, and the storage tables. Because of the integration requirements of the in-depth information business, the access platform does not use open-source task scheduling frameworks or existing frameworks from the Alibaba economy; it uses a customized task scheduling framework instead.
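The article does not describe its multi-server sharding mechanism. One simple scheme, assumed here purely for illustration, assigns each task to a server by a stable hash of its ID, so every server computes the same plan without coordination. `crc32` is used because Python's built-in `hash()` is randomized per process for strings; `owner` and `shard` are hypothetical names.

```python
import zlib
from typing import Dict, List


def owner(task_id: str, servers: List[str]) -> str:
    """Assign a task to one server using a stable hash, so all servers agree."""
    ordered = sorted(servers)  # independent of the order servers were listed in
    return ordered[zlib.crc32(task_id.encode("utf-8")) % len(ordered)]


def shard(task_ids: List[str], servers: List[str]) -> Dict[str, List[str]]:
    """Group tasks by owning server; each server then schedules only its own list."""
    plan: Dict[str, List[str]] = {s: [] for s in servers}
    for task_id in task_ids:
        plan[owner(task_id, servers)].append(task_id)
    return plan
```

Plain modulo hashing reassigns most tasks whenever a server joins or leaves; consistent hashing or a lease table would reduce that churn at the cost of more machinery.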

The in-depth information access platform has witnessed the rapid development of the AMAP in-depth information access business over the past few years. With an extremely low manpower investment, AMAP has explored various vertical fields to provide the underlying data support to expand its popular lifestyle service applications. In the future, we will continue to develop and evolve end-to-end debugging, support for scenario-based operation refinement, non-standard data processing, and free service orchestration platforms.
