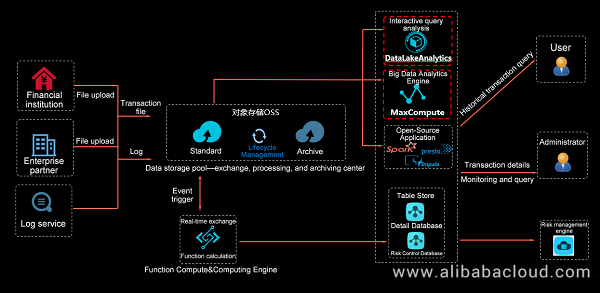

Multiple methods are available for querying and analyzing data stored in Alibaba Cloud Object Storage Service (OSS). For example, you can use Alibaba Cloud serverless query and analysis services such as the big data computing service MaxCompute and Data Lake Analytics (DLA). You can also create Spark, Presto, or Impala applications to analyze data on OSS.

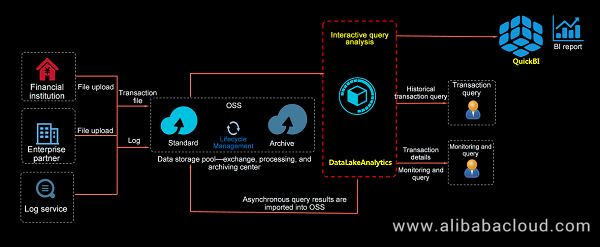

This document describes a test for using OSS, DLA, and Quick BI to implement data storage, serverless ad hoc queries, and visualized BI reports.

The overall process of data processing, including data storage, interactive query and analysis, and BI report export, is tested by using OSS, DLA, and Quick BI. This test applies to scenarios such as queries and analysis on logs and transaction records and report export.

OSS:

Go to the Object Storage Service page and click Buy Now.

DLA:

Apply for a free trial subscription to DLA.

Note: DLA is in open beta testing (OBT) now. To use DLA and its console, you must apply for the OBT qualification first. DLA can be used for query and analysis execution against specified OSS data files, including CSV, JSON, Parquet, ORC, Avro, and RCFile files.

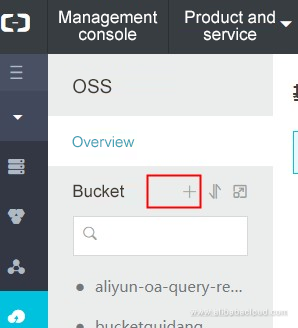

Log on to the OSS console and create a directory.

Create an OSS test bucket. In the Region drop-down list, select your preferred region. We will be using China East 1 in this example.

Note: The bucket name must be unique among all existing buckets in Alibaba Cloud OSS. Change your bucket name if the system prompts that it already exists.

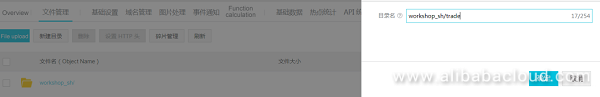

We recommend that you create the workshop_sh/trade and workshop_sh/user directories.

http://testdatasample.oss-cn-hangzhou.aliyuncs.com/workshop_sh/workshop_sh.zip

Upload the transaction record and account information files to the trade and user directories, respectively

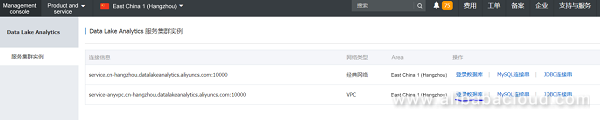

Click here and enter your username and password to log on to the DLA console.

Check your username and password in the DLA service activation notification email in your http://www.alibabacloud.com website mailbox.

Enter the following CREATE SCHEMA statements and click "Synchronous Execution."

CREATE SCHEMA my_schema_name WITH DBPROPERTIES (

CATALOG = 'oss',

LOCATION = 'oss://bucket name/test data directory/'

);Note:

In the "Database" drop-down list, select the created schema.

Enter the following CREATE TABLE statements and click "Synchronous Execution." The LOCATION directory is oss://bucket name/test data directory/.

Create a transaction record table.

Note: The LOCATION directory is in the 'oss://bucket name/transaction record table directory/' format.

Replace the LOCATION directory with 'oss://your OSS bucket name/workshop_sh/user/' in the test.

For example, enter oss://workshopsh20180608100/workshop_sh/user/.

CREATE EXTERNAL TABLE tradelist_csv (

t_userid STRING COMMENT 'user ID',

t_dealdate STRING COMMENT 'application time',

t_businflag STRING COMMENT 'service code',

t_cdate STRING COMMENT 'confirmation date',

t_date STRING COMMENT 'application date',

t_serialno STRING COMMENT'application No.',

t_agencyno STRING COMMENT'agency No.',

t_netno STRING COMMENT'network No.',

t_fundacco STRING COMMENT'fund account',

t_tradeacco STRING COMMENT'transaction account',

t_fundcode STRING COMMENT'fund code',

t_sharetype STRING COMMENT'share type',

t_confirmbalance DOUBLE COMMENT'confirmed balance',

t_tradefare DOUBLE COMMENT'transaction fee',

t_backfare DOUBLE COMMENT'later payment fee',

t_otherfare1 DOUBLE COMMENT'other fees 1',

t_remark STRING COMMENT'remarks'

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

STORED AS TEXTFIlE

LOCATION 'oss://testdatasample/workshop_sh/trade/';Create an account information table.

Note: The LOCATION directory is in the 'oss://bucket name/account information table directory/' format.

Replace the LOCATION directory with 'oss://your OSS bucket name/workshop_sh/user/' in the test.

For example, enter oss://workshopsh20180608100/workshop_sh/user/.

CREATE EXTERNAL TABLE userinfo (

u_userid STRING COMMENT 'user ID',

u_accountdate STRING COMMENT 'account activation date',

u_gender STRING COMMENT 'gender',

u_age INT COMMENT 'age',

u_risk_tolerance INT COMMENT 'risk tolerance level within the 1–10 range (10: highest level)',

u_city STRING COMMENT'city',

u_job STRING COMMENT'job category (A to K)',

u_income DOUBLE COMMENT'annual income (CNY10,000)'

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

STORED AS TEXTFIlE

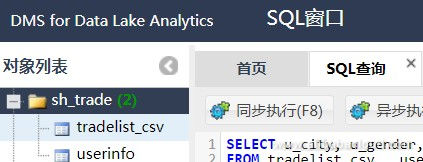

LOCATION 'oss://testdatasample/workshop_sh/user/';After creating the tables, refresh the page. The two tables are displayed under the schema created for the test.

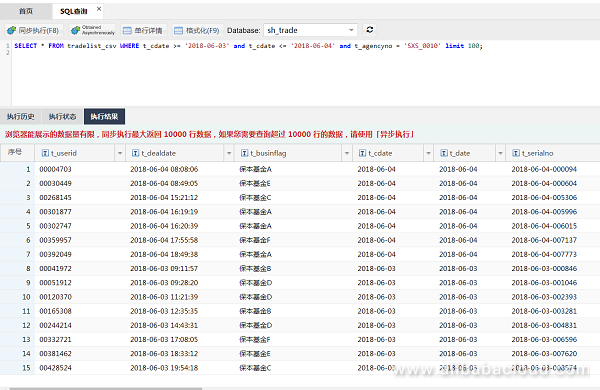

SELECT * FROM tradelist_csv

WHERE t_cdate >= '2018-06-03' and t_cdate <= '2018-06-04' and t_agencyno = 'SXS_0010'

limit 100;Execution result:

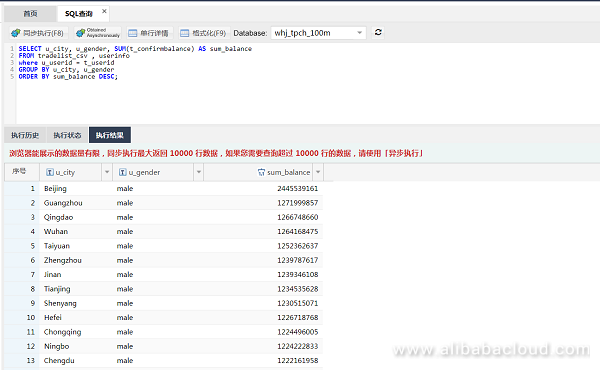

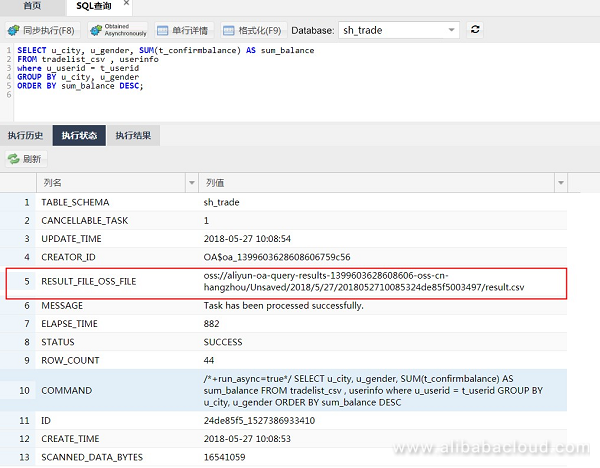

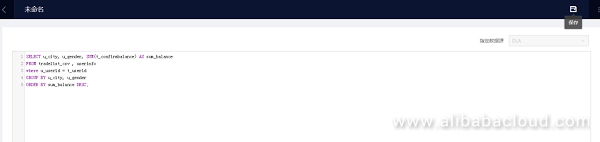

SELECT u_city, u_gender, SUM(t_confirmbalance) AS sum_balance

FROM tradelist_csv , userinfo

where u_userid = t_userid

GROUP BY u_city, u_gender

ORDER BY sum_balance DESC;

Export the asynchronous execution result to OSS in the CSV format.

Click Execution Status and check the asynchronous query task status

The execution status can be "RUNNING", "SUCCESS", or "FAILURE."

Click "Update." When "SUCCESS" is displayed in "STATUS", the OSS directory for storing the query result is displayed.

View the query result file in OSS

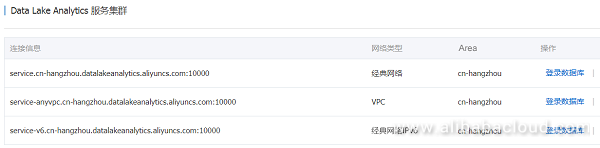

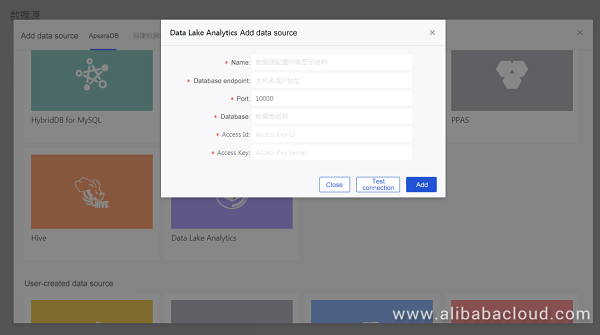

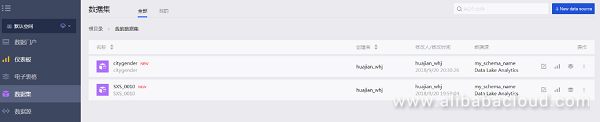

Only Quick BI Professional supports connections to DLA data sources.

You can check the values of "Database Endpoint" and "Port" on the DLA console.

Set "Database" to the schema name you have created in DLA.

"Access Id" and "Access Key" are the username and password for DLA database logon, respectively. (For more information, check the DLA service activation notification email in your http://www.alibabacloud.com website mailbox.)

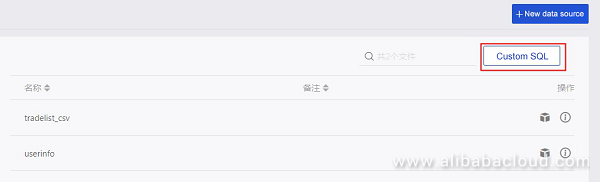

Click the "Create Dataset" icon next to a table.

To select a data source, click "Customize SQL."

Enter SQL query statements and save the output (for example, in a file named "citygender").

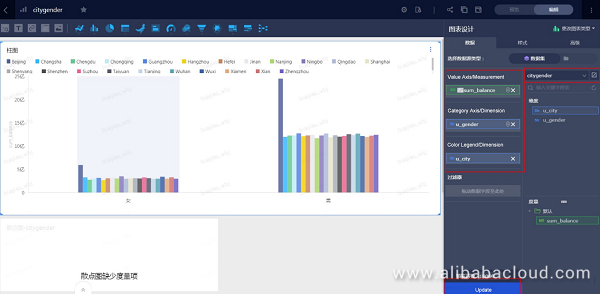

Display the dashboard editing page and select a dashboard display mode.

Select a dataset (for example, the "citygender" dataset).

Set "Value Axis (Measures)", "Category Axis (Dimensions)", and "Colors (Dimensions)."

Click "Update."

The following figure shows the total balances in accounts of male and female users in different cities.

To learn more about Alibaba Cloud Object Storage Service, www.alibabacloud.com/product/oss

57 posts | 12 followers

FollowAlibaba EMR - August 5, 2024

Alibaba Clouder - August 8, 2018

Alibaba Clouder - July 15, 2020

Hologres - June 30, 2021

Alibaba Cloud Community - July 16, 2025

Alibaba Clouder - August 31, 2018

57 posts | 12 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More China Gateway Solution

China Gateway Solution

Power your progress in China by working with the NO.1 cloud provider of this dynamic market.

Learn MoreMore Posts by Alibaba Cloud Storage

Raja_KT February 18, 2019 at 5:30 am

Interesting you shared sample data besides telling the beauty of serverless DLA on OSS... QuickBI. Connecting to DataV too?