
I am often asked by colleagues and customers about Alibaba Cloud Object Storage Service (OSS) OSSUTIL concurrent upload performance. In this article, I will briefly introduce OSSUTIL concurrent upload, and give some examples to help you understand it.

Before proceeding with the article, you should download the OSSUTIL tool from the official website: https://www.alibabacloud.com/help/doc-detail/50452.htm

You can also look at the source code at: https://github.com/aliyun/ossutil

When uploading files to OSS, if file_url is a directory, we must specify the --recursive option; otherwise, the option is not needed.

When we download files from OSS or copy files among OSS instances:

If the --recursive option is not specified, a single object is copied. In this case, make sure src_url points exactly to the object to be copied; otherwise, an error is thrown.

If the --recursive option is specified, OSSUTIL performs a prefix match on src_url and batch copies the matching objects. If a copy operation fails, the copy operations that have already completed are not rolled back.

During a batch upload (or download or copy), if one of the file operations fails, OSSUTIL does not quit. Instead, it continues to upload (or download or copy) the other files and records the error information of the failed file in the report file. Files that are uploaded (or downloaded or copied) successfully are not recorded in the report file.

Batch operation errors that may cause OSSUTIL to be terminated

The report file is named in the format ossutil_report_date_time.report. As one of OSSUTIL's output files, it is placed in OSSUTIL's output directory. The path of this directory can be specified with the outputDir option in the configuration file or with the --output-dir command line option. If the directory is not specified, the default output directory (the ossutil_output directory under the current directory) is used.

OSSUTIL does not maintain report files. Please check and clean up the report files and avoid accumulation of too many report files.
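Because the cleanup is left to us, a small script can help spot old report files. The following is a minimal sketch, not part of OSSUTIL: it assumes the default ossutil_output directory and assumes that report names match the glob pattern ossutil_report_*.report. The function name stale_reports and the 7-day cutoff are hypothetical choices made for illustration.

```python
import time
from pathlib import Path


def stale_reports(output_dir="ossutil_output", max_age_days=7.0, now=None):
    """Return report files in output_dir older than max_age_days, oldest first.

    Assumption: report files match 'ossutil_report_*.report'.
    """
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    directory = Path(output_dir)
    if not directory.is_dir():
        return []
    # Keep only regular files whose modification time is before the cutoff.
    stale = [
        p for p in directory.glob("ossutil_report_*.report")
        if p.is_file() and p.stat().st_mtime < cutoff
    ]
    return sorted(stale, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    # Only list the candidates; review them before deleting anything.
    for report in stale_reports():
        print(report)
```

The sketch only lists candidates, so that failed transfers recorded in a report can still be reviewed before the file is deleted.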

The --jobs option controls the number of files that are processed concurrently when multiple files are uploaded/downloaded/copied.

The --parallel option controls the number of parts that are processed concurrently within a single big file during multipart upload/download/copy. By default, OSSUTIL calculates this number based on the file size. The option has no effect on small files; the size threshold above which a file is uploaded/downloaded/copied in multiple parts is controlled by the --bigfile-threshold option.

When large files are uploaded/downloaded/copied in batches, the actual number of concurrent operations is the number of jobs multiplied by the number of parallel operations. If the default concurrency chosen by OSSUTIL doesn't meet your performance requirements, adjust these two options to tune the performance.
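To make the multiplication concrete, here is a minimal sketch of the rule described above. It is not OSSUTIL code; the function name total_concurrency and the is_big_file flag are hypothetical, and the flag simply stands for "the file is larger than the --bigfile-threshold value".

```python
def total_concurrency(jobs: int, parallel: int, is_big_file: bool = True) -> int:
    """Upper bound on simultaneous operations under the rule stated in the text.

    Big files: jobs * parallel. Small files are not split into parts,
    so only the --jobs value applies.
    """
    if jobs < 1 or parallel < 1:
        raise ValueError("jobs and parallel must be positive")
    return jobs * parallel if is_big_file else jobs


# --jobs=3 and --parallel=4 on a batch of big files
print(total_concurrency(3, 4))
# The same options on a batch of small files
print(total_concurrency(3, 4, is_big_file=False))
```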

The --part-size option sets the size of each part during multipart upload/download/copy of big files.

Generally, we don't need to set this value, because OSSUTIL will automatically decide the size and concurrency of parts based on the file size. When the upload/download/copy performance doesn't meet the requirements, or if there are any special requirements, we can set this option.

After we set the part size, the number of parts is the file size divided by the part size, rounded up. Note that if the value of the --parallel option is larger than the number of parts, the excess has no effect, and the actual concurrency equals the number of parts.
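The part count and the resulting cap on concurrency can be sketched as follows. This is an illustration of the rule in the text, not OSSUTIL's implementation; the function names are hypothetical and sizes are in bytes.

```python
def part_count(file_size: int, part_size: int) -> int:
    """Number of parts: file_size / part_size, rounded up."""
    if file_size < 0 or part_size < 1:
        raise ValueError("file_size must be >= 0 and part_size must be >= 1")
    return -(-file_size // part_size)  # integer ceiling division


def effective_parallel(file_size: int, part_size: int, parallel: int) -> int:
    """Concurrency within one file: --parallel, capped by the number of parts."""
    return min(parallel, part_count(file_size, part_size))


GIB = 1024 ** 3
MIB = 1024 ** 2

# A 1 GiB file with 100 MiB parts needs 11 parts (10 full parts plus a remainder),
# so a --parallel value of 16 is capped at 11.
print(part_count(GIB, 100 * MIB))
print(effective_parallel(GIB, 100 * MIB, 16))
```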

If the part size is set to be too small, OSSUTIL's file upload/download/copy performance may be affected; if it is set to be too large, the multipart concurrency that actually works will be affected. Please take this into consideration when setting the part size option value.

If the number of concurrent operations is too large, OSSUTIL's upload/download/copy performance may drop because of thread switching overhead and resource contention. Therefore, adjust the values of the --jobs and --parallel options based on the actual machine conditions. For stress testing, set the two options to small values first, and raise them gradually toward the optimal values.

If the values of the --jobs and --parallel options are too large and machine resources are limited, an EOF error may occur because the network transfer becomes too slow. In this case, reduce the values of the --jobs and --parallel options appropriately.

If there are many files of uneven sizes, start with --jobs=3 and --parallel=4 (concurrency among different files is 3, and concurrency within a single file is 4), and observe the memory, CPU, and network usage. If the network and CPU are not fully used, continue to increase the values of the --jobs and --parallel options.

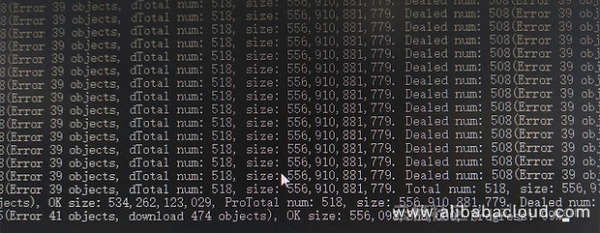

The download speed of the customer was approximately 265 Mb/s.

Because many files were being downloaded, 5 files were downloaded simultaneously by default (for version <= 1.4.0, the default concurrency among different files is 5).

The average file size was approximately 1.1 GB, and each file to be downloaded had 12 threads by default (the concurrency within a single file was 12, which was calculated based on the file size when the parallel parameter and the partsize parameter were not set).

Therefore, at least 5 x 12 = 60 OSSUTIL threads were running in the customer's environment. With that many threads, the network was most likely fully occupied and the CPU very busy. We suggested that the customer observe the environment's CPU, network, and process/thread conditions during the concurrent download.

Based on the customer's screenshots, we suggested the customer set the part size of each file to be between 100 MB and 200 MB (both inclusive). For example, when setting the part size as 100 MB, the download concurrency of each file would be filesize/partsize.

ossutil cp oss://xxx xxx -r --part-size=102400000
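As a rough check of what the suggested part sizes mean for this case, the sketch below applies the rule that per-file concurrency equals filesize/partsize (rounded up). It is only an estimate under stated assumptions: "approximately 1.1 GB" is taken as 1,100,000,000 bytes, the default of 5 concurrent files is kept, and the function names are hypothetical.

```python
FILE_SIZE = 1_100_000_000  # assumption: "approximately 1.1 GB" as 1.1e9 bytes
JOBS = 5                   # default among files for version <= 1.4.0, per the article


def part_count(file_size: int, part_size: int) -> int:
    """Number of parts: file_size / part_size, rounded up."""
    return -(-file_size // part_size)


def peak_operations(part_size: int, jobs: int = JOBS, file_size: int = FILE_SIZE) -> int:
    """Concurrent files multiplied by the per-file concurrency (the part count)."""
    return jobs * part_count(file_size, part_size)


# 102400000 is the value used in the command above; 204800000 is twice that.
for part_size in (102_400_000, 204_800_000):
    print(part_size, part_count(FILE_SIZE, part_size), peak_operations(part_size))
```

Under these assumptions the estimate can be compared with the 5 x 12 = 60 threads observed with the default settings.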

If there are many files of uneven sizes, start with --jobs=3 and --parallel=4 (concurrency among different files is 3, and concurrency within a single file is 4).

Our general suggestion was: the ratio of the number of jobs to the number of CPU cores should be about 1:1, the ratio of the parallel number to the number of CPU cores should be about 2:1, and neither value should be too big.
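The ratios can be turned into starting values with a few lines of code. This is a minimal sketch of the suggestion above, not an official formula; the function name suggested_flags is hypothetical, and the result should be treated as a starting point to be lowered if the machine is short on memory or bandwidth.

```python
import os


def suggested_flags(cpu_cores=None):
    """Build --jobs/--parallel values from the 1:1 and 2:1 ratios in the text."""
    cores = cpu_cores if cpu_cores is not None else (os.cpu_count() or 1)
    if cores < 1:
        raise ValueError("cpu_cores must be positive")
    jobs = cores          # jobs : cores = 1 : 1
    parallel = 2 * cores  # parallel : cores = 2 : 1
    return f"--jobs={jobs} --parallel={parallel}"


# For a 4-core machine
print(suggested_flags(4))
```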

The limit here is not the resources required by OSS, but the CPU, memory, and network resources required by each concurrent operation (reading files, splitting the files into the specified parts, uploading, and other operations).

The --jobs option sets the concurrency among different files. The default is 5 for version <= 1.4.0 and 3 for later versions.

The --parallel option sets the multipart concurrency within a big file. When the parallel and partsize parameters are not set, the value of this option is calculated based on the file size and does not exceed 15 for version <= 1.4.0 (12 for later versions).

General suggestions: start with --jobs=3 and --parallel=4 (concurrency among different files is 3, and concurrency within a single file is 4; the actual numbers should be adjusted based on the actual conditions of the machine).
