By Wang Siyu (Jiuzhu)

Photo Credit @ Wang Siyu (Jiuzhu)

OpenKruise is an open-source suite for automating the management of cloud-native applications, supported by Alibaba Cloud. It is now hosted by the Cloud Native Computing Foundation (CNCF) as a Sandbox-level project. Built on Alibaba's years of experience in containerization and cloud-native technologies, OpenKruise provides a set of standard controllers that extend and complement the Kubernetes core controllers and are widely used in Alibaba's internal production environment. It follows upstream community standards and captures practices suited to large-scale Internet scenarios.

On May 20, 2021, OpenKruise released the latest version v0.9.0 (ChangeLog), with new features, such as Pod restart and resource cascading deletion protection. This article provides an overview of this new version.

Restarting a container is a routine operational need and a common recovery technique. Native Kubernetes, however, offers no way to operate on individual containers: the Pod is the minimum unit of operation, and it can only be created or deleted.

Some may ask: why do users still need to pay attention to the operation such as container restart in the cloud-native era? Aren't the services the only thing for users to focus on in the ideal Serverless model?

To answer this question, we need to see the differences between cloud-native architecture and traditional infrastructures. In the era of traditional physical and virtual machines, multiple application instances are deployed and run on one machine, but the lifecycles of the machine and applications are separated. Thus, application instance restart may only require a systemctl or supervisor command but not the restart of the entire machine. However, in the era of containers and cloud-native, the lifecycle of the application is bound to that of the Pod container. In other words, under normal circumstances, one container only runs one application process, and one Pod provides services for only one application instance.

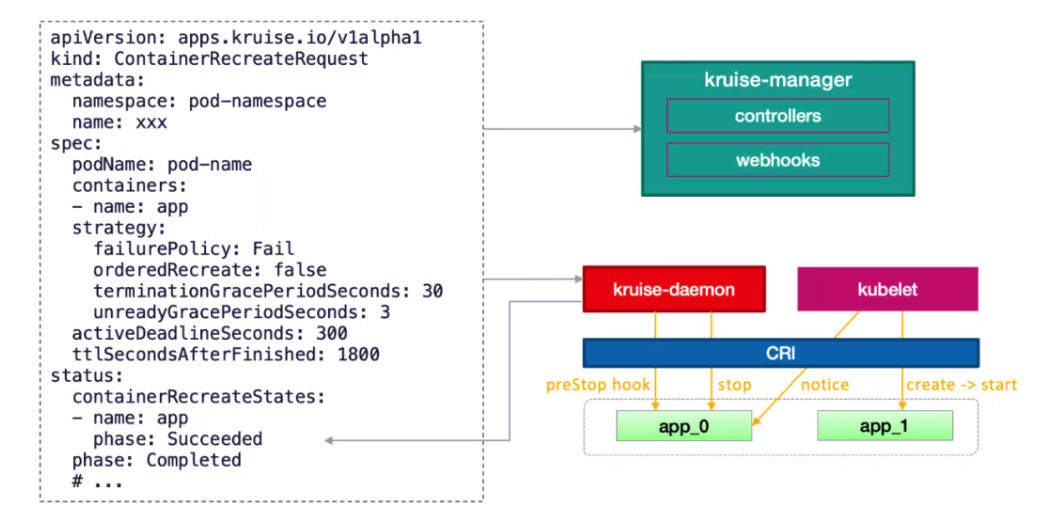

Due to these restrictions, current native Kubernetes provides no API for the container (application) restart for upper-layer services. OpenKruise v0.9.0 supports restarting containers in a single Pod, compatible with standard Kubernetes clusters of version 1.16 or later. After installing or upgrading OpenKruise, users only need to create a ContainerRecreateRequest (CRR) object to initiate a restart process. The simplest YAML file is listed below:

    apiVersion: apps.kruise.io/v1alpha1
    kind: ContainerRecreateRequest
    metadata:
      namespace: pod-namespace
      name: xxx
    spec:
      podName: pod-name
      containers:
      - name: app
      - name: sidecar

The value of namespace must be the same as the namespace of the Pod to be operated. The name can be set as needed. The podName in the spec clause indicates the Pod name. The containers indicate a list that specifies one or more container names in the Pod to restart.
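As a minimal sketch, the same manifest can be generated programmatically, which helps when restarts are triggered by tooling rather than written by hand. The helper name `build_crr` and the object name `restart-demo` are hypothetical; only the field names come from the manifest above. The result is printed as JSON, which `kubectl apply -f` accepts as well as YAML.

```python
import json


def build_crr(namespace, pod_name, containers, name):
    """Return a ContainerRecreateRequest manifest as a plain dict.

    Hypothetical helper: it only mirrors the required fields shown above.
    """
    if not containers:
        raise ValueError("at least one container name is required")
    return {
        "apiVersion": "apps.kruise.io/v1alpha1",
        "kind": "ContainerRecreateRequest",
        # The namespace must match the namespace of the target Pod.
        "metadata": {"namespace": namespace, "name": name},
        "spec": {
            "podName": pod_name,
            "containers": [{"name": c} for c in containers],
        },
    }


if __name__ == "__main__":
    crr = build_crr("pod-namespace", "pod-name", ["app", "sidecar"], "restart-demo")
    print(json.dumps(crr, indent=2))
```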

In addition to the required fields above, CRR also provides a variety of optional restart policies:

    spec:
      # ...
      strategy:
        failurePolicy: Fail
        orderedRecreate: false
        terminationGracePeriodSeconds: 30
        unreadyGracePeriodSeconds: 3
        minStartedSeconds: 10
      activeDeadlineSeconds: 300
      ttlSecondsAfterFinished: 1800

- failurePolicy: Values: Fail or Ignore. Default value: Fail. With Fail, the CRR ends immediately if any container fails to stop or to be recreated.
- orderedRecreate: Default value: false. The value true indicates that when the list contains multiple containers, each container is recreated only after the previous recreation has finished.
- terminationGracePeriodSeconds: The time for the container to exit gracefully. If this parameter is not specified, the time defined for the Pod is used.
- unreadyGracePeriodSeconds: Sets the Pod to the unready state before recreation and waits for this period to expire before executing the recreation. Note: This requires the feature-gate KruisePodReadinessGate to be enabled, which will inject a readinessGate when a Pod is created. Otherwise, only the Pods created by OpenKruise workloads are injected with a readinessGate by default, which means only these Pods can use the unreadyGracePeriodSeconds parameter during the CRR recreation.
- minStartedSeconds: The minimum period that the new container must remain running for the recreation to be judged successful.
- activeDeadlineSeconds: The deadline for CRR execution, after which the CRR is marked as ended (unfinished containers are marked as failed).
- ttlSecondsAfterFinished: The period after which the CRR is deleted automatically once the execution ends.

How it works under the hood: after it is created, a CRR is processed by the kruise-manager. Then, it is sent to the kruise-daemon on the node where the Pod resides for execution. The execution process is listed below:

1. If preStop is specified for a Pod, the kruise-daemon will first call the CRI to run the command specified by preStop in the container.
2. If no preStop exists or preStop execution is completed, the kruise-daemon will call the CRI to stop the container.
3. After the container stops, the kubelet creates and starts a new container with an incremented "serial number", and postStart will be executed at the same time.

The container "serial number" corresponds to the restartCount reported by kubelet in the Pod status. Therefore, the restartCount of the Pod increases after the container is restarted. Temporary files written to the rootfs in the old container will be lost due to the container recreation, but data in the volume mount remains.

The level-triggered automation of Kubernetes is a double-edged sword. It brings declarative deployment capabilities to applications, but it can also amplify a mistake across the entire desired state. For example, with the cascading deletion mechanism, once an owning resource is deleted under normal circumstances (non-orphan deletion), all of the resources it owns are deleted as well.

Due to failures caused by cascading deletion, we have heard many complaints from Kubernetes users and developers in the community. It is unbearable for any enterprise to mistakenly delete objects at such a large scale in the production environment. Alibaba is no exception.

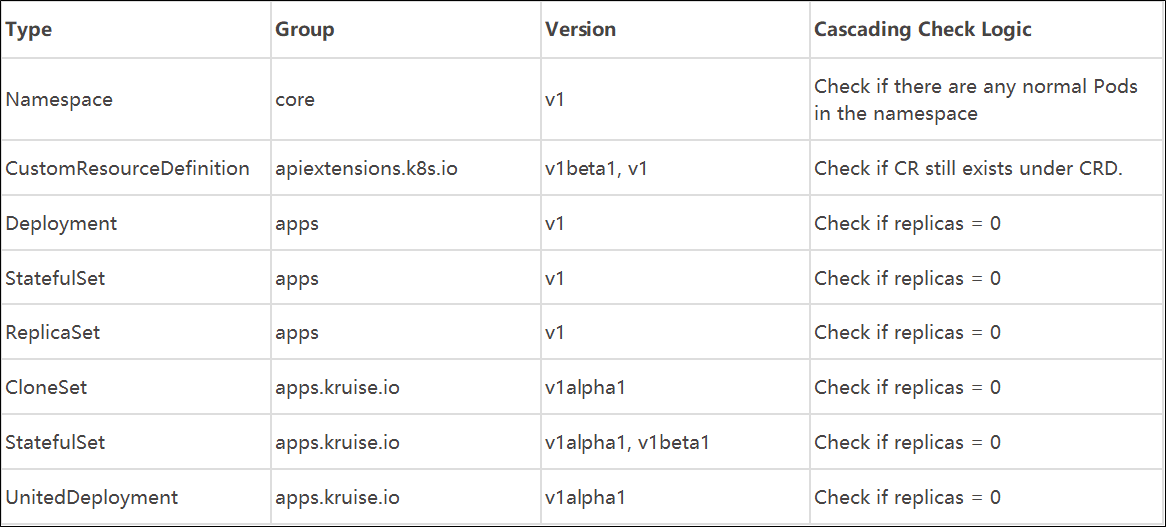

Therefore, in OpenKruise v0.9.0, we applied the feature of cascading deletion protection used in the internal environments of Alibaba to the community in the hope of ensuring stability for more users. If you want to use this feature in the current version, the feature-gate of ResourcesDeletionProtection needs to be explicitly enabled when installing or upgrading OpenKruise.

A label of policy.kruise.io/delete-protection can be given on the resource objects that require protection. Its value can be the following two things:

The following table lists the supported resource types and cascading relationships:

The controller.kubernetes.io/pod-deletion-cost annotation is available in Kubernetes starting with version 1.21. ReplicaSet sorts its Pods according to this cost value during scale-in. CloneSet has supported the same feature since OpenKruise v0.9.0.

Users can configure this annotation on a Pod. Its integer value indicates the deletion cost of that Pod relative to other Pods under the same CloneSet. Pods with a lower cost have a higher deletion priority. If this annotation is not set, the deletion cost of the Pod is 0 by default.
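The cost comparison described above can be sketched as a sort over Pod objects. This is an illustration of the cost criterion alone, not the controller's implementation; the function names and the dict-shaped Pods are assumptions made for the example.

```python
DELETION_COST_ANNOTATION = "controller.kubernetes.io/pod-deletion-cost"


def deletion_cost(pod):
    """Read the deletion cost from a Pod dict; a missing annotation means 0."""
    annotations = pod.get("metadata", {}).get("annotations") or {}
    return int(annotations.get(DELETION_COST_ANNOTATION, "0"))


def order_by_deletion_cost(pods):
    """Return Pods sorted so that the cheapest to delete come first."""
    return sorted(pods, key=deletion_cost)


if __name__ == "__main__":
    pods = [
        {"metadata": {"name": "pod-a", "annotations": {DELETION_COST_ANNOTATION: "100"}}},
        {"metadata": {"name": "pod-b"}},
        {"metadata": {"name": "pod-c", "annotations": {DELETION_COST_ANNOTATION: "-5"}}},
    ]
    for pod in order_by_deletion_cost(pods):
        print(pod["metadata"]["name"], deletion_cost(pod))
```

Annotation values are strings in Kubernetes, so the sketch converts them with `int` before comparing.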

Note: The deletion order is not determined solely by deletion cost. The cost is only one of the criteria that the controller considers when ranking Pods for deletion.

When CloneSet is used for the in-place update of an application, only the container image is updated, while the Pod is not rebuilt. This ensures that the node where the Pod is located will not change. Therefore, if the CloneSet pulls the new image from all the Pod nodes in advance, the Pod in-place update speed will be improved substantially in subsequent batch releases.

If you want to use this feature in the current version, the feature-gate of PreDownloadImageForInPlaceUpdate needs to be explicitly enabled when installing or upgrading OpenKruise. If you update the images in the CloneSet template and the publish policy supports in-place update, CloneSet will create an ImagePullJob object automatically (the batch image pre-download function provided by OpenKruise) to download new images in advance on the node where the Pod is located.

By default, CloneSet sets the parallelism to 1 for ImagePullJob, which means images are pulled on one node at a time. To change this, set the parallelism through an annotation on the CloneSet:

    apiVersion: apps.kruise.io/v1alpha1
    kind: CloneSet
    metadata:
      annotations:
        apps.kruise.io/image-predownload-parallelism: "5"

In previous versions, the maxUnavailable and maxSurge policies of CloneSet only took effect during the application release process. In OpenKruise v0.9.0 and later versions, these two policies also apply when deleting a specified Pod.

When the user specifies one or more Pods to be deleted through podsToDelete or apps.kruise.io/specified-delete: true, CloneSet will only execute deletion when the number of unavailable Pods (of the total replicas) is less than the value of maxUnavailable. In addition, if the user has configured the maxSurge policy, the CloneSet will possibly create a new Pod first, wait for the new Pod to be ready, and then delete the old specified Pod.
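The rule above can be sketched as a small decision function. This is a simplified model rather than CloneSet's controller code: `plan_specified_delete` and its return strings are hypothetical names, and the final "wait" outcome (budget exhausted and no surge configured) is an assumption that the text does not spell out.

```python
def plan_specified_delete(target, unavailable, max_unavailable, max_surge):
    """Decide how a specified Pod deletion would proceed.

    target: name of the Pod the user asked to delete.
    unavailable: set of Pod names that are currently unavailable.
    """
    if target in unavailable:
        # Deleting a Pod that is already unavailable does not reduce availability.
        return "delete-now"
    if len(unavailable) < max_unavailable:
        # There is still room in the unavailability budget.
        return "delete-now"
    if max_surge > 0:
        # Budget exhausted: bring up a replacement first, then delete.
        return "create-then-delete"
    # Assumption: with no surge capacity, the deletion waits for budget.
    return "wait"


if __name__ == "__main__":
    print(plan_specified_delete("pod-b", {"pod-a"}, max_unavailable=2, max_surge=1))
    print(plan_specified_delete("pod-b", {"pod-a"}, max_unavailable=1, max_surge=1))
    print(plan_specified_delete("pod-a", {"pod-a"}, max_unavailable=1, max_surge=1))
```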

For more information, please refer to the official documentation on CloneSet

The replacement method depends on the value of maxUnavailable and the number of unavailable Pods. For example:

- maxUnavailable=2, maxSurge=1 and only pod-a is unavailable. If you specify pod-b to be deleted, CloneSet will delete it promptly and create a new Pod.
- maxUnavailable=1, maxSurge=1 and only pod-a is unavailable. If you specify pod-b to be deleted, CloneSet will create a new Pod, wait for it to be ready, and then delete pod-b.
- maxUnavailable=1, maxSurge=1 and only pod-a is unavailable. If you specify pod-a itself to be deleted, CloneSet will delete it promptly and create a new Pod.

Among the native workloads, Deployment does not support phased release, while StatefulSet provides partition semantics that allow users to control the pace of grayscale upgrades. OpenKruise workloads, such as CloneSet and Advanced StatefulSet, also provide partitions to support phased release.

For CloneSet, the semantics of Partition is the number or percentage of Pods remaining in the old version. For example, for a CloneSet with 100 replicas, if the partition value is changed in the sequence of 80 → 60 → 40 → 20 → 0 by steps during the image upgrade, the CloneSet is released in five batches.
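The arithmetic behind this example is simply replicas minus partition. The sketch below handles absolute partition values only; `pods_on_new_version` is a hypothetical helper, and percentage partitions are left out because their rounding behavior is not described here.

```python
def pods_on_new_version(replicas, partition):
    """Number of Pods expected on the new version for an absolute partition."""
    if not 0 <= partition <= replicas:
        raise ValueError("partition must be between 0 and replicas")
    return replicas - partition


if __name__ == "__main__":
    replicas = 100
    for partition in [80, 60, 40, 20, 0]:
        updated = pods_on_new_version(replicas, partition)
        print(f"partition={partition}: {updated} new, {partition} old")
```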

However, in the past, whether it is Deployment, StatefulSet, or CloneSet, if rollback is required during the release process, the template information (image) must be changed back to the old version. During the phased release of StatefulSet and CloneSet, reducing partition value will trigger the upgrade to a new version. Increasing partition value will not trigger rollback to the old version.

The partition of CloneSet supports the "final state rollback" function after v0.9.0. If the feature-gate CloneSetPartitionRollback is enabled when installing or upgrading OpenKruise, increasing the partition value will trigger CloneSet to roll back the corresponding number of new Pods to the old version.
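The difference between the old and new behavior can be modeled as follows. This is a sketch of the semantics described above, not OpenKruise code: `partition_change_effect` is a hypothetical name, and `rollback_enabled` stands in for the CloneSetPartitionRollback feature-gate.

```python
def partition_change_effect(old_partition, new_partition, rollback_enabled):
    """Return (action, pod_count) for a change of the partition value."""
    delta = old_partition - new_partition
    if delta > 0:
        # Lowering the partition moves more Pods to the new version.
        return ("upgrade", delta)
    if delta < 0 and rollback_enabled:
        # Raising the partition moves new Pods back to the old version.
        return ("rollback", -delta)
    # Unchanged, or raised without the feature-gate: nothing happens.
    return ("none", 0)


if __name__ == "__main__":
    print(partition_change_effect(40, 20, rollback_enabled=False))
    print(partition_change_effect(20, 40, rollback_enabled=True))
    print(partition_change_effect(20, 40, rollback_enabled=False))
```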

There is a clear advantage here. During the phased release, only the partition value needs to be adjusted to flexibly control the numbers of old and new versions. However, the "old and new versions" for CloneSet correspond to updateRevision and currentRevision in its status:

- updateRevision: The version of the template defined by the current CloneSet.
- currentRevision: The template version of CloneSet during the previous successful full release.

By default, the value of controller-revision-hash in Pod label set by CloneSet is the full name of the ControllerRevision. For example:

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        controller-revision-hash: demo-cloneset-956df7994

The name is the CloneSet name concatenated with the ControllerRevision hash value. Generally, the hash value is 8 to 10 characters in length. In Kubernetes, a label value cannot exceed 63 characters in length. Therefore, allowing for the hyphen and a 10-character hash, the name of the CloneSet cannot exceed 52 characters, or the Pod cannot be created.

In v0.9.0, the new feature-gate CloneSetShortHash is introduced. If it is enabled, CloneSet will set the value of controller-revision-hash in the Pod to a hash value only, like 956df7994. Therefore, the length restriction of the CloneSet name is eliminated. (CloneSet can still recognize and manage the Pod with revision labels in the full format, even if this function is enabled.)
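The length limit and the effect of the short hash can be checked with a few lines. The helper `revision_label_value` is hypothetical and only reproduces the arithmetic described above (name, hyphen, hash, and the 63-character limit on label values); `short_hash` stands in for the CloneSetShortHash feature-gate.

```python
MAX_LABEL_VALUE_LEN = 63  # Kubernetes limit on the length of a label value


def revision_label_value(cloneset_name, revision_hash, short_hash=False):
    """Build the controller-revision-hash label value and validate its length."""
    if short_hash:
        value = revision_hash
    else:
        value = f"{cloneset_name}-{revision_hash}"
    if len(value) > MAX_LABEL_VALUE_LEN:
        raise ValueError(
            f"label value is {len(value)} characters, limit is {MAX_LABEL_VALUE_LEN}"
        )
    return value


if __name__ == "__main__":
    print(revision_label_value("demo-cloneset", "956df7994"))
    print(revision_label_value("demo-cloneset", "956df7994", short_hash=True))
    # A 52-character name with a 10-character hash is exactly at the limit.
    print(len(revision_label_value("a" * 52, "0123456789")))
```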

SidecarSet is a workload provided by OpenKruise to manage sidecar containers separately. Users can inject and upgrade specified sidecar containers within a certain range of Pods using SidecarSet.

By default, an independent in-place sidecar upgrade stops the old-version container first and then creates a new-version container. This method suits sidecar containers that do not affect Pod service availability, such as a log collection agent. However, for sidecar containers that act as a proxy, such as Istio Envoy, this upgrade method is problematic. Envoy, as the proxy container in the Pod, handles all the traffic, so restarting it directly for an upgrade affects service availability. Upgrading the Envoy sidecar separately would therefore require a complex graceful termination and coordination mechanism. For this kind of sidecar container, we offer a new solution, hot upgrade:

    apiVersion: apps.kruise.io/v1alpha1
    kind: SidecarSet
    spec:
      # ...
      containers:
      - name: nginx-sidecar
        image: nginx:1.18
        lifecycle:
          postStart:
            exec:
              command:
              - /bin/bash
              - -c
              - /usr/local/bin/nginx-agent migrate
        upgradeStrategy:
          upgradeType: HotUpgrade
          hotUpgradeEmptyImage: empty:1.0.0

- upgradeType: HotUpgrade indicates that the sidecar container uses the hot upgrade type, so the hot upgrade solution will be executed.
- hotUpgradeEmptyImage: When performing a hot upgrade on the sidecar container, an empty container is required to switch services during the upgrade. The empty container has almost the same configuration as the sidecar container (for example, command, lifecycle, and probe), except for the image address, but it does no actual work.
- lifecycle.postStart: State migration. This hook completes the state migration during the hot upgrade. The script needs to be implemented according to business characteristics. For example, an NGINX hot upgrade requires sharing the listen FD and reloading traffic.

For specific sidecar injection and hot upgrade procedures, please refer to the official documentation on SidecarSet.

For more information, please refer to the official website documentation. We welcome anyone interested in OpenKruise to join our community and follow us on Slack.

