Monolithic applications were popular in the early era of the Internet (Web 1.0). At that time, R&D teams were relatively small and mainly built external web pages and news portals. In the new century, the Web 2.0 era brought a surge in Internet users, and products in fields such as e-commerce and social networking appeared one after another. An R&D team could now contain hundreds or even thousands of members. Traffic and business complexity changed qualitatively compared to the previous era, and the drawbacks of monolithic services, such as low R&D efficiency, began to show.

Service-Oriented Architecture (SOA) emerged at that time. It is similar to microservices but has a centralized component similar to an Enterprise Service Bus (ESB). Alibaba's HSF was born at this stage.

With the arrival of the mobile Internet era, all kinds of applications were born, and daily life began to move online. Heavy traffic, high concurrency, and large R&D teams became more common, and the demands on technology and productivity rose accordingly. The concept of microservices was created at this time.

Microservices have been part of this architectural evolution ever since. In the Java technology stack, frameworks like Spring Cloud and Dubbo have been popular. Society as a whole has clearly entered a stage of rapid digital development, which brings bigger challenges: increased traffic and application complexity, larger R&D teams, and higher requirements for efficiency.

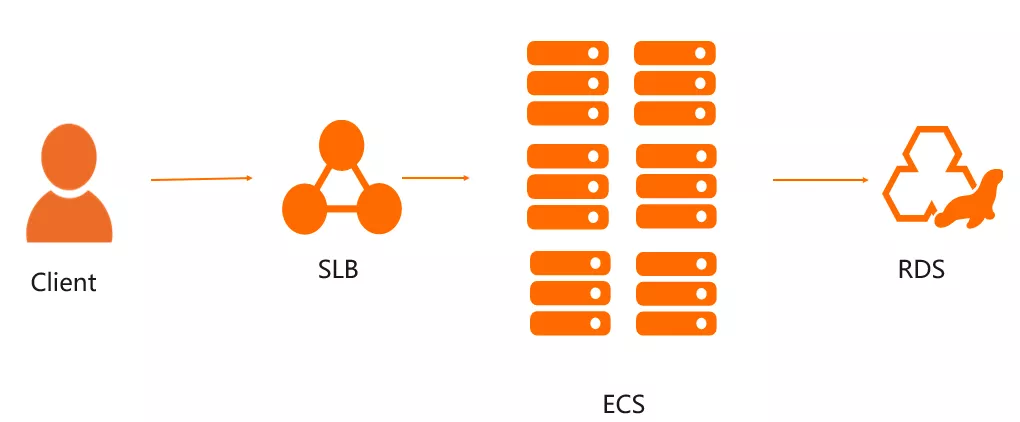

Most companies or early-stage businesses have gone through the process shown in the figure. First, the client accesses the system through an entry point. In the preceding figure, SLB is Alibaba Cloud's load balancing service. It acts as the network entry point and forwards traffic to a single service running on ECS (Alibaba Cloud's virtual machines). All instances share one database. This is the first period.

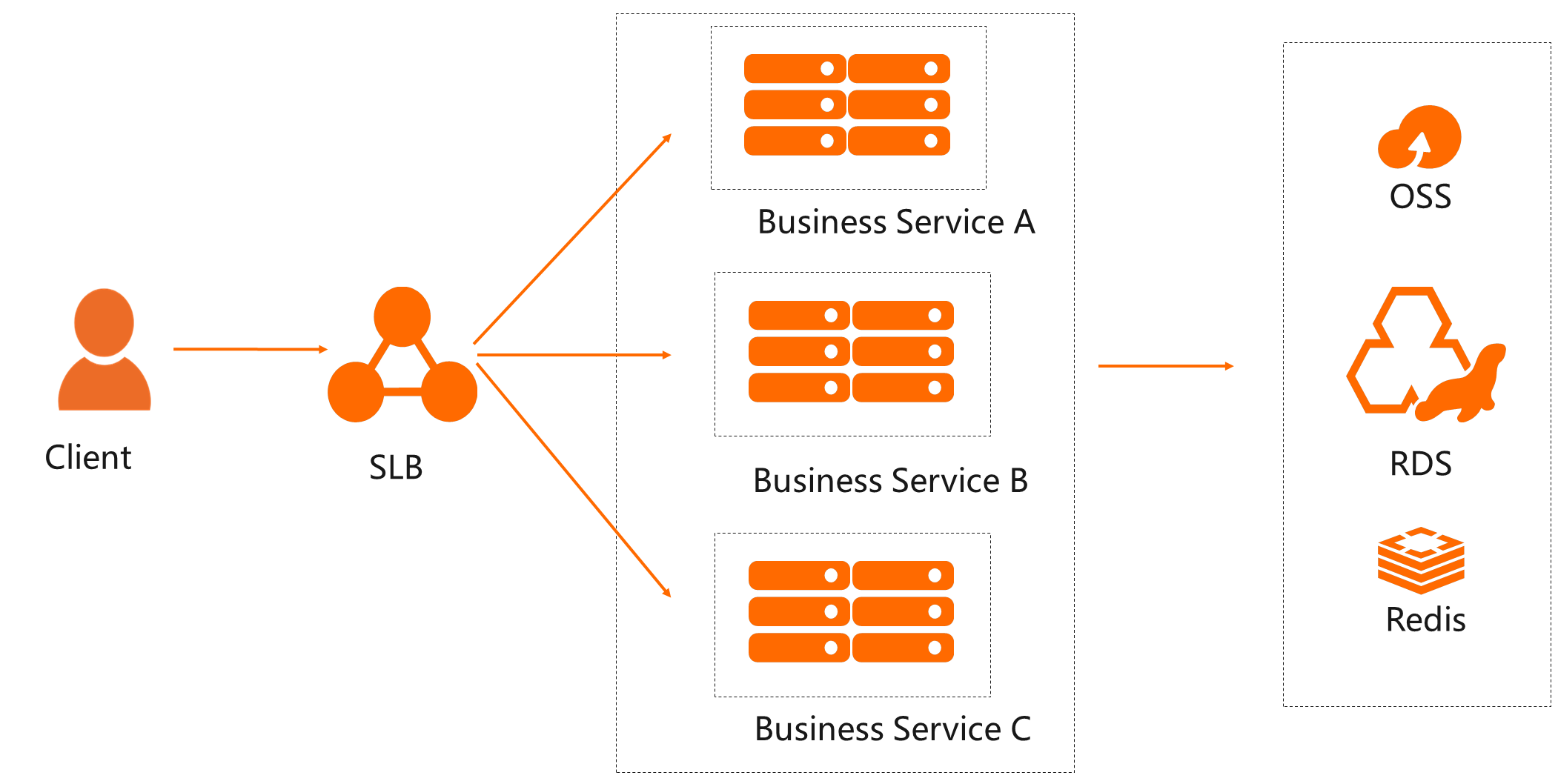

The SOA architecture can split some businesses into services. However, SOA does not split the underlying layers, such as the storage database, so the services still use the same database. In that sense, it is still a monolithic architecture.

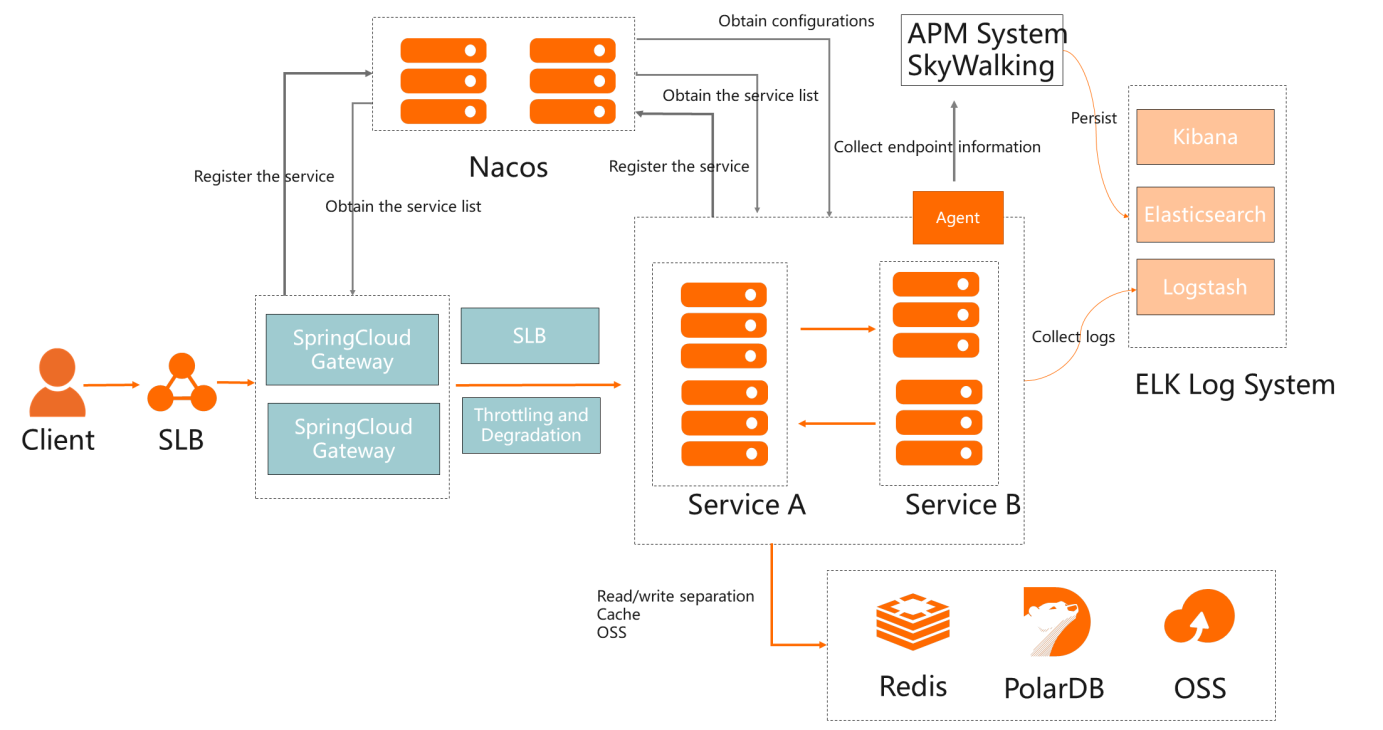

In the microservice period, the client accesses the gateway through SLB (as shown in the above figure), the gateway forwards each request to the corresponding service, and the services call one another. Each service has its own database or cache, and each service is registered, discovered, and configured through a service such as Nacos.
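The registration and discovery step can be illustrated with a minimal in-memory sketch. This is not how Nacos is implemented or called: the `ServiceRegistry` class, its method names, and the heartbeat TTL are all hypothetical and only show the idea that instances announce themselves and callers look up live addresses by service name.

```python
import time
from dataclasses import dataclass, field


@dataclass
class ServiceRegistry:
    """Toy in-memory registry (hypothetical; a real registry is a networked service)."""
    ttl_seconds: float = 15.0
    # service name -> {instance address -> time of last heartbeat}
    _instances: dict = field(default_factory=dict)

    def register(self, service, address, now=None):
        """Register an instance, or refresh its heartbeat if it is already known."""
        now = time.monotonic() if now is None else now
        self._instances.setdefault(service, {})[address] = now

    def deregister(self, service, address):
        """Remove an instance explicitly, e.g. on graceful shutdown."""
        self._instances.get(service, {}).pop(address, None)

    def discover(self, service, now=None):
        """Return the addresses whose last heartbeat is within the TTL."""
        now = time.monotonic() if now is None else now
        known = self._instances.get(service, {})
        return sorted(a for a, seen in known.items() if now - seen <= self.ttl_seconds)


registry = ServiceRegistry(ttl_seconds=15.0)
registry.register("orders", "10.0.0.1:8080", now=0.0)
registry.register("orders", "10.0.0.2:8080", now=10.0)
print(registry.discover("orders", now=12.0))  # both heartbeats are fresh
print(registry.discover("orders", now=20.0))  # the first instance has expired
```

Passing `now` explicitly keeps the example deterministic. A caller in a real system would rely on the clock and send heartbeats periodically.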

Microservices solve the problem of separating services at the architecture level, and each R&D team can specialize in a particular field and business. However, microservices are more complicated, which introduces operation and maintenance problems.

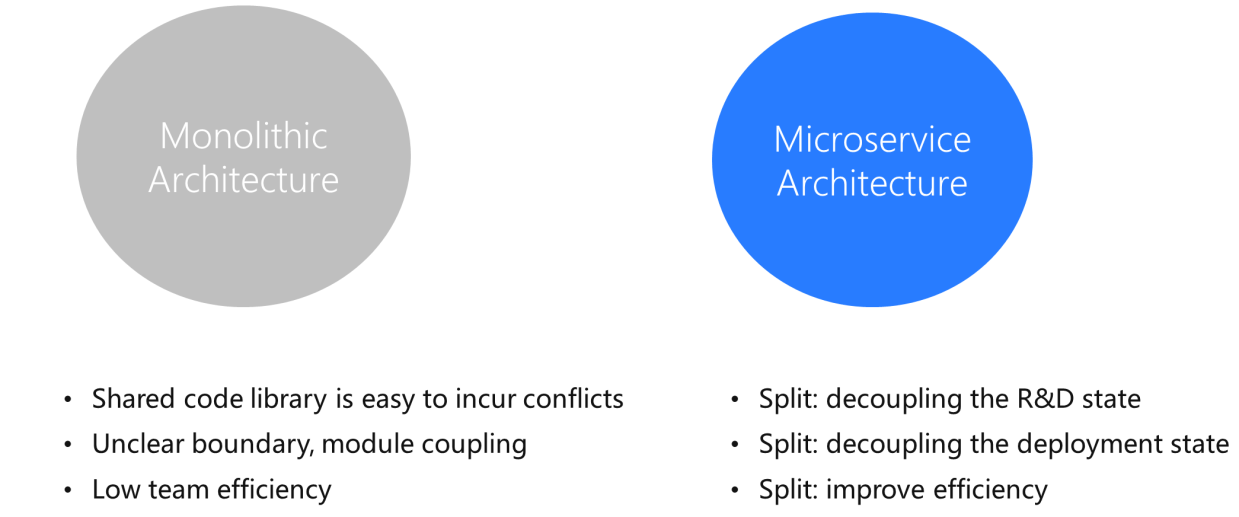

A monolithic application suffers from problems such as unclear boundaries, module coupling, and conflicts between shared code libraries. If the team is large, collaboration efficiency is also relatively low. The core of the microservice architecture, by contrast, is decoupling. If decoupling is achieved after splitting, the development team can work more efficiently.

Cloud-native is a broad concept. Looking at the changes and evolution that cloud-native has brought to microservices helps us understand what cloud-native is.

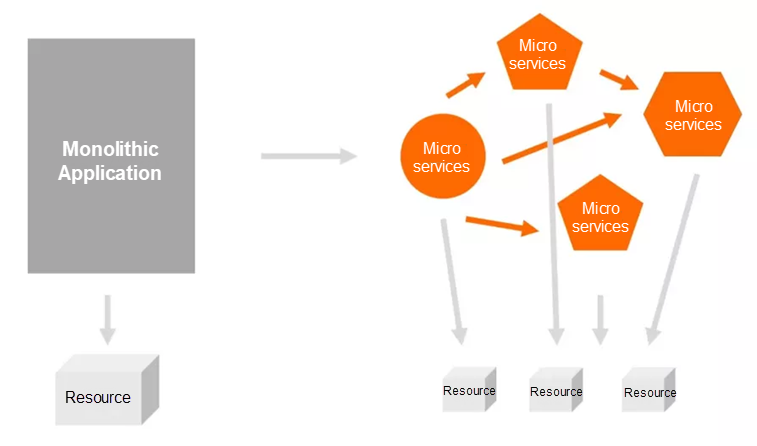

What is the essential difference between microservices and monolithic applications? As shown in the figure, a large monolithic application is split into several small services that collaborate. Dependency relationships form between the microservices, and each microservice needs to be deployed to one or more computing resources.

In the past, the relationship between monolithic applications and resources was simple. A monolithic application involves only internal collaboration and has no dynamic external dependencies. After the conversion to microservices, however, the entire system becomes a mesh that is complicated to manage because external dependencies and nodes increase sharply. More than 50% of enterprises say that their biggest challenge when adopting the microservice architecture is complex operations and maintenance, in other words, managing the entire service lifecycle.

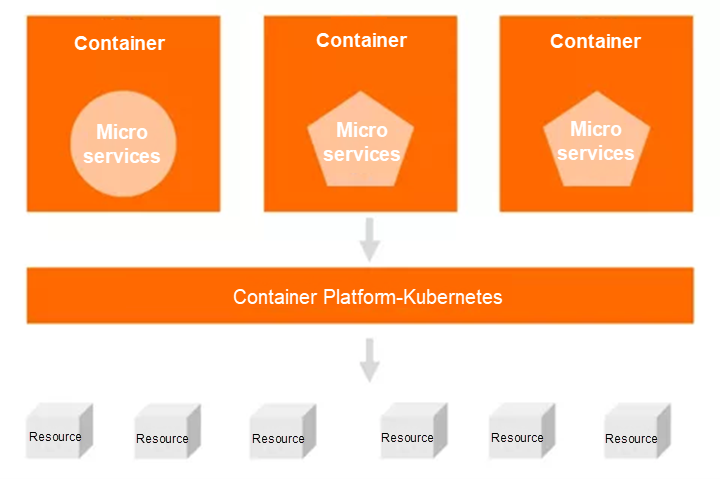

Nowadays, it is generally accepted that the foundation of cloud-native lies in containers and in container management and orchestration (Kubernetes). Container technology and Kubernetes can help us solve the complicated operation and maintenance problems of the microservice system.

First of all, microservices are heterogeneous. An organization may let its small teams use different programming languages and runtime environments so that each microservice performs as well as possible. As a result, when we first operated and managed microservices, there was no unified standard for handling these heterogeneous environments. This led to the popularity of cloud-native container technology, which wraps each microservice in a standardized runtime and packaging layer. From the perspective of lifecycle management, reducing the differences between microservices also makes resource scheduling easier.

Container platforms then grew out of the need to schedule and manage containers. With Kubernetes, microservices can run on the underlying resources in a standard and convenient manner, and storage, computing, and network resources can be encapsulated in a unified way. Kubernetes is similar to the operating system of the cloud-native era.

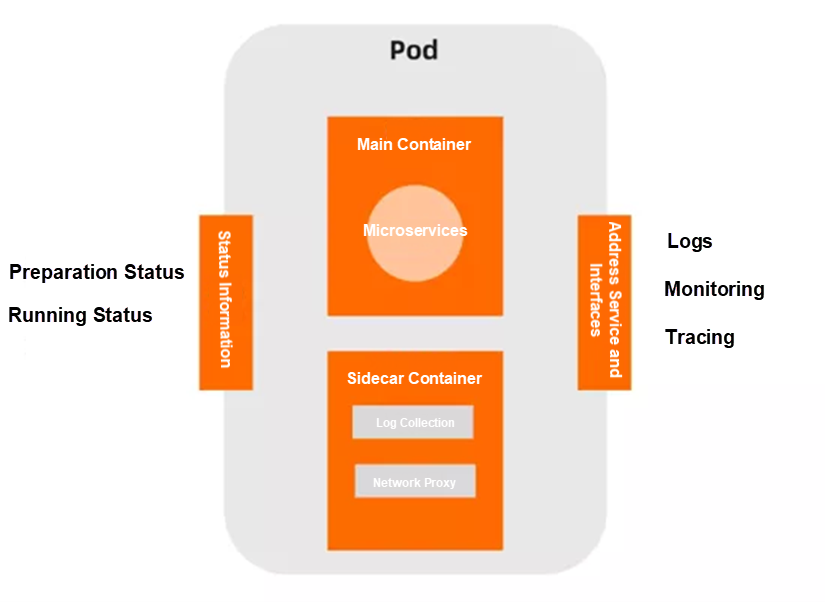

What specific help does it provide? Kubernetes has a concept called a Pod, which is a group of one or more containers. The Pod is coupled with the lifecycle of the microservice instance.

When using the microservice architecture, we usually put the main logic of the microservice inside the main container, so the lifecycle of the main container is coupled with the Pod. When the Pod dies, the microservice instance dies with it. We also run sidecar containers, which provide auxiliary functions for the main container, such as log collection, network proxying, and identity authentication. In this way, a microservice provides its core capabilities while auxiliary capabilities are attached dynamically, which makes microservices more stable and easier to manage.

The Pod model also provides many useful functions. One is status information: the Pod exposes a standard interface that reports the status of the runtime. We can use this information to judge the state of a microservice or container, including whether it is running and whether the service is ready to receive traffic, which helps guarantee overall stability. Another is addressing. Each Pod has a standardized DNS address, which is helpful for uniformly exposing APIs and for log monitoring and tracing. Runtime problems can be found quickly through DNS addresses, logs, and the exposed observability information. In short, containers and container platforms give microservices more capabilities at the micro level.
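The difference between "running" and "ready to receive traffic" can be sketched as follows. This is a simplified model and not the Kubernetes API: the `PodStatus` class and the two helper functions are hypothetical, and a real cluster reports this state through the Pod's phase and conditions.

```python
from dataclasses import dataclass


@dataclass
class PodStatus:
    """Hypothetical, simplified view of what a Pod reports about itself."""
    name: str
    running: bool  # is the main container process alive?
    ready: bool    # has the service declared that it can accept traffic?


def routable(pods):
    """Only Pods that are both running and ready should receive traffic."""
    return [p.name for p in pods if p.running and p.ready]


def needs_restart(pods):
    """Pods whose main container is no longer running are restart candidates."""
    return [p.name for p in pods if not p.running]


pods = [
    PodStatus("orders-1", running=True, ready=True),
    PodStatus("orders-2", running=True, ready=False),   # still warming up
    PodStatus("orders-3", running=False, ready=False),  # crashed
]
print(routable(pods))       # ['orders-1']
print(needs_restart(pods))  # ['orders-3']
```

Keeping the two signals separate lets the platform withhold traffic from an instance that is alive but not yet ready, instead of restarting it.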

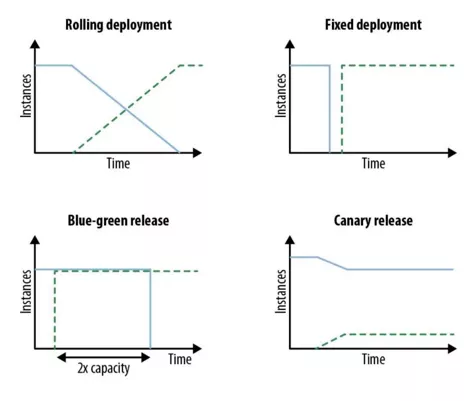

This figure shows four release models:

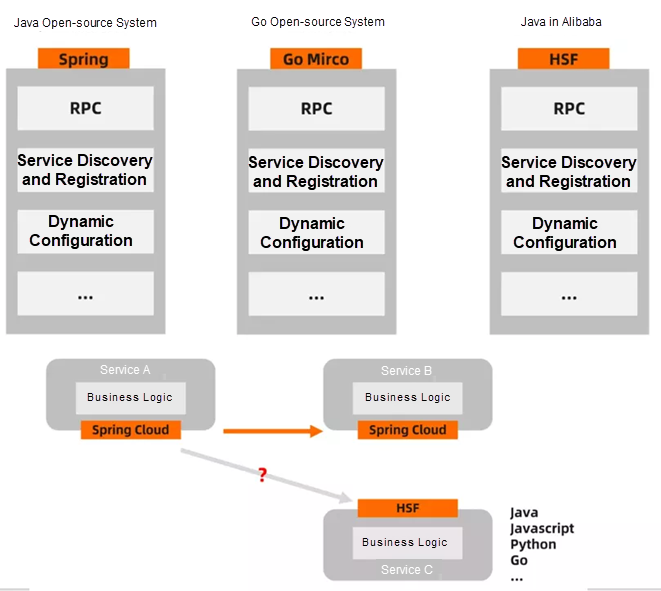

Microservices turn the static, in-process call relationships of the monolithic period into dynamic communication at runtime. Generally, the communication and collaboration between services need to be managed separately. The microservice framework helps us abstract and implement the functions common to every service.

The abstraction covers business logic as well as communication, traffic, and service governance capabilities. We can abstract the common underlying capabilities into a concrete framework, but the frameworks used by different microservices cannot call each other. In the cloud-native age, however, microservices may be developed in different languages and programming models.

Service Mesh aims to solve the problem of multi-language and multi-environment traffic governance.

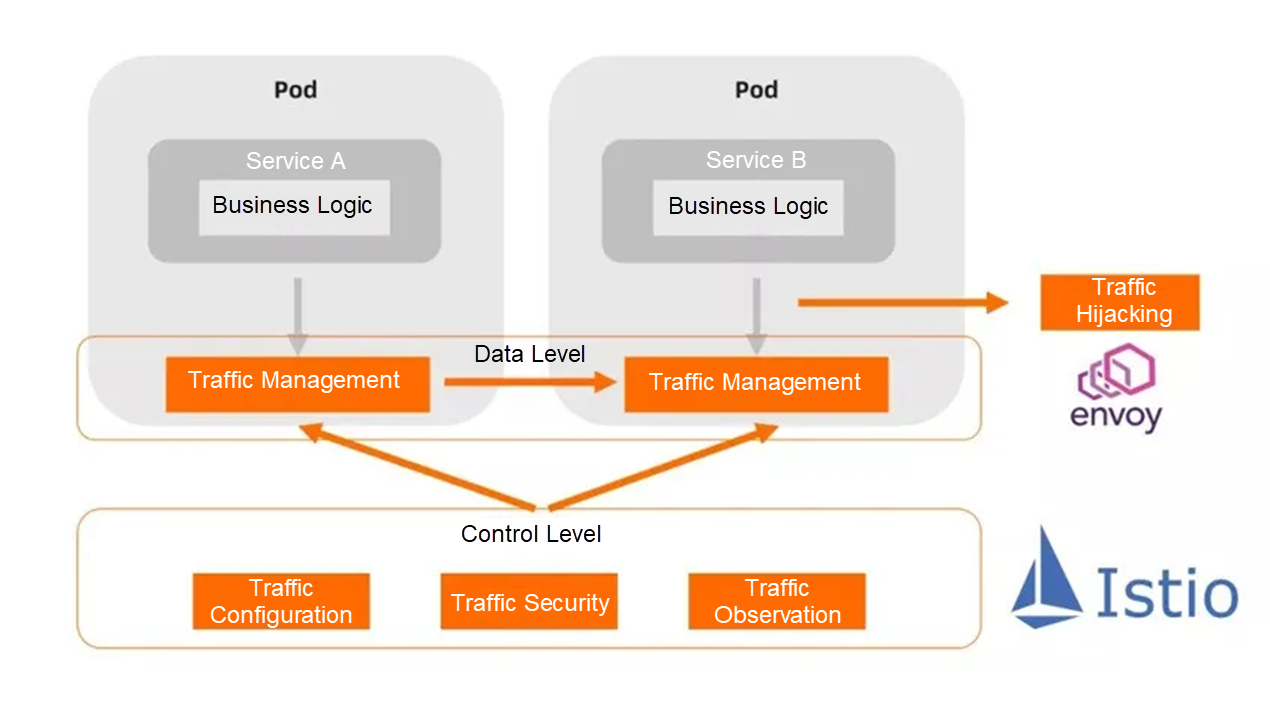

At the data plane, the sidecar is responsible for intercepting, forwarding, and managing traffic. A typical sidecar implementation is Envoy.

As shown in the figure, the upper part is abstracted out of the framework and decoupled from the business code. The common capabilities are placed in the sidecar and handled through its communication and forwarding, which simplifies the problem. The application only needs to communicate with its sidecar, and microservice instances built on different technology stacks can communicate with each other.

In addition to the data plane, we need support at the control plane: a component that manages the policy rules of the original microservice system. The classic implementation is Istio. For example, capabilities such as service registration, service discovery, and traffic observation in the original microservice system need to be handled by the control plane. Service Mesh was created to provide these capabilities. We can manage the traffic in each Pod so that the individual data points form a mesh and become clusters, which enables traffic distribution, security, and observation.
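The split between the two layers can be sketched in a few lines. The example below is a toy model, not the Envoy or Istio API: the `ControlPlane` and `Sidecar` classes, the `set_route` method, and the version labels are all hypothetical. It only shows a central component pushing a traffic policy to per-instance proxies, which then apply it to each outbound call.

```python
import random


class ControlPlane:
    """Holds traffic policy and pushes it to every attached sidecar."""

    def __init__(self):
        self.sidecars = []

    def attach(self, sidecar):
        self.sidecars.append(sidecar)

    def set_route(self, service, weights):
        """weights maps a version label to its traffic share, e.g. {'v1': 90, 'v2': 10}."""
        for sidecar in self.sidecars:
            sidecar.routes[service] = dict(weights)


class Sidecar:
    """Intercepts outbound calls from its application and picks a destination."""

    def __init__(self, rng=None):
        self.routes = {}
        self.rng = rng or random.Random()

    def pick_version(self, service):
        weights = self.routes[service]
        versions = list(weights)
        return self.rng.choices(versions, weights=[weights[v] for v in versions])[0]


control = ControlPlane()
proxy = Sidecar(rng=random.Random(42))  # seeded so the run is repeatable
control.attach(proxy)
control.set_route("orders", {"v1": 90, "v2": 10})

picks = [proxy.pick_version("orders") for _ in range(1000)]
print(picks.count("v1"), picks.count("v2"))  # most traffic goes to v1
```

Because the policy lives in the proxy rather than in the application, the same rule applies regardless of the language or framework the service is written in.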

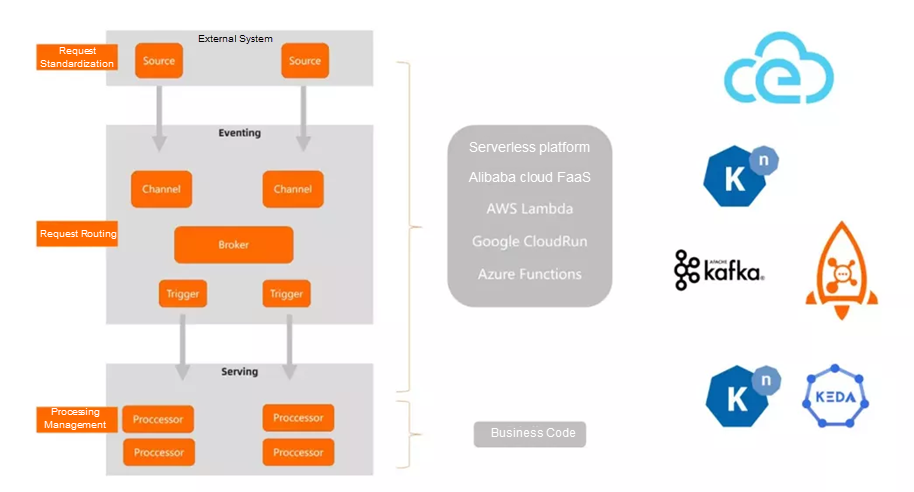

The programming model in the figure is Function Compute related.

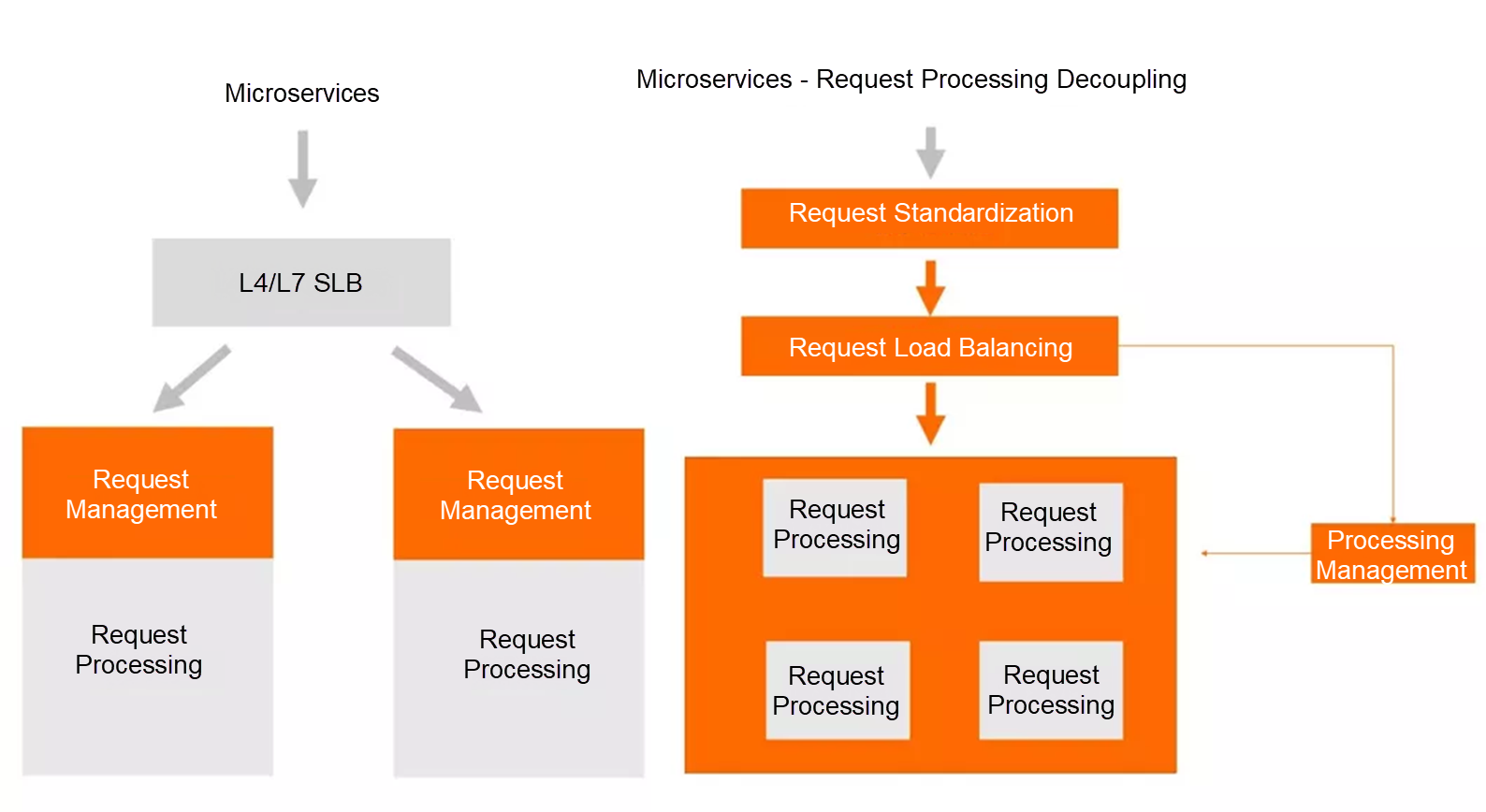

A request-driven model scales dynamically based on requests and simplifies the logic of request processing. When traffic comes in, calls to a microservice are distributed to different microservice instances through a Layer 4 or Layer 7 SLB. Inside a single microservice instance process, however, there are generally two kinds of logic. The first is request management, which may be an HTTP server, a handler, or a combination of queue management and request distribution. It eventually hands the request to the second part, request processing, which is the logic that developers need to implement.

For example, Java, Go, and Python each have their own request management logic, and request management tends to be strongly coupled with request processing: a single instance contains both. In this architecture, there is no globally independent control layer that is aware of requests and can manage traffic. Only the processing layer inside each instance understands the requests. Even if a microservice instance is overloaded, it is difficult for SLB to forward the request to another instance. A request-driven system therefore needs to separate these two elements, decoupling request management from request processing.

As shown in the figure, a request from an external system is first standardized by an adapter. The standardized request is then placed in the request load balancer, which understands the semantics of the request and can dispatch it to a processing unit. When there are not enough processing units, the manager scales out. When there are too many, it scales in. This dynamic management reduces costs.
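The flow of adapter, request-aware queue, and scaling manager can be sketched as follows. This is an illustration under stated assumptions and not how Function Compute or SAE work internally: the `adapt` function, the `RequestDrivenPool` class, and the sizing rule (one processing unit per `per_unit_capacity` pending requests) are hypothetical.

```python
import math
from collections import deque


def adapt(raw):
    """Adapter: turn a source-specific event into one standard request shape."""
    return {"path": raw.get("path") or raw.get("topic", "/"), "body": raw.get("body", "")}


class RequestDrivenPool:
    """Queues standardized requests and sizes the pool of processing units to match."""

    def __init__(self, per_unit_capacity=2, min_units=0, max_units=10):
        self.queue = deque()
        self.per_unit_capacity = per_unit_capacity
        self.min_units = min_units
        self.max_units = max_units
        self.units = min_units

    def submit(self, raw):
        self.queue.append(adapt(raw))

    def rescale(self):
        """Manager: scale out or in according to the number of pending requests."""
        wanted = math.ceil(len(self.queue) / self.per_unit_capacity)
        self.units = max(self.min_units, min(self.max_units, wanted))
        return self.units

    def drain(self, handler):
        """Dispatch every pending request to the developer-supplied handler."""
        results = []
        while self.queue:
            results.append(handler(self.queue.popleft()))
        return results


pool = RequestDrivenPool(per_unit_capacity=2)
for i in range(5):
    pool.submit({"path": "/orders", "body": str(i)})
print(pool.rescale())                       # 3 units for 5 pending requests
print(pool.drain(lambda req: req["body"]))  # the handler only sees standardized requests
print(pool.rescale())                       # 0 units once the queue is empty
```

The handler passed to `drain` is the only part a developer would write. Request management and scaling sit outside it, which is the decoupling the figure describes.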

Request-Driven Model:

The combination of request standardization, request routing, and processing management is consistent with the concept of Serverless. Developers do not need to care about servers and can focus on business logic. This is also how microservice systems and platform-based Serverless architectures are converging. Alibaba Cloud Function Compute (FC) and Serverless App Engine (SAE) focus on solving these problems.

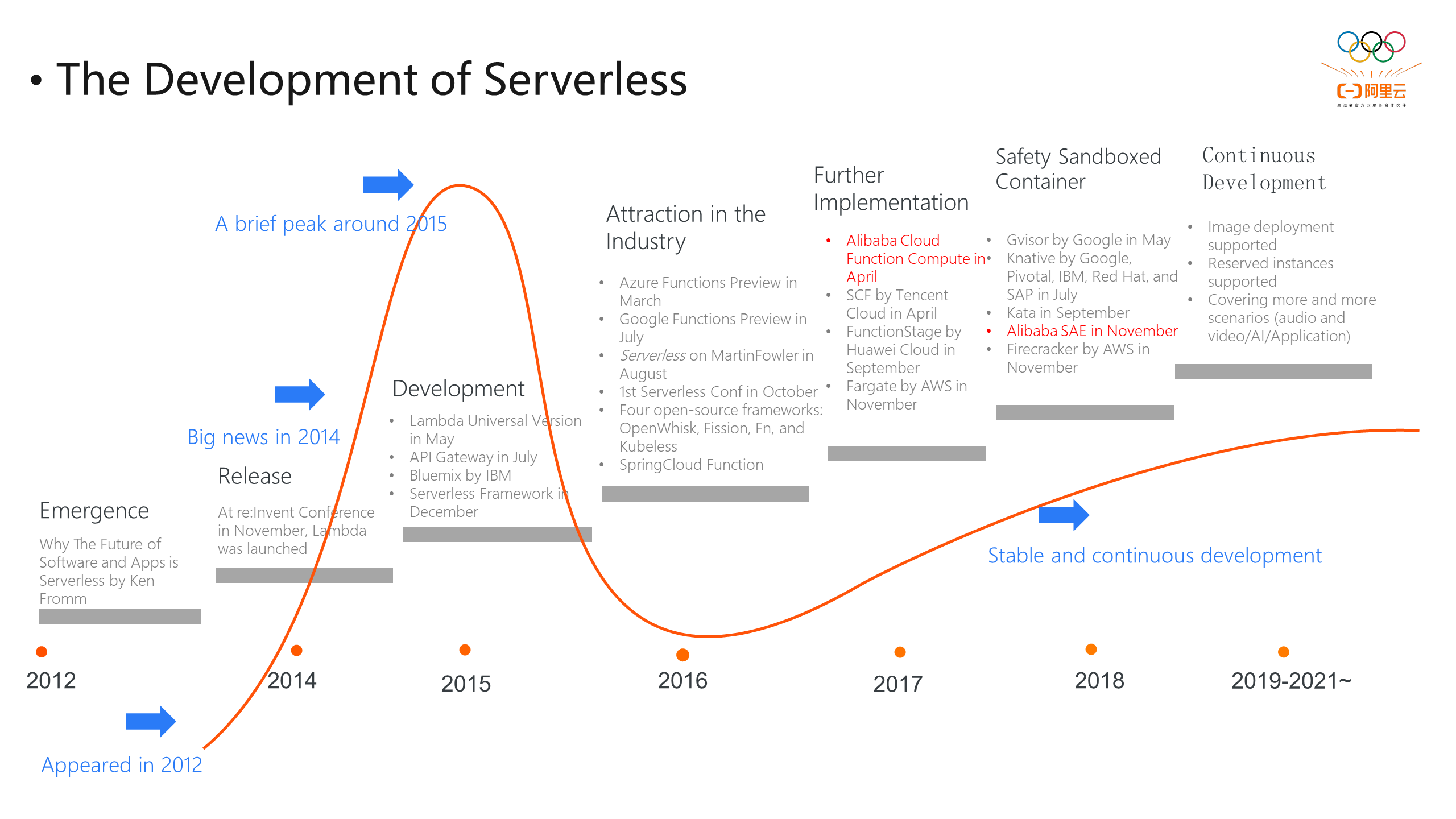

Serverless has gone through years of development, and the concept can be traced back to 2012. AWS officially launched Lambda in 2014, which started the Serverless trend. However, a quiet period followed because the development model of FC differs from the original model. FC suits some frontend applications rather than long-running applications and leans toward request-based processing. As a result, services or application architectures that need to run for a long time could not enjoy the benefits of Serverless, such as elasticity, cost reduction, and efficiency improvement.

The pain point of microservices is stability. Microservices bring many additional components, such as service discovery and other tools. The system is more complicated than in the monolithic case because the entire architecture becomes a mesh. Containers and container platforms take over the operation and maintenance of microservices to some extent, but a platform such as Kubernetes is itself complicated.

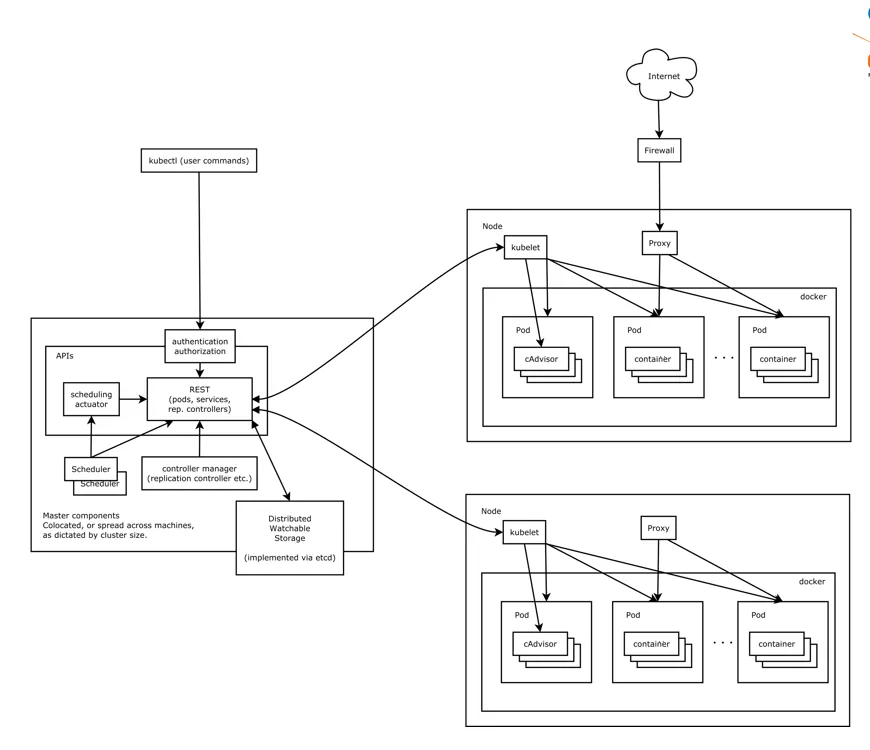

The Architecture of Kubernetes

Kubernetes is complex and has some pain points:

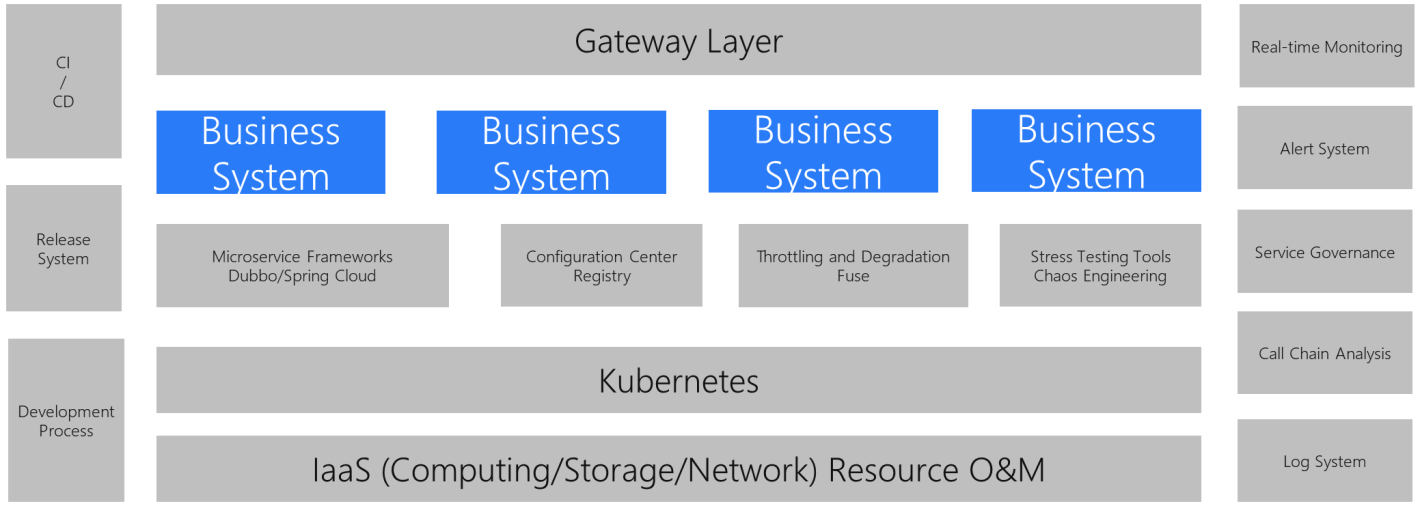

The most attractive thing for developers is that they can focus on business logic without changing their original development method. In the ideal state of microservices, developers only need to pay attention to the business system in the architecture. They do not need to care about other parts, such as the gateway, CI/CD, the release system, the inspection process, the registry, alarms and monitoring, and log analysis. The advantages are summarized below:

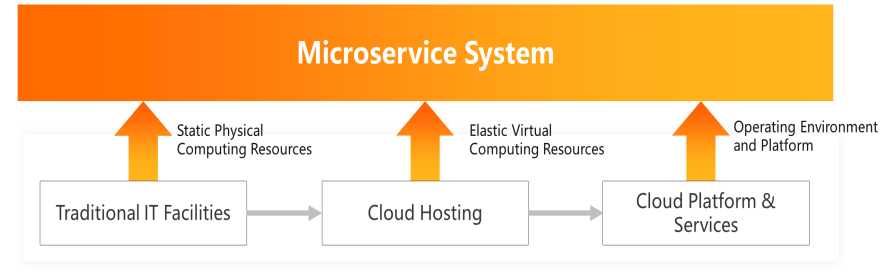

The microservice system has looked different at each stage in the development of cloud computing. In the early days, services were deployed on traditional IT facilities (such as IDCs), which provided microservices with static physical computing resources.

Then came the cloud hosting era, with products like Alibaba Cloud ECS. It provides elastic computing resources, but the deployment and O&M of services and microservices did not change substantially.

Now, we are in the cloud-native era. Cloud platforms and services can take over complex O&M operations, configuration, and management, and provide microservices with an operating environment and platform. Users only need to care about the business system and its implementation. Complex technology becomes simpler. The platform does the repetitive and tedious work on behalf of users, which is in line with the overall direction of computer technology.