By Chen Tao

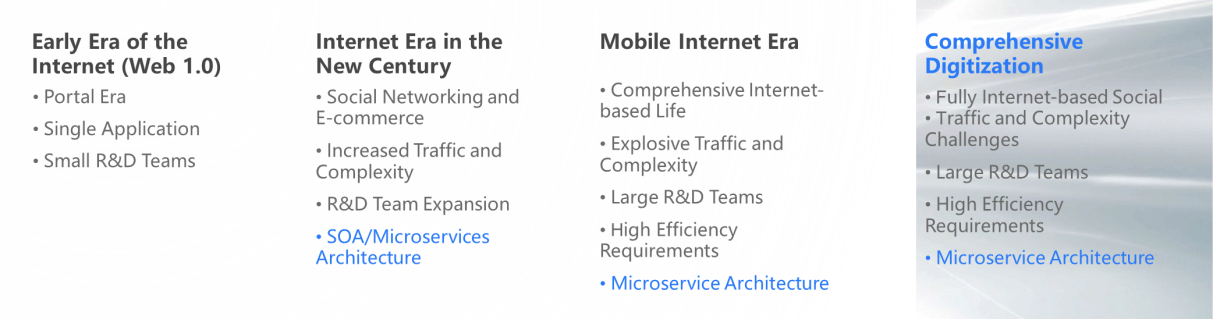

In the early Internet era (Web 1.0), the web consisted mainly of portal sites. Monolithic applications were the mainstream, and R&D teams were relatively small. The challenges at that time were the complexity of the technology and a shortage of engineers.

In the Internet era of the new century, large-scale applications such as social networking and e-commerce emerged, and both traffic and business complexity increased dramatically. R&D teams of hundreds or even thousands of people appeared, and as teams grew, collaboration became a problem. SOA was a product of this period. Its core lies in distribution and splitting. However, it relied on single-point components such as the ESB and never became widely popular. The technologies Alibaba introduced at that time, such as HSF and the open-source Dubbo, were distributed solutions that already embodied the concept of microservice architecture.

The name "microservice architecture" was formally coined in the mobile Internet era. By then, daily life had moved online and all kinds of essential everyday apps had emerged. The number of Internet users and the volume of traffic had grown significantly compared with the previous era, and large R&D teams had become mainstream. As a result, the pursuit of efficiency became widespread, and it was no longer only tech giants that needed this technology. Microservice frameworks such as Spring Cloud and Dubbo did much to popularize microservice technologies.

Now we have entered the era of comprehensive digitalization. Society as a whole runs on the Internet, and organizations of all kinds, including government agencies and relatively traditional enterprises, need strong R&D capabilities. The challenges of traffic, business complexity, and growing R&D teams have raised the demand for efficiency further, and microservice architecture has spread even more widely as a result.

After all these years, microservice architecture has proven to be an enduring technology. Why has it kept developing?

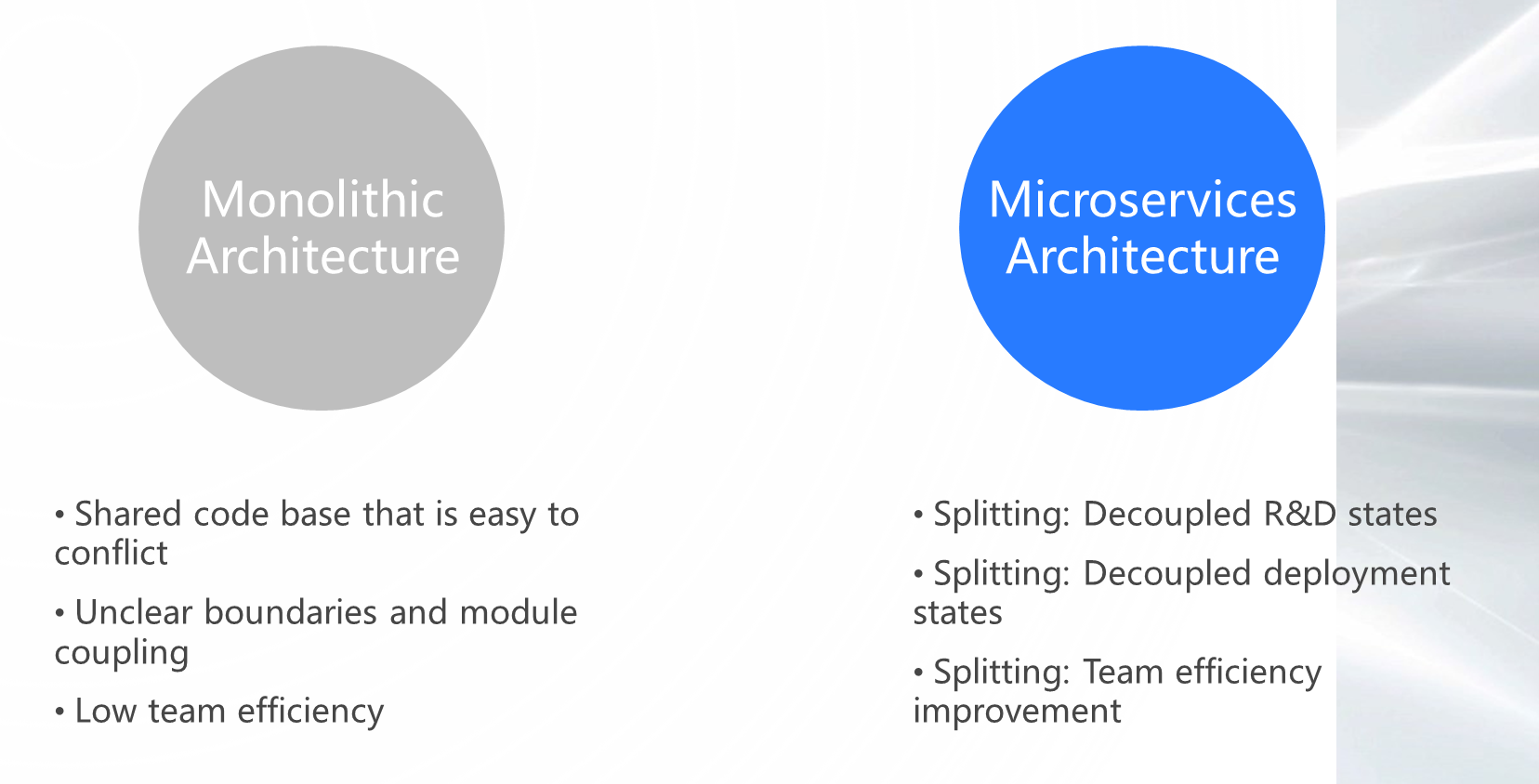

Let's review the differences between microservice architecture and monolithic architecture and the core advantages of microservice architecture:

The core problem with monolithic architecture is that the conflict domain is too large: everyone shares one code base, so development is particularly prone to conflicts. Module boundaries and sizes are also unclear, which reduces team efficiency.

The core of microservice architecture is splitting, which decouples services both at development time and at deployment time and significantly improves the team's R&D efficiency. This is one reason microservice architecture continues to evolve.

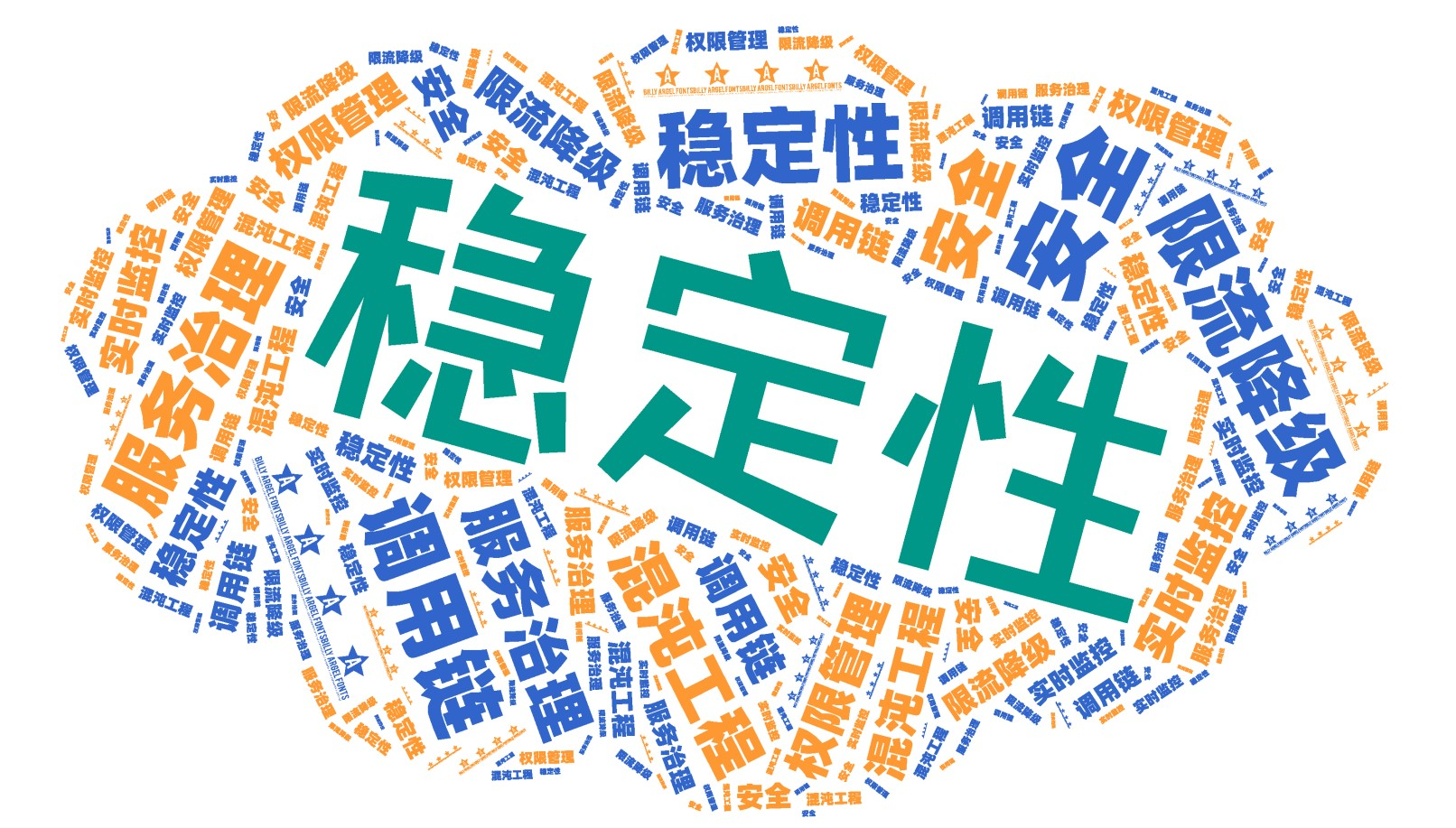

According to the law of conservation of complexity, solving one problem makes it reappear in another form, where it has to be solved again. The microservice era has introduced many pain points of its own, and the core one is stability. Once local calls become remote calls, stability can degrade, and the effect can be amplified as calls fan out. In other words, problems in the underlying remote calls introduce instability in the upper layers. Dealing with this requires throttling, degradation, and tracing.
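
To make throttling and degradation concrete, here is a minimal sketch of a token-bucket throttle that falls back to a degraded response. It is an illustration only, not how SAE or any particular framework implements it; `TokenBucket`, `call_with_fallback`, and the rate values are hypothetical names chosen for this example.

```python
import time


class TokenBucket:
    """Token-bucket throttle: bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def try_acquire(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def call_with_fallback(bucket, remote_call, fallback):
    """Throttle the remote call; degrade to `fallback` when throttled or when the call fails."""
    if not bucket.try_acquire():
        return fallback()
    try:
        return remote_call()
    except Exception:
        return fallback()
```

A caller would wrap each remote invocation, for example `call_with_fallback(bucket, fetch_price, lambda: cached_price)`, so that an overloaded or failing downstream service yields a degraded answer instead of an error that propagates up the chain.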

The complexity of locating a problem also grows exponentially in the microservice era, which may call for service governance. In addition, without careful design and upfront planning, the number of microservice applications may explode, and collaboration between R&D and testing staff can become an issue as well.

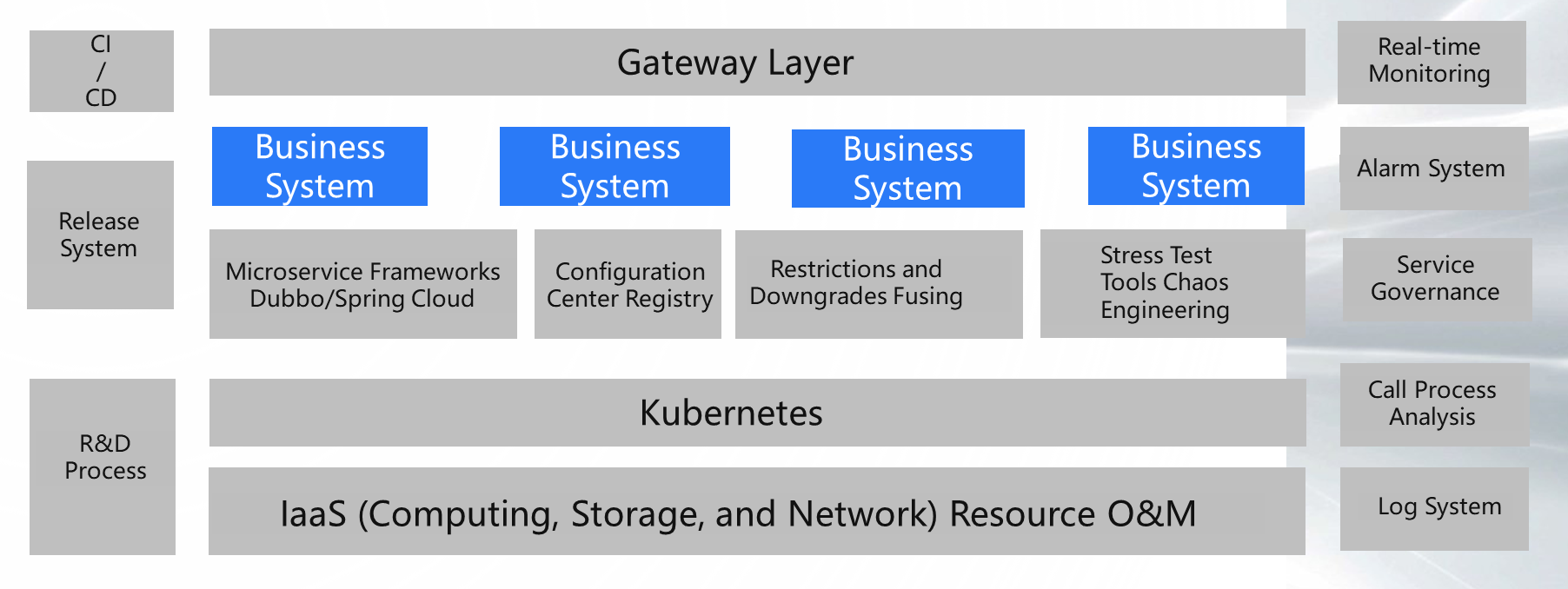

After all these years of development, some solutions already exist in the industry.

As shown in the figure above, in addition to developing their own business systems, enterprises may have to build multiple supporting systems, including CI/CD, a release system, the R&D process, tools related to microservice components, real-time monitoring, alerting, service governance, and call chain tracing for observability. Underneath all of this, IaaS resources must be operated and maintained as well. Today, you may also need to maintain a Kubernetes cluster to manage IaaS resources better.

In this context, many enterprises set up an O&M team or a middleware team, or ask backend R&D engineers to take on the work. However, how many enterprises are satisfied with the systems they have built internally? How efficiently do those systems iterate? Have they run into problems with open-source components, and were they able to solve them? These are persistent pain points in the minds of enterprise CTOs and architects.

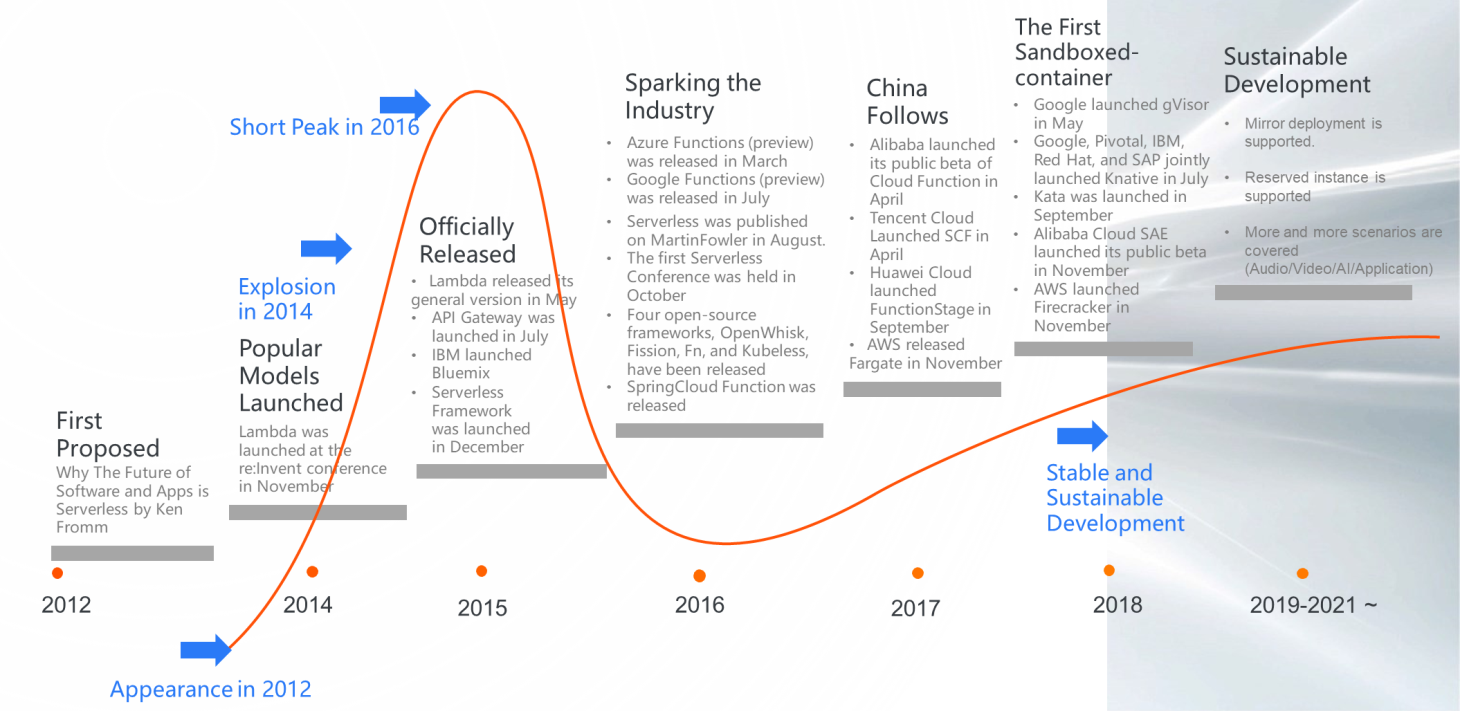

Serverless was first proposed in 2012. In 2014, it briefly reached a peak of influence after the release of the breakout product Lambda. However, when something new suddenly enters real, complex production environments, it turns out to be ill-suited in many ways and needs improvement. As a result, Serverless went through a slump for a few years.

However, the Serverless idea of "leave the simplicity to the user and the complexity to the platform" is the right direction, and the open-source community and the industry have continued to explore and develop Serverless.

Alibaba Cloud launched Function Compute (FC) in 2017 and Serverless Application Engine (SAE) in 2018. Since 2019, Alibaba Cloud has continued to invest in Serverless, supporting image deployment, reserved capability, and microservice scenarios.

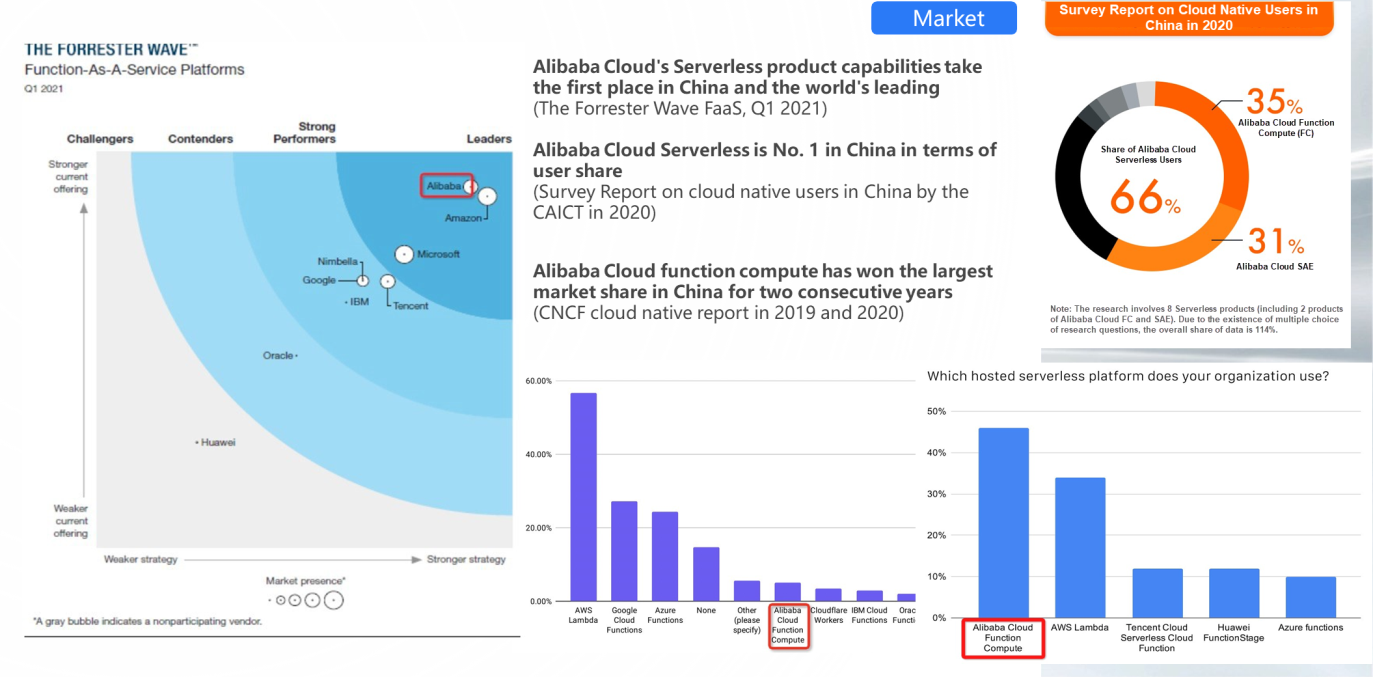

In the latest Forrester evaluation (2021), Alibaba Cloud's Serverless product capabilities ranked first in China and globally. Alibaba Cloud also had the largest share of Serverless users in China. This shows that Alibaba Cloud Serverless is increasingly being used in enterprises' real production environments, and that more enterprises recognize the capabilities and value of Serverless and of Alibaba Cloud Serverless.

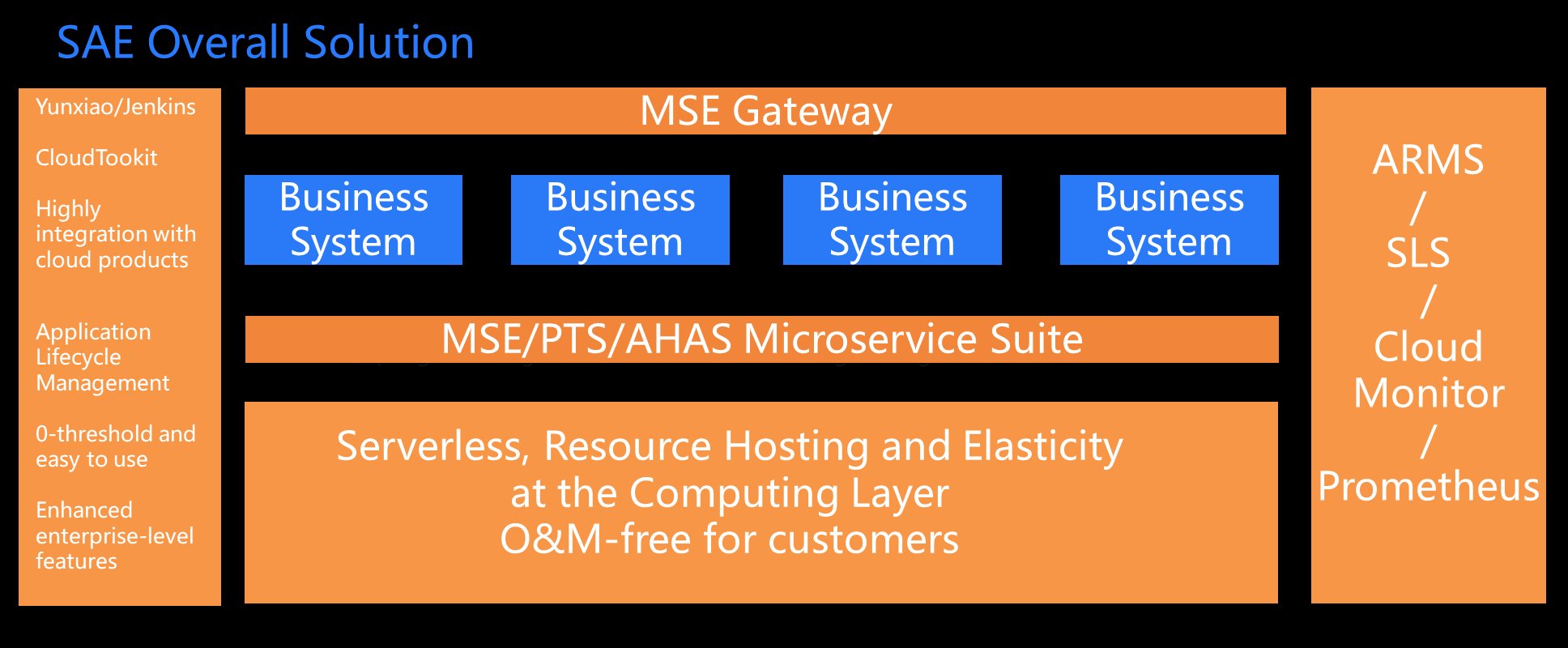

In a traditional microservice architecture, enterprises need to build many supporting solutions to make good use of microservice technologies. How does SAE solve this in the Serverless era?

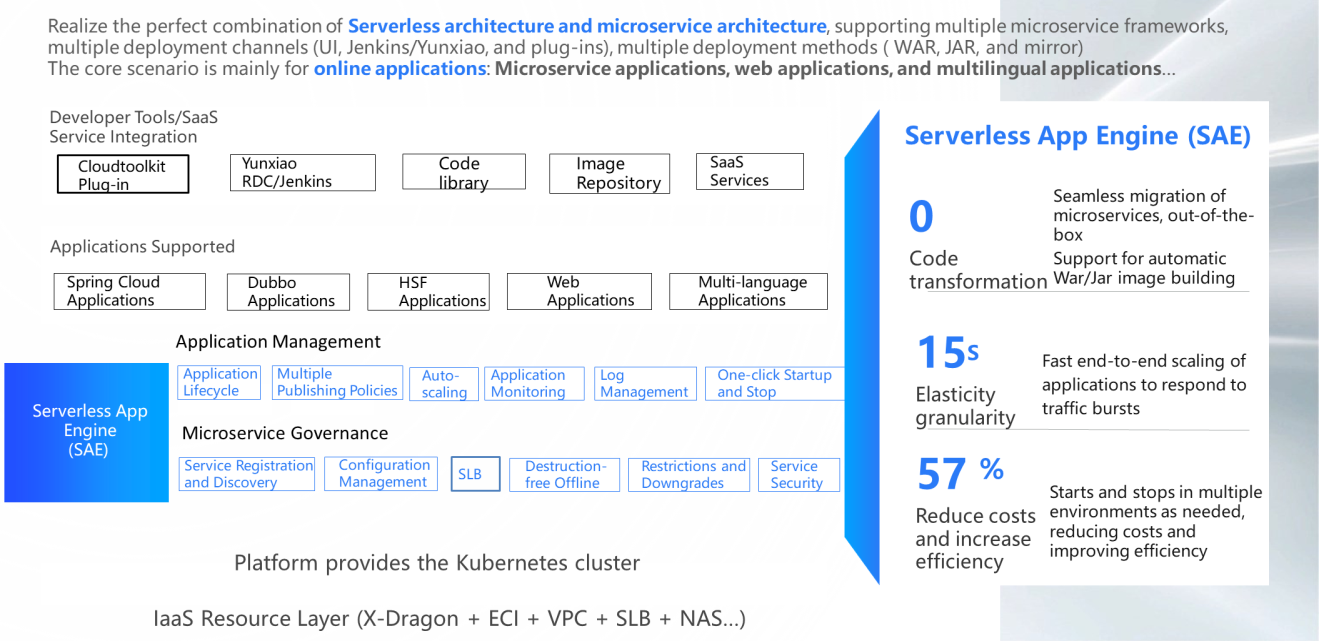

SAE follows the Serverless idea thoroughly. It hosts IaaS resources along with the Kubernetes layer on top of them, and integrates a GUI-based ("white-screen") PaaS with enterprise-level suites for microservices and observability. Together these give enterprises and developers an out-of-the-box, easy-to-use microservice solution.

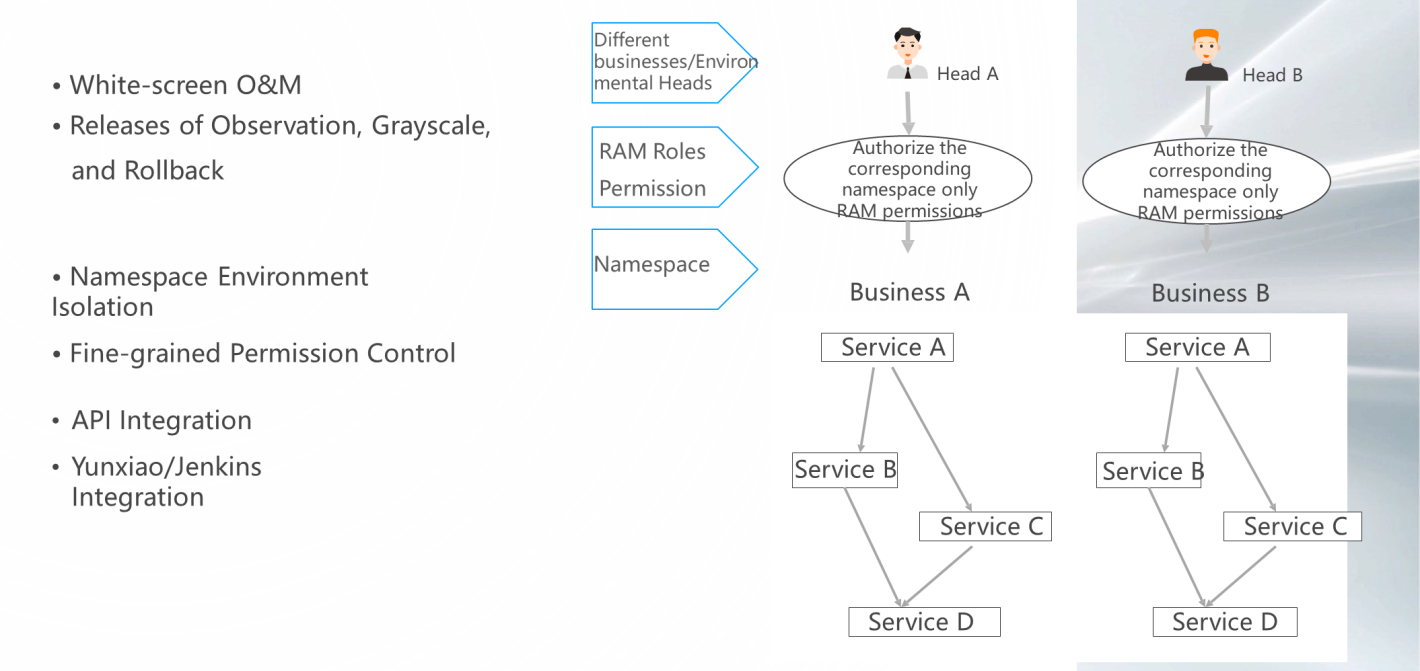

As shown in the figure, SAE provides users with a white-screen operations console at the top. Its design is in line with the PaaS systems enterprises commonly use, such as release systems or open-source PaaS platforms, which significantly lowers the threshold for getting started with SAE. Some would say there is no threshold at all. It also integrates some of Alibaba's best practices for releases, such as release observability, grayscale release, and rollback.

Moreover, it provides several enterprise-level enhancements, including environment isolation through namespaces and fine-grained permission control. As shown in the figure, two independent modules within an enterprise can be isolated from each other by namespace.

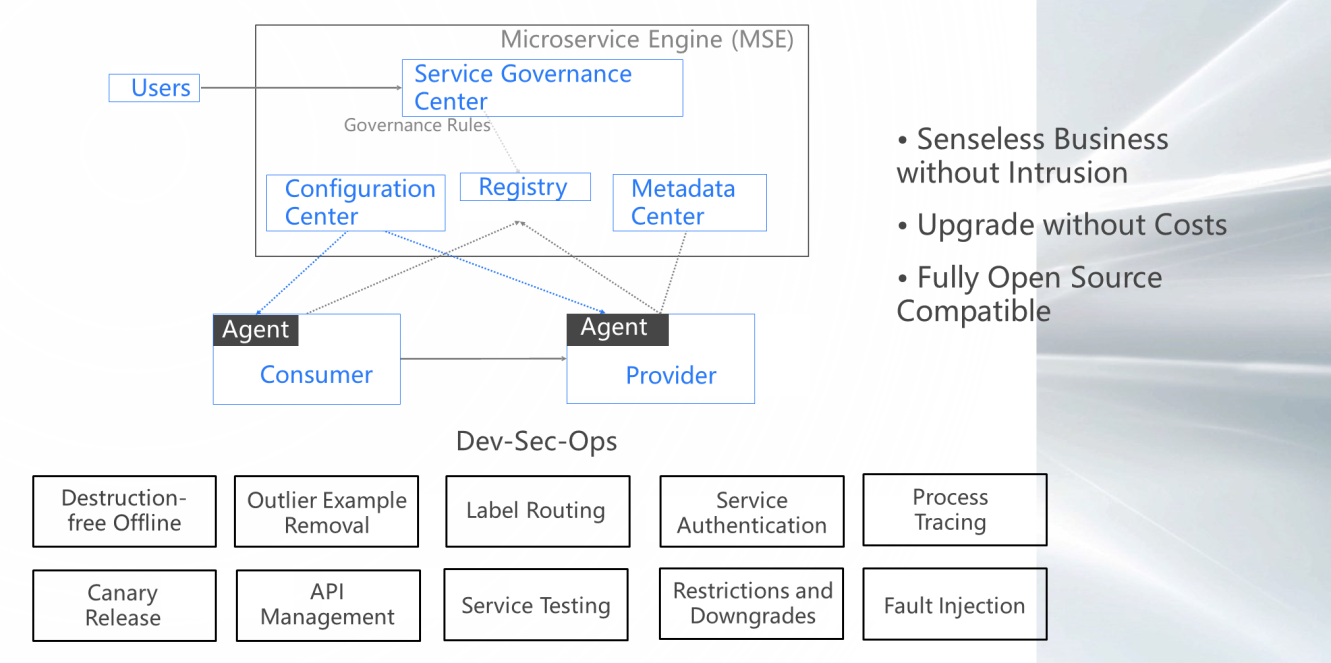

For microservice governance, especially in Java, SAE uses an agent, which means no code intrusion, no user-visible impact, and no upgrade effort for users. The agent is also fully compatible with open-source frameworks, so users can get graceful (lossless) service shutdown, API management, throttling and degradation, and tracing with almost no modifications.

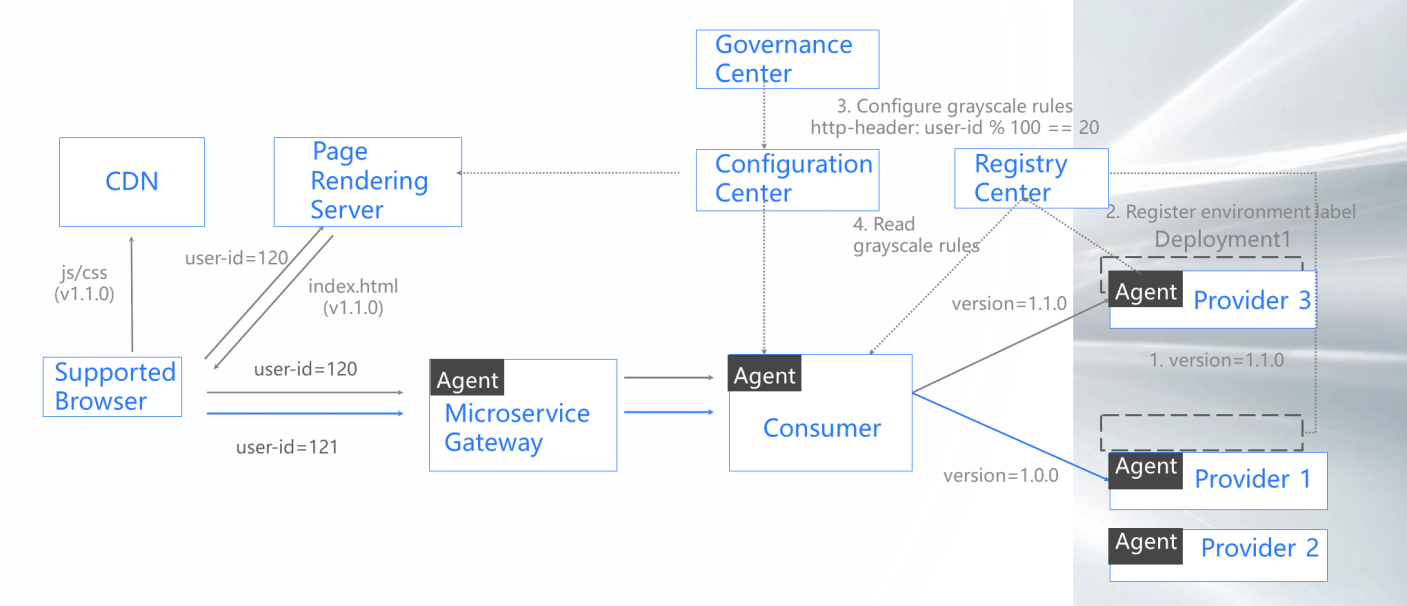

Here we expand on two of these capabilities. The first is end-to-end grayscale release across frontend and backend. With the help of the agent described above, SAE connects the whole path, from the web request to the gateway, to the consumer, to the provider, so that users can implement a grayscale release with simple white-screen configuration. Any enterprise that has tried to build this on its own will know how much complexity is involved.
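
The idea behind end-to-end grayscale can be sketched as tag propagation: a request carries a gray tag, and every hop prefers instances with the same tag, falling back to the baseline version when a service has no gray instance. This is a simplified illustration, not SAE's implementation; the header name `x-gray-tag`, the registry layout, and the instance names are all hypothetical.

```python
def pick_instances(instances, tag):
    """Prefer instances carrying the request's tag; fall back to untagged (baseline) ones."""
    tagged = [i for i in instances if i["tag"] == tag]
    return tagged or [i for i in instances if i["tag"] is None]


def route(request_headers, registry, chain):
    """Walk the call chain, propagating the gray tag to every hop."""
    tag = request_headers.get("x-gray-tag")  # hypothetical header name
    path = []
    for service in chain:
        candidates = pick_instances(registry[service], tag)
        path.append(candidates[0]["name"])
    return path


# Hypothetical registry: the gateway has no gray version, the other two services do.
registry = {
    "gateway": [{"name": "gateway-base", "tag": None}],
    "consumer": [
        {"name": "consumer-base", "tag": None},
        {"name": "consumer-gray", "tag": "gray"},
    ],
    "provider": [
        {"name": "provider-base", "tag": None},
        {"name": "provider-gray", "tag": "gray"},
    ],
}
chain = ["gateway", "consumer", "provider"]

gray_path = route({"x-gray-tag": "gray"}, registry, chain)
base_path = route({}, registry, chain)
```

The hard part in practice is keeping the tag attached across every protocol and thread boundary along the chain, which is the work the agent takes off the user's hands.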

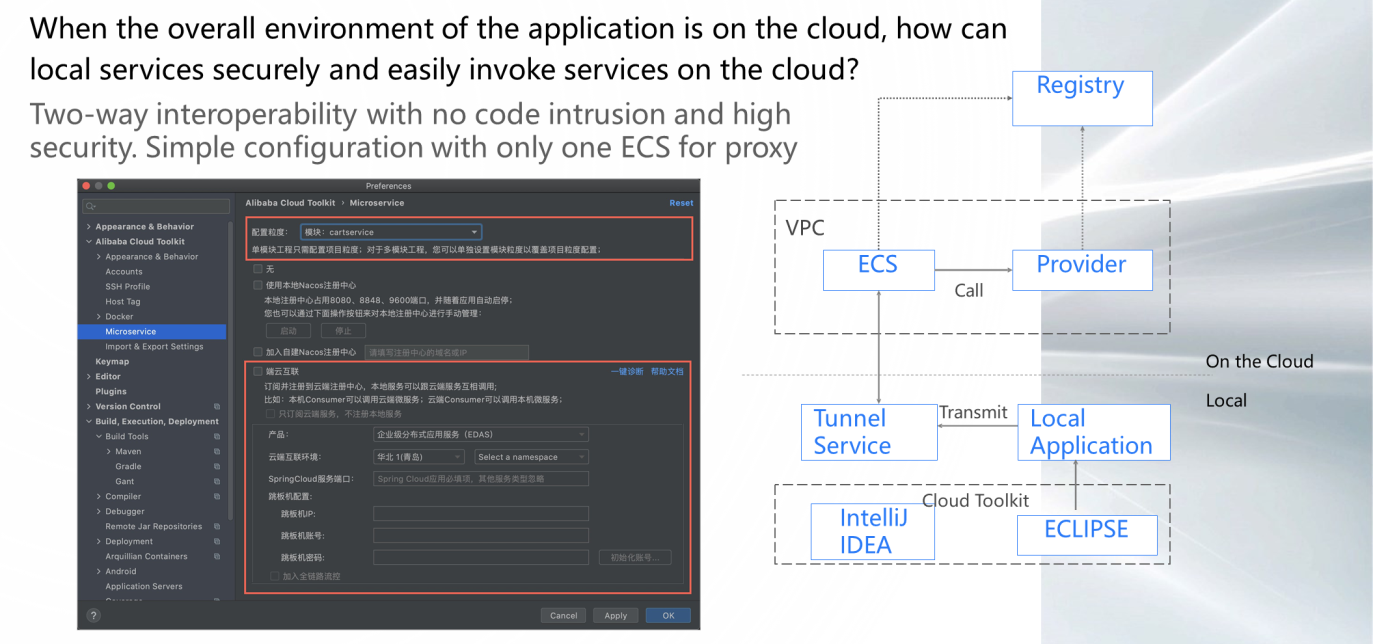

The second capability is joint debugging based on CloudToolkit. As we all know, the number of applications in a microservice scenario tends to explode. If local development required launching all of them, how could we debug a single service in the cloud safely and conveniently? CloudToolkit lets users connect their local environment to the cloud and debug local and cloud services together, which significantly lowers the barrier for development and testing.

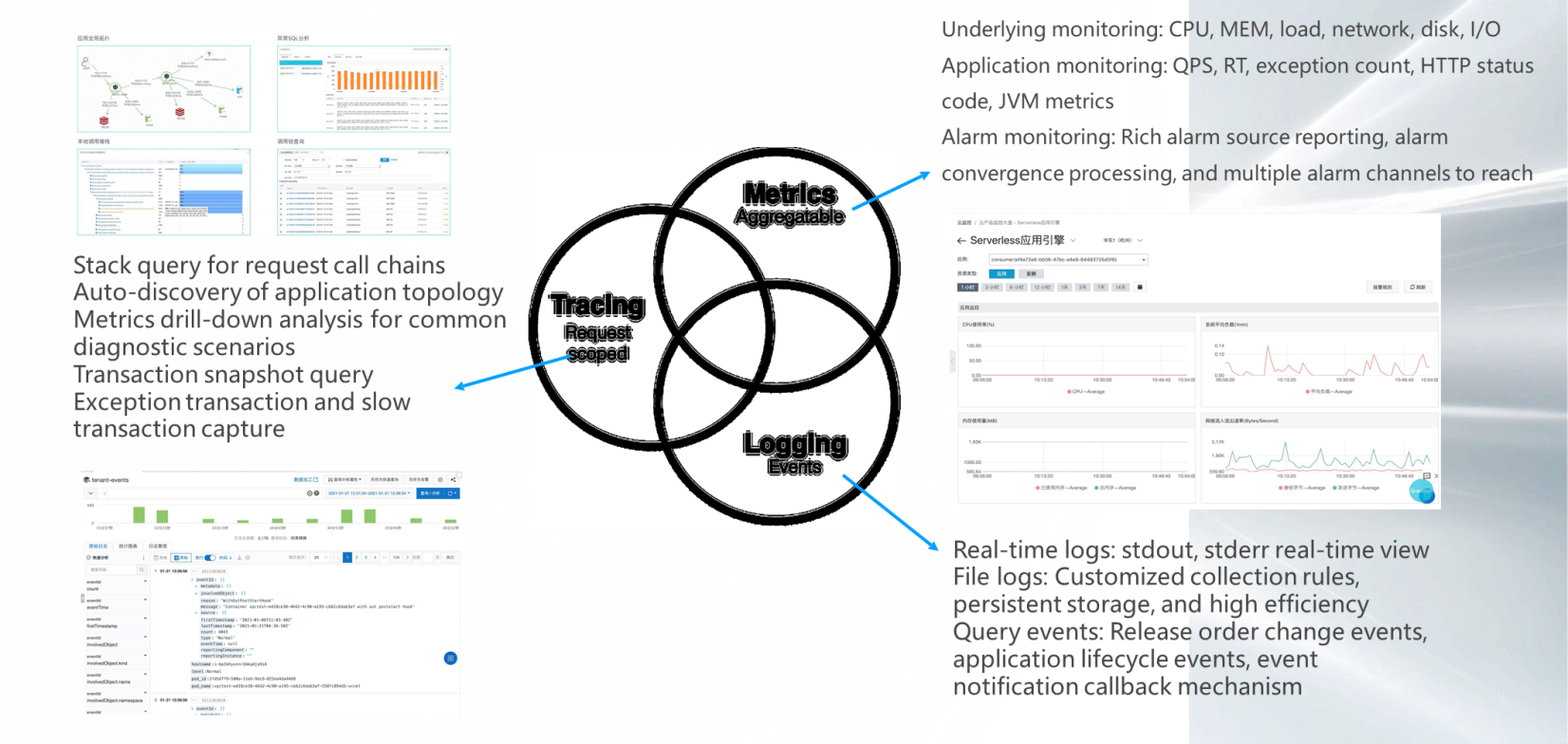

In microservice scenarios, services multiply quickly and the number of call paths grows dramatically, so locating a problem when something goes wrong is very complicated. SAE integrates various Alibaba Cloud observability products, including Prometheus, IaaS, SLS, and underlying monitoring. It also provides a wide range of solutions across tracing, logging, and metrics, including request trace queries, analysis of common metrics for diagnosis, underlying monitoring, real-time logs, and event notifications. All of these reduce the day-to-day troubleshooting burden for enterprises running microservices.

So far, we have covered three parts: zero-threshold PaaS, enterprise-level suites for microservices, and observability. Next, we describe another core part of Serverless: O&M-free infrastructure at the IaaS level, and elasticity.

The SAE business architecture diagram shows clearly that users do not need to care about IaaS resources, including storage and network. SAE also hosts Kubernetes, a component of the PaaS layer, so users do not need to maintain Kubernetes themselves. On top of Kubernetes, SAE provides enhanced capabilities such as microservice governance and application lifecycle management. In addition, SAE offers elasticity at the 15-second level, which helps developers deal with burst traffic in many enterprise-level scenarios. Cost reduction and efficiency gains can also be achieved through multiple environments and some best practices.

How does SAE host IaaS and Kubernetes resources in a way that is maintenance-free and imperceptible to users?

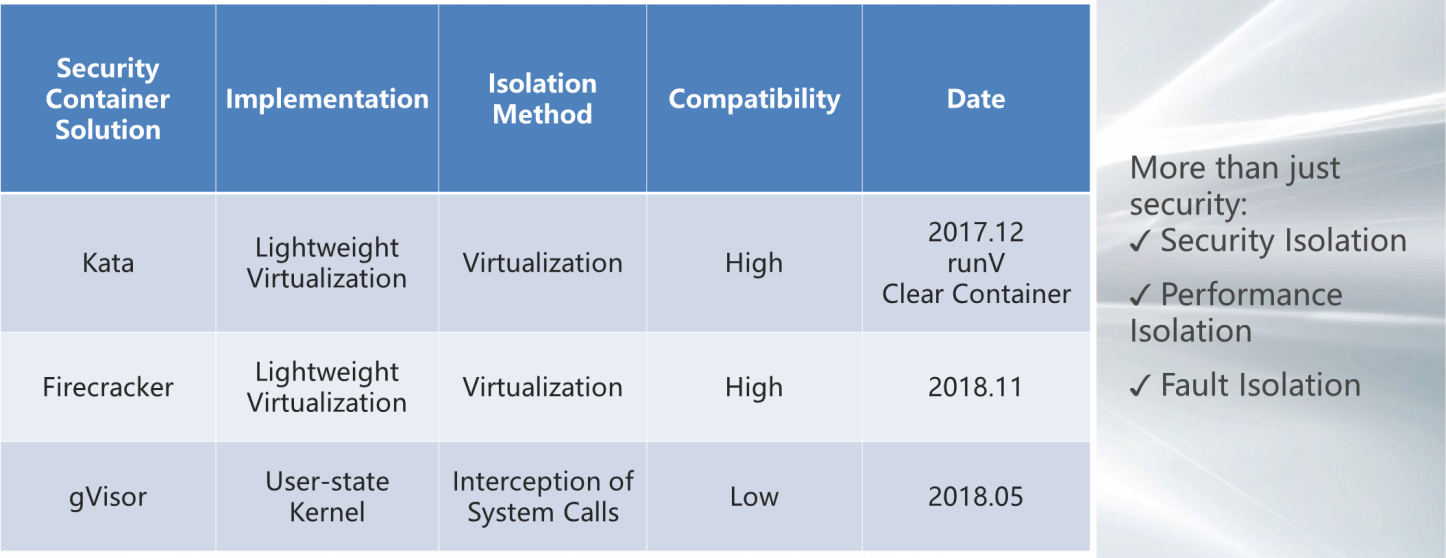

As shown in the preceding figure, SAE uses secure container technology at the underlying layer. Compared with Docker, secure containers are a security solution at the virtual machine level. With RunC, containers share a kernel, so on a public cloud it is possible for user A to break into one of user B's containers, which is a security risk. Secure container technology, which builds on virtual machine security techniques, achieves production-grade isolation. Secure containers also plug into Kubernetes and the container ecosystem, so combining the two strikes a better balance between security and efficiency.

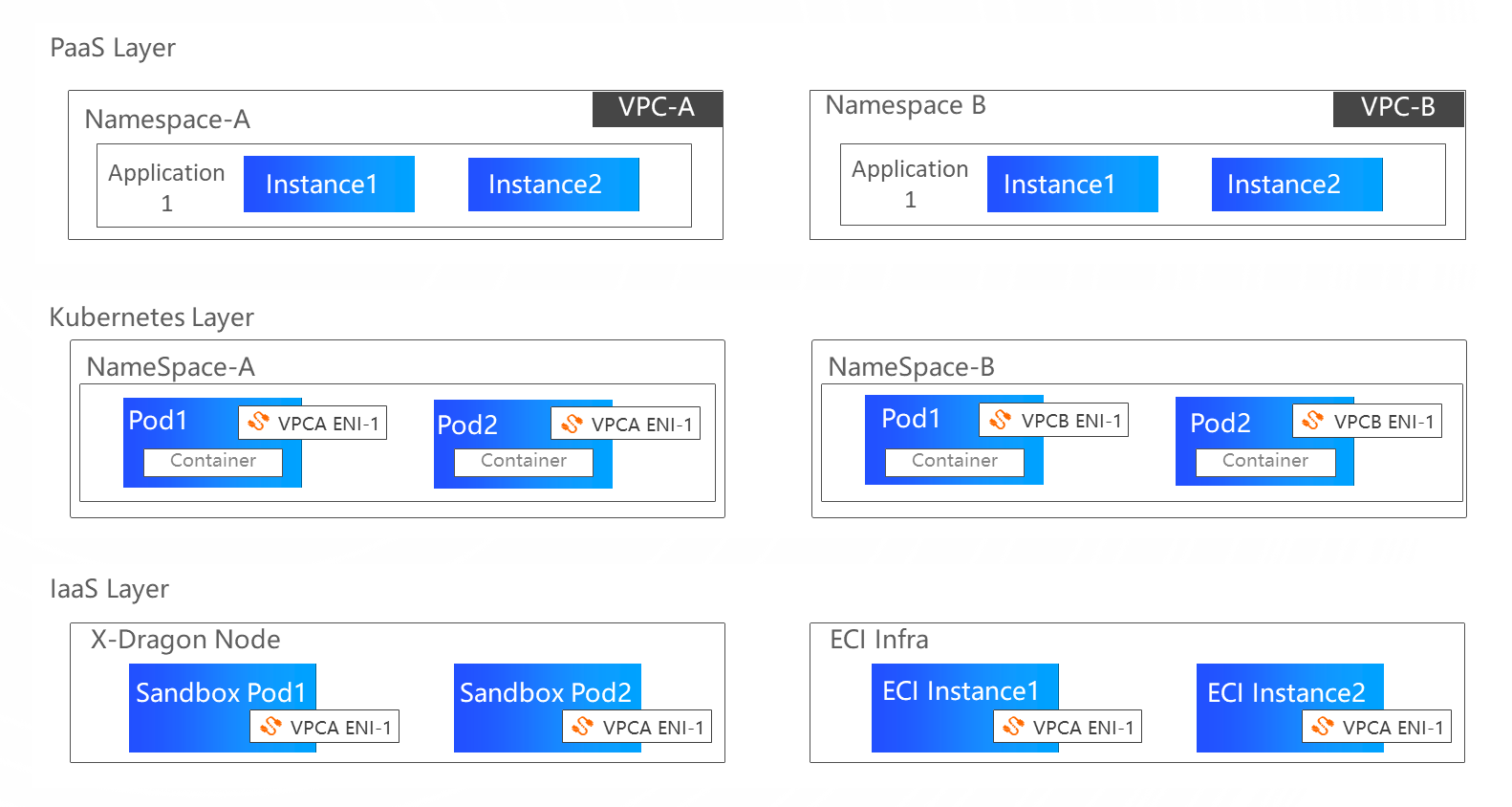

In terms of storage and network isolation, SAE has to handle network isolation as in traditional Kubernetes, and it also has to connect to the storage and network resources that most users already have on the public cloud.

SAE uses the cloud's ENI (elastic network interface) technology and passes the ENI directly into the sandbox. This isolates the computing layer while keeping the network layer connected.

The mainstream secure container technologies are Kata, Firecracker, and gVisor. SAE uses Kata, the earliest and most mature of them, to implement security isolation at the computing layer. Beyond security isolation, secure containers also provide performance isolation and fault isolation.

Here is a more concrete example. In a RunC shared-kernel scenario, a kernel failure caused by one user's container can affect the physical machine directly. With SAE's secure containers there is no such risk: at most, the failure affects that one secure container.

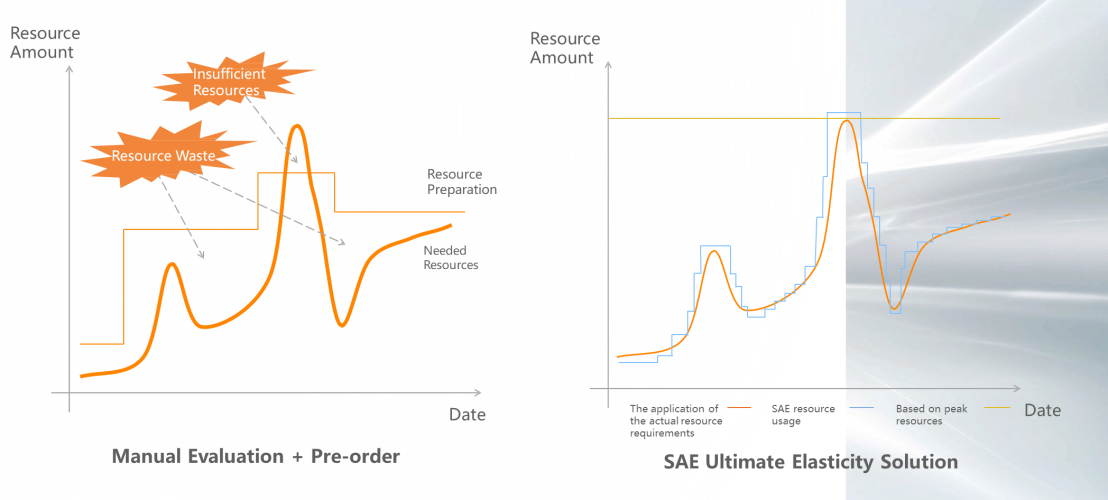

As the figure shows, when elastic efficiency is pushed to the extreme, user cost can be pushed to the minimum as well. Comparing the charts on the left and the right gives a better sense of how elasticity affects user cost.
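
A small worked example shows why elasticity drives cost. The numbers below are hypothetical, not SAE measurements: an hourly load profile with one spike, served either by a fixed fleet sized for the peak or by a fleet that follows demand hour by hour.

```python
import math


def instance_hours(load_per_hour, capacity_per_instance, fixed=False):
    """Instance-hours consumed when serving the given hourly load profile."""
    needed = [math.ceil(load / capacity_per_instance) for load in load_per_hour]
    if fixed:
        # A fixed fleet must be sized for the peak and runs the whole time.
        return max(needed) * len(needed)
    # An elastic fleet only runs what each hour actually needs.
    return sum(needed)


load = [100, 100, 800, 100]  # hypothetical requests per second, one value per hour
fixed_cost = instance_hours(load, capacity_per_instance=100, fixed=True)
elastic_cost = instance_hours(load, capacity_per_instance=100)
```

With this profile the fixed fleet consumes 32 instance-hours and the elastic one 11. The faster the platform can scale, the closer real usage gets to the elastic figure, because less headroom has to be kept running ahead of a spike.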

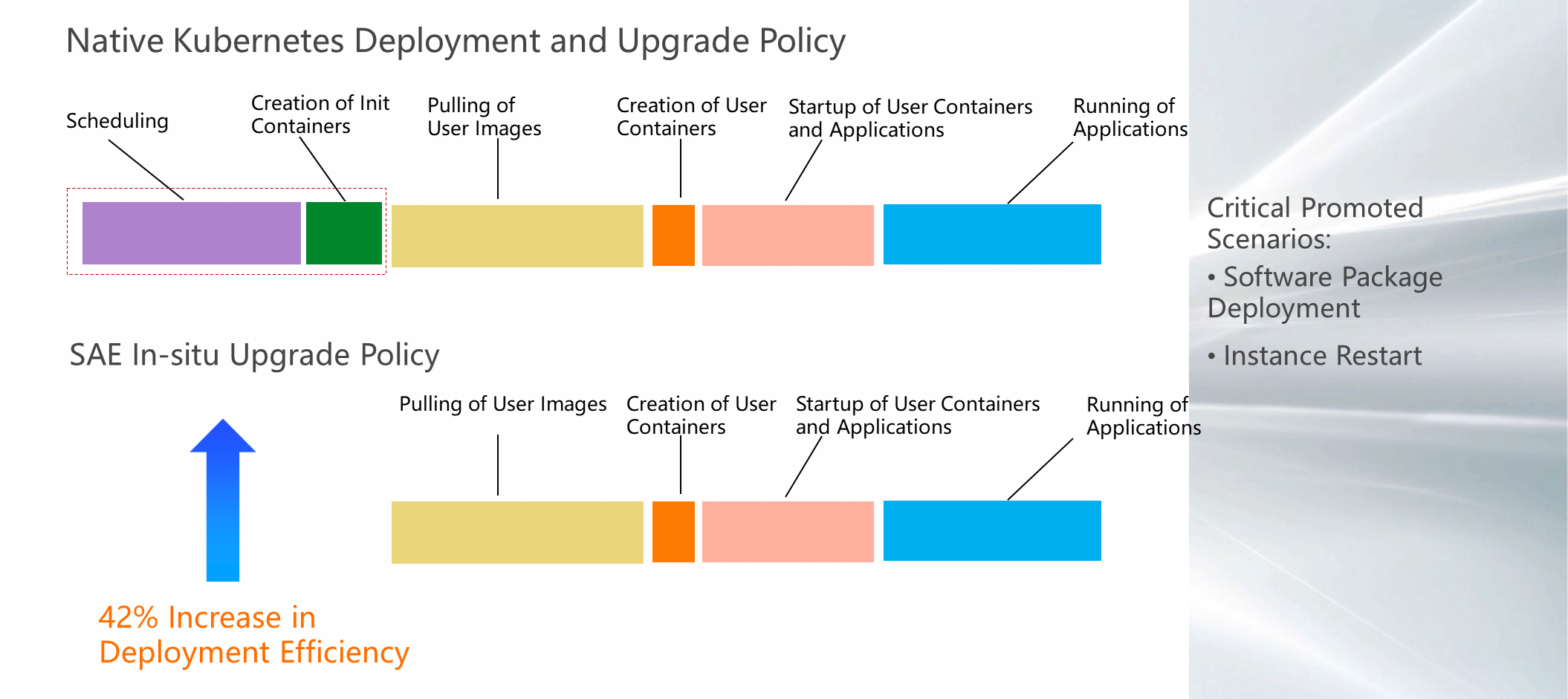

What has SAE done in terms of elasticity? In traditional Kubernetes, creating a Pod involves scheduling, creating the init containers, pulling the user image, creating the user container, starting the user container, and running the application. Although this conforms to the design and specification of Kubernetes, it is not efficient enough for some production scenarios with enterprise-level requirements. With the in-place update policy of Alibaba's open-source CloneSet component, SAE does not need to rebuild the entire Pod. It only rebuilds the containers inside the Pod, which skips scheduling and the creation of init containers and increases deployment efficiency by 42%.
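
The effect of skipping stages can be modeled in a few lines. The stage names follow the text above, but the durations are hypothetical placeholders chosen for easy arithmetic; they are not SAE measurements and are not meant to reproduce the 42% figure.

```python
# Stage names follow the text; the durations (seconds) are hypothetical.
POD_CREATION_STAGES = [
    ("schedule", 2.0),
    ("create init containers", 3.0),
    ("pull user image", 5.0),
    ("create user container", 1.0),
    ("start user container", 1.0),
    ("run application", 8.0),
]

# Stages the text says an in-place update does not repeat.
SKIPPED_IN_PLACE = {"schedule", "create init containers"}


def total_seconds(stages):
    """Sum the durations of a list of (name, seconds) stages."""
    return sum(seconds for _, seconds in stages)


def in_place_stages(stages):
    """Keep only the stages that still run during an in-place update."""
    return [(name, seconds) for name, seconds in stages if name not in SKIPPED_IN_PLACE]


full = total_seconds(POD_CREATION_STAGES)
in_place = total_seconds(in_place_stages(POD_CREATION_STAGES))
saved_fraction = (full - in_place) / full
```

Under these made-up durations the in-place path takes 15 of the original 20 seconds. The real saving depends on how long scheduling and init containers take in a given cluster.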

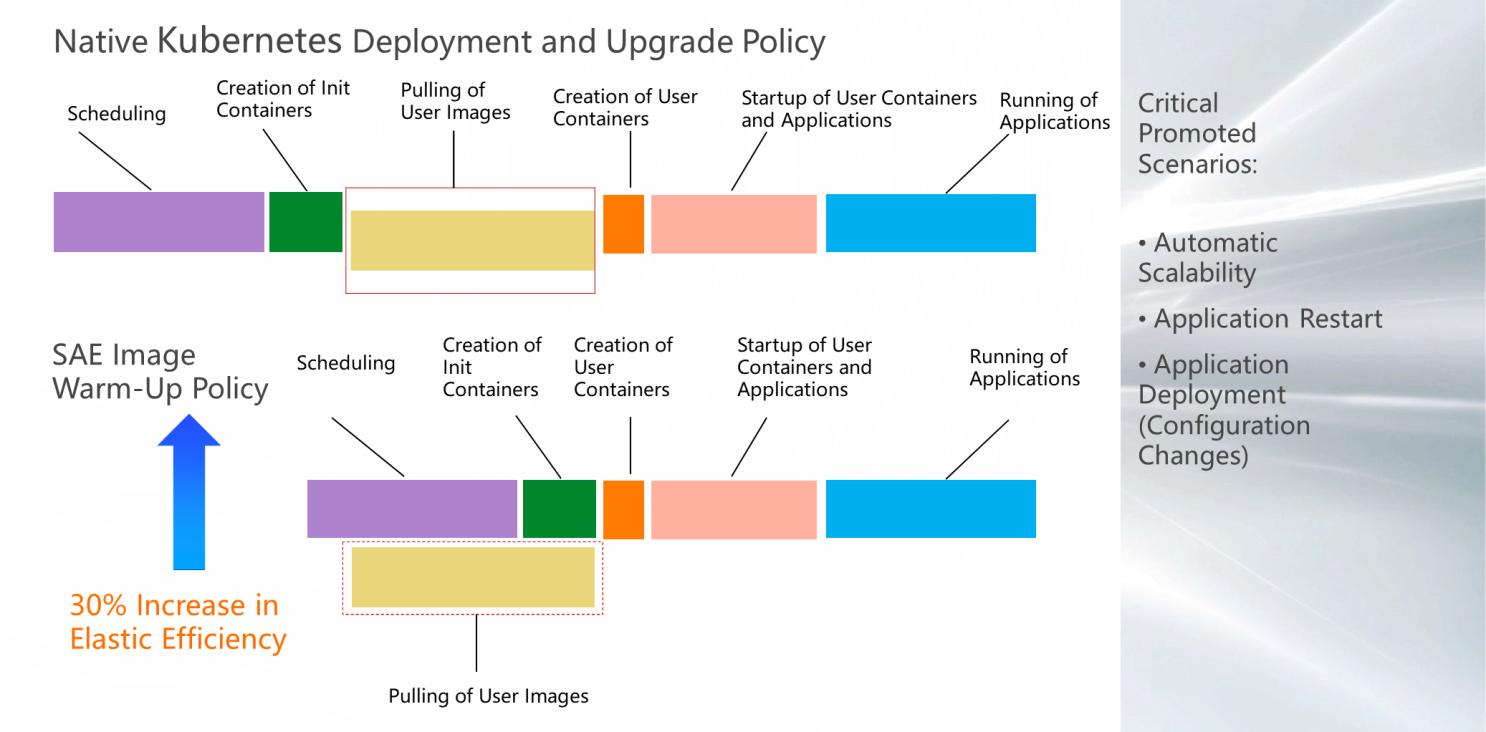

SAE also runs scheduling and image pulling in parallel. In the standard flow, scheduling a Pod and pulling the user image are serial steps. SAE optimizes this: once it recognizes that the Pod is about to be scheduled onto a particular physical machine, it starts pulling the user's image in parallel. This improves elastic efficiency by 30%.
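
The serial and parallel flows can be contrasted with a small sketch. It assumes the target node is already known and that the remaining scheduling work (here a hypothetical `bind_pod` step) is independent of the image pull; the function names and sleep durations are placeholders, not SAE or Kubernetes APIs.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def bind_pod(node):
    """Stand-in for the rest of the scheduling work once the node is known."""
    time.sleep(0.05)
    return f"pod bound to {node}"


def pull_image(node, image):
    """Stand-in for pulling the user image onto the node."""
    time.sleep(0.05)
    return f"{image} pulled on {node}"


def start_serial(node, image):
    # Standard flow: finish scheduling first, then pull the image.
    return [bind_pod(node), pull_image(node, image)]


def start_parallel(node, image):
    # Optimized flow: begin pulling as soon as the target node is known.
    with ThreadPoolExecutor(max_workers=2) as pool:
        bind = pool.submit(bind_pod, node)
        pull = pool.submit(pull_image, node, image)
        return [bind.result(), pull.result()]
```

Both functions return the same results. In the parallel version the total time approaches the longer of the two steps rather than their sum.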

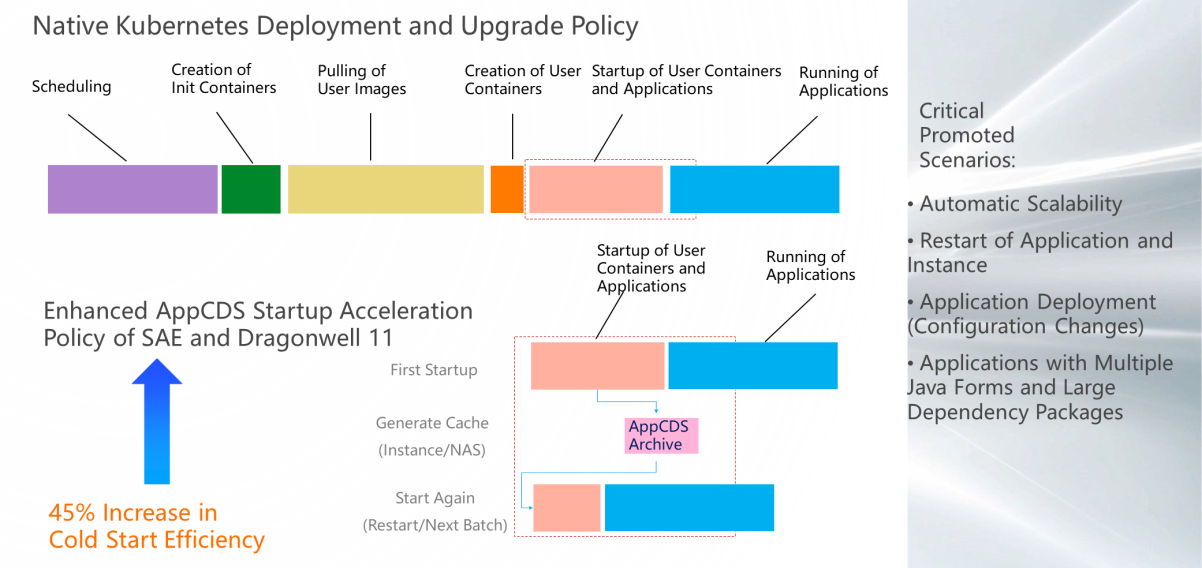

We have also improved elastic efficiency during the application startup phase. For example, slow startup has always been a pain point for Java applications in Serverless scenarios. The core reason is that Java classes have to be loaded one by one, and in some enterprise-level applications, loading thousands of classes is a relatively slow process.

SAE, in conjunction with Alibaba's open-source Dragonwell, implements the App CDS technology. When the application is launched for the first time, the loaded classes are dumped into an archive, and subsequent launches only need to load that archive. This eliminates much of the serial class loading and improves deployment efficiency by 45%.

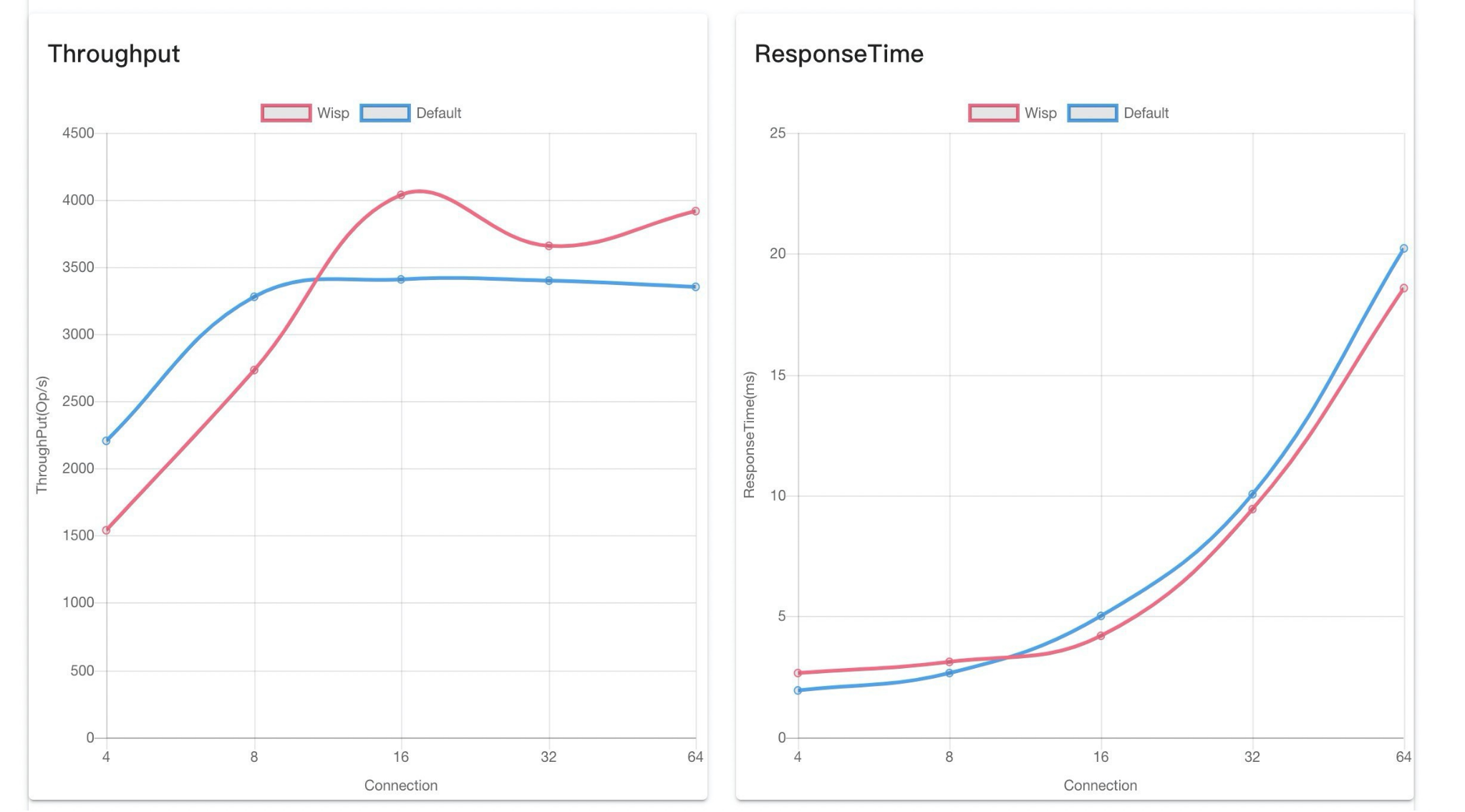

Finally, we made some elasticity enhancements for the application at runtime. Microservice applications usually configure a large number of threads, and these threads typically map to underlying Linux threads, which leads to high thread-switching overhead under high concurrency. By combining Alibaba's open-source Dragonwell with its WISP thread technology, SAE maps hundreds of upper-layer threads onto a dozen or so underlying threads, which significantly reduces thread-switching overhead.
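
As a loose analogy only (this is Python's `asyncio`, not WISP or the JVM), the following sketch shows many logical tasks sharing one operating system thread. Each task yields while it waits instead of blocking a thread of its own, which is the general idea behind mapping many upper-layer threads onto few underlying ones. The function names and the simulated wait are hypothetical.

```python
import asyncio
import threading


async def handle_request(i, seen_threads):
    # Record which OS thread this logical task runs on.
    seen_threads.add(threading.get_ident())
    # Simulated I/O wait: the task yields instead of blocking the OS thread.
    await asyncio.sleep(0.01)
    return i


async def serve(n):
    seen_threads = set()
    results = await asyncio.gather(*(handle_request(i, seen_threads) for i in range(n)))
    return results, seen_threads


results, seen_threads = asyncio.run(serve(300))
```

All 300 logical tasks complete on a single OS thread here, so the kernel never has to switch between hundreds of threads.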

The preceding figure shows stress test data. The red line shows the result with Dragonwell and WISP enabled, where operating efficiency improves by about 20%.

The sections above describe the technical principles and results of SAE in terms of Serverless, IaaS hosting, Kubernetes hosting, and elastic efficiency.

Originally, microservice users had to run many components themselves, including PaaS and microservice frameworks, the O&M of IaaS and Kubernetes, and observability components. SAE provides an overall solution for all of these, so users only need to focus on their own business systems. This significantly lowers the threshold for adopting microservice technologies.

In the future, SAE will continuously build capabilities for each module in the following aspects:

Finally, in terms of observability: because SAE operates and maintains users' applications, observability of the platform itself is very important. Here, we will continue to build out monitoring and alerting, along with contingency plans and grayscale capabilities. Users, for their part, host their applications on SAE, so the product needs to lower the threshold for them in this area as well. The application dashboard, event center, and other features will follow.
