Focusing on LoongCollector, the core component of the LoongSuite ecosystem, this article conducts an in-depth analysis of the component's technological breakthroughs in intelligent computing services. LoongCollector covers multi-tenant observation isolation, GPU cluster performance tracking, and event-driven data pipeline design. Through zero-intrusion collection, intelligent preprocessing, and adaptive scaling mechanisms, LoongCollector builds a full-stack observability infrastructure for cloud-native AI scenarios and redefines the capability boundaries of observability in high-concurrency and strongly heterogeneous environments.

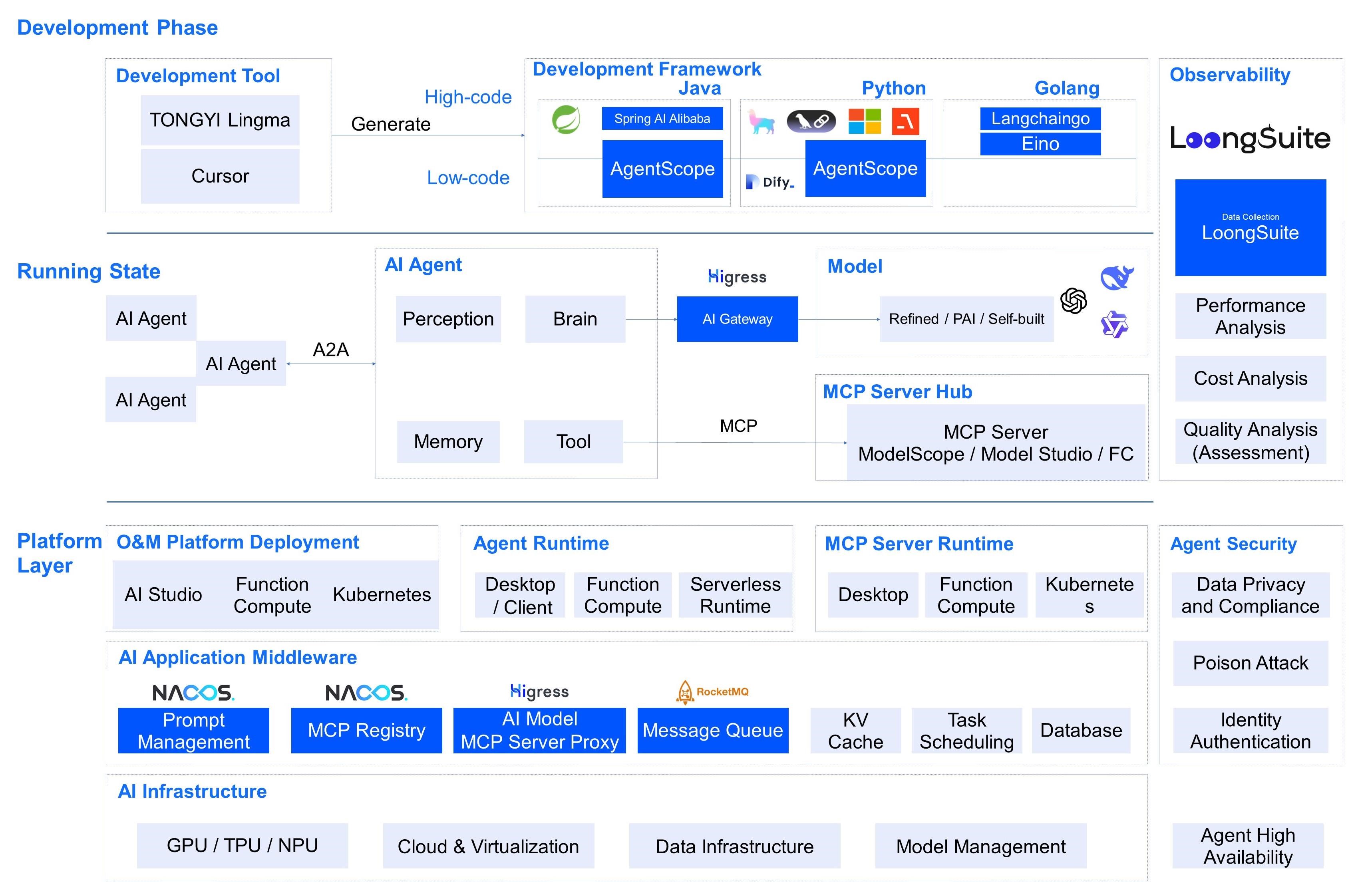

In the AI Agent technology stack, observability is a cornerstone. By collecting key data in real time, such as model call chains, resource consumption, and system performance metrics, observability gives intelligent agents the basis for decisions on performance optimization, security risk control, and fault localization. It supports visual monitoring of core processes such as dynamic Prompt management and task queue scheduling, and by tracking the flow of sensitive data and abnormal behaviors it also serves as key infrastructure for the trustworthy operation and continuous evolution of AI systems.

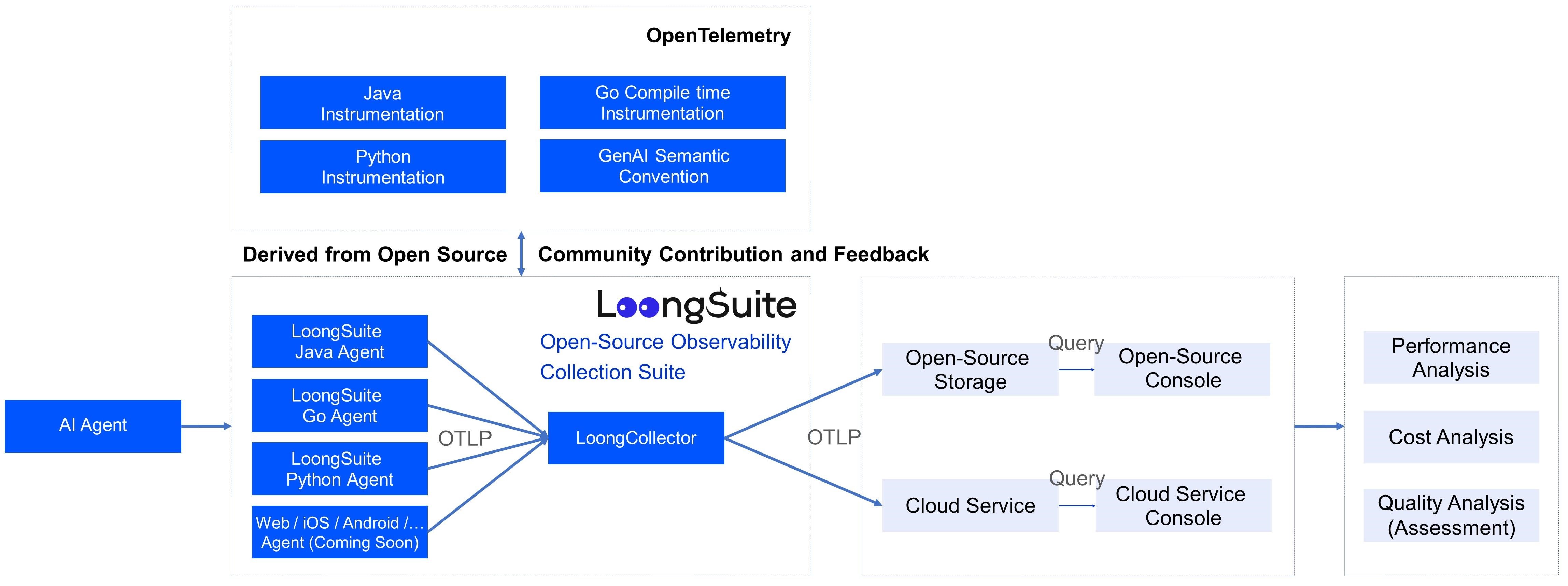

LoongSuite (/lʊŋ swiːt/, pronounced "Loong-sweet") is a high-performance, low-cost observability collection suite open-sourced by Alibaba Cloud for the AI era. It aims to help more enterprises build an observability system efficiently and cost-effectively, and to make it easier for them to obtain and use data that conforms to standardized data specifications.

The LoongSuite boasts the following unique advantages:

LoongCollector serves as the "heart" of the LoongSuite and its core data collection engine, boasting three key capabilities:

The position of LoongCollector within the LoongSuite components can be summarized as shown in the following diagram:

LoongCollector is able to play a crucial role in the LoongSuite components precisely because it boasts numerous core advantages.

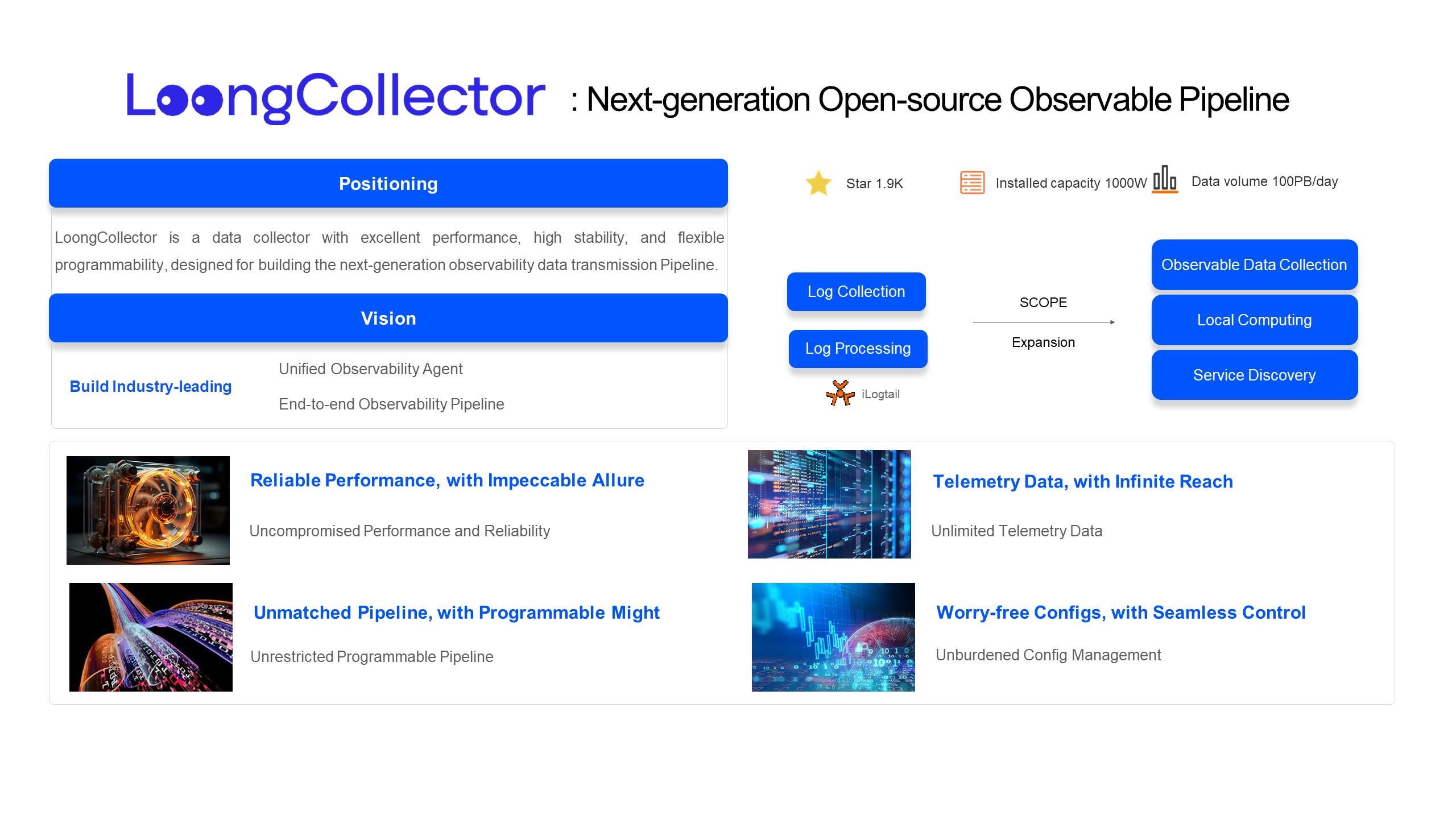

LoongCollector is a data collector that integrates exceptional performance, superior stability, and flexible programmability, designed specifically for building next-generation observability pipelines. The vision is to build an industry-leading "Unified Observability Agent" and "End-to-End Observability Pipeline".

LoongCollector originates from iLogtail, an open-source project of the Alibaba Cloud Observability Team. Building on iLogtail's robust log collection and processing capabilities, LoongCollector has been comprehensively upgraded and extended. It has gradually expanded from the original log-only scenario into a single component that covers observability data collection, local computing, and service discovery. With extensive data access, high performance, strong reliability, programmability, manageability, cloud-native support, and multi-tenant isolation, LoongCollector is well suited to the collection and preprocessing of observability data for intelligent computing services.

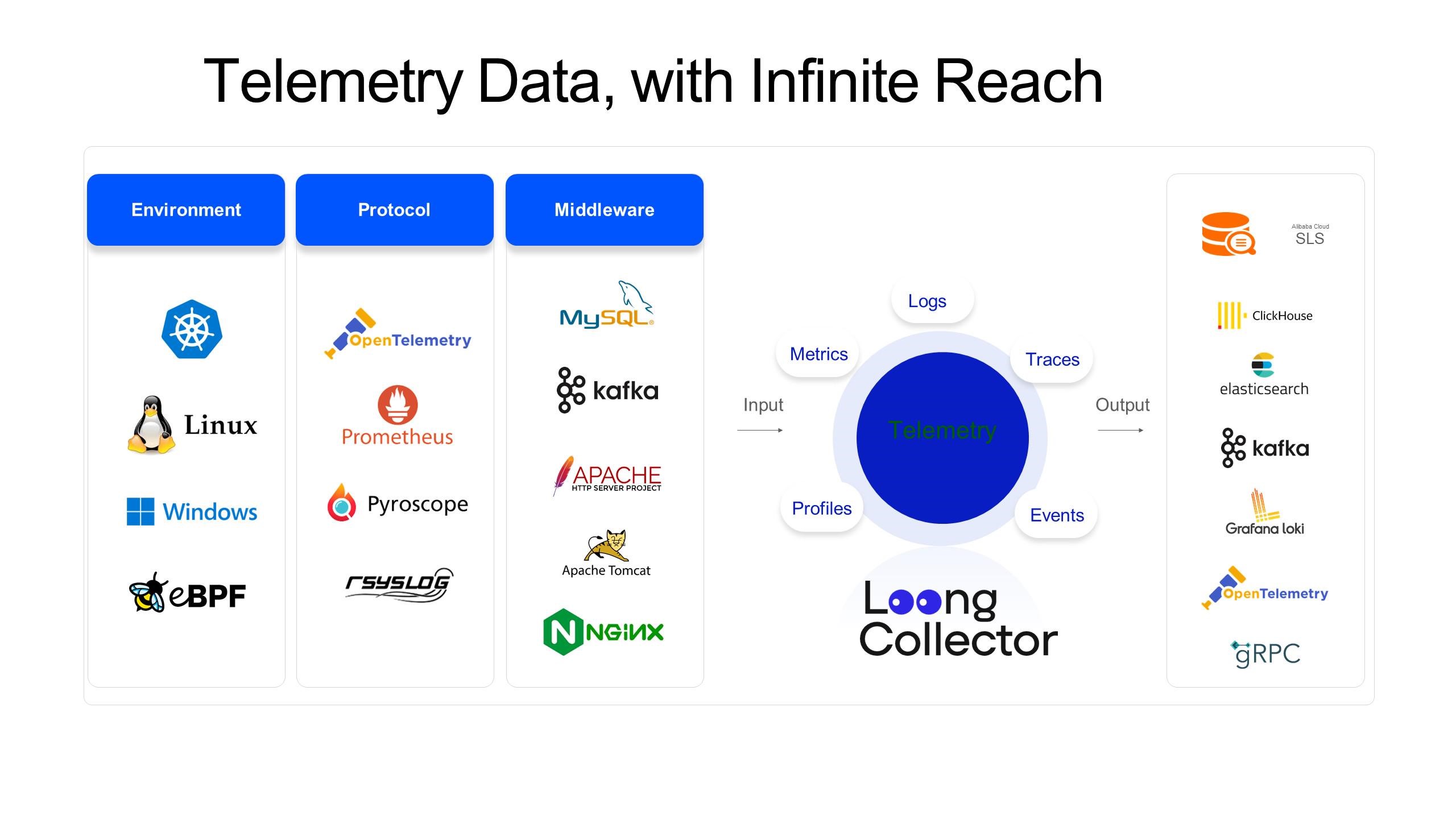

LoongCollector adheres to the all-in-one design philosophy and aims to enable a single agent to handle all collection tasks, including the collection, processing, routing, and transmission of Logs, Metrics, Traces, Events, and Profiles. LoongCollector emphasizes the enhancement of Prometheus metric scraping capabilities, deeply integrates Extended Berkeley Packet Filter (eBPF) technology to achieve non-intrusive collection, and provides native metric collection functions, thus realizing a true OneAgent.

Adhering to the principles of openness and open source, LoongCollector actively embraces open-source standards, including OpenTelemetry and Prometheus. Meanwhile, it supports connectivity with a wide range of open-source ecosystems such as OpenTelemetry Flusher, ClickHouse Flusher, and Kafka Flusher. As an observability infrastructure, LoongCollector continuously enhances its compatibility in heterogeneous environments and actively strives to achieve comprehensive and in-depth support for mainstream operating system (OS) environments.

The capability to handle Kubernetes (K8s) collection scenarios has always been a core strength of LoongCollector. As is well known in the observability field, K8s metadata (such as Namespace, Pod, Container, and Labels) often plays a crucial role in observability data analysis. LoongCollector interacts with the underlying definitions of Pods through the standard CRI API to obtain various metadata information in K8s, thereby achieving non-intrusive K8s metadata AutoTagging capability during collection.
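The AutoTagging idea can be illustrated with a minimal Python sketch. It assumes the collector already holds an index from container ID to metadata (in LoongCollector this information comes from the CRI API; here it is a hard-coded dictionary). The `PodMeta` class, the `auto_tag` function, and the `k8s.*` tag names are hypothetical and are not LoongCollector's actual data model.

```python
from dataclasses import dataclass, field


@dataclass
class PodMeta:
    """K8s metadata of the kind listed above (hypothetical field names)."""
    namespace: str
    pod: str
    container: str
    labels: dict = field(default_factory=dict)


def auto_tag(event: dict, container_id: str, index: dict) -> dict:
    """Return a copy of the event enriched with K8s metadata, if known."""
    tagged = dict(event)
    meta = index.get(container_id)
    if meta is None:
        # Unknown container: pass the event through unchanged.
        return tagged
    tagged["k8s.namespace.name"] = meta.namespace
    tagged["k8s.pod.name"] = meta.pod
    tagged["k8s.container.name"] = meta.container
    for key, value in meta.labels.items():
        tagged[f"k8s.pod.label.{key}"] = value
    return tagged


if __name__ == "__main__":
    # Assumed to have been filled from the container runtime beforehand.
    index = {"c1": PodMeta("train", "job-0", "worker", {"app": "llm"})}
    print(auto_tag({"content": "step=10 loss=0.42"}, "c1", index))
```

Because the tagging happens in the collector, the application that writes the log line does not need to know which Pod it runs in.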

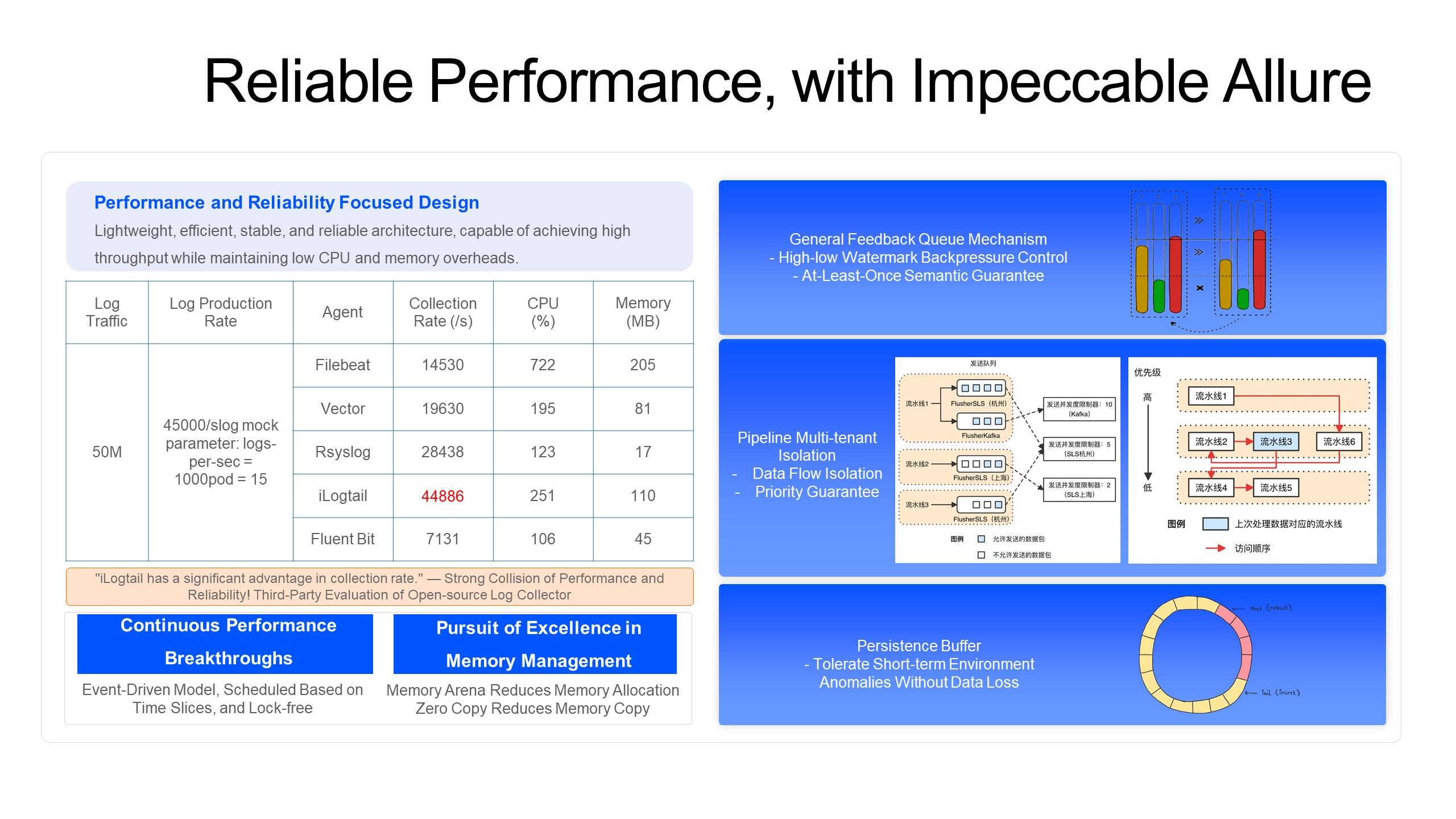

LoongCollector always prioritizes the pursuit of ultimate collection performance and superior reliability, firmly believing that these form the foundation for practicing the concept of long-termism. This is mainly reflected in the relentless refinement of performance, resource consumption, and stability.

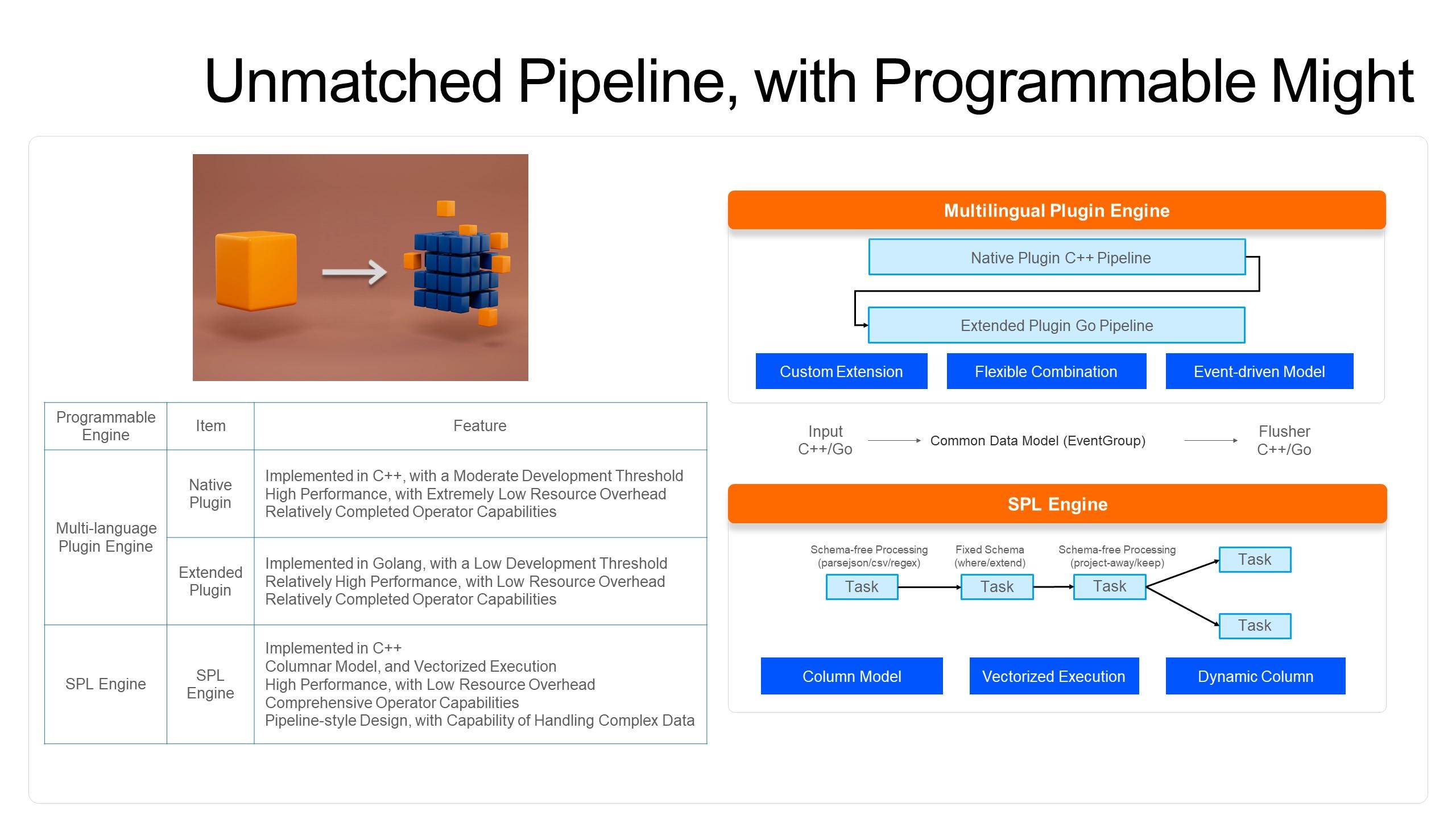

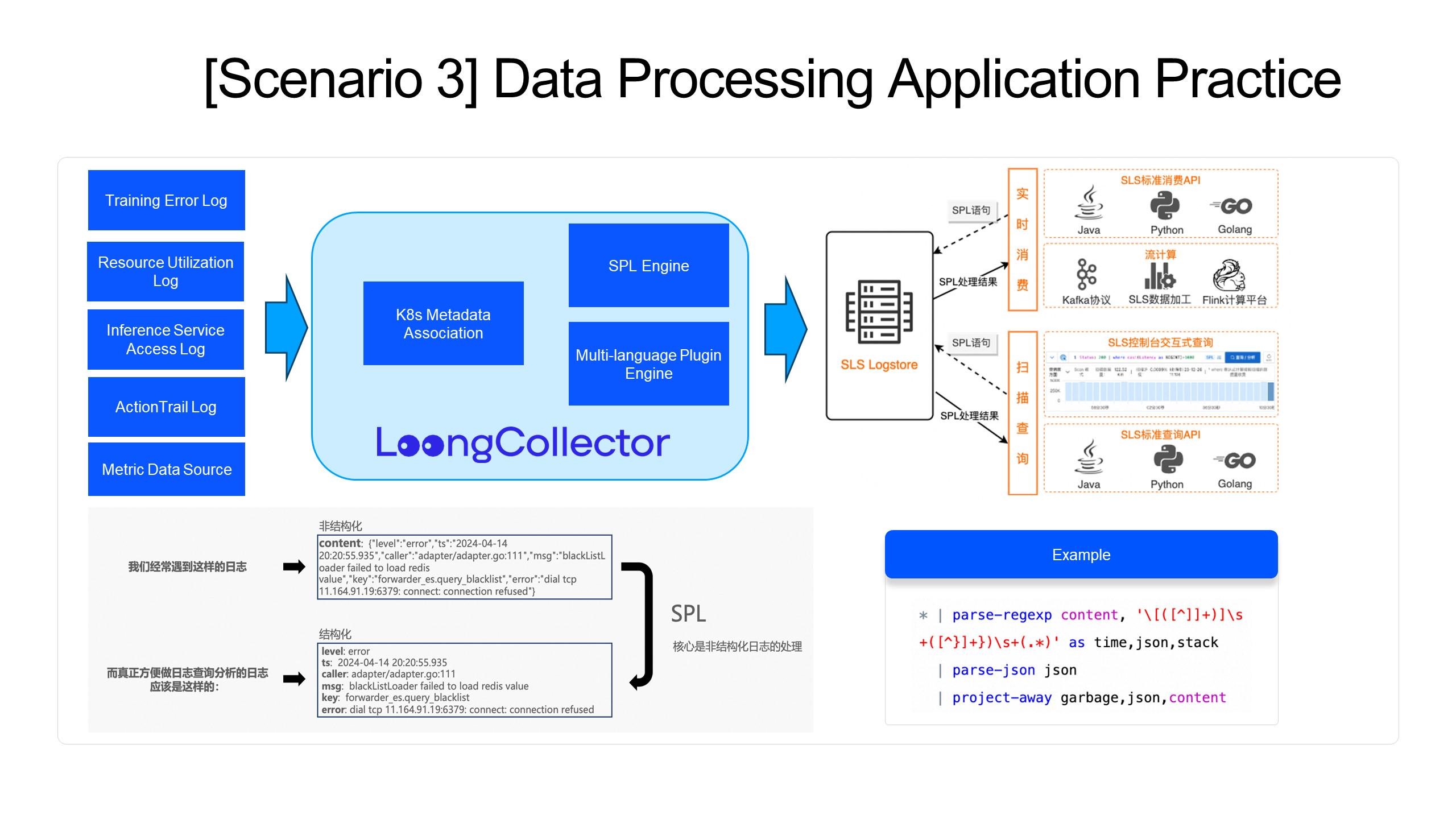

LoongCollector builds a comprehensive programmable system powered by two engines, SPL and multi-language plug-ins, providing robust data preprocessing capabilities. The engines can be interconnected, so the required computing capabilities can be achieved by combining them flexibly.

Developers can choose a programmable engine based on their needs. If execution efficiency is the priority, choose native plug-ins. If a comprehensive set of operators is needed to handle complex data, choose the SPL engine. If a low barrier to customization matters most, choose extended plug-ins, which are written in Golang.
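The idea of combining processing stages can be sketched in a few lines of Python. This is only an illustration of chaining stages, not LoongCollector's plug-in API or SPL syntax: the `run_pipeline` function and the three example processors are hypothetical, and each processor simply takes an event dictionary and returns it, or returns `None` to drop it.

```python
from typing import Callable, Iterable, List, Optional

Event = dict
Processor = Callable[[Event], Optional[Event]]


def parse_key_value(event: Event) -> Optional[Event]:
    """Split 'k1=v1 k2=v2' content into separate fields."""
    content = event.pop("content", "")
    for pair in content.split():
        key, sep, value = pair.partition("=")
        if sep:
            event[key] = value
    return event


def drop_debug(event: Event) -> Optional[Event]:
    """Filter stage: discard DEBUG events."""
    return None if event.get("level") == "DEBUG" else event


def add_source(event: Event) -> Optional[Event]:
    """Enrichment stage: attach a constant field."""
    event["source"] = "training-job"
    return event


def run_pipeline(events: Iterable[Event], processors: List[Processor]) -> List[Event]:
    """Apply the processors in order; stop early when an event is dropped."""
    results = []
    for event in events:
        current: Optional[Event] = dict(event)  # do not mutate the input
        for processor in processors:
            current = processor(current)
            if current is None:
                break
        if current is not None:
            results.append(current)
    return results


if __name__ == "__main__":
    raw = [{"content": "level=DEBUG step=1"}, {"content": "level=ERROR step=2"}]
    print(run_pipeline(raw, [parse_key_value, drop_debug, add_source]))
```

In this sketch every stage has the same signature, which is what lets stages from different engines be placed in one chain.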

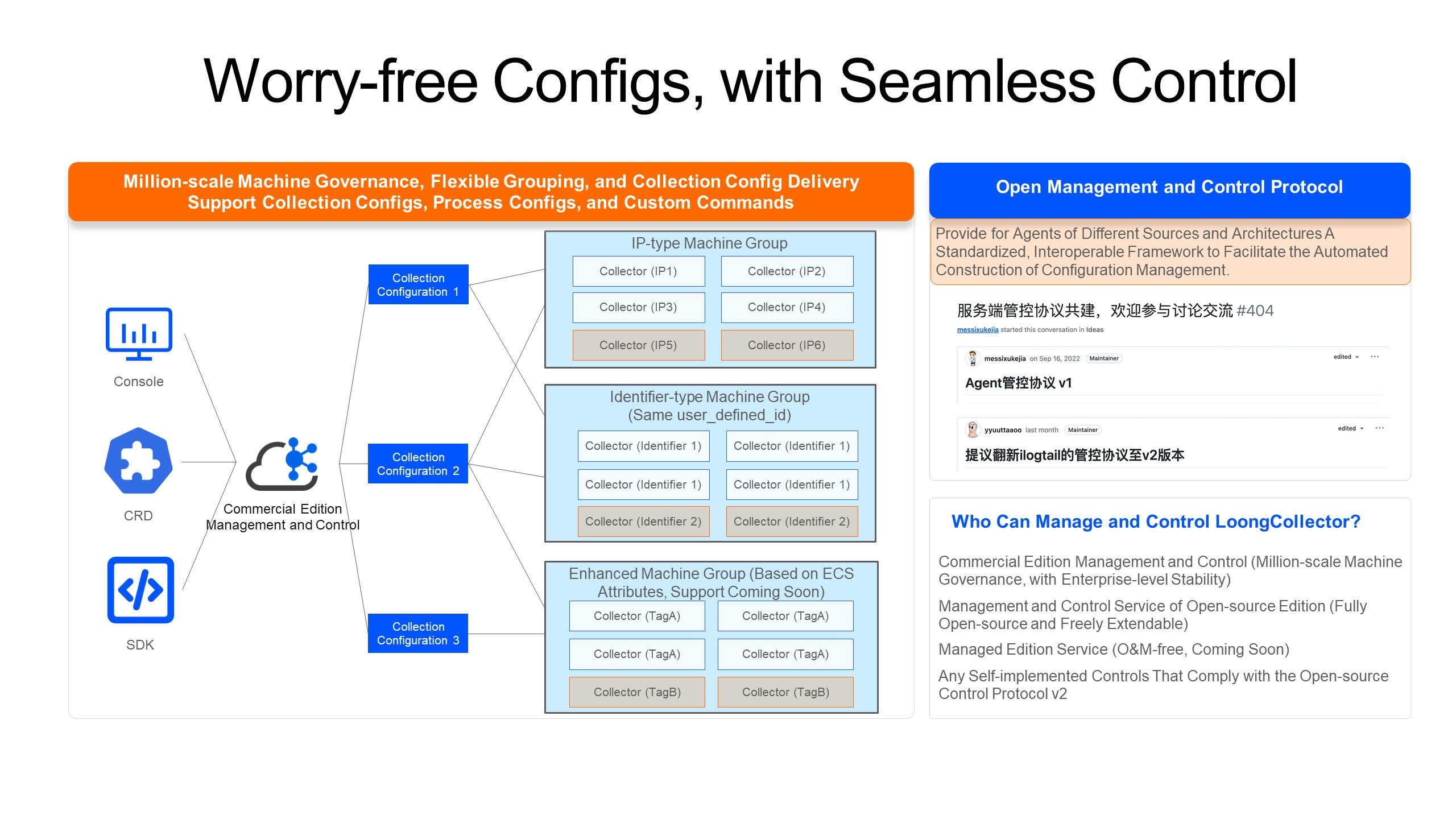

In the complex production environments of distributed intelligent computing services, managing collection configurations across thousands of nodes is a serious challenge, and it highlights the industry's lack of a unified and efficient control specification. To address this issue, the LoongCollector community has designed and implemented a detailed agent management and control protocol. The protocol aims to provide a standardized, interoperable framework for agents of different origins and architectures, thereby facilitating the automation of configuration management.

The ConfigServer service, implemented based on this management and control protocol, can manage any agent that complies with the protocol, significantly enhancing the uniformity, real-time performance, and traceability of configuration policies in large-scale distributed systems. As a management and control service for observable agents, ConfigServer supports the following functions:

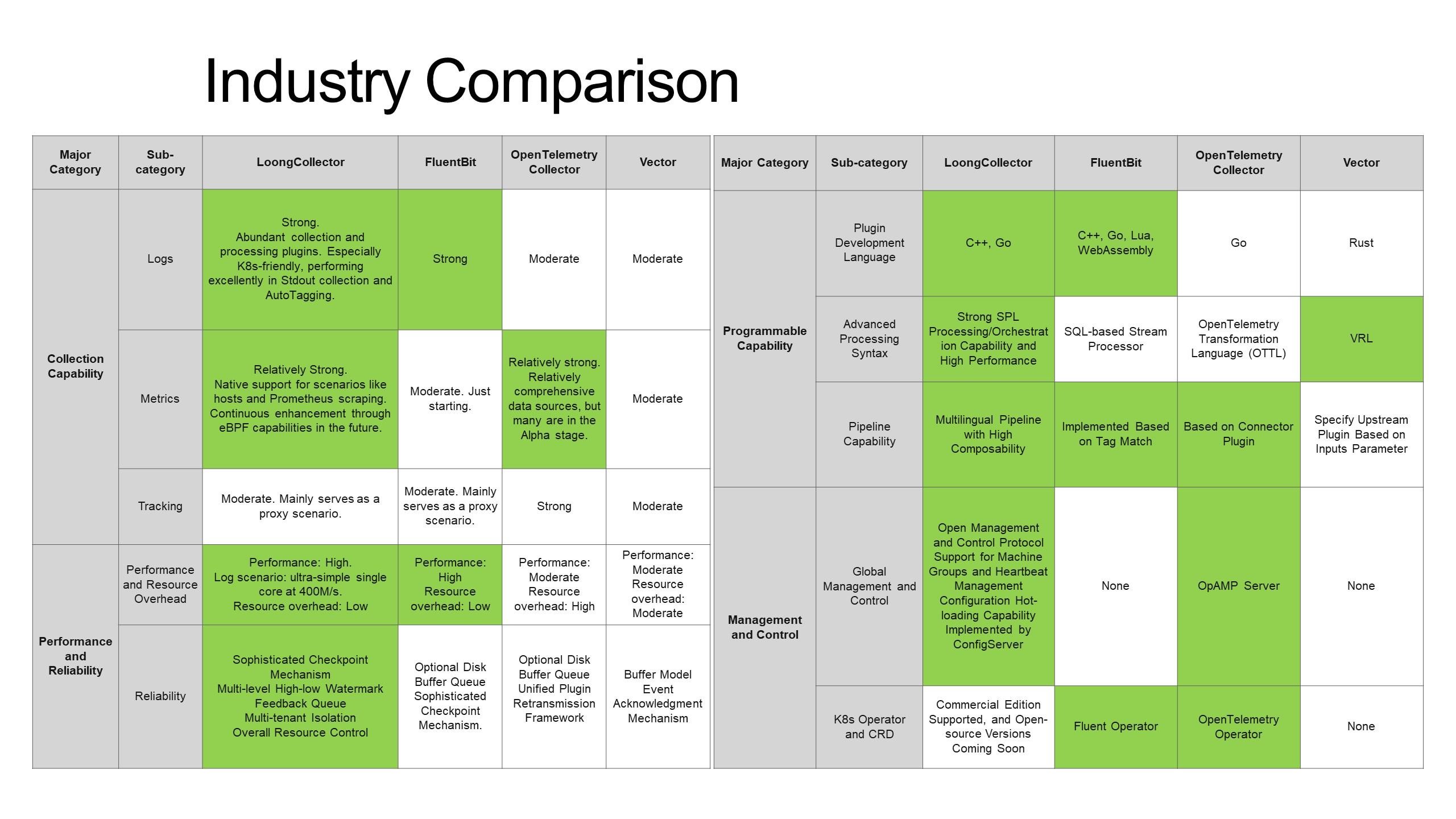
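As a rough illustration of what protocol-driven configuration management involves, the following Python sketch compares the configuration versions an agent reports with the versions the control service wants it to run. It is not the LoongCollector community protocol: the function name `plan_config_sync`, the name-to-version dictionaries, and the response shape are all assumptions made for this example.

```python
def plan_config_sync(reported: dict, desired: dict) -> dict:
    """Decide which configs an agent must apply or remove.

    reported: config name -> version currently running on the agent
    desired:  config name -> version the control service wants
    (both shapes are hypothetical)
    """
    # Apply anything that is missing on the agent or has a different version.
    to_apply = {
        name: version
        for name, version in desired.items()
        if reported.get(name) != version
    }
    # Remove anything the agent runs that is no longer desired.
    to_remove = sorted(name for name in reported if name not in desired)
    return {"apply": to_apply, "remove": to_remove}


if __name__ == "__main__":
    agent_state = {"nginx-access": 3, "gpu-metrics": 1, "old-job": 7}
    target_state = {"nginx-access": 4, "gpu-metrics": 1, "train-logs": 1}
    print(plan_config_sync(agent_state, target_state))
```

Keeping the decision on the server side, driven by reported versions, is one way to make configuration changes traceable: every difference between reported and desired state is explicit.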

In the field of observability, Fluent Bit, OpenTelemetry Collector, and Vector are all highly regarded observability data collectors. Fluent Bit is lightweight and robust, and is known for its performance. OpenTelemetry Collector, backed by CNCF, has built a rich ecosystem around OpenTelemetry concepts. Vector, supported by Datadog, offers a new option for data processing by combining Observability Pipelines with VRL. LoongCollector, in turn, started from log scenarios and provides more comprehensive OneAgent collection capabilities by continuously improving its support for metrics and traces. It differentiates itself through performance, stability, pipeline flexibility, and programmability, and it adds strong management and control capabilities for large-scale collection and configuration management. For more information, see the following table, in which green sections denote advantages.

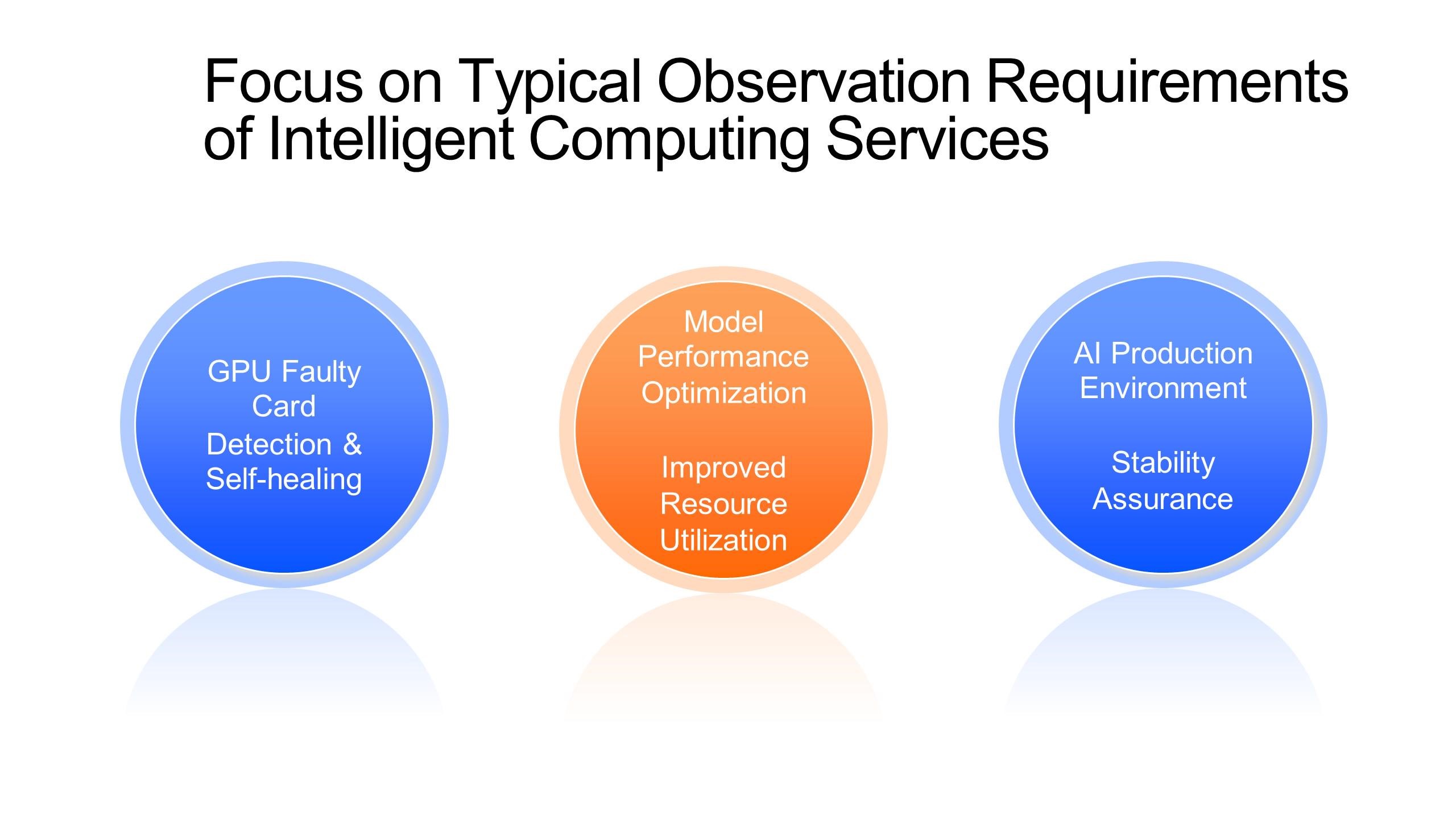

Container services based on cloud-native architecture have gradually become the infrastructure that supports AI intelligent computing. As AI tasks grow rapidly in scale, and in particular as the parameter counts of large models jump from billions to trillions, the expansion of training scale not only drives a significant increase in cluster costs but also challenges system stability. Typical challenges include:

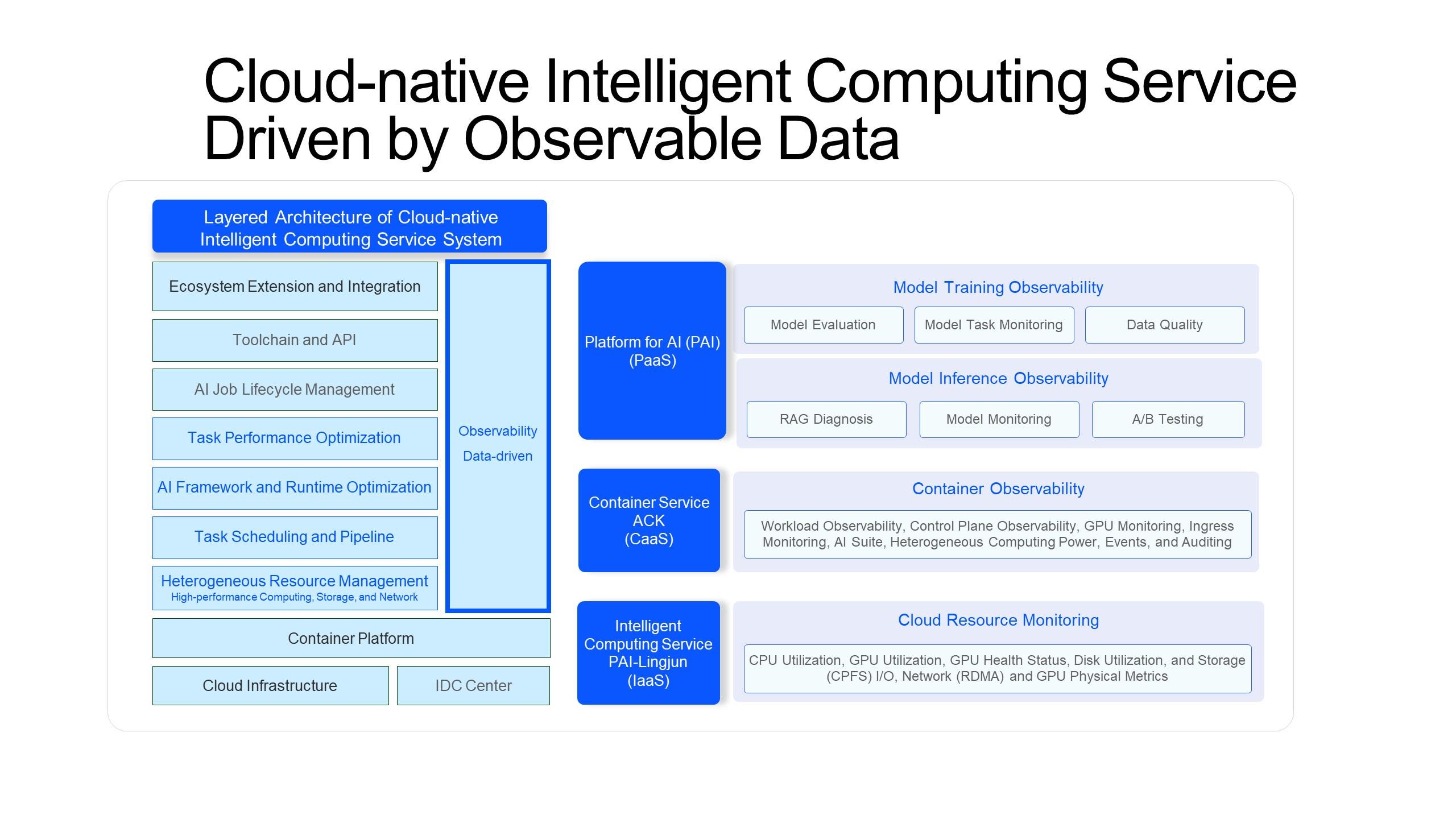

To address these challenges effectively, building a cloud-native intelligent computing service architecture driven by observability data becomes an urgent task. Corresponding to the layered architecture of the intelligent computing service system, the observability system is also divided into three levels: observability of cloud resources at the IaaS layer, observability of containers at the CaaS layer, and observability of model training and inference at the PaaS layer.

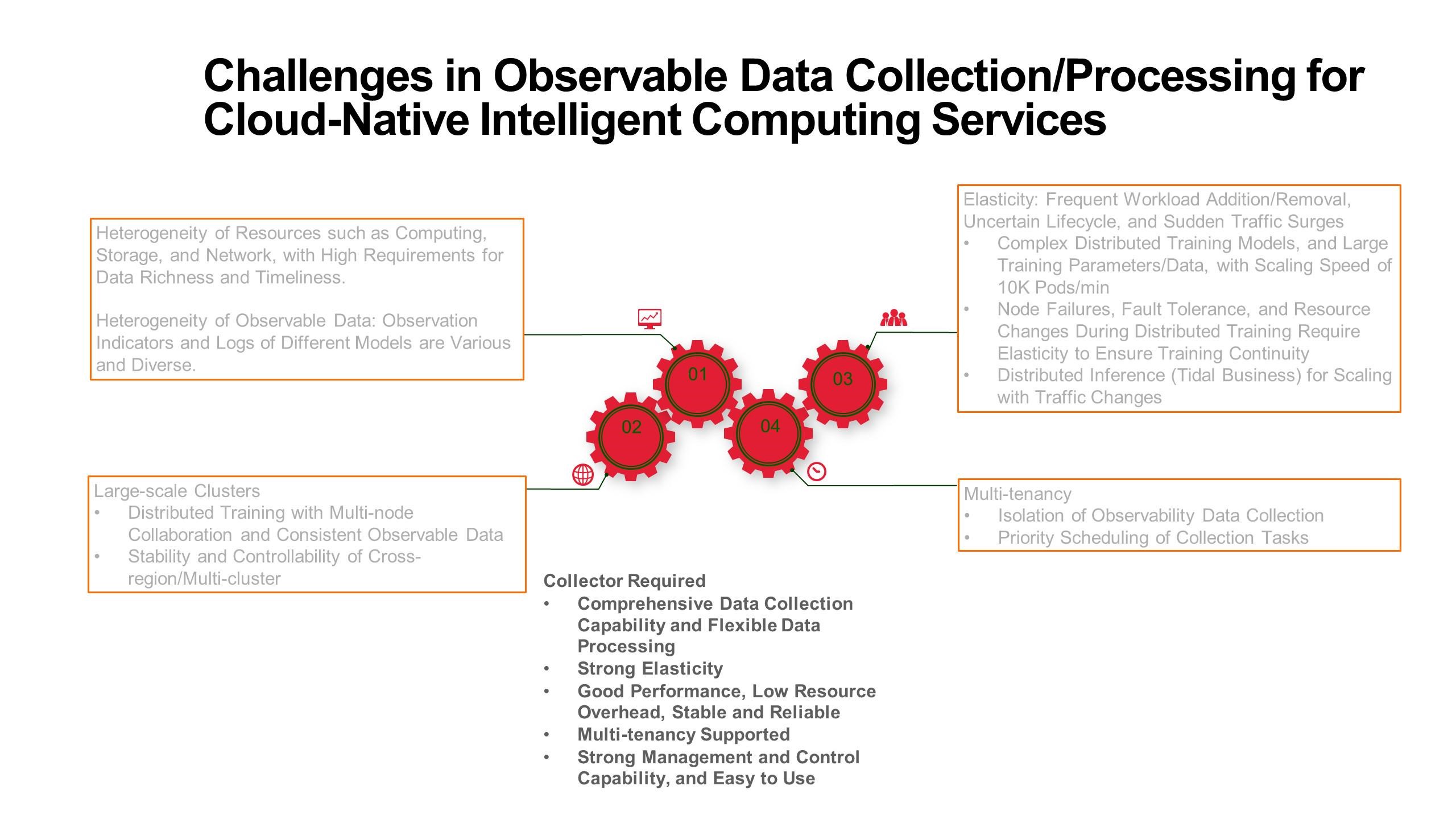

When building an observability system for intelligent computing services, a key requirement is how to adapt to the characteristics of the cloud-native AI infrastructure for effective observability data collection and preprocessing. The main challenges in this process include, but are not limited to:

Heterogeneous Attributes

Large Cluster Size

Elasticity: Frequent addition and removal of workloads, uncertain lifecycles, and large traffic bursts.

Multi-tenancy

Therefore, there is an urgent need for a robust observability pipeline adapted to cloud-native intelligent computing services. This pipeline should integrate observable data collection and preprocessing, featuring comprehensive data collection capabilities, flexible data processing, strong elasticity, high performance, low resource overhead, stability and reliability, support for multi-tenancy, strong management and control capabilities, and user-friendliness.

LoongCollector is precisely such a collector: it integrates observability data collection and preprocessing, and features strong elastic scaling, high performance, low overhead, user-friendly multi-tenancy management, and stability and reliability. The rest of this article explains how LoongCollector responds to these challenges.

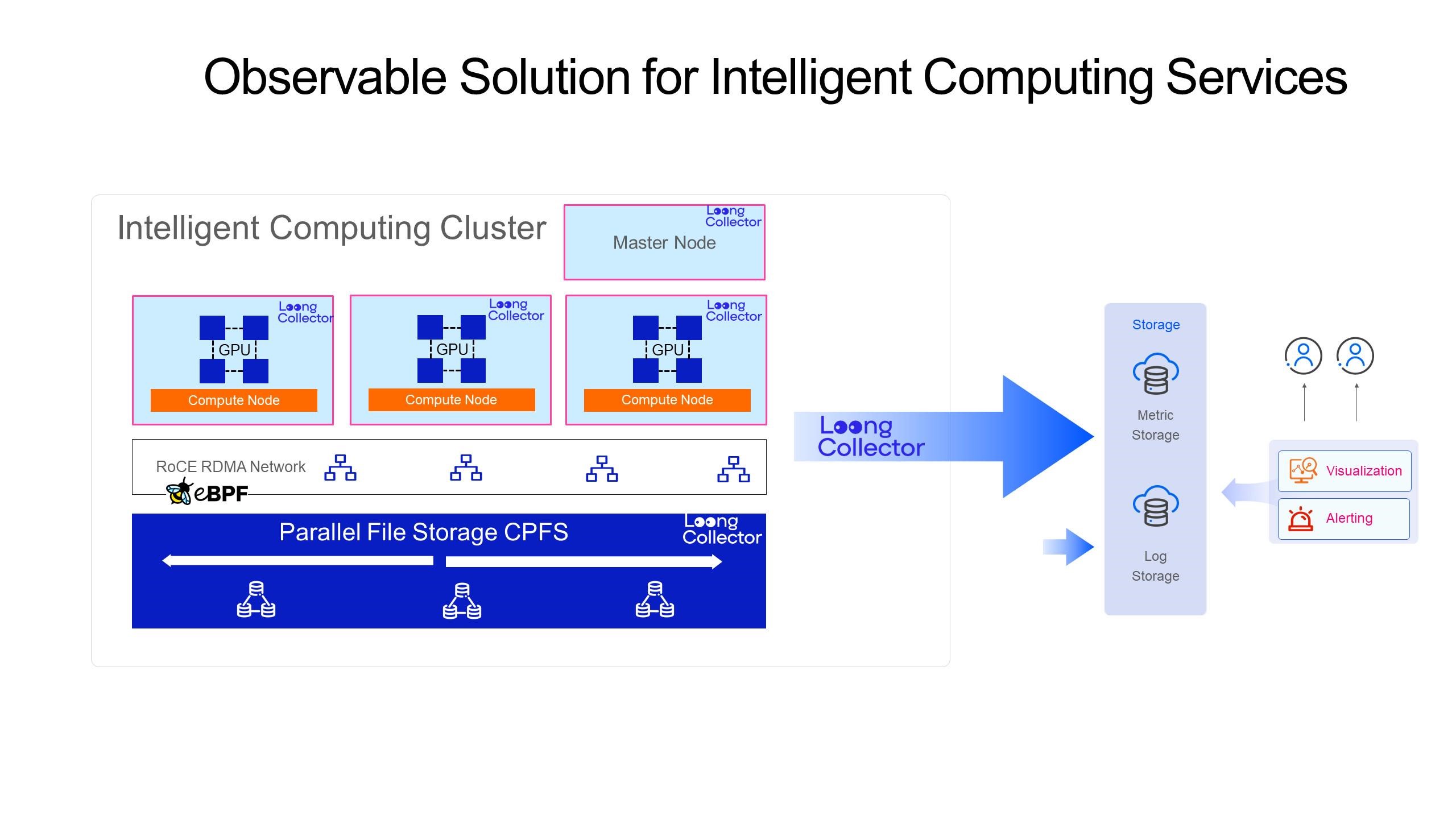

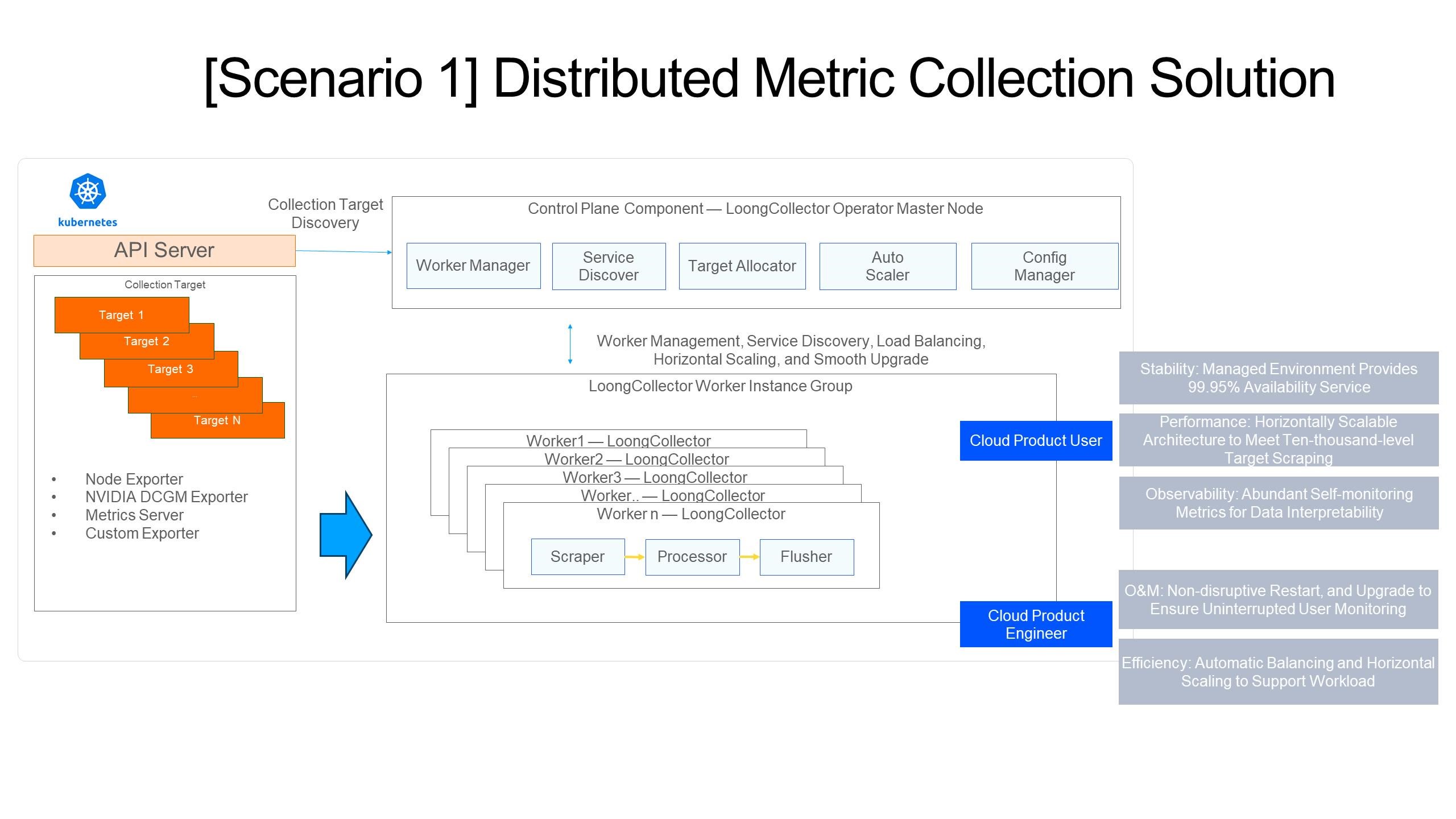

As a high-performance observability data collection and preprocessing pipeline, LoongCollector works in the following modes in intelligent computing clusters:

Agent Mode: As An Agent

Cluster Mode: As A Service

Given the complexity and diversity of the intelligent computing service architecture, a variety of key performance indicators need to be monitored. These metrics range from the infrastructure level to the application level and are exposed through Prometheus Exporters. Examples include Node Exporter for computing node resources, NVIDIA DCGM Exporter for GPU devices, kube-state-metrics for clusters, and TensorFlow Exporter and PyTorch Exporter for training frameworks.

LoongCollector natively supports the capability to directly scrape various metrics exposed by Prometheus Exporters, adopting a Master-Slave multi-replica collection mode.
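The source does not describe how scrape targets are divided among the replicas, so the following Python sketch is only one plausible scheme: it assumes a coordinator assigns each exporter endpoint to exactly one replica by hashing the endpoint name. The function name `assign_targets` and the endpoint and replica names are hypothetical.

```python
import hashlib
from typing import Dict, List


def assign_targets(targets: List[str], replicas: List[str]) -> Dict[str, List[str]]:
    """Assign every scrape target to exactly one replica, deterministically."""
    if not replicas:
        raise ValueError("at least one replica is required")
    assignment: Dict[str, List[str]] = {replica: [] for replica in replicas}
    for target in sorted(targets):
        # A stable hash (not Python's randomized hash()) keeps the
        # assignment identical across processes and restarts.
        digest = hashlib.sha256(target.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(replicas)
        assignment[replicas[index]].append(target)
    return assignment


if __name__ == "__main__":
    exporters = [f"node-{i}:9400" for i in range(6)]  # hypothetical endpoints
    for replica, owned in assign_targets(exporters, ["replica-0", "replica-1"]).items():
        print(replica, owned)
```

A plain modulo scheme like this reshuffles many targets when the number of replicas changes; consistent hashing is a common alternative when that matters.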

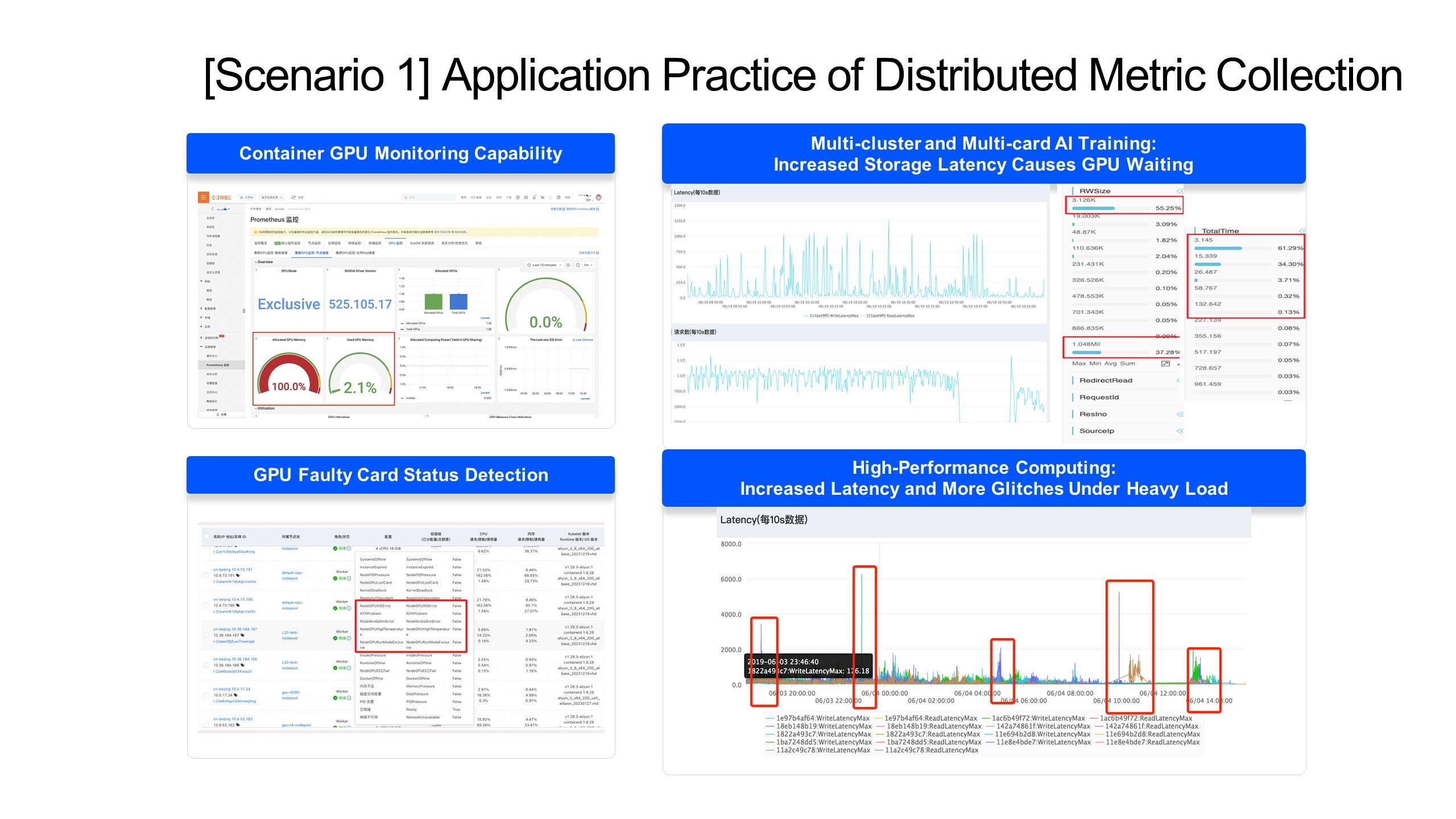

Based on the metrics collected from the intelligent computing service, GPU usage can be monitored and faulty cards detected through a visual dashboard. In high-throughput scenarios, the same data helps you quickly locate bottlenecks in multi-cluster, multi-card AI training and improve the utilization of resources such as GPUs.
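To make the kind of check behind such a dashboard concrete, here is a minimal Python sketch that parses Prometheus text-format samples and flags GPUs that look idle or report an XID error. The metric names `DCGM_FI_DEV_GPU_UTIL` and `DCGM_FI_DEV_XID_ERRORS` and the `gpu` and `Hostname` labels are those commonly exposed by NVIDIA DCGM Exporter; the simplified parser, the function names, and the 10% utilization threshold are assumptions for illustration only.

```python
import re

# Simplified matcher for 'name{labels} value [timestamp]' sample lines.
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)(?:\s+\d+)?$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_samples(text: str) -> list:
    """Parse exposition text into (name, labels, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and HELP/TYPE comments
        match = SAMPLE_RE.match(line)
        if match is None:
            continue  # skip lines this simplified parser does not handle
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples


def find_suspect_gpus(samples: list, min_util: float = 10.0) -> dict:
    """Map (hostname, gpu index) to the reasons that GPU looks suspicious."""
    suspects = {}
    for name, labels, value in samples:
        key = (labels.get("Hostname", ""), labels.get("gpu", ""))
        if name == "DCGM_FI_DEV_XID_ERRORS" and value != 0:
            suspects.setdefault(key, []).append(f"xid={int(value)}")
        elif name == "DCGM_FI_DEV_GPU_UTIL" and value < min_util:
            suspects.setdefault(key, []).append(f"util={value:g}%")
    return suspects


if __name__ == "__main__":
    scraped = (
        '# TYPE DCGM_FI_DEV_GPU_UTIL gauge\n'
        'DCGM_FI_DEV_GPU_UTIL{gpu="0",Hostname="node-a"} 97\n'
        'DCGM_FI_DEV_GPU_UTIL{gpu="1",Hostname="node-a"} 3\n'
        'DCGM_FI_DEV_XID_ERRORS{gpu="2",Hostname="node-a"} 79\n'
    )
    print(find_suspect_gpus(parse_samples(scraped)))
```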

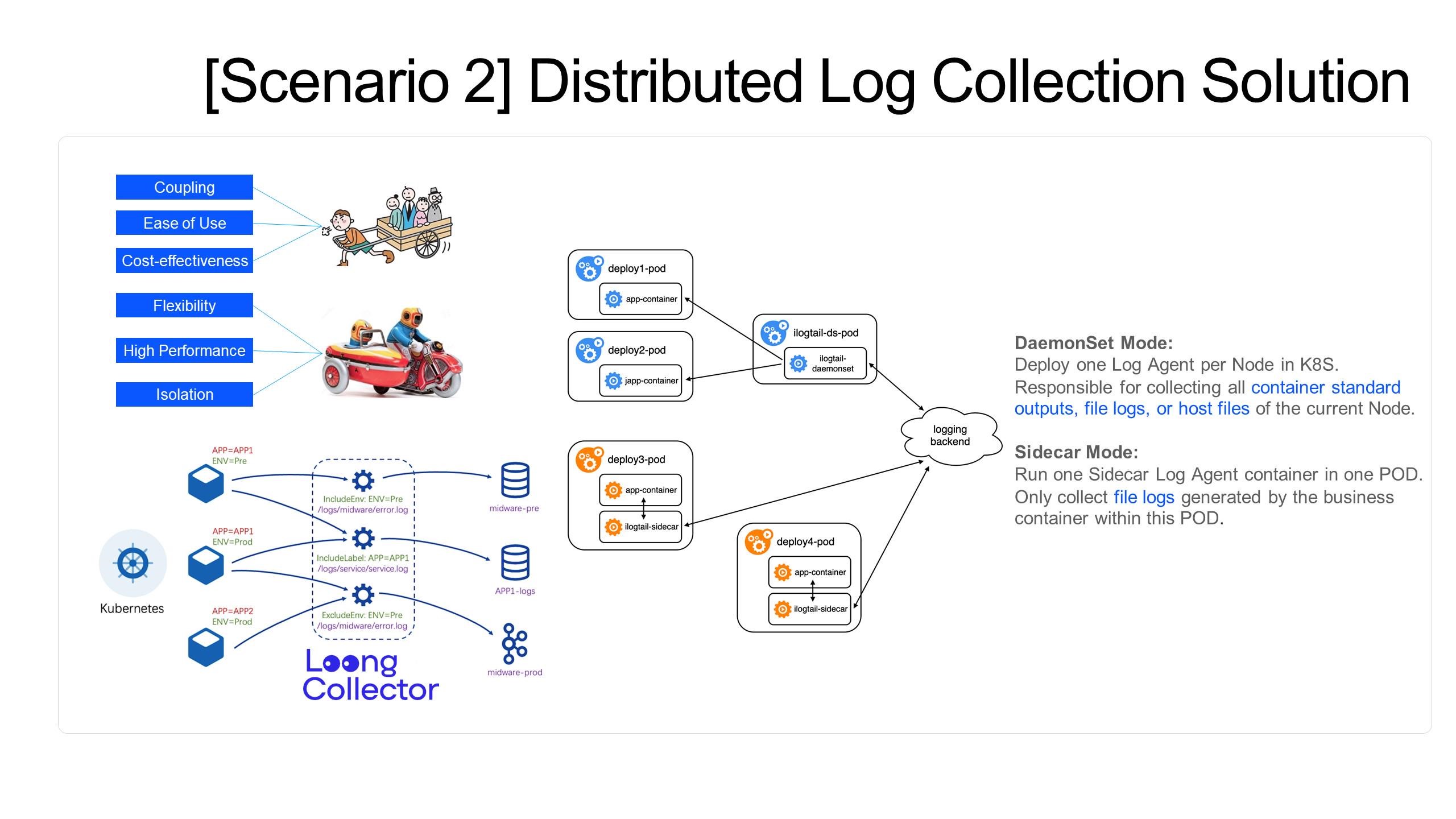

For log collection in intelligent computing clusters, LoongCollector provides flexible deployment methods according to business requirements.

Both distributed training and inference service deployments are highly elastic. LoongCollector is well adapted to the requirements of elasticity and multi-tenancy:

Container auto-discovery: Obtain container context information by accessing the socket of the container runtime (Docker Engine or containerd) on the host machine.

Collection path discovery

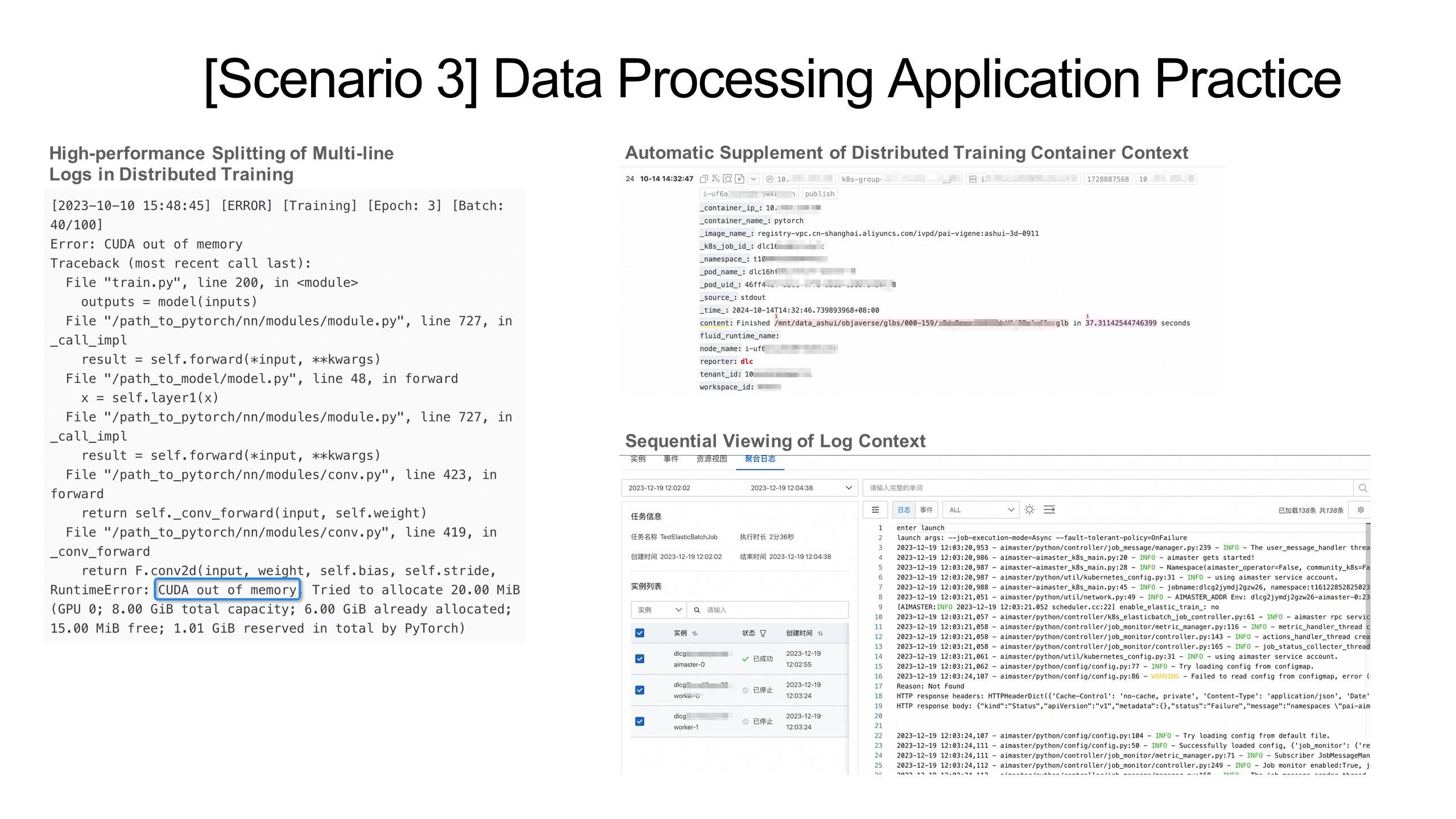

In intelligent computing service scenarios, to maximize the value of data, it is usually necessary to efficiently collect and transmit cluster logs, distributed training logs, and inference service logs to the backend log analysis platform, and on this basis, implement a series of data enhancement strategies. For distributed training logs, it is necessary to associate container context, which should include container ID, Pod name, namespace, and node information, to ensure the tracking and optimization of AI training tasks across containers. For inference services, in order to conduct more efficient analysis on access traffic and other aspects, it is necessary to perform field standardization processing on logs while also associating the container context.

LoongCollector, with its powerful computing capability, can associate K8s metadata with the accessed data. Meanwhile, by utilizing SPL and multi-language plug-in computing engines, it provides flexible data processing and orchestration capabilities, facilitating the handling of various complex formats.
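Field standardization for inference service logs can be pictured with a short Python sketch. It assumes the services emit JSON log lines whose field names differ from service to service; the alias table, the canonical field names, and the `standardize` function are hypothetical and only show the shape of the transformation, not SPL or plug-in syntax.

```python
import json

# Hypothetical mapping from service-specific names to canonical names.
FIELD_ALIASES = {
    "lat": "latency_ms",
    "latency": "latency_ms",
    "code": "status",
    "status_code": "status",
    "uri": "path",
}


def standardize(raw_line: str) -> dict:
    """Parse one JSON log line and normalize field names and types."""
    record = json.loads(raw_line)
    normalized = {}
    for key, value in record.items():
        normalized[FIELD_ALIASES.get(key, key)] = value
    # Coerce the fields that downstream analysis treats as numbers.
    if "latency_ms" in normalized:
        normalized["latency_ms"] = float(normalized["latency_ms"])
    if "status" in normalized:
        normalized["status"] = int(normalized["status"])
    return normalized


if __name__ == "__main__":
    print(standardize('{"uri": "/v1/generate", "code": "200", "lat": "35.5"}'))
    print(standardize('{"path": "/v1/embed", "status_code": 503, "latency": 120}'))
```

Once both services produce the same field names and types, a single query on the analysis platform can cover all of them.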

The following are some typical log processing scenarios:

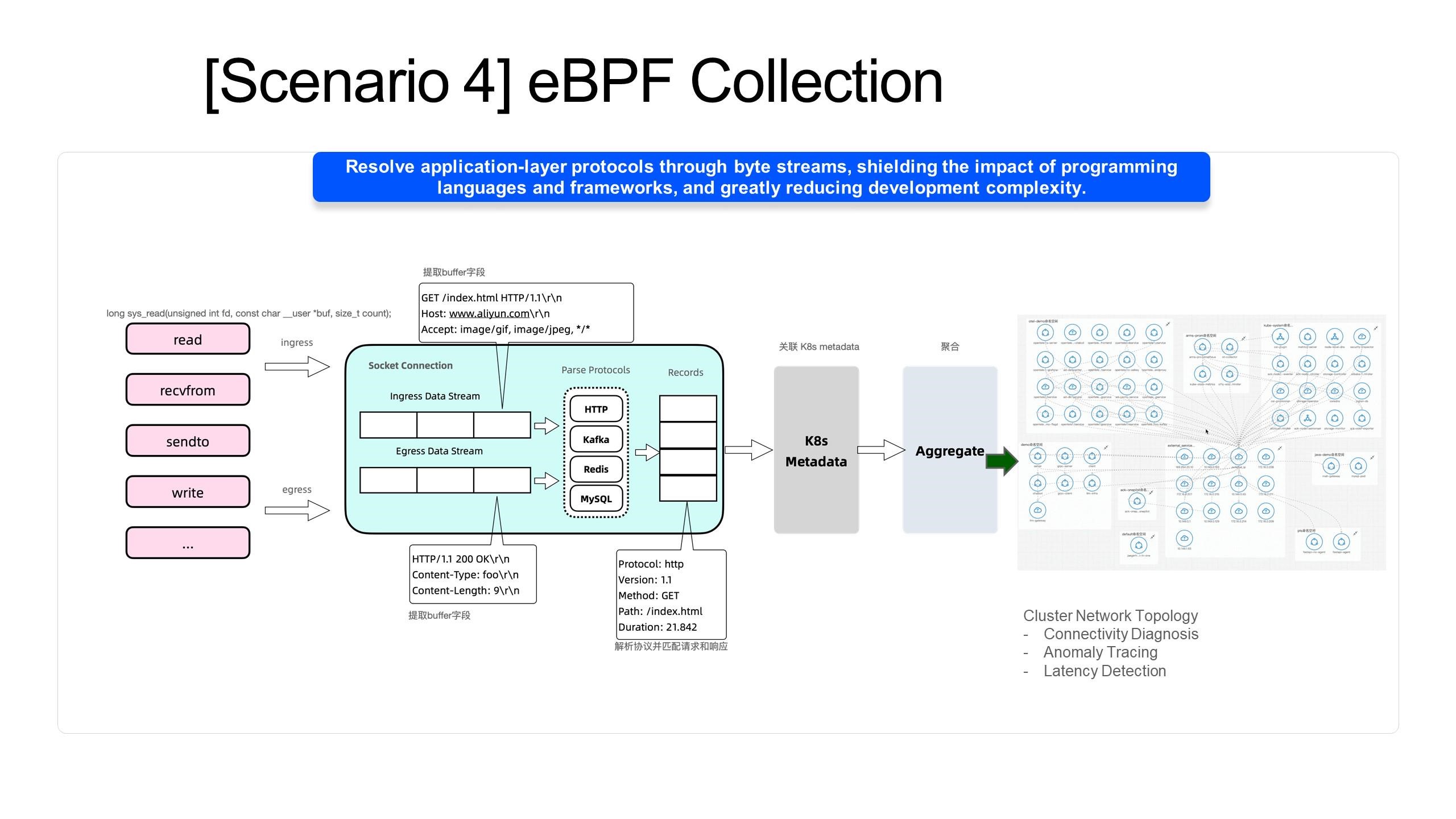

In the distributed training framework, multiple computing nodes work together to accelerate the model training process. However, in actual operations, the overall system performance may become unstable due to the impact of various factors, such as network latency, bandwidth limitations, and computing resource bottlenecks. These factors may lead to fluctuations or even a decline in training efficiency. LoongCollector implements non-intrusive network monitoring in intelligent computing services through eBPF technology. By capturing and analyzing traffic in real-time, it identifies the cluster network topology, enabling rapid detection of anomalies and thereby improving the efficiency of the entire model training process.
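The step from captured traffic to a topology can be sketched as follows. The eBPF capture itself is out of scope here: the sketch assumes flow records have already been collected as dictionaries with `src`, `dst`, `bytes`, `packets`, and `retransmits` fields. That record shape, the function names, and the 1% retransmission threshold are assumptions for illustration.

```python
from collections import defaultdict


def build_topology(flows: list) -> dict:
    """Aggregate per-flow records into directed edges between workloads."""
    edges = defaultdict(lambda: {"bytes": 0, "packets": 0, "retransmits": 0})
    for flow in flows:
        edge = edges[(flow["src"], flow["dst"])]
        edge["bytes"] += flow["bytes"]
        edge["packets"] += flow["packets"]
        edge["retransmits"] += flow["retransmits"]
    return dict(edges)


def flag_lossy_edges(edges: dict, max_retransmit_ratio: float = 0.01) -> list:
    """Return edges whose retransmission ratio exceeds the threshold."""
    return sorted(
        pair
        for pair, stats in edges.items()
        if stats["packets"] > 0
        and stats["retransmits"] / stats["packets"] > max_retransmit_ratio
    )


if __name__ == "__main__":
    flows = [
        {"src": "worker-0", "dst": "worker-1", "bytes": 1000, "packets": 100, "retransmits": 0},
        {"src": "worker-0", "dst": "worker-1", "bytes": 500, "packets": 50, "retransmits": 0},
        {"src": "worker-1", "dst": "ps-0", "bytes": 200, "packets": 20, "retransmits": 5},
    ]
    topology = build_topology(flows)
    print(topology)
    print(flag_lossy_edges(topology))
```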

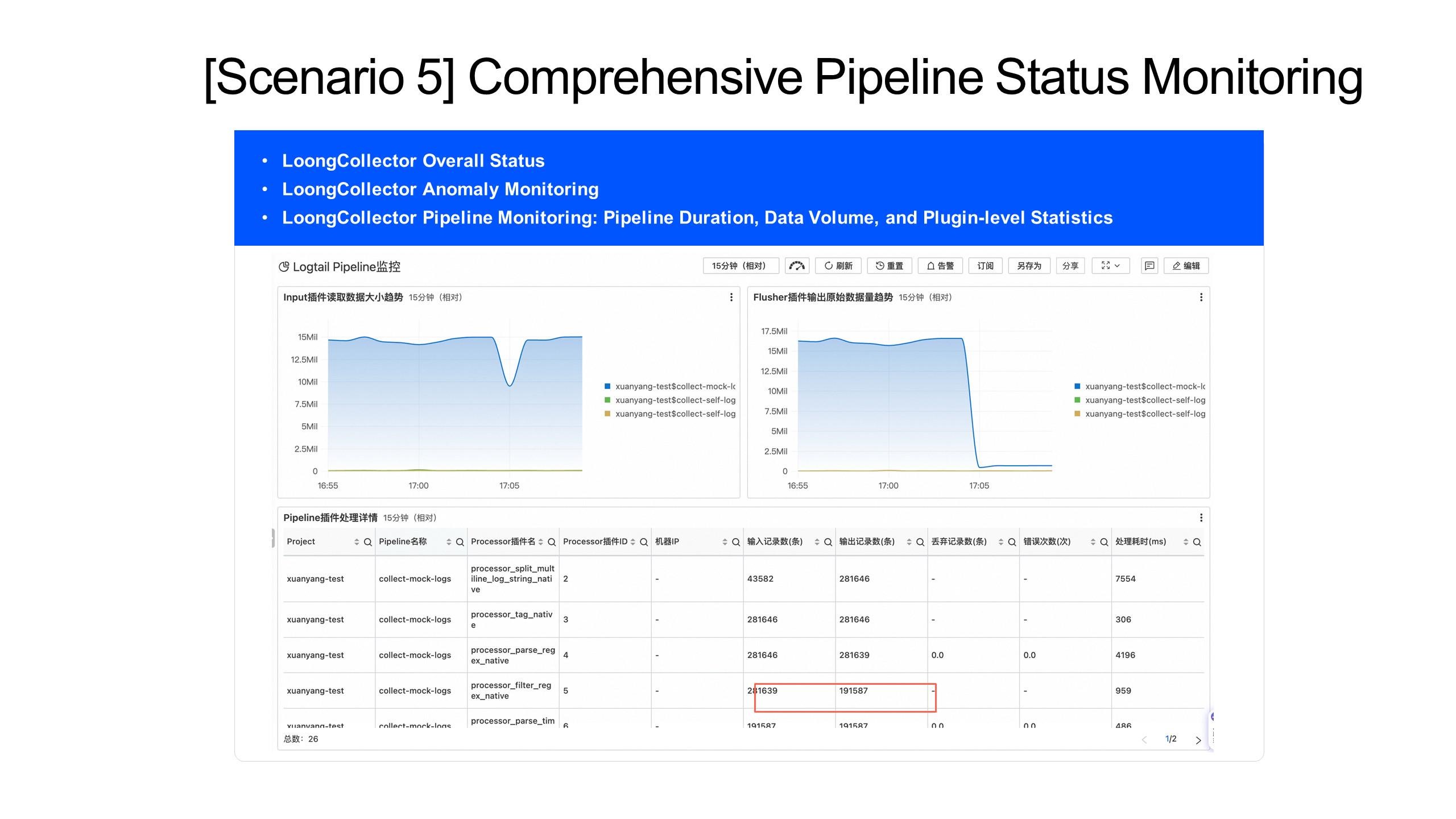

As the infrastructure for observability data collection, LoongCollector must itself be stable, yet its operating environment is complex and variable. Emphasis has therefore been placed on making its own running status observable, so that collection anomalies or bottlenecks in large-scale clusters can be detected in time.

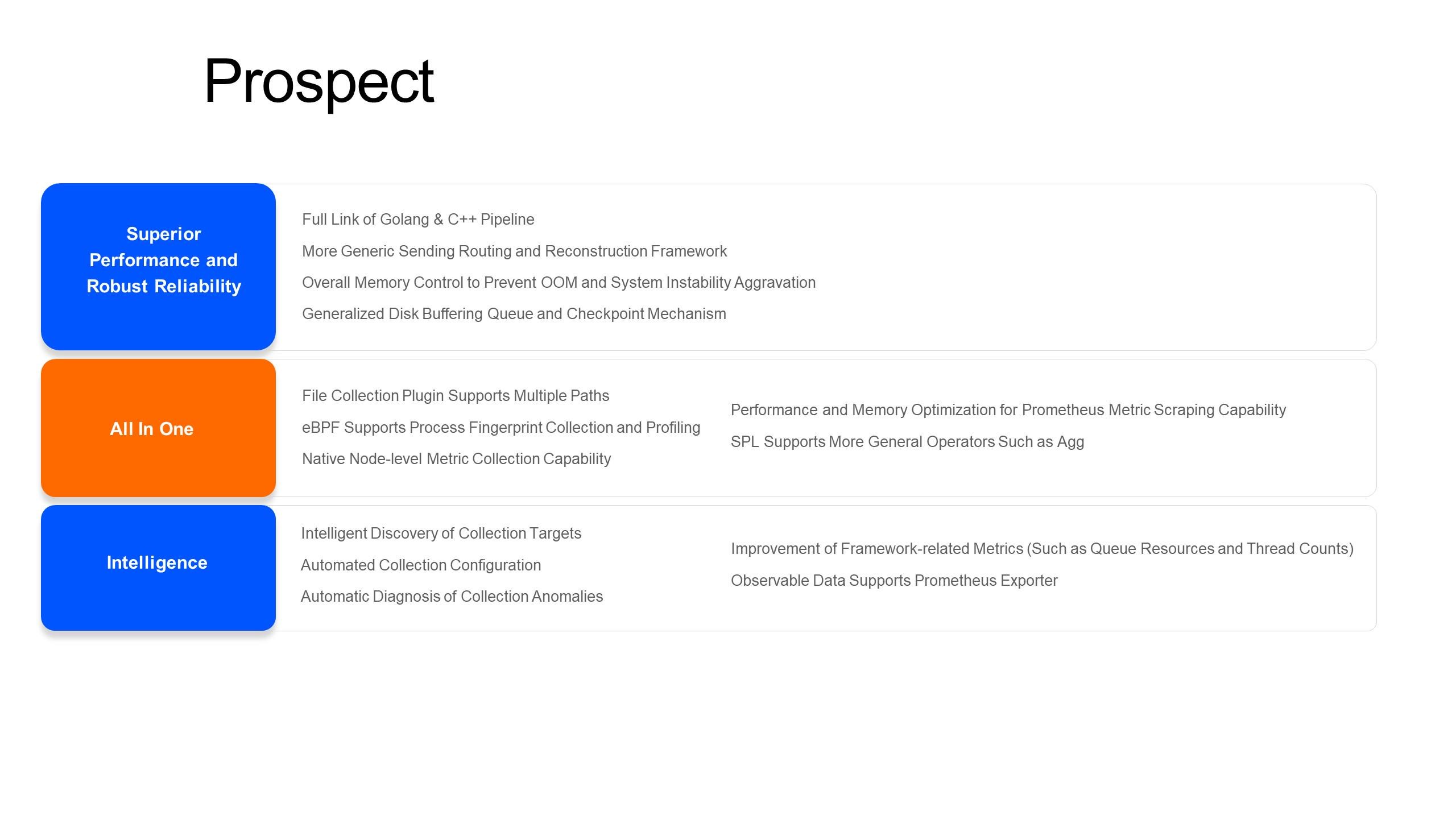

In the future, LoongCollector will continue to follow the principle of long-termism and strengthen its core competitiveness to meet the needs of the fast-developing AI era.

We will further optimize the performance and enhance the stability of LoongCollector through C++ implementation, framework optimization, memory control, and caching.

By further enhancing capabilities such as Prometheus scraping support, deep eBPF integration, and adding host metric collection, we will achieve a better All-in-One agent.

We will also implement a series of optimizations to make LoongCollector more automated and intelligent, so that it can better serve the AI era.

LoongCollector will not only be a tool, but also a cornerstone for building intelligent computing infrastructure. You can try it out on GitHub, participate in LoongCollector and other projects of LoongSuite, and work together to illuminate the future of AI with observability.
