Author: Wang Cheng

If the LLM is the brain, MCP is the hands and feet. LLMs keep raising the floor of intelligence, while MCP keeps raising the ceiling of creativity. Just as all applications and software are being transformed by AI, all of them will in turn be reshaped by MCP. Over the past year or two, industry practitioners have spent considerable resources optimizing models; in the coming years, attention will shift to building intelligent agents around MCP, a vast territory that lies outside the dominion of the large model vendors.

Although AI still faces ROI challenges in the short term, almost no one doubts its future, and no one wants to miss out on this "arms race."

We have recently open-sourced or released related capabilities that support the MCP protocol to reduce the engineering costs for developers to adopt MCP. For more details, visit:

1. Why is MCP Getting Popular?

2. What are the Differences Between MCP and Function Calling?

3. MCP Changes the Supply Side, But the Transformation is on the Consumption Side

4. Has MCP Accelerated the Monetization of Large Models?

5. The More Prosperous the MCP Ecosystem, What Does It Depend On?

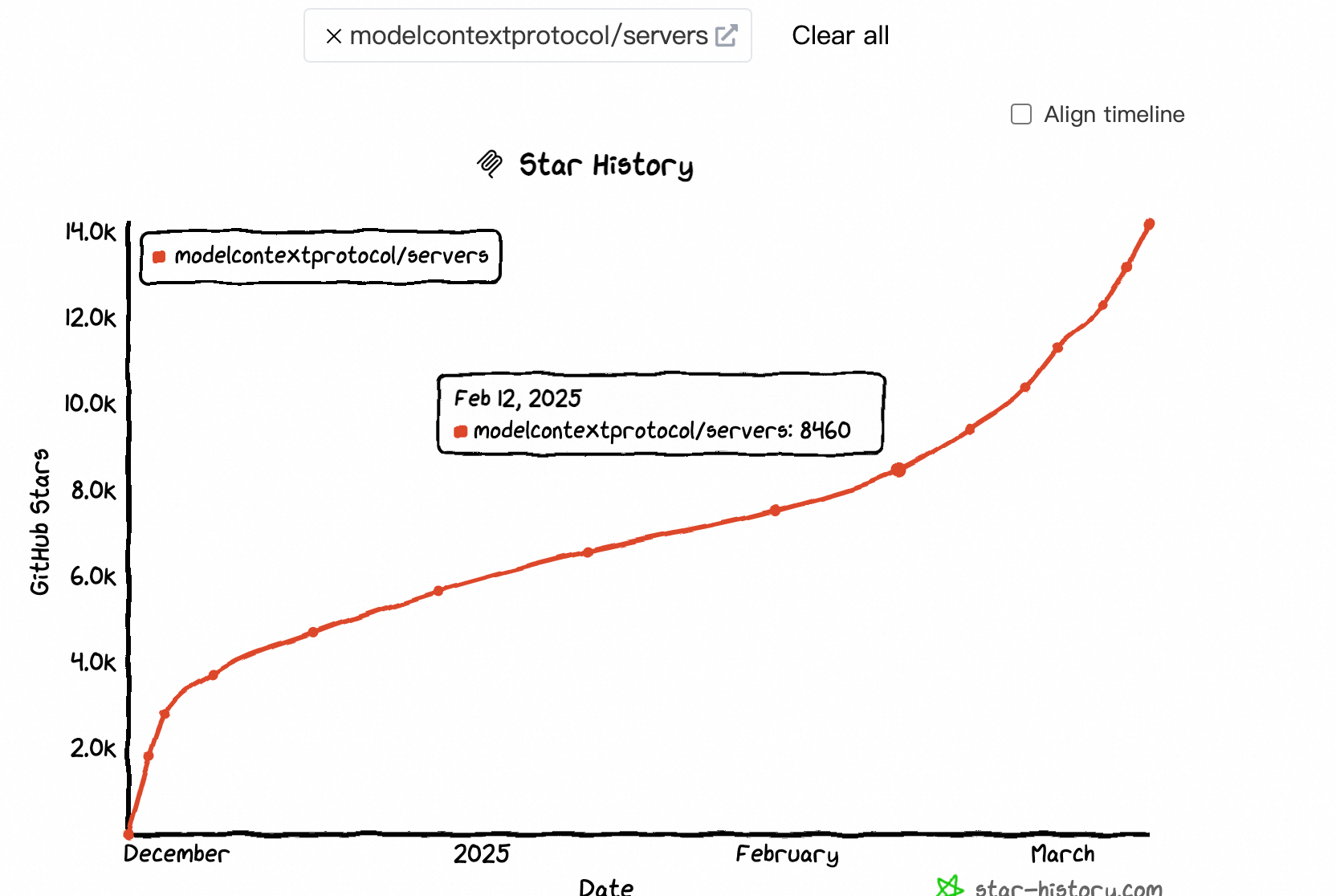

Readers who open this article share a common observation: since mid-February, MCP has become popular. Let's take a look at two key indicators that reflect the popularity of open-source projects: GitHub Stars and search indexes.

GitHub Stars growth has accelerated since February:

The WeChat Index has shown a sudden jump in search traffic since February.

Judging from community discussions, April is expected to bring a surge of MCP middleware providers in China, covering Server, Client, Server hosting, Registry, Marketplace, and more, each expanding from its own area of expertise. This article aims to clarify some easily confused concepts, share some monetization opportunities we have observed, and discuss our plans and progress on MCP.

MCP replaces fragmented integration approaches with a single standard protocol during interactions between large models and third-party data, APIs, and systems, evolving from N x N to One for All, allowing AI systems to obtain necessary data in a simpler and more reliable manner.[1]
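The shift from point-to-point integration to a single protocol can be illustrated with simple arithmetic. The sketch below is only an illustration under an assumed model: every AI application would otherwise need a bespoke connector per tool, whereas with a shared protocol each application implements one client and each tool one server. The function names and sample counts are hypothetical.

```python
def point_to_point_integrations(num_apps: int, num_tools: int) -> int:
    """Every AI application writes its own connector for every tool."""
    return num_apps * num_tools


def shared_protocol_integrations(num_apps: int, num_tools: int) -> int:
    """Each application implements one client and each tool one server."""
    return num_apps + num_tools


if __name__ == "__main__":
    # Hypothetical ecosystem sizes, chosen only to show how the gap widens.
    for apps, tools in [(3, 5), (10, 50)]:
        print(
            f"{apps} apps, {tools} tools: "
            f"{point_to_point_integrations(apps, tools)} bespoke connectors vs "
            f"{shared_protocol_integrations(apps, tools)} protocol implementations"
        )
```

The gap grows with the size of the ecosystem, which is why a standard matters more as more applications and tools appear.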

MCP was released in November 2024 and quickly drew a first wave of market attention. By February of this year, Cursor, Windsurf, and Cline had begun integrating MCP. Unlike the thousands of callers that had integrated earlier, the arrival of MCP in AI programming tools can be seen as a clarion call for the ecosystem effect of large models: it draws the large developer base of AI programming tools into the ranks of callers, thereby awakening the massive stock of existing applications and systems.

From the perspective of the industry chain, this not only addresses the isolation and fragmentation between AI applications and the vast array of classic online applications but also significantly deepens the use of AI programming tools and expands their user base. It also provides substantial monetization opportunities for AI applications and brings more traffic to classic online applications. Moreover, it could spur a market for driving specialized software with natural language. For instance, Blender MCP connects AI to Blender, allowing users to create, modify, and enhance 3D models with simple text prompts.

Within this ecosystem, MCP, AI applications, AI programming tools, and classic online applications are all beneficiaries; those who integrate first will gain the most benefits. OpenAI's announcement of support for MCP will accelerate its status as the core infrastructure for AI-native applications.

P.S. Since domestic large models have not yet acted on the model context protocol, there remains uncertainty about whether MCP can ultimately become a de facto standard in China.

Consider programmers, a key source of productivity. They no longer need to switch to Supabase to check database status; instead, from within their IDE, they can execute read-only SQL commands through a Postgres MCP server and interact directly with Redis key-value storage through a Redis MCP server.
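To make the IDE scenario concrete, here is a minimal sketch of the message an MCP client might send to a Postgres MCP server. MCP messages are JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The tool name `query`, its `sql` argument, and the `orders` table are assumptions for illustration; a real server advertises its actual tool names and schemas through `tools/list`.

```python
import json
from itertools import count

# Monotonic request ids, as JSON-RPC requires an id to match responses.
_ids = count(1)


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps(
        {
            "jsonrpc": "2.0",
            "id": next(_ids),
            "method": "tools/call",
            "params": {"name": tool_name, "arguments": arguments},
        }
    )


if __name__ == "__main__":
    # Hypothetical tool name and table; check the server's tools/list output.
    print(tools_call_request("query", {"sql": "SELECT count(*) FROM orders"}))
```

The IDE's MCP client would send this string over the configured transport and hand the result back to the coding assistant.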

While iterating code, they can also use Browsertools MCP to allow coding assistants to access live environments for feedback and debugging.

This is not new; when using cloud products, programmers tend to prefer calling the capabilities of cloud products via APIs rather than jumping between multiple cloud product consoles.

Programmers are often early adopters of new technologies. As MCP matures, regular consumers will also leverage natural language to boost the prosperity of the MCP industry chain.

Firstly, both MCP and Function Calling are technical implementations for large models to call external data, applications, and systems.

MCP was launched by Anthropic at the end of November 2024, while Function Calling was first introduced by OpenAI in June 2023. With Function Calling, the developer creates an external function that acts as an intermediary, relaying the large model's request to the external tool; most large model vendors have adopted the same method.

However, they have significant differences in positioning, development costs, and other aspects.

In summary, the comprehensive adaptation of MCP will reduce reliance on Function Calling, particularly in cross-platform and standardized tool integration scenarios.

However, Function Calling will remain irreplaceable in specific scenarios, such as model-driven dynamic decision-making, real-time task execution, proprietary ecosystem integration, etc. Additionally, in some lightweight calling scenarios, Function Calling has advantages in terms of effectiveness.

In the future, the two can complement each other, with MCP serving as the foundational protocol layer and Function Calling as the model enhancement layer, jointly promoting seamless interactions between AI and the external world.
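One way to picture the two layers working together is to compare how a tool is described in each. The sketch below assumes the widely used Chat Completions style of function definition (`type`, `function`, `parameters`) and the MCP tool descriptor fields (`name`, `description`, `inputSchema`), and converts the former into the latter. The `get_weather` tool and the helper name are hypothetical.

```python
import json


def function_def_to_mcp_tool(function_tool: dict) -> dict:
    """Map a function-calling tool definition to an MCP tool descriptor."""
    fn = function_tool["function"]
    return {
        "name": fn["name"],
        "description": fn.get("description", ""),
        # Both sides describe arguments with JSON Schema, so it carries over.
        "inputSchema": fn.get("parameters", {"type": "object", "properties": {}}),
    }


# Hypothetical tool, written the way it would be passed with a model request.
weather_function = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}

if __name__ == "__main__":
    print(json.dumps(function_def_to_mcp_tool(weather_function), indent=2))
```

The schema is nearly identical; what differs is where it lives. A function definition travels with each request to one vendor's model, while an MCP descriptor is served by the tool itself and can be discovered by any compliant client.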

Different people have varying understandings of the supply side and the consumption side. Here, we define both terms in this article:

First, we must mention Devin and Manus.

The emergence of Devin marked a qualitative change in AI programming, from programming assistant to programmer's agent. It no longer stops at code completion and assistance; it covers the entire process from requirements analysis through coding, testing, deployment, and debugging, and handles complete tasks independently. Devin is transforming the programmer community (for users in China looking for a programming agent, we recommend Lingma). Manus, in turn, is transforming ordinary internet users: interaction with AI shifts from a question-and-answer dialogue service to a general-purpose AI agent that can mobilize online services beyond the AI application itself and carry out the user's ideas autonomously and end to end, a qualitative change from "passive response" to "active co-creation."

The more intelligent the outcome, the more complex the process. The view that "cognitive load is a core barrier to engineering effectiveness" is even more pronounced in AI Agents.

Therefore, AI Agents have a stronger demand for efficient development and engineering paradigms. Unlike classic internet applications, the productization and engineering of AI Agents are considerably more complex. E-commerce applications meet the demand for users to shop without leaving home, and chat applications meet the demand for users to socialize without leaving home; these are forms of physical substitution. AI Agents, on the other hand, represent a substitution of mental and cognitive effort, assisting users in completing a full range of activities from basic survival to advanced creation.

Relying solely on Function Calling to invoke external applications is clearly not an efficient development paradigm.

Only MCP allows developers to easily craft the next Manus. It is akin to the HTTP protocol in the internet world, enabling all clients and websites to communicate based on a single standard, thereby promoting global developer collaboration and accelerating the advent of AGI.

From our observations, this is indeed the case.

Take Firecrawl as an example; this open-source project offers:

Before supporting MCP, Firecrawl already had fully automated web crawling capabilities, but it relied on traditional technologies, requiring users to manually call Firecrawl services through REST APIs or SDKs, with no seamless integration with large models.

In January of this year, Firecrawl officially introduced the MCP protocol through its integration with the Cline platform, allowing developers to invoke Firecrawl's crawling capabilities via the MCP server, achieving an automated process where "AI models directly control web scraping."

More importantly, users need not worry that protocol binding will affect scalability; in order to realize richer large model capabilities, reliance on multiple middleware providers similar to Firecrawl is essential.

Thus, MCP opens up network effects for large model middleware suppliers, accelerating the monetization capabilities of these players.

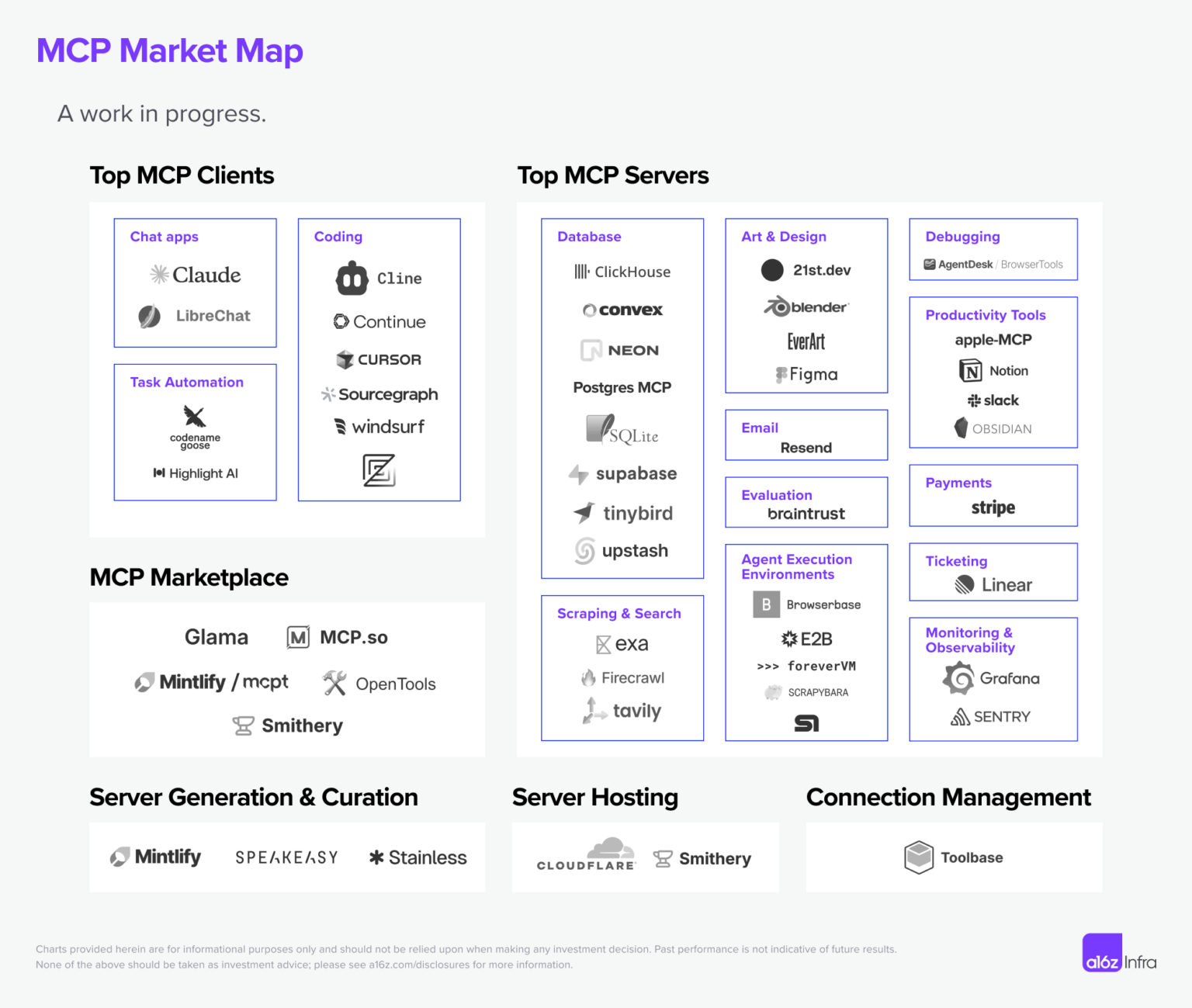

The a16z Infra team has created an MCP Market Map.[2]

This map covers the most active areas in the MCP ecosystem today; while there are still many gaps, it can provide inspiration for innovation in China.

As the adoption rate of MCP continues to rise, infrastructure and tools will play a crucial role in the scalability, reliability, and accessibility of the MCP ecosystem.

This leads to a potential outcome that could be entirely different from the traditional internet industry chain: opportunities in the B2B space will be richer compared to B2C.

Higress and its cloud product API gateway are collaborating with Tongyi Lingma, adopting a technical solution of SSE + Tool + Redis, and have launched Higress MCP Server Hosting[3]. This provides two capabilities: firstly, Higress acts as a platform providing simple IO Tool capabilities to interface with several mainstream external applications, and users can also implement complex IO Tool capabilities independently; secondly, as a gateway, it manages identity verification and authorization for access to MCP Servers.

Additionally, Nacos will release capabilities for MCP Registry, including dynamic discovery and management of MCP Servers, and distribution of dynamic prompts to manage the MCP protocol, which can assist non-MCP services in transforming into MCP services.

The combination of Nacos + Higress, along with open-source solutions such as Apache RocketMQ and OTel, is maximizing the reuse of existing cloud-native technology components, significantly reducing the construction costs of AI Agents for classic internet applications.

MCP is essentially a type of API.

The MCP Server encapsulates functional services; its essence is to provide a standardized interface on the server side via the MCP protocol. Whenever cross-network access is involved, it requires authentication, authorization, data encryption and decryption, and anti-attack mechanisms. At this point, a gateway for managing MCP Servers is necessary.

Similar to an API gateway, a gateway managing MCP will enforce access controls, route requests to the correct MCP servers, handle load balancing, and cache responses to improve efficiency. This is particularly important in multi-tenant environments, as different users and agents require different permissions. The standardized gateway will simplify interactions between clients and servers, enhance security, and provide better observability, thus making MCP deployments more scalable and manageable.
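The gateway responsibilities listed above (access control, routing, load balancing, caching) can be sketched in a few lines. This is a toy model under stated assumptions, not how Higress is implemented: the class name, the `send` callback that stands in for the real network call, and the per-tenant cache key are all hypothetical. It also assumes the tools are idempotent reads; results of tools with side effects should not be cached.

```python
import json
from dataclasses import dataclass, field
from itertools import cycle
from typing import Callable, Iterator


class AccessDenied(Exception):
    """Raised when a tenant calls a tool it has not been granted."""


@dataclass
class McpGateway:
    backends: dict[str, list[str]]         # tool name -> replica URLs
    permissions: dict[str, set[str]]       # tenant -> tools it may call
    send: Callable[[str, str, dict], str]  # (url, tool, arguments) -> result
    _rotations: dict[str, Iterator[str]] = field(default_factory=dict, init=False)
    _cache: dict[tuple[str, str, str], str] = field(default_factory=dict, init=False)

    def call(self, tenant: str, tool: str, arguments: dict) -> str:
        # 1. Access control: different tenants hold different permissions.
        if tool not in self.permissions.get(tenant, set()):
            raise AccessDenied(f"{tenant} may not call {tool}")
        # 2. Cache lookup, keyed per tenant so results never cross tenants.
        key = (tenant, tool, json.dumps(arguments, sort_keys=True))
        if key in self._cache:
            return self._cache[key]
        # 3. Routing plus round-robin load balancing across replicas.
        if tool not in self._rotations:
            self._rotations[tool] = cycle(self.backends[tool])
        result = self.send(next(self._rotations[tool]), tool, arguments)
        self._cache[key] = result
        return result
```

A production gateway would add authentication, TLS, rate limiting, cache expiry, and metrics; the point here is only that these concerns sit in one place instead of inside every MCP Server.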

You will soon experience these capabilities on the Higress gateway. Higress, as an AI-native API gateway, provides a complete open-source MCP Server hosting solution that facilitates protocol conversion from existing APIs to MCP. For more details, visit Higress Open Source Remote MCP Server Hosting Solution and Upcoming MCP Market.

Additionally, Nacos acts as the MCP Registry, playing a control plane role that not only manages metadata for tools but also transforms existing APIs into MCP protocols. Nacos can assist applications in quickly converting existing API interfaces into MCP protocol interfaces, in conjunction with the Higress AI gateway, to achieve conversions between the MCP protocol and existing protocols.
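As a rough picture of what a registry acting as control plane does, the sketch below describes an existing REST endpoint as an MCP tool descriptor and keeps the result in an in-memory registry that clients can query. It does not use or mirror the Nacos API; `RestEndpoint`, `endpoint_to_mcp_tool`, `ToolRegistry`, and the sample endpoint are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class RestEndpoint:
    name: str
    method: str
    path: str
    summary: str
    params: dict[str, str]  # parameter name -> JSON Schema type


def endpoint_to_mcp_tool(endpoint: RestEndpoint) -> dict:
    """Describe an existing REST endpoint as an MCP tool descriptor."""
    return {
        "name": endpoint.name,
        "description": f"{endpoint.summary} ({endpoint.method} {endpoint.path})",
        "inputSchema": {
            "type": "object",
            "properties": {p: {"type": t} for p, t in endpoint.params.items()},
            "required": sorted(endpoint.params),
        },
    }


class ToolRegistry:
    """In-memory stand-in for a registry that tracks tools per MCP server."""

    def __init__(self) -> None:
        self._tools: dict[str, dict[str, dict]] = {}

    def register(self, server: str, tool: dict) -> None:
        self._tools.setdefault(server, {})[tool["name"]] = tool

    def deregister(self, server: str) -> None:
        self._tools.pop(server, None)

    def discover(self) -> list[tuple[str, str]]:
        """Return (server, tool name) pairs currently available."""
        return sorted((s, name) for s, tools in self._tools.items() for name in tools)


if __name__ == "__main__":
    registry = ToolRegistry()
    endpoint = RestEndpoint(
        "get_order", "GET", "/orders/{id}", "Fetch one order", {"id": "string"}
    )
    registry.register("order-service", endpoint_to_mcp_tool(endpoint))
    print(registry.discover())
```

In this picture the registry owns the tool metadata, while a gateway performs the actual protocol conversion at request time.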

In the MCP ecosystem, due to the more complex and diverse calling relationships, observability is also a crucial infrastructure that cannot be overlooked:

As a standardized map service capability platform, Amap has taken the lead in launching its MCP Server, offering 12 core functions to support the development of enterprise-grade intelligent agent applications.

We anticipate that a large wave of MCP Servers and MCP middleware will emerge quickly in China, accelerating the productization and engineering of AI Agents.

[1] https://mp.weixin.qq.com/s/zYgQEpdUC5C6WSpMXY8cxw

[2] https://a16z.com/a-deep-dive-into-mcp-and-the-future-of-ai-tooling/

[3] https://github.com/alibaba/higress/tree/main/plugins/wasm-go/mcp-servers
