By Nanxian

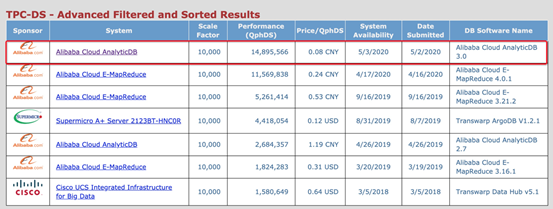

On May 4, 2020, Transaction Processing Performance Council (TPC), the world's most famous non-profit data management system evaluator, officially published the results for AnalyticDB on the TPC-DS on its official website. AnalyticDB passed the rigorous TPC-DS tests with a Query-per-Hour Performance (QphDS) of 14895566 and a unit price of CNY 0.08, as shown in the following figure. AnalyticDB performed 29% better than the world record set by an in-depth optimization of Spark, at a unit price of two-thirds less.

These results make AnalyticDB the world's leading data warehouse in terms of performance and cost-effectiveness on the TPC-DS benchmark, breaking its own record set on April 26, 2019. For more information, see TPC-DS Results.

Enterprises' data needs are constantly changing, evolving from traditional big data to fast data. This is mainly manifested in the following four areas (some data is sourced from Gartner and IDC):

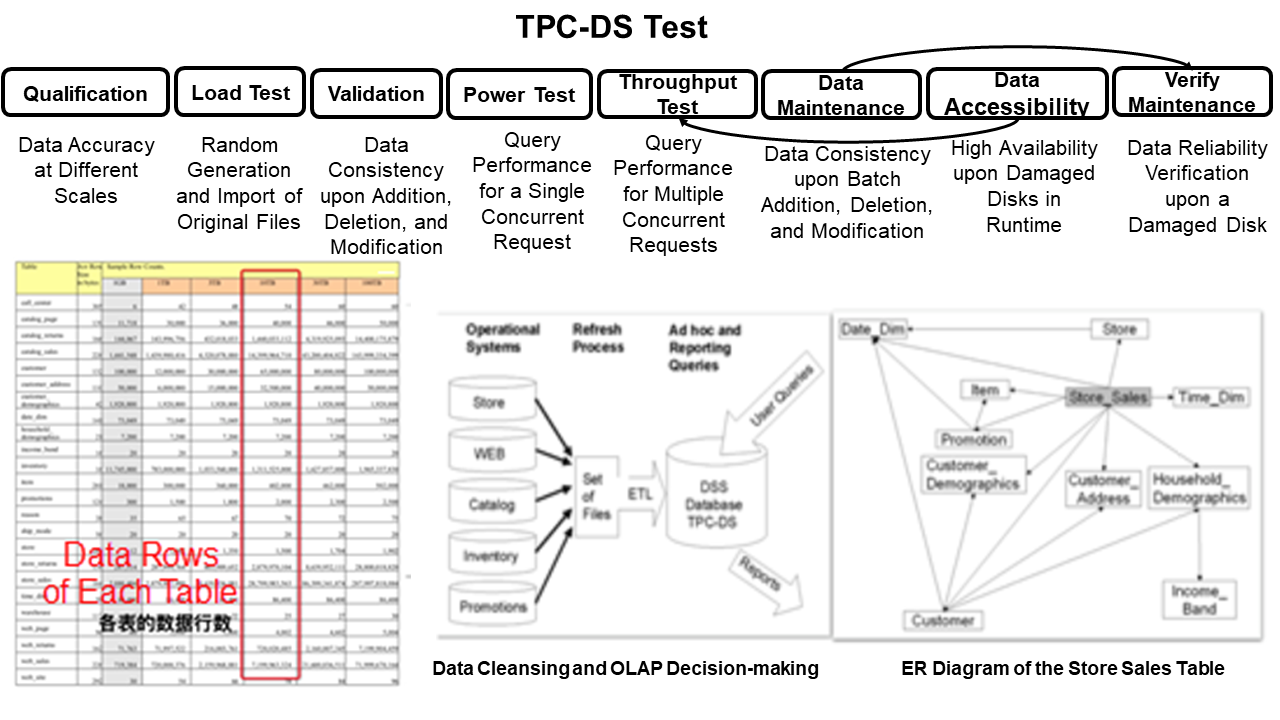

With the explosive data growth and full migration of enterprise data to the cloud, the key technical metrics of cloud-native data warehouses will be the storage and processing performance and cost-effectiveness of massive data. The TPC-DS test is a rigorous assessment and audit of a data warehouse in terms of:

TPC-DS is a core benchmark for measuring the maturity and competitiveness of a data warehouse.

The TPC-DS test of AnalyticDB is an important process to improve the product development capabilities of Alibaba and verify core technical breakthroughs. The core technical breakthroughs in this process are helping our customers improve the performance of real-time processes and greatly reduce costs, getting them ready for a new era of database and big data integration and online businesses.

AnalyticDB is Alibaba Cloud's only proprietary PB-level real-time data warehouse that has been verified by using it in massive core businesses. Since its launch in 2012, nearly a hundred versions have been released, providing support for many online analytics businesses, such as e-commerce, advertising, logistics, entertainment, tourism, and risk control. Alibaba Cloud started providing AnalyticDB for external enterprises in 2014, initially serving traditional large and medium-sized enterprises, government institutions, and numerous internet companies in more than ten industries.

AnalyticDB for MySQL 3.0 is a cloud-native data warehouse, which has evolved based on advances in database and big data integration and engineering over the past eight years. In the TPC-DS test, AnalyticDB for MySQL 3.0 fully demonstrated its outstanding cloud-native technology advantages, proving that it is nearly 10 times better than its closest competition.

TPC is the world's most famous non-profit data management system evaluator. It formulates the standards, performance, and price metrics for business application benchmarks and publishes the test results. The benchmark results are a core metric for measuring the performance and cost-effectiveness of a data management system.

The TPC-DS test simulates a typical decision support system for retail data warehouses. It is the most challenging database benchmark and is an upgraded version of TPC-H. It uses the star and snowflake schemas, and the test set includes complex applications such as big data set statistics, report generation, online query, and data mining, making it similar to a real scenario.

The following factors make TPC-DS challenging:

The following figure displays the TPC-DS test procedure and data model:

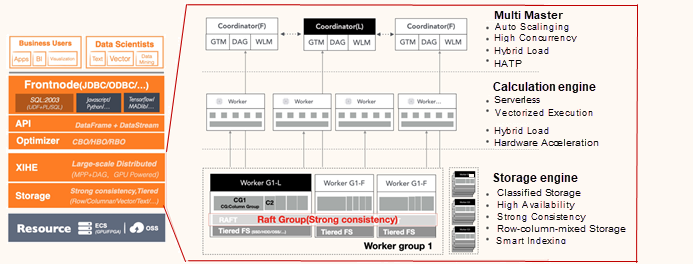

AnalyticDB for MySQL 3.0 uses a cloud-native architecture that separates computing and storage and also separates cold and hot data. It supports high-throughput real-time writing and strong data consistency, ensuring high performance with hybrid loads of high-concurrency queries, and high-throughput batch processing.

The first layer is the access layer, which consists of Multi-Master scalable coordinators. The coordinators implement protocol access, SQL parsing and optimization, real-time data writing to shards, data scheduling, and query scheduling.

The second layer is the computing engine, which supports the integrated execution of distributed massively parallel processing (MPP) and directed acyclic graphs (DAGs). By using intelligent optimizer, it supports high-concurrency and complex SQL statements. Leveraging the cloud-native infrastructure, compute nodes implement elastic scheduling and can be scaled out in minutes or even seconds based on business needs, which ensures effective resource utilization.

The third layer is the Raft-based distributed, real-time, and high-availability storage engine. It implements parallelization based on data shards and multiple Raft groups, separates cold and hot data to reduce costs through tiered storage, and achieves high performance through row-column-mixed storage and intelligent indexing.

AnalyticDB for MySQL 3.0 was independently researched and developed by Alibaba Cloud. Based on the Raft protocol, it provides a distributed, highly consistent, and highly reliable lightweight storage architecture for high-throughput real-time data writing. Therefore, it is suitable for scenarios that require very high analytics performance.

Compared with HBase and Kudu, AnalyticDB for MySQL 3.0 provides higher performance in SQL analytics. It also provides high consistency and visibility in real-time writing and supports ACID, which is not supported by open-source Elasticsearch and ClickHouse.

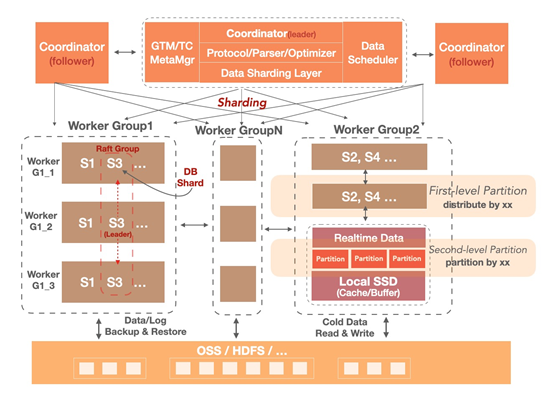

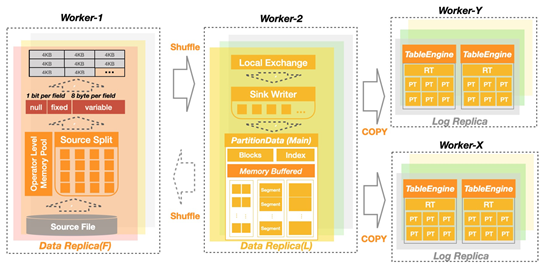

The following figure shows the overall storage architecture of AnalyticDB.

AnalyticDB for MySQL 3.0 is a database-based parallel data model. It is implemented in a multi-layer parallel architecture based on storage models and MPP computing models.

In the TPC-DS test, the distributed parallel storage architecture works closely with the query optimization and execution engine that perceives storage distribution, resulting in excellent overall performance.

Data import is the basic capability of a cloud data warehouse. Accordingly, TPC-DS requires high import performance.

The first optimization we made was to adopt a lightweight build, which converts real-time data into full partition data. AnalyticDB for MySQL 3.0 implements a lightweight local build with full memory and a single replica. Compared with the earlier MR-like full build, this greatly reduces the overhead of the Distributed File System (DFS) read and write and writing data to disks. It allows the system to make full use of the CPU to improve performance by localizing vector commands.

Then, we turned to I/O and network optimization. We used technologies such as DirectIO, Binary, full stream, asynchronization, and zero-copy to significantly improve import performance.

Next, we reduced the data volume. We used the Raft 2+1 technology (2 data records + 1 log record) to reduce data volumes by a third while ensuring data reliability. We further compressed the data by using the high-performance LZ4 compression algorithm, which greatly reduces the data read and write I/O and network transmission overhead.

Finally, we were able to import 50 million records per second on 18 nodes in TPC-DS.

AnalyticDB for MySQL 3.0 provides high-throughput real-time data update capabilities based on Raft and achieves excellent performance by using the full asynchronization, zero-copy, and high-efficiency coding compression technologies during writing. In the TPC-DS Dynamic Mechanical Loading (DML) test, AnalyticDB can write tens of millions of transactions per second (TPS) in real-time while ensuring linear consistency (allowing query upon writing). In actual production environments, the writing performance is fully scalable, easily reaching 100 million TPS.

In TPC-DS, the data modification and ACID capabilities of the data warehouse need to be verified. AnalyticDB for MySQL 3.0 supports ETL transactions and ACID capabilities (supporting a complete TPC-C test). In the TPC-DS DML test, the Multiversion Concurrency Control (MVCC) capability of the storage engine assumes a major role. The storage engine is divided into real-time data (Delta), partition data (Main), and asynchronous data conversion (Build), creating an LSM-like write optimization architecture. AnalyticDB provides block-level MVCC and snapshot isolation to ensure the isolation (visibility) of data during ETL and data update and the atomicity of data update upon disk failures.

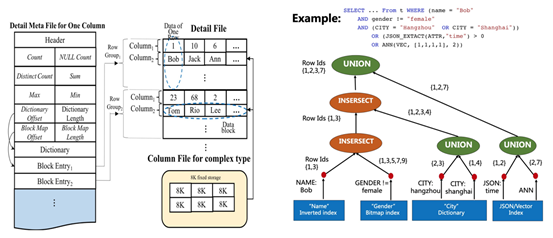

The proprietary row-column-mixed storage format enables AnalyticDB for MySQL 3.0 to ensure a high filtering rate and high throughput scanning. This format ensures better details checking than the column-only-storage format of open-source ORCFile, provides higher random read performance than Parquet, and has a lower cost than the data redundancy models of row- and column-oriented storage. In AnalyticDB for MySQL, each table has a row-oriented or column-oriented storage file. Data is divided into different row groups. In a row group, data is stored in blocks of columns. In a block, data with a fixed length or unfixed length (toast) is encoded and compressed and can be read randomly or sequentially.

In the TPC-DS test, we significantly improved storage scanning performance by properly configuring the storage block size (4 KB), data block prefetching, and source operator vector reading. Having accurate statistical information (such as min, max, sum, and cnt) for storage can accelerate Smart Scan and provide various statistics for the query optimizer, allowing it to formulate an optimal execution plan.

AnalyticDB for MySQL provides a proprietary intelligent indexing framework that supports five types of indexes: inverted indexes (string), bitmap indexes, KD-tree indexes (digit), JSON indexes, and vector indexes. Different types of indexes support combinations of multiple conditions (intersect, union, and except) at the column level. Unlike a traditional data framework, this framework does not require the creation of a compound index (which avoids space expansion) and supports Index Condition Pushdown (ICP) for more conditions such as OR and NOT. For greater ease of use, you can enable automatic full-column indexing when creating a table in AnalyticDB. ICP is dynamically selected through Cost-Based Optimization (CBO) during query, and the ICP index chains will be merged and output at the predicate calculation layer in a streaming and progressive manner.

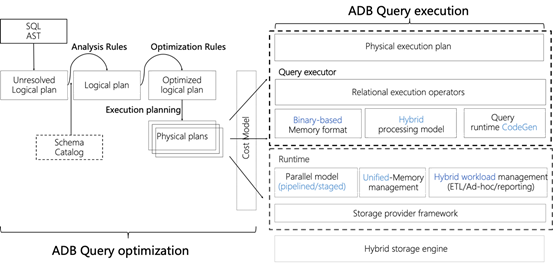

The query engine of AnalyticDB for MySQL 3.0 consists of the proprietary query optimizer and query executor. This is an important component that enables AnalyticDB for MySQL to provide high-concurrency and high-throughput data warehouse analytics. Its core advantages over a single computing engine include its awareness of data characteristics, in-depth integration with the storage engine architecture, and support for data warehouse analytics scenarios such as reporting, ad hoc, and ETL.

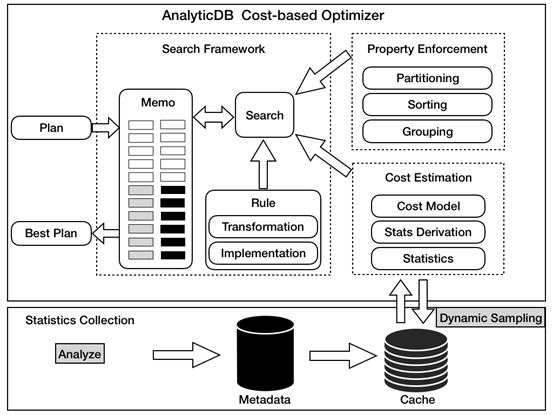

The optimizer of AnalyticDB for MySQL is a distributed cloud-native real-time data warehouse product. It must be able to deal with the problems of a traditional optimizer such as NP-hard issues in complex Join Reorder and the uncertainty of cost estimates, as well as new problems arising from distributed parallel plans in a distributed environment. CBO, the latest achievement of AnalyticDB for MySQL 3.0, was first used in the TPC-DS test and has helped a lot in the overall plan optimization.

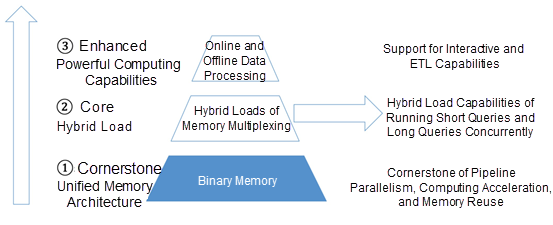

Based on the hybrid load management capability of unified memory pooling and queries, the query execution engine of AnalyticDB for MySQL uses technologies such as dynamic code generation, innovative hybrid execution models, vectorization algorithms of the single instruction, multiple data (SIMD) instruction set, and adaptive query execution for row-column-mixed storage. This ensures the superior query performance for AnalyticDB for MySQL in TPC-DS.

The cost-based optimizer is essentially a complex search problem. To solve this problem, you need to start with the following factors:

A common belief is that the traditional volcano model cannot meet the high-throughput performance requirements of analytics scenarios. With the continuous development of various systems, two types of post-evolution execution frameworks have been developed for computing engines in the industry:

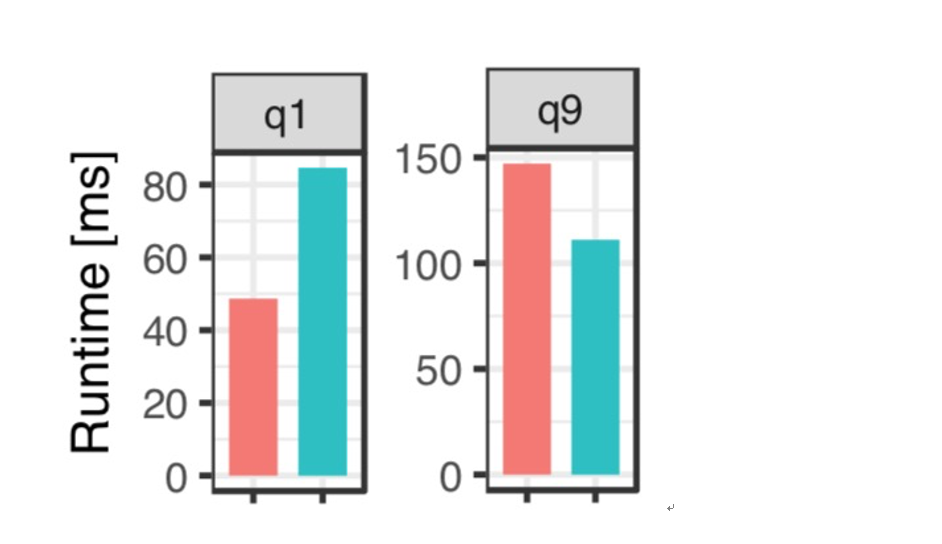

In the preceding figure, the red column indicates JIT compilation, while the green column indicates vectorization. AnalyticDB for MySQL is the only proprietary analytics engine that supports these two query modes. The hybrid execution framework adaptively integrates multiple computing-intensive operators into a drive based on the vectorization mode. It empowers a query execution engine that features both compilation and vectorization.

Efficient memory management is the cornerstone of computing optimization. In a type-oriented memory model, different basic type storages are used for different data types. As a result, different types of data cannot be stored in contiguous memory addresses. Instead, the data can be stored only by column, reducing the additional cost of multiple memory objects. In addition, memory cannot be reused across different memory types, which results in additional memory management costs.

The query execution engine of AnalyticDB for MySQL solves the preceding problems through unified memory management.

Join operations between a fact table and a dimension table are typical in a data warehouse. The ratio of the data volumes of the two tables can reach into the tens of millions. The computing costs of Join operations arise mainly from data scanning. Therefore, we use the DynamicFilterPushDown method to greatly reduce the data volume of the left table. In addition, the data warehouse contains a large number of WITH statements and implicitly shared statements. In this case, common table expressions (CTEs) can be shared to avoid repeated computing.

After DynamicFilterPushDown (DFP) reads the Join operation with a high filtering rate (low hit rate) and the data on the probe from the storage, most of the data will be discarded. Therefore, if the evaluated build data is maintained below a small threshold, we can use the build result as the filter condition (dynamic filter) for the left table and push down the data to the storage, reducing the scanning amount. The optimizer's primary task is to properly evaluate the number of distinct values (NDV) that hit the Join condition at the build end.

Different Join orders directly affect the range and granularity of dynamic filters. The cost of a Join operation that can be optimized is significantly different from that of a real Hash join, which affects the Join orders. Based on the complete and scalable CBO framework of AnalyticDB for MySQL, we selected the globally optimal dynamic filter solution based on cost considerations.

We implement an efficient DFP at the following three key points:

For a CTE, more than 30% of SQL statements in TPC-DS contain the "with as" subquery. The "with as" subquery is referenced multiple times in the main query, and each reference causes repeated computing, resulting in a waste of resources. For basic CTE optimization, the results of the "with" clause are reused for multiple references to reduce the cost of repeated computing. However, in some scenarios, derivation of the relationship with the main query can further reduce the amount of computing in the "with" subquery. In this case, directly sharing the complete "with" clause will lead to additional performance rollback. Then, the optimal plan generated after inline can be used to identify common subtrees. This further reduces the amount of repeated computing and ensures no bad cases. In implementing the executor, we introduced deadlock detection to solve the deadlock problem by analyzing the dependencies among multiple consumers of common subtrees.

Since its debut in the cloud in 2014, AnalyticDB has been available in major regions around the world and has entered the global analytics market. In 2018, AnalyticDB was selected as a contender in Forrester's research report "The Forrester Wave™: CloudData Warehouse, Q4 2018" and Gartner's "Magic Quadrant for Data Management Solutions for Analytics" report.

AnalyticDB is widely used in the Alibaba Group and by our customers to replace Teradata and Oracle RAC. It has been applied and verified on a large scale in core industries such as the internet, new retail, finance, taxation, transportation, meteorology, and logistics. For example, in the logistics industry, AnalyticDB has enabled China Post to perform large-scale centralized query and analysis on nationwide logistics data for the first time.

AnalyticDB has been verified theoretically by the Very Large Data Bases (VLDB) paper, “AnalyticDB: Real-time OLAP Database System at Alibaba Cloud”. This is an honor reserved for top large-scale commercial systems, such as Google F1 (VLDB'2013) and AWS Aurora (SIGMOD'2017). AnalyticDB has also proven itself through the TPC-DS benchmark (proving itself to be the world-leader in cost-effectiveness and performance), through customer feedback (including major government ministries and internet-scale customers), and through successful application in the Alibaba Group over many years. We have now upgraded AnalyticDB to a cloud-native data warehouse based on the highly efficient cloud computing and the integration of databases and big data.

In the future, big data and database integration and cloud-native will redefine the data warehouse for the cloud computing era. Breaking the TPC-DS world record is just a start. AnalyticDB will continue to strive to become an infrastructure for digital transformation and upgrade and a way for enterprises to realize the value of online data.

Alibaba Cloud Makes a Big Move with Database Autonomy Service (DAS)

Alibaba Cloud New Products - August 10, 2020

ApsaraDB - July 2, 2020

Alibaba EMR - May 7, 2020

ApsaraDB - January 9, 2023

ApsaraDB - November 17, 2020

Alibaba Clouder - February 11, 2021

ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB RDS for MariaDB

ApsaraDB RDS for MariaDB

ApsaraDB RDS for MariaDB supports multiple storage engines, including MySQL InnoDB to meet different user requirements.

Learn More ApsaraDB RDS for SQL Server

ApsaraDB RDS for SQL Server

An on-demand database hosting service for SQL Server with automated monitoring, backup and disaster recovery capabilities

Learn MoreMore Posts by ApsaraDB