By Chen Qiukai (Qiusuo)

KubeDL (Kubernetes-Deep-Learning) is Alibaba's open-source, Kubernetes-based framework for managing AI workloads. By sharing the experience Alibaba has gained from scheduling and managing large-scale machine learning jobs in its own scenarios, we hope to contribute to the technical community. KubeDL has joined the Cloud Native Computing Foundation (CNCF) as a Sandbox project. We will continue to explore best practices for cloud-native AI to help algorithm scientists innovate simply and efficiently.

We have added model version management in the latest KubeDL release, 0.4.0. It lets AI scientists track, tag, and store model versions as easily as they manage images. More importantly, in the classic machine learning pipeline, the training and inference stages are relatively independent, so the training -> model -> inference pipeline that algorithm scientists have in mind is disconnected in practice. As the intermediate product between training and inference, the model can serve as the link that connects the two stages.

GitHub: https://github.com/kubedl-io/kubedl

Website: https://kubedl.io/model/intro/

Model files are the product of distributed training and the essence of an algorithm, retained after full iteration and search. Algorithm models have become valuable digital assets in the industry. Different distributed frameworks usually output model files in different formats. For example, TensorFlow training jobs usually output CheckPoint (.ckpt), GraphDef (.pb), SavedModel, and other formats, while PyTorch output is usually suffixed with .pth. When loading a model, each framework parses the runtime data-flow graph, the run parameters, their weights, and other information stored in it. To the file system, however, they are all just files in a particular format, like JPEG or PNG files.

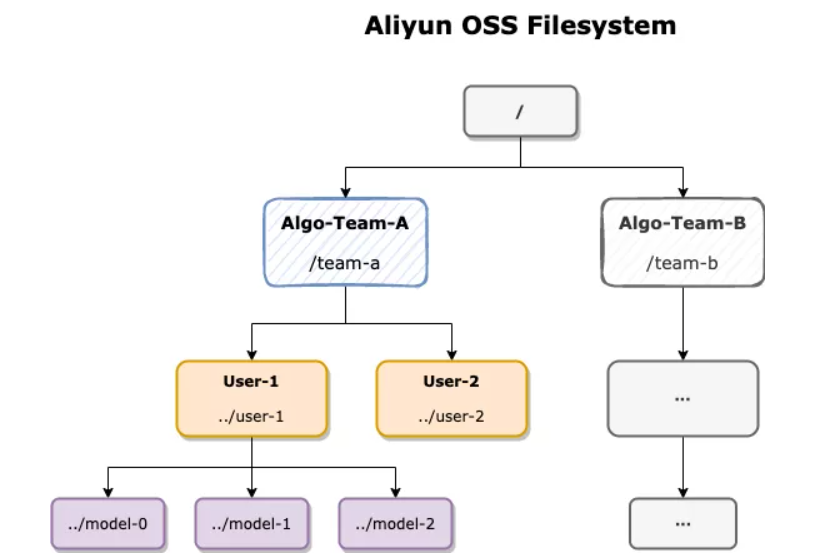

Therefore, the typical management method is to treat them as files and host them in a unified object storage service (such as Alibaba Cloud OSS or AWS S3). Each tenant or team is assigned a directory, and its members store model files in their own subdirectories. SRE manages read and write permissions centrally.

The advantages and disadvantages of this management method are clear:

Considering the situation above, KubeDL builds on the advantages of Docker image management and introduces a set of image-based model management APIs. They integrate distributed training and inference services more closely and naturally, and they reduce the complexity of model management.

Image is the soul of Docker and the core infrastructure in the container era. The image itself is a layered immutable file system, and the model file can naturally serve as an independent image layer in it. The combination of the two can bring other possibilities:

Based on the model image, we can use open-source image management components to maximize the advantages brought by Image.

KubeDL model management introduces two resource objects: Model and ModelVersion. Model represents a specific model, and ModelVersion represents a specific version of the model during iteration. A set of ModelVersions is derived from the same Model. The following is an example:

```yaml
apiVersion: model.kubedl.io/v1alpha1
kind: ModelVersion
metadata:
  name: my-mv
  namespace: default
spec:
  # The model name for the model version
  modelName: model1
  # The entity (user or training job) that creates the model
  createdBy: user1
  # The image repo to push the generated model
  imageRepo: modelhub/resnet
  imageTag: v0.1
  # The storage will be mounted at /kubedl-model inside the training container.
  # Therefore, the training code should export the model at /kubedl-model path.
  storage:
    # The local storage to store the model
    localStorage:
      # The local host path to export the model
      path: /foo
      # The node where the chief worker run to export the model
      nodeName: kind-control-plane
    # The remote NAS to store the model
    nfs:
      # The NFS server address
      server: ***.cn-beijing.nas.aliyuncs.com
      # The path under which the model is stored
      path: /foo
      # The mounted path inside the container
      mountPath: /kubedl/models
---
apiVersion: model.kubedl.io/v1alpha1
kind: Model
metadata:
  name: model1
spec:
  description: "this is my model"
status:
  latestVersion:
    imageName: modelhub/resnet:v1c072
    modelVersion: mv-3
```

The Model resource only corresponds to the description of a certain type of model and tracks the latest version of the model and its image name to inform the user. The user mainly uses ModelVersion to customize the configuration of the model:

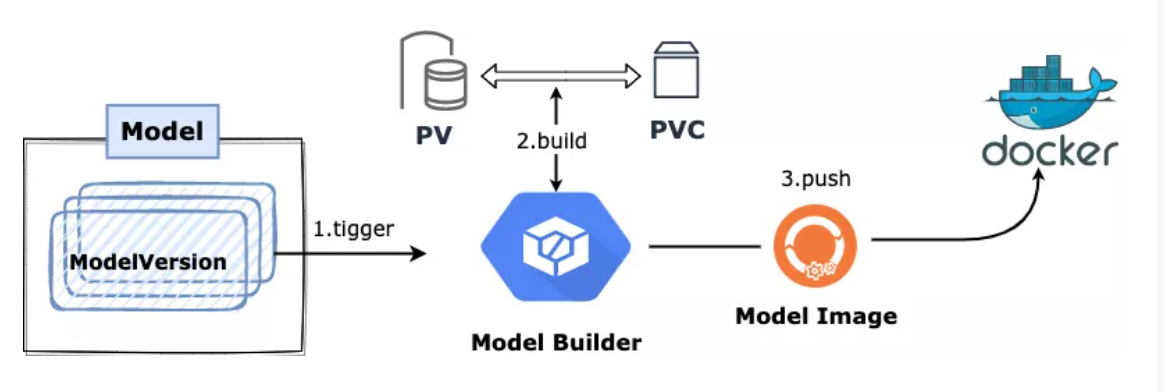
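The relationship between the two resources can be sketched in a few lines of Python. This is only an illustration of the data model described above, not KubeDL code: the class names, fields, and the "last added version is the latest" rule are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class ModelVersion:
    """One immutable iteration of a model, stored as a container image."""
    name: str
    model_name: str
    image_repo: str
    image_tag: str

    @property
    def image_name(self) -> str:
        return f"{self.image_repo}:{self.image_tag}"


@dataclass
class Model:
    """A model and the set of versions derived from it."""
    name: str
    description: str = ""
    versions: List[ModelVersion] = field(default_factory=list)

    def add_version(self, version: ModelVersion) -> None:
        if version.model_name != self.name:
            raise ValueError("version belongs to a different model")
        self.versions.append(version)

    @property
    def latest_version(self) -> Optional[ModelVersion]:
        # Assumption: the most recently added version is the latest one,
        # loosely mirroring status.latestVersion on the Model resource.
        return self.versions[-1] if self.versions else None


model = Model(name="model1", description="this is my model")
model.add_version(ModelVersion("mv-1", "model1", "modelhub/resnet", "v0.1"))
model.add_version(ModelVersion("mv-2", "model1", "modelhub/resnet", "v0.2"))
latest = model.latest_version
print(latest.name, latest.image_name)  # mv-2 modelhub/resnet:v0.2
```

Marking `ModelVersion` as frozen reflects the idea that a version, once built, is not edited in place; a new iteration produces a new version.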

When KubeDL listens to the creation of ModelVersion, the workflow of model building is triggered:

At this point, the model version described by the ModelVersion is persisted in the image repository and can be distributed to downstream inference services for consumption.
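To make the idea of "a model as an image layer" concrete, the sketch below renders a Dockerfile that adds the exported model directory as the only layer on top of a small base image. The base image, the function names, and the use of a Dockerfile at all are assumptions for illustration; the actual build step inside KubeDL may work differently.

```python
def render_model_dockerfile(model_dir: str,
                            target_dir: str = "/kubedl-model",
                            base_image: str = "busybox") -> str:
    """Return a Dockerfile whose single added layer is the model directory."""
    # Hypothetical layout: the model files sit in `model_dir` inside the
    # build context and end up at `target_dir` in the resulting image.
    return f"FROM {base_image}\nCOPY {model_dir} {target_dir}\n"


def image_reference(image_repo: str, image_tag: str) -> str:
    """Join imageRepo and imageTag into a pushable image name."""
    return f"{image_repo}:{image_tag}"


dockerfile = render_model_dockerfile("build/")
print(dockerfile)
print(image_reference("modelhub/resnet", "v0.1"))  # modelhub/resnet:v0.1
```

Because the model lives in its own layer, it can be pulled, cached, and tagged by any tool that already understands container images.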

Although ModelVersion supports independent creation and initiation of building, we prefer to automatically trigger the building of the model after the distributed training job is successfully completed, constituting a natural pipeline.

KubeDL supports this submission method. Take TFJob as an example: when launching distributed training, you specify the output path of the model file and the address of the image repository to push it to. When the job succeeds, a ModelVersion object is created automatically, and its createdBy field points to the upstream job name. No ModelVersion is created if the job fails or terminates early.

The following is an example of distributed MNIST training. The model file is written to the path /models/model-example-v1 on the local node. When the job completes successfully, the model build is triggered.

```yaml
apiVersion: "training.kubedl.io/v1alpha1"
kind: "TFJob"
metadata:
  name: "tf-mnist-estimator"
spec:
  cleanPodPolicy: None
  # modelVersion defines the location where the model is stored.
  modelVersion:
    modelName: mnist-model-demo
    # The dockerhub repo to push the generated image
    imageRepo: simoncqk/models
    storage:
      localStorage:
        path: /models/model-example-v1
        mountPath: /kubedl-model
        nodeName: kind-control-plane
  tfReplicaSpecs:
    Worker:
      replicas: 3
      restartPolicy: Never
      template:
        spec:
          containers:
            - name: tensorflow
              image: kubedl/tf-mnist-estimator-api:v0.1
              imagePullPolicy: Always
              command:
                - "python"
                - "/keras_model_to_estimator.py"
                - "/tmp/tfkeras_example/" # model checkpoint dir
                - "/kubedl-model" # export dir for the saved_model format
```

```shell
% kubectl get tfjob
NAME                 STATE       AGE     MAX-LIFETIME   MODEL-VERSION
tf-mnist-estimator   Succeeded   10min                  mnist-model-demo-e7d65

% kubectl get modelversion
NAME                     MODEL                    IMAGE                    CREATED-BY           FINISH-TIME
mnist-model-demo-e7d65   tf-mnist-model-example   simoncqk/models:v19a00   tf-mnist-estimator   2021-09-19T15:20:42Z

% kubectl get po
NAME                                    READY   STATUS      RESTARTS   AGE
image-build-tf-mnist-estimator-v19a00   0/1     Completed   0          9min
```

Through this mechanism, other artifact files that are produced only when the job succeeds can also be persisted into the image and used in subsequent stages.
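The rule described above, that a ModelVersion is created only for a successful job and records the job as its creator, can be sketched as follows. The dictionary shape of the job (in particular `status.state`), the function name, and the way the five-character suffix is generated are assumptions made for this example; they are not taken from the KubeDL controller.

```python
import secrets
from typing import Optional


def model_version_for_job(job: dict, suffix: Optional[str] = None) -> Optional[dict]:
    """Return a ModelVersion manifest for a succeeded job, otherwise None."""
    # Hypothetical status shape: {"status": {"state": "Succeeded"}}.
    if job.get("status", {}).get("state") != "Succeeded":
        return None
    template = job["spec"]["modelVersion"]
    # Random 5-character suffix, imitating names such as mnist-model-demo-e7d65.
    suffix = suffix or secrets.token_hex(3)[:5]
    return {
        "apiVersion": "model.kubedl.io/v1alpha1",
        "kind": "ModelVersion",
        "metadata": {"name": f"{template['modelName']}-{suffix}"},
        # createdBy points back to the upstream job name.
        "spec": {**template, "createdBy": job["metadata"]["name"]},
    }


job = {
    "metadata": {"name": "tf-mnist-estimator"},
    "spec": {"modelVersion": {"modelName": "mnist-model-demo",
                              "imageRepo": "simoncqk/models"}},
    "status": {"state": "Succeeded"},
}
mv = model_version_for_job(job, suffix="e7d65")
print(mv["metadata"]["name"])  # mnist-model-demo-e7d65

failed = model_version_for_job({**job, "status": {"state": "Failed"}})
print(failed)  # None
```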

With this foundation in place, an inference service can directly reference a built ModelVersion at deployment time to load the corresponding model and serve requests. At this point, the stages of the algorithm model lifecycle (code -> training -> model -> deployment) are linked through the model-related APIs.

When deploying an inference service through the Inference resource object provided by KubeDL, we only need to fill in the corresponding ModelVersion name in a predictor template. When creating a predictor, the Inference Controller injects a Model Loader. The Model Loader pulls the image that hosts the model file to the local node and mounts the model file into the main container through a volume shared between containers, which completes model loading. As mentioned above, we can prefetch the model image with OpenKruise's ImagePullJob to accelerate model loading. To keep the user experience consistent, the model mount path of the inference service is, by default, the same as the model output path of the distributed training job.
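The loading mechanism can be pictured as a small transformation of the predictor's pod spec: add a shared volume, add a loader container that copies the model out of the model image into that volume, and mount the same volume into the serving container. The sketch below is a simplified illustration under those assumptions; the container name, volume name, copy command, and the use of an init container are hypothetical and may differ from what the Inference Controller actually injects.

```python
import copy


def inject_model_loader(pod_spec: dict, model_image: str,
                        mount_path: str = "/kubedl-model") -> dict:
    """Return a copy of pod_spec with a model loader and a shared volume."""
    patched = copy.deepcopy(pod_spec)  # leave the caller's spec untouched

    # An emptyDir volume shared between the loader and the main containers.
    patched.setdefault("volumes", []).append(
        {"name": "model-volume", "emptyDir": {}})

    # Hypothetical loader: runs the model image and copies the model files
    # from the image into the shared volume before the server starts.
    patched.setdefault("initContainers", []).append({
        "name": "model-loader",
        "image": model_image,
        "command": ["/bin/sh", "-c", f"cp -r {mount_path}/. /mnt/model/"],
        "volumeMounts": [{"name": "model-volume", "mountPath": "/mnt/model"}],
    })

    # The serving containers see the model at the same path training used.
    for container in patched["containers"]:
        container.setdefault("volumeMounts", []).append(
            {"name": "model-volume", "mountPath": mount_path})
    return patched


pod_spec = {"containers": [{"name": "tensorflow",
                            "image": "tensorflow/serving:1.11.1"}]}
patched = inject_model_loader(pod_spec, "simoncqk/models:v19a00")
print(patched["initContainers"][0]["image"])                 # simoncqk/models:v19a00
print(patched["containers"][0]["volumeMounts"][0]["mountPath"])  # /kubedl-model
```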

apiVersion: serving.kubedl.io/v1alpha1

kind: Inference

metadata:

name: hello-inference

spec:

framework: TFServing

predictors:

- name: model-predictor

# model built in previous stage.

modelVersion: mnist-model-demo-abcde

replicas: 3

batching:

batchSize: 32

template:

spec:

containers:

- name: tensorflow

args:

- --port=9000

- --rest_api_port=8500

- --model_name=mnist

- --model_base_path=/kubedl-model/

command:

- /usr/bin/tensorflow_model_server

image: tensorflow/serving:1.11.1

imagePullPolicy: IfNotPresent

ports:

- containerPort: 9000

- containerPort: 8500

resources:

limits:

cpu: 2048m

memory: 2Gi

requests:

cpu: 1024m

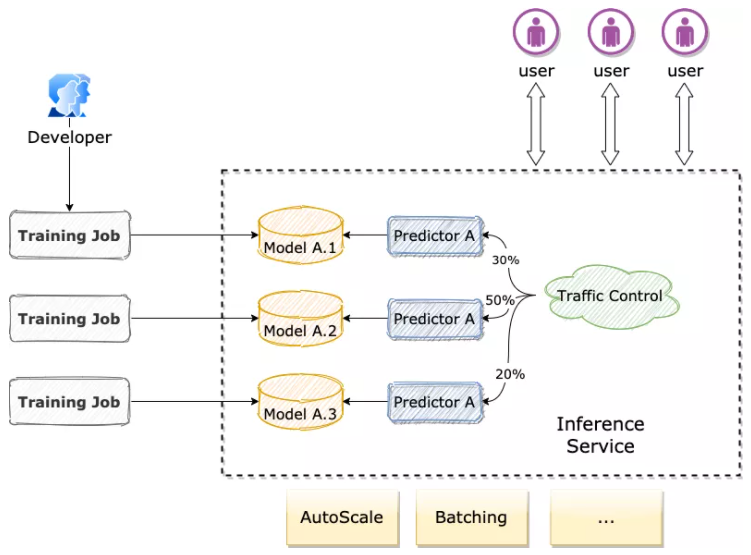

memory: 1GiIt is possible for a complete inference service to serve multiple predictors of different model versions at the same time. In common search and recommendation scenarios, we expect to use A/B testing to compare the effects of multiple model iterations at the same time. This can be easily achieved through Inference + ModelVersion. We reference different versions of models for different predictors and assign traffic with reasonable weights. This way, we can achieve the purpose of serving and comparing the effects of different versions of the model under an inference service at the same time:

```yaml
apiVersion: serving.kubedl.io/v1alpha1
kind: Inference
metadata:
  name: hello-inference-multi-versions
spec:
  framework: TFServing
  predictors:
    - name: model-a-predictor-1
      modelVersion: model-a-version1
      replicas: 3
      trafficWeight: 30 # 30% traffic will be routed to this predictor.
      batching:
        batchSize: 32
      template:
        spec:
          containers:
            - name: tensorflow
              # ...
    - name: model-a-predictor-2
      modelVersion: model-version2
      replicas: 3
      trafficWeight: 50 # 50% traffic will be routed to this predictor.
      batching:
        batchSize: 32
      template:
        spec:
          containers:
            - name: tensorflow
              # ...
    - name: model-a-predictor-3
      modelVersion: model-version3
      replicas: 3
      trafficWeight: 20 # 20% traffic will be routed to this predictor.
      batching:
        batchSize: 32
      template:
        spec:
          containers:
            - name: tensorflow
              # ...
```
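The effect of the `trafficWeight` values can be simulated with a weighted random choice. This is a minimal sketch of weight-proportional routing, assuming the weights are percentages that sum to 100; it does not describe how KubeDL or the underlying traffic layer actually splits requests, and the function names are made up for the example.

```python
import random
from collections import Counter

# (predictor name, trafficWeight) pairs taken from the manifest above.
predictors = [
    ("model-a-predictor-1", 30),
    ("model-a-predictor-2", 50),
    ("model-a-predictor-3", 20),
]


def validate_weights(predictors) -> None:
    """Assumption for this sketch: weights are percentages summing to 100."""
    total = sum(weight for _, weight in predictors)
    if total != 100:
        raise ValueError(f"traffic weights sum to {total}, expected 100")


def pick_predictor(predictors, rng: random.Random) -> str:
    """Choose one predictor with probability proportional to its weight."""
    names = [name for name, _ in predictors]
    weights = [weight for _, weight in predictors]
    return rng.choices(names, weights=weights, k=1)[0]


validate_weights(predictors)
rng = random.Random(0)  # seeded so the simulation is repeatable
counts = Counter(pick_predictor(predictors, rng) for _ in range(10_000))
for name, _ in predictors:
    print(name, counts[name] / 10_000)
```

Over many requests, the observed share of each predictor approaches its configured weight, which is what makes side-by-side comparison of model versions meaningful.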

By building on standard container images, KubeDL implements model building, tagging, version tracing, immutable storage, and distribution through two resource objects: Model and ModelVersion. This frees users from the coarse, file-based way of managing models. Images can also be combined with other excellent open-source projects to provide features such as accelerated image distribution and model image prefetching, which improves model deployment efficiency. At the same time, the model management API connects two otherwise separate stages: distributed training and inference services. This significantly improves the automation of the machine learning pipeline, as well as the experience and efficiency of algorithm scientists when they launch models and compare experiments. We welcome more users to try KubeDL and share their feedback. We also hope more developers will join us in building the KubeDL community!
