This article is compiled from a speech delivered by Yi Li, Senior Technical Expert and Head of Container Service R&D at Alibaba Cloud, at the 2021 Cloud Architecture and O&M Summit co-hosted by Alibaba Cloud. In the speech, he described the major changes in O&M technology in the cloud-native era and the CloudOps practices behind Alibaba Cloud's ultra-large-scale cloud-native applications.

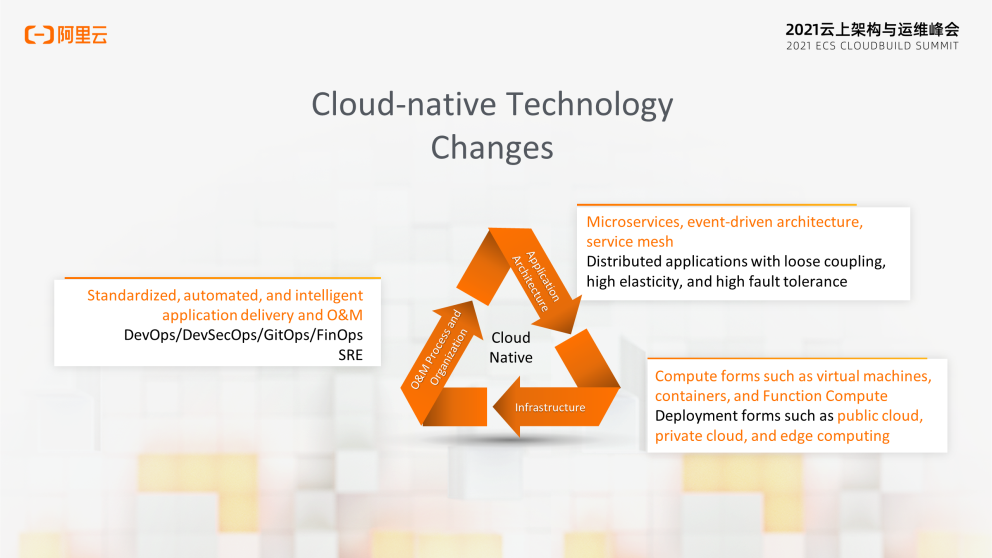

Cloud-native has become the foundation of technological innovation in the digital economy. It is significantly changing how enterprises migrate to and use the cloud. Cloud-native can help enterprises maximize cloud value and bring a new round of changes to their computing infrastructure, application architecture, organizational culture, and R&D processes. These business and technical challenges have also given birth to a new generation of cloud-native O&M technology systems.

Yi Li, Senior Technical Expert and Head of Container Service R&D at Alibaba Cloud

Alibaba Cloud defines cloud-native as software, hardware, and architecture created for the cloud intended to help enterprises maximize cloud value. The changes brought about by cloud-native technology include several dimensions.

Before the birth of cloud-native, the mobile Internet changed the form of business and the way people communicate with each other. Anyone can obtain the services they need at any time and any place. IT systems need to be able to cope with the Internet-scale rapid growth and iterate quickly at a low cost.

Internet companies such as Netflix and Alibaba have driven the shift to a new generation of application architecture. As a result, microservice frameworks such as Spring Cloud and Apache Dubbo were created. Microservice architectures solve several problems of traditional monolithic applications. Each service can be deployed and delivered independently, which improves business agility, and each service can scale out horizontally on its own to meet Internet-scale challenges.

Compared with traditional monolithic applications, distributed microservice architecture has faster iteration speed, lower development complexity, and better scalability. However, the complexity of deployment and O&M has increased significantly. What should we do to solve this problem?

In addition, pulse computing has become the norm. For example, at midnight during Double 11, the required computing power is dozens of times the usual level. A piece of breaking news may drive tens of millions of visits to social media. Cloud computing is a more economical and efficient way to handle such burst traffic peaks. Enterprise O&M teams therefore focus on how to migrate to the cloud, how to use and manage it well, and how to let applications take full advantage of the elasticity of the infrastructure.

These business and technical challenges have spawned a cloud-native O&M technology system called CloudOps.

CloudOps has to address several key issues:

Tools are often fragmented in the traditional application distribution and deployment process due to a lack of standards. For example, the deployment of Java applications and AI applications requires different technology stacks, causing low delivery efficiency. In addition, we often need to deploy each application on an independent physical or virtual machine to avoid conflicts between application environments, which causes a lot of resource waste.

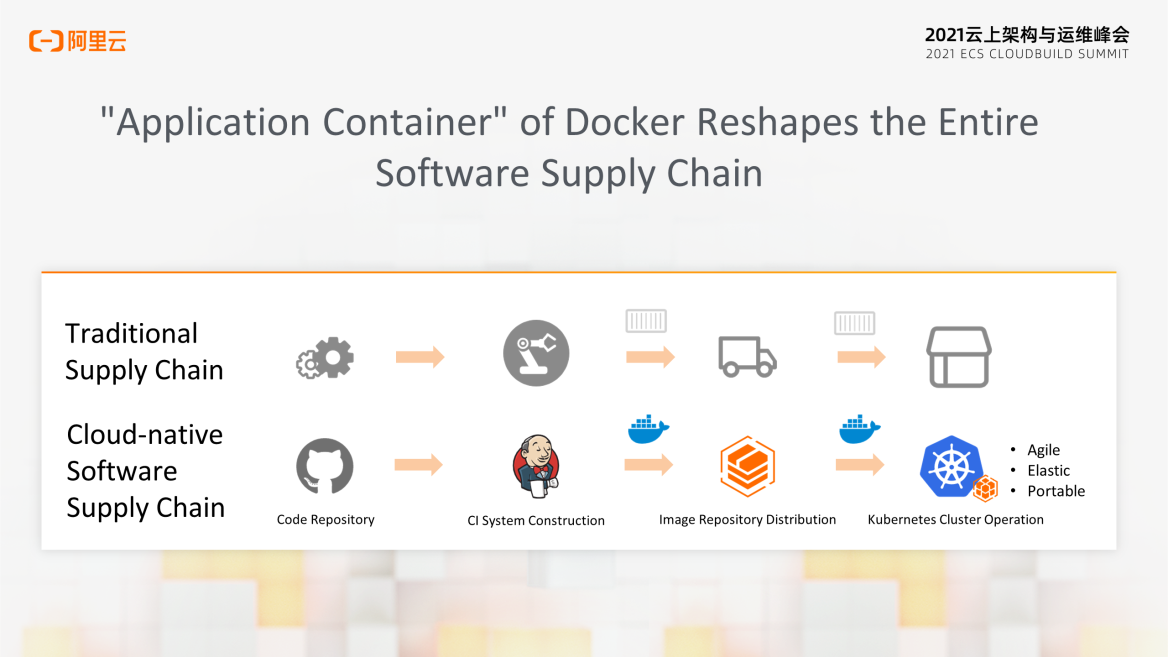

In 2013, Docker was released as an open-source container technology. It innovatively proposed application distribution and delivery based on container images, reshaping the entire lifecycle of software development, delivery, and O&M.

The analogy is the traditional supply chain, where any kind of product is shipped in standard containers, which improves logistics efficiency and makes the global division of labor and collaboration possible.

Container images package applications together with the environments they depend on. Images can be distributed through an image repository and run consistently in development, test, and production environments.

Container technology is a lightweight OS virtualization capability that can improve application deployment density and optimize resource utilization. Compared with traditional virtualization technology, it is more agile and lightweight, with better elasticity and portability. As the shipping container for applications in the cloud era, container technology has reshaped the entire software supply chain and started a wave of cloud-native technology.

In the traditional software deployment and change process, applications often become unavailable because of differences between environments. For example, a new version of an application may depend on features of JDK 11. If the JDK version is not updated in the deployment environment, the application will fail. “It works on my machine” has become a running joke among developers. Because the system configuration cannot be tested, you have to be very careful when making changes through in-place upgrades.

Immutable Infrastructure is a concept proposed by Chad Fowler in 2013. Its core idea says, “once any infrastructure instance is created, it becomes read-only. If the instance needs to be modified and upgraded, use a new instance.”

This mode can reduce the complexity of configuration management and ensure that system configuration changes can be reliably and repeatedly executed. Moreover, quick rollback can be performed once a deployment error occurs.

Docker and Kubernetes are the best way to implement the Immutable Infrastructure mode. When we update an image version for a container application, Kubernetes creates a new container, routes new requests to the new container through load balancing, and destroys the old container. This avoids the annoying configuration drift.
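The replace-rather-than-modify behavior described above can be sketched in a few lines of Python. This is a minimal in-memory illustration under stated assumptions, not Kubernetes code: `Instance`, `launch`, `rolling_replace`, and the `shop:1.0` / `shop:1.1` image tags are hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from itertools import count
from typing import List

_next_id = count(1)  # hypothetical ID source for newly launched instances


@dataclass(frozen=True)  # frozen: an instance is read-only once created
class Instance:
    instance_id: int
    image: str


def launch(image: str) -> Instance:
    """Create a brand-new instance from an image."""
    return Instance(next(_next_id), image)


def rolling_replace(fleet: List[Instance], new_image: str) -> List[Instance]:
    """Return a new fleet in which every outdated instance has been replaced.

    Instances are never modified in place; each outdated one is swapped
    for a freshly launched instance, one at a time.
    """
    updated = list(fleet)
    for index, old in enumerate(fleet):
        if old.image != new_image:
            updated[index] = launch(new_image)  # the old instance is discarded
    return updated


fleet = [launch("shop:1.0") for _ in range(3)]
upgraded = rolling_replace(fleet, "shop:1.1")
rolled_back = rolling_replace(upgraded, "shop:1.0")  # rollback is just another replace
```

In this sketch a rollback goes through the same code path as an upgrade, so there is no separate "undo" logic that could diverge from the deployment logic.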

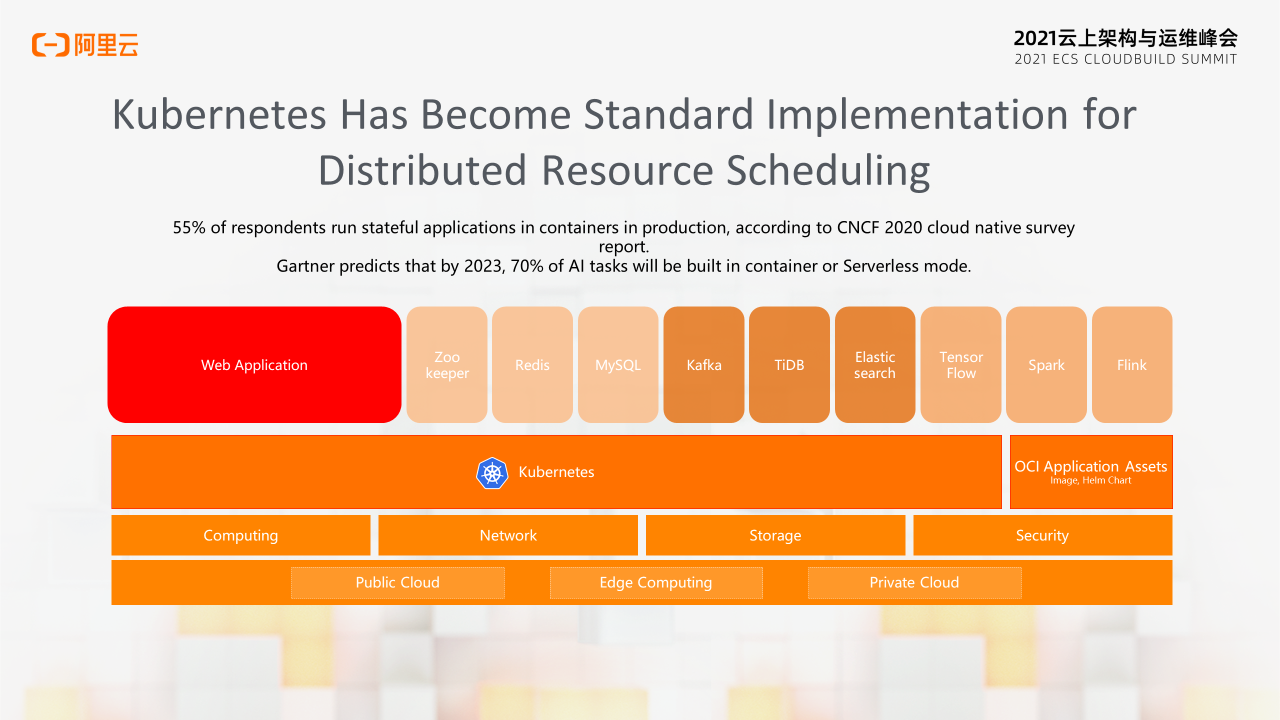

Currently, container images have become the standard for distributed application delivery. Kubernetes has become the standard for distributed resource scheduling.

More and more applications are managed and delivered through containers, including stateless web applications, stateful applications such as databases and message queues, and data-driven and intelligent applications.

The CNCF 2020 survey report shows that 55% of the respondents are already running stateful applications in containers in production. Gartner predicts 70% of AI tasks will be built in containers or Serverless mode by 2023.

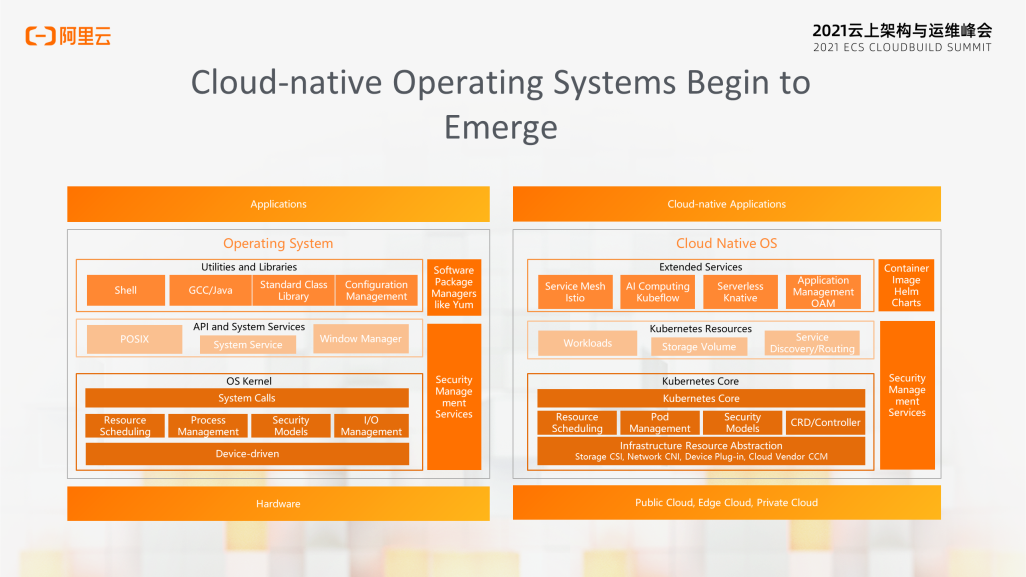

The classic Linux operating system and Kubernetes share a similar conceptual model. Both aim to encapsulate lower-layer resources and support upper-layer applications, and both provide standardized APIs to support the application lifecycle and improve application portability.

The difference is that the scheduling unit of Linux is a process, and its scheduling scope is limited to one compute node. The scheduling unit of Kubernetes is a Pod, which is a group of processes. Its scheduling scope is a distributed cluster, and it supports the migration of applications between different environments, such as public clouds and private clouds.

Kubernetes is the best platform for the O&M Team to implement the CloudOps concept.

The first reason is that Kubernetes uses declarative APIs that allow developers to focus on the application itself rather than the details of system execution. For example, Kubernetes provides abstractions for different types of workloads, such as Deployment, StatefulSet, and Job. The declarative API is an important cloud-native design concept. It pushes complexity down to the infrastructure, where it can be implemented and continuously optimized.
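To make the declarative style concrete, here is a minimal sketch that builds a Deployment manifest as plain data. The field names follow the Kubernetes `apps/v1` Deployment schema; the helper `deployment_manifest`, the application name `shop`, and the image `registry.example.com/shop:1.1` are hypothetical values used only for illustration.

```python
import json
from typing import Any, Dict


def deployment_manifest(name: str, image: str, replicas: int) -> Dict[str, Any]:
    """Build an apps/v1 Deployment manifest that declares the target state."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # how many Pods should exist
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical application name and image, for illustration only.
manifest = deployment_manifest("shop", "registry.example.com/shop:1.1", replicas=3)
print(json.dumps(manifest, indent=2))
```

The manifest states what should exist (three replicas of one image) and says nothing about how Pods are started or which nodes they run on; those execution details are left to Kubernetes.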

In addition, Kubernetes provides a scalable architecture. All Kubernetes components are implemented and interact with each other based on consistent and open APIs. Developers can also provide domain-related extensions through Custom Resource Definition (CRD) / Operator, which broadens the application scenarios of Kubernetes.

Finally, Kubernetes provides platform-independent technical abstractions, such as CNI network plug-ins and CSI storage plug-ins. These abstractions can shield infrastructure differences for upper-layer business applications.

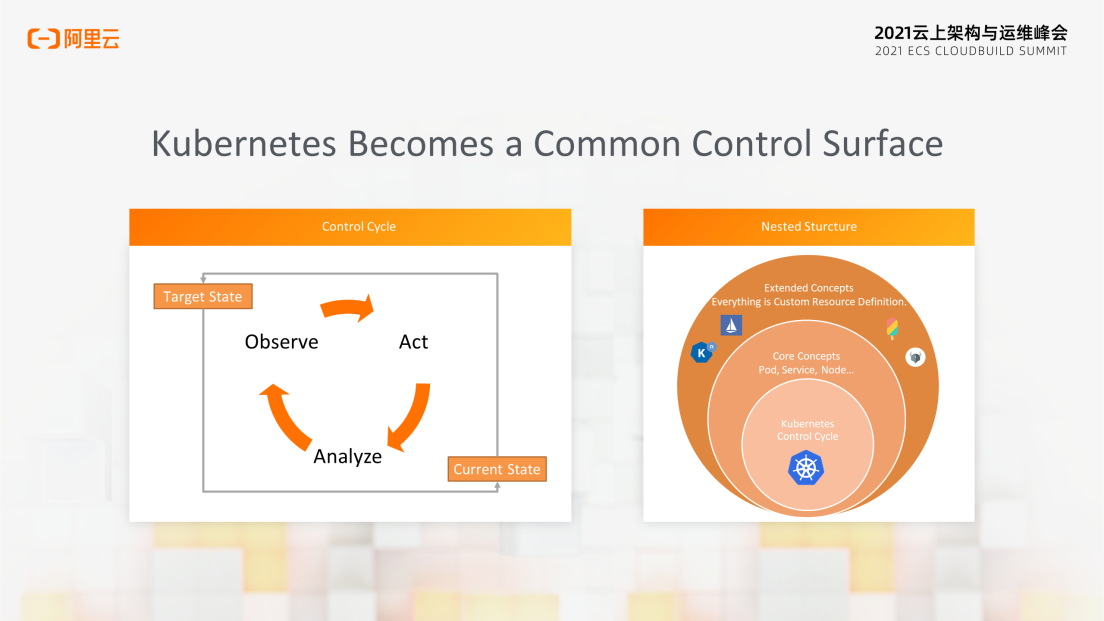

The magic behind the success of Kubernetes is the control loop. Kubernetes has several simple concepts.

First of all, everything is a resource, and the resource is managed automatically by the controller.

The user declares the target state of a resource. When the controller finds that the current state of the resource differs from the target state, it keeps adjusting the resource until its state converges toward the target. Many situations can be handled in this unified way, such as scaling an application to the declared number of replicas and automatically migrating applications after a node fails.
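The control loop can be illustrated with a small sketch. It is a simplified in-memory model, not the Kubernetes controller implementation: `ReplicaState`, `reconcile`, and `run_until_converged` are hypothetical names, and a real controller observes state through the API server rather than a local object.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReplicaState:
    desired: int  # target state declared by the user
    running: int  # current state observed by the controller


def reconcile(state: ReplicaState) -> str:
    """One pass of the loop: compare current with target, take one corrective step."""
    if state.running < state.desired:
        state.running += 1
        return "start"
    if state.running > state.desired:
        state.running -= 1
        return "stop"
    return "noop"


def run_until_converged(state: ReplicaState, max_steps: int = 100) -> List[str]:
    """Repeat reconcile until nothing is left to fix; return the actions taken."""
    actions = []
    for _ in range(max_steps):
        action = reconcile(state)
        if action == "noop":
            break
        actions.append(action)
    return actions


state = ReplicaState(desired=3, running=0)
initial_rollout = run_until_converged(state)      # three "start" actions

state.running = 1                                 # simulate a node failure losing two replicas
recovery = run_until_converged(state)             # two "start" actions

state.desired = 1                                 # the user declares a smaller target
scale_down = run_until_converged(state)           # two "stop" actions
```

The same `reconcile` function handles the initial rollout, failure recovery, and scale-down, which is the unified handling the paragraph above describes.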

As a result, the resource range Kubernetes supports far exceeds container applications. For example, Service Mesh can declaratively manage application communication traffic. Crossplane can use Kubernetes CRD to manage and abstract cloud resources, such as ECS and OSS.

The Kubernetes controller's ideal of keeping the complexity to itself and leaving simplicity to users is admirable, but implementing an efficient and robust controller is full of technical challenges.

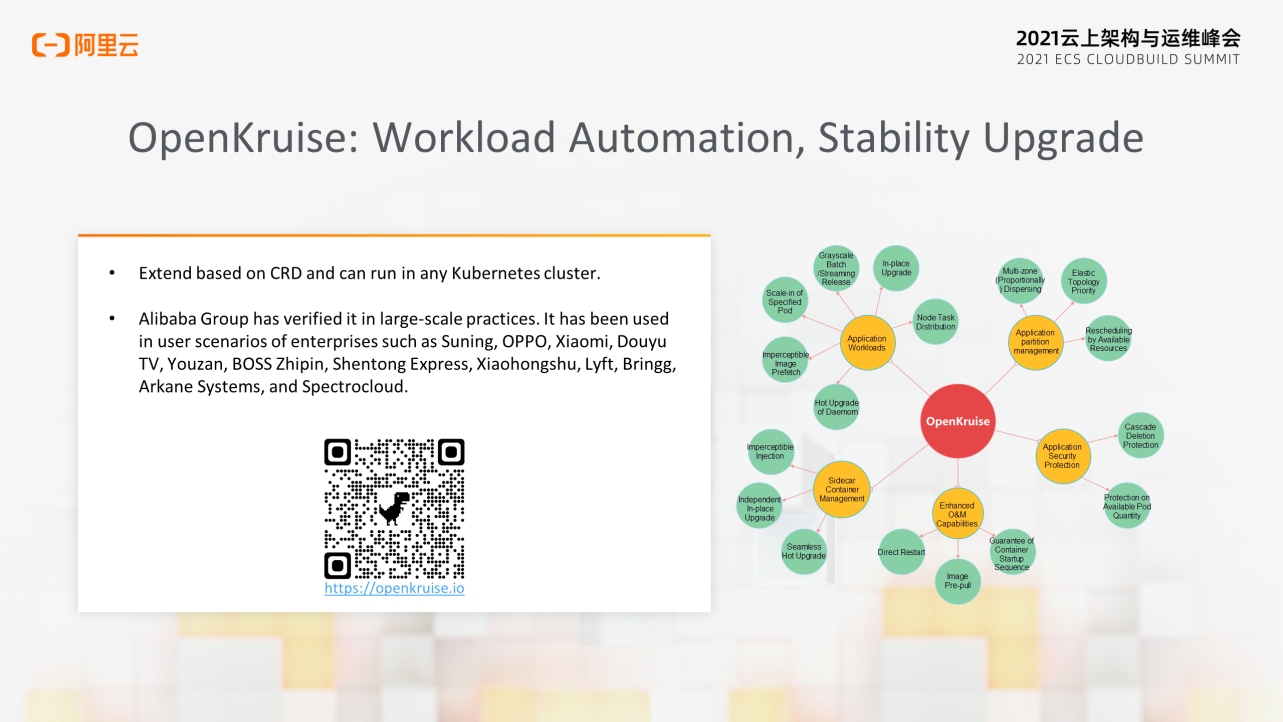

OpenKruise is Alibaba Cloud's open-source automated management engine of cloud-native applications. It is also a Sandbox Level Project hosted by the Cloud Native Computing Foundation (CNCF). It comes from Alibaba's years of containerization and cloud-native technology accumulation. It aims to solve the automation and stability challenges faced by container applications in large-scale production environments.

OpenKruise provides various capabilities, including enhanced application canary release, stability protection, and Sidecar container extension.

The open-source implementation of OpenKruise is consistent with the code used internally at Alibaba Group. It supports the cloud-native transformation of all Alibaba Group applications and has also been widely used by Suning, OPPO, and other enterprises. You are welcome to help build the community and give us your feedback.

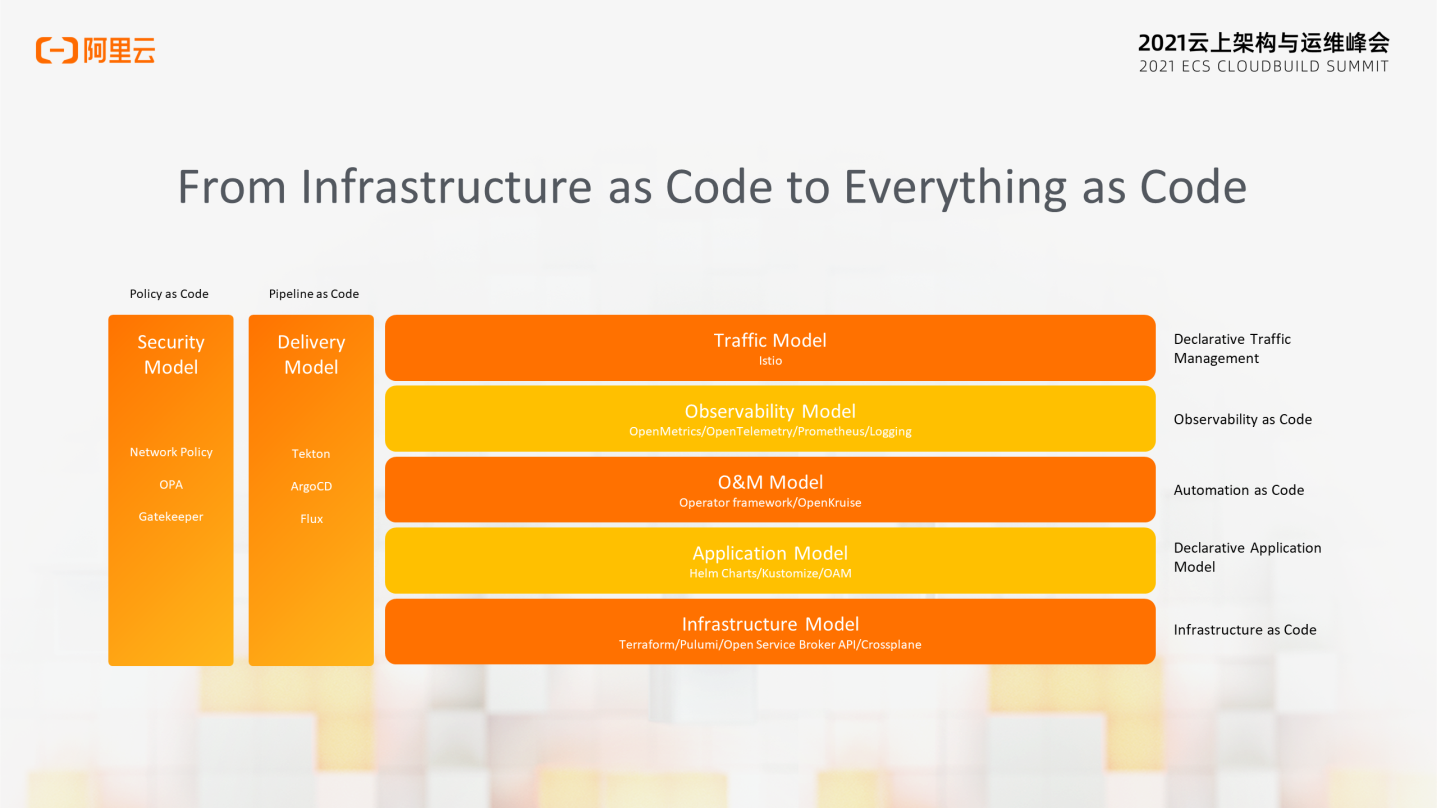

Infrastructure-as-Code (IaC) is a typical declarative API that changes the ways of resource management, configuration, and collaboration on the cloud. We can automate the creation, assembly, and configuration change of different cloud resources using IaC tools, such as cloud servers, networks, and databases.

We can extend the IaC concept to the entire process of delivering and operating cloud-native software, namely Everything as Code. This figure shows the various models involved in cloud-native applications. We can manage application configuration declaratively, from infrastructure and application definition to application delivery, management, and security.

For example, we can use Istio to declaratively handle application traffic switching and use Open Policy Agent (OPA) to define runtime security policies.

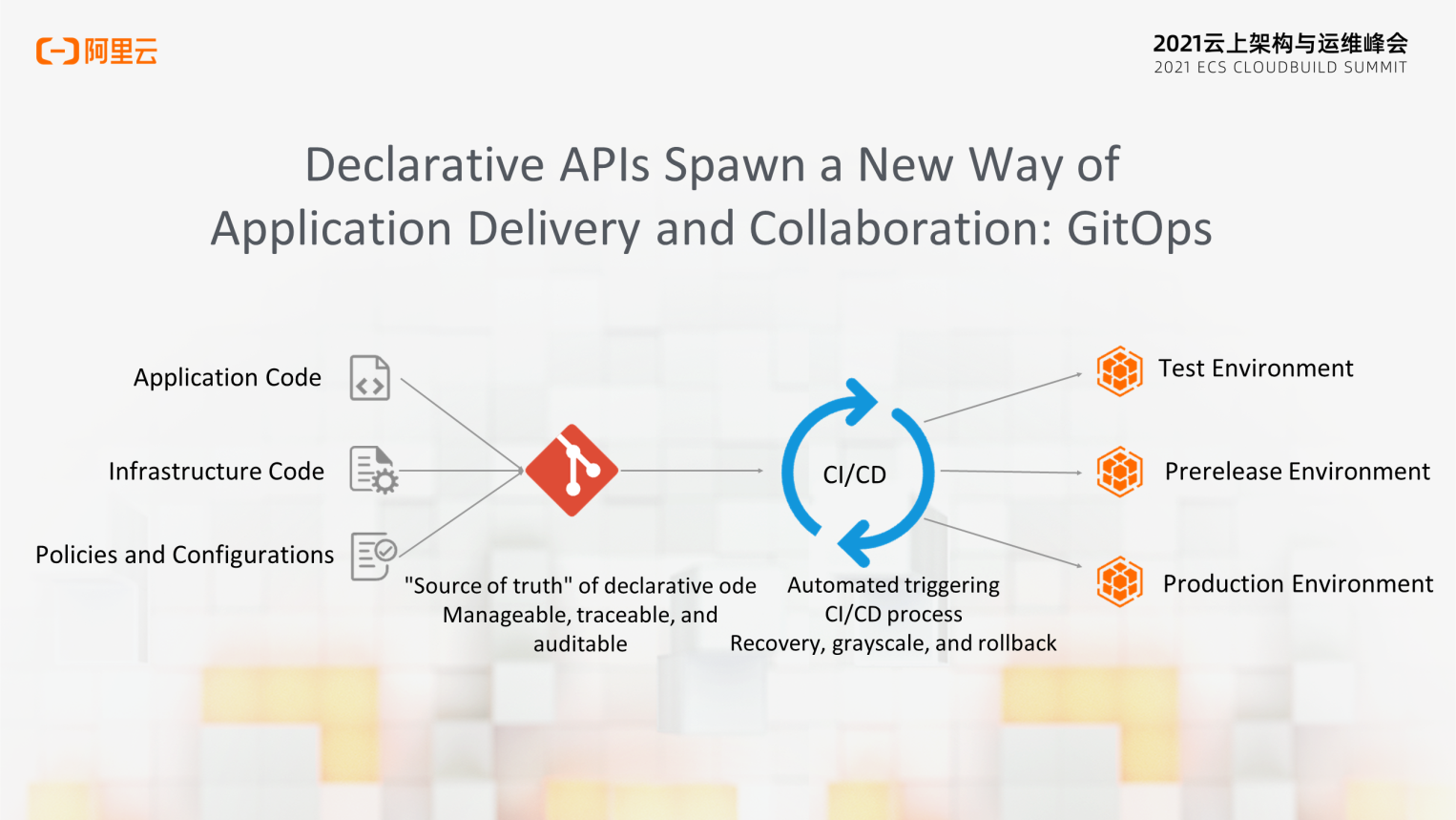

Further, we can manage all the environment configurations of the application through Git, the source code control system, and deliver and change through automated processes. This is the core concept of GitOps.

All configurations, from the application definition to the infrastructure environment, are saved in Git as source code. All changes and approvals are also recorded in the Git history. This way, Git becomes the source of truth. We can trace the change history and roll back to a specified version.
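The idea of a versioned source of truth with traceable history and rollback can be sketched as follows. This is an in-memory stand-in used only to illustrate the workflow; it does not call Git, and `ConfigRepo`, its methods, and the sample configuration values are hypothetical.

```python
import copy
import hashlib
import json
from typing import Any, Dict, List, Tuple


class ConfigRepo:
    """Append-only configuration history, standing in for a Git repository."""

    def __init__(self) -> None:
        self._history: List[Tuple[str, str, Dict[str, Any]]] = []

    def commit(self, config: Dict[str, Any], message: str) -> str:
        """Record a new revision and return its identifier."""
        payload = json.dumps(config, sort_keys=True)
        parent = self._history[-1][0] if self._history else ""
        digest = hashlib.sha256((parent + payload + message).encode()).hexdigest()
        revision = digest[:12]
        self._history.append((revision, message, json.loads(payload)))
        return revision

    def head(self) -> Dict[str, Any]:
        """Return the configuration that the environment should converge to."""
        return copy.deepcopy(self._history[-1][2])

    def rollback(self, revision: str) -> str:
        """Roll back by committing the old configuration again; history is kept."""
        for rev, _, config in self._history:
            if rev == revision:
                return self.commit(config, f"rollback to {revision}")
        raise KeyError(revision)

    def log(self) -> List[Tuple[str, str]]:
        return [(rev, message) for rev, message, _ in self._history]


repo = ConfigRepo()
v1 = repo.commit({"image": "shop:1.0", "replicas": 2}, "initial release")
v2 = repo.commit({"image": "shop:1.1", "replicas": 2}, "upgrade to 1.1")
repo.rollback(v1)  # the faulty upgrade is undone, yet still visible in the log
```

Rolling back adds a new revision instead of erasing the faulty one, so the record of what changed and when stays complete.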

GitOps is combined with declarative APIs and immutable infrastructure to ensure the reproducibility of application environments and improve delivery and management efficiency. GitOps has been widely used in Alibaba Group and is also supported in Alibaba Cloud Container Service for Kubernetes (ACK). Currently, the GitOps open-source community is improving relevant tools and best practices. Stay tuned for more information.

Distributed systems are highly complex. Problems anywhere in the application, infrastructure, and deployment process may lead to business system failures.

Faced with such uncertain risks, we have two options: one is to resign ourselves to fate, and the other is to take the initiative and systematically improve the certainty of the system.

In 2012, Netflix introduced the concept of chaos engineering. By actively injecting faults, Netflix can discover weaknesses in the system in advance. This helps drive architectural improvements and achieve business resilience. Chaos engineering works much like a vaccine: inoculation with an inactivated vaccine prepares our immune system to fight the disease.
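A minimal fault-injection experiment might look like the sketch below. It is an illustration of the idea only and is unrelated to the API of any chaos engineering tool: `InjectedFault`, `inject_faults`, `price_service`, `cached_price`, and `get_price` are hypothetical names, and the 30% failure rate is an arbitrary assumption.

```python
import random
from typing import Callable


class InjectedFault(Exception):
    """Raised on purpose by the experiment, never by the real service."""


def inject_faults(func: Callable[[str], str], failure_rate: float,
                  rng: random.Random) -> Callable[[str], str]:
    """Wrap func so that roughly failure_rate of the calls fail."""
    def wrapper(item: str) -> str:
        if rng.random() < failure_rate:
            raise InjectedFault(f"injected failure for {item!r}")
        return func(item)
    return wrapper


def price_service(item: str) -> str:
    return f"live price for {item}"


def cached_price(item: str) -> str:
    return f"cached price for {item}"


def get_price(item: str, primary: Callable[[str], str]) -> str:
    """Caller under test: fall back to the cache when the primary fails.

    Only the injected fault is handled here; a real caller would also
    handle timeouts and connection errors.
    """
    try:
        return primary(item)
    except InjectedFault:
        return cached_price(item)


rng = random.Random(42)  # fixed seed so the experiment is repeatable
flaky = inject_faults(price_service, failure_rate=0.3, rng=rng)
results = [get_price("book", flaky) for _ in range(1000)]
degraded = sum(r.startswith("cached") for r in results)
print(f"{degraded} of {len(results)} requests were served from the fallback")
```

If `get_price` had no fallback, the experiment would surface unhandled errors immediately, which is exactly the kind of weakness worth finding before a real outage does.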

The success of the Double 11 Global Shopping Festival is inseparable from the large-scale practice of chaos engineering, such as end-to-end stress testing. Alibaba's teams have accumulated rich practical experience in this field.

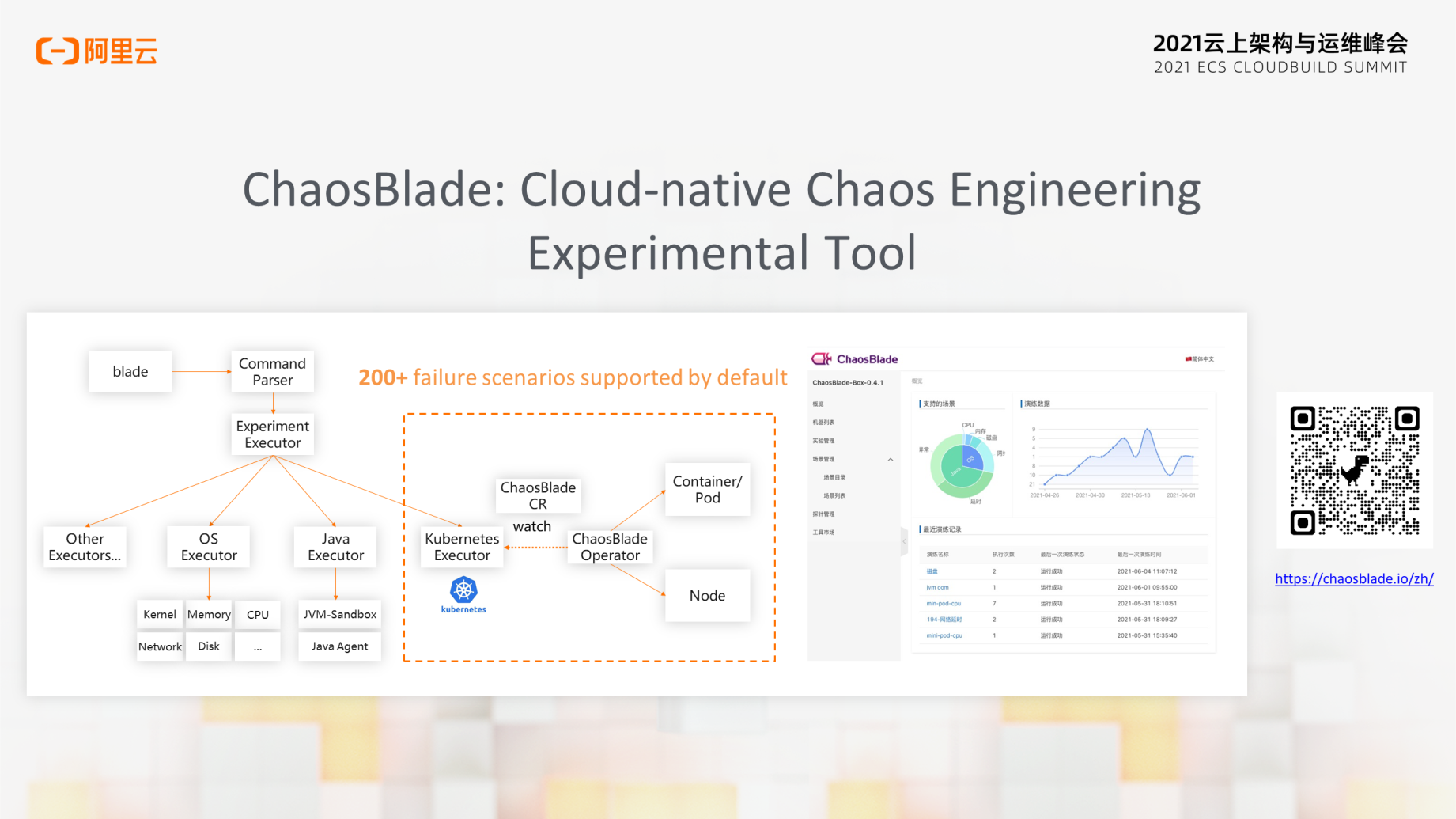

ChaosBlade is a group of experimental tools that follow the concept of chaos engineering. It can be applied in many scenarios and is easy to use. It has become a Sandbox Level Project of CNCF. It supports different running environments, such as Linux, Kubernetes, and Docker, and multiple languages, such as Java, JavaScript, C++, and Golang. ChaosBlade has built-in test solutions for over 200 scenarios.

chaosblade-box is a newly introduced chaos engineering console that provides platform-based management of experiment environments, which simplifies the user experience and lowers the barrier to entry. You are welcome to join and contribute to the ChaosBlade community. We also welcome you to use Alibaba Cloud Application High Availability Service (AHAS).

Finally, I will introduce some of our explorations in CloudOps based on Alibaba's practice.

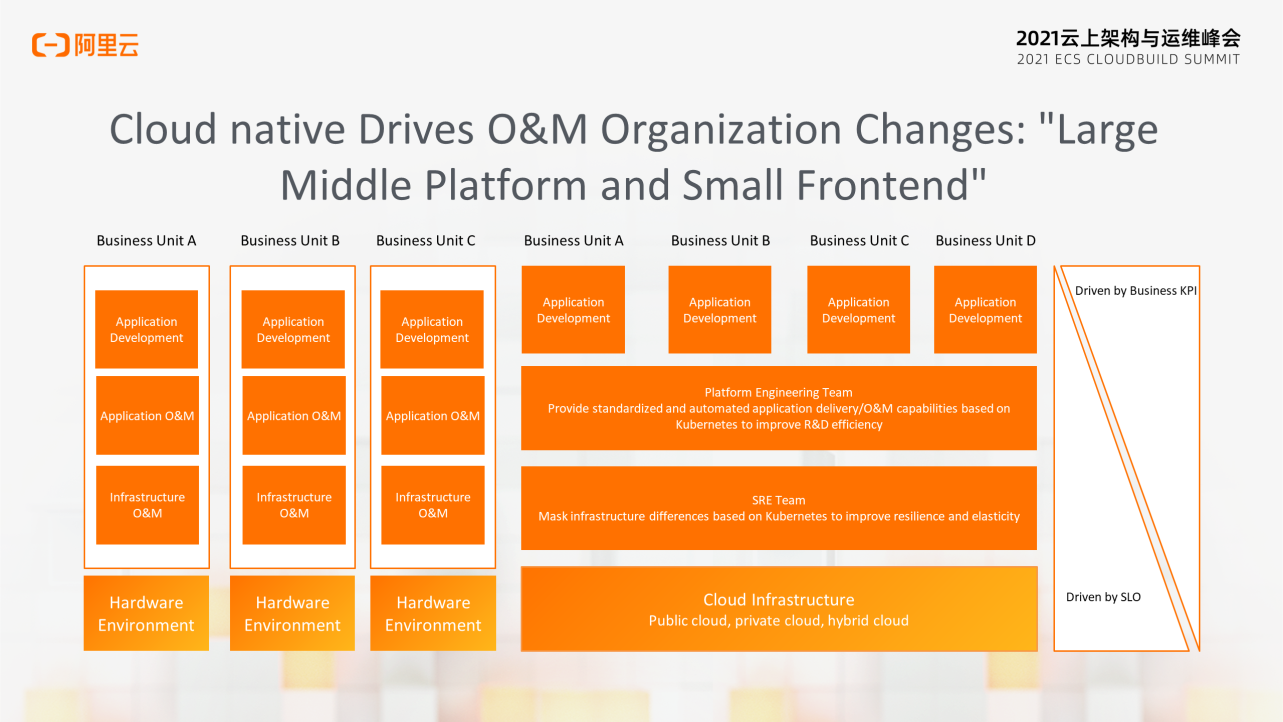

Development and O&M roles are separated in traditional organizations. Different business lines have built their own siloed systems. The teams responsible for infrastructure O&M, application O&M, and development all work independently, with too little collaboration and reuse among them.

The advent of the cloud era is also changing modern IT organizations and processes.

First, the public cloud and private cloud have become the infrastructure shared among different business departments.

Then, the concept of Site Reliability Engineering (SRE) began to be widely accepted. It uses software and automation to solve the O&M complexity and stability of the system. Due to the standardization, scalability, and portability of Kubernetes, more enterprise SRE teams manage cloud environments based on Kubernetes, improving enterprise O&M efficiency and resource efficiency.

On top of this, the Platform Engineering Team began to build an enterprise PaaS platform and CI/CD process based on Kubernetes to support middleware and application deployment and O&M of different business departments. This will improve the standardization and automation level of enterprises and the efficiency of application R&D and delivery.

In such a hierarchical structure, the lower-layer teams are more driven by service-level objectives (SLO), so the upper-layer systems have better predictability of the underlying dependent technology. The upper-layer teams are more driven by business, so they can support business development better.

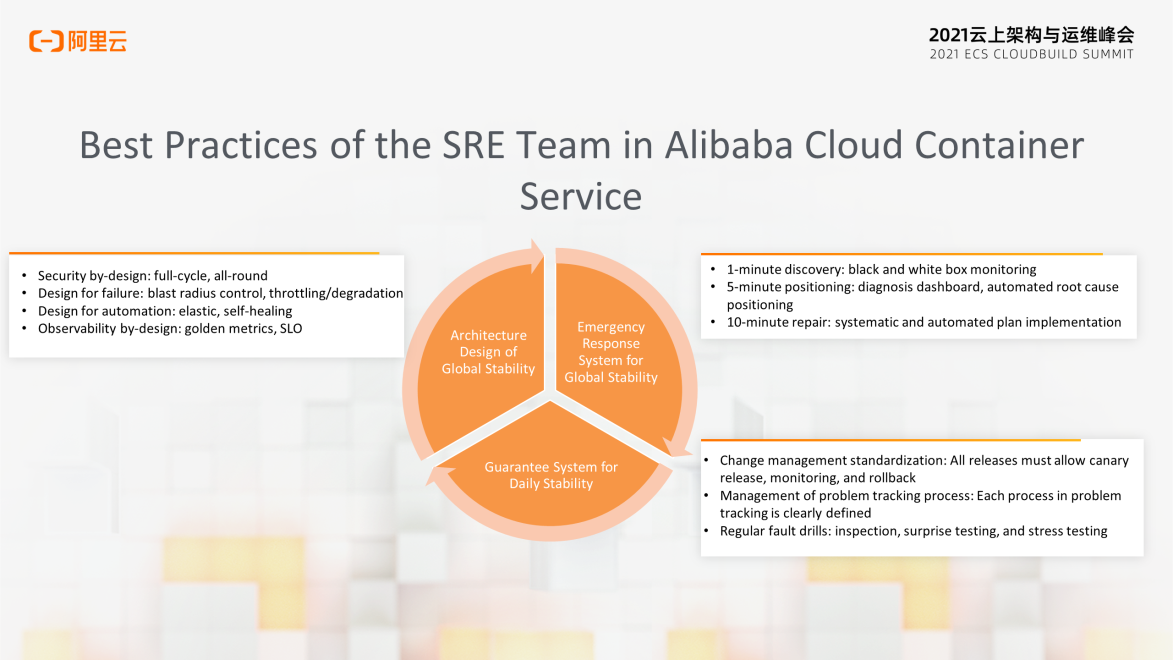

The SRE Team of Alibaba Cloud Container Service has also been applying CloudOps best practices. Here is a brief summary.

The first thing is the global stability architecture design, which allows the entire platform to prepare for emergencies in advance.

The second thing is building a stability emergency response system. We call it 1-5-10 quick recovery capability. It refers to:

The last thing is the daily stability guarantee, which mainly includes:

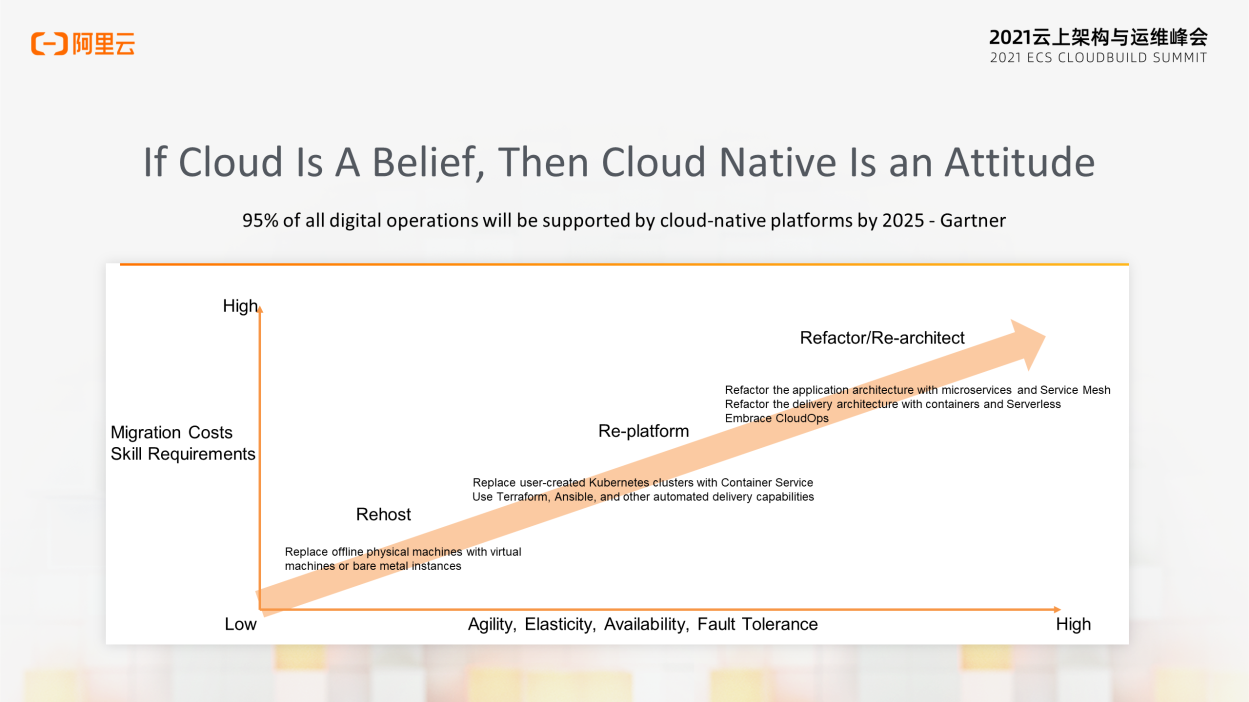

Cloud-native has become an unstoppable technological trend. Gartner predicts 95% of digital O&M will be supported by cloud-native platforms by 2025.

We can choose an appropriate cloud migration path based on enterprise capabilities and business objectives. It can be divided into several stages:

From Rehost to Re-platform to Re-architect, the complexity of migration and the skills required increase, but so do the benefits in agility, elasticity, availability, and fault tolerance.

Alibaba Group has also gone through this process in its own cloud migration. Last year, 100% of our businesses ran on the public cloud. This year, 100% of our applications became cloud-native. This improved the R&D efficiency of Alibaba's business by 20% and resource utilization by 30%.

In summary, the open community standards, immutable infrastructure, and declarative APIs that come with cloud-native technologies such as Docker and Kubernetes will become the best practices of enterprise CloudOps. On this basis, data-driven and intelligent systems will further simplify O&M and allow enterprises to focus on business innovation. Alibaba Cloud will continue to offer the capabilities it has accumulated through ultra-large-scale cloud-native practice and exploration as services, and will work with more enterprises and developers to fully embrace the cloud-native O&M technology system.
