By Xining Wang

As a basic core technology used to manage application service communication, service mesh brings safe, reliable, fast, application-unaware traffic routing, security, and observability for calls between application services.

Service mesh technology, represented by Istio, has been around for four to five years, and Alibaba Cloud was one of the first vendors to offer service mesh as a cloud service. Service mesh decouples service governance from the application by moving those capabilities into sidecar proxies. These sidecar proxies form a mesh data plane through which traffic between all application services can be processed and observed.

The management component that determines how the data plane behaves is called the control plane, which is the brain of the service mesh. It provides open APIs that make it easier for users to manipulate network behavior. With service mesh, Dev, Ops, and SRE teams can address application service management issues in a unified, declarative manner.

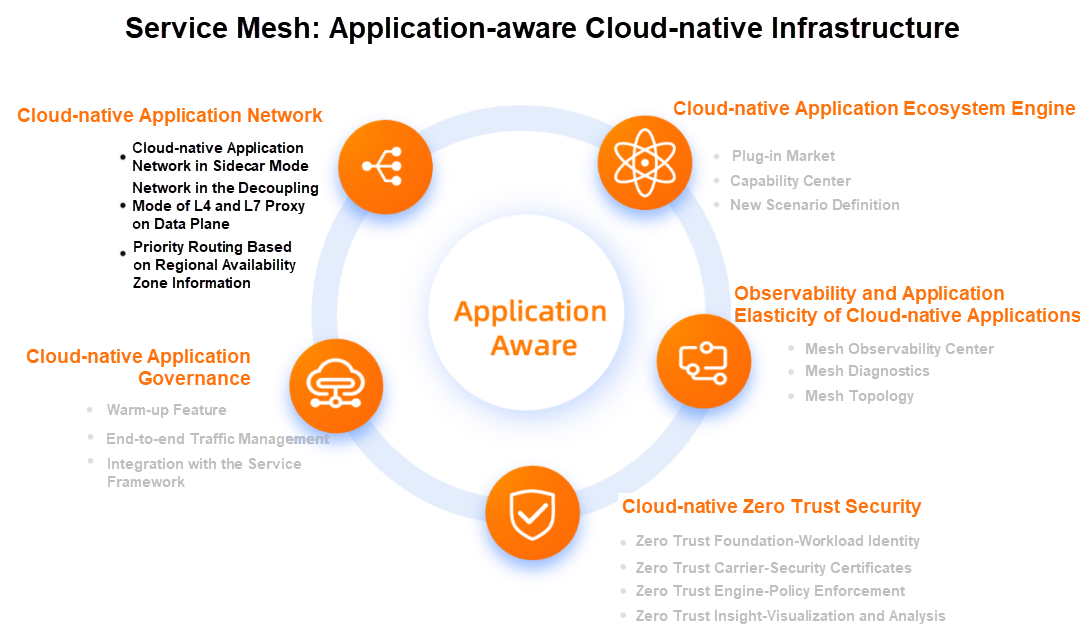

We think of service mesh as a cloud-native application-aware infrastructure. It is about service governance and includes cloud-native application-aware features and capabilities in several other dimensions. We divide it into five parts:

Let's look at the cloud-native application network. We divide it into three parts:

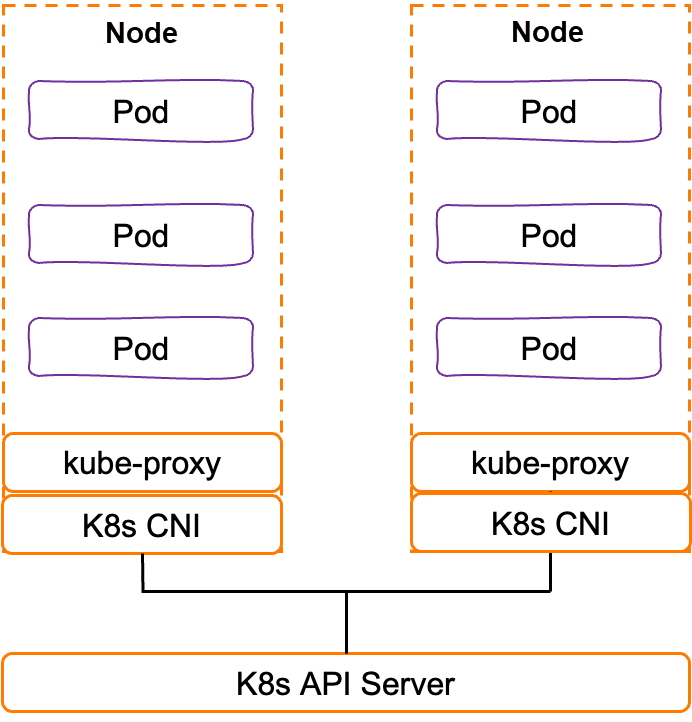

The network is a core part of Kubernetes. It covers communication between pods, between pods and services, and between services and external systems. Kubernetes clusters use CNI plug-ins to manage container network functions and use kube-proxy to maintain network rules on nodes, for example, load balancing the traffic destined for a service and forwarding it to the correct backend pods based on ClusterIP and port.
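The following is a minimal conceptual sketch of the load-balancing step described above. It is not how kube-proxy is implemented; the rule table, the addresses, and the `pick_backend` helper are all hypothetical. It only illustrates that the decision is keyed on ClusterIP and port, with no view of application-layer data such as HTTP headers.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceKey:
    """Virtual address of a Service as seen at the packet level."""
    cluster_ip: str
    port: int


# Hypothetical rule table: (ClusterIP, port) -> backend pod addresses.
RULES = {
    ServiceKey("10.96.0.10", 80): ["172.16.1.5:8080", "172.16.2.7:8080"],
}


def pick_backend(cluster_ip, port, rules=RULES, rng=random):
    """Pick one backend pod for traffic sent to a Service address.

    Only the destination IP and port are inspected, which is why
    per-request (layer 7) policies cannot be expressed at this level.
    """
    backends = rules.get(ServiceKey(cluster_ip, port))
    if not backends:
        raise LookupError(f"no backends for {cluster_ip}:{port}")
    return rng.choice(backends)
```

Calling `pick_backend("10.96.0.10", 80)` returns one of the two pod addresses in the table, chosen at random.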

The container network has become a new interface through which users consume IaaS networks. Take the ACK Terway network as an example. It is built on the Alibaba Cloud VPC Elastic Network Interface (ENI), delivers high performance, and connects seamlessly with the Alibaba Cloud IaaS network. However, kube-proxy settings are global, with no fine-grained control for each service, and kube-proxy operates exclusively at the network packet level. It cannot meet the application-layer needs of modern applications, such as traffic management, tracing, and authentication.

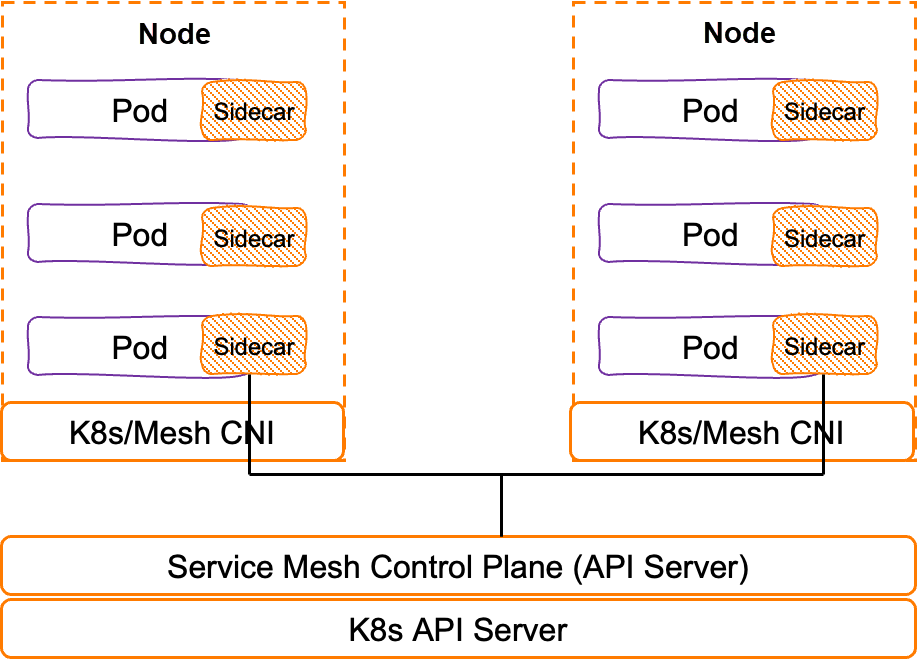

Let's look at how the cloud-native application network in service mesh sidecar proxy mode solves these problems. Service mesh decouples traffic control from the service layer of Kubernetes through sidecar proxies, injects proxies into each pod, and manages these distributed proxies through the control plane, allowing for more fine-grained traffic control between these services.

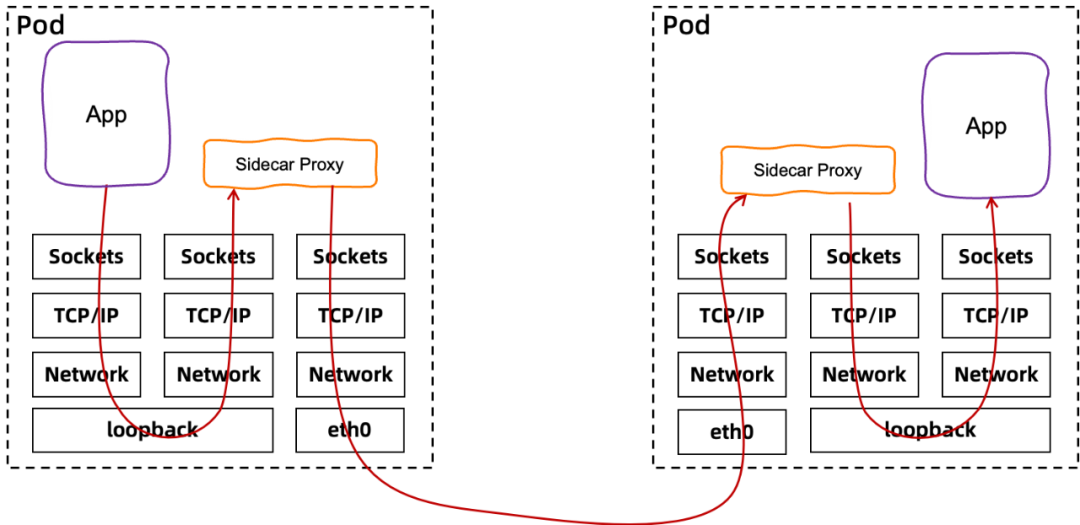

What is the process of transmitting network packets under the sidecar proxy?

The current Istio implementation involves the overhead of the TCP/IP stack. It uses the netfilter of the Linux kernel to intercept traffic by configuring iptables and routes the traffic to and from the sidecar proxy based on the configured rules. A typical path from a client pod to a server pod (even within the same host) must traverse the TCP/IP stack at least three times (outbound, client sidecar proxy to server sidecar proxy, and inbound).

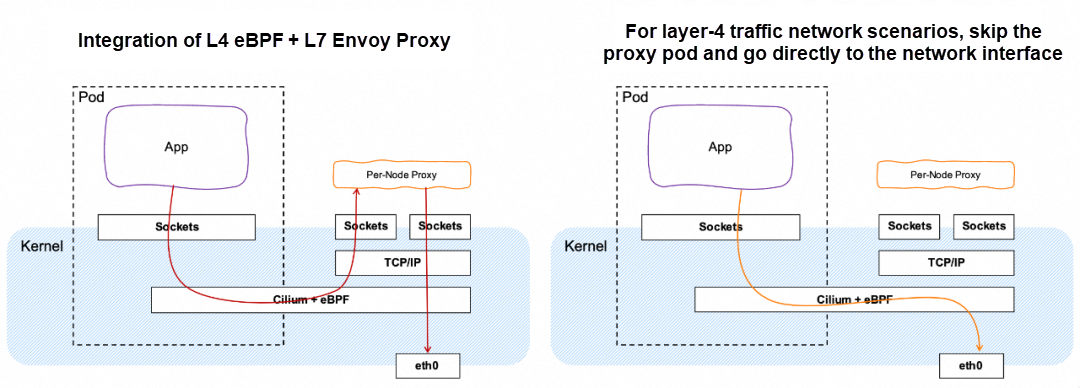

To solve this data path problem, the industry has introduced eBPF to bypass the TCP/IP network stack in the Linux kernel, which reduces latency and improves throughput. However, eBPF does not replace the layer 7 proxy capability of Envoy. For layer 7 traffic management, the combination of layer 4 eBPF and the layer 7 Envoy proxy is still needed. Only when traffic requires layer 4 processing alone can the proxy be skipped and the traffic sent directly to the network interface.

In addition to the traditional sidecar proxy mode, the industry has begun to explore a new data plane model. Its design concept is a layered data plane: layer 4 handles basic processing with low resource usage and high efficiency, and layer 7 handles advanced traffic processing with rich features at the cost of more resources. This way, users can adopt service mesh incrementally, based on the range of features they need.

Specifically, the processing on layer 4 mainly includes:

The processing on layer 7 mainly includes:

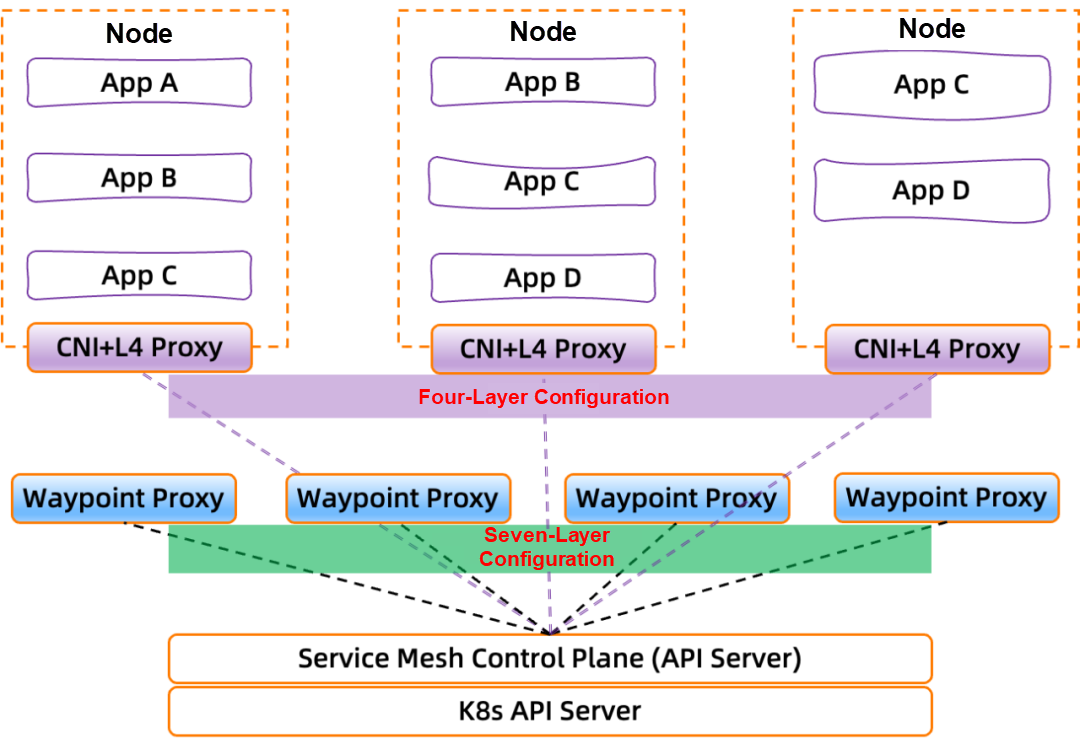

What will be the network topology of service mesh in the decoupling mode of the L4 and L7 Proxy on the data plane?

On the one hand, the L4 Proxy capability is moved down to the CNI component. The L4 Proxy component is deployed as a DaemonSet, so one instance runs on each node. This means it is a shared base component that serves all pods running on that node.

On the other hand, the L7 Proxy no longer exists in the Sidecar mode but is decoupled. We call it Waypoint Proxy, which is an L7 Proxy pod created for each Service Account.

The configurations of layer 4 and layer 7 proxy are still managed by the service mesh control plane component.

In short, this decoupling pattern enables further decoupling and separation between the service mesh data plane and the application.
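The layered topology described above can be sketched as a small hop planner. This is a hypothetical model, not Istio code: the `Request` type, the hop labels, and the assumption that the waypoint belongs to the destination's Service Account are illustrative choices that the text above does not specify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    source_node: str
    dest_node: str
    dest_service_account: str
    needs_l7: bool  # e.g. header-based routing or L7 authorization


def plan_hops(req):
    """Return the proxies a request passes through in the layered model.

    Every request goes through the shared per-node L4 proxy on both
    sides. The per-Service-Account L7 waypoint proxy is added only when
    the request needs layer 7 processing.
    """
    hops = [f"l4-proxy@{req.source_node}"]
    if req.needs_l7:
        # Assumption: the waypoint of the destination Service Account is used.
        hops.append(f"waypoint@{req.dest_service_account}")
    hops.append(f"l4-proxy@{req.dest_node}")
    return hops
```

A request that only needs layer 4 handling stays on the two node-level proxies, which is where the resource savings of this model would come from.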

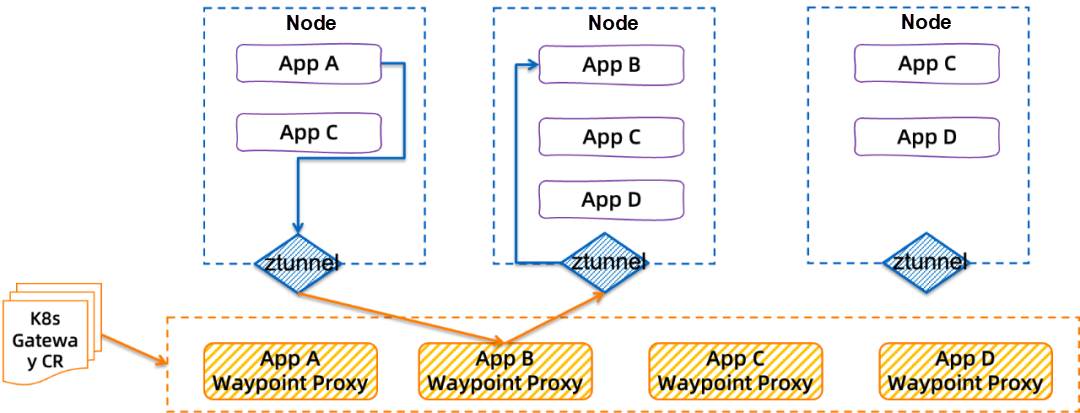

Then, the specific implementation of Istio can be divided into three parts:

An Example of Service Mesh as a Cloud-Native Application Network: Priority Routing Based on Regional Availability Zone Information

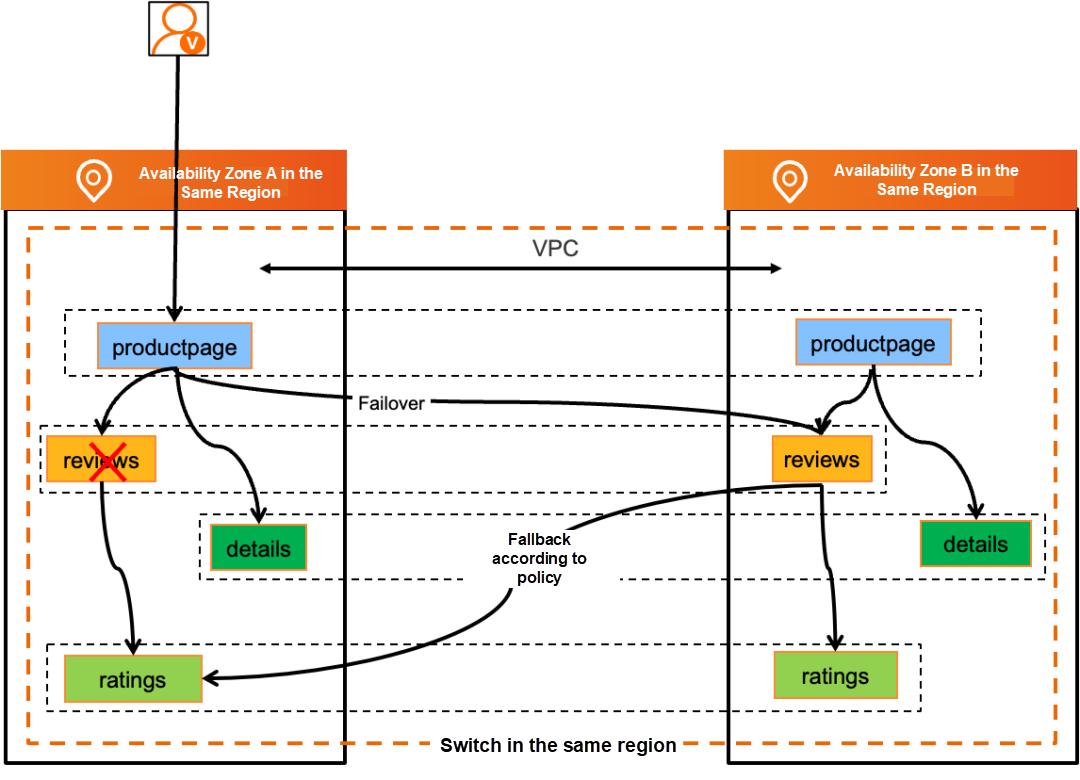

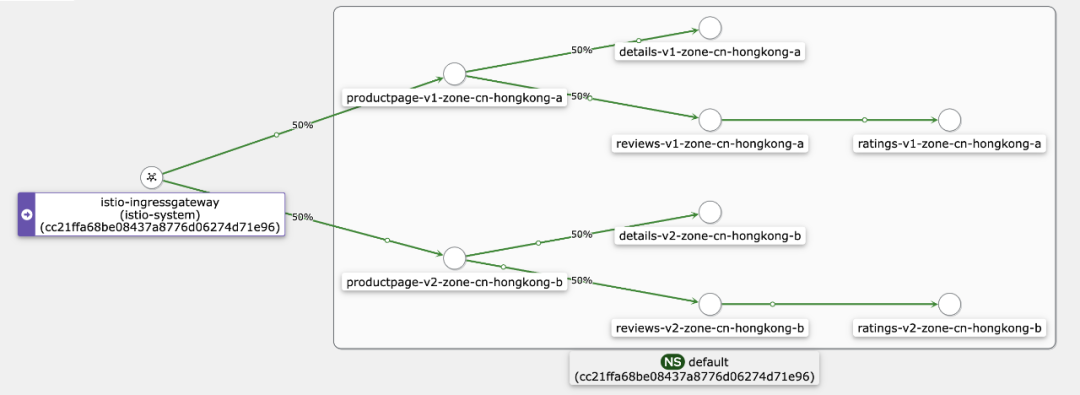

In this example, we deploy two sets of the same application services to two different availability zones, namely, zone A and zone B in this deployment architecture diagram. In normal cases, these application services follow the mechanism of prioritizing traffic routing in the same availability zone. In other words, the application service productpage in zone A points to the reviews service in the current availability zone.

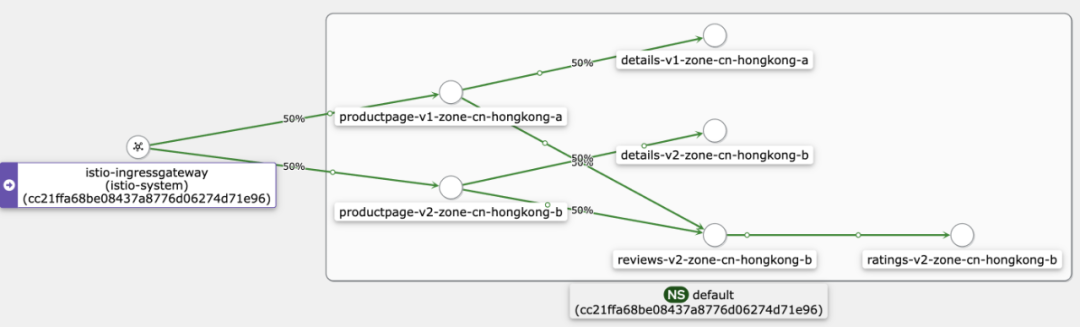

When services in the current availability zone are unavailable (for example, the reviews service fails), the disaster recovery mode is automatically enabled. In this case, the productpage service in zone A points to the reviews service in zone B.

This traffic topology shows the call links displayed by availability zone information in the mesh topology provided by Alibaba Cloud Service Mesh (ASM). The process of implementing the traffic routing in the same availability zone can be completed without modifying the application code.

Similarly, the service mesh technology automatically implements failover to ensure high availability without modifying the application code. In this topology, you can see that the application services in zone A are automatically switched to calling the services in zone B.
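The zone-priority and failover behavior in this example can be sketched as follows. This is a simplified illustration rather than the mesh's actual algorithm; the `Endpoint` type, the addresses, and the zone names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    address: str
    zone: str
    healthy: bool = True


def select_endpoints(endpoints, caller_zone):
    """Prefer healthy endpoints in the caller's zone.

    If no healthy endpoint exists in that zone, fail over to the
    healthy endpoints in other zones.
    """
    healthy = [e for e in endpoints if e.healthy]
    local = [e for e in healthy if e.zone == caller_zone]
    return local if local else healthy


# The reviews service is deployed in both availability zones.
reviews = [
    Endpoint("reviews-a:9080", zone="zone-a"),
    Endpoint("reviews-b:9080", zone="zone-b"),
]
```

While `reviews-a` is healthy, a productpage caller in `zone-a` is routed only to `reviews-a`. Once `reviews-a` is marked unhealthy, the same call returns `reviews-b`, which mirrors the disaster recovery path shown in the topology.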

Let's look at cloud-native application governance, which contains many dimensions. Here, we focus on three parts:

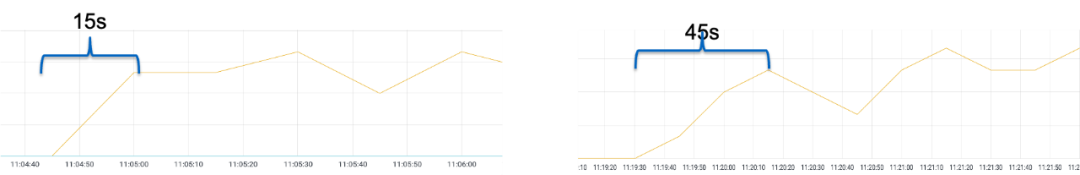

Let's look at the difference before and after the warm-up mode is enabled.

Warm-up mode is not enabled:

Warm-up mode is enabled:

A Customer Case: Support for the Warm-Up Feature for Graceful Service Launch in Scale-out and Rolling Update Scenarios

These two figures also show that after the warm-up mode is enabled, a newly created pod takes longer to reach its balanced share of requests. This reflects that the number of requests entering the pod increases gradually during the warm-up period.
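The gradual increase can be illustrated with a simple linear ramp. This is an assumption made for illustration; the actual ramp curve used by the proxy may differ, and the function names and parameters below are hypothetical.

```python
def warmup_weight(age_secs, warmup_secs, floor=0.0):
    """Relative load-balancing weight of a pod during warm-up.

    The weight grows linearly from `floor` to 1.0 over the warm-up
    window and stays at 1.0 afterwards.
    """
    if warmup_secs <= 0 or age_secs >= warmup_secs:
        return 1.0
    return max(floor, max(age_secs, 0.0) / warmup_secs)


def new_pod_share(age_secs, warmup_secs, warmed_pods):
    """Fraction of traffic the new pod receives next to `warmed_pods`
    fully warmed pods, each with weight 1.0."""
    if warmed_pods <= 0:
        return 1.0  # the new pod is the only backend
    w = warmup_weight(age_secs, warmup_secs)
    return w / (w + warmed_pods)
```

With a 120-second window and three warmed pods, the new pod receives about 14% of requests halfway through the window and reaches its balanced 25% share only when the window ends.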

Background:

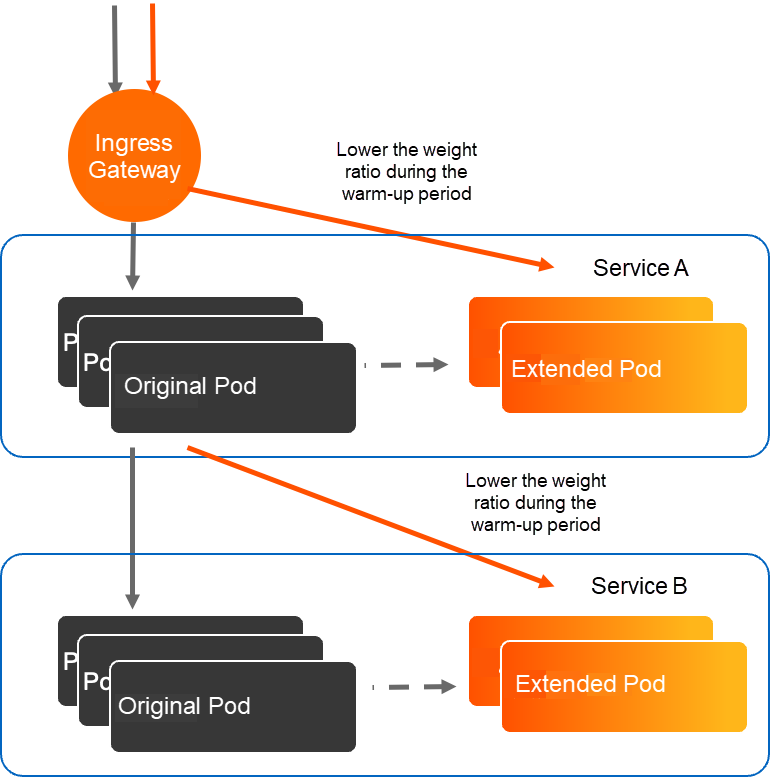

Support for the warm-up feature for graceful service launch:

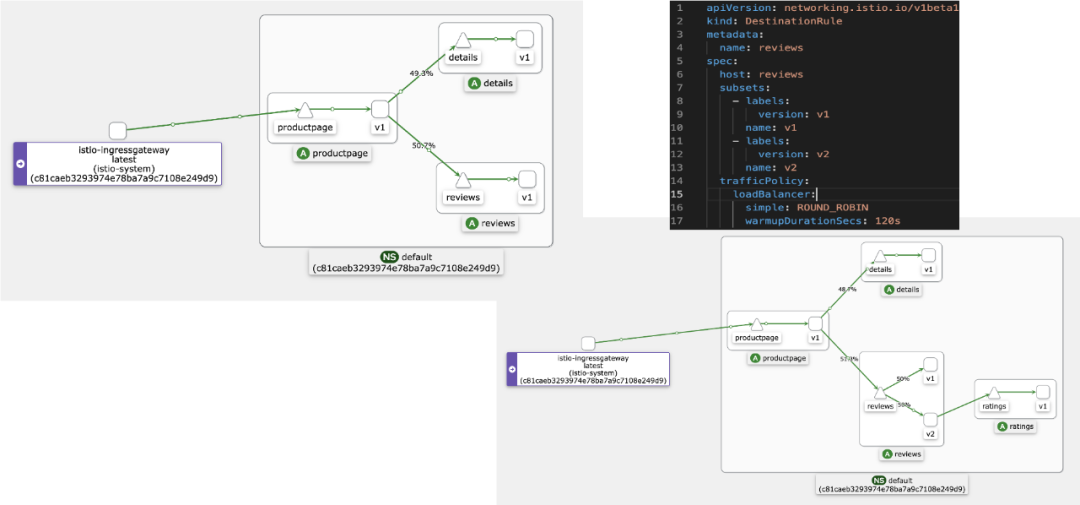

How is this feature implemented in ASM? Simply put, a single line of configuration enables the warm-up capability. This topology is a simplified, anonymized version of the actual customer case, and it shows the warm-up feature in use.

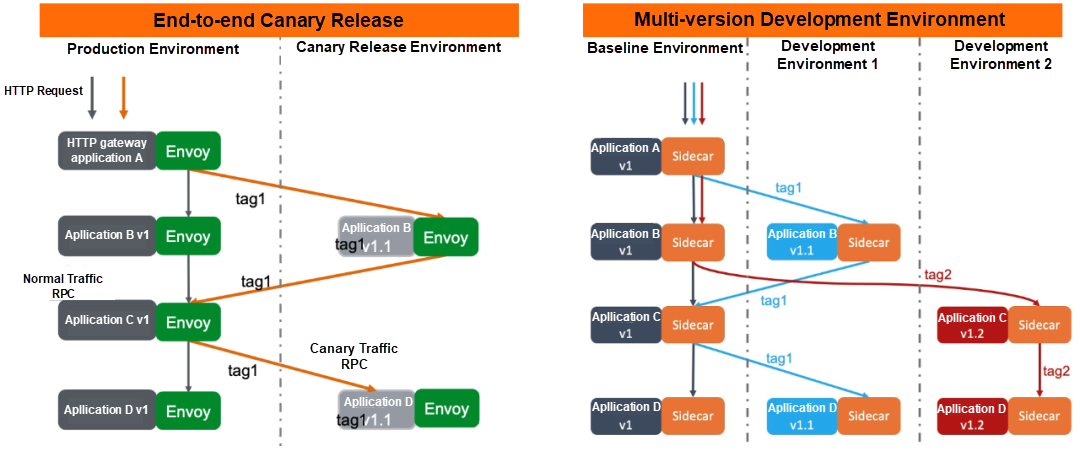

End-to-End Canary Release:

While the production environment is running normally, you can perform a canary release on some application services, for example, Application B and Application D in the figure. Without modifying the application logic, service mesh can dynamically route requests to different service versions according to the request source or request header information. For example, if the request header contains tag 1, Application A calls the canary release version of Application B. If Application C does not have a canary release version, the system automatically falls back to the original version.

Multi-Version Development Environment:

Similarly, there is a baseline environment, and other different development environments can deploy some application services in their versions as needed. According to the request source or request header information, the system dynamically routes requests to services in different versions.

In the entire process, you do not need to modify the application code logic. You only need to tag applications. ASM implements an abstract definition of Traffic Label CRD to simplify the tagging of traffic and application instances.
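The tag-based routing with fallback described above can be sketched as follows. This is not the Traffic Label CRD or any ASM API; the header name `x-traffic-tag`, the `base` version name, and the `route` helper are hypothetical and exist only to illustrate the decision.

```python
def route(service, headers, deployed, tag_header="x-traffic-tag", baseline="base"):
    """Choose which version of `service` should receive a request.

    If the request carries a tag and the service has a version deployed
    under that tag, route to it. Otherwise fall back to the baseline.
    """
    tag = headers.get(tag_header)
    if tag and tag in deployed.get(service, set()):
        return f"{service}:{tag}"
    return f"{service}:{baseline}"


# Hypothetical deployment: only app-b and app-d have a tag1 version.
deployed = {
    "app-b": {"base", "tag1"},
    "app-c": {"base"},
    "app-d": {"base", "tag1"},
}
```

For a request carrying `{"x-traffic-tag": "tag1"}`, `route("app-b", ...)` selects `app-b:tag1`, while `route("app-c", ...)` falls back to `app-c:base` because no tagged version of that service exists. Requests without the tag always go to the baseline.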

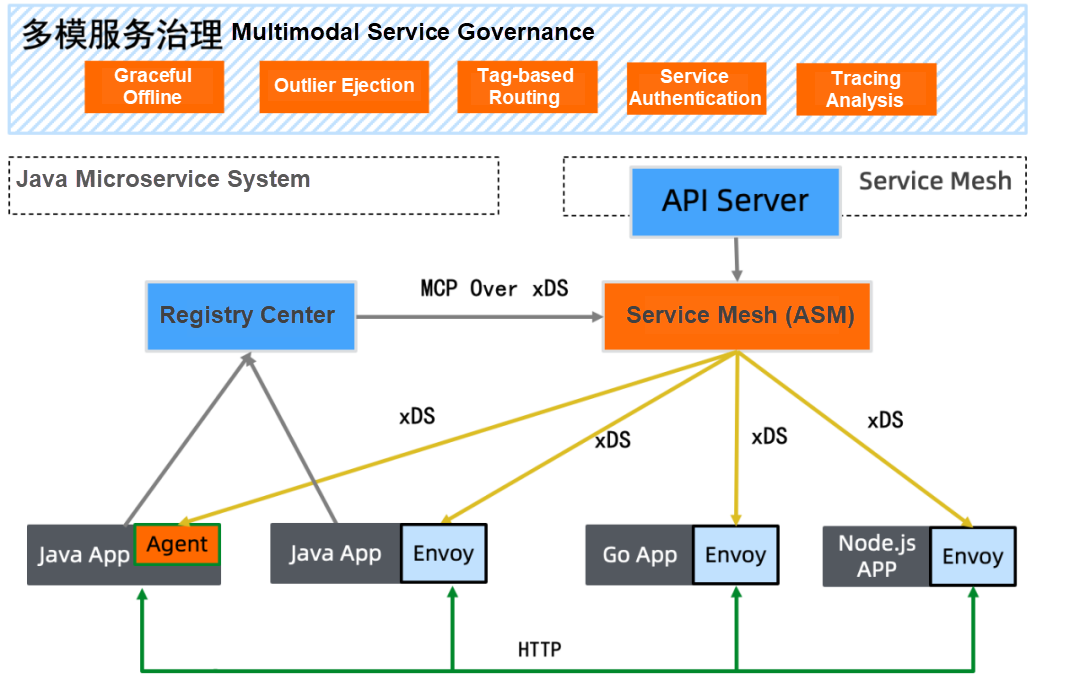

Legacy application systems often still rely on service frameworks such as Spring Cloud or Dubbo. ASM supports multi-model service governance and implements call intercommunication, monitoring intercommunication, and governance intercommunication.

The service information of the original registration center is registered to service mesh through the MCP over the xDS protocol, and the control plane component of service mesh is used to uniformly manage rule configuration and distribute governance rules by the xDS protocol.

Next, look at Zero Trust security for cloud-native applications, including:

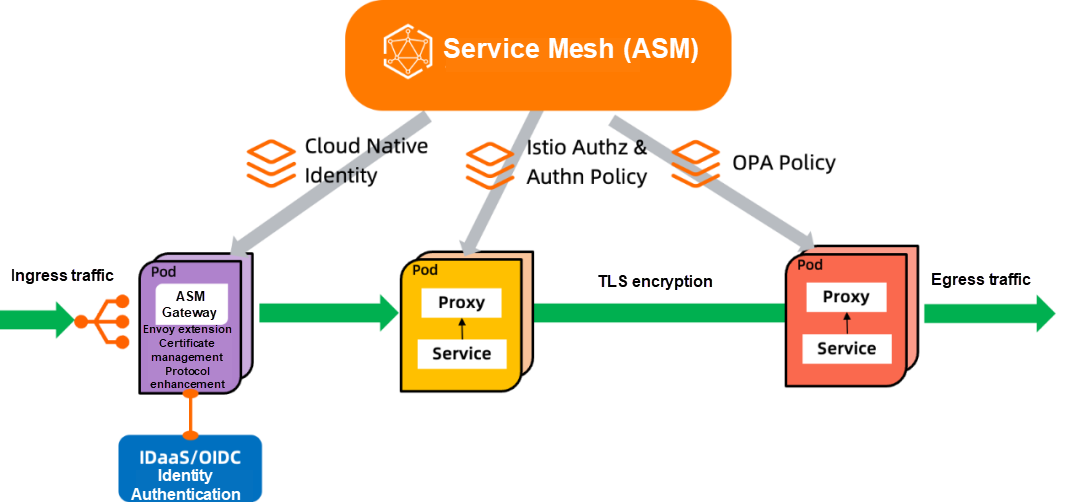

Specifically, building an ASM-based Zero Trust security capability system includes the following aspects.

Using ASM to implement zero trust has the following advantages:

This is a scenario where a platform adopts the ASM-based Zero Trust security technology. As you can see, the customer follows the basic principles of the Zero Trust security architecture:

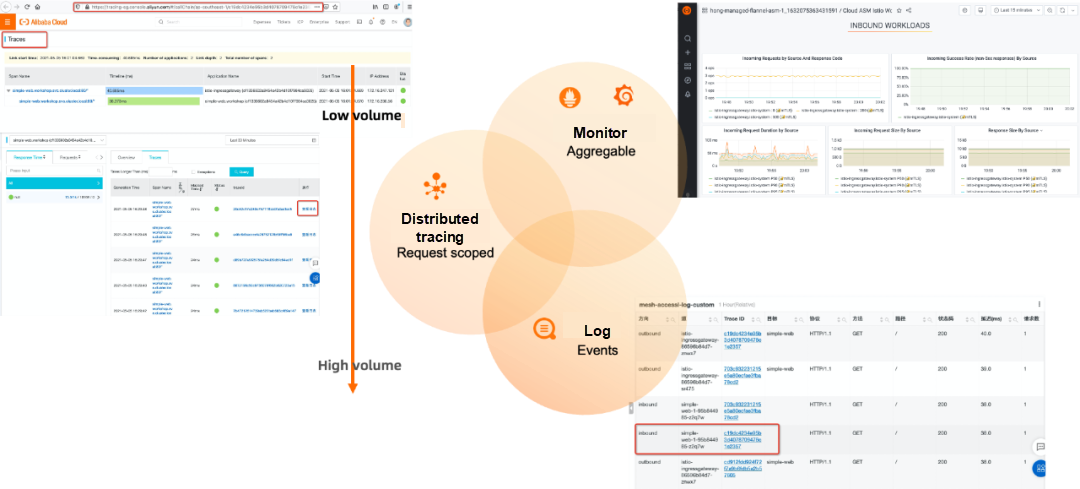

Next, look at the observability and application elasticity of cloud-native applications, including:

Based on our customer support and hands-on experience with the service mesh technology:

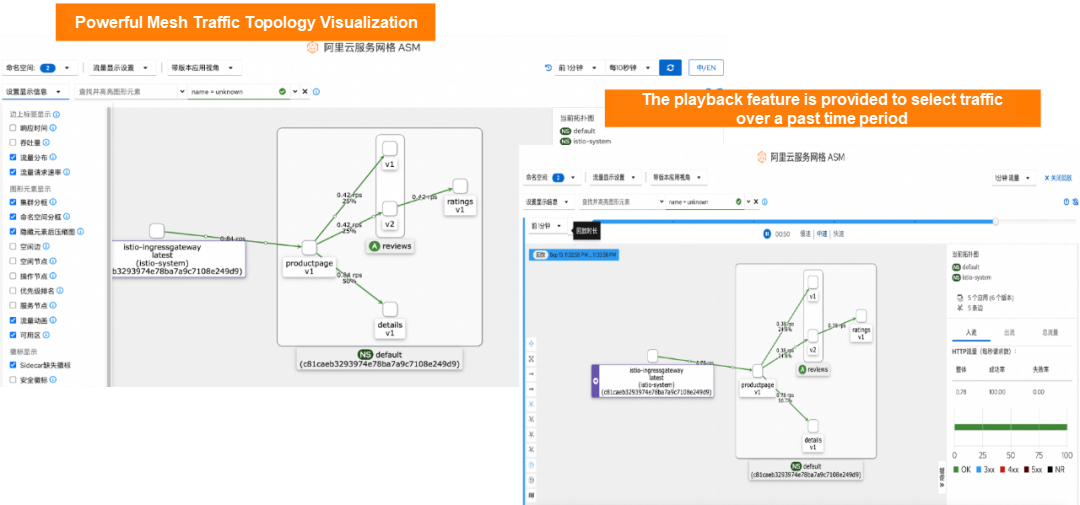

In addition to powerful mesh traffic topology visualizations, a playback feature is provided to select traffic over a past period.

Next, look at the cloud-native application ecosystem engine, including three parts:

With the built-in templates, you only need to fill in the required parameters to deploy the corresponding EnvoyFilter plug-in. Through this mechanism, the data plane becomes an extensible collection of plug-in capabilities.

There is a series of application-centric ecosystems in the industry around the service mesh technology. Fully-managed ASM supports the following ecosystems:

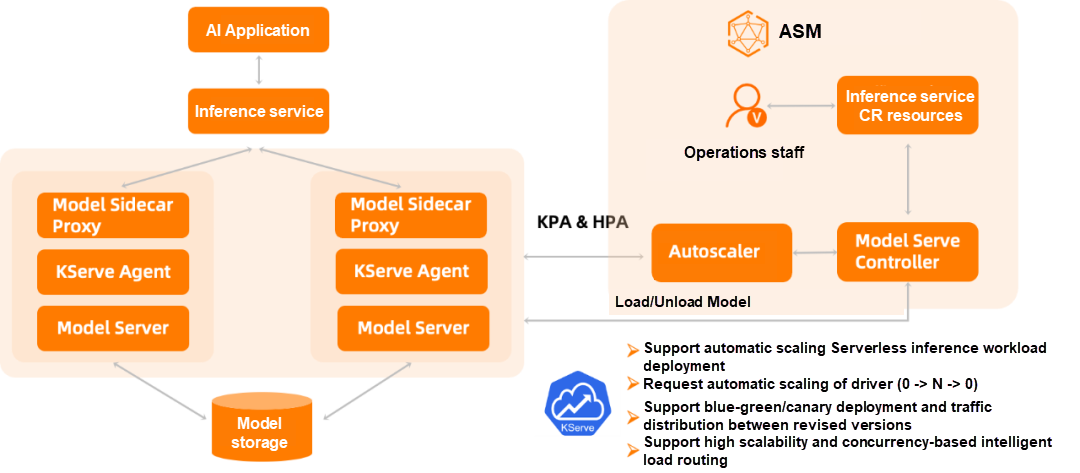

In addition, we are exploring new scenarios driven by service mesh. Here is an AI serving example.

This demand comes from our actual customers who want to run KServe based on the service mesh technology to implement AI services. KServe runs smoothly on service mesh to implement blue/green and canary deployment of model services, traffic distribution between revisions, and other capabilities. It supports auto-scaling serverless inference workload deployment, high scalability, and concurrency-based intelligent load routing.
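Traffic distribution between revisions, as used in a canary rollout of a model service, can be sketched with a weighted, deterministic split. This is an illustrative model only, not KServe or ASM code; the revision names, the weights, and the `pick_revision` helper are hypothetical.

```python
import zlib


def pick_revision(request_id, weights):
    """Map a request to a revision in proportion to integer weights.

    A stable hash of the request ID is used, so the same ID always
    lands on the same revision for a given weight table.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    bucket = zlib.crc32(request_id.encode("utf-8")) % total
    upper = 0
    for revision, weight in weights.items():
        upper += weight
        if bucket < upper:
            return revision
    raise AssertionError("unreachable: bucket is always below total")
```

With weights such as `{"stable": 90, "canary": 10}`, roughly one request in ten is expected to reach the canary revision. Raising the canary weight step by step shifts traffic without touching the model code.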

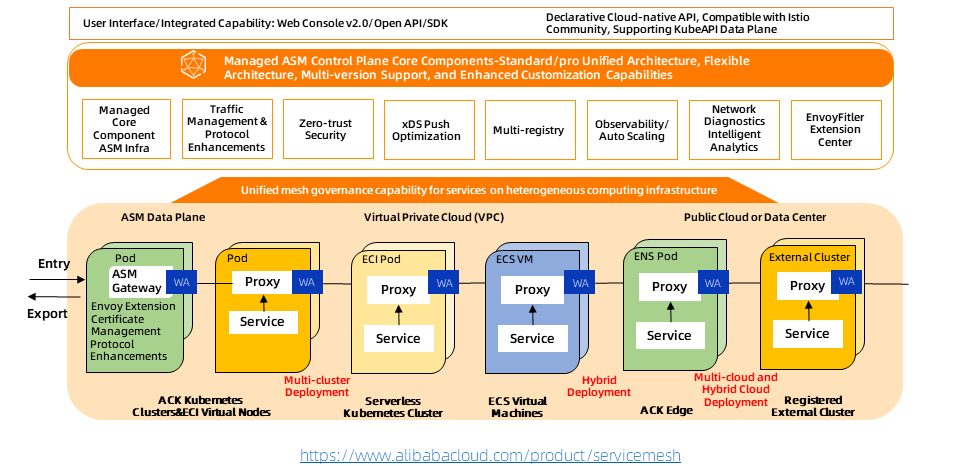

As the first fully managed Istio-compatible service mesh product in the industry, ASM has stayed consistent with the community and industry trends in architecture from the very beginning. The control plane components are hosted on the Alibaba Cloud side and are independent of the user clusters on the data plane side. ASM is customized and implemented based on the community's open-source Istio. It provides component capabilities to support refined traffic management and security management on the managed control plane side. The managed mode decouples the lifecycle management of Istio components from the managed Kubernetes clusters, making the architecture more flexible and improving system scalability.

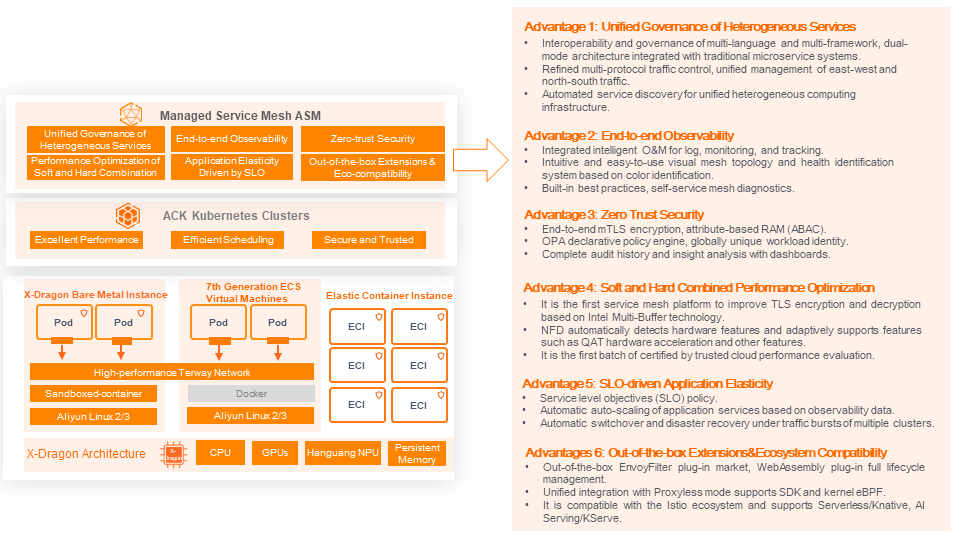

The managed ASM has become the infrastructure for the unified management of various heterogeneous computing services. It provides unified traffic management capabilities, unified service security capabilities, unified service observability capabilities, and WebAssembly-based unified proxy scalability capabilities to build enterprise-level capabilities.

As a basic core technology used to manage application service communication, service mesh brings safe, reliable, fast, application-unaware traffic routing, security, and observability for calls between application services.

The cloud-native application infrastructure supported by service mesh brings important advantages, summarized in the following six aspects:

Advantage 1: Unified Governance of Heterogeneous Services

Advantage 2: End-to-End Observability

Advantage 3: Zero Trust Security

Advantage 4: Combined Software and Hardware Performance Optimization

Advantage 5: SLO-Driven Application Elasticity

Advantage 6: Out-of-the-Box Extensions & Ecosystem Compatibility
