By Shu Xinxin, a technical expert on the Alibaba Cloud E-MapReduce (EMR) team. Shu currently works on big data storage and Spark.

At this year's Apsara Conference, Alibaba Cloud announced a computing and storage separation solution for E-MapReduce (EMR) Jindo. Jindo, and more specifically JindoFS, separates computing from storage while combining the advantages of both. The announcement was met with considerable enthusiasm at the conference.

Separating computing from storage is not a new idea, but it has recently become a prominent trend in cloud computing. Conventional architectures couple computing and storage, which causes several problems. For example, after a cluster is scaled out, its computing capability often no longer matches its storage capacity. Moreover, because the two must be scaled together, users cannot scale only one of them.

Separating computing from storage resolves these problems and lets users focus solely on the computing capacity of the cluster.

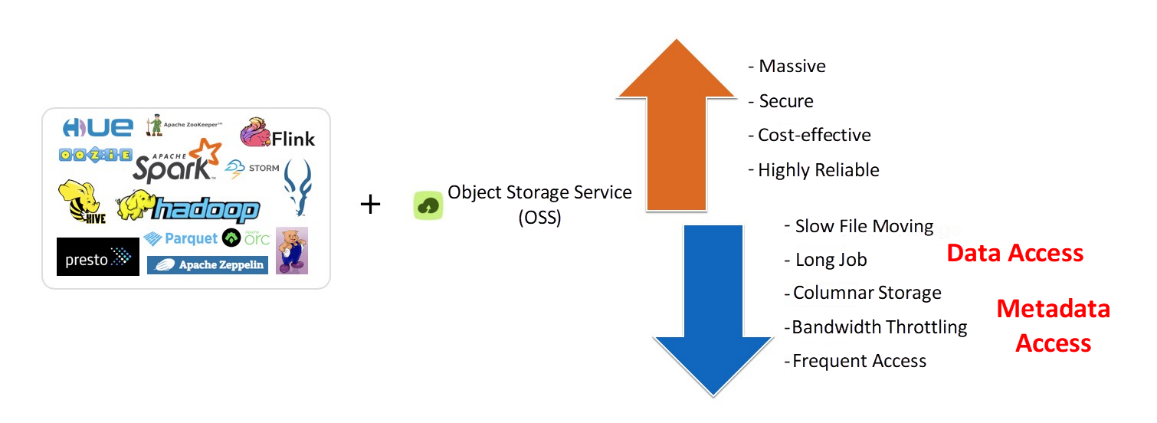

OssFS is a computing and storage separation solution provided by EMR. It is built on Alibaba Cloud Object Storage Service (OSS), is compatible with the Apache Hadoop file system, and lets users access data stored in OSS. As a result, OssFS retains the main advantages of OSS: massive storage capacity, cost effectiveness, and high reliability. However, OssFS also has drawbacks. For example, renaming files can be slow, and OSS may throttle bandwidth when data is accessed frequently. JindoFS retains the advantages of OssFS while overcoming these drawbacks, which makes it the better solution.
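Renames are slow on object stores because, in general, they have no native directory rename: "renaming a directory" means copying and then deleting every object under its prefix. The toy in-memory model below contrasts that per-object copy-and-delete with a metadata-only rename in a file system that keeps its own namespace. The `ObjectStore` and `Namespace` classes are hypothetical illustrations, not the OSS or JindoFS API.

```python
class ObjectStore:
    """Toy flat key-value store (hypothetical): keys are full paths, no real directories."""

    def __init__(self):
        self.objects = {}  # key -> bytes

    def put(self, key, data):
        self.objects[key] = data

    def rename_prefix(self, src, dst):
        """'Rename a directory' by copying and deleting each object; returns request count."""
        requests = 0
        for key in [k for k in self.objects if k.startswith(src)]:
            self.objects[dst + key[len(src):]] = self.objects[key]  # copy request
            del self.objects[key]                                  # delete request
            requests += 2
        return requests


class Namespace:
    """Toy namespace (hypothetical): a directory entry owns its children, so rename
    is a single metadata update. Nested subdirectories are not modeled."""

    def __init__(self):
        self.dirs = {}  # directory path -> {child name: data}

    def rename_dir(self, src, dst):
        self.dirs[dst] = self.dirs.pop(src)
        return 1  # one metadata operation, regardless of how many files it holds


store, ns = ObjectStore(), Namespace()
ns.dirs["/warehouse/tmp"] = {}
for i in range(1000):
    store.put(f"/warehouse/tmp/part-{i:05d}", b"x")
    ns.dirs["/warehouse/tmp"][f"part-{i:05d}"] = b"x"

print(store.rename_prefix("/warehouse/tmp/", "/warehouse/final/"))  # 2000
print(ns.rename_dir("/warehouse/tmp", "/warehouse/final"))          # 1
```

The object-store cost grows with the number of objects under the prefix, while the namespace rename stays constant, which is the gap a metadata service can close.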

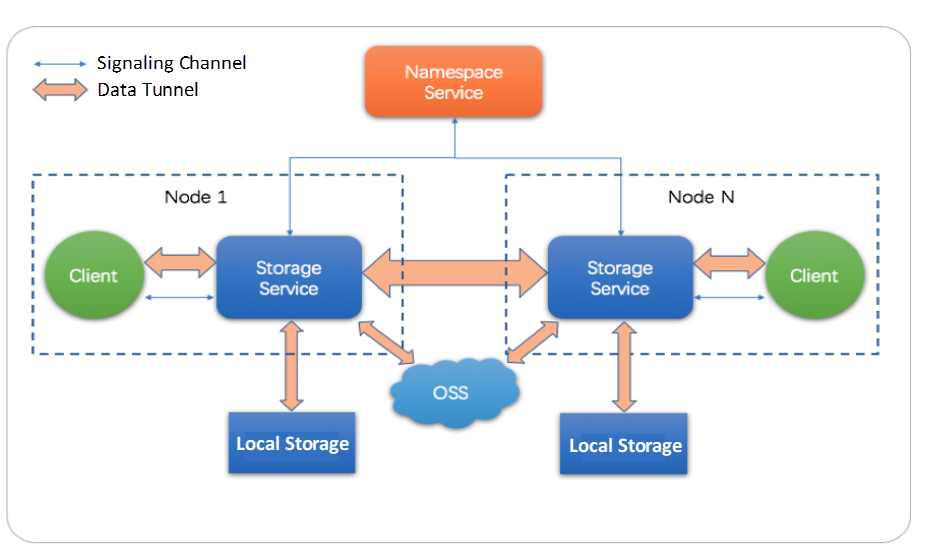

JindoFS consists of two service components: the Namespace service and the Storage service. The Namespace service manages JindoFS metadata and the Storage service itself. The Storage service manages user data, including local data and data in OSS. JindoFS is a cloud-native file system that combines the performance of local storage with the very large capacity of OSS. The following paragraphs describe the key features of both services.

The Namespace service manages metadata, including the metadata of the JindoFS file system, of blocks, and of the Storage service. A single JindoFS cluster supports multiple namespaces, so you can assign namespaces by service and keep different services' data in different namespaces. Each namespace can also be configured with its own storage back end. Currently, RocksDB is supported, and support for OTS (Tablestore) is expected in a future release. The Namespace service is also optimized for large scale, for example through directory-level concurrency control and directory-level metadata caching.
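Directory-level concurrency control means a metadata operation only needs to lock the parent directory it touches, so file creation in different directories does not contend on one global lock. The following is a minimal sketch of that idea, assuming an in-memory metadata table; `DirLockedMetadata` and its methods are hypothetical and do not describe JindoFS internals. Directory-level metadata caching is not modeled.

```python
import posixpath
import threading


class DirLockedMetadata:
    """Toy metadata store with one lock per parent directory (hypothetical)."""

    def __init__(self):
        self._guard = threading.Lock()  # protects only the directory table
        self._dirs = {}                 # directory -> (lock, set of child names)

    def _entry(self, directory):
        # Creating a directory entry must be atomic, so hold the guard briefly.
        with self._guard:
            if directory not in self._dirs:
                self._dirs[directory] = (threading.Lock(), set())
            return self._dirs[directory]

    def create(self, path):
        parent, name = posixpath.split(path)
        lock, children = self._entry(parent)
        with lock:  # only this parent directory is serialized
            if name in children:
                raise FileExistsError(path)
            children.add(name)

    def list_dir(self, directory):
        lock, children = self._entry(directory)
        with lock:
            return sorted(children)


meta = DirLockedMetadata()
threads = [
    threading.Thread(target=meta.create, args=(f"/{d}/file-{i}",))
    for d in ("logs", "tables")
    for i in range(100)
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(len(meta.list_dir("/logs")), len(meta.list_dir("/tables")))  # 100 100
```

Threads writing under `/logs` and `/tables` take different locks, so they only wait on each other for the brief lookup in the directory table.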

The Storage service manages the actual data, including locally cached data and data in OSS. It supports multiple storage back ends and storage media. Currently, the supported back ends are the local file system and OSS. For local storage, the supported media include hard disk drives (HDDs), solid-state drives (SSDs), and data center persistent memory (DCPM), which can accelerate caching. The Storage service is also optimized for workloads with large numbers of small files, which prevents them from overloading the local file system and degrading overall performance.
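The text does not say how the Storage service handles small files. One common technique, shown here purely as an illustration and not as the JindoFS mechanism, is to pack many small files into a single container file and keep an index of (offset, length) per file, so the local file system sees one file instead of thousands. `PackedStore` is a hypothetical name.

```python
import os
import tempfile


class PackedStore:
    """Appends small files into one container file and indexes them by name (illustrative)."""

    def __init__(self, container_path):
        self.container_path = container_path
        self.index = {}  # name -> (offset, length) inside the container
        open(container_path, "ab").close()  # make sure the container exists

    def put(self, name, data):
        with open(self.container_path, "ab") as f:
            f.seek(0, os.SEEK_END)  # be explicit: new data goes at the end
            offset = f.tell()
            f.write(data)
        self.index[name] = (offset, len(data))

    def get(self, name):
        offset, length = self.index[name]
        with open(self.container_path, "rb") as f:
            f.seek(offset)
            return f.read(length)


with tempfile.TemporaryDirectory() as tmp:
    store = PackedStore(os.path.join(tmp, "container.bin"))
    for i in range(1000):
        store.put(f"small-{i}", f"payload {i}".encode())
    print(store.get("small-42"))  # b'payload 42'
    print(len(os.listdir(tmp)))   # 1 file on disk instead of 1000
```

A production design would also need compaction for deleted entries and a persistent index; both are omitted here.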

Across the ecosystem, JindoFS supports all computing engines in the EMR framework, including Apache Hadoop, Hive, Spark, Flink, Impala, Presto, and HBase. To use JindoFS, users only need to change the scheme of their file access paths to JFS. For machine learning, a Python SDK will be available in the next JindoFS release to help machine learning users access data in JindoFS efficiently. In addition, JindoFS is tightly integrated with EMR Spark, which allows materialized views and cubes built on Spark to be optimized so that ad hoc analysis completes in seconds.
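Switching a job to JindoFS mostly means rewriting the scheme and authority of its paths. The sketch below assumes a `jfs://<namespace>/<path>` form and a hypothetical namespace named `test`; the helper `to_jfs` and the source host `emr-header-1:9000` are illustrative only, and the exact URI format should be checked against the EMR documentation for your version. How a namespace maps to underlying storage is configured on the cluster and is not modeled here.

```python
from urllib.parse import urlparse


def to_jfs(uri, namespace):
    """Rewrite an hdfs:// or oss:// URI to a jfs:// URI in the given namespace (illustrative)."""
    parsed = urlparse(uri)
    if parsed.scheme not in ("hdfs", "oss"):
        raise ValueError(f"unsupported scheme: {parsed.scheme!r}")
    # Keep the path, swap the scheme, and use the JindoFS namespace as the authority.
    return f"jfs://{namespace}{parsed.path}"


print(to_jfs("hdfs://emr-header-1:9000/warehouse/sales/part-0", "test"))
# jfs://test/warehouse/sales/part-0
```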

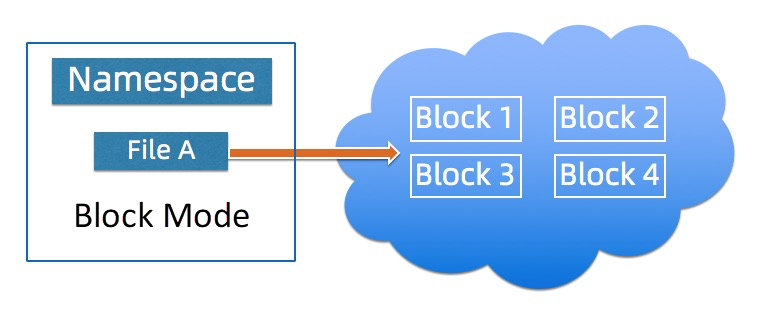

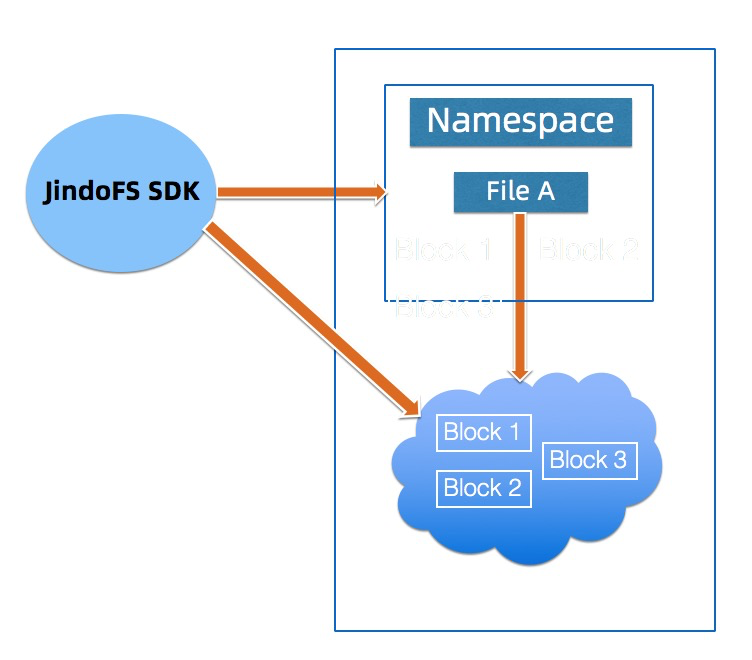

In block mode, JindoFS splits files into blocks and stores them on local disks and in OSS. In OSS, users see only the blocks, not the original files. The local Namespace service manages the metadata and reconstructs file data from the local metadata and the block data. JindoFS performs better in this mode than in cache mode, so block mode suits scenarios with demanding performance requirements on both data and metadata. However, block mode requires users to migrate their data into JindoFS.
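The block-mode layout can be sketched as follows, assuming a fixed block size and a dict standing in for block storage (local disks or OSS). File bytes are split into blocks keyed by opaque IDs, so the storage back end only ever sees blocks, while the namespace keeps the ordered list of block IDs and uses it to reassemble the file. `BlockModeFS` and the 4-byte block size are hypothetical, chosen only to keep the example small.

```python
import uuid

BLOCK_SIZE = 4  # bytes; tiny on purpose, real block sizes are far larger


class BlockModeFS:
    def __init__(self):
        self.blocks = {}    # block ID -> bytes (stands in for local disks / OSS)
        self.metadata = {}  # file path -> ordered list of block IDs (the namespace)

    def write(self, path, data):
        ids = []
        for start in range(0, len(data), BLOCK_SIZE):
            block_id = uuid.uuid4().hex  # opaque name: the file path is not visible
            self.blocks[block_id] = data[start:start + BLOCK_SIZE]
            ids.append(block_id)
        self.metadata[path] = ids

    def read(self, path):
        # Reconstruct the file from namespace metadata plus block data.
        return b"".join(self.blocks[b] for b in self.metadata[path])


fs = BlockModeFS()
fs.write("/warehouse/t/part-0", b"hello, jindofs")
print(len(fs.blocks))                  # 4 blocks for 14 bytes
print(fs.read("/warehouse/t/part-0"))  # b'hello, jindofs'
```

Because only the namespace knows which blocks form which file, the data is unreadable as files directly from OSS, which is why block mode requires migration.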

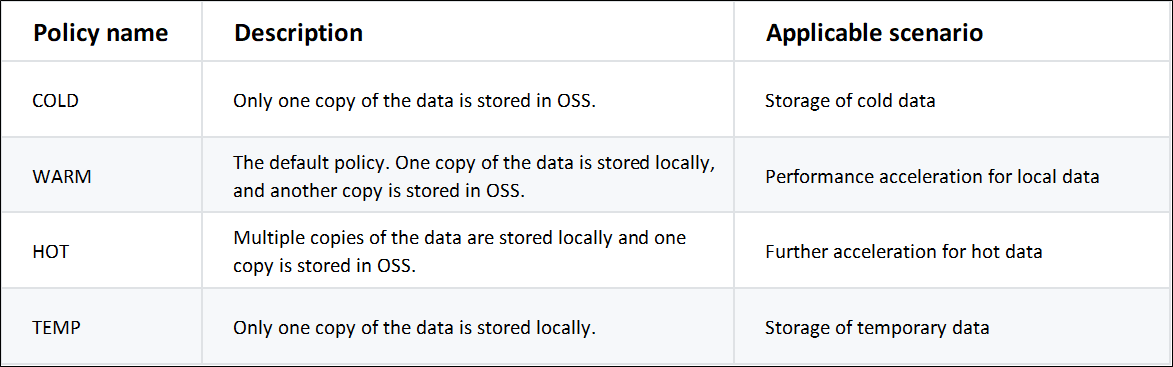

Block mode offers several storage policies to suit different use cases.

Compared with the Hadoop Distributed File System (HDFS), JindoFS in block mode has the following advantages:

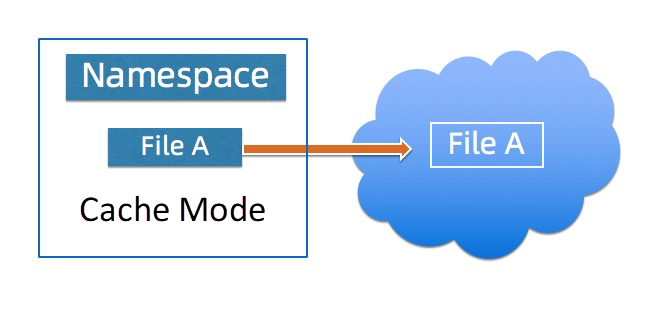

In cache mode, JindoFS stores files as objects in OSS, so users can still see the original directory structure and files in OSS. Data and metadata are cached to accelerate reads and writes. Users also do not need to migrate their existing OSS data into JindoFS. However, performance in this mode is lower than in block mode. For metadata, users can choose among different synchronization policies as required.
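Cache mode can be pictured as a read-through cache in front of OSS: objects keep their original paths in OSS, reads are served from the local cache when possible, and misses are fetched from OSS and then cached. The sketch below assumes plain dicts for both tiers and a hypothetical `CacheModeFS` class; it uses a write-through policy as an assumption and ignores eviction and the metadata synchronization policies mentioned in the text.

```python
class CacheModeFS:
    def __init__(self, oss):
        self.oss = oss    # path -> bytes; objects keep their original paths
        self.cache = {}   # local cache of recently used objects
        self.hits = 0
        self.misses = 0

    def read(self, path):
        if path in self.cache:
            self.hits += 1
            return self.cache[path]
        self.misses += 1
        data = self.oss[path]    # fall back to OSS on a miss
        self.cache[path] = data  # populate the cache for later reads
        return data

    def write(self, path, data):
        # Write through: OSS stays the source of truth and the cache stays warm.
        self.oss[path] = data
        self.cache[path] = data


oss = {"/logs/day-1.txt": b"existing data, no migration needed"}
fs = CacheModeFS(oss)
fs.read("/logs/day-1.txt")
fs.read("/logs/day-1.txt")
print(fs.hits, fs.misses)  # 1 1
```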

Compared with OssFS, JindoFS in cache mode has the following advantages:

The external client lets users access JindoFS from outside the EMR cluster. Currently, the client supports only JindoFS in block mode. Client permissions are bound to OSS permissions, so to access JindoFS data through the external client, you must have the corresponding OSS permissions.

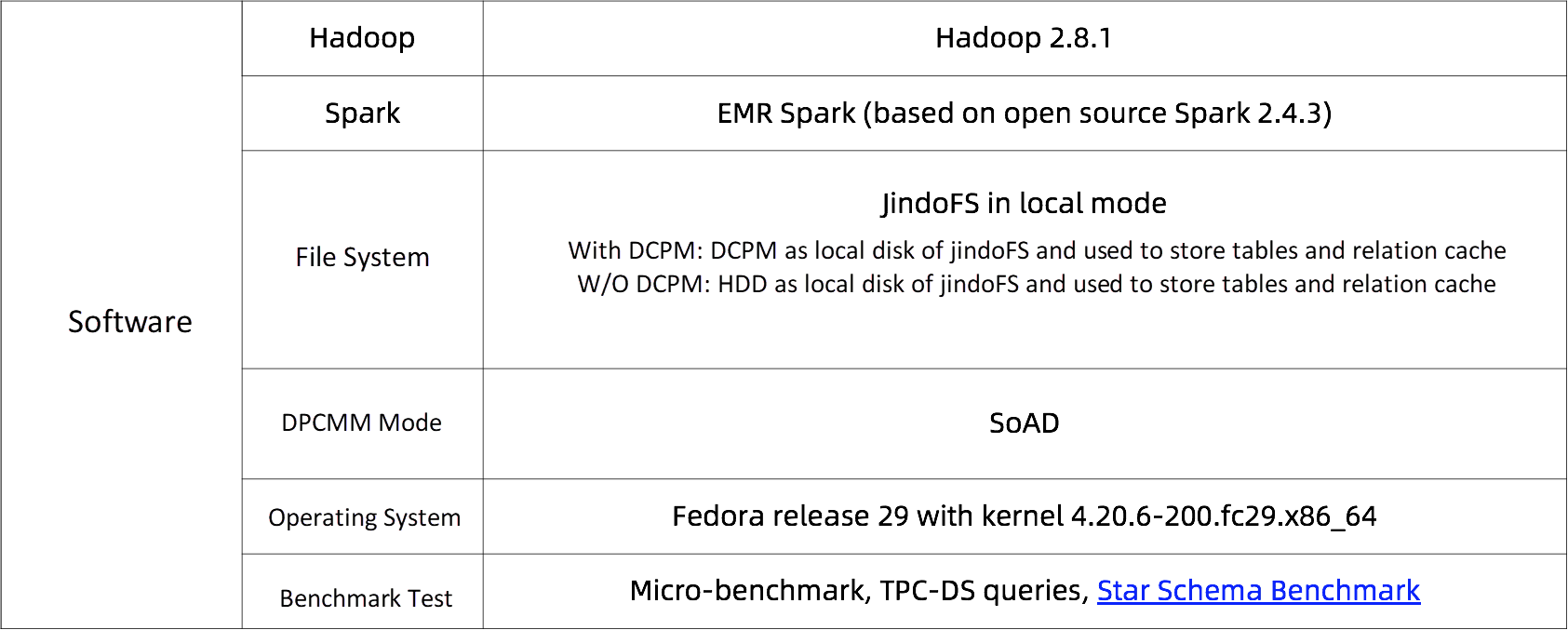

The following tests measure the performance of JindoFS with DCPM, which is Intel® Optane™ persistent memory for data centers. The tests have three parts: a micro-benchmark, TPC Benchmark® DS (TPC-DS) queries, and the star schema benchmark (SSB) on Spark Relational Cache (RC) with JindoFS.

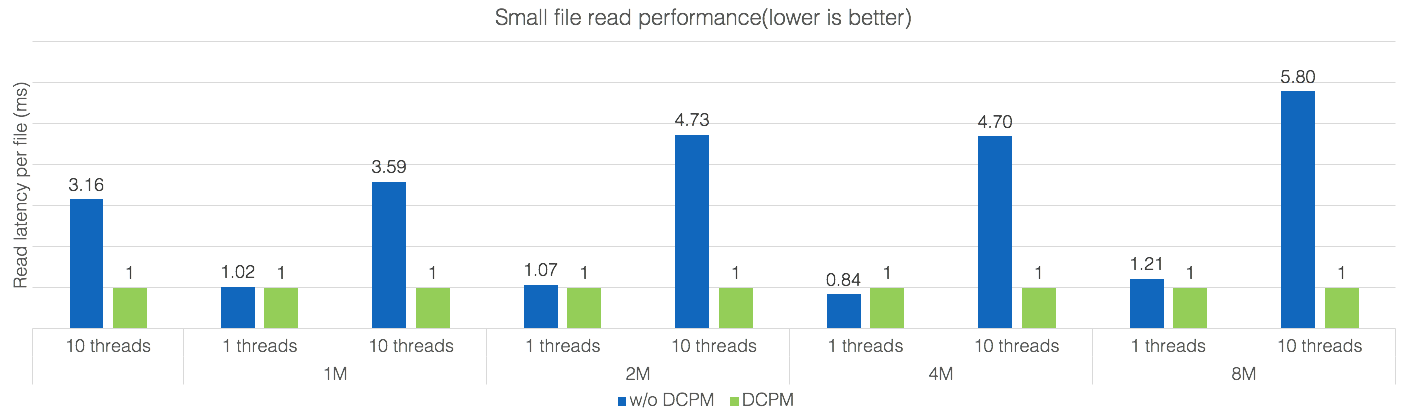

The preceding figure shows the micro-benchmark results. The test reads 100 small files of various sizes (512 KB, 1 MB, 2 MB, 4 MB, and 8 MB) at parallelism degrees from 1 to 10. DCPM significantly improves read performance on small files, and the improvement grows as more files are read with a higher degree of parallelism.
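The benchmark harness itself is not published, so the following is only a rough sketch of a measurement with the same shape: write 100 files of one size, then time reading all of them with 1 to 10 threads. It runs against a local temporary directory, not DCPM or JindoFS, and `read_all` and `run_benchmark` are hypothetical helpers. Reads right after the writes are likely served from the OS page cache, so absolute numbers are only indicative.

```python
import os
import tempfile
import time
from concurrent.futures import ThreadPoolExecutor

FILE_SIZES = [512 * 1024, 1 << 20, 2 << 20, 4 << 20, 8 << 20]  # 512 KB, 1, 2, 4, 8 MB
NUM_FILES = 100


def read_all(paths, parallelism):
    """Read every file using `parallelism` threads; return (elapsed seconds, bytes read)."""
    def read_one(path):
        with open(path, "rb") as f:
            return len(f.read())

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        total = sum(pool.map(read_one, paths))
    return time.perf_counter() - start, total


def run_benchmark(directory, size, parallelisms=range(1, 11), num_files=NUM_FILES):
    """Write `num_files` files of `size` bytes, then time reading them at each parallelism."""
    paths = []
    for i in range(num_files):
        path = os.path.join(directory, f"file-{size}-{i}")
        with open(path, "wb") as f:
            f.write(os.urandom(size))
        paths.append(path)

    results = {}  # parallelism degree -> elapsed seconds
    for p in parallelisms:
        elapsed, total = read_all(paths, p)
        if total != size * num_files:
            raise RuntimeError("short read")
        results[p] = elapsed
    return results


with tempfile.TemporaryDirectory() as tmp:
    # Smallest size only, to keep the demo light (about 50 MB written).
    # Looping over FILE_SIZES reproduces the full sweep (about 1.5 GB written).
    for p, seconds in run_benchmark(tmp, FILE_SIZES[0]).items():
        print(f"parallelism={p:2d}  {seconds * 1000:.1f} ms")
```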

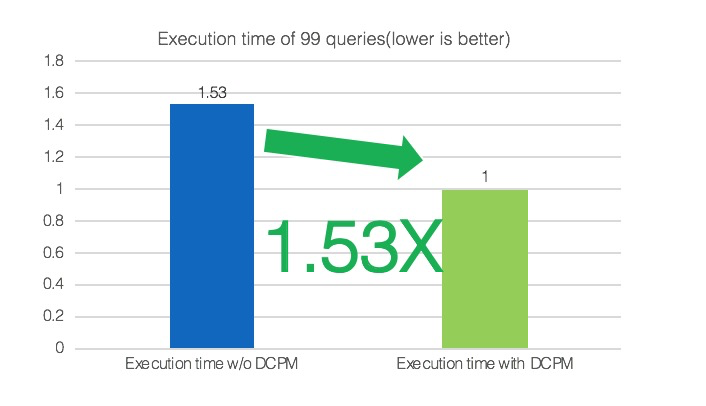

The preceding figure shows the TPC-DS results. All 99 TPC-DS queries are run against 2 TB of data. Based on normalized execution time, DCPM improves overall performance by 1.53 times.
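Reading "1.53 times" as a speedup ratio (an interpretation, since the normalization baseline is not spelled out), the DCPM run takes about 1 / 1.53 ≈ 0.65 of the baseline's execution time. The sketch below shows one common way to compute such figures, assuming the overall number is total baseline time divided by total DCPM time; `speedups` is a hypothetical helper and the timings are made-up placeholders, not measurements from the test.

```python
def speedups(baseline, dcpm):
    """Return (overall speedup, per-query speedups) from per-query run times in seconds."""
    if baseline.keys() != dcpm.keys():
        raise ValueError("both runs must cover the same queries")
    per_query = {q: baseline[q] / dcpm[q] for q in baseline}
    overall = sum(baseline.values()) / sum(dcpm.values())
    return overall, per_query


# Placeholder timings for two queries (NOT results from this article).
baseline = {"q1": 40.0, "q2": 10.0}
dcpm = {"q1": 20.0, "q2": 10.0}
overall, per_query = speedups(baseline, dcpm)
print(round(overall, 2))      # 1.67
print(round(1 / overall, 2))  # 0.6, the normalized execution time
print(per_query)              # {'q1': 2.0, 'q2': 1.0}
```

With this definition the overall speedup is a time-weighted average of the per-query speedups, so it always falls between the smallest and largest per-query values, consistent with the SSB figures reported for Relational Cache (2.7 overall, 1.9 to 3.4 per query).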

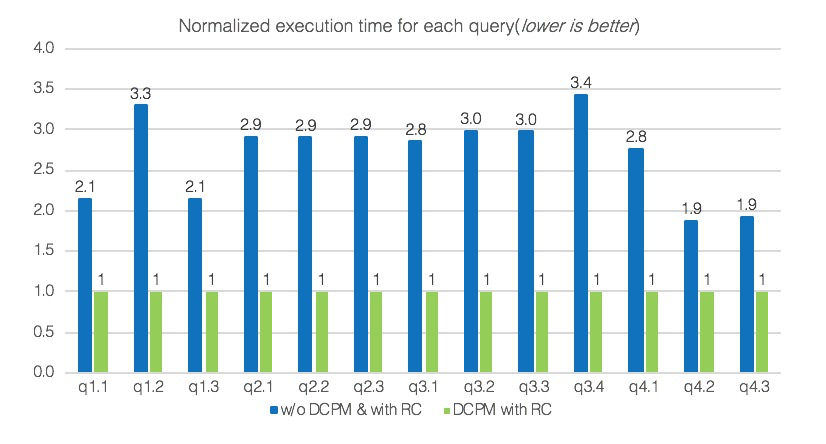

The preceding figure shows the results of an SSB test on Spark Relational Cache with JindoFS. SSB, which is derived from TPC-H, is a benchmark for testing the performance of star schema database systems. Relational Cache is an important feature of EMR Spark. It accelerates data analysis mainly by pre-organizing and pre-computing data, and it provides materialized views similar to those in conventional data warehouses. In the SSB test, each query runs against 1 TB of data, and the system cache is cleared between queries. Based on normalized execution time, DCPM improves overall performance by 2.7 times, and by 1.9 to 3.4 times for individual queries.
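The pre-computation idea behind materialized views can be illustrated with a toy star-schema example: aggregate the fact table once by the dimensions that queries group on, then answer later queries from that small summary instead of rescanning the facts. This is a generic materialized-view sketch in plain Python, not the Relational Cache API; the table, columns, data, and helper names are hypothetical and only loosely modeled on SSB.

```python
from collections import defaultdict

# Toy fact table: (order_year, region, revenue). Hypothetical data.
lineorder = [
    (1997, "ASIA", 100.0),
    (1997, "EUROPE", 80.0),
    (1998, "ASIA", 120.0),
    (1997, "ASIA", 50.0),
    (1998, "EUROPE", 70.0),
]


def build_cube(facts):
    """Pre-compute SUM(revenue) grouped by (year, region): the 'materialized view'."""
    cube = defaultdict(float)
    for year, region, revenue in facts:
        cube[(year, region)] += revenue
    return dict(cube)


def revenue_by_year(cube, region):
    """Answer a roll-up query from the cube without touching the fact table."""
    totals = defaultdict(float)
    for (year, reg), revenue in cube.items():
        if reg == region:
            totals[year] += revenue
    return dict(sorted(totals.items()))


cube = build_cube(lineorder)          # done once, ahead of query time
print(revenue_by_year(cube, "ASIA"))  # {1997: 150.0, 1998: 120.0}
```

The summary has one row per (year, region) pair rather than one per order, so queries over it scan far less data; the cost moves to building and refreshing the view.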
