E-MapReduce (EMR) Serverless Spark is a fully managed, end-to-end serverless data computing platform built on Apache Spark and provided by the open-source big data platform E-MapReduce. It covers job development, debugging, release, scheduling, and O&M, which simplifies the big data computing workflow and lets users focus on data analysis and data value extraction.

Alibaba Cloud Realtime Compute for Apache Flink is a fully managed serverless service that is built on Apache Flink. It delivers powerful capabilities for entire project lifecycles, including draft development, data debugging, operation and monitoring, Autopilot, and intelligent diagnostics for real-time applications.

Apache Paimon is a lake format that enables building a Realtime Lakehouse Architecture with Flink and Spark for both streaming and batch operations. It innovatively combines lake format and LSM structure, bringing realtime streaming updates into the lake architecture.

This article uses Flink Datagen to simulate an upstream data source. Data is ingested in real time through Realtime Compute for Apache Flink, stored in the Apache Paimon lake format, and then analyzed through EMR Serverless Spark. In addition, offline compaction jobs are scheduled with EMR Serverless Spark to maintain the Paimon tables.

1. Create a Paimon catalog and set the metadata storage type to DLF (DLF is used to implement unified metadata management and seamless integration between multiple engines). The following code shows the complete configuration of the catalog.

catalog name: paimon

metastore: dlf

warehouse: oss://test/warehouse

dlf.catalog.id: x

dlf.catalog.accessKeyId: x

dlf.catalog.accessKeySecret: x

dlf.catalog.endpoint: dlf-vpc.cn-hangzhou.aliyuncs.com

dlf.catalog.region: cn-hangzhou

2. Create a Paimon table and partition it by date and hour. The category column is simulated as a random number from 1 to 10 and is later used as the Z-order column during compaction. Set write-only to true to disable automatic compaction, which improves write efficiency.

create table if not exists `paimon`.`test_paimon_db`.`test_append_tbl`

(

id string

,data string

,category int

,ts string

,dt string

,hh string

)

partitioned by (dt, hh)

with (

'write-only' = 'true'

)

;

3. Run the data ingestion job, which continuously generates data by using Flink Datagen and writes it to the Paimon table. The complete SQL statement of the streaming job is as follows:

CREATE TEMPORARY TABLE datagen

(

id string

,data string

,category int

)

WITH (

'connector' = 'datagen'

,'rows-per-second' = '100'

,'fields.category.kind' = 'random'

,'fields.category.min' = '1'

,'fields.category.max' = '10'

)

;

INSERT INTO `paimon`.`test_paimon_db`.`test_append_tbl`

SELECT

id

,data

,category

,cast(LOCALTIMESTAMP as string) as ts

,cast(CURRENT_DATE as string) as dt

,cast(hour(LOCALTIMESTAMP) as string) as hh

from datagen

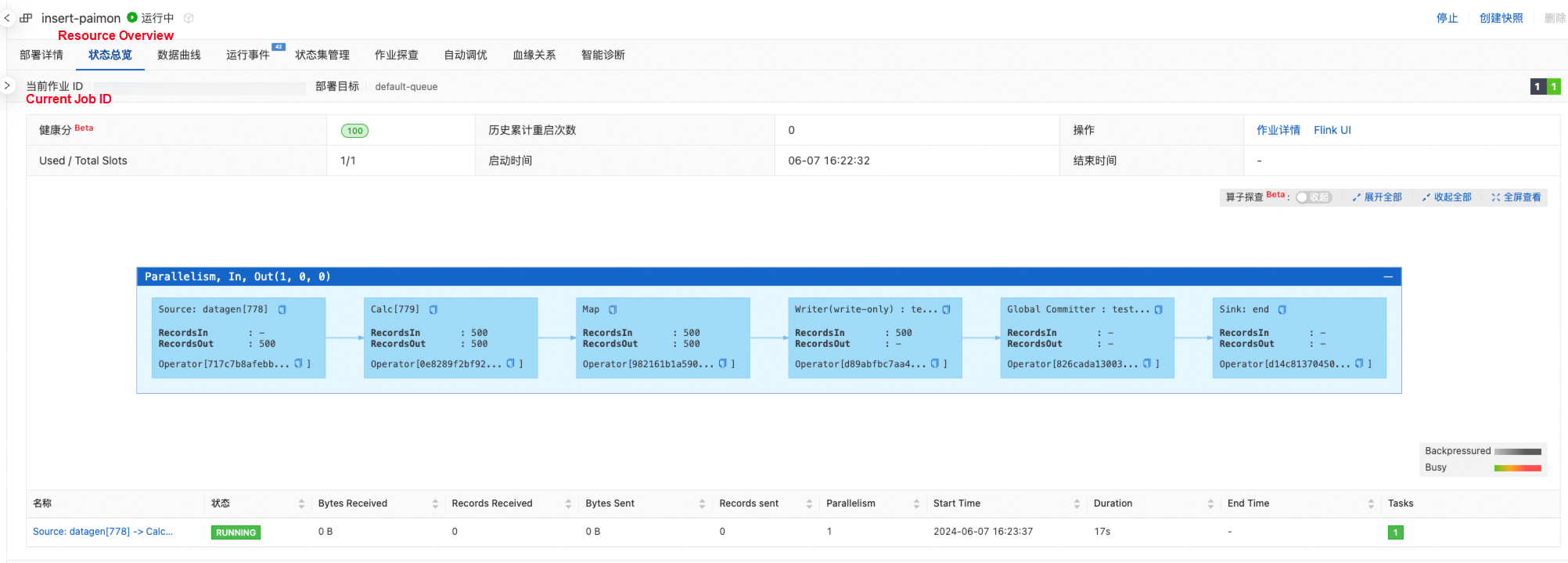

;The following figure shows the job:
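One detail of the INSERT statement above is easy to miss: the hh partition value comes from casting an integer hour to a string, so it is presumably not zero-padded (9 o'clock becomes '9', not '09'). The following Python sketch only mimics that derivation so the resulting partition values can be predicted before writing queries. The function name `partition_values` is hypothetical and not part of Flink or Paimon.

```python
from datetime import datetime


def partition_values(ts: datetime) -> tuple[str, str]:
    """Mimic the dt/hh expressions of the INSERT statement for one timestamp.

    Assumption: casting a DATE to a string yields 'yyyy-MM-dd', and casting
    the integer result of hour(...) to a string yields no leading zero.
    """
    dt = ts.date().isoformat()  # like cast(CURRENT_DATE as string)
    hh = str(ts.hour)           # like cast(hour(LOCALTIMESTAMP) as string)
    return dt, hh


if __name__ == "__main__":
    print(partition_values(datetime(2024, 6, 4, 12, 30)))  # ('2024-06-04', '12')
    print(partition_values(datetime(2024, 6, 4, 9, 5)))    # ('2024-06-04', '9')
```

If this assumption holds, a filter such as hh='09' would match nothing, while hh='9' would match the 09:00 partition.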

EMR Serverless Spark supports interactive queries and job submission. The former can be used for quick query and debugging, while the latter provides full lifecycle workflows such as job development, debugging, scheduling, and O&M.

While data is being written, we can use EMR Serverless Spark to run interactive queries on the Paimon table at any time.

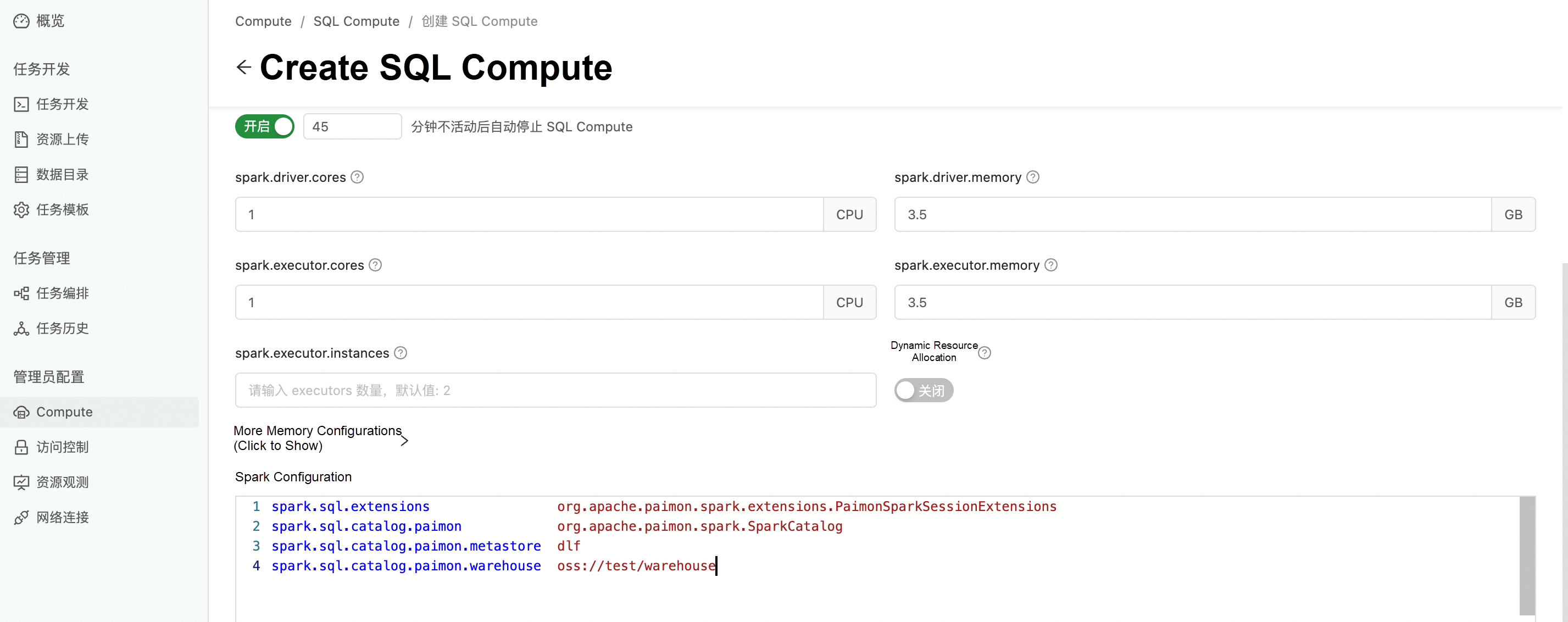

1. Create an SQL Compute and configure parameters related to Paimon.

spark.sql.extensions org.apache.paimon.spark.extensions.PaimonSparkSessionExtensions

spark.sql.catalog.paimon org.apache.paimon.spark.SparkCatalog

spark.sql.catalog.paimon.metastore dlf

spark.sql.catalog.paimon.warehouse oss://test/warehouse

2. Run the SQL Compute, go back to the Drafts page, and perform interactive queries.

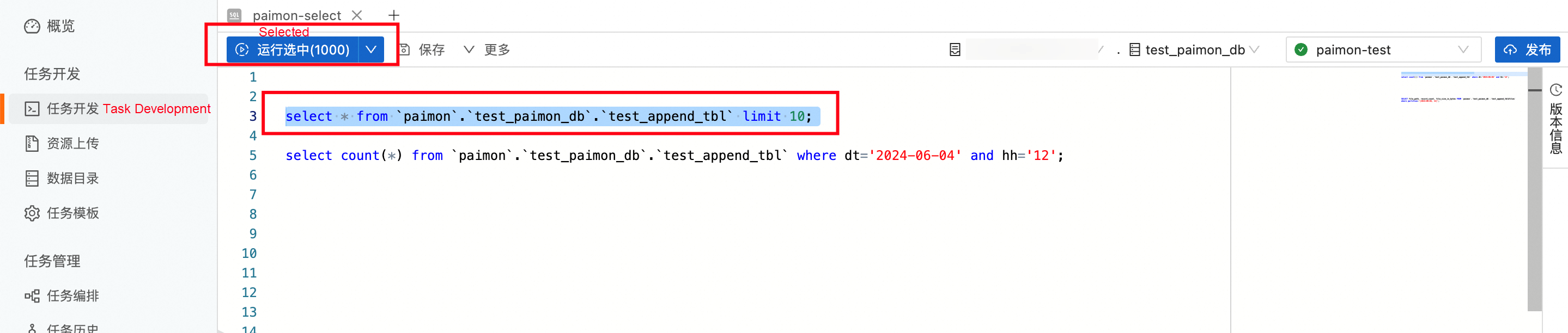
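The catalog name that appears in the queries (the leading `paimon` in `paimon`.`test_paimon_db`.`test_append_tbl`) is taken from the Spark configuration key `spark.sql.catalog.<name>`. The following Python sketch renders the four settings from step 1 for a given catalog name and warehouse path, to make that relationship explicit. The helper names `paimon_spark_conf` and `render` are hypothetical, and the output is only the key/value text to paste into the configuration, not an EMR API call.

```python
def paimon_spark_conf(catalog: str, warehouse: str, metastore: str = "dlf") -> dict[str, str]:
    """Build the Paimon-related Spark settings shown in step 1."""
    prefix = f"spark.sql.catalog.{catalog}"  # <catalog> is the name used in SQL
    return {
        "spark.sql.extensions": "org.apache.paimon.spark.extensions.PaimonSparkSessionExtensions",
        prefix: "org.apache.paimon.spark.SparkCatalog",
        f"{prefix}.metastore": metastore,
        f"{prefix}.warehouse": warehouse,
    }


def render(conf: dict[str, str]) -> str:
    """Render one 'key value' pair per line, as in the article."""
    return "\n".join(f"{key} {value}" for key, value in conf.items())


if __name__ == "__main__":
    print(render(paimon_spark_conf("paimon", "oss://test/warehouse")))
```

Changing the first argument, for example to a made-up name such as `lake`, would change both the configuration keys and the prefix that queries have to use.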

Example 1:

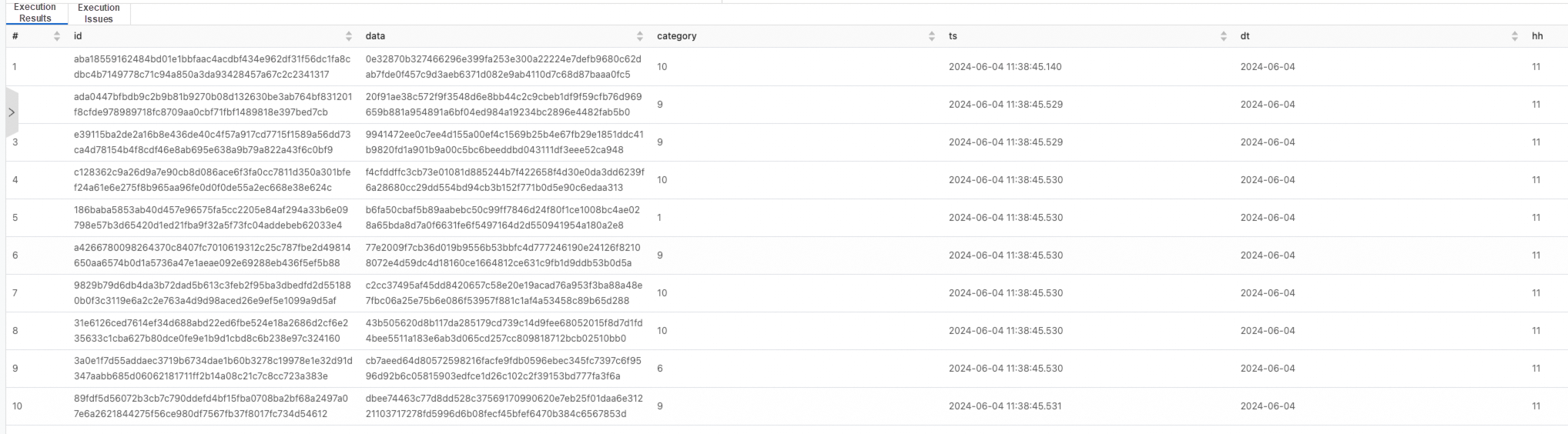

select * from `paimon`.`test_paimon_db`.`test_append_tbl` limit 10;Returned results:

Example 2:

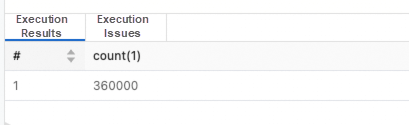

select count(*) from `paimon`.`test_paimon_db`.`test_append_tbl` where dt='2024-06-04' and hh='12';Returned results:

EMR Serverless Spark also supports job scheduling: we publish the developed task, create a workflow, orchestrate the task in it, and publish the workflow. Scheduling policies can also be configured to run the workflow periodically. The following steps use this feature to schedule compaction for the Paimon table.

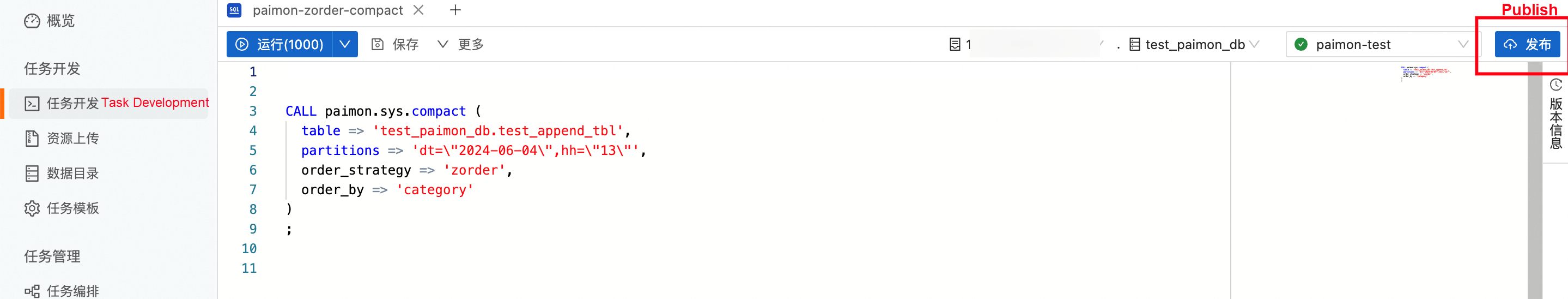

1. On the Drafts page, write a Paimon compaction SQL statement and publish it.

CALL paimon.sys.compact (

table => 'test_paimon_db.test_append_tbl',

partitions => 'dt=\"2024-06-04\",hh=\"12\"',

order_strategy => 'zorder',

order_by => 'category'

)

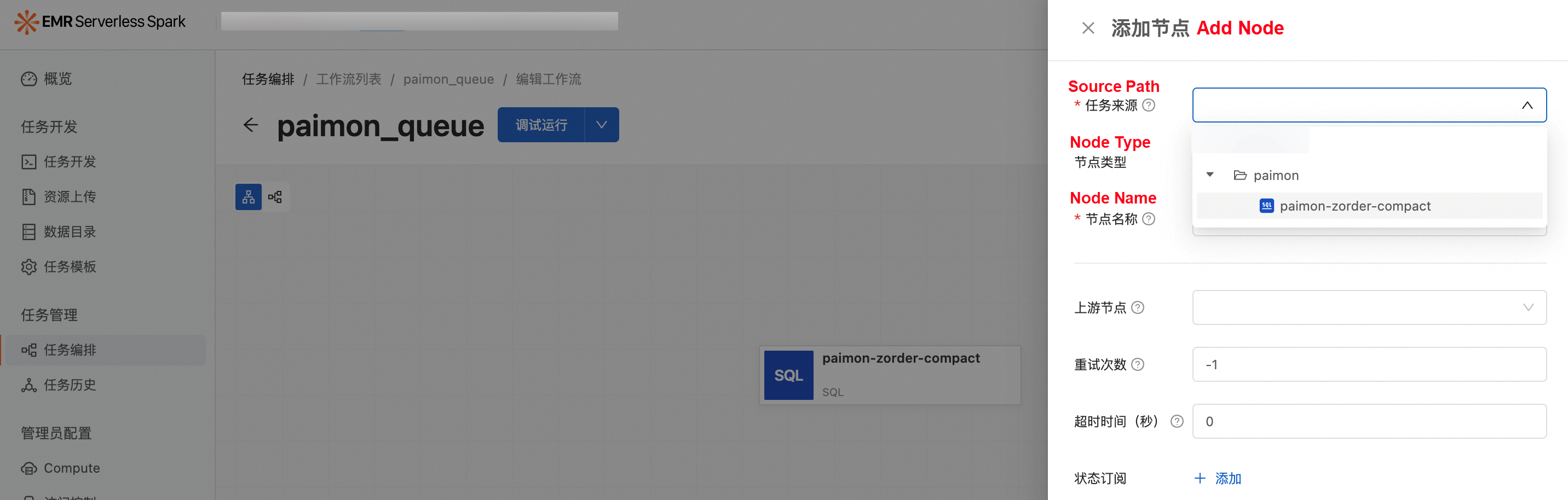

;2. On the Workflows page, create a workflow, add a node, and configure it as the newly published task.

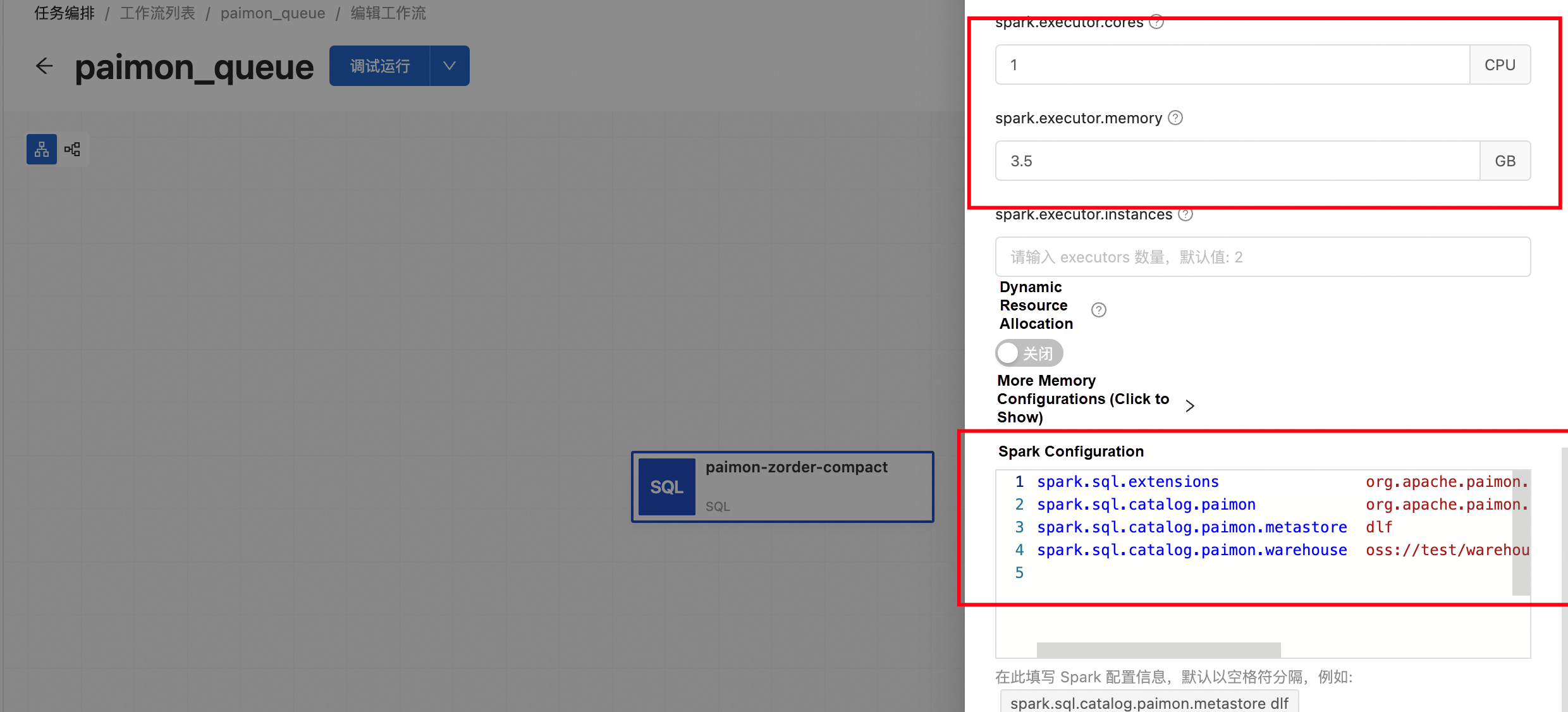

Each node in the workflow can be configured with its dedicated engine version and Spark running configuration.

For this node, we configure Paimon as follows.

spark.sql.extensions org.apache.paimon.spark.extensions.PaimonSparkSessionExtensions

spark.sql.catalog.paimon org.apache.paimon.spark.SparkCatalog

spark.sql.catalog.paimon.metastore dlf

spark.sql.catalog.paimon.warehouse oss://test/warehouse

3. Run the workflow manually.

4. Verify the task. After the workflow run succeeds, we can use the SQL Compute to query the files system table of Paimon and quickly see how the files changed before and after compaction.

For example, query the file information of the dt=2024-06-04/hh=12 partition:

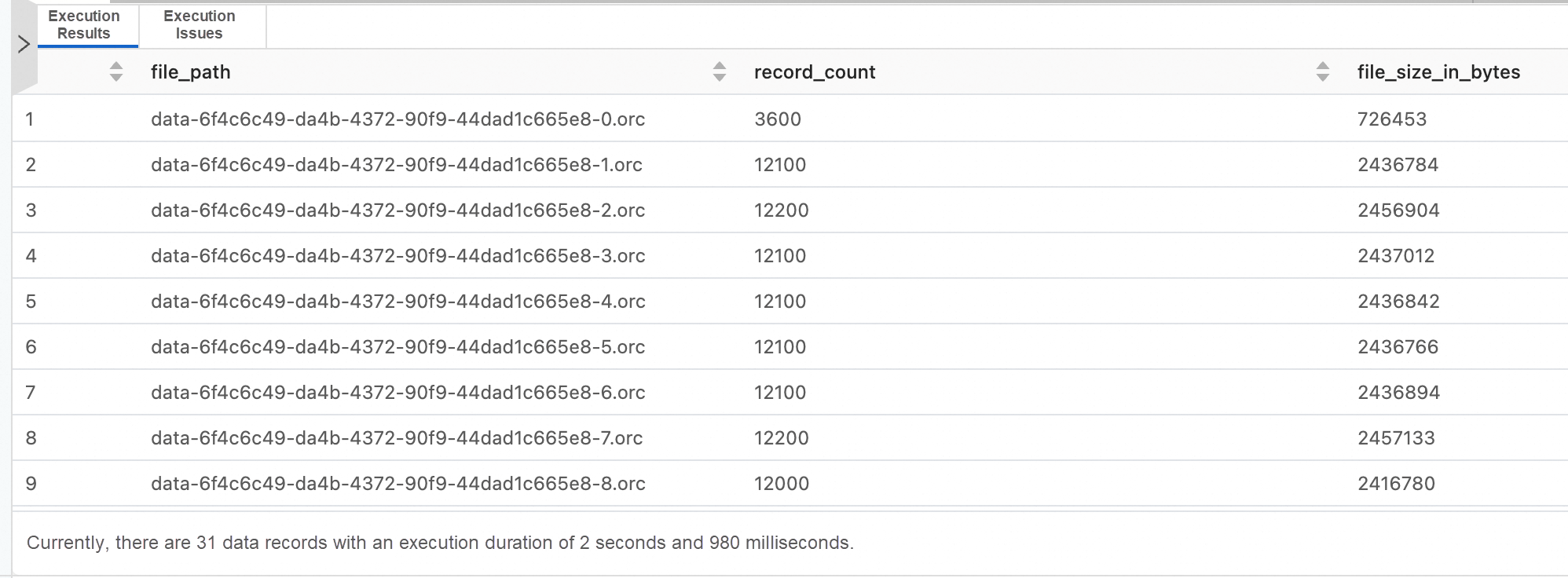

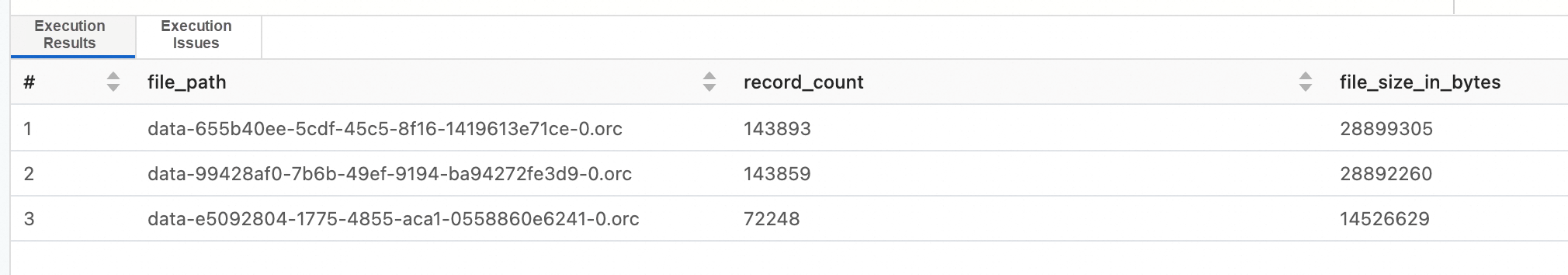

SELECT file_path, record_count, file_size_in_bytes FROM `paimon`.`test_paimon_db`.`test_append_tbl$files` where partition='[2024-06-04, 12]';Returned results (before Compact):

Returned results (after Compact):
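The compaction statement in step 1 and the verification query in step 4 both hard-code the partition dt=2024-06-04/hh=12, and they spell it in two different formats. For a periodically scheduled workflow, the partition would normally move with time. The following Python sketch shows one possible way to render both strings for the previous hour. It is an illustration only: the helper names are hypothetical, the hh value is assumed to be unpadded as in the ingestion job, the clock is assumed to use the same time zone as the Flink job, and EMR Serverless Spark may offer its own way to parameterize tasks.

```python
from datetime import datetime, timedelta


def previous_hour_partition(now: datetime) -> tuple[str, str]:
    """Return (dt, hh) of the hour before `now`; hh has no leading zero."""
    prev = now - timedelta(hours=1)
    return prev.date().isoformat(), str(prev.hour)


def compact_call(table: str, dt: str, hh: str, order_by: str = "category") -> str:
    """Render the CALL statement from step 1 for one partition.

    The double quotes are written without backslashes; inside a
    single-quoted SQL string they need no escaping.
    """
    return (
        "CALL paimon.sys.compact (\n"
        f"  table => '{table}',\n"
        f"  partitions => 'dt=\"{dt}\",hh=\"{hh}\"',\n"
        "  order_strategy => 'zorder',\n"
        f"  order_by => '{order_by}'\n"
        ");"
    )


def files_partition_filter(dt: str, hh: str) -> str:
    """Render the partition value used to filter the $files system table."""
    return f"[{dt}, {hh}]"


if __name__ == "__main__":
    dt, hh = previous_hour_partition(datetime(2024, 6, 4, 13, 5))
    print(compact_call("test_paimon_db.test_append_tbl", dt, hh))
    print(files_partition_filter(dt, hh))
```

Deriving both strings from the same (dt, hh) pair keeps the compaction target and the verification query pointed at the same partition.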

This article demonstrates how to use Alibaba Cloud Realtime Compute for Apache Flink together with EMR Serverless Spark to quickly build Paimon data lake analytics, including data ingestion into OSS, interactive queries, and offline compaction. EMR Serverless Spark is fully compatible with Apache Paimon. It uses the built-in DLF metadata to interoperate with other cloud products such as Realtime Compute for Apache Flink, which provides a complete solution for unified batch and streaming processing. It also supports flexible job running modes and parameter configuration to meet requirements such as real-time analysis and production scheduling.
