By Youdong Zhang

We have seen many online cases and community questions about MongoDB, and most of them concern its memory consumption and usage.

In this article, we hope to address some of the most common queries regarding MongoDB usage, focusing on its memory usage.

After the mongod process starts, it loads its binary and the libraries it depends on into memory, just like any ordinary process. As a DBMS, it is also responsible for a large number of tasks such as client connection management, request processing, database metadata, and the storage engine. All of these tasks allocate and free memory, and by default MongoDB uses Google tcmalloc as its memory allocator. The largest shares of memory are taken by the storage engine and by client connections and request processing.

MongoDB 3.2 and later use WiredTiger as the default storage engine. The upper limit of the memory used by the WiredTiger engine can be set with cacheSizeGB, and the general recommendation is about 60% of the system's usable memory (close to the default in MongoDB 3.2).

For example, if cacheSizeGB is set to 10 GB, you can think of it as meaning that the total memory the WiredTiger engine allocates through tcmalloc will not exceed 10 GB. To keep memory use under control, WiredTiger starts evicting pages when memory use approaches certain thresholds, so that user requests are not blocked by a full cache.

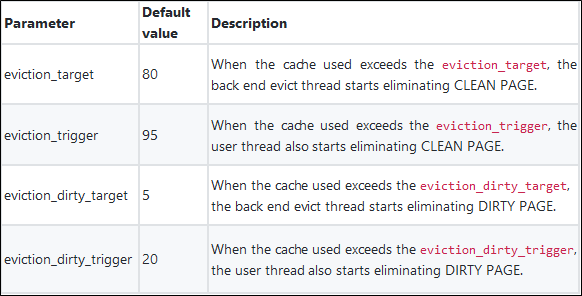

At present, four configurable parameters control the eviction policy of the WiredTiger storage engine (in general they do not need to be changed). They set target and trigger thresholds for overall cache usage and for dirty data in the cache.

Under these rules, a MongoDB instance that is operating normally will generally keep cache usage at or below 0.8 * cacheSizeGB, and occasionally exceeding this is not a major problem. If used >= 95% or dirty >= 20% occurs and persists for some time, eviction pressure is very high, and user request threads will be blocked and forced to take part in page eviction. Request latency then increases. At this point, consider adding memory or switching to faster disks to improve I/O capability.
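The thresholds described above can be turned into a simple health check. The following is a minimal sketch, not part of MongoDB: the function name `cache_pressure` and its labels are hypothetical, and it uses only the 80%, 95%, and 20% figures quoted in the text. The inputs are assumed to come from cache statistics such as those reported under `db.serverStatus().wiredTiger.cache`.

```python
GIB = 1024 ** 3


def cache_pressure(cache_size_bytes, used_bytes, dirty_bytes):
    """Classify WiredTiger cache pressure using the thresholds quoted in the text.

    Hypothetical helper for illustration only.
    """
    if cache_size_bytes <= 0:
        raise ValueError("cache_size_bytes must be positive")
    used_ratio = used_bytes / cache_size_bytes
    dirty_ratio = dirty_bytes / cache_size_bytes
    # used >= 95% or dirty >= 20%: request threads are pulled into eviction.
    if used_ratio >= 0.95 or dirty_ratio >= 0.20:
        return "critical"
    # Above the 80% level: acceptable if it only happens occasionally.
    if used_ratio > 0.80:
        return "elevated"
    return "normal"


if __name__ == "__main__":
    # A 10 GB cache with 7 GB used and 0.5 GB dirty.
    print(cache_pressure(10 * GIB, 7 * GIB, GIB // 2))
```

A "critical" result that persists across several samples is the situation in which the text suggests adding memory or faster disks; a single sample is not enough to draw that conclusion.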

The MongoDB driver establishes TCP connections to the mongod process and sends database requests and receives responses over them. Besides the socket metadata that the TCP protocol stack maintains for each connection, every connection has a read buffer and a write buffer, which are used to receive and send network packets. The buffer sizes are controlled by sysctl parameters such as those below; the three values are the minimum, default, and maximum buffer size respectively. A detailed explanation of these parameters is easy to find online.

net.ipv4.tcp_wmem = 8192 65536 16777216

net.ipv4.tcp_rmem = 8192 87380 16777216

On Red Hat 7 (this level of detail is not available on Red Hat 6), you can inspect the buffer information of each connection with ss -m. An example is shown below. The read and write buffers are 2357478 bytes and 2626560 bytes respectively, roughly 2 MB each, so 500 such connections can take up 1 GB of memory or more. How much the buffers actually occupy depends on the size of the packets sent and received on the connection, the network quality, and so on. If packets are small, the buffers will not grow much; if packets are relatively large, the buffers can easily grow a lot.

tcp ESTAB 0 0 127.0.0.1:51601 127.0.0.1:personal-agent

skmem:(r0,rb2357478,t0,tb2626560,f0,w0,o0,bl0)

In addition to the memory overhead in the protocol stack, mongod starts a separate thread for each connection, dedicated to processing the requests on that connection. mongod limits the stack of each connection-handling thread to at most 1 MB, and actual usage is ordinarily a few tens of KB. The actual overhead of these thread stacks can be seen through the proc file system. Besides the request-processing threads, mongod also runs a set of background threads, for example for master-slave synchronization, periodic journal flushing, TTL, and eviction. Each of these threads gets the default maximum thread stack size given by ulimit -s (typically 10 MB). Because the number of such threads is relatively fixed, the memory they occupy is also fairly predictable.

# cat /proc/$pid/smaps

7f563a6b2000-7f563b0b2000 rw-p 00000000 00:00 0

Size: 10240 kB

Rss: 12 kB

Pss: 12 kB

Shared_Clean: 0 kB

Shared_Dirty: 0 kB

Private_Clean: 0 kB

Private_Dirty: 12 kB

Referenced: 12 kB

Anonymous: 12 kB

AnonHugePages: 0 kB

Swap: 0 kB

KernelPageSize: 4 kB

MMUPageSize: 4 kB

When a thread processes a request, it must allocate temporary buffers to hold the received data packet, the context created for the request (OperationContext), the intermediate results of processing (e.g. sorting, aggregation), and the final response.
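The per-connection overhead discussed in this section can be estimated roughly from the ss -m output. The sketch below is illustrative only: `parse_skmem` and `estimate_connection_memory` are hypothetical helpers, the 64 KB resident stack figure is an assumption standing in for "a few tens of KB", and the rb and tb values are treated as buffer sizes, as the text does, so the result is an upper bound rather than a measurement.

```python
import re


def parse_skmem(line):
    """Parse the skmem:(...) field of an `ss -m` line into a dict of integers."""
    match = re.search(r"skmem:\(([^)]*)\)", line)
    if match is None:
        raise ValueError("no skmem field found")
    fields = {}
    for item in match.group(1).split(","):
        # Each item is a short lowercase key followed by a number, e.g. rb2357478.
        key, value = re.fullmatch(r"([a-z]+)(\d+)", item.strip()).groups()
        fields[key] = int(value)
    return fields


def estimate_connection_memory(skmem, connections, stack_rss_kb):
    """Upper-bound bytes for N connections: read + write buffers plus thread stack."""
    per_connection = skmem["rb"] + skmem["tb"] + stack_rss_kb * 1024
    return per_connection * connections


if __name__ == "__main__":
    sample = "skmem:(r0,rb2357478,t0,tb2626560,f0,w0,o0,bl0)"
    skmem = parse_skmem(sample)
    # stack_rss_kb=64 is an assumed value, not taken from the article.
    total = estimate_connection_memory(skmem, connections=500, stack_rss_kb=64)
    print(f"{total / 1024 ** 3:.2f} GiB")
```

Because real connections rarely fill both buffers at once, the figure is best read as a ceiling when planning how many connections a given memory quota can tolerate.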

When a large number of requests arrive concurrently, you may observe the memory used by mongod rise and then gradually fall after the number of requests declines. This is due to the tcmalloc memory management policy. For performance, tcmalloc gives each thread its own local free cache and also maintains a central free cache. When memory is requested, tcmalloc searches the thread-local cache first and then the central cache; only if no usable memory is found does it allocate from the heap. When memory is freed, it goes back into these caches first, and tcmalloc slowly returns it to the OS in the background. By default, tcmalloc caches at most min(1 GB, 1/8 * system_memory) of memory. This value can be set through setParameter.tcmallocMaxTotalThreadCacheBytesParameter, but changing it is generally not recommended; optimize at the access level as far as possible.
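The default cache limit quoted above is a simple formula. As a minimal sketch (the function name `default_tcmalloc_cache_limit` is hypothetical, and 1 GB is taken to mean 1 GiB, which matches the max_total_thread_cache_bytes value of 1073741824 in the sample output in this article):

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def default_tcmalloc_cache_limit(system_memory_bytes):
    """Return min(1 GB, 1/8 * system memory), the default cap quoted in the text."""
    return min(1 * GIB, system_memory_bytes // 8)


if __name__ == "__main__":
    for mem_gib in (4, 8, 64):
        limit = default_tcmalloc_cache_limit(mem_gib * GIB)
        print(f"{mem_gib} GiB RAM -> cache limit {limit // MIB} MiB")
```

On hosts with 8 GiB of memory or more, the formula always yields the 1 GB ceiling, so the limit only shrinks on small machines.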

MongoDB exposes several parameters for tuning the tcmalloc cache management policy, but adjustment is generally not required. If you clearly understand how tcmalloc works and what the parameters mean, you can tune them in a targeted way. The tcmalloc memory status of MongoDB can be viewed with db.serverStatus().tcmalloc; see the tcmalloc documentation for the meaning of each field. Pay particular attention to total_free_bytes, which tells you how much memory tcmalloc is caching itself and has not returned to the OS.
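To show how the fields of db.serverStatus().tcmalloc relate to each other, here is a small sketch that works on a plain dictionary holding the figures from the sample output in this section. The helper name `tcmalloc_summary` is hypothetical, and the interpretation of the page heap fields (free pages still mapped versus pages already released to the OS) follows the usual tcmalloc meaning and should be treated as an assumption here.

```python
def tcmalloc_summary(stats):
    """Summarize how much heap is in use, cached by tcmalloc, or already released."""
    generic = stats["generic"]
    tc = stats["tcmalloc"]
    return {
        # Bytes the application (mongod) currently has allocated.
        "allocated": generic["current_allocated_bytes"],
        # Free bytes held in the thread, central, and transfer caches.
        "cached_free": tc["total_free_bytes"],
        # Free pages in the page heap that are still mapped.
        "pageheap_free": tc["pageheap_free_bytes"],
        # Pages already handed back to the OS.
        "released_to_os": tc["pageheap_unmapped_bytes"],
        # Everything in the heap that is not allocated to the application.
        "not_allocated": generic["heap_size"] - generic["current_allocated_bytes"],
    }


# Figures copied from the sample output in this article.
sample = {
    "generic": {"current_allocated_bytes": 2545084352, "heap_size": 2687029248},
    "tcmalloc": {
        "pageheap_free_bytes": 34529280,
        "pageheap_unmapped_bytes": 21135360,
        "total_free_bytes": 86280256,
        "central_cache_free_bytes": 84363448,
        "transfer_cache_free_bytes": 859008,
        "thread_cache_free_bytes": 1057800,
    },
}

if __name__ == "__main__":
    for name, value in tcmalloc_summary(sample).items():
        print(f"{name}: {value / 1024 ** 2:.1f} MiB")
```

In the sample, total_free_bytes equals the sum of the central, transfer, and thread cache figures, and the unallocated part of the heap equals the cached bytes plus the two page heap figures.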

mymongo:PRIMARY> db.serverStatus().tcmalloc

{

"generic" : {

"current_allocated_bytes" : NumberLong("2545084352"),

"heap_size" : NumberLong("2687029248")

},

"tcmalloc" : {

"pageheap_free_bytes" : 34529280,

"pageheap_unmapped_bytes" : 21135360,

"max_total_thread_cache_bytes" : NumberLong(1073741824),

"current_total_thread_cache_bytes" : 1057800,

"total_free_bytes" : 86280256,

"central_cache_free_bytes" : 84363448,

"transfer_cache_free_bytes" : 859008,

"thread_cache_free_bytes" : 1057800,

"aggressive_memory_decommit" : 0,

...

}

}

Set cacheSizeGB based on the memory quota allocated to mongod; about 60% of the quota is a reasonable value.

The memory overhead of TCP connections on mongod was analyzed in detail above. Many people misunderstand concurrency and believe that "the higher the number of concurrent connections, the higher the QPS of the database." In fact, in the network models of most databases, an excessively high number of connections increases back-end memory pressure and context-switching overhead, which lowers performance.

When a MongoDB driver connects to mongod, it maintains a connection pool (typically 100 connections by default). When a large number of clients access the same mongod at once, consider reducing the connection pool size of each client. On the mongod side, you can cap the number of concurrent connections with the net.maxIncomingConnections setting to keep the database from being overloaded.
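The trade-off between client count and pool size is simple arithmetic. The following sketch uses hypothetical helper names and example numbers (50 clients, a limit of 2000, 100 reserved connections) that are assumptions for illustration, not recommendations; only the default pool size of 100 comes from the text.

```python
def worst_case_connections(client_count, pool_size=100):
    """Connections mongod may see if every client fills its pool."""
    return client_count * pool_size


def max_pool_size_per_client(max_incoming_connections, client_count, reserved=0):
    """Largest per-client pool size that keeps the total within the server limit.

    `reserved` leaves headroom for other connections, such as monitoring or
    administrative sessions (an assumption of this sketch).
    """
    if client_count <= 0:
        raise ValueError("client_count must be positive")
    usable = max_incoming_connections - reserved
    return max(usable // client_count, 0)


if __name__ == "__main__":
    clients = 50
    print(worst_case_connections(clients))
    print(max_pool_size_per_client(2000, clients, reserved=100))
```

With the default pool size, 50 clients could open up to 5000 connections, so either the per-client pool or the server-side limit has to be lowered to stay within a 2000-connection budget.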

The official documentation recommends the following; the gist is that you should configure swap so that mongod is not OOM-killed when it uses too much memory.

For the WiredTiger storage engine, given sufficient memory pressure, WiredTiger may store data in swap space.

Assign swap space for your systems. Allocating swap space can avoid issues with memory contention and can prevent the OOM Killer on Linux systems from killing mongod.

There are advantages and disadvantages to both enabling and disabling swap. If swap is enabled, the system uses swap space on disk to relieve memory pressure when that pressure is high. The entire database service then slows down, and the extent of the slowdown is unpredictable. If swap is disabled, the OOM killer kills the process once total memory use exceeds the machine's memory limit, which is effectively a signal that you may need to add memory or optimize how the database is accessed.

Whether to enable swap is really a choice between a "good death" and "barely living". For important business scenarios, you should plan enough memory for the database up front, and when memory becomes insufficient, "expanding capacity in a timely manner" is better than being "uncontrollably slow".
