By Xu Xiaobin, Senior Technical Expert at Alibaba Cloud

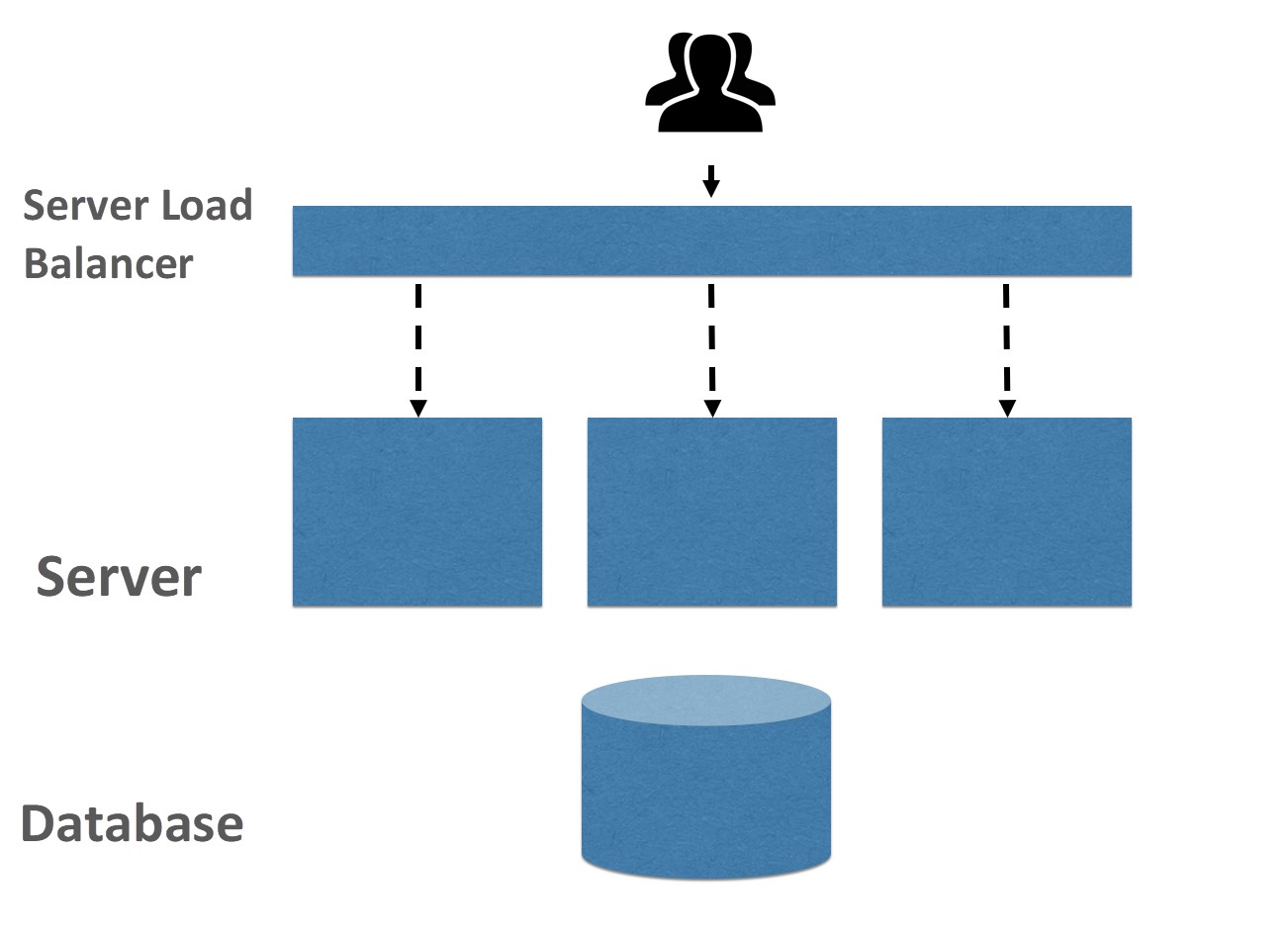

More than a decade ago, standalone applications were the mainstream application architecture. A standalone application consists of a server and a database. In this architecture, O&M personnel carefully maintained the server to ensure service availability.

As businesses grew, this simple type of application architecture faced two problems. First, if the single server failed, for example, due to hardware damage, the service became unavailable. Second, as the service volume grew, the resources of the single server would soon be unable to cope with all the traffic.

The most efficient way to resolve these two problems is to add a Server Load Balancer (SLB) at the traffic ingress. This way, you can deploy a standalone application on multiple servers to prevent single points of failure. In addition, this gives the standalone application the ability to scale horizontally.
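To make the idea concrete, here is a minimal sketch of what a load balancer does at the traffic ingress: it rotates requests across several copies of the same application and skips any server marked unhealthy. The class name `RoundRobinBalancer`, the server names, and the round-robin policy are all assumptions made for illustration. This is not how SLB is implemented.

```python
from typing import Dict, List


class RoundRobinBalancer:
    """Toy load balancer: rotates requests across healthy servers (illustrative only)."""

    def __init__(self, servers: List[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = list(servers)
        # Every server starts out healthy; a real balancer would probe them.
        self._healthy: Dict[str, bool] = {s: True for s in self._servers}
        self._next = 0

    def mark_down(self, server: str) -> None:
        """Record that a server failed, for example due to hardware damage."""
        self._healthy[server] = False

    def mark_up(self, server: str) -> None:
        """Record that a server has recovered."""
        self._healthy[server] = True

    def pick(self) -> str:
        """Return the next healthy server, skipping unhealthy ones."""
        for _ in range(len(self._servers)):
            server = self._servers[self._next]
            self._next = (self._next + 1) % len(self._servers)
            if self._healthy[server]:
                return server
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    print([balancer.pick() for _ in range(3)])
    balancer.mark_down("app-2")  # simulate a single-server failure
    print([balancer.pick() for _ in range(4)])
```

Because the service keeps answering after `app-2` is marked down, the single point of failure is gone. Adding another entry to the server list is the horizontal scaling step.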

Standalone Architecture - Horizontal Scaling

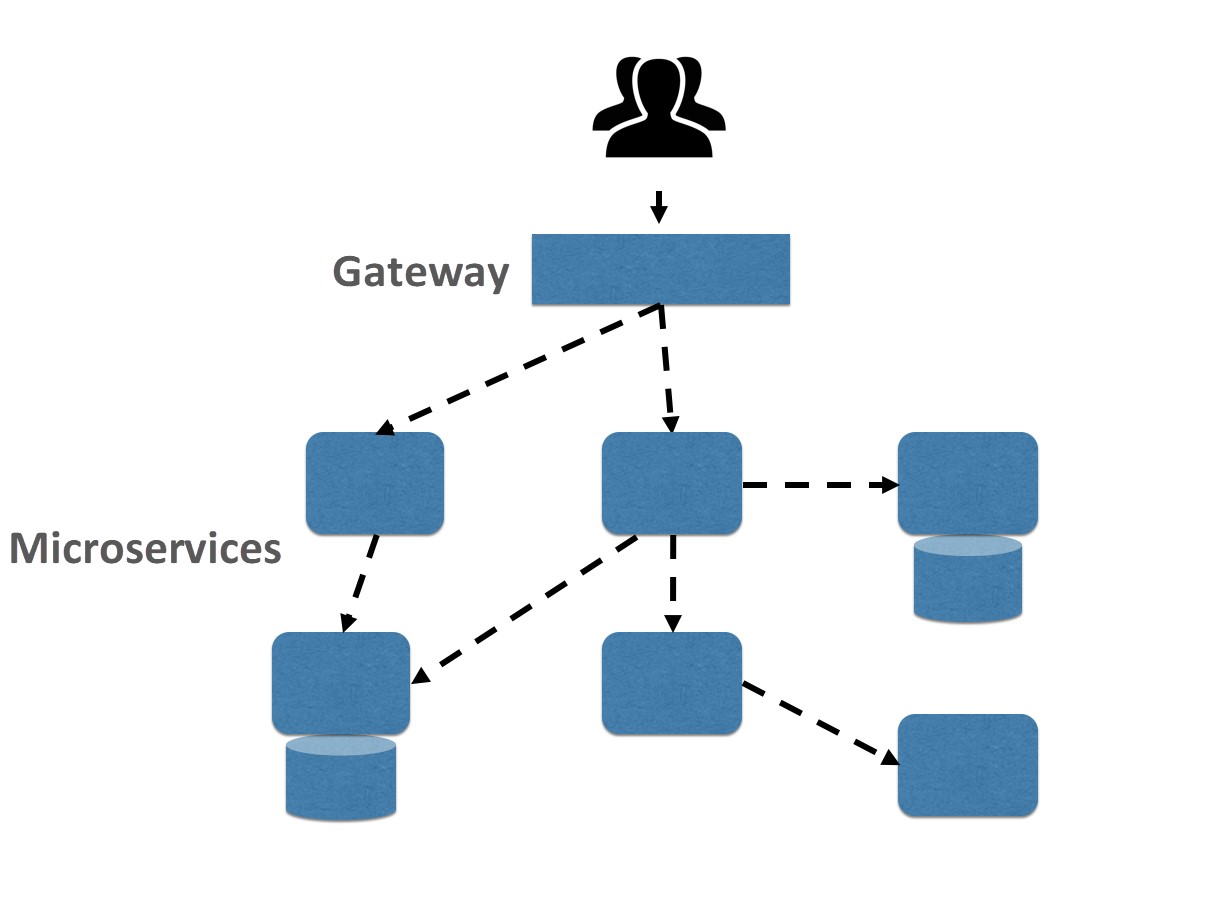

As business volumes continued to grow, more R&D engineers joined the team and developed features for standalone applications. The code of standalone applications did not have clear physical boundaries. As a result, conflicts between changes soon became frequent. Resolving them required manual coordination and a large number of merge-conflict fixes, which sharply decreased R&D efficiency.

To resolve these problems, we started to split standalone applications into microservice applications that could be developed, tested, and deployed independently. Services of the microservice applications communicated with each other through APIs, using HTTP, gRPC, or Dubbo. By splitting up standalone applications based on the Bounded Context concept in domain-driven design, the microservices model could significantly improve the R&D efficiency of mid-size and large teams.

After applications evolved from a standalone architecture to a microservices model, the applications became physically distributed by default. At this time, application architects had to face the new challenges posed by the distributed mode. In this process, we started to adopt certain distributed services and frameworks. For example, distributed services included the cache service Redis, the configuration service ACM, the state coordination service ZooKeeper, and the message service Kafka. Distributed frameworks included communication frameworks, such as gRPC or Dubbo, and distributed tracing systems.

In addition to the challenges posed by distributed environments, the microservices model also created new challenges for O&M. Previously, developers only needed to maintain one application. Now, developers may have to maintain ten or more applications. This multiplied the work involved in security patch upgrades, capacity evaluation, fault diagnosis, and other tasks. Therefore, distribution standards, lifecycle standards, monitoring standards, and auto scaling capability became more important.

Microservices Model

A simple way to understand cloud-native is this: an architecture is cloud-native if it grows up on the cloud. "Growing up on the cloud" does not simply mean using the IaaS-layer services of the cloud, such as basic computing and storage services like ECS and OSS. Instead, cloud-native architectures use distributed services on the cloud, such as Redis and Kafka, which directly affect the business architecture. Distributed services are necessary for the microservices model. Previously, developers developed such services on their own or maintained them based on open-source versions. In the cloud-native era, business teams can directly use cloud services.

The other two technologies that I should mention are Docker and Kubernetes. Docker standardizes application distribution. Whether an application is written in Spring Boot or in Node.js, it is distributed as an image. Kubernetes defines application lifecycle standards based on Docker technology. The entire process from start to publishing, health checks, and unpublishing is uniformly standardized.

With its application distribution standards and lifecycle standards, the cloud can provide standardized application hosting services, including application version management, publishing, monitoring, and self-recovery. In this way, the R&D of a stateless application would not be affected by the failure of an underlying physical node because the application hosting service could automatically perform failover based on the standard application lifecycle. Specifically, the application hosting service would disable the application containers on the faulty physical node and enable the same number of application containers on a new physical node. As you can see, cloud-native provides a wide range of benefits.
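The failover step described above can be sketched as a small function. This is a simplified model under stated assumptions: the function name `fail_over`, the node names, and the choice to track only a container count per node are hypothetical. A real hosting platform would also handle scheduling constraints, health probes, and traffic draining.

```python
from typing import Dict


def fail_over(containers_per_node: Dict[str, int],
              failed_node: str,
              new_node: str) -> Dict[str, int]:
    """Return a new placement in which the containers that ran on
    failed_node are started, in the same number, on new_node.

    Only counts are tracked because the application is stateless:
    any fresh container started from the same image is an equal substitute.
    """
    if failed_node not in containers_per_node:
        raise KeyError(f"unknown node: {failed_node}")

    # Drop the faulty node; its containers are considered disabled.
    placement = {node: count
                 for node, count in containers_per_node.items()
                 if node != failed_node}

    # Start the same number of containers on the replacement node.
    lost = containers_per_node[failed_node]
    placement[new_node] = placement.get(new_node, 0) + lost
    return placement


if __name__ == "__main__":
    before = {"node-a": 2, "node-b": 3}
    after = fail_over(before, failed_node="node-a", new_node="node-c")
    print(after)
    # Total capacity is unchanged, so the business team sees no difference.
    print(sum(before.values()) == sum(after.values()))
```

The input placement is left unmodified and the total container count is preserved, which is the property that lets the application keep serving traffic while the underlying node is replaced.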

It allows application hosting services to monitor the runtime data of an application, including the traffic concurrency, CPU load, and memory usage. Based on these metrics, the business team can configure scaling rules. Then, the platform executes these rules to increase or decrease the number of containers based on the actual business traffic. This is the fundamental feature of cloud-native: auto scaling. This feature helps you avoid a waste of resources during off-peak hours, allowing you to reduce costs and improve O&M efficiency.
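A scaling rule of this kind can be sketched as a proportional calculation: scale the container count by the ratio of the observed metric to its target, then clamp the result to configured bounds. The function name, parameters, and default bounds below are assumptions for illustration. The formula is similar in spirit to the one documented for the Kubernetes Horizontal Pod Autoscaler, but this is not any particular platform's implementation.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Compute how many containers are needed to bring the average metric
    (for example, CPU load in percent) back to its target value."""
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")

    # Proportional rule: twice the target load calls for twice the containers.
    raw = math.ceil(current_replicas * current_metric / target_metric)

    # Clamp so that the platform never scales to zero or beyond the budget.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Peak hours: 4 containers at 90% CPU with a 60% target.
    print(desired_replicas(4, 90.0, 60.0))
    # Off-peak hours: 4 containers at 15% CPU with a 60% target.
    print(desired_replicas(4, 15.0, 60.0))
```

In the off-peak case the rule shrinks the deployment to a single container, which is where the cost savings come from.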

As application architectures evolved, R&D and O&M personnel gradually freed themselves from manually dealing with machines and allowed the platform system to manage the machines instead, which reduced their workloads. This is a simple explanation of the concept of serverless.

Xu Xiaobin is a Senior Technical Expert at Alibaba Cloud. Currently, he is responsible for the building of Alibaba Group's Serverless R&D and O&M platforms. Previously, he worked on the AliExpress microservices model, Spring Boot framework, and R&D efficiency improvement. He was also the author of "Practices of Maven" and once maintained Maven Central Repository.
