This article uses an example to demonstrate how to use Flink CDC and Doris Flink Connector to monitor data from MySQL databases and store data in the tables corresponding to Doris data warehouses in real-time. The main contents include:

Change Data Capture (CDC) can synchronize incremental change records of source databases to one or more data destinations (Sinks). You can perform certain processing on data during synchronization, such as GROUP BY and multi-table JOIN.

For example, for an e-commerce platform, user orders are written to a source database in real-time. Department A needs to aggregate the real-time data per minute and save it to Redis for a query. Department B needs to temporarily store the data of the current day to Elasticsearch for report display. Department C needs to store the data in ClickHouse for a real-time warehouse. Over time, subsequent departments D and E will have data analysis requirements. In this scenario, the traditional copy distribution method for multiple copies is inflexible, while CDC can implement one change record and then process and deliver it to multiple destinations in real-time.

CDC has many technical solutions. Currently, the mainstream implementation mechanisms in the industry can be divided into two types:

CDC Based on Queries

CDC Based on Logs

Flink has added the CDC feature in version 1.11, which is referred to as Change Data Capture. The name is a bit messy. Let's look at the contents of CDC from the previous data architecture.

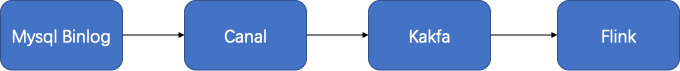

The preceding is the previous mysql binlog log processing process. For example, canal listens to binlog and writes logs to Kafka. Apache Flink consumes Kafka data in real-time to synchronize MySQL data or other content. It can be divided into the following phases:

The overall processing link is long, and many components need to be used. Apache Flink CDC can obtain a binlog from the database for downstream business computing and analysis.

It provides MySQL CDC 2.0. The core features include:

There are test documents on the Internet showing that the customer table in the TPC-DS dataset was tested. The Flink version is 1.13.1, the customer table has 65 million pieces of data, the source concurrency is 8, and in the full read phase:

Flink Doris Connector is an extension of the Doris community to use Flink to read and write Doris data tables. Currently, Doris supports Flink 1.11.x, 1.12.x, and 1.13.x. Scala: 2.12.x.

Currently, the Flink Doris connector controls warehousing through two parameters:

These two parameters work at the same time, and the condition that enters first triggers the operation of writing the Doris table.

Note: The http v2 version is to be enabled, and the enable_http_server_v2=true is configured in fe.conf. At the same time, since the be list is obtained through fe http rest api, the users that need to be configured have admin permissions.

First of all, we need to compile Doris's Flink connector. You can download it here.

Note: Since Doris's Flink Connector is developed based on Scala 2.12.x, select the version corresponding to Scala 2.12 when using Flink. If you use the preceding address to download the corresponding jar, ignore the following compilation content.

Compile under the Docker compilation environment apache/incubator-doris:build-env-1.2 of Doris. Since the JDK version under 1.3 is version 11, there will be compilation problems.

Execute in the source directory extension/flink-doris-connector/:

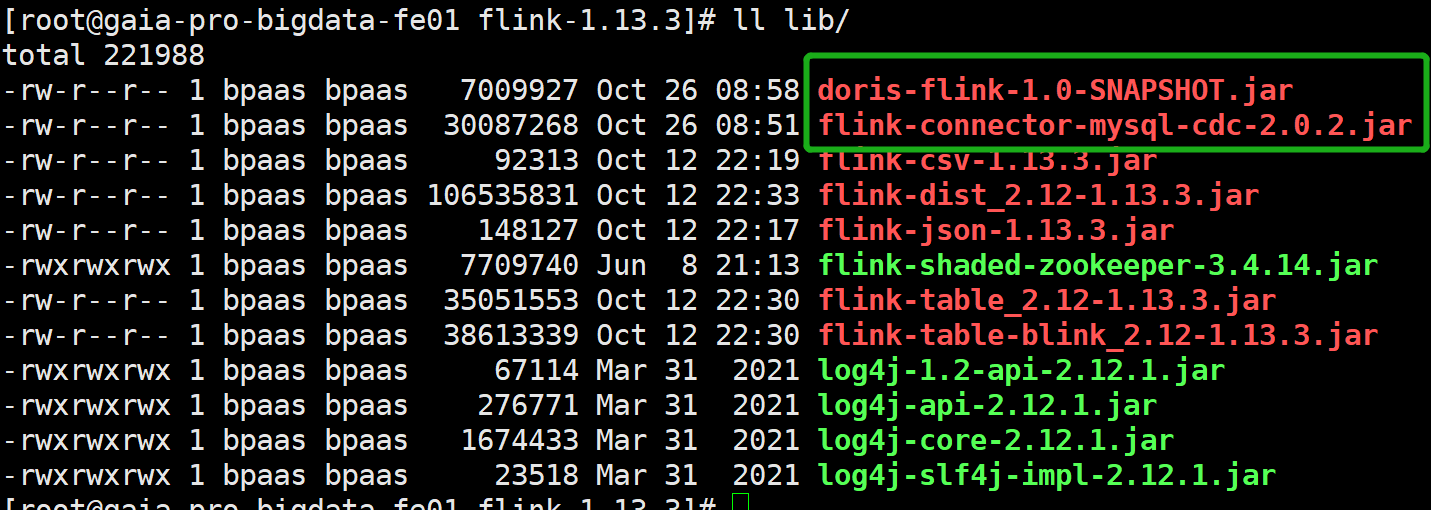

sh build. ShAfter the compilation is successful, a file doris-flink-1.0.0-SNAPSHOT.jar is generated in the output/ directory. Copy this file to the Flink ClassPath to use the Flink-Doris-Connector. For example, for a Flink that runs in Local mode, put this file under the jars/ folder. If Flink runs in Yarn cluster mode, put this file in the pre-deployment package.

For Flink 1.13.x Version Adaptation Issues

<properties>

<scala.version>2.12</scala.version>

<flink.version>1.11.2</flink.version>

<libthrift.version>0.9.3</libthrift.version>

<arrow.version>0.15.1</arrow.version>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<doris.home>${basedir}/../../</doris.home>

<doris.thirdparty>${basedir}/../../thirdparty</doris.thirdparty>

</properties>You need to change the flink.version here to the same version as your Flink cluster and edit it again.

Here, we do it the Flink SQL Client way.

The software version used:

First, download and install Flink

The local standalone mode is used here:

# wget https://dlcdn.apache.org/flink/flink-1.12.5/flink-1.12.5-bin-scala_2.12.tgz

# tar zxvf flink-1.12.5-bin-scala_2.12.tgzDownload the Flink CDC related JAR package

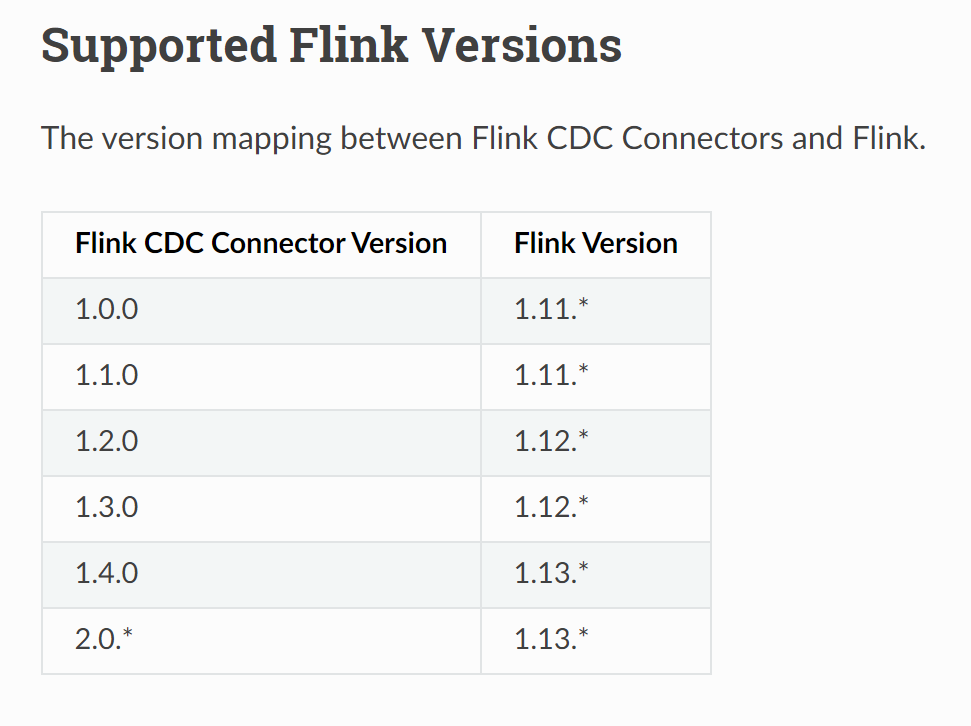

Note: The correspondence between the versions of Flink CDC and Flink.

Here, we use the local standalone mode:

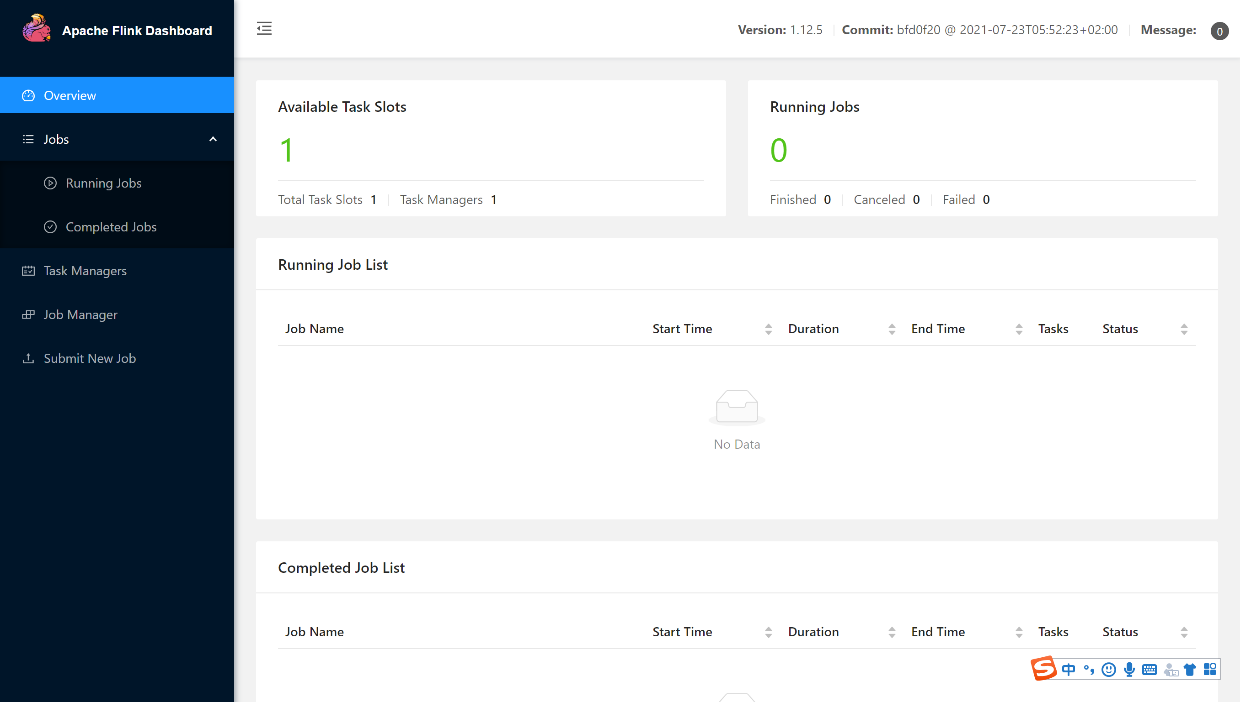

# bin/start-cluster.sh

Starting cluster.

Starting standalonesession daemon on host doris01.

Starting taskexecutor daemon on host doris01.We start the Flink cluster through web access (the default port is 8081). You can see the cluster starts normally.

Please refer to this link (article in Chinese) for specific methods of installing and deploying Doris:

1. Install MySQL and use Docker to install and configure MySQL. Please refer to this link (article in Chinese) for details

2. Open MySQL binlog, enter the Docker container to modify the /etc/my.cnf file, and add the following content under [mysqld]:

log_bin=mysql_bin

binlog-format=Row

server-id=1Then, restart MySQL:

systemctl restart mysqld3. Create a MySQL database table:

CREATE TABLE `test_cdc` (

`id` int NOT NULL AUTO_INCREMENT,

`name` varchar(255) DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB CREATE TABLE `doris_test` (

`id` int NULL COMMENT "",

`name` varchar(100) NULL COMMENT ""

) ENGINE=OLAP

UNIQUE KEY(`id`)

COMMENT "OLAP"

DISTRIBUTED BY HASH(`id`) BUCKETS 1

PROPERTIES (

"replication_num" = "3",

"in_memory" = "false",

"storage_format" = "V2"

);./bin/sql-client.sh embedded

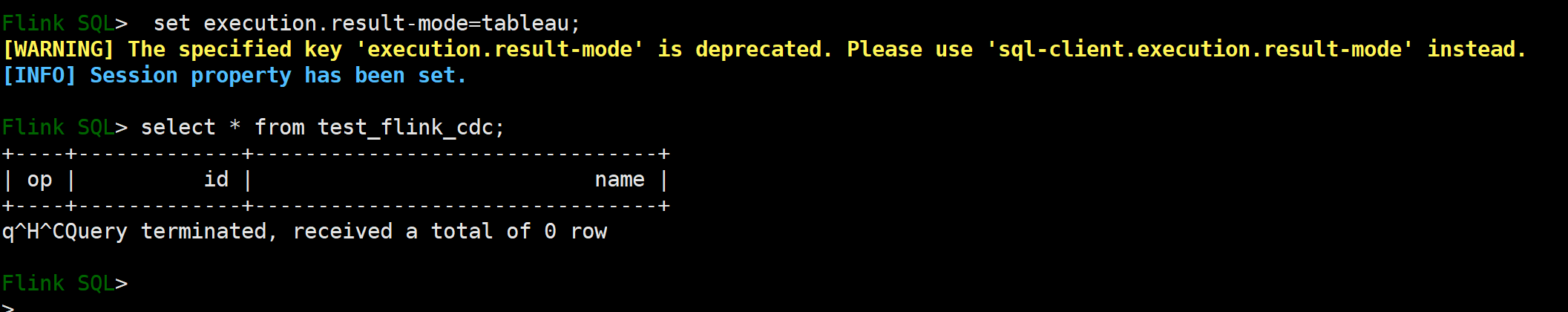

> set execution.result-mode=tableau;

CREATE TABLE test_flink_cdc (

id INT,

name STRING,

primary key(id) NOT ENFORCED

) WITH (

'connector' = 'mysql-cdc',

'hostname' = 'localhost',

'port' = '3306',

'username' = 'root',

'password' = 'password',

'database-name' = 'demo',

'table-name' = 'test_cdc'

);The MySQL mapping table created by executing the query is displayed normally.

select * from test_flink_cdc;

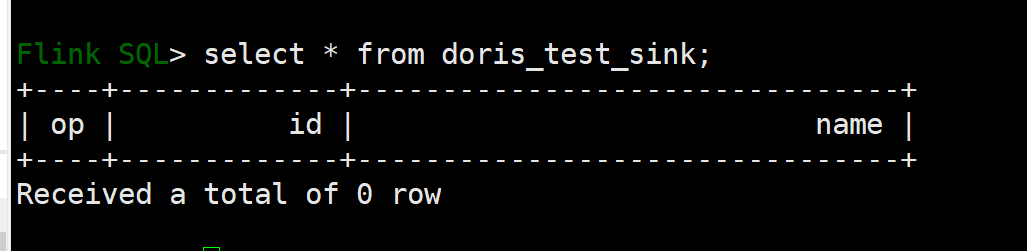

Use the Doris Flink Connector to create a Doris mapping table:

CREATE TABLE doris_test_sink (

id INT,

name STRING

)

WITH (

'connector' = 'doris',

'fenodes' = 'localhost:8030',

'table.identifier' = 'db_audit.doris_test',

'sink.batch.size' = '2',

'sink.batch.interval'='1',

'username' = 'root',

'password' = ''

)Execute the preceding statement on the command line. You can see the table is created. Then, execute the query statement to verify whether it is normal.

select * from doris_test_sink;

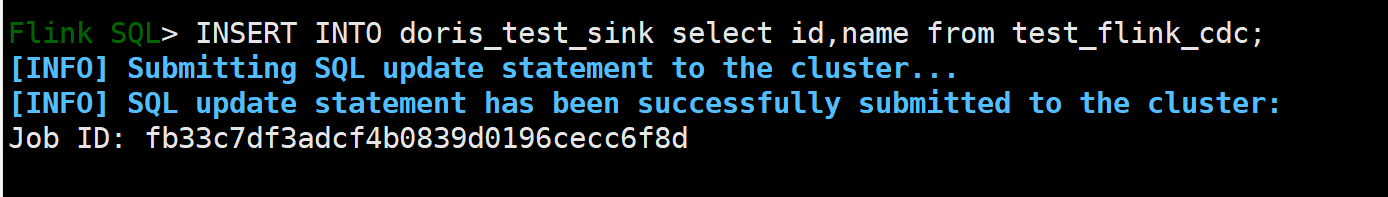

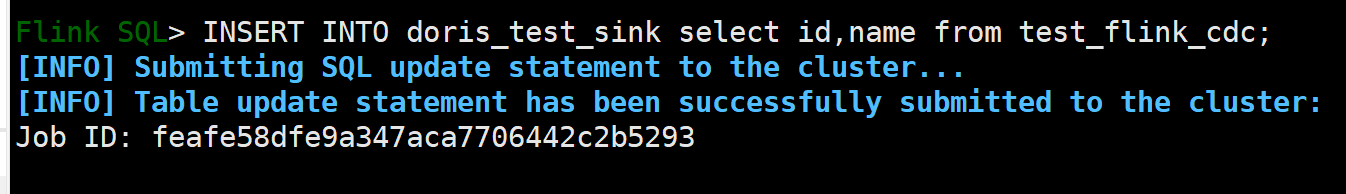

Execute the insert operation to insert the MySQL data into Doris using Flink CDC and Doris Flink Connector:

INSERT INTO doris_test_sink select id,name from test_flink_cdc

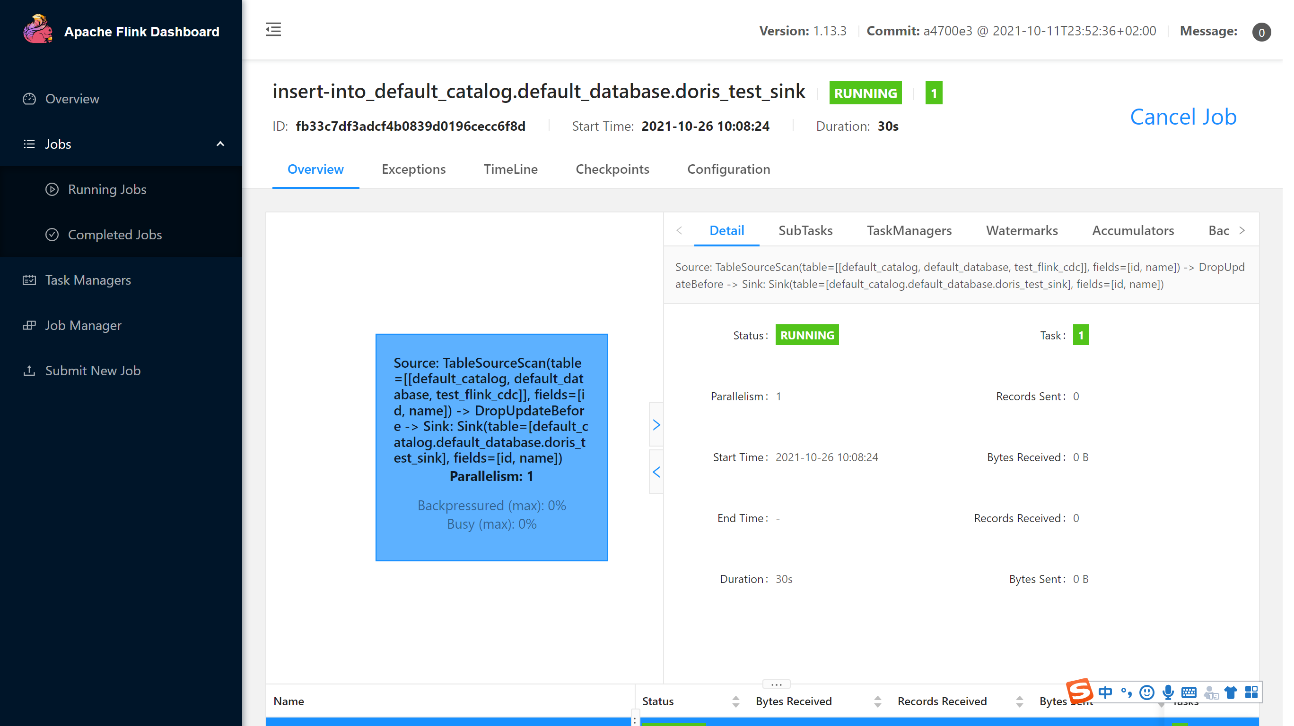

After submission, we can see the relevant job information on the web interface of Flink:

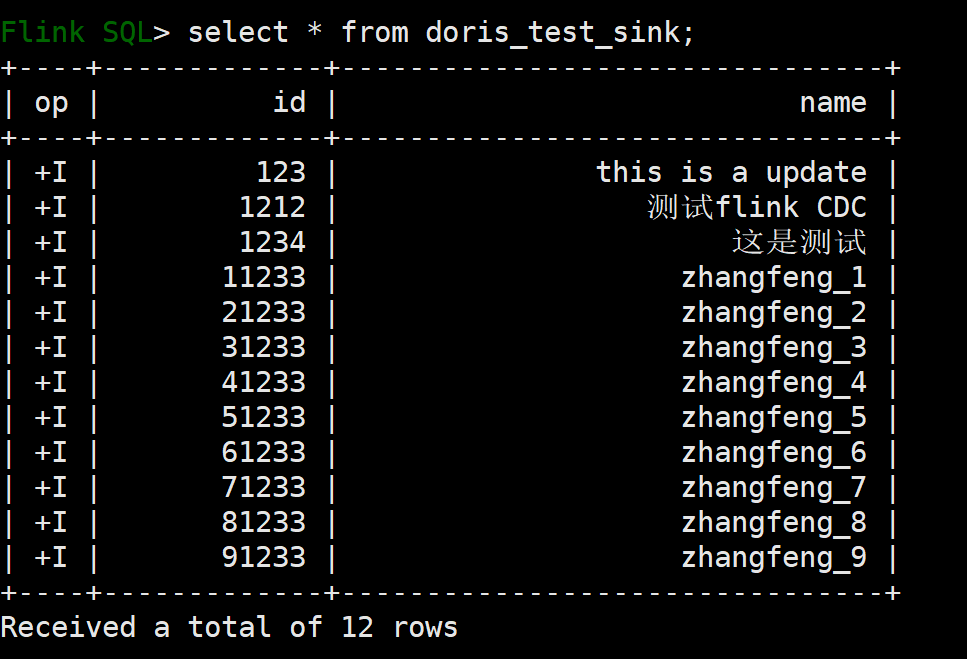

INSERT INTO test_cdc VALUES (123, 'this is a update');

INSERT INTO test_cdc VALUES (1212, ' test flink CDC');

INSERT INTO test_cdc VALUES (1234, 'this is a test');

INSERT INTO test_cdc VALUES (11233, 'zhangfeng_1');

INSERT INTO test_cdc VALUES (21233, 'zhangfeng_2');

INSERT INTO test_cdc VALUES (31233, 'zhangfeng_3');

INSERT INTO test_cdc VALUES (41233, 'zhangfeng_4');

INSERT INTO test_cdc VALUES (51233, 'zhangfeng_5');

INSERT INTO test_cdc VALUES (61233, 'zhangfeng_6');

INSERT INTO test_cdc VALUES (71233, 'zhangfeng_7');

INSERT INTO test_cdc VALUES (81233, 'zhangfeng_8');

INSERT INTO test_cdc VALUES (91233, 'zhangfeng_9');First, stop the Insert into the task. Since there is only one task in the local standalone mode, this task should be stopped, and the data can be seen when executing the query statement on the command line.

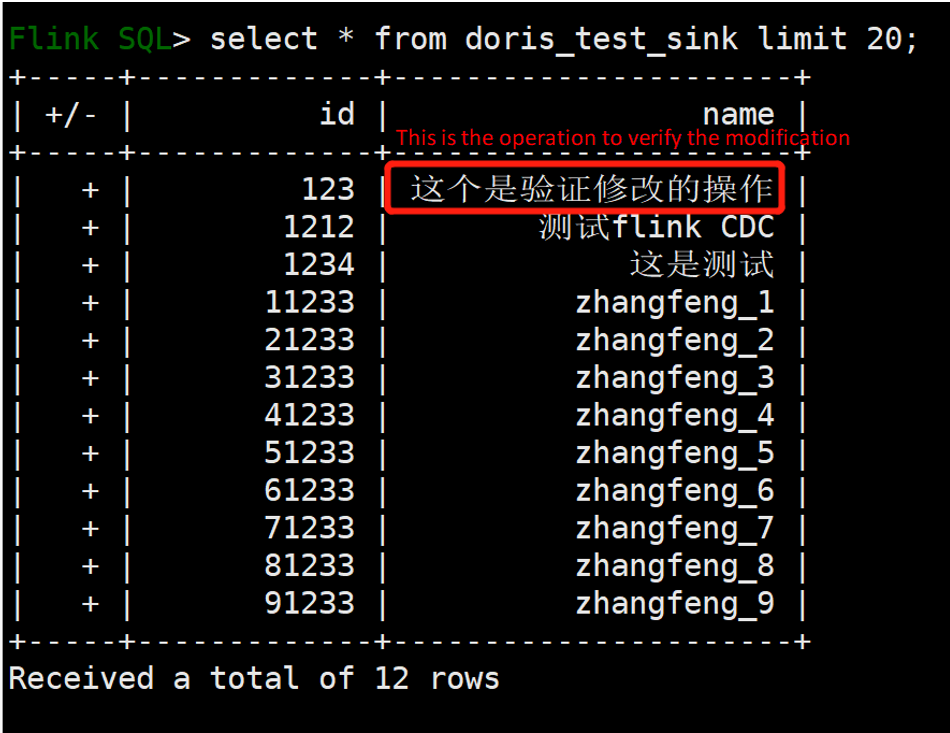

Restart the Insert into the task:

Modify the data in the MySQL table:

update test_cdc set name='This is an operation to verify modification' where id =123If you look at the data in the Doris table again, you will find that it has been modified.

Note: If you want to modify the data in the MySQL table, the data in Doris is also modified. The model of the Doris data table is a Unique key model, and other data models (Aggregate Key and Duplicate Key) cannot update the data.

Currently, the Doris Flink Connector does not support the delete operation, but it will add this operation later.

Flink CDC Series – Part 4: Real-Time Extraction of Oracle Data, Demining, and Tuning Practices

Principle Analysis of Apache Flink CDC Batch and Stream Integration

206 posts | 54 followers

FollowApache Flink Community - May 30, 2024

Apache Flink Community - May 28, 2024

Apache Flink Community - August 14, 2025

Apache Flink Community - January 31, 2024

Apache Flink Community China - May 18, 2022

ApsaraDB - February 29, 2024

206 posts | 54 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn MoreMore Posts by Apache Flink Community