This article summarizes the talk given by Wu Yi (Yunxie) and Xu Bangjiang (Xuejin) at Flink Forward Asia 2021. Across five chapters, the talk explains how Flink CDC simplifies ingesting real-time data into data lakes and data warehouses.

Broadly speaking, any technology that can capture data changes can be called Change Data Capture (CDC). In practice, CDC usually refers to capturing changes in a database. Its application scenarios are extensive, including:

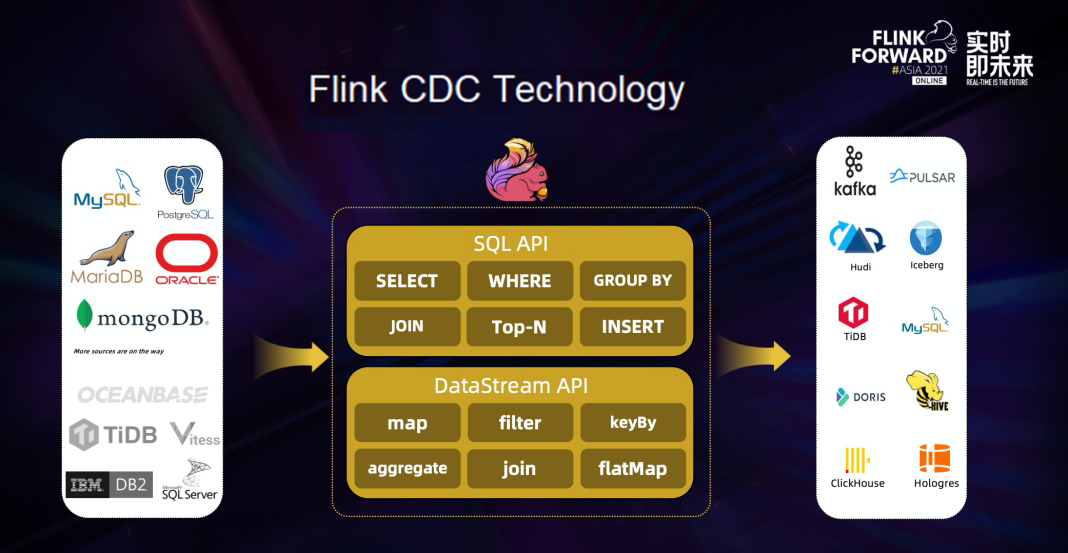

Flink CDC is built on log-based change data capture and provides unified reading of full and incremental data. Thanks to Flink's excellent pipeline capabilities and its rich ecosystem of upstream and downstream connectors, Flink CDC can capture changes from many databases and synchronize them to downstream storage in real time.

On the upstream side, Flink CDC currently supports a wide range of data sources, such as MySQL, MariaDB, PostgreSQL, Oracle, and MongoDB. The community plans to add support for more databases, including OceanBase, TiDB, and SQL Server.

On the downstream side, Flink CDC can write to message queues such as Kafka and Pulsar, data lakes such as Hudi and Iceberg, and various data warehouses.

In addition, Flink SQL's native changelog mechanism makes CDC data easy to process. Users can clean, enrich (widen), and aggregate the full and incremental data of a database with SQL, which lowers the barrier to use. The Flink DataStream API also lets users write code to implement custom logic, giving them the freedom to deeply customize their business processing.

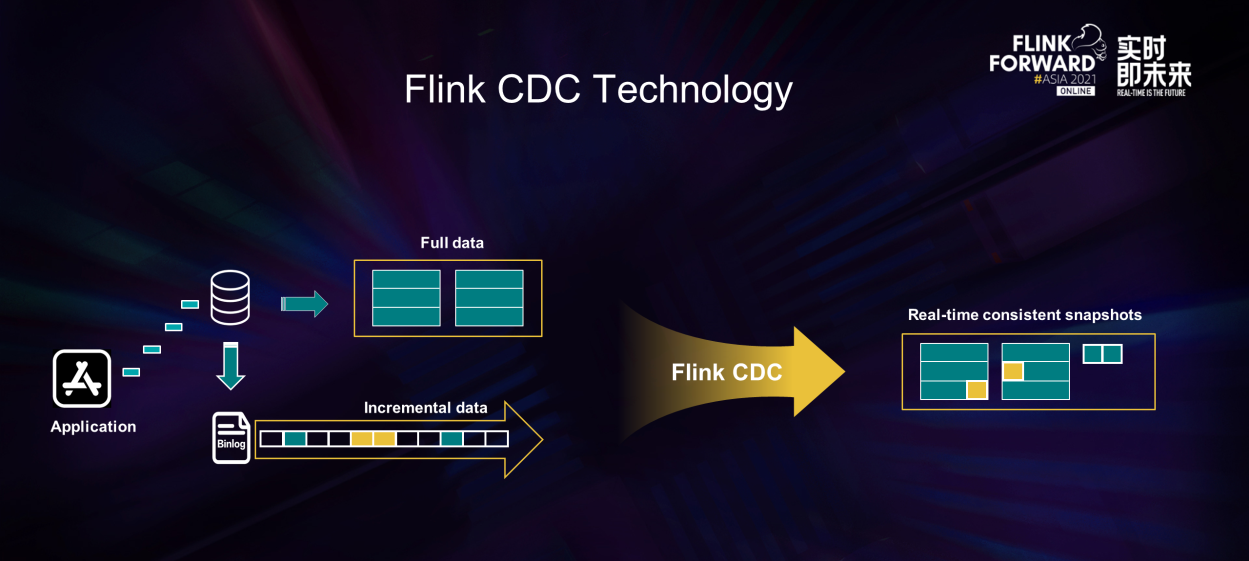

The core of Flink CDC is real-time, consistent synchronization and processing of both the full and the incremental data of a table, so users can easily obtain a consistent real-time snapshot of each table. For example, suppose a table contains historical full business data while incremental business data is continuously written and updated. Flink CDC captures the incremental change records in real time and maintains a snapshot consistent with the one in the database: updated records overwrite the existing data, and inserted records are appended to it. Flink CDC guarantees consistency throughout the whole process, meaning no data is duplicated or lost.
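To make these snapshot semantics concrete, here is a minimal Python sketch (not Flink CDC code) that applies a stream of change events to an in-memory table keyed by primary key. The event kinds mirror the short strings of Flink's `RowKind` (`+I` insert, `-U` update-before, `+U` update-after, `-D` delete); the tuple layout and the `apply_changelog` helper are hypothetical and exist only for illustration.

```python
from typing import Dict, Iterable, Tuple

# A change event: (kind, primary_key, row). Kinds mirror Flink's RowKind short strings:
# "+I" insert, "-U" update-before, "+U" update-after, "-D" delete.
Event = Tuple[str, int, dict]


def apply_changelog(snapshot: Dict[int, dict], events: Iterable[Event]) -> Dict[int, dict]:
    """Apply change events to a snapshot keyed by primary key (hypothetical helper)."""
    table = dict(snapshot)  # copy so the input snapshot is left untouched
    for kind, key, row in events:
        if kind in ("+I", "+U"):
            # An insert adds a new key; an update-after overwrites the existing row.
            table[key] = row
        elif kind == "-D":
            table.pop(key, None)
        elif kind == "-U":
            # The update-before image is superseded by the matching "+U" event.
            continue
        else:
            raise ValueError(f"unknown change kind: {kind}")
    return table


if __name__ == "__main__":
    full = {1: {"id": 1, "name": "Alice"}, 2: {"id": 2, "name": "Bob"}}
    incremental = [
        ("-U", 2, {"id": 2, "name": "Bob"}),
        ("+U", 2, {"id": 2, "name": "Bobby"}),
        ("+I", 3, {"id": 3, "name": "Carol"}),
        ("-D", 1, {"id": 1, "name": "Alice"}),
    ]
    # prints {2: {'id': 2, 'name': 'Bobby'}, 3: {'id': 3, 'name': 'Carol'}}
    print(apply_changelog(full, incremental))
```

Because every event is applied by primary key, the resulting table always matches the source table as of the last applied event, which is the "consistent real-time snapshot" described above.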

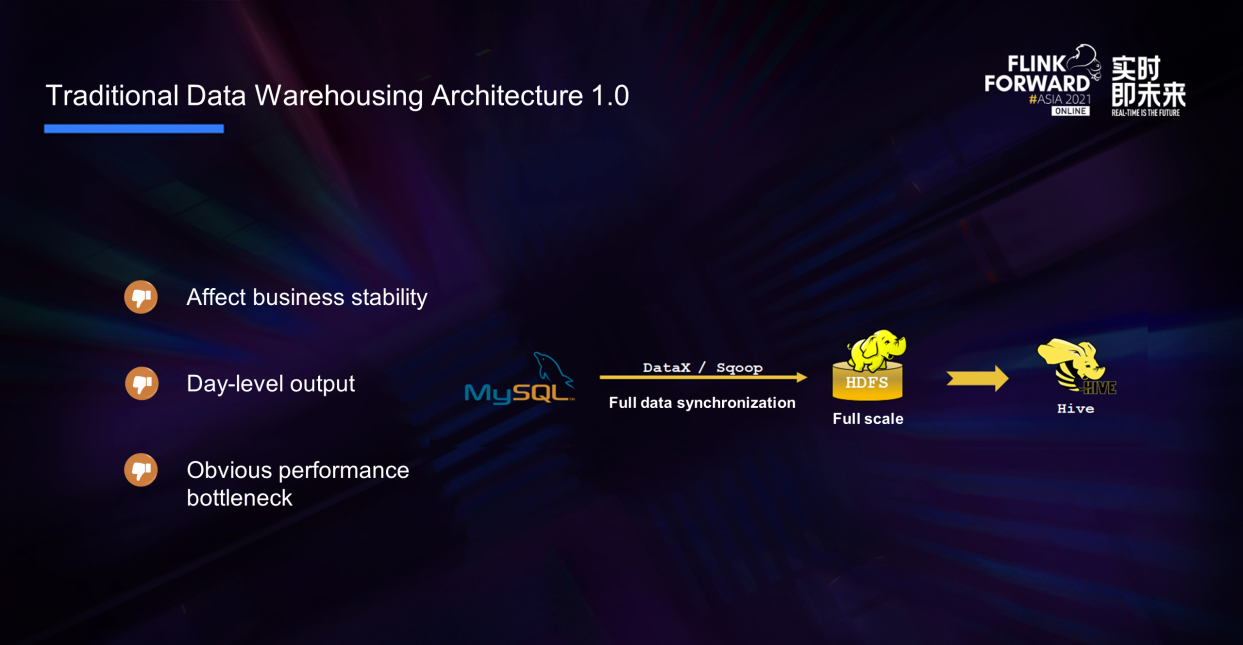

What changes can Flink CDC bring to existing data warehouse architectures? First, let's look at the traditional data warehouse architecture.

In the early data warehouse architecture, the full data was typically selected and imported into the data warehouse every day for offline analysis. This architecture has several disadvantages:

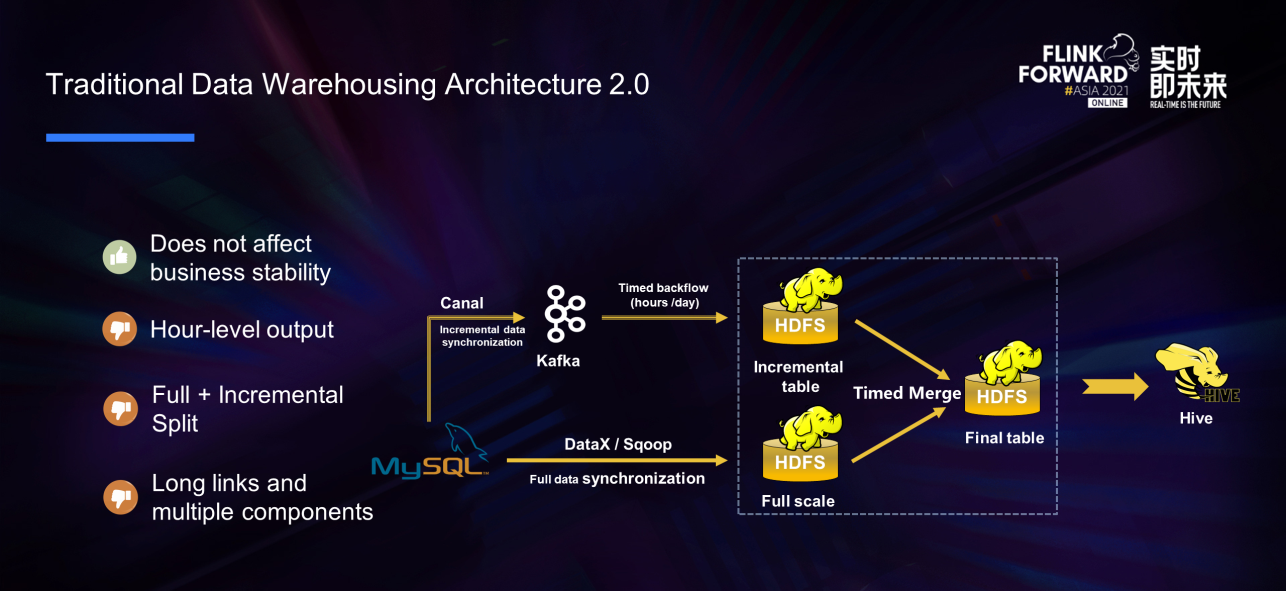

In the data warehouse 2.0 era, the architecture evolved into a Lambda architecture, which adds a link that imports incremental data in real time. Overall, the Lambda architecture scales better and no longer affects business stability, but some problems remain:

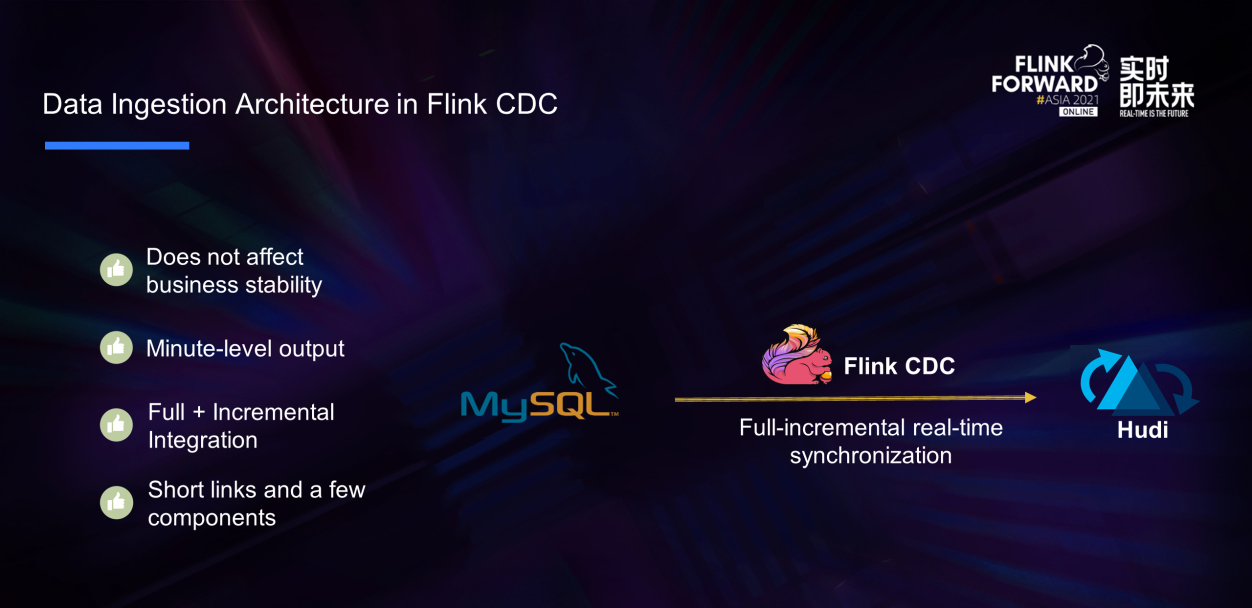

Flink CDC offers new ways to solve these problems of the traditional data warehouse architecture. By combining the unified full and incremental real-time synchronization of Flink CDC with the update capability of data lakes, the entire architecture becomes much simpler: Flink CDC reads full and incremental data from MySQL and writes and updates it in Hudi.

This simplified architecture has several advantages. First, it does not affect business stability. Second, it delivers minute-level output, which meets the needs of near-real-time business. Third, the full and incremental links are unified into a single integrated synchronization. Finally, the architecture has shorter links and fewer components to maintain.

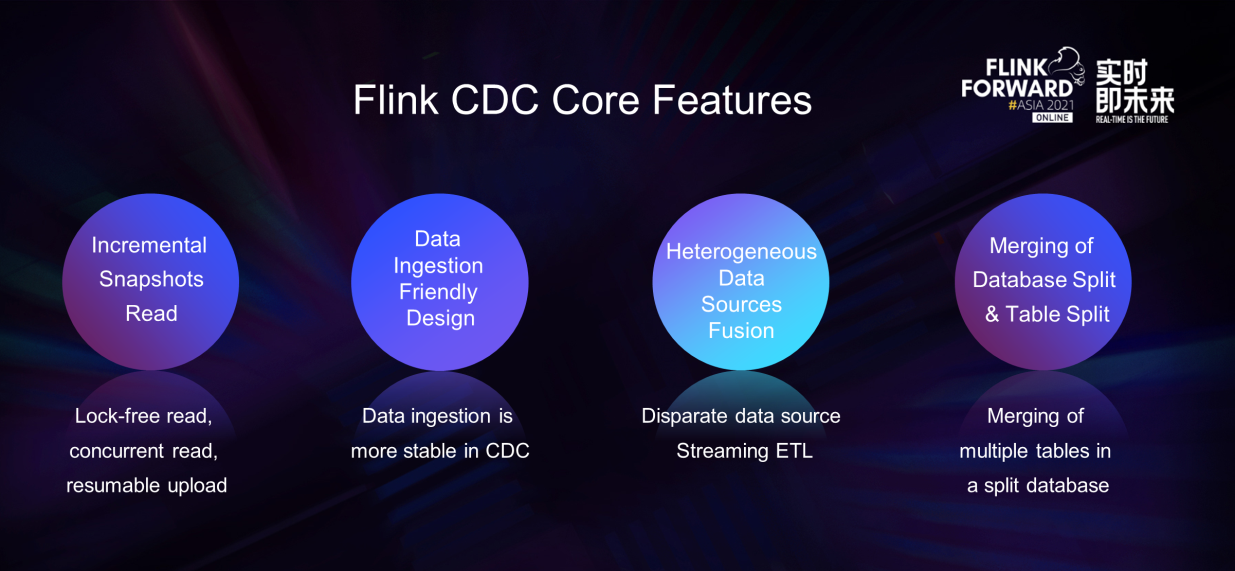

The core features of Flink CDC can be divided into four parts:

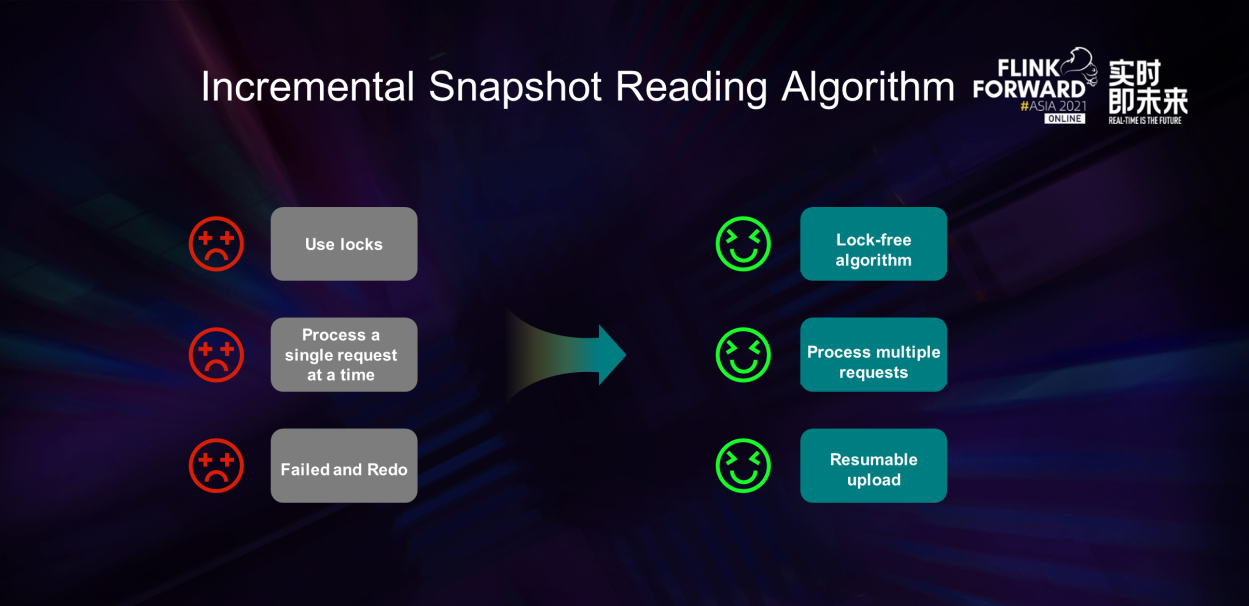

In Flink CDC 1.x, MySQL CDC has three major pain points that affect product availability:

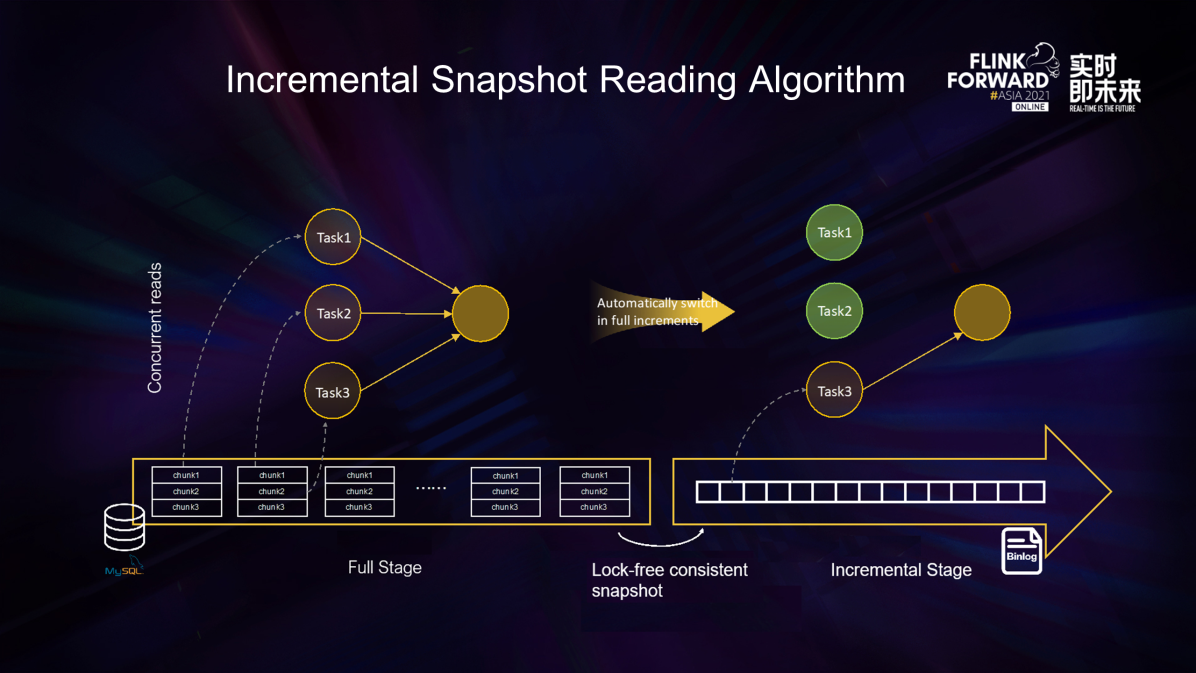

In simple terms, the core idea of the incremental snapshot reading algorithm is to divide the table into chunks and read them concurrently during the full reading phase. After entering the incremental phase, only a single task is needed to read the binlog. The switch from full to incremental reading happens automatically, and a lock-free algorithm guarantees consistency during the switch. This design improves reading efficiency while saving resources, and it achieves unified synchronization of full and incremental data. It is an important milestone on the road to stream-batch unification.
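The following Python sketch illustrates one watermark-based way to obtain lock-free consistency when chunk reads hand over to binlog reading. It is a simplified model under stated assumptions, not the connector's actual code: the primary key is an integer, binlog positions are plain integers in increasing order, events carry the kinds `+I`, `+U`, or `-D`, and all helper names are hypothetical. Each chunk reader records a low watermark (binlog position before its `SELECT`) and a high watermark (position after it), then patches its result with the binlog events in between.

```python
from typing import Dict, List, Optional, Tuple

# A chunk is a half-open primary-key range [start, end); None means unbounded,
# so the first and last chunks also cover keys outside the range seen at split time.
Chunk = Tuple[Optional[int], Optional[int]]
# A binlog event: (position, kind, key, row) with kind "+I", "+U", or "-D".
BinlogEvent = Tuple[int, str, int, dict]


def split_chunks(min_key: int, max_key: int, chunk_size: int) -> List[Chunk]:
    """Split the key space into chunks that parallel readers can SELECT independently."""
    bounds = list(range(min_key + chunk_size, max_key + 1, chunk_size))
    return list(zip([None] + bounds, bounds + [None]))


def in_chunk(key: int, chunk: Chunk) -> bool:
    start, end = chunk
    return (start is None or key >= start) and (end is None or key < end)


def correct_chunk(rows: Dict[int, dict], binlog: List[BinlogEvent], chunk: Chunk,
                  low: int, high: int) -> Dict[int, dict]:
    """Patch a chunk's SELECT result with the binlog events between its watermarks.

    `low` is the binlog position recorded before the SELECT and `high` the one
    recorded after it. Replaying the in-chunk events in (low, high], in position
    order, as upserts and deletes yields the chunk's state as of `high`, with no
    table lock.
    """
    fixed = dict(rows)
    for pos, kind, key, row in binlog:
        if low < pos <= high and in_chunk(key, chunk):
            if kind == "-D":
                fixed.pop(key, None)
            else:
                fixed[key] = row
    return fixed


def should_emit(pos: int, key: int, chunks: List[Chunk], highs: List[int]) -> bool:
    """In the incremental phase, emit an event only if it is newer than the high
    watermark of the chunk that owns its key; older events are already in the snapshot."""
    for chunk, high in zip(chunks, highs):
        if in_chunk(key, chunk):
            return pos > high
    raise ValueError("chunks must cover every key")
```

For example, `split_chunks(1, 10, 4)` yields the chunks `(None, 5)`, `(5, 9)`, and `(9, None)`, which can be read by three parallel tasks; afterwards a single binlog reader uses `should_emit` to skip events that the chunk reads already absorbed, so nothing is duplicated or lost at the switchover.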

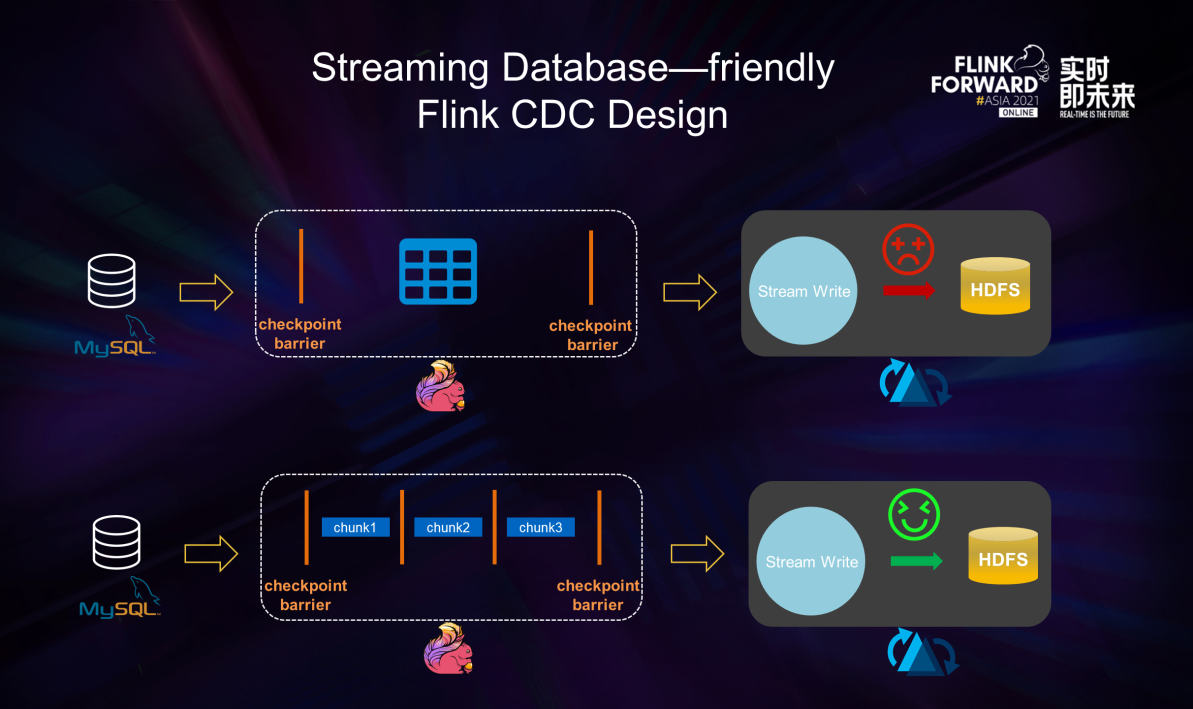

Flink CDC 2.0 is a data lake-friendly framework. Earlier versions of Flink CDC did not take the data lake scenario into account: checkpoints were not supported during the full phase, so all of the full data was processed within a single checkpoint. This is unfriendly to data lakes, which rely on checkpoints to commit data. Flink CDC 2.0 was designed with the data lake scenario in mind. Because the full data is split into chunks, the checkpoint granularity is reduced from the table level to the chunk level, which greatly reduces buffer usage when writing to the data lake.
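The benefit of chunk-level checkpoints can be shown with a toy model. In the Python sketch below, which is an illustration under assumptions rather than Flink or Hudi code, the set `finished` stands in for checkpointed source state and the list `lake` for data a sink has committed on checkpoint; both names and the `ingest` helper are hypothetical. Each completed chunk triggers a commit, so the sink never buffers more than one chunk, and a restart skips chunks that were already committed.

```python
from typing import Dict, List, Set


def ingest(chunks: Dict[int, List[dict]], finished: Set[int], lake: List[dict],
           crash_at: int = -1) -> int:
    """Ingest the full phase chunk by chunk, checkpointing after every chunk.

    `finished` plays the role of checkpointed state and `lake` the committed sink
    data (hypothetical names). `crash_at` simulates a failure before that chunk.
    Returns the largest number of rows the sink had to buffer before a commit.
    """
    max_buffer = 0
    for chunk_id in sorted(chunks):
        if chunk_id in finished:
            continue  # committed before the failure: skipped after restore
        if chunk_id == crash_at:
            raise RuntimeError(f"simulated crash before chunk {chunk_id}")
        buffer = list(chunks[chunk_id])  # rows read from this chunk, not yet committed
        max_buffer = max(max_buffer, len(buffer))
        lake.extend(buffer)              # checkpoint completes: the sink commits
        finished.add(chunk_id)           # and the chunk is recorded as done
    return max_buffer
```

With three chunks of three rows each, a crash before the third chunk leaves six committed rows and `finished == {0, 1}`; calling `ingest` again with the same state commits only the last chunk, and the peak buffer is three rows. With a single table-level checkpoint, the peak would be the whole table and a failure would restart the full phase from scratch.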

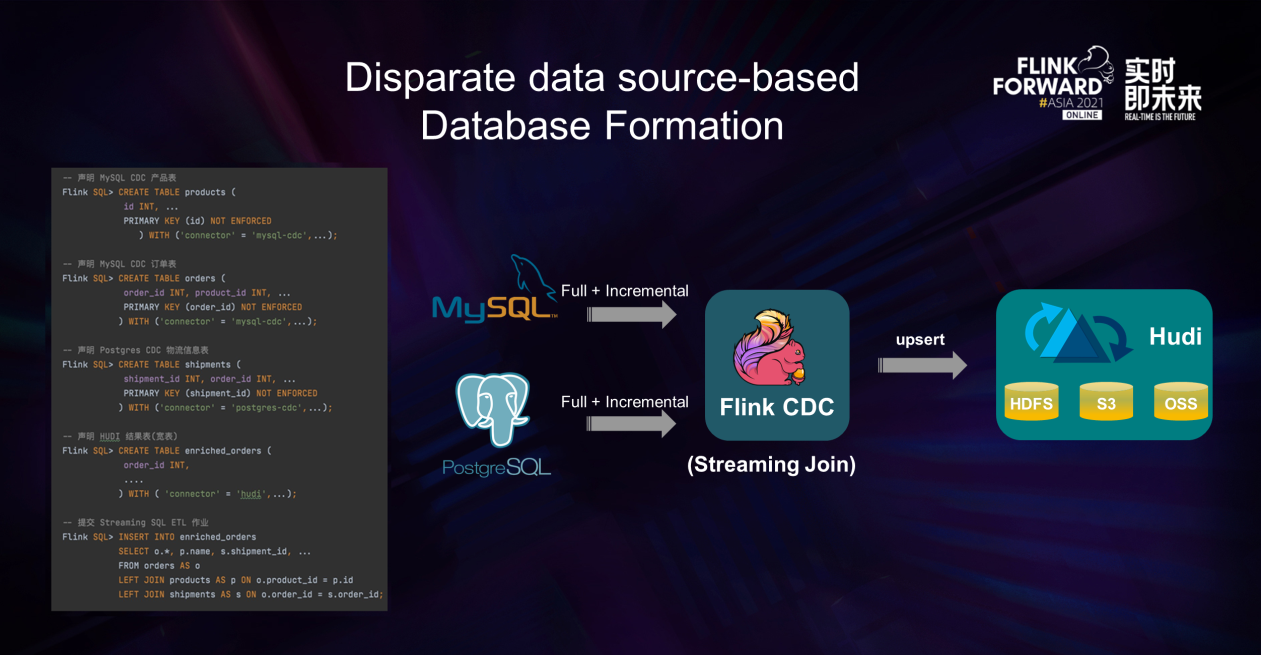

What distinguishes Flink CDC from other data integration frameworks is the unified stream-batch computing capability that Flink provides. This makes Flink CDC a complete ETL tool: it has excellent extract (E) and load (L) capabilities as well as powerful transformation (T) capabilities. As a result, we can easily build a data lake from heterogeneous data sources.

In the SQL on the left side of the preceding figure, the product table and order table in MySQL are joined in real time with the logistics table in PostgreSQL. This is a streaming join. The join results are updated in Hudi in real time, so a data lake built on heterogeneous data sources is easy to construct.
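Conceptually, a regular streaming join keeps the latest row of each input in state and re-emits the joined result whenever any side changes. The Python sketch below models this for the three tables in the example. It assumes a hypothetical schema (orders keyed by `order_id` with a `product_id` column, products keyed by `product_id`, logistics rows keyed by `order_id`) and is not how Flink implements joins internally. It only illustrates the update semantics that reach the Hudi table.

```python
from typing import Dict, List, Optional, Tuple

# One join result change: (order_id, joined row), or (order_id, None) to retract it.
Change = Tuple[int, Optional[dict]]


class StreamingJoin:
    """Inner join of orders, products, and logistics rows kept in keyed state.

    Hypothetical schema: orders keyed by order_id (with a product_id column),
    products keyed by product_id, logistics rows keyed by order_id.
    """

    def __init__(self) -> None:
        self.state: Dict[str, Dict[int, dict]] = {"orders": {}, "products": {}, "logistics": {}}

    def _result(self, order_id: int) -> Optional[dict]:
        order = self.state["orders"].get(order_id)
        if order is None:
            return None
        product = self.state["products"].get(order["product_id"])
        logistics = self.state["logistics"].get(order_id)
        if product is None or logistics is None:
            return None  # inner join: every side must be present
        return {**order, **product, **logistics}

    def on_event(self, source: str, key: int, row: Optional[dict]) -> List[Change]:
        """Apply an upsert (row) or delete (row=None) from one input and return the
        join results that changed as a consequence."""
        if row is None:
            self.state[source].pop(key, None)
        else:
            self.state[source][key] = row
        if source == "products":
            # A product change affects every order that references it
            # (a linear scan here; a real engine would index orders by product_id).
            affected = [oid for oid, o in self.state["orders"].items() if o["product_id"] == key]
        else:
            affected = [key]
        return [(oid, self._result(oid)) for oid in affected]


def apply_to_sink(sink: Dict[int, dict], changes: List[Change]) -> None:
    """Upsert or delete join results in a table keyed by order_id."""
    for order_id, row in changes:
        if row is None:
            sink.pop(order_id, None)
        else:
            sink[order_id] = row
```

Feeding the output of `on_event` into `apply_to_sink` keeps the sink equal to the current join result: a renamed product updates every joined order row, and deleting a logistics row retracts the joined row for that order.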

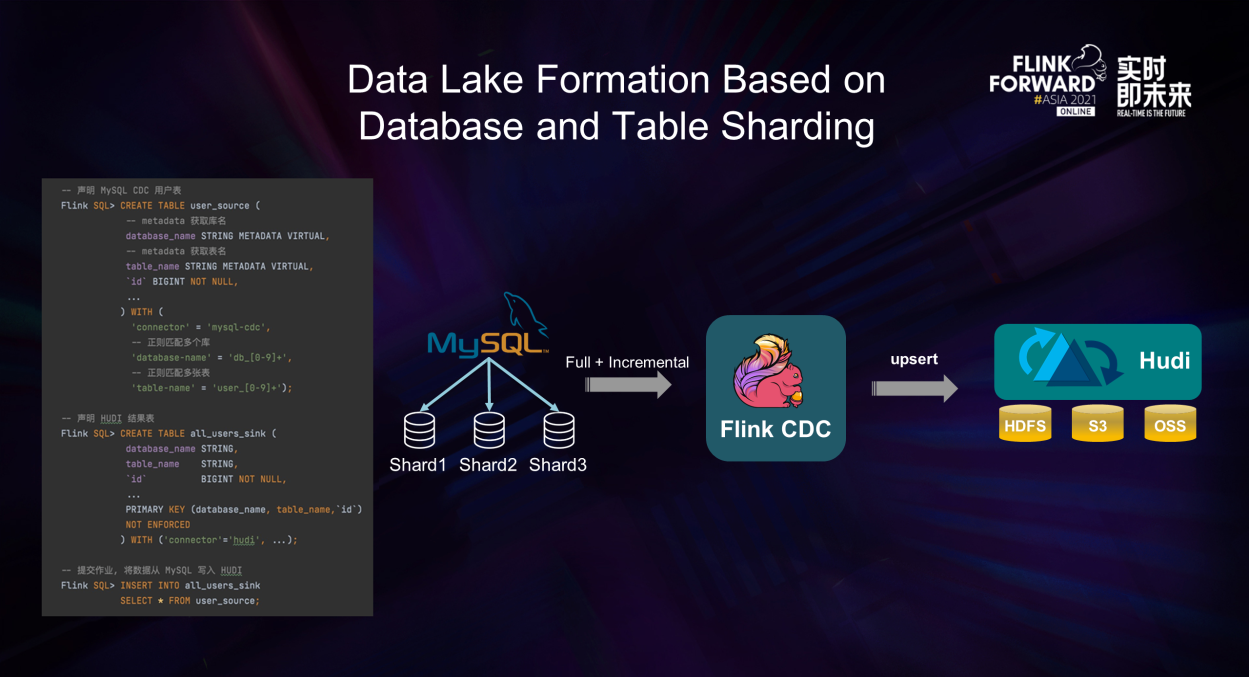

In OLTP systems, a large table is often split across multiple databases and tables (sharding) to cope with the data volume of a single table and to improve system throughput. However, to make data analysis convenient, these sharded tables usually need to be merged back into one large table when they are synchronized to the data warehouse or data lake. Flink CDC can complete this task.

In the SQL statement on the left of the preceding figure, we declare a user_source table that captures the data of all user database and table shards. The database-name and table-name options use regular expressions to match these tables. The user_source table also defines two metadata columns that identify the database and table each row comes from. In the Hudi table declaration, the database name, table name, and primary key of the original table together form a composite primary key. Once the two tables are declared, a simple INSERT INTO statement merges the data of all database and table shards into a single Hudi table. This completes data lake ingestion of sharded databases and tables, which facilitates subsequent unified analysis on the lake.
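The merge logic can be sketched in plain Python to show why the composite key matters. This is an illustration under assumptions, not the connector's code: each pattern is simply full-matched against its own name (the connector's exact matching rules are described in its option reference), the metadata column names `db_name` and `table_name` are hypothetical, and every shard is assumed to have an integer `id` primary key.

```python
import re
from typing import Dict, Iterable, Tuple

# A change event from a shard: (database, table, kind, row); kind is "+I", "+U", or "-D".
ShardEvent = Tuple[str, str, str, dict]
# Composite key in the merged table: (source database, source table, original id).
MergedKey = Tuple[str, str, int]


def merge_shards(events: Iterable[ShardEvent], database_pattern: str,
                 table_pattern: str) -> Dict[MergedKey, dict]:
    """Merge rows from every shard whose database and table names match the patterns."""
    db_re, table_re = re.compile(database_pattern), re.compile(table_pattern)
    merged: Dict[MergedKey, dict] = {}
    for db, table, kind, row in events:
        if not (db_re.fullmatch(db) and table_re.fullmatch(table)):
            continue  # not one of the shards we capture
        # The same id can exist in several shards, so the original primary key
        # alone is not unique after merging; prefix it with database and table.
        key = (db, table, row["id"])
        if kind == "-D":
            merged.pop(key, None)
        else:
            # Keep the two metadata columns so analysts can tell shards apart.
            merged[key] = {"db_name": db, "table_name": table, **row}
    return merged
```

If two shards both contain a row with `id = 1`, keying the merged table by `id` alone would let one overwrite the other; the `(database, table, id)` key keeps both, which is the reason for the joint primary key in the Hudi declaration.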

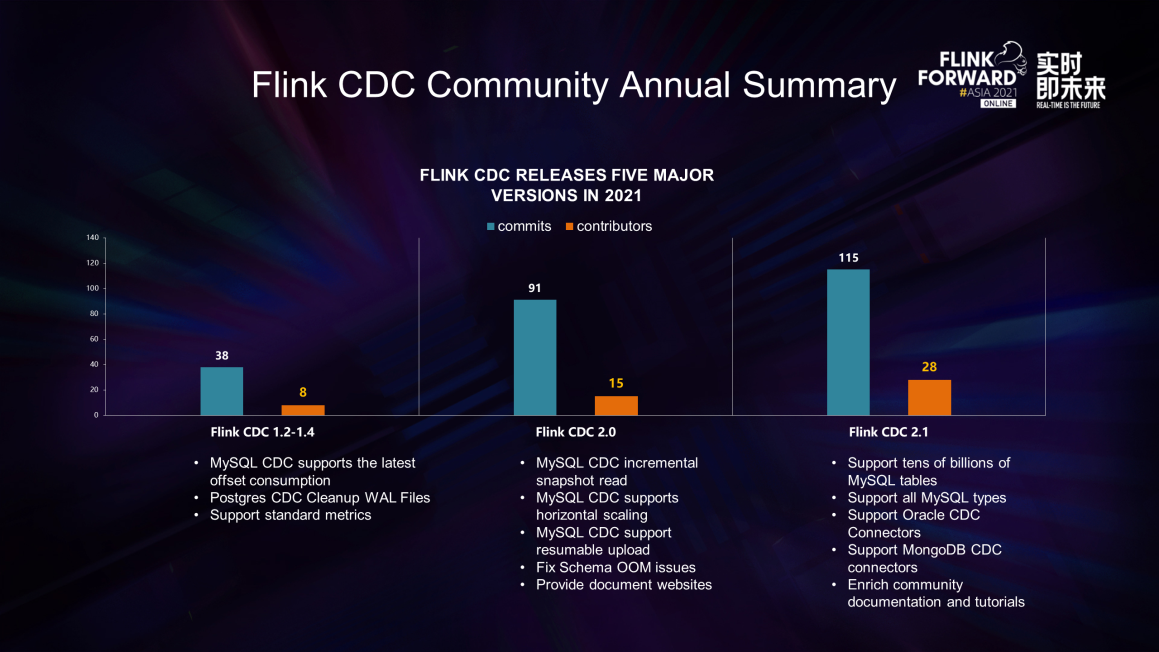

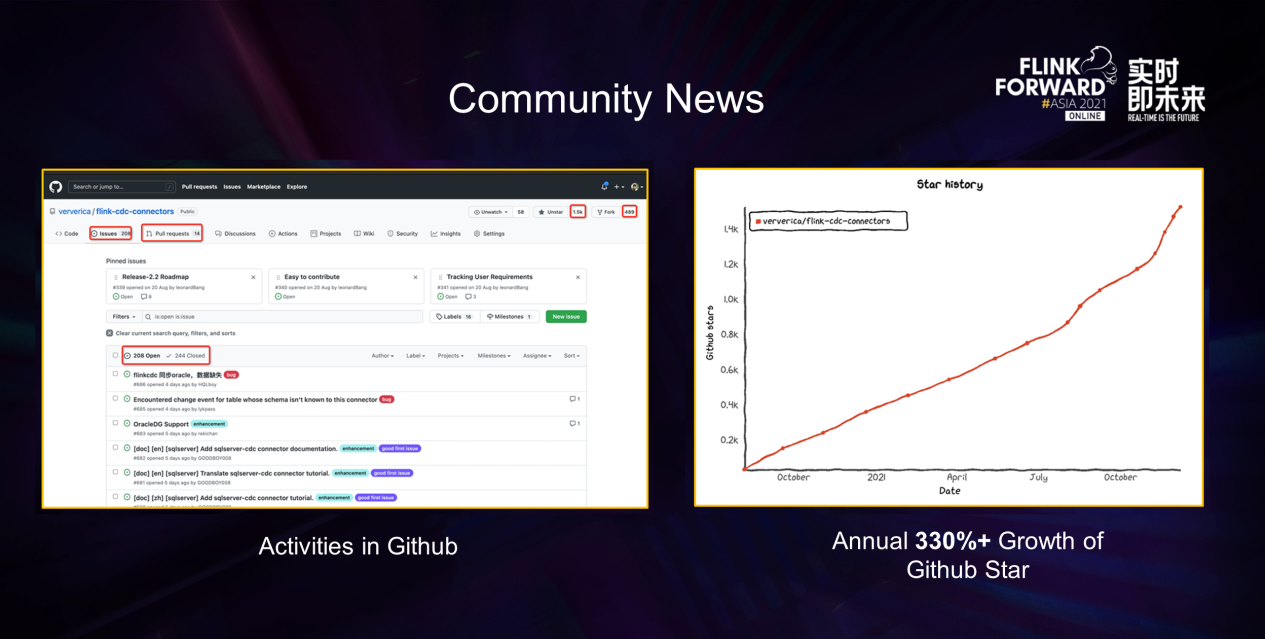

Flink CDC is an independent open-source project whose code is hosted on GitHub. The community released five versions this year. The three 1.x releases introduced some minor features. Version 2.0 brought advanced MySQL CDC features, such as lock-free reading, concurrent reading, and resumable reading from checkpoints, with 91 commits from 15 contributors. Version 2.1 added support for Oracle and MongoDB, with 115 commits from 28 contributors. The growth in commits and contributors is clear.

Documentation and user manuals are an important part of an open-source community. The Flink CDC community has launched a versioned documentation website, including documentation for version 2.1. The documentation provides many quick-start tutorials, and users only need a Docker environment to get started with Flink CDC. In addition, an FAQ guide helps users solve common problems.

The Flink CDC community developed rapidly in 2021. Pull requests and issues on GitHub are very active, and the number of GitHub stars increased by 330% year over year.

Flink CDC has been deployed at large scale within Alibaba, and we encountered some pain points and challenges along the way. The following sections explain how we addressed them.

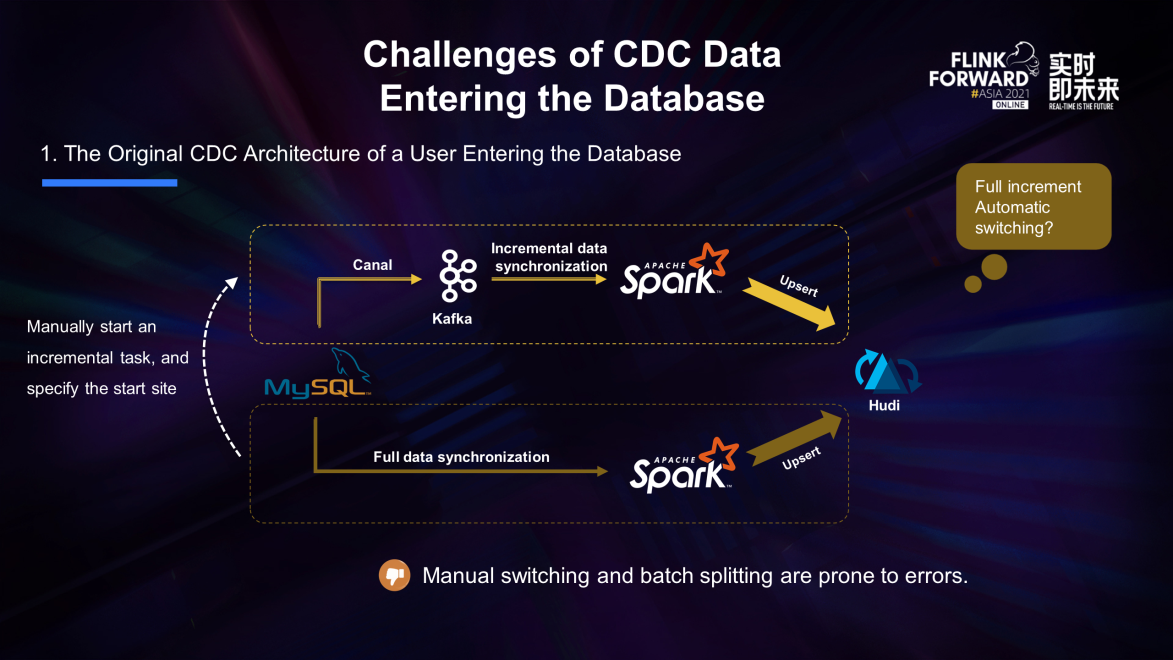

Let's look at some of the pain points and challenges of ingesting CDC data into the data lake. The preceding figure shows a user's original architecture for ingesting CDC data into the lake. It consists of two separate links, one for full data and one for incremental data.

This architecture takes advantage of Hudi's update capability, so it does not need to schedule periodic full merge tasks and can achieve minute-level latency. However, the full and incremental jobs are still separate. Switching from full to incremental still requires manual intervention, and an accurate incremental starting position must be specified; otherwise, data may be lost. The architecture is fragmented rather than unified. As Xuejin explained earlier, one of the biggest advantages of Flink CDC is the automatic switch from full to incremental reading, so we replaced the original architecture with Flink CDC.

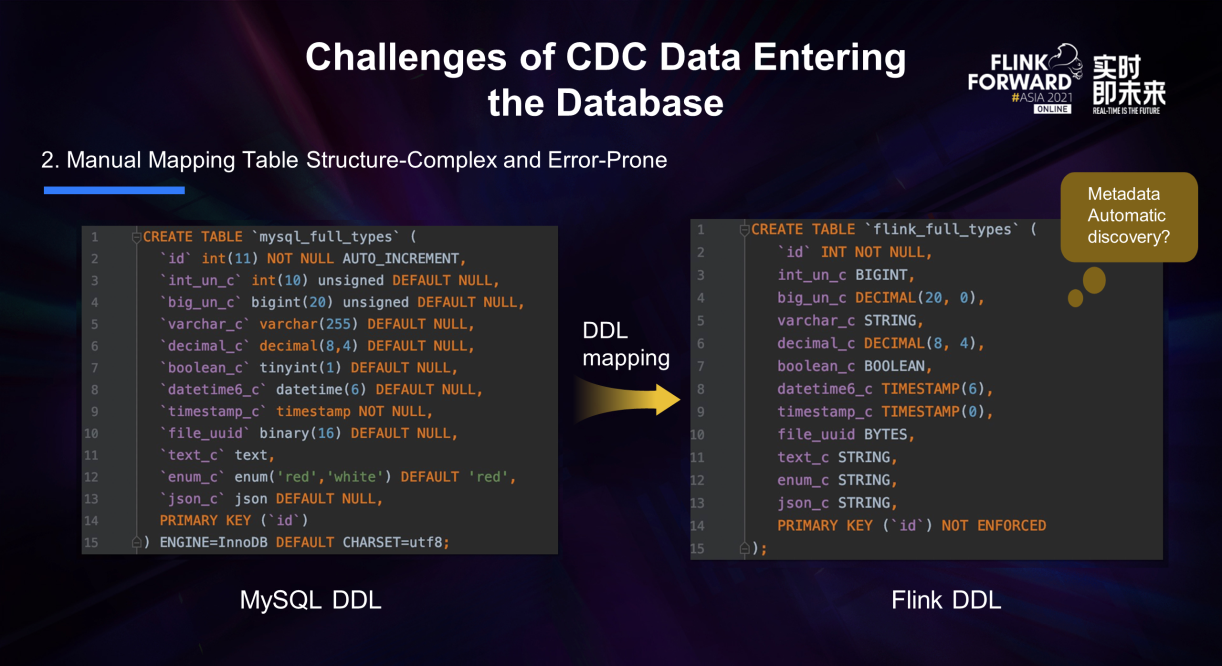

However, after adopting Flink CDC, the first pain point is that users must manually map MySQL DDL to Flink DDL. Manually mapping table structures is tedious, especially when there are many tables and fields, and it is also error-prone. For example, MySQL's BIGINT UNSIGNED cannot be mapped to Flink's BIGINT; it must be mapped to DECIMAL(20, 0). If the system maps table structures automatically, ingestion becomes much simpler and safer.
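The BIGINT UNSIGNED case follows from value ranges: Flink's integer types are all signed, and the largest BIGINT UNSIGNED value, 2^64 - 1 = 18446744073709551615, has 20 digits and exceeds Flink's BIGINT maximum of 2^63 - 1. The Python sketch below automates the integer part of such a mapping with one simple rule that is an assumption of this sketch: pick the smallest signed Flink type whose range covers the MySQL type's range, otherwise fall back to an exact DECIMAL. The connector's documented type-mapping table is the authoritative reference and may choose a wider type in some cases; the helper names are hypothetical, and non-integer types are out of scope.

```python
import re
from typing import List, Tuple

# Flink's integer types are all signed; widths in bits.
FLINK_INTS = [("TINYINT", 8), ("SMALLINT", 16), ("INT", 32), ("BIGINT", 64)]
# MySQL integer types and their storage widths in bits.
MYSQL_INT_BITS = {"TINYINT": 8, "SMALLINT": 16, "MEDIUMINT": 24, "INT": 32, "BIGINT": 64}


def map_mysql_int(mysql_type: str) -> str:
    """Map a MySQL integer type to the smallest Flink type that can hold its full range."""
    m = re.fullmatch(r"\s*(\w+)(?:\(\d+\))?(\s+UNSIGNED)?\s*", mysql_type.upper())
    if m is None or m.group(1) not in MYSQL_INT_BITS:
        raise ValueError(f"unsupported MySQL type: {mysql_type}")
    bits = MYSQL_INT_BITS[m.group(1)]
    if m.group(2):  # UNSIGNED
        low, high = 0, 2**bits - 1
    else:
        low, high = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    for name, width in FLINK_INTS:
        if -(2 ** (width - 1)) <= low and high <= 2 ** (width - 1) - 1:
            return name
    # No signed integer is wide enough (only BIGINT UNSIGNED reaches here):
    # use an exact decimal with as many digits as the maximum value.
    return f"DECIMAL({len(str(high))}, 0)"


def flink_columns_ddl(table: str, columns: List[Tuple[str, str]], primary_key: str) -> str:
    """Render a Flink CREATE TABLE column list; connector WITH options are omitted."""
    cols = ",\n".join(f"  `{name}` {map_mysql_int(t)}" for name, t in columns)
    return f"CREATE TABLE `{table}` (\n{cols},\n  PRIMARY KEY (`{primary_key}`) NOT ENFORCED\n)"
```

For instance, `map_mysql_int("int(10) unsigned")` returns `BIGINT` and `map_mysql_int("BIGINT UNSIGNED")` returns `DECIMAL(20, 0)`, so generating DDL from the source schema avoids the silent overflow a hand-written BIGINT column would cause.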

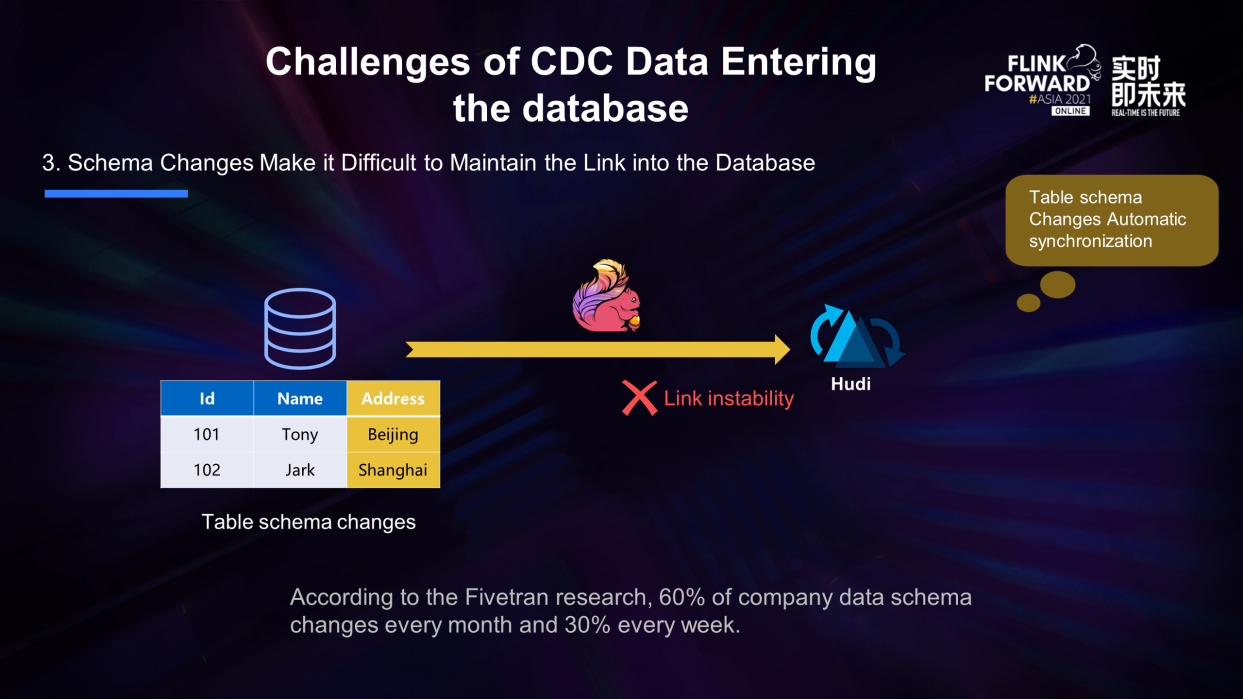

Another pain point is that table schema changes make the ingestion link hard to maintain. For example, suppose a table has the id and name columns, and an address column is suddenly added. The data in this new column may not be synchronized to the lake, and the change may even cause the ingestion job to hang, affecting stability. Besides added columns, columns may also be dropped or have their types changed. A Fivetran research report found that 60% of companies change their schemas every month and 30% change them every week. This shows that nearly every company faces the data integration challenges brought about by schema changes.
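One way a sink can absorb the added-column case without failing is sketched below in Python. The evolution policy is an assumption of this sketch rather than a description of Flink CDC's behavior: new upstream fields are appended as nullable columns and existing rows are backfilled with `None`, while columns missing from a record are kept and filled with `None` instead of being dropped. Type changes are not handled. `EvolvingSink` is a hypothetical name.

```python
from typing import Dict, List


class EvolvingSink:
    """Toy sink table that adds nullable columns when upstream records gain new fields.

    Assumed policy: new columns are appended as nullable, and columns that are
    absent from a record are kept and filled with None rather than dropped.
    """

    def __init__(self, columns: List[str]) -> None:
        self.columns = list(columns)
        self.rows: List[Dict[str, object]] = []

    def write(self, record: Dict[str, object]) -> None:
        for name in record:
            if name not in self.columns:
                self.columns.append(name)  # schema change: ADD COLUMN
                for row in self.rows:
                    row[name] = None       # backfill rows written before the change
        # Project the record onto the current schema; missing fields become None.
        self.rows.append({c: record.get(c) for c in self.columns})
```

Starting from `["id", "name"]`, writing a record that also contains `address` extends the schema to `["id", "name", "address"]` and backfills earlier rows with `None`, so the link keeps running instead of failing on the unknown column.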

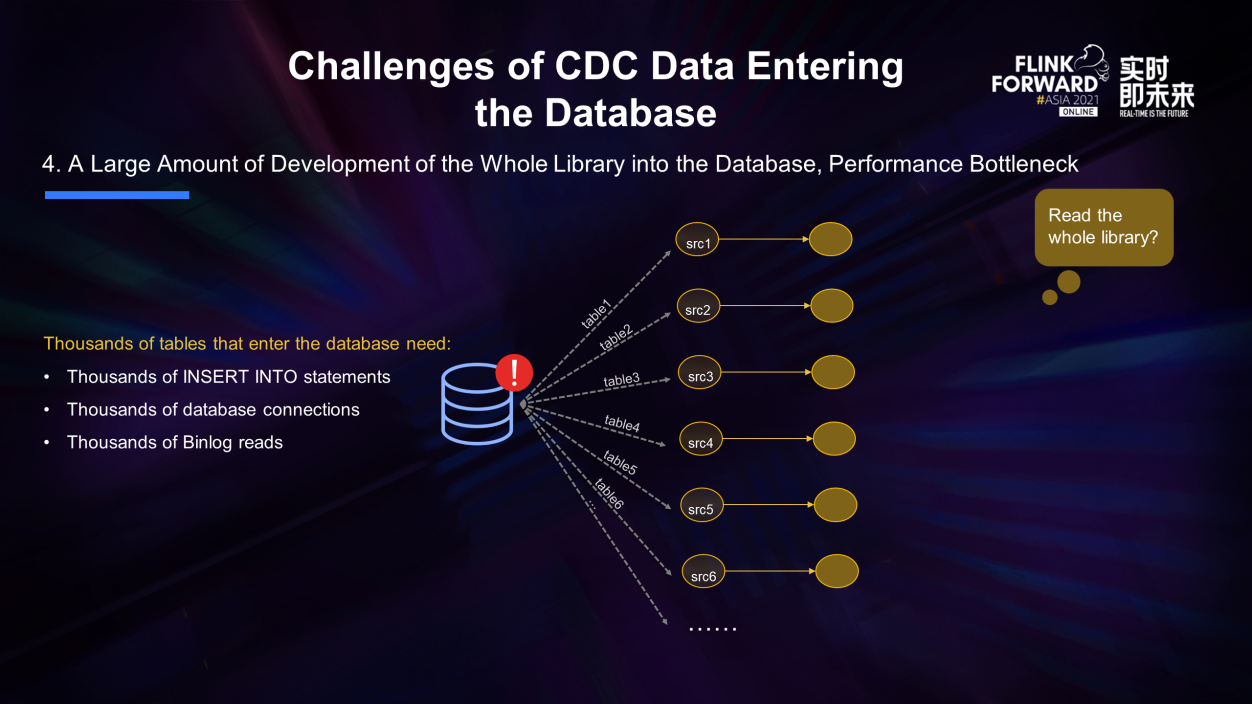

The last challenge is whole-database ingestion. Since users mainly use SQL, they must write an INSERT INTO statement for the synchronization link of each table. Some MySQL instances contain thousands of business tables, which means thousands of INSERT INTO statements. Worse, each INSERT INTO job creates at least one database connection and reads the binlog once. Ingesting the whole database therefore requires thousands of connections and thousands of repeated binlog reads, which puts heavy pressure on MySQL and the network.

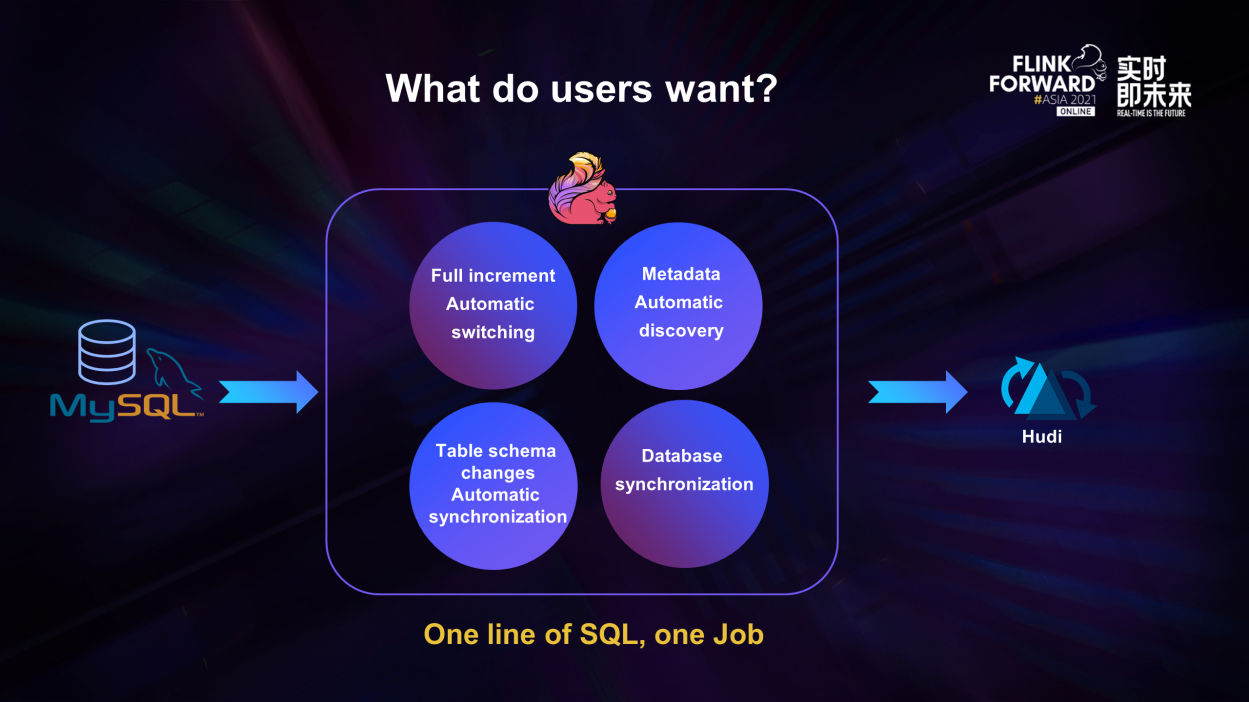

We have just covered many pain points and challenges of ingesting CDC data into the lake. Let's consider them from the users' perspective: what do users want when ingesting a database into the lake? If we treat the data integration system as a black box, what capabilities do users expect it to provide to simplify ingestion?

These four core capabilities make up the data integration system that users expect, and it is even better if only one line of SQL and a single job are needed. We call this black-box system fully automated data integration because it fully automates database ingestion into the lake and solves the core pain points above. Flink is a suitable engine for achieving this goal.

Therefore, we invested significant effort in building this fully automated data integration on Flink, centered on the four capabilities just mentioned.

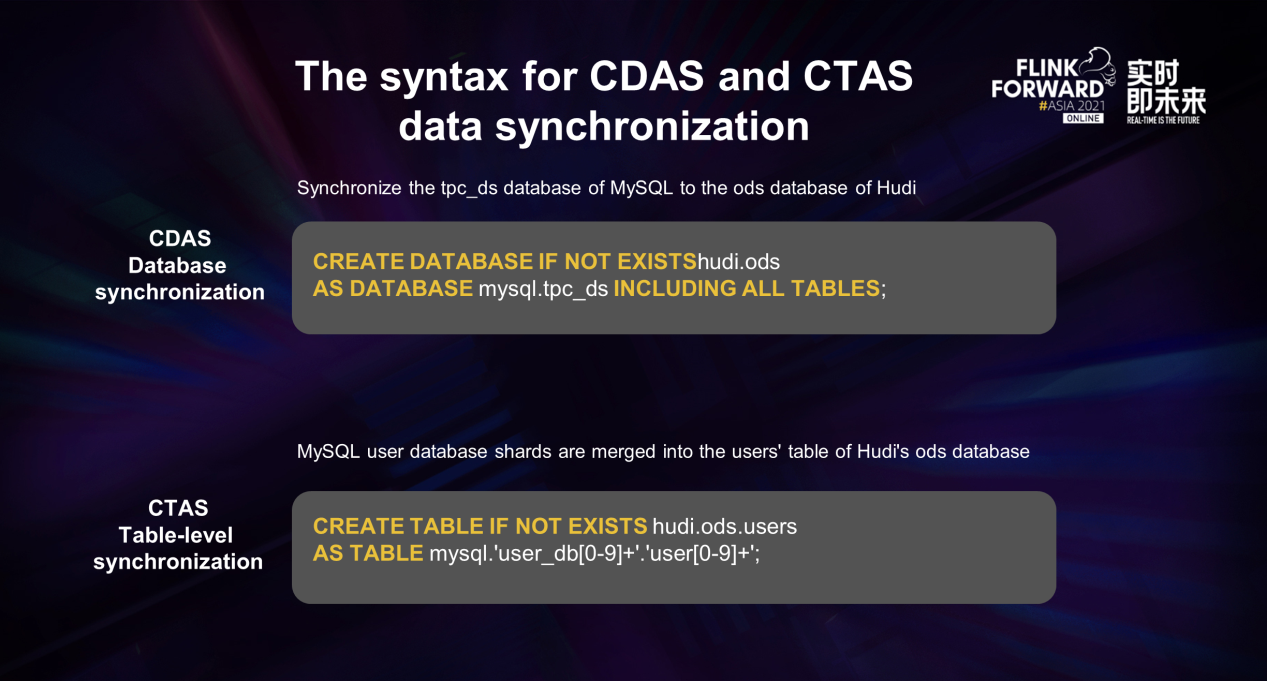

We also introduced the CDAS and CTAS synchronization syntax to support whole-database synchronization. The syntax is simple. CDAS stands for CREATE DATABASE AS DATABASE and is used for whole-database synchronization. The statement shown here synchronizes the tpc_ds database in MySQL to the ods database in Hudi. Similarly, the CTAS syntax makes table-level synchronization easy. Database and table names can also be specified with regular expressions to synchronize sharded databases and tables. In this example, the sharded user databases in MySQL are merged into the users table in Hudi. CDAS and CTAS automatically create the destination tables and then start a Flink job that synchronizes full data, incremental data, and table schema changes in real time.
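The exact CDAS and CTAS syntax is shown in the talk's figure and is not reproduced here. As a rough model of what a CDAS statement plans, the Python sketch below maps every source table to a destination table with the same name, creates only the destination tables that do not exist yet, and runs all tables in one job. `SyncPlan`, `plan_cdas`, and the example table names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SyncPlan:
    create_tables: List[str]       # destination tables to create automatically
    table_mapping: Dict[str, str]  # source table -> destination table
    jobs: int = 1                  # every table is synchronized by a single job


def plan_cdas(source_db: str, source_tables: List[str], target_db: str,
              existing: List[str]) -> SyncPlan:
    """Plan a whole-database sync: one destination table per source table, one job."""
    mapping = {f"{source_db}.{t}": f"{target_db}.{t}" for t in source_tables}
    to_create = [dst for dst in mapping.values() if dst not in existing]
    return SyncPlan(create_tables=to_create, table_mapping=mapping)
```

For the example in the text, `plan_cdas("tpc_ds", tables, "ods", existing)` would map each `tpc_ds` table to an `ods` table of the same name. The user writes one statement instead of one DDL plus one INSERT INTO per table.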

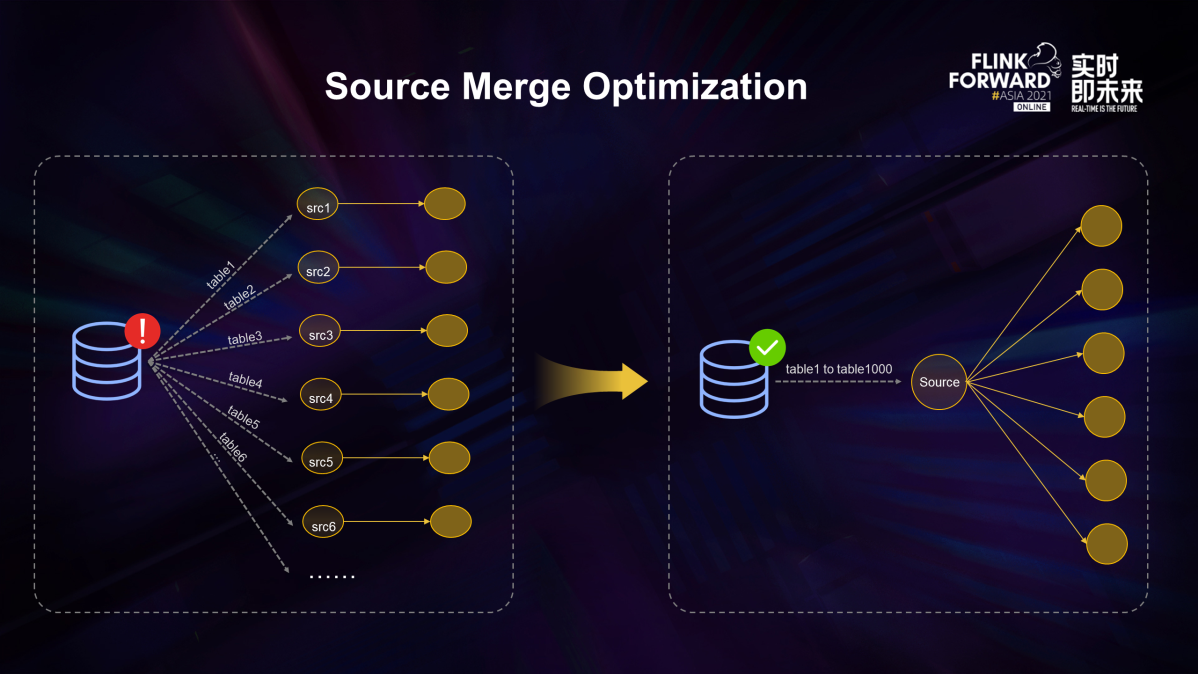

As mentioned earlier, ingesting thousands of tables establishes too many database connections, and the repeated binlog reads put heavy pressure on the source database. To solve this problem, we introduced source merging: we try to merge the sources within the same job, and sources that all read from the same data source are merged into a single source node. The database then needs only one connection, and the binlog is read only once, which enables reading the entire database while reducing the pressure on it.
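The effect of source merging on connection count can be sketched as a grouping step plus routing. The Python below is an illustrative model, not the actual optimizer. It assumes that each table source is identified by a hypothetical `(host, port, "db.table")` tuple and that sources reading the same MySQL instance can share one binlog reader whose events are routed to per-table sinks. The host names are made up.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# One table source as declared by a single INSERT INTO: (host, port, "db.table").
Source = Tuple[str, int, str]


def merge_sources(sources: List[Source]) -> Dict[Tuple[str, int], List[str]]:
    """Group table sources by database instance; each group becomes one source node
    with one connection and one binlog reader."""
    merged: Dict[Tuple[str, int], List[str]] = defaultdict(list)
    for host, port, table in sources:
        merged[(host, port)].append(table)
    return dict(merged)


def route(event_table: str, event: dict, sinks: Dict[str, list]) -> None:
    """Demultiplex the shared binlog stream: deliver each event to its table's sink
    and ignore tables that no job subscribed to."""
    if event_table in sinks:
        sinks[event_table].append(event)
```

With 1,000 tables on one instance and one table on another, `merge_sources` yields two groups, so two connections and two binlog reads replace 1,001 of each.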

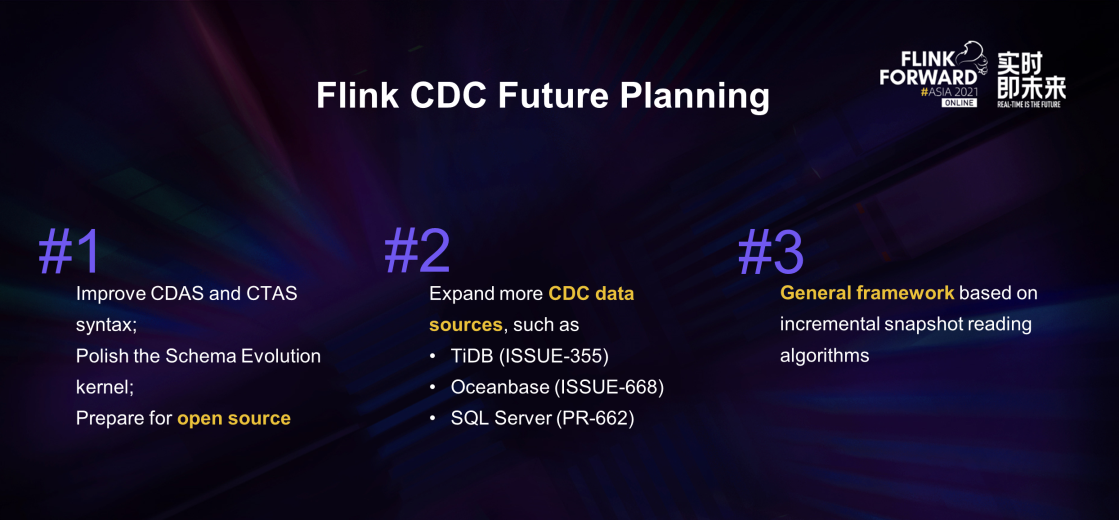

Finally, there are three main plans for the future of Flink CDC.

I also hope that more like-minded people will join and contribute to the Flink CDC open-source community and build a new generation of data integration frameworks together!
