Data lake management has gradually moved toward fine-grained management, which means that computing engines should read and write storage through a standard method instead of accessing it directly. However, the industry has not actually defined such a "standard method", and existing approaches are deeply bound to specific computing engines. Therefore, the ability to support multiple computing engines has become a criterion for evaluating data lakes.

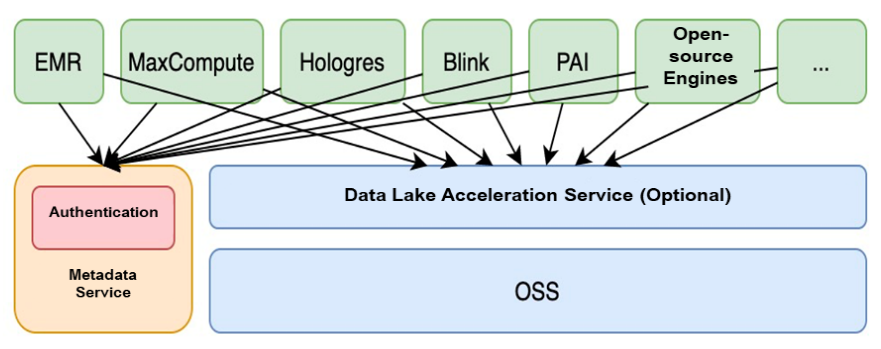

Alibaba Cloud Data Lake Formation (DLF) can connect to a variety of computing engines, including Alibaba Cloud E-MapReduce (EMR), MaxCompute (in development), Blink (in development), Hologres (in development), and PAI (in development), as well as open-source Hive, Spark, and Presto.

Connecting an engine to a data lake involves two parts: metadata and storage. Metadata is shared by all users and is accessible through unified APIs. Each engine uses a customized metadata access client to access metadata. The metadata service provides tenant isolation and authentication for each user. For data storage, users store data in their own OSS buckets, and each engine must be able to access OSS. This is not a problem for Alibaba Cloud services or for most engines that support HDFS storage. On top of OSS, DLF also provides an optional, easy-to-use data lake acceleration service. On the engine side, users only need to replace the OSS access path with the path provided by the acceleration service.
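The path replacement described above can be pictured with a small sketch. The article does not give the format of the accelerated path, so the `ACCELERATED_AUTHORITY` value and the helper `to_accelerated_path` are purely hypothetical; the only point illustrated is that the object key stays the same while the location prefix changes.

```python
from urllib.parse import urlsplit


def to_accelerated_path(oss_path: str, accelerated_authority: str) -> str:
    """Swap the bucket/endpoint part of an oss:// path and keep the object key."""
    parts = urlsplit(oss_path)
    if parts.scheme != "oss" or not parts.netloc:
        raise ValueError(f"not an oss:// path: {oss_path!r}")
    return f"oss://{accelerated_authority}{parts.path}"


# Hypothetical value: the real one would be issued by the acceleration service.
ACCELERATED_AUTHORITY = "my-bucket.accel.example"

table_location = "oss://my-bucket/warehouse/sales/dt=2021-01-01"
accelerated_location = to_accelerated_path(table_location, ACCELERATED_AUTHORITY)
print(accelerated_location)
```

Because only the prefix changes, table definitions and job code that build paths from a single base location would need one configuration change rather than edits throughout.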

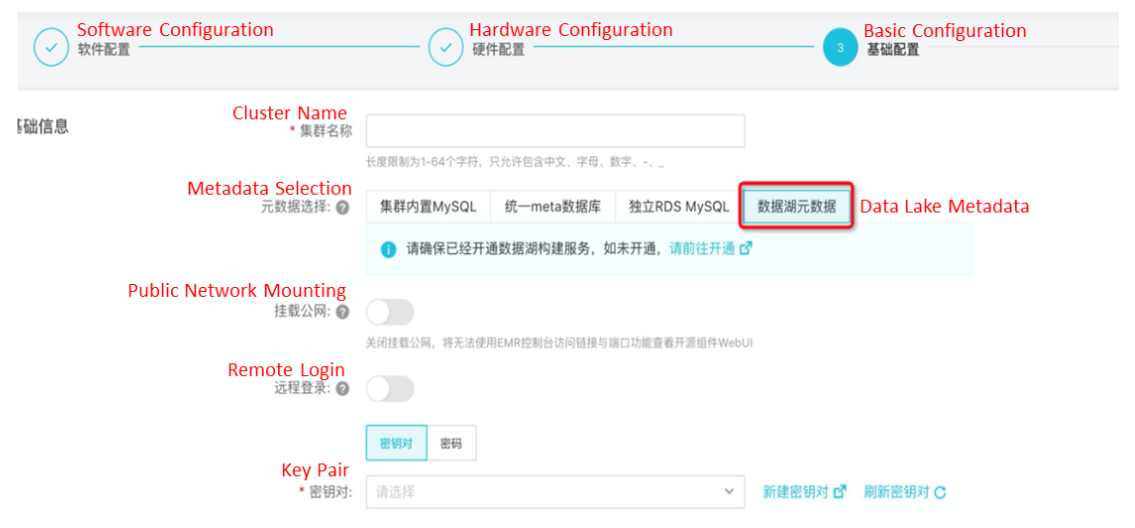

Accessing DLF through EMR is the simplest option. When creating an EMR cluster, users can directly select the DLF metadata service as the metadata database. EMR and DLF are deeply integrated in terms of authentication: once the user has granted authorization, using EMR with the data lake metadata service is much like using a local metadata database. In addition, the DLF-related SDKs are installed automatically when the EMR cluster is created. Spark, Hive, and Presto on EMR all support DLF, so users get the best data lake analysis experience by using the EMR engines.

If you do not choose the data lake metadata service when creating a cluster, you can later move the metadata to the data lake metadata service by modifying the configuration. The data lake is built on OSS, so there is no cross-service authentication issue. EMR has built-in access support for OSS and provides enhanced JindoFS features. As a result, EMR users get better access to data in a data lake than they would through native OSS access.

JindoFS is an OSS-based file system designed for big data workloads, with unique advantages in metadata operations and data caching. For more information about JindoFS, click on the link at the end of this article. For users who are already running EMR, DLF provides a one-click migration tool that transfers metadata from the local metadata database to the data lake metadata service. Users can choose to keep the data in local HDFS or migrate it to OSS.

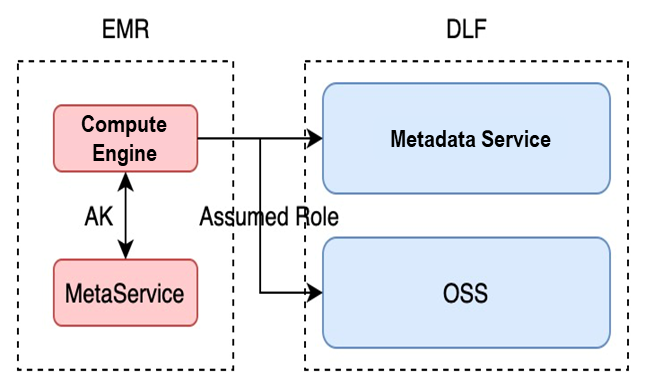

It is worth mentioning that EMR provides AK-free DLF access. Users who have authorized DLF can access the metadata service and OSS data without supplying an AccessKey (AK). This feature depends on the MetaService of EMR. Provided that the user has granted authorization, MetaService answers requests from the local machine with the AK information obtained through Assume Role, which allows the caller to access the target service as the user. AK-free access minimizes the risk of leaking confidential information.
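The AK-free pattern can be sketched as a credential provider that asks a local service for short-lived credentials and refreshes them shortly before they expire. This is a minimal sketch, not the EMR MetaService API: `TemporaryCredentials`, `AkFreeCredentialProvider`, and the `fetch` callable are all hypothetical stand-ins, and the real MetaService request format is not described in this article.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class TemporaryCredentials:
    access_key_id: str
    access_key_secret: str
    security_token: str
    expires_at: float  # epoch seconds


class AkFreeCredentialProvider:
    """Caches temporary credentials and refreshes them before expiry.

    `fetch` stands in for a call to a local metadata service that returns
    role-based temporary credentials; no long-lived AK is stored here.
    """

    def __init__(
        self,
        fetch: Callable[[], TemporaryCredentials],
        refresh_margin: float = 60.0,
        clock: Callable[[], float] = time.time,
    ) -> None:
        self._fetch = fetch
        self._margin = refresh_margin
        self._clock = clock
        self._cached: Optional[TemporaryCredentials] = None

    def get(self) -> TemporaryCredentials:
        remaining = (
            self._cached.expires_at - self._clock() if self._cached else 0.0
        )
        if self._cached is None or remaining <= self._margin:
            self._cached = self._fetch()
        return self._cached


def fake_meta_service() -> TemporaryCredentials:
    # Hypothetical response with placeholder values, valid for one hour.
    return TemporaryCredentials("tmp-id", "tmp-secret", "tmp-token", time.time() + 3600)


provider = AkFreeCredentialProvider(fake_meta_service)
credentials = provider.get()
```

The design choice worth noting is that job code only ever sees credentials that expire, so nothing long-lived needs to be written into configuration files on the cluster.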

Alibaba Cloud services such as MaxCompute, Realtime Compute, Hologres, and PAI use DLF to interconnect their underlying data. To some extent, this lets a single copy of the data satisfy the computing requirements of different scenarios. For example, MaxCompute can directly read data in DLF and work with DataWorks to offer a better data warehouse experience. Realtime Compute can write data to a data lake in real time, which enables stream-batch integrated computing based on the data lake. Hologres handles scenarios that require relatively high performance. It can accelerate the analysis of warm and cold data in a data lake and process warm and hot data with its built-in storage, forming an all-in-one analysis solution that covers the whole data lifecycle from production to archiving. PAI can use a data lake as its data source and store the offline features produced by other engines in the data lake for modeling.

DLF provides various methods for quickly connecting open-source services to a data lake. However, this may require patching the open-source code so that access to the data lake metadata is embedded as a plug-in. For data access, OSSFileSystem, which the EMR team contributed to the Hadoop community, can read and write users' OSS storage. Therefore, as long as an engine can read and write HDFS, it can read and write OSS. Access control for both metadata and OSS can be managed with Alibaba Cloud RAM, which keeps the user experience consistent.
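As a hedged illustration of what "read and write OSS like HDFS" can look like in configuration, the sketch below builds the Hadoop properties for an `oss://` file system and prefixes them for Spark. The class name and `fs.oss.*` keys are those of the Apache Hadoop `hadoop-aliyun` module as best I recall them, and they may differ from the setup the article has in mind; the endpoint is only an example value, and the helper names are hypothetical.

```python
from typing import Dict, Optional

# File system class from the Apache Hadoop hadoop-aliyun module (assumed here).
OSS_FS_IMPL = "org.apache.hadoop.fs.aliyun.oss.AliyunOSSFileSystem"


def oss_hadoop_conf(
    endpoint: str,
    access_key_id: Optional[str] = None,
    access_key_secret: Optional[str] = None,
) -> Dict[str, str]:
    """Build Hadoop properties that map the oss:// scheme to the OSS file system."""
    conf = {"fs.oss.impl": OSS_FS_IMPL, "fs.oss.endpoint": endpoint}
    # Static keys are optional in this sketch; omit them when credentials
    # are supplied some other way.
    if access_key_id and access_key_secret:
        conf["fs.oss.accessKeyId"] = access_key_id
        conf["fs.oss.accessKeySecret"] = access_key_secret
    return conf


def as_spark_conf(hadoop_conf: Dict[str, str]) -> Dict[str, str]:
    """Spark forwards properties prefixed with spark.hadoop. to Hadoop."""
    return {f"spark.hadoop.{key}": value for key, value in hadoop_conf.items()}


spark_conf = as_spark_conf(oss_hadoop_conf("oss-cn-hangzhou.aliyuncs.com"))
for key, value in sorted(spark_conf.items()):
    print(f"{key}={value}")
```

With properties like these in place, an engine would address data with `oss://bucket/path` locations in the same places where it would otherwise use `hdfs://` locations.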

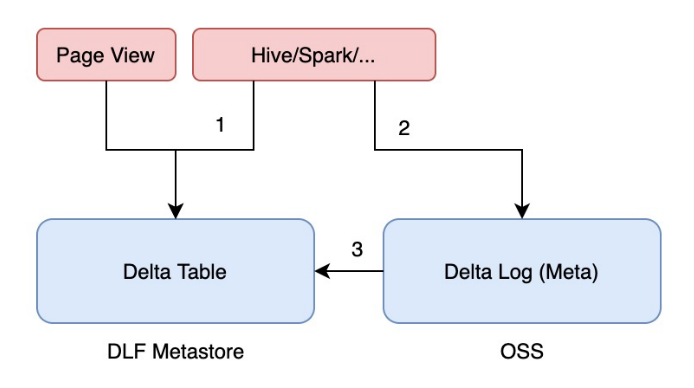

Beyond connecting to computing engines, the data lake must also handle specific table formats that some engines can read and write, such as Delta Lake, Hudi, and Iceberg. DLF does not restrict which storage formats users choose. However, since these table formats have their own metadata, users still need to do extra engine connection work. Taking Delta Lake as an example, its metadata is stored both in OSS and in the metadata service. This leads to two problems. First, which copy of the metadata should the engine read? Second, if both copies are necessary, how can they be kept consistent?

For the first problem, the engine reads the metadata in OSS if the components are open source. It is still important to save metadata in the metadata service, because it can be used for page display and avoids the heavy performance cost of parsing the metadata in OSS. For the second problem, DLF has developed a hook tool. Whenever Delta Lake commits, the hook is triggered automatically to synchronize the metadata in OSS to the metadata service. The Delta Lake metadata design also allows multiple engines, such as Spark, Hive, and Presto, to share a single copy of the metadata. Unlike with open-source products, users do not have to maintain separate metadata for each engine.

As the figure shows, the Delta Table in the metadata service is a mirror of the Delta Log in OSS and is kept in sync through the commit hook (step 3). The Delta Table is used for page display and supplies the information that Hive or Spark needs, such as the table path and InputFormat/OutputFormat (step 1). After the engine obtains this information, it reads the Delta metadata under the table path and completes metadata parsing and data reading (step 2). After the engine finishes its data operations and commits, the Delta Log is synchronized to the Delta Table through the commit hook (step 3). This completes one cycle.
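The three-step cycle can be sketched with in-memory stand-ins. This is a simplified model under stated assumptions, not DLF's hook tool or the Delta Lake API: `MetadataService`, `TableEntry`, `DeltaLog`, and `make_sync_hook` are hypothetical names, a dictionary plays the role of OSS, and the format names are placeholders.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TableEntry:
    """Mirror of a Delta table kept in the metadata service."""
    path: str
    input_format: str
    output_format: str
    version: int = -1  # -1 means no commit has been mirrored yet


class MetadataService:
    def __init__(self) -> None:
        self.tables: Dict[str, TableEntry] = {}


@dataclass
class DeltaLog:
    """Stand-in for the transaction log stored under the table path."""
    path: str
    commits: List[dict] = field(default_factory=list)
    hooks: List[Callable[["DeltaLog"], None]] = field(default_factory=list)

    def commit(self, actions: dict) -> int:
        self.commits.append(actions)
        for hook in self.hooks:  # step 3: run hooks after every commit
            hook(self)
        return len(self.commits) - 1


def make_sync_hook(service: MetadataService, table: str) -> Callable[[DeltaLog], None]:
    def sync(log: DeltaLog) -> None:
        # Copy the latest log version into the metadata service mirror.
        service.tables[table].version = len(log.commits) - 1
    return sync


service = MetadataService()
service.tables["sales"] = TableEntry(
    path="oss://my-bucket/warehouse/sales",
    input_format="ExampleInputFormat",    # placeholder name
    output_format="ExampleOutputFormat",  # placeholder name
)
oss: Dict[str, DeltaLog] = {"oss://my-bucket/warehouse/sales": DeltaLog("oss://my-bucket/warehouse/sales")}
oss["oss://my-bucket/warehouse/sales"].hooks.append(make_sync_hook(service, "sales"))

entry = service.tables["sales"]                          # step 1: look up path and formats
log = oss[entry.path]                                    # step 2: read the log under the path
committed = log.commit({"add": "part-0000.parquet"})     # step 3: commit triggers the hook
print(service.tables["sales"].version == committed)
```

Keeping the synchronization inside the commit path means the mirror is updated as part of every write, rather than depending on a separate periodic job.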

The multi-engine support for DLF is still under development in many aspects. In the future, Alibaba Cloud will continue to work on the following aspects:
