By Zhou Bo (Senior Development Engineer of Alibaba Cloud Intelligence and Apache RocketMQ Committer)

We often see RocketMQ in e-commerce, financial, and logistics systems, and it is easy to see why. As digital transformation expands in scope and accelerates, the volume of data in business systems grows every day. The system needs something to absorb that load so that it keeps running stably, and RocketMQ plays this role. Its asynchronous messaging and high-concurrency read/write capabilities ensure that changes to the underlying systems do not affect the functions of upper-layer applications. Another advantage of RocketMQ is scalability, which lets the system buffer traffic when traffic is unpredictable. In addition, RocketMQ's sequential design makes it a natural queuing engine. For example, when three applications send requests to a backend engine at the same time, RocketMQ queues the requests so that the engine is not overwhelmed and does not crash. Therefore, RocketMQ is used in scenarios such as asynchronous decoupling, peak shaving, and transactional messages.

However, digital transformation has also turned more users' attention to the value of their data. How can we make greater use of it? RocketMQ itself cannot analyze data, yet many users want to take data from RocketMQ topics and analyze it online or offline. One feasible solution is to use commercially available data integration or data synchronization tools to synchronize RocketMQ topic data to an analysis system. However, this introduces new components, which lengthens the data synchronization link, increases latency, and degrades the user experience.

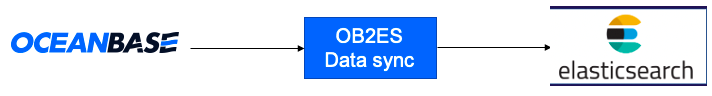

For example, if you use OceanBase as a data store in a business scenario and want to synchronize the data to Elasticsearch for full-text search, there are two feasible data synchronization solutions.

Solution 1: Read data from OceanBase and write it directly to Elasticsearch. This solution works when there are only a few data sources. As the number of data sources grows, however, development and maintenance become complex, so a second solution is needed.

Solution 2: Introduce message-oriented middleware to decouple the upstream and downstream components. This solves the problems of the first solution, but some complex problems remain. For example, how can we synchronize data from the source system to the target system with high performance? How can we ensure that synchronization continues normally when some nodes running the synchronization task go down? How can the task resume from where it left off once those nodes recover? At the same time, as the number of data pipelines grows, managing them becomes difficult.

There are five main challenges in the data integration process:

Challenge 1 – Multiple Data Sources: There may be hundreds of data sources on the market, and they differ immensely from one another. Therefore, synchronizing data between any two data sources takes a lot of work, and the research and development cycle is long.

Challenge 2 – High-Performance Issue: How can we efficiently synchronize data from the source data system to the target data system and ensure high performance?

Challenge 3 – High Availability/Failover Capability: When a node goes down, do its tasks stop, or can they keep running elsewhere and resume from the last synchronized position?

Challenge 4 – Auto Scaling Ability: The system should be able to add or remove nodes dynamically based on traffic. It scales out to meet business requirements during peak hours and scales in during off-peak hours to reduce costs.

Challenge 5 – Management and Maintenance of Data Pipelines: As the number of data pipelines grows, operating and monitoring them becomes more complex. How can we efficiently manage and monitor a large number of synchronization tasks?

RocketMQ Connect addresses these challenges in three ways. First, it standardizes the data integration API (the OpenMessaging Connect API) by adding the Connect component to the RocketMQ ecosystem. On the one hand, the API abstracts the data integration process, a standard data format, and the schemas that describe data. On the other hand, it abstracts synchronization tasks, so that task creation and sharding follow a standardized process.

Second, Connect Runtime is implemented on top of this standard API. Runtime provides cluster management, configuration management, offset management, and load balancing. With these capabilities in place, developers or users only need to focus on how to read or write data to quickly build a data ecosystem. For example, they can quickly connect OceanBase, MySQL, and Elasticsearch and build a data integration platform. Building the entire platform is simple: it can be done with simple calls to the RESTful API provided by Runtime.

Third, it provides comprehensive operation and maintenance tools for managing synchronization tasks. These include rich metrics, such as the TPS and traffic of each synchronization task, and convenient ways to view them.
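The first point above says the API standardizes a data format together with schemas that describe the data. To make that idea concrete, here is a minimal sketch of such a data model. It is written in Python for brevity. `Field`, `Schema`, `ConnectRecord`, and the type names `INT64`/`STRING`/`BOOLEAN` are hypothetical illustrations, not the real OpenMessaging Connect classes, which are Java and differ in detail.

```python
from dataclasses import dataclass
from typing import Any, Dict, List

# Hypothetical, simplified data model: a record carries its data together with
# a schema describing it, plus a source position used for resuming.
# These classes are illustrative and are NOT the OpenMessaging Connect API.

# Maps the illustrative schema type names to Python types.
PY_TYPES = {"INT64": int, "STRING": str, "BOOLEAN": bool}


@dataclass(frozen=True)
class Field:
    name: str
    type: str  # one of the keys of PY_TYPES


@dataclass
class Schema:
    name: str
    fields: List[Field]

    def validate(self, data: Dict[str, Any]) -> bool:
        """True if data has exactly the declared fields, each of the declared type."""
        if set(data) != {f.name for f in self.fields}:
            return False
        # `type(...) is` rather than isinstance, so True is not accepted as an INT64.
        return all(type(data[f.name]) is PY_TYPES[f.type] for f in self.fields)


@dataclass
class ConnectRecord:
    partition: Dict[str, str]  # which part of the source, e.g. {"table": "orders"}
    offset: Dict[str, int]     # position within that partition
    schema: Schema
    data: Dict[str, Any]
```

Because every record carries its schema, a sink can check or interpret the data without knowing which source produced it.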

Here are two scenarios of RocketMQ Connect:

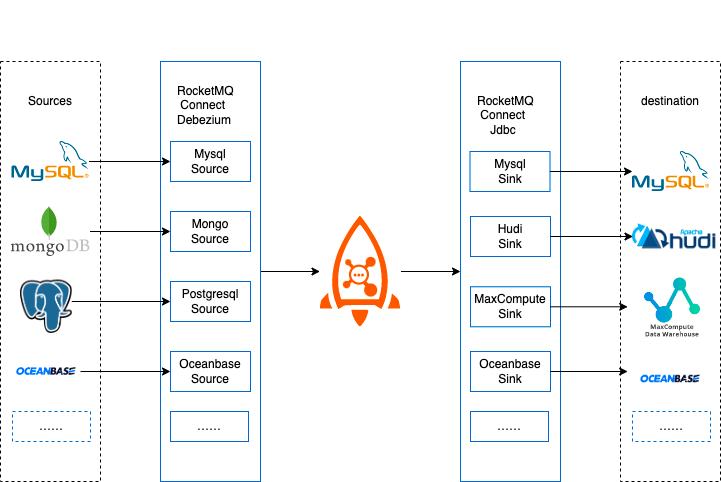

Scenario 1: RocketMQ serves as an intermediate medium that connects upstream and downstream data. Consider a migration from an old system to a new one. MySQL can meet your business requirements while your business volume is small. However, as the business grows, MySQL's performance can no longer keep up. At this point, you should upgrade your system to OceanBase (a distributed database) to improve its performance.

How can we seamlessly migrate data from the old system to OceanBase? RocketMQ Connect can build a data pipeline from MySQL to OceanBase to enable smooth data migration. RocketMQ Connect can also be used to build data lakes, search engines, ETL platforms, and more. For example, once data from various data sources is integrated into a RocketMQ topic, you only need to set Elasticsearch as the target storage to build a search platform. If the target storage is a data lake, you can build a data lake platform.

In addition, RocketMQ itself can serve as a data source, so data can be synchronized from one RocketMQ cluster to another. This enables multi-active disaster recovery for RocketMQ, which is what the Replicator being incubated by the community does.

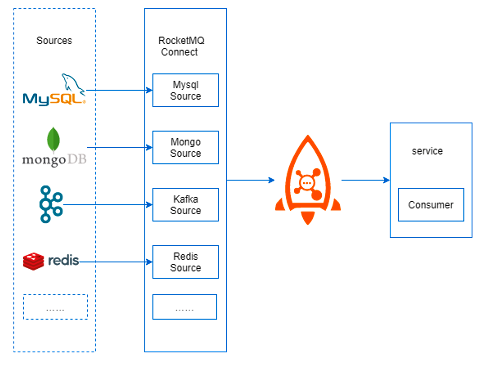

Scenario 2: RocketMQ serves as the endpoint. The RocketMQ ecosystem provides a stream computing component, RocketMQ Streams. Connectors integrate data from each storage system into RocketMQ topics, and downstream consumers can use RocketMQ Streams to build a real-time stream computing platform. This setup can also work with the business system's services, so that they can quickly obtain data from other storage systems.

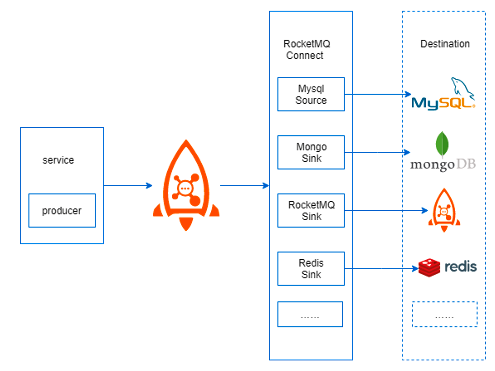

You can also make RocketMQ the upstream endpoint: send business messages to topics and use Connectors to persist the data to storage systems.

As a result, RocketMQ gains the data integration capability to synchronize data between any two heterogeneous data sources. It offers unified cluster management, monitoring, and configurable data pipeline construction. Developers or users only need to focus on how data is copied. With simple configuration, they get a configurable, low-code, low-latency, and highly available data integration platform that supports failover and auto scaling.

How is RocketMQ Connect implemented?

Before introducing the implementation principle, let's review two concepts:

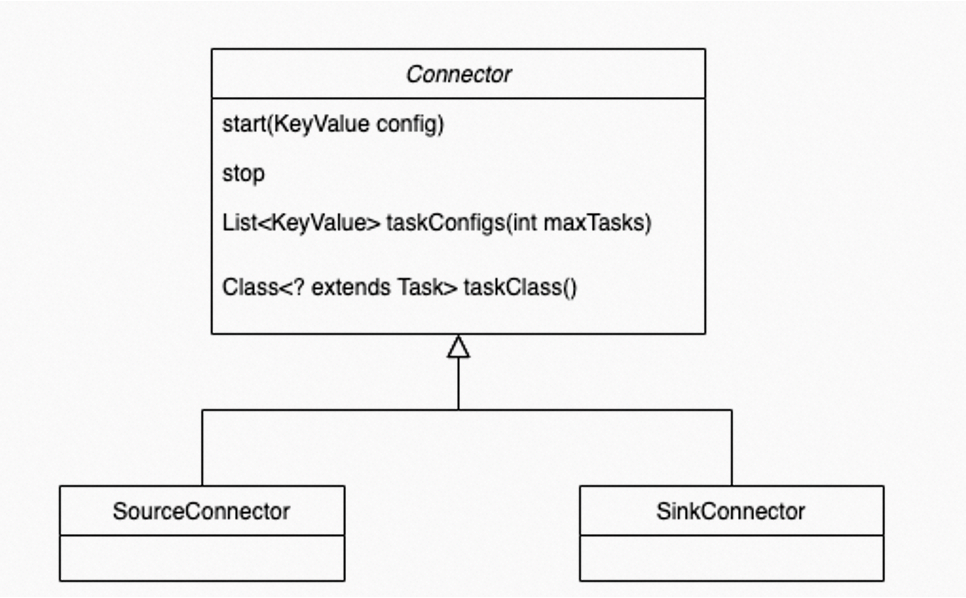

Concept 1: What Is a Connector? A Connector defines where data is copied from and where it is copied to. If it reads data from a source system and writes it to RocketMQ, it is a SourceConnector. If it reads data from RocketMQ and writes it to a target system, it is a SinkConnector. The Connector determines how many tasks to create, and it passes the configuration it receives from the Worker on to those tasks.
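A minimal sketch of the Connector's role, assuming a simplified contract: the Connector names its Task type and turns one user configuration into one configuration per Task. `Connector`, `SourceConnector`, `SinkConnector`, `task_class`, `task_configs`, `TableSourceConnector`, and the `tables` key are hypothetical Python stand-ins inspired by the concepts above, not the real Java API. Splitting work by table is just one possible sharding choice.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Type

# Hypothetical, simplified contract; the real OpenMessaging Connect API differs.

class Task(ABC):
    @abstractmethod
    def start(self, config: Dict[str, str]) -> None: ...

class Connector(ABC):
    def start(self, config: Dict[str, str]) -> None:
        # The Worker hands the user-supplied configuration to the Connector.
        self.config = dict(config)

    @abstractmethod
    def task_class(self) -> Type[Task]:
        """The Task type that does the actual copying."""

    @abstractmethod
    def task_configs(self, max_tasks: int) -> List[Dict[str, str]]:
        """One config per Task; the list length decides how many Tasks run."""

class SourceConnector(Connector, ABC):
    """Direction: external system -> RocketMQ."""

class SinkConnector(Connector, ABC):
    """Direction: RocketMQ -> external system."""

class TableSourceTask(Task):
    def start(self, config: Dict[str, str]) -> None:
        self.tables = config["tables"].split(",")

class TableSourceConnector(SourceConnector):
    def task_class(self) -> Type[Task]:
        return TableSourceTask

    def task_configs(self, max_tasks: int) -> List[Dict[str, str]]:
        # Shard by table: never create more Tasks than there are tables.
        tables = self.config["tables"].split(",")
        n = min(max_tasks, len(tables))
        groups = [tables[i::n] for i in range(n)]
        # Every Task inherits the shared settings plus its own table subset.
        return [{**self.config, "tables": ",".join(g)} for g in groups]
```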

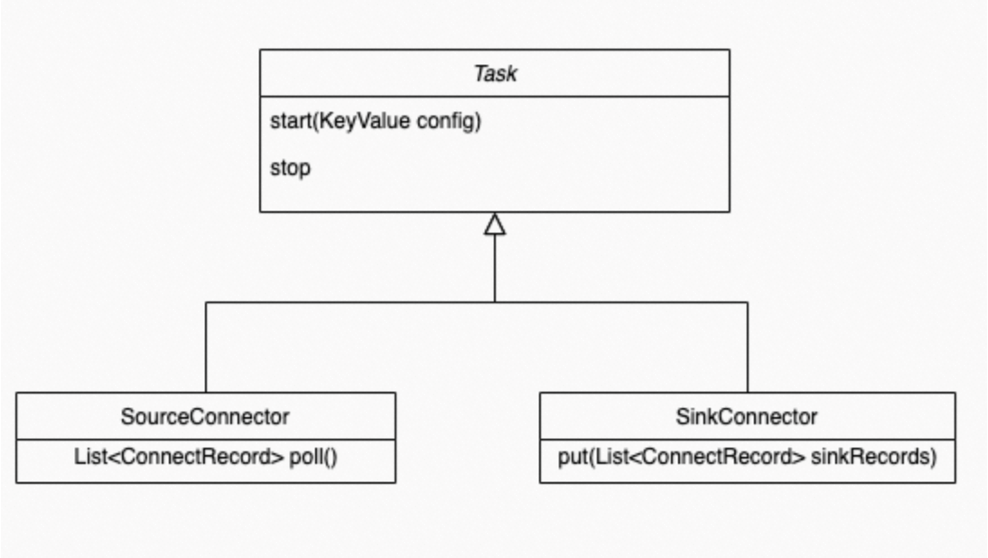

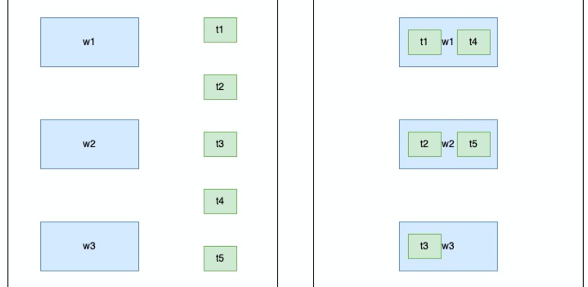

Concept 2: What Is a Task? A Task is the smallest unit into which a Connector's work is split and assigned. It is the actual executor that copies data from the source system to RocketMQ (SourceTask) or from RocketMQ to the target system (SinkTask). Tasks are stateless and can be started and stopped dynamically. Multiple Tasks can run in parallel, and a Connector's parallelism in copying data comes mainly from its Tasks. A Task can be thought of as a thread, and multiple Tasks run as multiple threads.
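To illustrate "a Task can be thought of as a thread", here is a minimal sketch in which each hypothetical `CopyTask` copies only its own shard on its own thread. A thread-safe `queue.Queue` stands in for the RocketMQ topic. `CopyTask` and `run_in_parallel` are illustrative names, and a real runtime would also track each Task's position externally.

```python
import queue
import threading
from typing import List

# Hypothetical sketch: each Task copies only its own shard and holds no
# durable state itself, so a runtime could start, stop, or move it freely.

class CopyTask:
    def __init__(self, shard: List[str], sink: "queue.Queue[str]"):
        self.shard = shard
        self.sink = sink

    def run(self) -> None:
        for row in self.shard:
            self.sink.put(row)  # stand-in for "send to RocketMQ"

def run_in_parallel(shards: List[List[str]]) -> List[str]:
    sink: "queue.Queue[str]" = queue.Queue()
    # One thread per Task: parallelism comes from the number of shards.
    threads = [threading.Thread(target=CopyTask(s, sink).run) for s in shards]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    out = []
    while not sink.empty():
        out.append(sink.get())
    return out
```

Records from different Tasks may interleave in any order, which is why ordering guarantees in such systems are usually defined per shard rather than globally.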

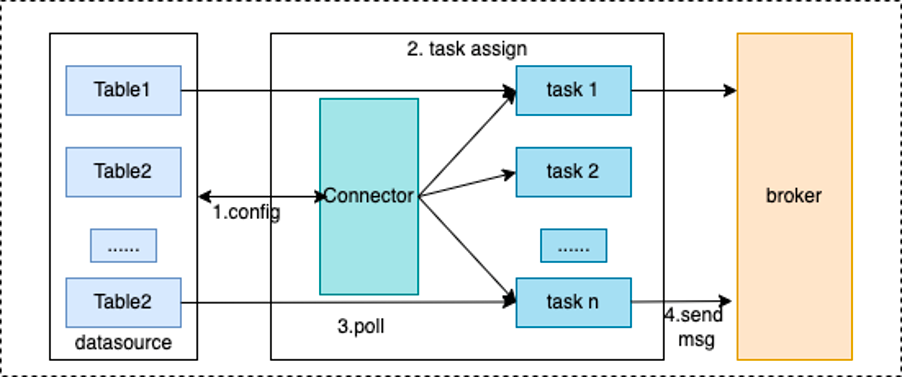

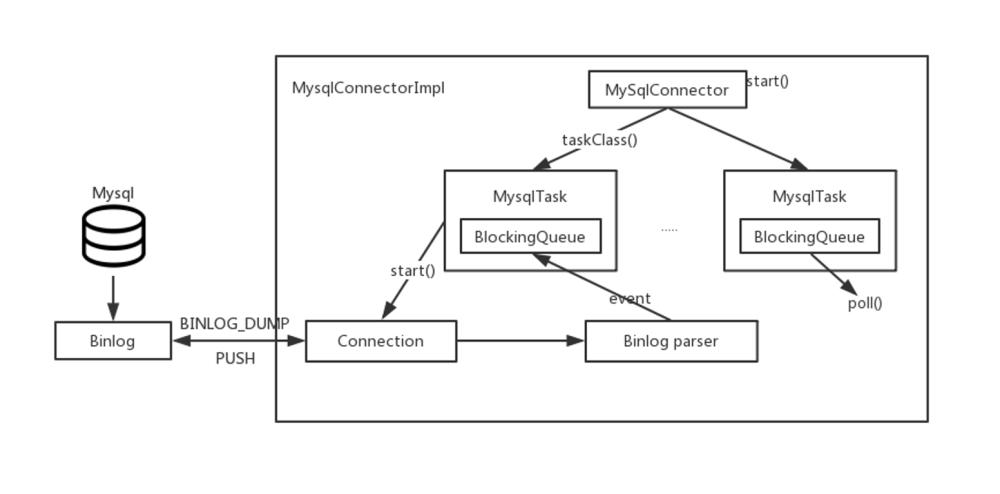

The Connect API shows the respective responsibilities of Connector and Task. A Connector's implementation fixes the direction of data replication. The Connector receives the configuration related to the data source. Its taskClass specifies the type of Task to create. The number of Tasks is determined by the number of configurations returned by taskConfigs, and each configuration is assigned to one Task. After a Task receives its configuration, it connects to the data source and copies data to the target storage. The following two figures show the basic processes of Connector and Task.

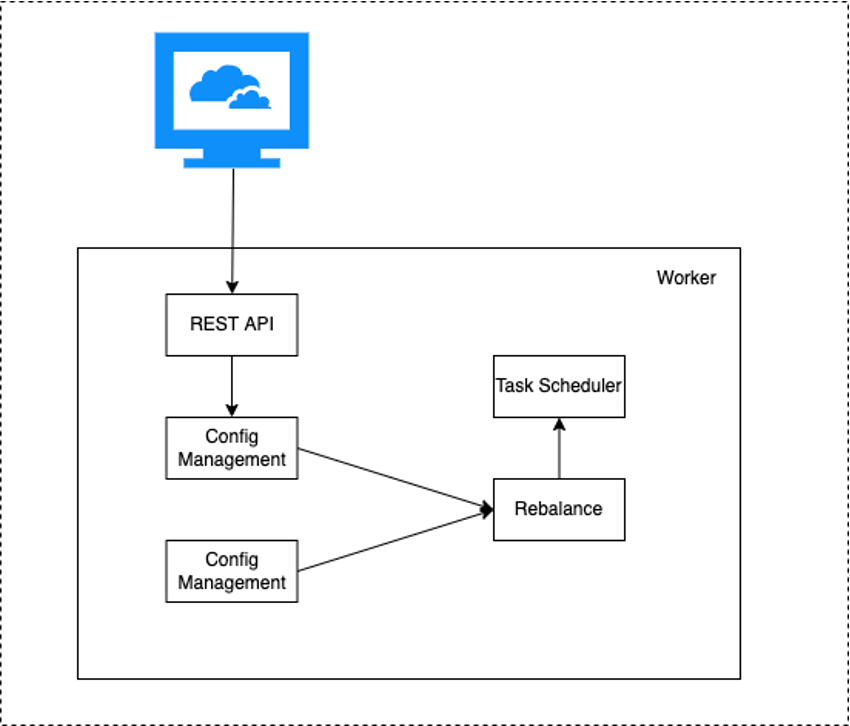

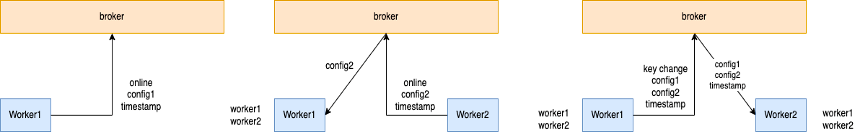

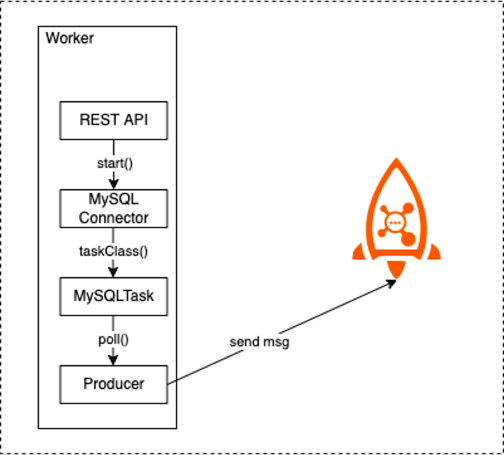
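The division of labor described above can be sketched from the runtime's side. The runtime asks the Connector for its Task type and its list of Task configurations, then instantiates and starts one Task per configuration. `DemoConnector`, `EchoTask`, `start_connector`, and the `shard` key are hypothetical names for illustration, not Runtime internals.

```python
from typing import Dict, List, Type

# Hypothetical runtime-side sketch: one Task instance per config returned
# by the Connector, using the Task type the Connector declares.

class EchoTask:
    def __init__(self) -> None:
        self.config: Dict[str, str] = {}

    def start(self, config: Dict[str, str]) -> None:
        # A real Task would open a connection to its data source here.
        self.config = config

class DemoConnector:
    def __init__(self, config: Dict[str, str]):
        self.config = config

    def task_class(self) -> Type[EchoTask]:
        return EchoTask

    def task_configs(self, max_tasks: int) -> List[Dict[str, str]]:
        # Each Task gets the shared config plus its own shard id.
        return [{**self.config, "shard": str(i)} for i in range(max_tasks)]

def start_connector(connector: DemoConnector, max_tasks: int) -> List[EchoTask]:
    tasks = []
    for cfg in connector.task_configs(max_tasks):
        task = connector.task_class()()  # instantiate the declared Task type
        task.start(cfg)
        tasks.append(task)
    return tasks
```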

A RocketMQ Connect cluster contains multiple Connectors, and each Connector corresponds to one or more Tasks. These Tasks run in Worker processes. The Worker process is the runtime environment of Connectors and Tasks. It exposes a RESTful API, receives HTTP requests, and passes the configuration it obtains to Connectors and Tasks. It is responsible for starting Connectors and Tasks, saving Connector configuration, and saving the offsets of the data each Task has synchronized. In addition, the Worker provides load balancing, on which the high availability, scalability, and failover of the Connect cluster mainly depend. The process by which a Worker provides services is shown below:

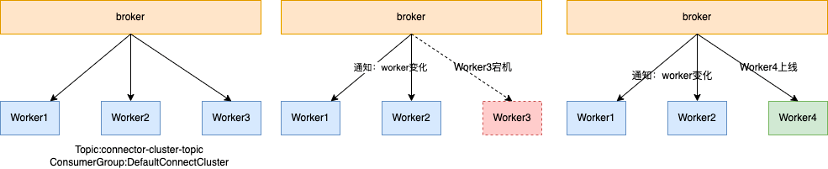

The Worker implements service discovery and load balancing as follows:

Developers who have used RocketMQ know that it is simple to use: it sends and receives messages. Consumption comes in two modes, clustering consumption and broadcast consumption. In clustering mode, multiple Consumers in the same Consumer Group share the consumption of a Topic's messages. The RocketMQ broker detects when any Consumer in the group goes online or offline and notifies the other clients in the group. This feature makes Worker service discovery possible.
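The following minimal sketch shows membership detection of this kind. It is a simplified, heartbeat-based stand-in for the broker tracking which consumers in a group are online, not the broker's actual implementation. `GroupMembership`, `heartbeat`, `tick`, and the timeout value are hypothetical. Whenever the set of live members changes, every listener is notified so it can trigger a rebalance.

```python
from typing import Callable, Dict, List

# Hypothetical heartbeat-based membership tracker; a simplified stand-in for
# the broker noticing consumers going online/offline and notifying the group.

class GroupMembership:
    def __init__(self, timeout: float):
        self.timeout = timeout                      # seconds without heartbeat => offline
        self.last_seen: Dict[str, float] = {}
        self.listeners: List[Callable[[List[str]], None]] = []
        self._live: List[str] = []

    def heartbeat(self, worker_id: str, now: float) -> None:
        self.last_seen[worker_id] = now
        self._refresh(now)

    def tick(self, now: float) -> None:
        # Called periodically so that silent members time out.
        self._refresh(now)

    def _refresh(self, now: float) -> None:
        live = sorted(w for w, t in self.last_seen.items() if now - t <= self.timeout)
        if live != self._live:                      # only notify on an actual change
            self._live = live
            for notify in self.listeners:
                notify(live)
```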

All Workers subscribe to the same Topic, which stores Connector configuration and offset information, but each Worker uses its own Consumer Group. As a result, every Worker consumes all of that Topic's data, much like broadcast consumption, so Connector configuration and offsets are synchronized across Workers. When any Worker fails, its tasks can therefore be restarted and run normally on the surviving Workers. Those Workers can look up the offsets of the tasks and resume synchronization from where the tasks left off, which is the basis for failover and high availability.
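A minimal sketch of this broadcast-style synchronization follows. An append-only Python list stands in for the shared configuration/offset Topic, and each hypothetical `Worker` reads it with its own cursor, playing the role of its own Consumer Group. `SharedTopic`, `Worker`, `sync`, and `resume_position` are illustrative names, not RocketMQ Connect internals.

```python
from typing import Dict, List, Tuple

# Hypothetical sketch: an append-only list stands in for the shared
# config/offset Topic. Each Worker reads it with its OWN cursor (like its own
# Consumer Group), so every Worker ends up holding the full state.

Entry = Tuple[str, str, object]  # (kind, key, value); kind is "config" or "offset"

class SharedTopic:
    def __init__(self) -> None:
        self.log: List[Entry] = []

    def publish(self, entry: Entry) -> None:
        self.log.append(entry)

class Worker:
    def __init__(self, name: str, topic: SharedTopic):
        self.name = name
        self.topic = topic
        self.cursor = 0  # this Worker's private consume position
        self.configs: Dict[str, object] = {}
        self.offsets: Dict[str, object] = {}

    def sync(self) -> None:
        # Apply every entry not yet seen; later entries overwrite earlier ones.
        for kind, key, value in self.topic.log[self.cursor:]:
            (self.configs if kind == "config" else self.offsets)[key] = value
        self.cursor = len(self.topic.log)

    def resume_position(self, task_id: str) -> object:
        # Where a task taken over from a failed Worker continues from.
        return self.offsets.get(task_id, 0)
```

Because both Workers replay the same log, either one can take over a task and continue from the last recorded offset.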

In RocketMQ consumption, load balancing happens between consumer clients and Topic queues. Connect works similarly, except that it balances different objects: it distributes Tasks across Worker nodes. As with the RocketMQ client, you can choose different load balancing algorithms to suit your scenario.
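Here are two illustrative allocation strategies for assigning Tasks to Workers. One gives each Worker a contiguous block of Tasks, and the other deals Tasks out in a circle. They are similar in spirit to the averaging and circular strategies the RocketMQ client offers for queues. The function names and the `STRATEGIES` table are hypothetical. Both sort their inputs, so every Worker computes the same result independently from the same membership view.

```python
from typing import Callable, Dict, List

# Hypothetical allocation strategies mapping Tasks onto Workers.

def allocate_averagely(workers: List[str], tasks: List[str]) -> Dict[str, List[str]]:
    """Contiguous blocks; the first (len(tasks) % len(workers)) Workers get one extra."""
    if not workers:
        return {}
    workers, tasks = sorted(workers), sorted(tasks)
    base, extra = divmod(len(tasks), len(workers))
    result, start = {}, 0
    for i, w in enumerate(workers):
        size = base + (1 if i < extra else 0)
        result[w] = tasks[start:start + size]
        start += size
    return result

def allocate_by_circle(workers: List[str], tasks: List[str]) -> Dict[str, List[str]]:
    """Round-robin: Task i goes to Worker i % len(workers)."""
    if not workers:
        return {}
    workers, tasks = sorted(workers), sorted(tasks)
    result: Dict[str, List[str]] = {w: [] for w in workers}
    for i, t in enumerate(tasks):
        result[workers[i % len(workers)]].append(t)
    return result

# Pluggable, as the text describes: pick a strategy by name.
STRATEGIES: Dict[str, Callable[[List[str], List[str]], Dict[str, List[str]]]] = {
    "averagely": allocate_averagely,
    "circle": allocate_by_circle,
}
```

When a Worker joins or leaves, rerunning the chosen strategy over the new membership yields the new assignment.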

As mentioned above, RocketMQ Connect provides a RESTful API, which lets you create Connectors, manage them, and view their status.
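As an illustration of calling such a REST API from a client, the sketch below builds (but does not send) an HTTP request to create a Connector, using only Python's standard library. The host, port, `/connectors/{name}` path, connector name, and the `connector.class`/`max.tasks` keys are placeholders chosen for illustration. Check the RocketMQ Connect documentation for your version's actual endpoints and property names.

```python
import json
import urllib.request
from typing import Dict

# Hypothetical sketch: URL layout and config keys are placeholders, not the
# documented RocketMQ Connect API. The request is built but not sent.

def build_create_request(base_url: str, name: str,
                         config: Dict[str, str]) -> urllib.request.Request:
    body = json.dumps(config).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/connectors/{name}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = build_create_request(
    "http://localhost:8082",                 # placeholder Worker address
    "mysql-to-rocketmq",                     # placeholder connector name
    {"connector.class": "com.example.MySQLSourceConnector",  # hypothetical class
     "max.tasks": "2"},
)
# urllib.request.urlopen(req) would send it to a running Worker.
```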

Currently, RocketMQ Connect supports two deployment modes: standalone and cluster. Cluster mode requires at least two nodes to ensure high availability. Nodes can be added to or removed from the cluster dynamically, which improves cluster performance when traffic rises and saves costs when it falls. Standalone mode makes it easier for developers to develop and test Connectors.

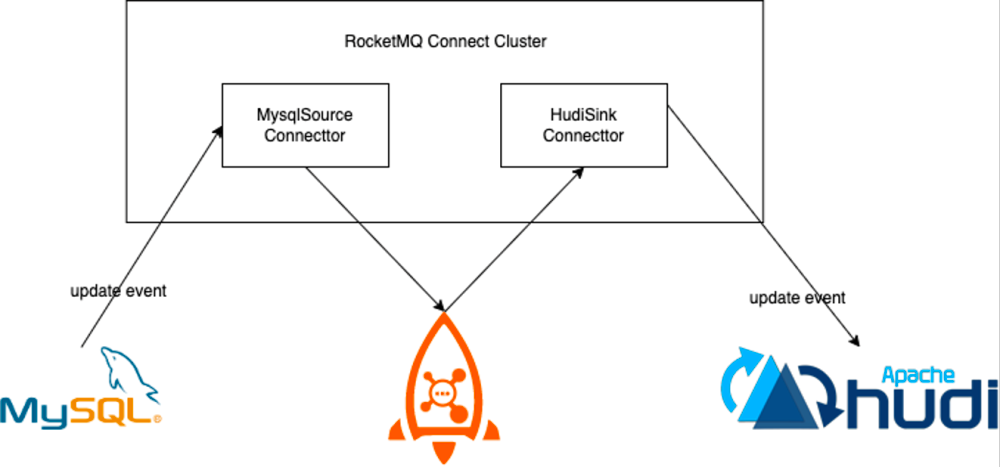

How do you implement a Connector? Consider a specific scenario: business data is currently written to a MySQL database, and you want to synchronize it from MySQL to Hudi (a data lake) in real time. You only need to implement a MySQL Source Connector and a Hudi Sink Connector.

Let's use the MySQL Source Connector as an example to see how it is implemented.

Implementing a Connector mainly involves implementing two APIs. The first is the Connector API. In addition to its lifecycle methods, you need to decide how to split the work into tasks, for example by Topic, table, or database. The second is the Task API for the Tasks the Connector creates. Through task assignment, the Connector passes the relevant configuration to each Task. After obtaining this information (such as the database username, password, IP address, and port), the Task creates a database connection, reads table data from the MySQL binlog, and writes it into a blocking queue. The Task has a poll method that returns data from this queue, and the Runtime calls poll to obtain data. With these pieces in place, the Connector is complete. It is then packaged as a JAR and loaded onto the Worker nodes.
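The Task side described above, with a reader filling a blocking queue and poll draining it, can be sketched as follows. A background thread iterating over a list stands in for the binlog reader. A real implementation would use a binlog client library and the connection settings from the Task config. `BinlogSourceTask`, `max_batch`, and the queue size are hypothetical.

```python
import queue
import threading
from typing import Dict, Iterable, List, Optional

# Hypothetical sketch of a MySQL Source Task. A background thread that
# iterates over `binlog` stands in for reading MySQL's binlog.

class BinlogSourceTask:
    def __init__(self, max_batch: int = 100):
        self.events: "queue.Queue[Dict]" = queue.Queue(maxsize=1000)  # bounded blocking queue
        self.max_batch = max_batch
        self._reader: Optional[threading.Thread] = None

    def start(self, config: Dict[str, str], binlog: Iterable[Dict]) -> None:
        self.config = config  # e.g. host/port/user/password in a real Task
        self._reader = threading.Thread(target=self._read, args=(binlog,), daemon=True)
        self._reader.start()

    def _read(self, binlog: Iterable[Dict]) -> None:
        for event in binlog:
            self.events.put(event)  # blocks while the queue is full (back-pressure)

    def poll(self, timeout: float = 0.1) -> List[Dict]:
        """Called repeatedly by the runtime; returns the events that are ready."""
        batch: List[Dict] = []
        try:
            batch.append(self.events.get(timeout=timeout))  # wait briefly for the first
            while len(batch) < self.max_batch:
                batch.append(self.events.get_nowait())      # then take what is ready
        except queue.Empty:
            pass
        return batch
```

The bounded queue decouples the reader's pace from the runtime's: if the runtime falls behind, the reader blocks instead of exhausting memory.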

After a Connector is created, the Worker starts one or more threads that continuously call the poll method to obtain data from the MySQL table. The threads then send the data to the RocketMQ Broker through a RocketMQ Producer. This is the overall process from implementing a Connector to running it.
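The Worker-side loop can be sketched as a function that keeps calling a Task's poll and forwards each record to a producer until it is told to stop. Here `poll` and `send` are plain callables standing in for the Task's poll method and a RocketMQ Producer's send. `run_source_task` is a hypothetical name.

```python
import threading
from typing import Callable, Dict, List

# Hypothetical Worker-side loop for one Source Task. `poll` stands in for the
# Task's poll method and `send` for a RocketMQ Producer's send call.

def run_source_task(poll: Callable[[], List[Dict]],
                    send: Callable[[Dict], None],
                    stop: threading.Event) -> int:
    sent = 0
    while not stop.is_set():
        for record in poll():   # may return an empty batch
            send(record)
            sent += 1
    return sent
```

In a runtime, each call would typically run on its own thread, for example `threading.Thread(target=run_source_task, args=(task.poll, producer_send, stop))`, so that several Tasks are served in parallel.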

The development process of RocketMQ Connect is divided into three stages:

In the early stage of RocketMQ Connect development (also known as the Preview phase), version 1.0 of the OpenMessaging Connect API was implemented. Based on this version, RocketMQ Connect Runtime was implemented, along with more than ten Connectors, including MySQL, Redis, Kafka, JMS, and MongoDB. In this phase, RocketMQ Connect could synchronize data end to end, but its functionality was incomplete and its ecosystem was small. It did not support data conversion, serialization, or similar capabilities.

In the second stage, the OpenMessaging Connect API was upgraded to support Schema, Transform, Converter, and other capabilities. On this basis, Connect Runtime was upgraded to support data conversion and serialization, with full support for complex schemas. With the API and Runtime matured in this phase, more than thirty Connectors were built, covering CDC, JDBC, SFTP, NoSQL, Redis cache, HTTP, AMQP, JMS, data lakes, real-time data warehouses, and Replicator. Kafka Connector Adaptors were also built so that Connectors from the Kafka ecosystem can run on RocketMQ Connect.

RocketMQ Connect is now in the third stage, which focuses on developing the Connector ecosystem. Once the ecosystem includes more than one hundred Connectors, RocketMQ will be able to connect to virtually any data system.
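The Transform and Converter capabilities added in the second stage can be illustrated with a small pipeline. Transforms rewrite or drop records in flight, and a Converter serializes each surviving record to bytes for the topic. This is a hypothetical Python sketch: `rename_field`, `drop_if`, `JsonConverter`, and `process` are illustrative names, not the OpenMessaging Connect API, and JSON is just one possible serialization.

```python
import json
from typing import Any, Callable, Dict, Iterable, List, Optional

# Hypothetical sketch: Transforms rewrite or drop records; a Converter
# serializes the result. Names are illustrative only.

Record = Dict[str, Any]
Transform = Callable[[Record], Optional[Record]]  # return None to drop the record

def rename_field(old: str, new: str) -> Transform:
    def apply(r: Record) -> Record:
        r = dict(r)               # do not mutate the caller's record
        r[new] = r.pop(old)
        return r
    return apply

def drop_if(pred: Callable[[Record], bool]) -> Transform:
    return lambda r: None if pred(r) else r

class JsonConverter:
    def to_bytes(self, r: Record) -> bytes:
        return json.dumps(r, sort_keys=True).encode("utf-8")

    def from_bytes(self, b: bytes) -> Record:
        return json.loads(b)

def process(records: Iterable[Record], transforms: List[Transform],
            converter: JsonConverter) -> List[bytes]:
    out: List[bytes] = []
    for r in records:
        current: Optional[Record] = r
        for t in transforms:      # apply the chain in order
            current = t(current)
            if current is None:   # a transform filtered the record out
                break
        if current is not None:
            out.append(converter.to_bytes(current))
    return out
```

Keeping Transforms and the Converter separate lets the same transform chain feed different serialization formats.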

Currently, the RocketMQ community is working with the OceanBase community on synchronizing data from OceanBase through RocketMQ Connect. Two access modes, JDBC and CDC, will be provided and released in the community in the future. Interested readers are welcome to try them.

RocketMQ Connect is a reliable data integration component with distributed, scalable, and fault-tolerant capabilities that let data flow in and out between RocketMQ and other data systems. You can use RocketMQ Connect to implement CDC, build data lakes, and combine it with stream computing to realize the value of your data.
