By Jianbin Chen (GitHub ID: a364176773)

Seata is an open-source distributed transaction solution with more than 23,000 GitHub stars and a highly active community. It is committed to delivering high-performance, easy-to-use distributed transaction services for microservices architectures. This article analyzes the core features of Seata 1.6.x to give users a deeper understanding of Seata.

First, in 1.6.x we enhanced Seata's core and its original AT mode to support more common SQL syntax, such as MySQL UPDATE JOIN and multiple primary keys in Oracle and PostgreSQL.

For the overall communication architecture, we further optimized the network communication model and made fuller use of batching and pipelining to improve throughput between Seata-Server and its clients.

For global locking, we added configuration items for optimistic and pessimistic lock acquisition, so that users can choose the locking strategy that best fits each business scenario. These three points are described in detail in the following sections.

On the technology front, we added JDK 17 support as early as 1.5.x. In 1.6.1, the community took the lead in supporting Spring Boot 3 while remaining fully compatible with Spring Boot 2 in the same codebase, rather than in a separate branch. These two features give users more freedom in choosing their underlying JDK and framework versions.

For service exposure and discovery, we added the ability to register Seata-Server with multiple registries at the same time. This is covered in a later section.

Seata 1.6.x also includes many other optimizations and bug fixes that I will not detail here. You are welcome to try the latest release of Seata; if you have any questions, please raise them for discussion on GitHub.

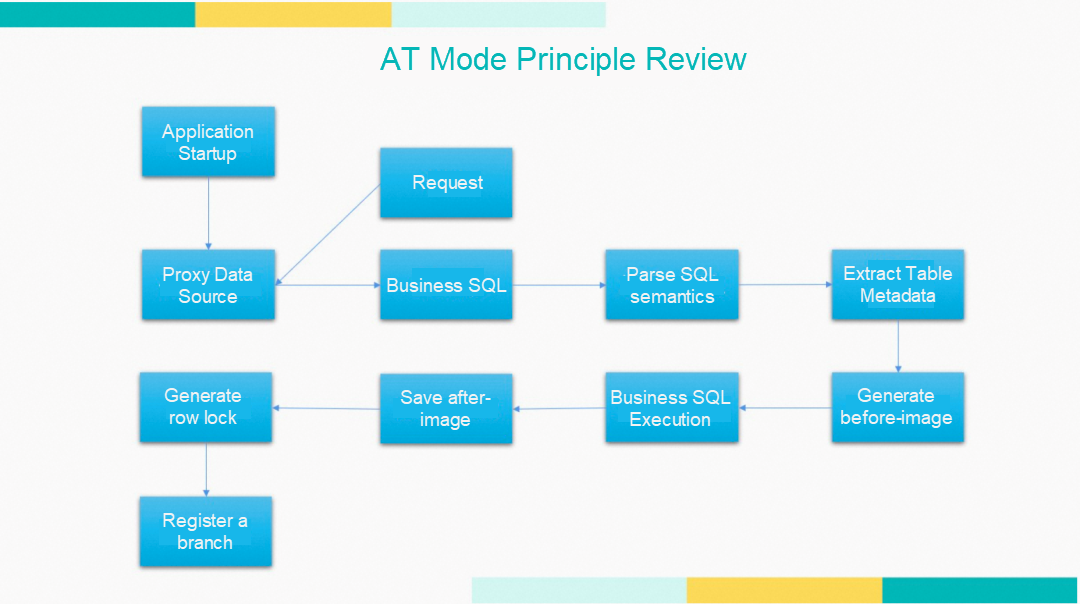

To make the new features easier to follow, let's first recall how AT mode works. As shown in the figure, Seata automatically proxies the user's DataSource when the application starts. Anyone who has worked with JDBC knows DataSource: whoever controls the DataSource controls the connections it hands out and can act on the statements executed through them. Because this proxying is transparent, users' business code is not intruded upon.

When a business request arrives and the business SQL is executed, Seata parses the SQL, extracts the table metadata, and generates the before-image. It then executes the business SQL, saves the after-image, generates the global lock keys, and registers the branch; this information is carried to Seata-Server, which is the TC (Transaction Coordinator) side. (We will discuss the use of the after-image later.)
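The phase-one flow above can be sketched with Python's built-in sqlite3 module. This is a minimal, simplified sketch rather than Seata's implementation: it assumes a single-table UPDATE, and the names `UndoLog`, `run_branch`, and `rollback`, as well as the `table:pk` lock-key format, are hypothetical. It also shows one possible use of the after-image: before restoring the before-image, the sketch's rollback checks that the rows still match the after-image, so a write made outside the global transaction is not silently overwritten.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class UndoLog:
    table: str
    pk: str
    columns: list
    before: list  # row values before the business SQL ran
    after: list   # row values after the business SQL ran


def run_branch(conn, table, pk, columns, where_sql, where_params, set_sql, set_params):
    """Phase one of a single-table UPDATE, AT style (simplified sketch)."""
    select_list = ", ".join([pk] + columns)
    cur = conn.cursor()
    # 1. Before-image: read the rows the UPDATE is about to modify.
    before = cur.execute(
        f"SELECT {select_list} FROM {table} WHERE {where_sql}", where_params
    ).fetchall()
    # 2. Execute the business SQL itself.
    cur.execute(f"UPDATE {table} SET {set_sql} WHERE {where_sql}", set_params + where_params)
    # 3. After-image: re-read the same rows, filtering only by primary key.
    pks = [row[0] for row in before]
    after = []
    if pks:
        marks = ", ".join("?" for _ in pks)
        after = cur.execute(
            f"SELECT {select_list} FROM {table} WHERE {pk} IN ({marks})", pks
        ).fetchall()
    # 4. Global lock keys (illustrative "table:pk" format) to send to the TC.
    lock_keys = [f"{table}:{value}" for value in pks]
    return UndoLog(table, pk, columns, before, after), lock_keys


def rollback(conn, log):
    """Phase-two rollback: restore the before-image, guarded by the after-image."""
    pks = [row[0] for row in log.before]
    if not pks:
        return
    select_list = ", ".join([log.pk] + log.columns)
    marks = ", ".join("?" for _ in pks)
    current = conn.execute(
        f"SELECT {select_list} FROM {log.table} WHERE {log.pk} IN ({marks})", pks
    ).fetchall()
    # If the rows changed after phase one, restoring blindly would lose that write.
    if sorted(current) != sorted(log.after):
        raise RuntimeError("data changed outside the global transaction")
    set_clause = ", ".join(f"{c} = ?" for c in log.columns)
    for row in log.before:
        conn.execute(
            f"UPDATE {log.table} SET {set_clause} WHERE {log.pk} = ?",
            list(row[1:]) + [row[0]],
        )
```

Running `run_branch` against a small `account` table and then calling `rollback` restores the original balance; the table and column names are only examples.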

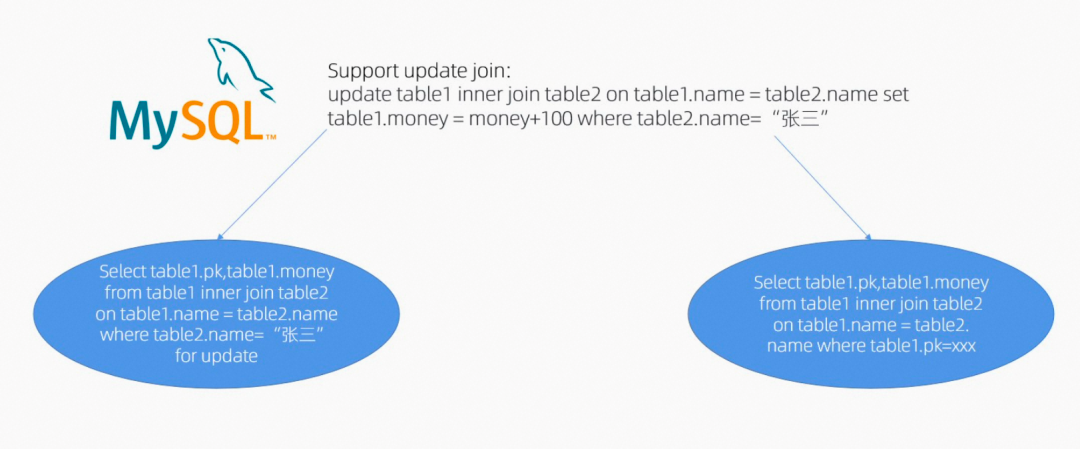

From the AT principle, we know that when a DML statement is executed, Seata parses it and generates the corresponding before-image and after-image query statements. Take UPDATE JOIN as an example. UPDATE JOIN joins multiple tables, and the modified rows may belong to more than one of them, so Seata needs to extract each joined table. For every table whose rows are modified, it constructs a SELECT statement that queries the data before the modification. Consider the following statement:

update table1 inner join table2 on table1.name=table2.name set table1.money=table1.money+100 where table2.name='Tom'

This statement involves two tables, but the data changes occur in only one of them (table1). Therefore, we need to generate a before-image of the table1 rows matched by the condition table2.name='Tom':

select table1.pk, table1.money from table1 inner join table2 on table1.name=table2.name where table2.name='Tom' for update

As shown above, Seata reuses the JOIN clause and the WHERE clause of the original statement. If another table, such as table2, were also modified, a similar query with the condition name='Tom' would be generated for that table as well.

The before-image SQL above is used to build the before-image. The after-image query is simpler: it reuses the before-image SQL but replaces the WHERE condition with the primary keys found by the before-image. This avoids filtering on non-indexed columns a second time and improves efficiency. The SQL statement is as follows:

select table1.pk, table1.money from table1 inner join table2 on table1.name=table2.name where table1.pk=xxx
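To make the two image queries concrete, here is a sketch of how they could be assembled once an UPDATE JOIN statement has been parsed. It assumes a hypothetical `UpdateJoin` parse result that holds the JOIN part, the WHERE condition, and each modified table's primary key and updated columns; Seata's real SQL recognizers are considerably more involved.

```python
from dataclasses import dataclass


@dataclass
class UpdateJoin:
    """Hypothetical parse result of an UPDATE ... JOIN statement."""
    table_source: str  # the JOIN part, e.g. "table1 inner join table2 on ..."
    where: str         # the original WHERE condition
    updated: dict      # modified table -> (primary key column, [updated columns])


def before_image_sql(stmt):
    """One SELECT ... FOR UPDATE per modified table, reusing JOIN and WHERE."""
    sqls = {}
    for table, (pk, cols) in stmt.updated.items():
        select_list = ", ".join(f"{table}.{c}" for c in [pk] + cols)
        sqls[table] = f"select {select_list} from {stmt.table_source} where {stmt.where} for update"
    return sqls


def after_image_sql(stmt, table, pk_count):
    """Same join, filtered only by the primary keys found by the before-image."""
    pk, cols = stmt.updated[table]
    select_list = ", ".join(f"{table}.{c}" for c in [pk] + cols)
    marks = ", ".join("?" for _ in range(pk_count))
    return f"select {select_list} from {stmt.table_source} where {table}.{pk} in ({marks})"
```

For the statement above, `before_image_sql` reproduces the `for update` query shown earlier, and `after_image_sql(stmt, "table1", 2)` yields the same join filtered by `table1.pk in (?, ?)`, with the primary key values bound as parameters.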

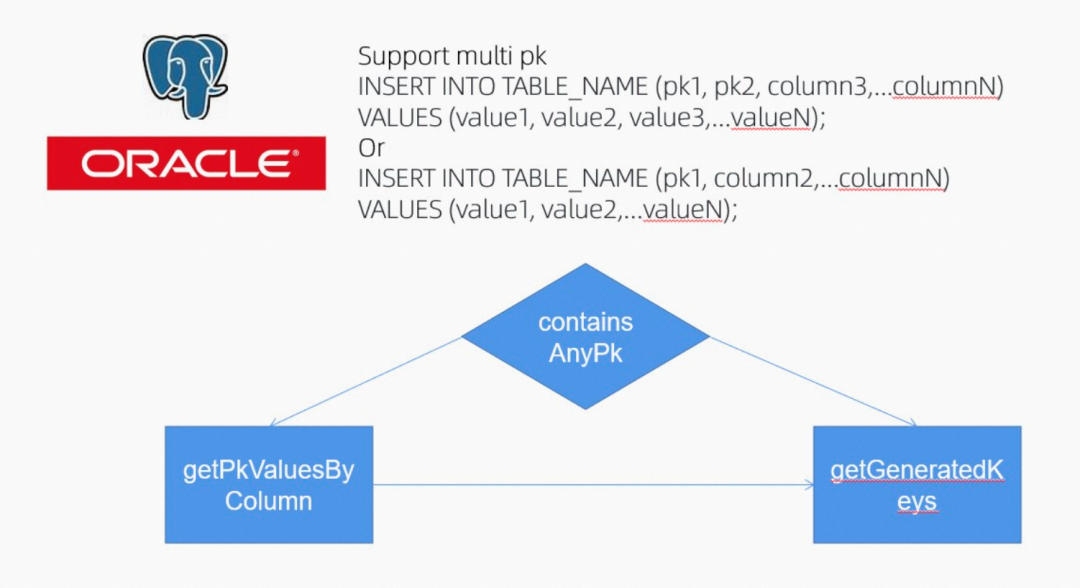

Next, let’s discuss how multiple primary keys are supported.

As with a single primary key, the ultimate goal of supporting multiple primary keys is to obtain the primary key values, which are indispensable for Seata. The primary key is the only reliable way to locate a modified row: without it, the phase-two rollback may be unable to locate the exact data and therefore cannot roll it back. With this in mind, let's analyze how the cases in the preceding figure, with and without auto-increment primary keys, are supported.

INSERT INTO TABLE_NAME (pk1, pk2, column3,...columnN) VALUES (value1, value2, value3,...valueN);

The first statement specifies the values of all primary key columns among the inserted columns, so Seata can read every primary key value directly from the inserted values. Because Seata has loaded the table metadata, it knows which columns make up the primary key.

INSERT INTO TABLE_NAME (pk1, column2,...columnN) VALUES (value1, value2,...valueN);

In the second statement, Seata checks the cached table metadata and finds that only one primary key value (pk1) can be obtained from the inserted data. In this case, Seata uses the JDBC driver's standard getGeneratedKeys API, which returns the generated primary key values from the driver level. Since the primary keys can be obtained in both cases, the subsequent phase-two rollback in AT mode works correctly.
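The two cases can be sketched as a single function. This is a hedged illustration, not Seata's code: `collect_pk_values` is hypothetical, and the `generated_keys` callback merely stands in for JDBC's `Statement.getGeneratedKeys()`, which in real code returns a `ResultSet` rather than a list of tuples.

```python
def collect_pk_values(pk_columns, insert_columns, rows, generated_keys):
    """Return one {pk column: value} dict per inserted row.

    pk_columns     -- primary key columns from the cached table metadata
    insert_columns -- columns listed in the INSERT statement
    rows           -- the VALUES tuples, in insert_columns order
    generated_keys -- hypothetical stand-in for getGeneratedKeys(): returns one
                      tuple per row with values for the missing pk columns
    """
    missing = [c for c in pk_columns if c not in insert_columns]
    # Case 1: primary key values that appear among the inserted columns.
    result = [
        {c: row[insert_columns.index(c)] for c in pk_columns if c in insert_columns}
        for row in rows
    ]
    if missing:
        # Case 2: some pk columns were not supplied, so ask the driver for them.
        generated = generated_keys()
        if len(generated) != len(rows):
            raise ValueError("driver returned a different number of generated keys")
        for pk_values, keys in zip(result, generated):
            pk_values.update(zip(missing, keys))
    return result
```

When every primary key column is in the INSERT, the callback is never invoked; otherwise the missing values are filled in row by row from what the driver returns.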

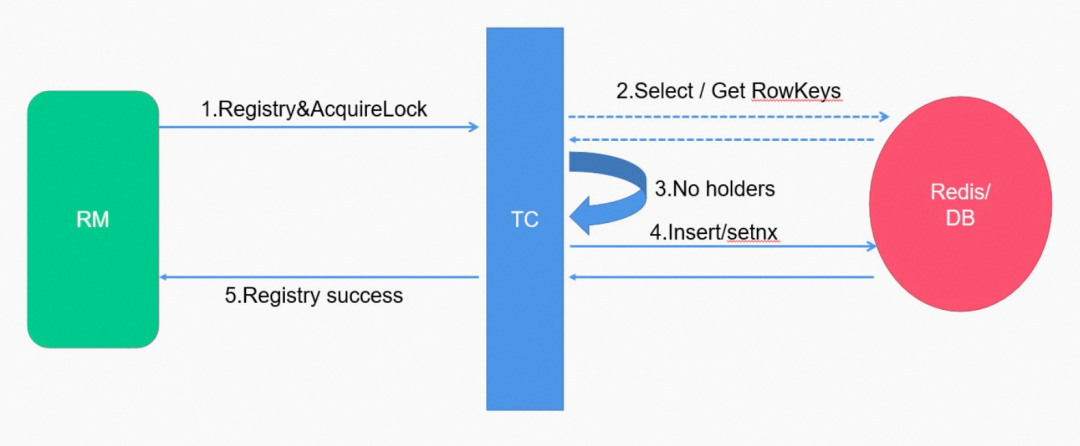

Now, let's introduce a new concept. In Seata 1.6.x, we introduced lockStrategyMode, which has two modes: OPTIMISTIC and PESSIMISTIC. First, let's look at how Seata acquires a global lock:

When a branch is registered, the primary key values obtained from the before-image (or from the inserted data) are sent to the TC as global lock keys. The TC then queries the lock store with this lock information and inserts the lock only if no record exists. This looks simple, but there is a problem in the second step, marked with a dotted line in the figure. Careful readers may ask why we query whether the lock already has a record at all: the database has a unique index and Redis provides SETNX, so we could simply attempt the insert.

However, based on the two points above, many of you will notice that more than 90% of business scenarios involve no lock reentrance, yet checking for reentrance costs an extra query with additional disk and network I/O, which further reduces throughput once an application uses Seata AT mode. Therefore, we introduced optimistic and pessimistic locking strategies in 1.6.x. In pessimistic mode, we assume the lock is likely held by others, so the logic is to query first and insert afterward. In optimistic mode, we assume the branch transaction can obtain the lock, so the query is skipped, saving one query and improving overall throughput. However, because of the first point (the query helps with troubleshooting and tracing the transaction link), Seata skips the lock query only on the first lock contention and automatically switches to pessimistic mode when it retries acquiring the global lock. This improves throughput in suitable scenarios without adding troubleshooting costs.
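The difference between the two strategies can be sketched with an in-memory lock store. Everything here is hypothetical (`LockStore`, `acquire`, and the `attempt` counter), and a dictionary plays the role of the lock table's unique index or Redis SETNX. Note that a plain insert also fails when the same global transaction already holds one of the keys: that reentrant case is exactly what the query step exists for, and in this sketch the retry takes the pessimistic path and succeeds.

```python
class LockStore:
    """In-memory stand-in for the TC's global lock table: row key -> holder xid."""

    def __init__(self):
        self.locks = {}
        self.queries = 0  # how many "who holds these keys?" lookups were made

    def query(self, keys):
        self.queries += 1
        return {k: self.locks[k] for k in keys if k in self.locks}

    def insert_if_absent(self, xid, keys):
        """All-or-nothing insert, like a unique index or Redis SETNX."""
        if any(k in self.locks for k in keys):
            return False
        for k in keys:
            self.locks[k] = xid
        return True


def acquire(store, xid, keys, optimistic, attempt):
    """Try to acquire all keys for global transaction xid; attempt counts from 0."""
    if optimistic and attempt == 0:
        # OPTIMISTIC, first contention: skip the query and insert directly.
        return store.insert_if_absent(xid, keys)
    # PESSIMISTIC, or any retry: query first to detect other holders and reentrance.
    held = store.query(keys)
    if any(owner != xid for owner in held.values()):
        return False  # held by another global transaction
    # Keys this xid already holds are reentrant; insert only the rest.
    return store.insert_if_absent(xid, [k for k in keys if k not in held])
```

In the common, non-reentrant case the optimistic path succeeds without a single query, which is where the throughput gain comes from.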

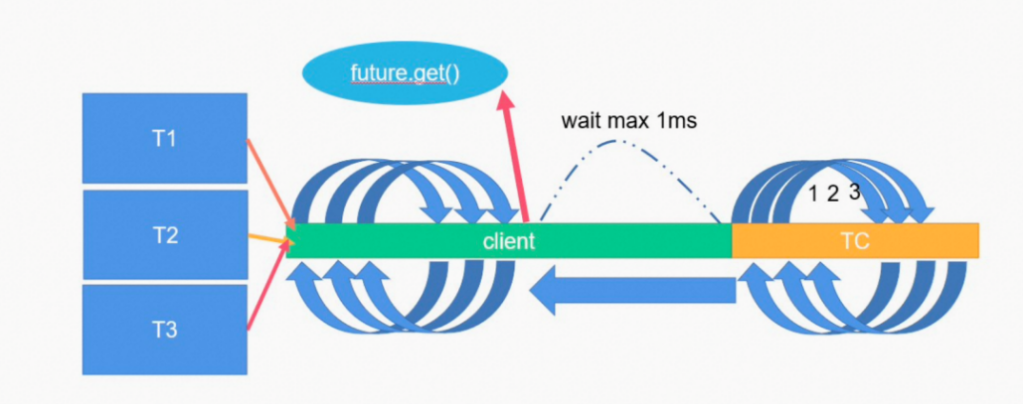

Let's review the network model of releases prior to 1.5. As shown in the figure, T1, T2, and T3 are three threads running concurrently in the same client. Each puts its RpcMessage into the local batch queue and calls future.get() to wait for the server's response. A batch merge thread on the RM side merges the requests issued within the same millisecond and sends them to the TC. When the TC receives the merged request, it processes the T1, T2, and T3 messages in sequence and sends the responses back together.
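This pre-1.5 client-side flow can be sketched with Python threads, a queue, and `concurrent.futures.Future` in place of Java's future. The class name `BatchingClient`, the `send_batch` transport callback, and the fixed 1 ms gathering window are assumptions for illustration. Because `send_batch` answers the whole batch at once, every caller in a batch waits for the slowest request.

```python
import queue
import threading
import time
from concurrent.futures import Future


class BatchingClient:
    """Pre-1.5 style client: callers enqueue, one merge thread sends batches."""

    def __init__(self, send_batch, window=0.001):
        self.send_batch = send_batch  # hypothetical transport: [(id, body)] -> {id: response}
        self.window = window          # gather requests issued within ~1 ms
        self.queue = queue.Queue()
        self.pending = {}             # message id -> Future awaiting its response
        self.lock = threading.Lock()
        self.next_id = 0

    def call(self, body, timeout=1.0):
        fut = Future()
        with self.lock:
            self.next_id += 1
            msg_id = self.next_id
            self.pending[msg_id] = fut
        self.queue.put((msg_id, body))
        return fut.result(timeout)  # the caller blocks, like future.get()

    def merge_loop(self):
        while True:
            batch = [self.queue.get()]  # block until at least one request exists
            time.sleep(self.window)     # let concurrent requests join the batch
            while True:
                try:
                    batch.append(self.queue.get_nowait())
                except queue.Empty:
                    break
            # The server answers the whole batch at once, so every caller
            # in it waits for the slowest request.
            for msg_id, response in self.send_batch(batch).items():
                with self.lock:
                    fut = self.pending.pop(msg_id)
                fut.set_result(response)
```

Usage: start `merge_loop` on a daemon thread, then let several worker threads call `client.call(...)` concurrently; each receives its own response, matched by message id.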

Based on the information above, we can see some problems with the network communication model before Seata 1.5.

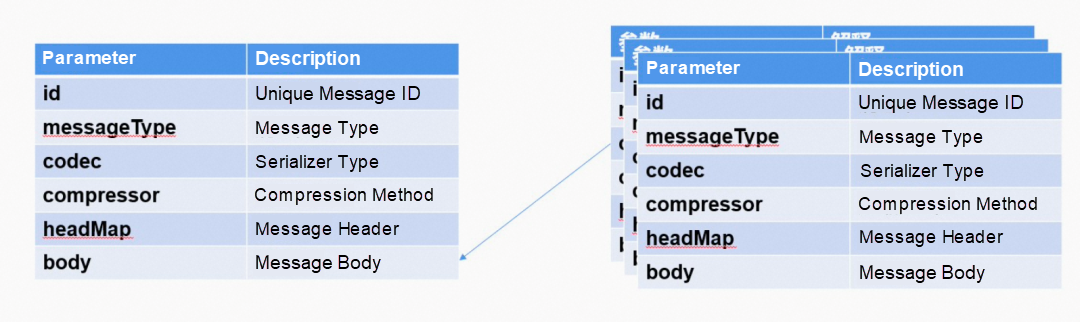

After identifying these problems, the community promptly adjusted and redesigned the model. First, let's look at the structure of each request and response:

Each message has an id, messageType, codec, compressor, headMap, and body. A merged request only combines messages that share the same serialization type (codec) and compression method: a new merged request is created and the other requests' messages are placed in its body. This makes decompression and deserialization on the TC side straightforward.
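A minimal sketch of this grouping rule follows. The field names mirror the list above, but the dataclass, the `merge` function, the `MESSAGE_IDS` counter, and the `MERGED_REQUEST` type code are hypothetical and do not reflect Seata's wire format.

```python
import itertools
from collections import defaultdict
from dataclasses import dataclass, field

MERGED_REQUEST = 99                # hypothetical message-type code for a merged request
MESSAGE_IDS = itertools.count(1)   # hypothetical id source for new merged messages


@dataclass
class RpcMessage:
    id: int
    message_type: int
    codec: int        # serialization type
    compressor: int   # compression method
    head_map: dict = field(default_factory=dict)
    body: object = None


def merge(messages, ids=MESSAGE_IDS):
    """Group messages by (codec, compressor); wrap each group in one merged message."""
    groups = defaultdict(list)
    for msg in messages:
        groups[(msg.codec, msg.compressor)].append(msg)
    # The merged message inherits the shared codec and compressor, so the
    # TC can decode the whole group with a single scheme.
    return [
        RpcMessage(next(ids), MERGED_REQUEST, codec, compressor, body=group)
        for (codec, compressor), group in groups.items()
    ]
```

Each inner message keeps its own id inside the merged body, which is what later lets responses be matched back to their callers.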

The head-of-line blocking problem is similar to that of HTTP/1.1 pipelining: after a batch of requests is sent to the server, their responses must be returned together, so a request with a long processing time delays all the others. This is why HTTP/1.1 pipelining is of little practical use.

Since Seata 1.5, when merged requests arrive at the server side, their sub-requests are submitted to the ForkJoinPool through CompletableFuture and processed in parallel. This avoids creating an additional thread pool (beyond Seata's service thread pool), and the number of threads depends on the number of CPU cores. Once all the requests are processed, the responses are returned to the client together.
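Python has no ForkJoinPool, so the sketch below swaps in a `ThreadPoolExecutor` sized by `os.cpu_count()` to show the shape of this step: the sub-requests of one merged request run in parallel, yet the responses are still returned together. `handle_merged` and the `handler` callback are hypothetical names.

```python
import os
from concurrent.futures import ThreadPoolExecutor

# Stand-in for Java's ForkJoinPool: a shared pool sized by CPU cores.
POOL = ThreadPoolExecutor(max_workers=os.cpu_count() or 1)


def handle_merged(sub_requests, handler):
    """Process the sub-requests of one merged request in parallel.

    Responses are returned together only after every sub-request finishes,
    so the slowest sub-request still decides when the whole batch is sent.
    """
    futures = [(msg_id, POOL.submit(handler, body)) for msg_id, body in sub_requests]
    return [(msg_id, future.result()) for msg_id, future in futures]
```

Parallel processing shortens the batch to roughly the time of its slowest member, but it does not remove the wait on that member.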

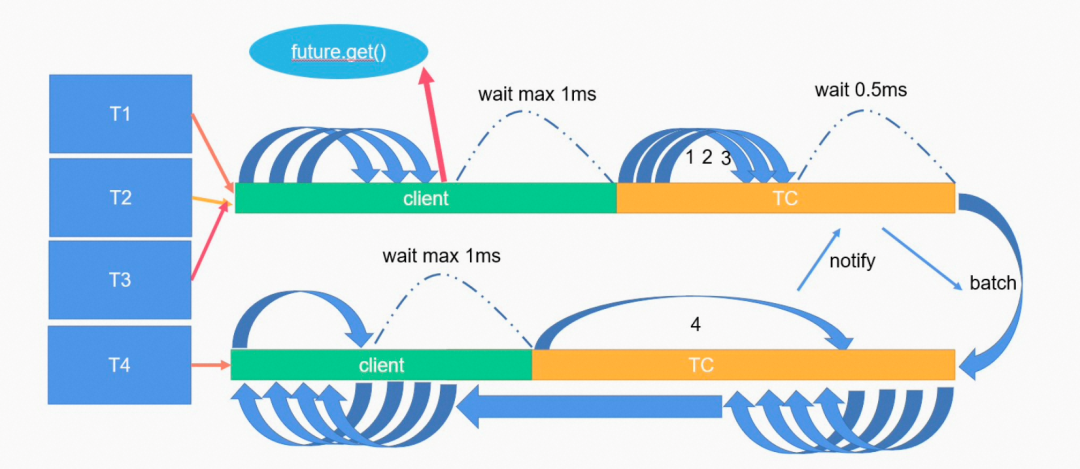

To solve the second and third problems, the community implemented out-of-order batch responses. The server no longer waits for requests to finish in order: as soon as a request finishes processing, its response can be returned together with the responses of other requests that finish at about the same time. (Note: these are not necessarily T1, T2, and T3; they may come from other threads.) See the schematic diagram for more details.

When the merged requests of T1, T2, and T3 reach the TC, the TC processes them in parallel. Mirroring the client's request batching, each response waits at most 1 ms: it is handed to a batch thread that waits asynchronously and then sends it. If no other response can be sent along with it when the 1 ms is up, the response is sent to the client on its own.

As shown in the figure above, suppose the responses of T1, T2, and T3 are waiting within their 1 ms window. If, 0.5 ms into that wait, a request from T4 arrives at the TC and finishes processing, the new T4 response wakes the batch thread. The batch thread then merges all pending responses that share the same compression method and serialization type into one batch response. There is no ordering requirement: each of the T1, T2, and T3 responses is put into the batch thread's queue as soon as its request finishes processing. When the client receives the batch response, it matches each response's id against the id of the original RpcMessage, finds the corresponding client-side future, and sets its result. The client thread blocked in future.get() then receives the response and continues its processing.
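The server-side batch thread can be sketched with a `threading.Condition`. This is an illustration under stated assumptions, not Seata's implementation: `BatchResponder`, its `send` callback, and the exact wake-up policy are hypothetical. A finished response is queued in whatever order it completes; the batch thread then waits at most `MAX_WAIT` (1 ms) or until another response wakes it, and sends everything pending, merged by codec and compressor.

```python
import threading
from collections import defaultdict


class BatchResponder:
    """Server-side batch thread: out-of-order, at most ~1 ms of extra latency."""

    MAX_WAIT = 0.001  # seconds a pending response may wait for others to join

    def __init__(self, send):
        self.send = send  # hypothetical transport: send(codec, compressor, [(id, body)])
        self.pending = []
        self.cond = threading.Condition()
        self.running = True

    def offer(self, codec, compressor, msg_id, body):
        """Called as soon as any request finishes, in whatever order they finish."""
        with self.cond:
            self.pending.append((codec, compressor, msg_id, body))
            self.cond.notify()  # a new response wakes the waiting batch thread

    def stop(self):
        with self.cond:
            self.running = False
            self.cond.notify()

    def run(self):
        while True:
            with self.cond:
                while not self.pending and self.running:
                    self.cond.wait()           # idle until some response arrives
                if not self.pending:
                    return                     # stopped and nothing left to send
                self.cond.wait(self.MAX_WAIT)  # up to 1 ms, or until the next response
                batch, self.pending = self.pending, []
            # Merge everything pending that shares codec and compressor.
            groups = defaultdict(list)
            for codec, compressor, msg_id, body in batch:
                groups[(codec, compressor)].append((msg_id, body))
            for (codec, compressor), items in groups.items():
                self.send(codec, compressor, items)
```

A response never waits for a slower, unrelated request; the worst case is the 1 ms window, after which it is sent alone.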

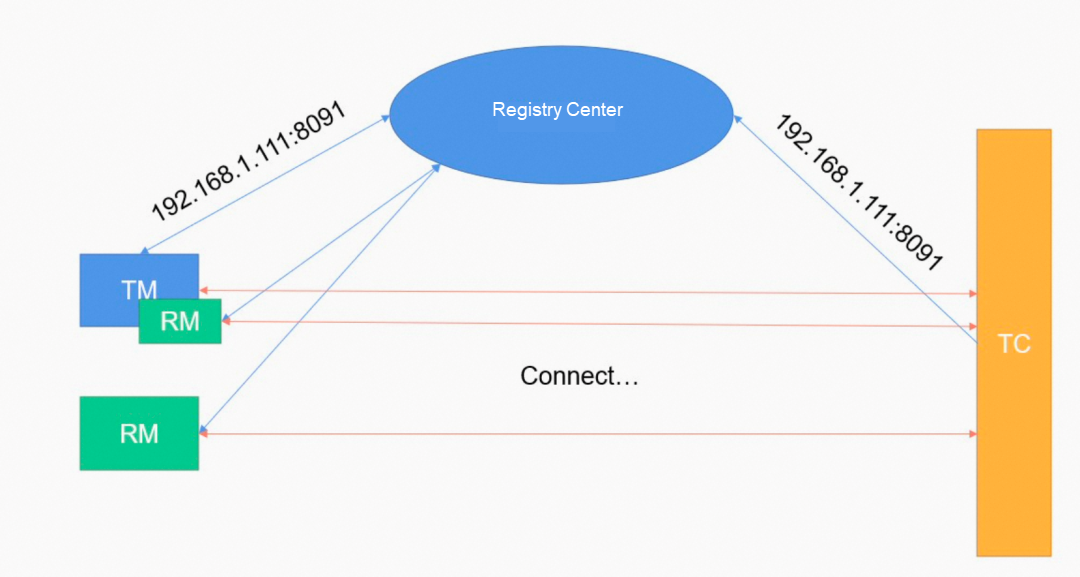

As shown in the figure, Seata's client and server use the same service discovery model as traditional RPC frameworks: the TC registers its service address with the registry, and the TM and RM discover the TC address from the registry and then connect to it. However, this model causes a particular problem for Seata. Let's take a look.

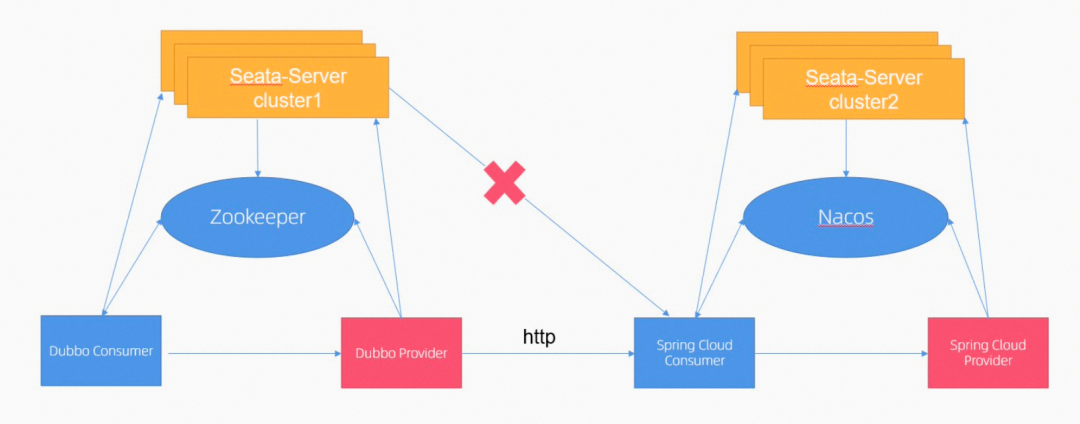

Take the figure above as an example. Suppose a company uses both Spring Cloud and Dubbo as its microservices frameworks. During a migration from Dubbo to Spring Cloud or vice versa, Dubbo services may call Spring Cloud services over HTTP, or the two may call each other. Most Spring Cloud services use Eureka or Nacos as their registry, while Dubbo mostly uses ZooKeeper or Nacos.

If the two frameworks use different registries, Dubbo applications discover Seata-Server through ZooKeeper, while Spring Cloud applications discover it through Eureka. Because Seata-Server could not register with multiple registries at the same time, users would likely deploy two Seata-Server clusters to link the transactions on both sides. However, while the two clusters can tie the transactions together, phase-two requests cannot always be delivered: each client node only communicates with the TC cluster in its own registry, so dispatching phase two to it from the other cluster fails, and phase-two execution is delayed. So, let's summarize the existing problems.

The latest service discovery model can solve the problems above.

In 1.6.x, we added support for exposing Seata-Server to multiple registries, which eliminates the problems above. Multiple registries can be configured on the Seata-Server side at once; once they are configured, Seata-Server registers itself with each of them on startup. As a result, enterprises and users are no longer constrained by single-registry exposure when choosing, migrating, or mixing microservices architectures, and they avoid the cost of maintaining multiple clusters.
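The effect of multi-registry exposure can be sketched with in-memory registries. `InMemoryRegistry`, `start_server`, `can_dispatch_phase_two`, the `seata-server` service name, and the addresses are all hypothetical; the sketch only models the point made above, that a client connects to the TC nodes its own registry returns, so phase two can reach it only from a TC registered there.

```python
from collections import defaultdict

SERVICE = "seata-server"  # example service name


class InMemoryRegistry:
    """Hypothetical stand-in for a registry such as ZooKeeper, Eureka, or Nacos."""

    def __init__(self, name):
        self.name = name
        self.services = defaultdict(set)

    def register(self, service, address):
        self.services[service].add(address)

    def lookup(self, service):
        return sorted(self.services[service])


def start_server(address, registries):
    """On startup, a TC node registers itself with every configured registry."""
    for registry in registries:
        registry.register(SERVICE, address)


def can_dispatch_phase_two(tc_address, client_registry):
    """A TM/RM connects only to the TC nodes its own registry returns, so a TC
    can deliver phase-two requests to it only if it appears in that registry."""
    return tc_address in client_registry.lookup(SERVICE)
```

With two single-registry clusters, a TC found only through Eureka cannot reach a ZooKeeper-based client; once every node registers with both registries, both kinds of clients see the same TC cluster.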

UPDATE JOIN and multiple primary key support extend Seata's distinctive AT mode, bringing more SQL features into Seata distributed transactions. The dual optimistic and pessimistic global lock strategies accommodate a variety of business scenarios and improve performance.

The redesigned network communication model prevents requests from blocking one another, and its combination of batching and multithreading makes full use of modern multi-core servers to improve Seata-Server throughput.

Service registration with multiple registries helps enterprises reduce costs and increase efficiency and gives them freedom to choose among microservices architectures. With the Seata features listed above, businesses can flexibly choose their application architecture according to their actual scenarios.
