By Zhou Bo (Alibaba Cloud Intelligence Senior Development Engineer and Apache RocketMQ Committer)

Data synchronization between different data sources is common in business systems and big data systems. With traditional point-to-point synchronization tools, the number of pipelines grows roughly as N×N as more data sources are added, which makes development and maintenance very costly. Because upstream and downstream are coupled, a logic change in one data source may affect synchronization across several pipelines. Can introducing message middleware to decouple upstream and downstream solve these problems? With message middleware, the processing logic of upstream and downstream data sources becomes relatively independent. However, if we build data pipelines directly with RocketMQ producers and consumers, we still need to consider the following challenges.

Building a basic data pipeline with RocketMQ producers and consumers is relatively simple and may take only a few days. Solving the problems above, however, may take several months. Once such a system is complete, it turns out to be very similar to RocketMQ Connect, which already addresses these problems. The following section describes how RocketMQ Connect solves them.

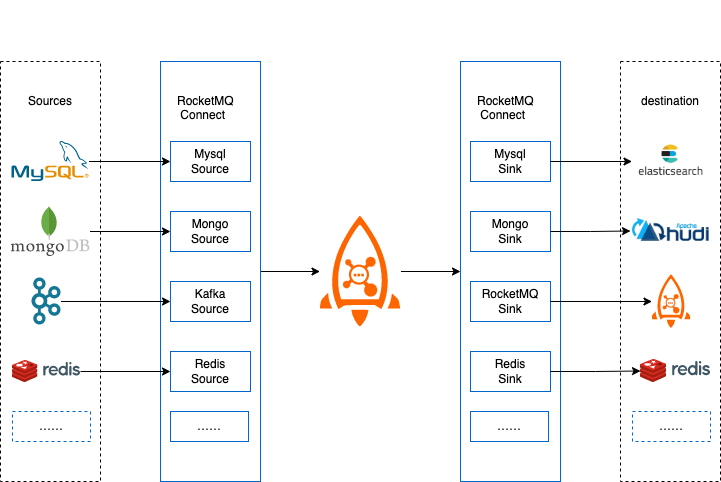

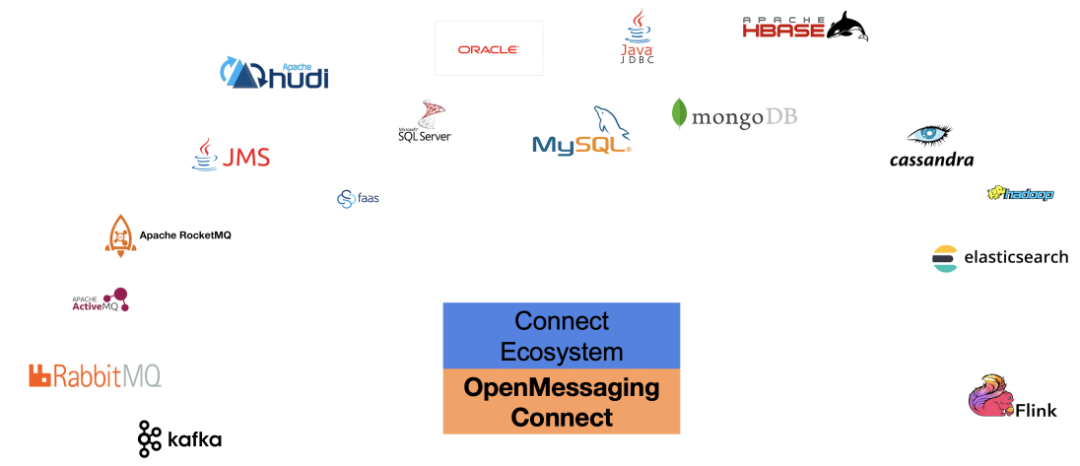

RocketMQ Connect is an important component of RocketMQ for data integration. It lets data flow into and out of RocketMQ from various systems in an efficient, reliable, and streaming manner. It is a distributed, scalable, and fault-tolerant system that runs separately from RocketMQ, and it offers low latency, high reliability, high performance, low code, and strong extensibility. It can connect heterogeneous data systems, build data pipelines, perform ETL, implement CDC, and build data lakes.

RocketMQ Connect provides the following benefits:

RocketMQ Connect is an independent, distributed, scalable, and fault-tolerant system whose main job is to move data from external systems into and out of RocketMQ. It is used through simple configuration, with no programming required. For example, to synchronize data from MySQL to RocketMQ, you only need to configure the MySQL account, password, URL, database, and table names to synchronize.

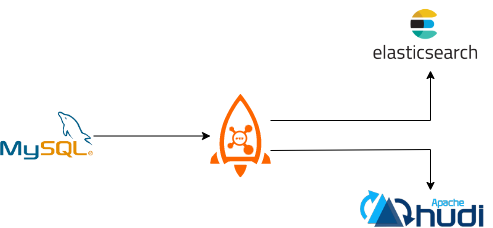

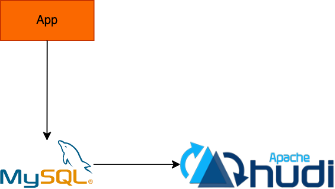

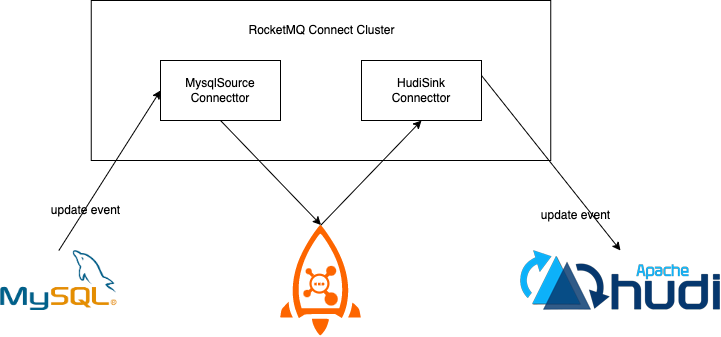

In a business system, we often rely on MySQL's strong transaction support for inserts, updates, and deletes, use Elasticsearch or Solr for powerful search, and synchronize the generated business data to a data analysis system or a data lake (such as Hudi) for further processing so that the data yields more value. RocketMQ Connect makes such a pipeline easy to build. Only three tasks need to be configured: the first obtains data from MySQL, and the second and third consume data from RocketMQ and write it to Elasticsearch and Hudi, respectively. Together, these three tasks form two data pipelines, MySQL to Elasticsearch and MySQL to Hudi, which meet the transaction and search needs of the business and build a data lake.

As a business grows, the old system is often optimized or refactored. The new system may adopt new components and technical frameworks, but the old system cannot simply be shut down while its data is migrated. How can we migrate data from the old storage system to the new one while both are running? For example, suppose the old system uses ActiveMQ and the new system uses RocketMQ. You only need to configure a Connector task from ActiveMQ to RocketMQ to migrate data from the old system to the new one in real time. Users can then migrate from ActiveMQ to RocketMQ without affecting the business, and the process is imperceptible to them.

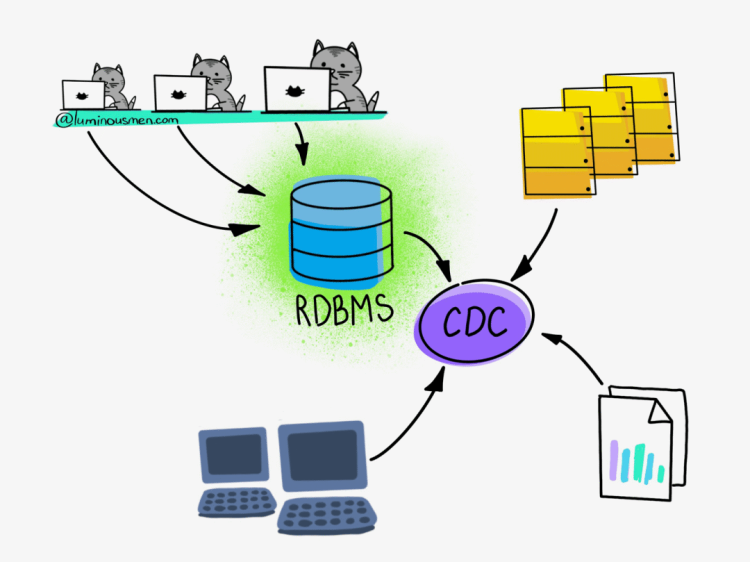

Photo Source: https://luminousmen.com/post/change-data-capture

As an ETL pattern, CDC incrementally captures a database's INSERT, UPDATE, and DELETE changes in near real time. Because RocketMQ Connect supports streaming data transfer with high availability and low latency, CDC can be implemented easily with a Connector.
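The core of CDC is replaying an ordered stream of row-level changes against a target. The minimal sketch below illustrates this with a hypothetical `ChangeEvent` record and an in-memory map as the target table; it is not the event format used by Debezium or by any RocketMQ Connect connector.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified change event (operation, primary key, new row value).
record ChangeEvent(String op, String key, String value) {}

class CdcReplayDemo {
    // Applies captured INSERT/UPDATE/DELETE events, in order, to an in-memory "target table".
    static Map<String, String> apply(List<ChangeEvent> events) {
        Map<String, String> target = new HashMap<>();
        for (ChangeEvent e : events) {
            switch (e.op()) {
                case "INSERT", "UPDATE" -> target.put(e.key(), e.value());
                case "DELETE" -> target.remove(e.key());
                default -> throw new IllegalArgumentException("unknown op: " + e.op());
            }
        }
        return target;
    }

    public static void main(String[] args) {
        List<ChangeEvent> captured = List.of(
            new ChangeEvent("INSERT", "1", "alice"),
            new ChangeEvent("INSERT", "2", "bob"),
            new ChangeEvent("UPDATE", "1", "alice-v2"),
            new ChangeEvent("DELETE", "2", null));
        System.out.println(apply(captured)); // {1=alice-v2}
    }
}
```

Because events are applied in capture order, the target converges to the source's current state, which is why ordering per key matters in CDC pipelines.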

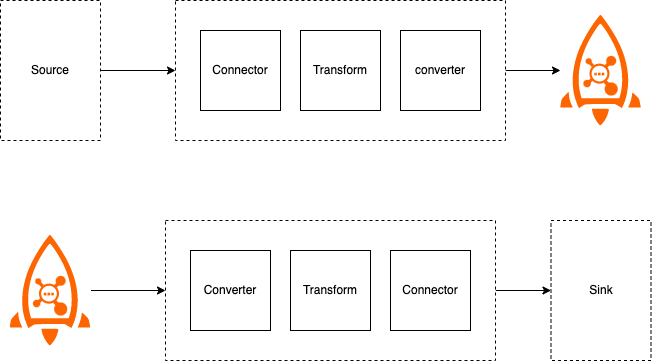

That concludes the basic introduction to RocketMQ Connect. We know it can synchronize data between many data sources, but how is data forwarded and processed inside it? Converter and Transform play important roles in data serialization and conversion, and they are described below.

Converter, Transform, and Connector are all plugins of RocketMQ Connect. You can use the built-in implementations or write your own processing logic. The RocketMQ Connect Worker can load custom implementations into its runtime, so custom plugins become available after simple configuration.

Converter: It is mainly responsible for data serialization and deserialization. The Converter can convert and serialize data and data schemas. It can also register schemas in Schema Registry.

Transform: A Single Message Transform transforms one Connect data object at a time. For example, it can filter out unnecessary records, filter fields, replace data keys, and convert text to uppercase or lowercase.
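To make the Transform idea concrete, here is a minimal sketch of a transform chain in which each step either returns a modified record or drops it. The `SimpleTransform` interface and the map-based record are hypothetical simplifications, not the RocketMQ Connect Transform API.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Function;

class TransformChainDemo {
    // Hypothetical single-message transform: an empty Optional means "drop this record".
    interface SimpleTransform extends Function<Map<String, String>, Optional<Map<String, String>>> {}

    // Keeps only records whose "type" field equals the given value.
    static SimpleTransform filterByType(String type) {
        return r -> type.equals(r.get("type")) ? Optional.of(r) : Optional.empty();
    }

    // Upper-cases one field, working on a copy so the input record is not modified.
    static SimpleTransform upperCase(String field) {
        return r -> {
            Map<String, String> copy = new HashMap<>(r);
            copy.computeIfPresent(field, (k, v) -> v.toUpperCase());
            return Optional.of(copy);
        };
    }

    // Runs each record through the chain in order; once a record is dropped, later steps are skipped.
    static List<Map<String, String>> run(List<Map<String, String>> records, List<SimpleTransform> chain) {
        List<Map<String, String>> out = new ArrayList<>();
        for (Map<String, String> r : records) {
            Optional<Map<String, String>> cur = Optional.of(r);
            for (SimpleTransform t : chain) {
                cur = cur.flatMap(t);
            }
            cur.ifPresent(out::add);
        }
        return out;
    }

    public static void main(String[] args) {
        List<Map<String, String>> in = List.of(
            Map.of("type", "order", "name", "alice"),
            Map.of("type", "log", "name", "bob"));
        List<SimpleTransform> chain = List.of(filterByType("order"), upperCase("name"));
        System.out.println(run(in, chain).get(0).get("name")); // ALICE
    }
}
```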

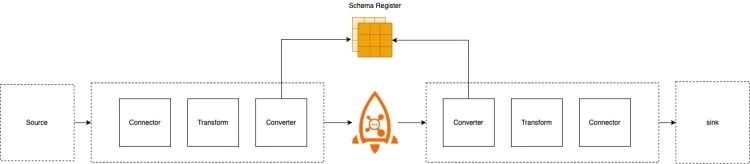

The basic data processing flow of a Connector is as follows. A Source Connector obtains data from the source system and encapsulates it into a standard Connect data object. Each record is then transformed by the configured Transforms. The transformed data passes through the Converter, which converts the data and its schema into a supported format and serializes them. If Schema Registry is supported, the Converter registers the schema in Schema Registry before serializing the data. Finally, the serialized data is sent to RocketMQ. A Sink Connector works in reverse: it pulls data from the RocketMQ Topic, deserializes it, transforms each message if Transforms are configured, and then writes the data to the target storage.
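The source and sink flows described above can be sketched end to end as follows. The `key=value` converter is a hypothetical stand-in for a real Converter (no schema, no Schema Registry), and a `byte[]` stands in for the message body that would be sent to RocketMQ.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.UnaryOperator;

class ConnectFlowDemo {
    // Hypothetical converter: serializes a flat record as "key=value" lines in UTF-8.
    // Assumes keys contain no '=' and neither keys nor values contain newlines.
    static byte[] serialize(Map<String, String> record) {
        StringBuilder sb = new StringBuilder();
        new TreeMap<>(record).forEach((k, v) -> sb.append(k).append('=').append(v).append('\n'));
        return sb.toString().getBytes(StandardCharsets.UTF_8);
    }

    static Map<String, String> deserialize(byte[] bytes) {
        Map<String, String> record = new TreeMap<>();
        for (String line : new String(bytes, StandardCharsets.UTF_8).split("\n")) {
            if (line.isEmpty()) continue;
            int eq = line.indexOf('=');
            record.put(line.substring(0, eq), line.substring(eq + 1));
        }
        return record;
    }

    // Source side: transform first, then convert; the returned bytes play the role of the message body.
    static byte[] sourceSide(Map<String, String> record, UnaryOperator<Map<String, String>> transform) {
        return serialize(transform.apply(record));
    }

    // Sink side: deserialize the message body, transform, then "write" to the target (a list here).
    static void sinkSide(byte[] body, UnaryOperator<Map<String, String>> transform, List<Map<String, String>> target) {
        target.add(transform.apply(deserialize(body)));
    }

    public static void main(String[] args) {
        UnaryOperator<Map<String, String>> addSource = r -> {
            Map<String, String> copy = new TreeMap<>(r);
            copy.put("source", "mysql");
            return copy;
        };
        byte[] body = sourceSide(Map.of("id", "1", "name", "alice"), addSource);
        List<Map<String, String>> target = new ArrayList<>();
        sinkSide(body, UnaryOperator.identity(), target);
        System.out.println(target); // [{id=1, name=alice, source=mysql}]
    }
}
```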

The Connector Worker node provides RESTful APIs for creating and stopping Connectors and for retrieving their configuration and status, so you can manage Connectors simply by calling the corresponding API with the required configuration. Some commonly used interfaces are listed below.

• POST /connectors/{connector name}

• GET /connectors/{connector name}/config

• GET /connectors/{connector name}/status

• POST /connectors/{connector name}/stop

With these APIs, you can create a Connector on a Connect Worker, stop it, and query its configuration and status.
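As a rough illustration, the four interfaces could be called with Java's built-in `java.net.http` client as sketched below. The Worker address (`localhost:8082`) and the JSON configuration keys are assumptions for illustration only; consult the documentation of your deployment and connector for the real values.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

class ConnectRestDemo {
    // Assumption: REST address of one Worker; adjust to your deployment.
    static final String WORKER = "http://localhost:8082";

    // POST /connectors/{connector name} with the connector configuration as JSON.
    static HttpRequest create(String name, String jsonConfig) {
        return HttpRequest.newBuilder(URI.create(WORKER + "/connectors/" + name))
            .header("Content-Type", "application/json")
            .POST(HttpRequest.BodyPublishers.ofString(jsonConfig))
            .build();
    }

    // GET /connectors/{connector name}/config
    static HttpRequest config(String name) {
        return HttpRequest.newBuilder(URI.create(WORKER + "/connectors/" + name + "/config")).GET().build();
    }

    // GET /connectors/{connector name}/status
    static HttpRequest status(String name) {
        return HttpRequest.newBuilder(URI.create(WORKER + "/connectors/" + name + "/status")).GET().build();
    }

    // POST /connectors/{connector name}/stop
    static HttpRequest stop(String name) {
        return HttpRequest.newBuilder(URI.create(WORKER + "/connectors/" + name + "/stop"))
            .POST(HttpRequest.BodyPublishers.noBody())
            .build();
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical configuration keys; the real keys depend on the connector.
        String json = "{\"connector.class\":\"com.example.MySqlSourceConnector\",\"max.tasks\":\"2\"}";
        HttpClient client = HttpClient.newHttpClient();
        for (HttpRequest req : new HttpRequest[] {create("mysql-source", json), status("mysql-source")}) {
            HttpResponse<String> resp = client.send(req, HttpResponse.BodyHandlers.ofString());
            System.out.println(req.method() + " " + req.uri() + " -> " + resp.statusCode());
        }
    }
}
```

Connector names are used as-is in the path, so this sketch assumes names without characters that need URL encoding.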

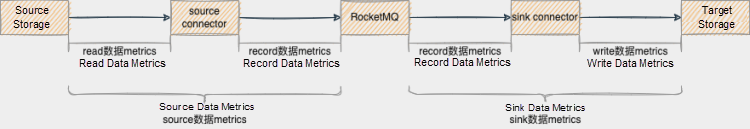

Observability plays an important role in many systems. It shows whether a system is running normally and when its peak and idle periods occur, and it helps with monitoring and problem diagnosis. Worker nodes expose rich Metrics, including the total TPS across all Workers, message counts, and, for each task, its TPS and its numbers of successfully and unsuccessfully processed records. These Metrics make it easy to operate and maintain Connectors.
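A sketch of how per-task success and failure counters can be kept and aggregated into a total and a TPS figure. The class and task names are hypothetical and do not reflect the Worker's actual metrics implementation.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.LongAdder;

class TaskMetricsDemo {
    // Hypothetical per-task counters; LongAdder keeps increments cheap under contention.
    static final class TaskMetrics {
        final LongAdder success = new LongAdder();
        final LongAdder failed = new LongAdder();
    }

    final Map<String, TaskMetrics> byTask = new ConcurrentHashMap<>();

    // Records one processed message for the given task.
    void record(String task, boolean ok) {
        TaskMetrics m = byTask.computeIfAbsent(task, k -> new TaskMetrics());
        (ok ? m.success : m.failed).increment();
    }

    // Total messages processed (successful + failed) across all tasks on this Worker.
    long total() {
        return byTask.values().stream().mapToLong(m -> m.success.sum() + m.failed.sum()).sum();
    }

    // Average TPS over a window, given the total count sampled at its start and end.
    static double tps(long countAtStart, long countAtEnd, double windowSeconds) {
        return (countAtEnd - countAtStart) / windowSeconds;
    }

    public static void main(String[] args) {
        TaskMetricsDemo metrics = new TaskMetricsDemo();
        long before = metrics.total();
        metrics.record("mysql-source-0", true);
        metrics.record("mysql-source-0", true);
        metrics.record("hudi-sink-0", false);
        long after = metrics.total();
        System.out.println("total=" + after + ", tps=" + tps(before, after, 1.0)); // total=3, tps=3.0
    }
}
```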

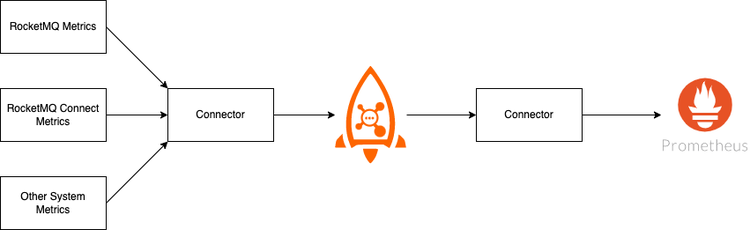

Many systems display Metrics in Prometheus. The usual way to get Metrics into Prometheus is to implement a Prometheus Exporter and modify it whenever Metrics are added or changed. RocketMQ Connect offers another way: a general-purpose Prometheus Sink Connector. The Source Connector is then whichever Connector can read the Metrics. For example, if RocketMQ and RocketMQ Connect write their Metrics to a file, the SFTP Source Connector can read them. In this way, Metrics reach Prometheus entirely through Connectors.
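Whatever path the data takes, Metrics eventually have to be expressed in Prometheus's data model. For illustration only, the sketch below renders per-task counters in the Prometheus text exposition format; the metric and label names are hypothetical, and this is not the code of the Prometheus Sink Connector.

```java
import java.util.Map;
import java.util.TreeMap;

class PrometheusTextDemo {
    // Renders counters in the Prometheus text exposition format, one sample per task, e.g.
    //   connect_task_success_total{task="hudi-sink-0"} 42
    // Metric and label names are hypothetical.
    static String render(String metric, String help, Map<String, Long> valuesByTask) {
        StringBuilder sb = new StringBuilder();
        sb.append("# HELP ").append(metric).append(' ').append(help).append('\n');
        sb.append("# TYPE ").append(metric).append(" counter\n");
        new TreeMap<>(valuesByTask).forEach((task, v) ->
            sb.append(metric).append("{task=\"").append(escape(task)).append("\"} ").append(v).append('\n'));
        return sb.toString();
    }

    // Label values must escape backslash, double quote, and newline (backslash first).
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    public static void main(String[] args) {
        System.out.print(render("connect_task_success_total", "Records processed successfully per task.",
            Map.of("hudi-sink-0", 42L, "mysql-source-0", 17L)));
    }
}
```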

Like RocketMQ, RocketMQ Connect implements at-least-once semantics, ensuring that every message is delivered at least once. If the Source Connector fails to send a message to RocketMQ, the producer retries. If the retries also fail, the task can either skip the message or stop, depending on configuration. The Sink Connector likewise retries failed messages and sends them to the dead-letter queue once the number of retries reaches a configured limit, so that messages are not lost.
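The retry-then-fallback behavior can be sketched as below. `ErrorPolicy` and `deliverWithRetry` are hypothetical names; real connectors expose this choice through their own configuration options.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

class RetryDemo {
    // Hypothetical policy applied once retries are exhausted.
    enum ErrorPolicy { SKIP, STOP, DEAD_LETTER }

    // Tries to deliver up to 1 + maxRetries times; returns true on success.
    // On final failure: SKIP moves on, DEAD_LETTER parks the message, STOP fails the task.
    static boolean deliverWithRetry(String msg, Predicate<String> deliver, int maxRetries,
                                    ErrorPolicy policy, List<String> deadLetterQueue) {
        for (int attempt = 0; attempt <= maxRetries; attempt++) {
            if (deliver.test(msg)) return true;
        }
        switch (policy) {
            case SKIP -> { }  // give up on this message and continue with the next one
            case DEAD_LETTER -> deadLetterQueue.add(msg);
            case STOP -> throw new IllegalStateException("task stopped: could not deliver " + msg);
        }
        return false;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        Predicate<String> flaky = m -> ++calls[0] > 2;  // fails twice, then succeeds
        List<String> dlq = new ArrayList<>();
        System.out.println(deliverWithRetry("m1", flaky, 3, ErrorPolicy.DEAD_LETTER, dlq)); // true
        System.out.println(deliverWithRetry("m2", m -> false, 3, ErrorPolicy.DEAD_LETTER, dlq) + " " + dlq); // false [m2]
    }
}
```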

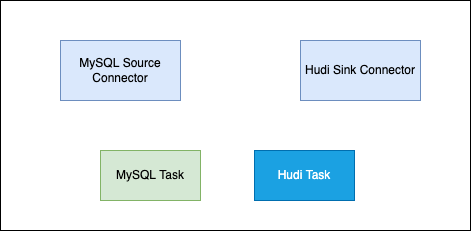

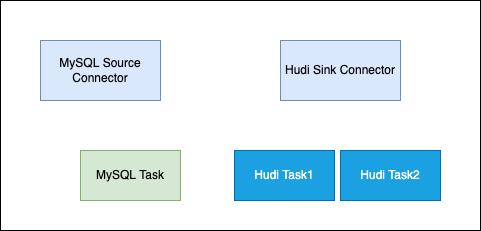

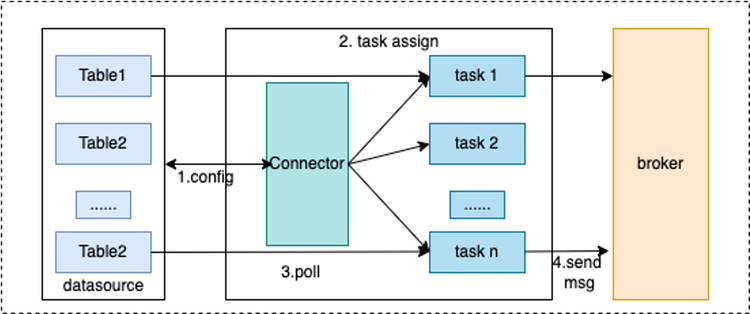

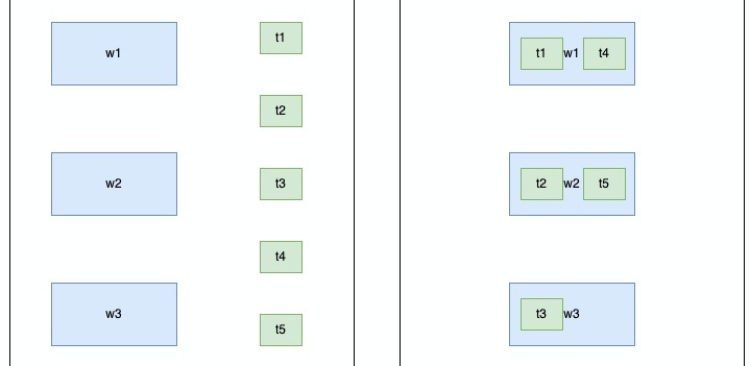

A Connector is usually created through configuration. It typically consists of a logical Connector and Tasks that perform the actual data replication, each Task being a physical thread. The following figure shows two Connectors and their running tasks.

A Connector can also run multiple tasks at the same time to improve the parallelism of the Connector. For example, the Hudi Sink Connector shown in the following figure has two tasks, each of which processes different sharded data. This increases the parallelism of the Connector and improves the processing performance.
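A minimal sketch of sharding work across tasks: shards (for example, queues or partitions) are split round-robin among at most `maxTasks` groups, and each group is handled by its own thread. The shard names and the trivial per-task work are placeholders.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ParallelTasksDemo {
    // Splits shards round-robin into at most maxTasks groups (one group per task).
    static List<List<String>> split(List<String> shards, int maxTasks) {
        if (maxTasks < 1) throw new IllegalArgumentException("maxTasks must be >= 1");
        int n = Math.min(maxTasks, shards.size());
        List<List<String>> groups = new ArrayList<>();
        for (int i = 0; i < n; i++) groups.add(new ArrayList<>());
        for (int i = 0; i < shards.size(); i++) groups.get(i % n).add(shards.get(i));
        return groups;
    }

    // Runs one "task" per group on its own thread; here each task just reports how many shards it handled.
    static List<Integer> runAll(List<List<String>> groups) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, groups.size()));
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (List<String> g : groups) {
                Callable<Integer> job = () -> g.size();
                futures.add(pool.submit(job));
            }
            List<Integer> results = new ArrayList<>();
            for (Future<Integer> f : futures) results.add(f.get());
            return results;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        List<List<String>> groups = split(List.of("q0", "q1", "q2", "q3"), 2);
        System.out.println(groups + " " + runAll(groups)); // [[q0, q2], [q1, q3]] [2, 2]
    }
}
```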

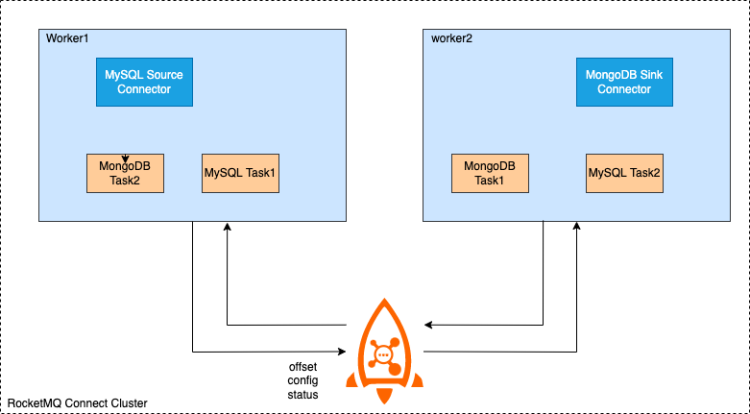

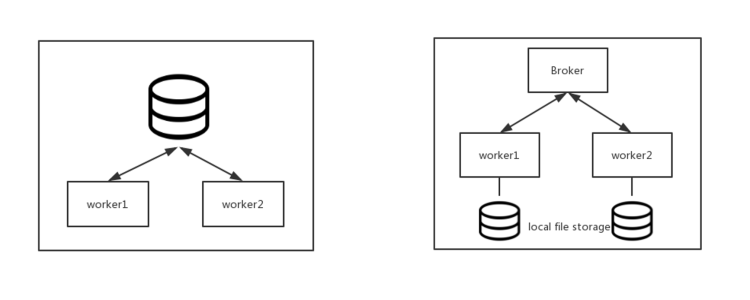

The RocketMQ Connect Worker supports two running modes: cluster mode and standalone mode. Cluster mode, as the name implies, requires multiple Worker nodes; at least two are recommended to form a high-availability cluster. The cluster's configuration, offset, and status information is stored in designated RocketMQ Topics. A newly added Worker node obtains this information and triggers load balancing, which reallocates tasks so that the cluster reaches a balanced state. Removing a Worker node, or a Worker going down, also triggers load balancing, so all tasks keep running evenly across the surviving nodes.

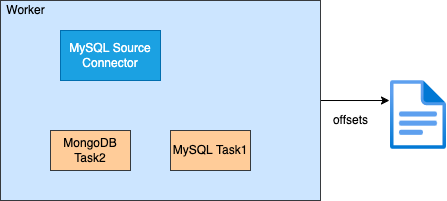

In standalone mode, the Connector's tasks run on a single Worker. The Worker is not highly available, and task offsets are persisted locally. This mode suits scenarios that do not require high availability, or in which high availability is provided by something other than the Worker. For example, you can deploy standalone Workers in a Kubernetes cluster and rely on Kubernetes for high availability.

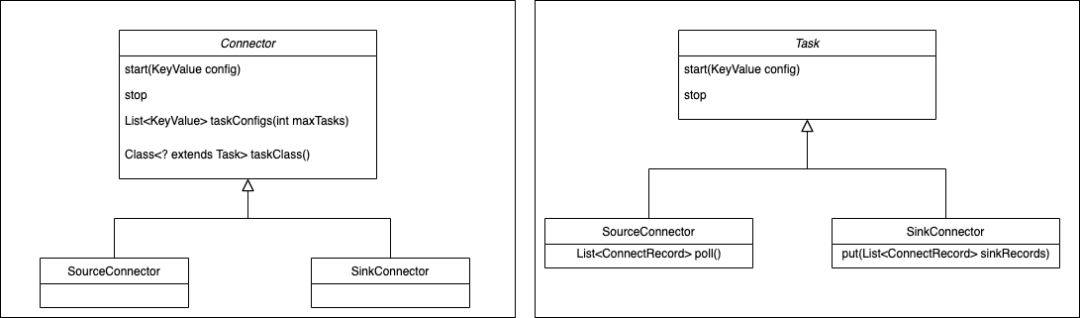

The Connector defines where the data is copied from and to. The SourceConnector reads data from the source data system and writes it to RocketMQ. The SinkConnector reads data from RocketMQ and writes it to the target system. The Connector determines the number of tasks to be created and receives the configuration from the Worker to pass to the tasks.

A Task is the smallest unit into which a Connector's work is sharded and allocated. It is the actual executor that replicates data from the source system to RocketMQ (SourceTask) or reads data from RocketMQ and writes it to the target system (SinkTask). Tasks are stateless and can be started and stopped dynamically, and multiple Tasks can run in parallel. A Connector's replication parallelism is therefore mainly determined by its number of Tasks.

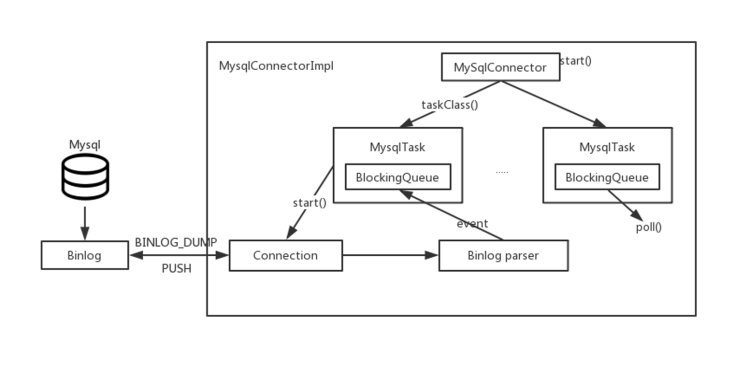

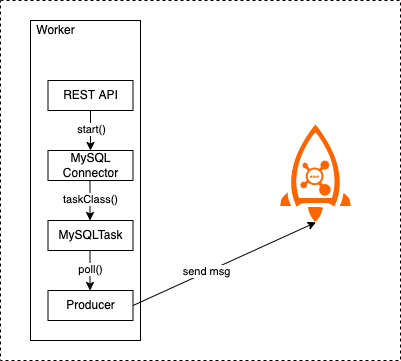

The responsibilities of Connector and Task are also visible in the Connect API. Implementing a Connector fixes the direction of data replication. The Connector receives the configuration for the data source; its taskClass method returns the type of Task to create; and its taskConfigs method, given the maximum number of tasks, returns the configuration assigned to each Task. Once a Task receives its configuration, it reads data from the data source and writes it to the target storage. The following two figures show the basic processing flow of Connectors and Tasks:

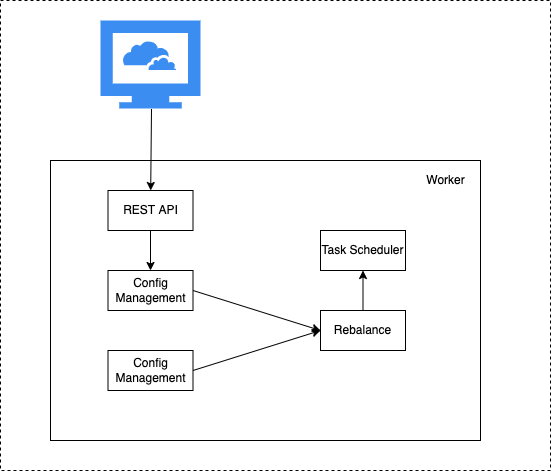
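The division of labor between taskClass and taskConfigs can be sketched with simplified stand-ins. `SimpleConnector`, `SimpleTask`, `TableConnector`, and the `tables` key are all hypothetical and are not the OpenMessaging Connect API; the sketch only shows a Worker-like caller asking the Connector for per-task configurations and instantiating the Task class reflectively.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified stand-ins for the Connector and Task roles.
abstract class SimpleConnector {
    protected Map<String, String> config;
    void start(Map<String, String> config) { this.config = config; }
    abstract Class<? extends SimpleTask> taskClass();
    abstract List<Map<String, String>> taskConfigs(int maxTasks);
}

abstract class SimpleTask {
    protected Map<String, String> config;
    void start(Map<String, String> config) { this.config = config; }
}

class TableTask extends SimpleTask {}

// Example connector: spreads a comma-separated "tables" list over at most maxTasks tasks.
class TableConnector extends SimpleConnector {
    Class<? extends SimpleTask> taskClass() { return TableTask.class; }

    List<Map<String, String>> taskConfigs(int maxTasks) {
        String[] tables = config.get("tables").split(",");
        int n = Math.min(maxTasks, tables.length);
        List<Map<String, String>> out = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            Map<String, String> c = new HashMap<>(config);  // each task inherits the shared settings
            StringBuilder assigned = new StringBuilder();
            for (int t = i; t < tables.length; t += n) {
                if (assigned.length() > 0) assigned.append(',');
                assigned.append(tables[t]);
            }
            c.put("tables", assigned.toString());          // ...but gets only its own tables
            out.add(c);
        }
        return out;
    }
}

class WorkerSketch {
    // Conceptually what a Worker does: start the connector, get task configs, instantiate and start tasks.
    static List<SimpleTask> launch(SimpleConnector connector, Map<String, String> config, int maxTasks)
            throws ReflectiveOperationException {
        connector.start(config);
        List<SimpleTask> tasks = new ArrayList<>();
        for (Map<String, String> taskConfig : connector.taskConfigs(maxTasks)) {
            SimpleTask task = connector.taskClass().getDeclaredConstructor().newInstance();
            task.start(taskConfig);
            tasks.add(task);
        }
        return tasks;
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        List<SimpleTask> tasks = launch(new TableConnector(), Map.of("tables", "orders,users,items"), 2);
        tasks.forEach(t -> System.out.println(t.config.get("tables")));
        // orders,items
        // users
    }
}
```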

The Worker process is the runtime environment for Connectors and Tasks. It provides RESTful capabilities, accepts HTTP requests, and passes the received configurations to Connectors and Tasks. It is also responsible for starting Connectors and Tasks, saving Connector configuration information, and saving the position up to which each Task has synchronized data. It also provides load balancing: high availability, scaling, and fault handling of the Connect cluster mainly depend on the Worker's load balancing capability.

From the figure above, we can see that Worker receives HTTP requests through the provided REST API and passes the received configuration information to the configuration management service. The configuration management service saves the configuration locally, synchronizes it to other Worker nodes, and triggers load balancing at the same time.

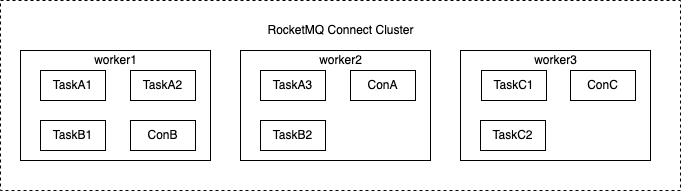

We can see from the following figure how multiple Worker nodes in the Connect cluster start Connectors and Tasks on their respective nodes after load balancing.

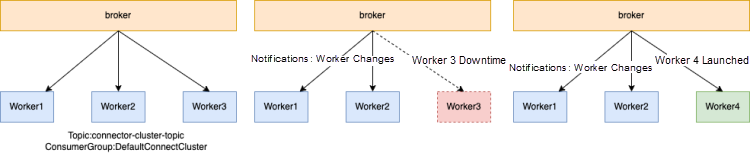

When learning and using RocketMQ, we know that NameSrv is an important component of RocketMQ service registration and discovery, which is simple, lightweight, and easy to maintain. As described in the preceding section, RocketMQ Connect Worker can be deployed in cluster mode. How is the service discovery implemented between Worker nodes? Does the service discovery of Workers depend on external components?

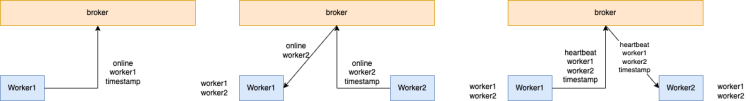

When adopting a technology or middleware, depending on as few external components as possible matters a great deal for the stability and maintainability of the system, and it also lowers the learning cost. This is easy to understand: every additional dependency is one more component to learn and maintain. RocketMQ Connect follows the same principle and depends on few or no external components. As described earlier, RocketMQ Connect is the RocketMQ component for data integration and data inflow and outflow, so it already depends on a RocketMQ cluster.

Can RocketMQ's own features be used to implement Worker service discovery? The answer is yes. The RocketMQ client has a consumer change notification mechanism. Each Worker starts a consumer, all these consumers use the same ConsumerGroup, and each registers a listener for the NOTIFY_CONSUMER_IDS_CHANGED event. Adding or removing a Worker node triggers this event, and when it is received, load balancing of the Worker cluster can be triggered. The client IDs of all Workers can be obtained through the RocketMQ client interface, and load balancing is then performed based on the online clients and the tasks.
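The mechanism can be simulated in memory to show the control flow: every membership change notifies all members, and each member recomputes its assignment from the current list of client IDs. `SimulatedConsumerGroup` is hypothetical and does not use the RocketMQ client; in the real system the broker sends NOTIFY_CONSUMER_IDS_CHANGED and the client fetches the consumer ID list.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;
import java.util.function.Consumer;

// In-memory simulation: a consumer group that notifies listeners whenever its membership changes
// (the role NOTIFY_CONSUMER_IDS_CHANGED plays). Names are hypothetical; this is not the RocketMQ API.
class SimulatedConsumerGroup {
    private final TreeSet<String> clientIds = new TreeSet<>();
    private final List<Consumer<List<String>>> listeners = new ArrayList<>();

    void onMembershipChanged(Consumer<List<String>> listener) { listeners.add(listener); }

    void join(String clientId) { if (clientIds.add(clientId)) notifyAllMembers(); }

    void leave(String clientId) { if (clientIds.remove(clientId)) notifyAllMembers(); }

    // Stand-in for fetching the current consumer ID list of the group.
    List<String> findClientIds() { return new ArrayList<>(clientIds); }

    private void notifyAllMembers() {
        List<String> snapshot = findClientIds();
        listeners.forEach(l -> l.accept(snapshot));
    }
}

class DiscoveryDemo {
    public static void main(String[] args) {
        SimulatedConsumerGroup group = new SimulatedConsumerGroup();
        List<String> rebalances = new ArrayList<>();
        // Each notification would trigger a Worker-cluster rebalance over the online client IDs.
        group.onMembershipChanged(ids -> rebalances.add("rebalance over " + ids));
        group.join("worker-a");
        group.join("worker-b");
        group.leave("worker-a");
        rebalances.forEach(System.out::println);
        // rebalance over [worker-a]
        // rebalance over [worker-a, worker-b]
        // rebalance over [worker-b]
    }
}
```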

Topic subscriptions support two consumption modes: clustering and broadcasting. In clustering mode, each message is delivered to only one consumer within a consumer group, but every consumer group receives all messages of the topic; therefore, giving each client its own consumer group achieves the effect of broadcasting. Metadata synchronization between RocketMQ Connect Workers is based on this principle. All Worker nodes subscribe to the same Topic (connector-config-topic), and each Worker node consumes it with a different consumerGroup, so every Worker receives every message. When the configuration changes, a Worker only needs to send the new configuration to connector-config-topic for all Worker nodes to synchronize.

Offset synchronization is similar to configuration synchronization except that different topics and consumerGroups are used.
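Why separate consumer groups yield broadcast-like delivery can be shown with a toy topic in which each consumer group tracks its own offset. `SimulatedTopic` and the `name=version` messages are hypothetical; this models the idea, not the RocketMQ client API.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy topic: an append-only log plus one independent offset per consumer group,
// so every group sees every message. Hypothetical names; not the RocketMQ client API.
class SimulatedTopic {
    private final List<String> log = new ArrayList<>();
    private final Map<String, Integer> groupOffsets = new HashMap<>();

    void send(String message) { log.add(message); }

    // Returns the messages this group has not consumed yet and advances the group's offset.
    List<String> poll(String consumerGroup) {
        int from = groupOffsets.getOrDefault(consumerGroup, 0);
        List<String> batch = new ArrayList<>(log.subList(from, log.size()));
        groupOffsets.put(consumerGroup, log.size());
        return batch;
    }
}

class ConfigSyncDemo {
    public static void main(String[] args) {
        SimulatedTopic configTopic = new SimulatedTopic();  // plays the role of connector-config-topic
        configTopic.send("mysql-source=v1");                 // a Worker publishes config changes
        configTopic.send("hudi-sink=v1");

        Map<String, Map<String, String>> workerViews = new HashMap<>();
        for (String w : List.of("worker-a", "worker-b")) {
            Map<String, String> view = workerViews.computeIfAbsent(w, k -> new HashMap<>());
            for (String msg : configTopic.poll("group-" + w)) {  // one consumer group per Worker
                String[] kv = msg.split("=", 2);
                view.put(kv[0], kv[1]);
            }
        }
        System.out.println(workerViews.get("worker-a").equals(workerViews.get("worker-b"))); // true
    }
}
```

If both Workers shared one group, the second `poll` would return nothing, which is exactly the clustering behavior that per-Worker groups avoid.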

Service Discovery and Configuration/Offset Management

Connector configuration management is similar to the offset management of tasks. In addition to persisting the configuration and offset information locally, the configuration and offset information are synchronized to other Worker nodes in the cluster. This synchronization mode is implemented by all worker nodes subscribing to the same topic and using different consumerGroups.

Connect's load balancing is similar to RocketMQ's consumer-side load balancing: every node runs the same algorithm, and only the objects being balanced differ. RocketMQ consumer-side load balancing distributes MessageQueues among the consumers of one ConsumerGroup, whereas Connect distributes connectors and tasks among Worker nodes; the logic is similar. The algorithm gets all Workers, all connectors, and all tasks and sorts each list. A Worker then takes every connector or task whose position in the sorted list, modulo the number of Workers, equals the Worker's own position in the sorted Worker list. Finally, the Worker starts the connectors and tasks allocated to it.
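The sort-then-modulo allocation described above can be sketched as a pure function that every Worker evaluates independently. Worker and task names are hypothetical, and RocketMQ Connect's actual allocation strategy may differ in detail; the same function would be applied once to connectors and once to tasks.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class AllocationDemo {
    // Every Worker runs this on the same inputs and keeps only its own share.
    // Sorting first makes all Workers agree on positions without coordinating with each other.
    static List<String> allocate(List<String> workers, List<String> items, String self) {
        List<String> sortedWorkers = new ArrayList<>(workers);
        List<String> sortedItems = new ArrayList<>(items);
        Collections.sort(sortedWorkers);
        Collections.sort(sortedItems);
        int me = sortedWorkers.indexOf(self);
        if (me < 0) throw new IllegalArgumentException(self + " is not an online worker");
        List<String> mine = new ArrayList<>();
        for (int i = 0; i < sortedItems.size(); i++) {
            if (i % sortedWorkers.size() == me) mine.add(sortedItems.get(i));
        }
        return mine;
    }

    public static void main(String[] args) {
        List<String> workers = List.of("w2", "w1", "w3");
        List<String> tasks = List.of("hudi-sink/task-0", "hudi-sink/task-1", "mysql-source/task-0", "es-sink/task-0");
        for (String w : List.of("w1", "w2", "w3")) {
            System.out.println(w + " -> " + allocate(workers, tasks, w));
        }
        // w1 -> [es-sink/task-0, mysql-source/task-0]
        // w2 -> [hudi-sink/task-0]
        // w3 -> [hudi-sink/task-1]
    }
}
```

Because the result depends only on the sorted lists, a membership change simply means rerunning the function with the new Worker list.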

Scaling Workers in or out, Worker downtime, and new Connector configurations all trigger reallocation, which makes the Worker cluster elastic, fault tolerant, and dynamically scalable.

The way a Worker loads Connector plugin JAR packages is similar to how Tomcat loads WAR packages. Tomcat does not follow the JVM's default parent-delegation model. Instead, it uses custom ClassLoaders, with a different ClassLoader for each WAR package. This avoids conflicts when different WAR packages reference different versions of the same JAR. The Worker works the same way: it creates a separate ClassLoader for each plugin, which avoids conflicts caused by plugins referencing different versions of the same JAR or class. In terms of class loading, the Worker is like a distributed Tomcat.
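Per-plugin isolation is commonly implemented with a child-first `URLClassLoader`, sketched below under that assumption. It illustrates the general technique and is not RocketMQ Connect's actual plugin loader; JDK classes (`java.*`) are always delegated to the parent.

```java
import java.net.URL;
import java.net.URLClassLoader;

// Child-first loader: looks in the plugin's own JARs before delegating to the parent,
// so each plugin can bundle its own version of a library. A sketch of the general technique.
class PluginClassLoader extends URLClassLoader {
    PluginClassLoader(URL[] pluginJars, ClassLoader parent) {
        super(pluginJars, parent);
    }

    @Override
    protected Class<?> loadClass(String name, boolean resolve) throws ClassNotFoundException {
        synchronized (getClassLoadingLock(name)) {
            Class<?> c = findLoadedClass(name);
            if (c == null && !name.startsWith("java.")) {
                try {
                    c = findClass(name);  // plugin JARs first
                } catch (ClassNotFoundException ignored) {
                    // not in this plugin; fall back to the parent below
                }
            }
            if (c == null) {
                return super.loadClass(name, resolve);  // parent-first for JDK and shared classes
            }
            if (resolve) resolveClass(c);
            return c;
        }
    }
}

class PluginLoaderDemo {
    public static void main(String[] args) throws Exception {
        ClassLoader parent = PluginLoaderDemo.class.getClassLoader();
        // One loader per plugin; a real Worker would pass the URLs of that plugin's JARs instead of an empty array.
        try (PluginClassLoader pluginA = new PluginClassLoader(new URL[0], parent);
             PluginClassLoader pluginB = new PluginClassLoader(new URL[0], parent)) {
            // JDK classes are shared across plugins.
            System.out.println(pluginA.loadClass("java.lang.String") == pluginB.loadClass("java.lang.String"));
        }
    }
}
```

With real JAR URLs, a class bundled in both plugins would be defined separately by each loader, giving two distinct `Class` objects and therefore no version conflict.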

After understanding the implementation principle of RocketMQ Connect, let's take a look at how to implement a Connector. Look at this example:

Business data is written to MySQL, synchronized to Hudi, and a data lake is built through Hudi, which is similar to the scenario we mentioned at the beginning. How can we implement this process through Connector?

From the previous introduction, it should be clear that two Connectors need to be implemented, one is the SourceConnector from MySQL to RocketMQ, and the other is the Sink Connector that reads data from RocketMQ and then writes it to Hudi.

Take MySqlSourceConnector as an example to describe how to write a Connector.

First, implement a SourceConnector: create a class MySqlConnectorImpl that extends SourceConnector and implement configuration initialization, taskClass, taskConfigs, and the other required methods. The taskClass method specifies that a MySqlTask is created. taskConfigs turns the received connector configuration into a configuration for each task, including the account, password, address, and port for connecting to the MySQL instance. The Worker starts MySqlTask and passes it these configurations. MySqlTask connects to the MySQL instance, obtains the MySQL binlog, parses it, wraps the parsed data into ConnectRecord objects, and puts them in a BlockingQueue. The poll method takes data from the BlockingQueue and returns it. This completes the Connector.

Package the Connector into the directory specified for the Worker so that the Worker process loads it. You can then call the Worker's HTTP interface to create a MySqlSourceConnector. The Worker starts the connector, obtains MySqlTask through the connector's taskClass method, and starts the Task. WorkerSourceTask gets data through the poll method and sends it to RocketMQ through a Producer. This completes a Connector that synchronizes data from MySQL to RocketMQ.
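The queue-based pattern described for MySqlTask and the Worker's poll-and-send loop can be sketched as follows. `SimpleRecord` and `QueueingSourceTask` are hypothetical, the "binlog" is a fixed list of strings, and printing stands in for sending through a RocketMQ Producer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical, simplified record; a real ConnectRecord also carries schema and position information.
record SimpleRecord(String table, String op, String payload) {}

// A reader thread "parses" change events and enqueues records; poll() hands the Worker
// whatever has accumulated. The fake binlog is a list of "table:op:payload" strings.
class QueueingSourceTask {
    private final BlockingQueue<SimpleRecord> queue = new LinkedBlockingQueue<>();
    private Thread reader;

    void start(List<String> fakeBinlog) {
        reader = new Thread(() -> {
            for (String event : fakeBinlog) {
                String[] parts = event.split(":", 3);
                queue.add(new SimpleRecord(parts[0], parts[1], parts[2]));
            }
        });
        reader.start();
    }

    // Waits briefly for the first record, then drains everything else that is ready.
    List<SimpleRecord> poll() throws InterruptedException {
        List<SimpleRecord> batch = new ArrayList<>();
        SimpleRecord first = queue.poll(100, TimeUnit.MILLISECONDS);
        if (first != null) {
            batch.add(first);
            queue.drainTo(batch);
        }
        return batch;
    }

    // Demo helper: wait until the reader has enqueued all events.
    void awaitReader() throws InterruptedException { reader.join(); }
}

class SourceTaskDemo {
    public static void main(String[] args) throws InterruptedException {
        QueueingSourceTask task = new QueueingSourceTask();
        task.start(List.of("orders:INSERT:{id=1}", "orders:UPDATE:{id=1}"));
        task.awaitReader();  // makes the demo deterministic
        // Worker-side loop body: poll a batch and hand each record to a producer.
        for (SimpleRecord r : task.poll()) {
            System.out.println("send " + r);
        }
    }
}
```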

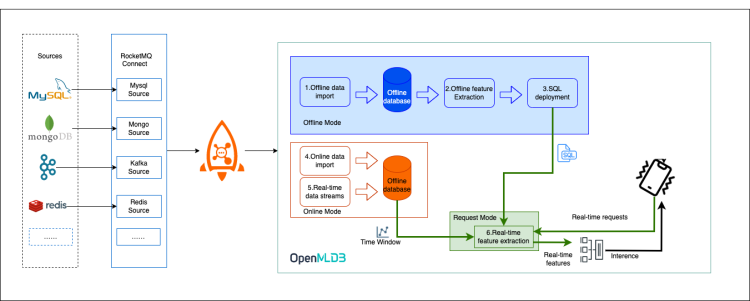

For CDC, RocketMQ Connect has been adapted to Debezium, and the standard JDBC protocol is also supported. On this basis, a Connector for OpenMLDB, a database for machine learning, was developed in cooperation with the OpenMLDB community. For data lakes, a link with Hudi has been established, and connections with popular storage systems such as Doris, ClickHouse, and ES are planned for the near future. If you are familiar with a storage system, you are welcome to develop a connector between it and RocketMQ using the OpenMessaging Connect API.

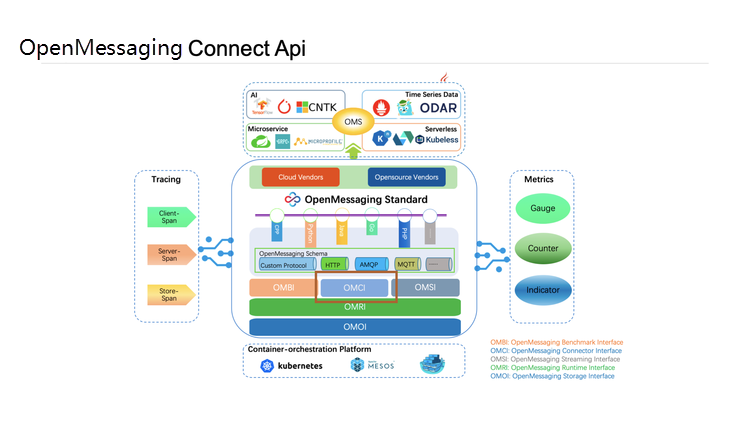

Speaking of the OpenMessaging Connect API, here is a brief introduction to OpenMessaging. OpenMessaging is an open-source project under the Linux Foundation that is committed to formulating standards in the messaging field. In addition to the OpenMessaging Connect API, it includes the OMOI standard for storage, the OMBI standard for stress testing, and the OMS standard for stream computing.

https://github.com/apache/rocketmq-connect

OpenMLDB is an open-source machine learning database that provides a production-level platform with online and offline consistency. RocketMQ establishes a connection with the machine learning database OpenMLDB through cooperation with the OpenMLDB community.

This article introduces the concept of RocketMQ Connect, explains its implementation principles, and gives a preliminary introduction to service discovery, configuration synchronization, offset synchronization, and load balancing. It then uses MySqlSourceConnector as an example to explain how to implement a Connector. Finally, it introduces the Connect API and ecosystem and points to hands-on material for RocketMQ Connect. We hope this article helps anyone learning RocketMQ Connect. Interested readers are welcome to join the community and contribute a Connector between a storage system they know well and RocketMQ.

